2. Elasticsearch Cluster Setup

Overview:

Deployment steps:

elasticsearch-head client:

cerebro client:

Monitoring ES cluster health:

Overview:

Minimum installation requirements:

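| Item | Requirement |
|---|---|
| Operating system | CentOS 7 or Ubuntu 18 |
| Memory | 4 GB minimum; 128 GB or more recommended for production |
| CPU | 2 cores, 2.2 GHz |
| Data disk | 50 GB |
| Number of servers | 3, 5, 7, or more |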
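
The odd server counts in the table follow from majority voting: electing a master needs more than half of the master-eligible nodes, so moving from 3 to 4 nodes adds no extra failure tolerance. A small Python sketch of that arithmetic (the function names are illustrative, not part of Elasticsearch):

```python
def quorum(master_eligible: int) -> int:
    """Smallest majority among the master-eligible nodes."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def tolerated_failures(master_eligible: int) -> int:
    """How many nodes can be lost while a majority remains."""
    return master_eligible - quorum(master_eligible)


if __name__ == "__main__":
    for n in (2, 3, 4, 5, 7):
        print(f"{n} nodes: quorum {quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

With two nodes the quorum is two, so losing either node leaves the cluster without a majority.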

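Install ES on all three servers. If the Elasticsearch package you choose bundles a JDK, it can be installed directly; otherwise install a JDK first.

Example of a package that bundles a JDK: elasticsearch-7.6.2-amd64.deb

ELK cluster implementation:

Disk space and disk I/O:

Each server needs large disks: eight 10K RPM SAS drives are recommended, or go straight to enterprise SSDs or PCIe SSDs. All three servers should have the same disk capacity.

CPU: 2 x E5 2660, 2.2 GHz clock speed

Memory: 128 GB or 96 GB recommended

Deployment steps:

1. Tune the system:

ES refuses to start as root. Installing the package automatically creates an elasticsearch user, and we need to raise that user's resource limits.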

root@usera:~# vim /etc/security/limits.conf

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

elasticsearch soft core unlimited

elasticsearch hard core unlimited

elasticsearch soft nproc 1000000

elasticsearch hard nproc 1000000

elasticsearch soft nofile 1000000

elasticsearch hard nofile 1000000

elasticsearch soft memlock 32000

elasticsearch hard memlock 32000

elasticsearch soft msgqueue 8192000

elasticsearch hard msgqueue 8192000

root@usera:~# hostnamectl set-hostname es-1.xingyu.com

root@usera:~# reboot
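
After the reboot, one way to confirm the limits took effect is to compare the limits of a fresh login session against the values above. A minimal Python sketch, run as the user being checked; `REQUIRED` and `shortfalls` are illustrative names, and the memlock value is converted because limits.conf uses KB while `getrlimit` reports bytes. Keep in mind that limits.conf applies to PAM login sessions, whereas a service started by systemd takes its limits from the unit file.

```python
import resource

# Minimum soft limits, taken from the limits.conf entries above.
REQUIRED = {
    "nofile": (resource.RLIMIT_NOFILE, 1000000),
    "nproc": (resource.RLIMIT_NPROC, 1000000),
    "memlock": (resource.RLIMIT_MEMLOCK, 32000 * 1024),  # KB -> bytes
}


def shortfalls(required=REQUIRED, getrlimit=resource.getrlimit):
    """Return a message for every soft limit below its required minimum."""
    problems = []
    for name, (res, minimum) in required.items():
        soft, _hard = getrlimit(res)
        if soft != resource.RLIM_INFINITY and soft < minimum:
            problems.append(f"{name}: soft limit {soft} < {minimum}")
    return problems


if __name__ == "__main__":
    for line in shortfalls() or ["all limits satisfied"]:
        print(line)
```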

2. Attach a disk to the server

Filesystem: xfs

Mount point: /data/esdata

root@es-1:~# mkfs.

mkfs.bfs mkfs.cramfs mkfs.ext3 mkfs.fat mkfs.msdos mkfs.vfat mkfs.btrfs mkfs.ext2 mkfs.ext4 mkfs.minix mkfs.ntfs mkfs.xfs

root@es-1:~# mkfs.xfs /dev/sdb

meta-data=/dev/sdb isize=512 agcount=4, agsize=6553600 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=26214400, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=12800, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

root@es-1:~# mkdir -p /data/esdata

# Mounting by UUID is preferable to mounting by device name

root@es-1:~# blkid

/dev/sda2: UUID="bf4e28df-b2cd-47a9-a653-88977b76032c" TYPE="ext4" PARTUUID="d82a7ff8-b78e-45ed-a8c2-49b392b30978"

/dev/sda3: UUID="PIV7fZ-EHS8-4tnw-fzaC-BKO0-kXMv-9hV7fM" TYPE="LVM2_member" PARTUUID="978777ea-1913-428d-8a52-18a0b278754b"

/dev/mapper/ubuntu--vg-ubuntu--lv: UUID="4909f89c-7c8e-4de3-b1d9-ff73d3f36090" TYPE="ext4"

/dev/loop0: TYPE="squashfs"

/dev/loop1: TYPE="squashfs"

/dev/loop2: TYPE="squashfs"

/dev/loop3: TYPE="squashfs"

/dev/loop4: TYPE="squashfs"

/dev/loop5: TYPE="squashfs"

/dev/sda1: PARTUUID="354b94b1-08f7-4a66-a70c-8c930cf9b4c9"

/dev/sdb: UUID="5320d71f-0b12-4673-a849-90fd8f178ded" TYPE="xfs"

root@es-1:~# vim /etc/fstab

UUID="5320d71f-0b12-4673-a849-90fd8f178ded" /data/esdata xfs defaults 0 0

root@es-1:~# mount -a

root@es-1:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 933M 0 933M 0% /dev

tmpfs 196M 1.2M 195M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 20G 5.9G 13G 32% /

tmpfs 977M 0 977M 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 977M 0 977M 0% /sys/fs/cgroup

/dev/loop0 56M 56M 0 100% /snap/core18/2128

/dev/loop1 62M 62M 0 100% /snap/core20/1376

/dev/loop2 56M 56M 0 100% /snap/core18/2284

/dev/loop3 68M 68M 0 100% /snap/lxd/22526

/dev/loop5 44M 44M 0 100% /snap/snapd/15177

/dev/loop4 71M 71M 0 100% /snap/lxd/21029

/dev/sda2 976M 205M 705M 23% /boot

tmpfs 196M 0 196M 0% /run/user/0

/dev/sdb 100G 746M 100G 1% /data/esdata
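
The fstab line and the mount check above can also be scripted, which helps when the same steps are repeated on each node. A sketch in Python, assuming the mount point and filesystem from this step; `fstab_entry` and `is_mounted` are hypothetical helper names, and the mount table is read from /proc/mounts.

```python
import os


def fstab_entry(uuid, mount_point="/data/esdata", fstype="xfs"):
    """Build an fstab line that mounts the filesystem by UUID."""
    return f"UUID={uuid} {mount_point} {fstype} defaults 0 0"


def is_mounted(mount_point, fstype, mounts_text):
    """Check a /proc/mounts style listing for mount_point with fstype."""
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == mount_point and fields[2] == fstype:
            return True
    return False


if __name__ == "__main__":
    print(fstab_entry("5320d71f-0b12-4673-a849-90fd8f178ded"))
    if os.path.exists("/proc/mounts"):
        with open("/proc/mounts") as handle:
            print("mounted:", is_mounted("/data/esdata", "xfs", handle.read()))
```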

3. Set the time zone with timedatectl:

root@es-1:~# timedatectl

Local time: Wed 2022-03-16 06:26:12 UTC

Universal time: Wed 2022-03-16 06:26:12 UTC

RTC time: Wed 2022-03-16 06:26:11

Time zone: Etc/UTC (UTC, +0000)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

root@es-1:~# ll /etc/localtime

lrwxrwxrwx 1 root root 27 Mar 10 11:30 /etc/localtime -> /usr/share/zoneinfo/Etc/UTC

root@es-1:~# cat /etc/timezone

Etc/UTC

root@es-1:~# timedatectl list-timezones |grep Shang

Asia/Shanghai

## Set the time zone with timedatectl

root@es-1:~# sudo timedatectl set-timezone Asia/Shanghai

root@es-1:~# timedatectl

Local time: Wed 2022-03-16 14:28:09 CST

Universal time: Wed 2022-03-16 06:28:09 UTC

RTC time: Wed 2022-03-16 06:28:09

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

4. Set up time synchronization; accurate time matters a great deal on servers

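root@es-1:~# apt install net-tools vim lrzsz tree screen lsof tcpdump wget ntpdate -y

# On Ubuntu, root's crontab lives under /var/spool/cron/crontabs/, so install the entry with crontab. cron runs jobs with /bin/sh, so redirect with >/dev/null 2>&1 rather than &>

root@es-1:~# (crontab -l 2>/dev/null; echo "*/5 * * * * /usr/sbin/ntpdate time3.aliyun.com >/dev/null 2>&1 && hwclock -w") | crontab -

root@es-1:~# systemctl restart cron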

5. Install the ES package that bundles a JDK

root@es-1:/usr/local/src# apt update

root@es-1:~# cd /usr/local/src/

root@es-1:/usr/local/src# ll -h

total 283M

drwxr-xr-x 2 root root 4.0K Mar 16 14:53 ./

drwxr-xr-x 10 root root 4.0K Aug 24 2021 ../

-rw-r--r-- 1 root root 283M Mar 16 14:53 elasticsearch-7.6.2-amd64.deb

## Running dpkg produced the error below. The lock was held by unattended-upgrades; waiting for it to finish is safer than killing it

root@es-1:/usr/local/src# dpkg -i elasticsearch-7.6.2-amd64.deb

dpkg: error: dpkg frontend lock is locked by another process

root@es-1:/usr/local/src# lsof /var/lib/dpkg/lock-frontend

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

unattende 2033 root 8uW REG 253,0 0 917749 /var/lib/dpkg/lock-frontend

root@es-1:/usr/local/src# sudo kill -9 2033

root@es-1:/usr/local/src# sudo rm -rf /var/lib/dpkg/lock-frontend

root@es-1:/usr/local/src# sudo dpkg --configure -a

## Install ES again

root@es-1:/usr/local/src# dpkg -i elasticsearch-7.6.2-amd64.deb

Selecting previously unselected package elasticsearch.

(Reading database ... 108361 files and directories currently installed.)

Preparing to unpack elasticsearch-7.6.2-amd64.deb ...

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Unpacking elasticsearch (7.6.2) ...

Setting up elasticsearch (7.6.2) ...

Created elasticsearch keystore in /etc/elasticsearch

Processing triggers for systemd (245.4-4ubuntu3.15) ...

## Configuration file location

root@es-1:/usr/local/src# ll /etc/elasticsearch/

total 52

drwxr-s--- 2 root elasticsearch 4096 Mar 16 14:58 ./

drwxr-xr-x 97 root root 4096 Mar 16 14:58 ../

-rw-rw---- 1 root elasticsearch 199 Mar 16 14:58 elasticsearch.keystore

-rw-r--r-- 1 root elasticsearch 76 Mar 16 14:58 .elasticsearch.keystore.initial_md5sum

-rw-rw---- 1 root elasticsearch 2847 Mar 26 2020 elasticsearch.yml

-rw-rw---- 1 root elasticsearch 2373 Mar 26 2020 jvm.options

-rw-rw---- 1 root elasticsearch 17545 Mar 26 2020 log4j2.properties

-rw-rw---- 1 root elasticsearch 473 Mar 26 2020 role_mapping.yml

-rw-rw---- 1 root elasticsearch 197 Mar 26 2020 roles.yml

-rw-rw---- 1 root elasticsearch 0 Mar 26 2020 users

-rw-rw---- 1 root elasticsearch 0 Mar 26 2020 users_roles

## elasticsearch.yml is the main configuration file

## jvm.options holds the JVM tuning options

6. Edit the configuration files

## Changes to the configuration file

root@es-1:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

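cluster.name: escluster1 # Cluster name; nodes with the same cluster name belong to the same cluster

node.name: node-1 # Node name; must be unique for each node

path.data: /data/esdata/data # ES data directory

path.logs: /data/esdata/logs # ES log directory

bootstrap.memory_lock: true # Lock the process memory so it is not swapped out; the heap size is set in jvm.options

network.host: 0.0.0.0 # Listen address

http.port: 9200 # HTTP listen port

discovery.seed_hosts: ["172.31.3.128", "172.31.3.129", "172.31.3.130"] # Hosts used to discover the other nodes in the cluster

cluster.initial_master_nodes: ["172.31.3.130"] # Nodes eligible for election as master when a brand-new cluster is bootstrapped; should be identical on every node

gateway.recover_after_nodes: 2 # Data recovery is allowed only after N nodes in the cluster have started; default is 1

action.destructive_requires_name: true # Whether indices can be deleted or closed via wildcards or _all; true requires the index name to be given explicitly, recommended in production

# Raise the memory-lock limit for the service (run systemctl daemon-reload after editing the unit file)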

root@es-1:/etc/elasticsearch# vim /usr/lib/systemd/system/elasticsearch.service

LimitMEMLOCK=infinity

root@es-1:/etc/elasticsearch# egrep -v "^#|^$" jvm.options

-Xms1g # On large production hosts, about 30 GB: at most half of physical RAM and below 32 GB

-Xmx1g # Same value as -Xms

8-13:-XX:+UseConcMarkSweepGC

8-13:-XX:CMSInitiatingOccupancyFraction=75

8-13:-XX:+UseCMSInitiatingOccupancyOnly

14-:-XX:+UseG1GC

14-:-XX:G1ReservePercent=25

14-:-XX:InitiatingHeapOccupancyPercent=30

-Djava.io.tmpdir=${ES_TMPDIR}

-XX:+HeapDumpOnOutOfMemoryError

-XX:HeapDumpPath=/var/lib/elasticsearch

-XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log

8:-XX:+PrintGCDetails

8:-XX:+PrintGCDateStamps

8:-XX:+PrintTenuringDistribution

8:-XX:+PrintGCApplicationStoppedTime

8:-Xloggc:/var/log/elasticsearch/gc.log

8:-XX:+UseGCLogFileRotation

8:-XX:NumberOfGCLogFiles=32

8:-XX:GCLogFileSize=64m

9-:-Xlog:gc*,gc+age=trace,safepoint:file=/var/log/elasticsearch/gc.log:utctime,pid,tags:filecount=32,filesize=64m
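
The heap comments on -Xms and -Xmx above can be written as a rule of thumb: set both options to the same value, use at most half of physical RAM, and stay below the roughly 32 GB compressed-pointer threshold. A sketch in Python; the 31 GB cap and the function names are my assumptions, not values shipped with the ES package.

```python
def recommended_heap_gb(total_ram_gb: float, cap_gb: int = 31) -> int:
    """Half of RAM in whole GB, at least 1 and at most cap_gb."""
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    return max(1, min(int(total_ram_gb // 2), cap_gb))


def jvm_heap_options(total_ram_gb: float) -> list:
    """Return matching -Xms/-Xmx lines for jvm.options."""
    heap = recommended_heap_gb(total_ram_gb)
    return [f"-Xms{heap}g", f"-Xmx{heap}g"]


if __name__ == "__main__":
    for ram in (4, 96, 128):
        print(ram, "GB RAM ->", " ".join(jvm_heap_options(ram)))
```

On the 4 GB minimum host this yields a 2 GB heap; on the 96 GB and 128 GB hosts it is capped at 31 GB.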

7. Change ownership of the data directory

root@es-1:~# chown -R elasticsearch:elasticsearch /data/esdata/

8. Steps on the ES-2 server

1. Tune the system, set the hostname to es-2.xingyu.com, and create the mount directory

2. Mount the new disk on the server

3. Set the time zone with timedatectl

4. Set up the time synchronization job

5. Install the ES package that bundles a JDK

6. Edit the configuration files

# Copy the configuration file from es-1 to es-2

root@es-1:/etc/elasticsearch# scp elasticsearch.yml root@172.31.3.129:/etc/elasticsearch/

The authenticity of host '172.31.3.129 (172.31.3.129)' can't be established.

ECDSA key fingerprint is SHA256:9qrWXFHoFbLY0peBzZ544aYlqGdJdcGjVdXox8sv1ZU.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.3.129' (ECDSA) to the list of known hosts.

root@172.31.3.129's password:

elasticsearch.yml

# Only the node name needs to change

root@es-2:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

cluster.name: escluster1

node.name: node-2 # Change this node name

path.data: /data/esdata/data

path.logs: /data/esdata/logs

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["172.31.3.128", "172.31.3.129", "172.31.3.130"]

cluster.initial_master_nodes: ["172.31.3.130"] # Keep identical to the value on es-1

gateway.recover_after_nodes: 2

action.destructive_requires_name: true

7. Change ownership of the data directory

9. Steps on the ES-3 server (same procedure as es-2)

1. Tune the system and set the hostname to es-3.xingyu.com

2. Mount the new disk on the server

3. Set the time zone with timedatectl

4. Set up the time synchronization job

5. Install the ES package that bundles a JDK

6. Edit the configuration files

# Copy the configuration file from es-1 to es-3

root@es-1:/etc/elasticsearch# scp elasticsearch.yml root@172.31.3.128:/etc/elasticsearch/

The authenticity of host '172.31.3.128 (172.31.3.128)' can't be established.

ECDSA key fingerprint is SHA256:9qrWXFHoFbLY0peBzZ544aYlqGdJdcGjVdXox8sv1ZU.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.3.128' (ECDSA) to the list of known hosts.

root@172.31.3.128's password:

elasticsearch.yml

# Only the node name needs to change

root@es-3:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

cluster.name: escluster1

node.name: node-3 # Change this node name

path.data: /data/esdata/data

path.logs: /data/esdata/logs

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["172.31.3.128", "172.31.3.129", "172.31.3.130"]

cluster.initial_master_nodes: ["172.31.3.130"] # Keep identical to the value on es-1

gateway.recover_after_nodes: 2

action.destructive_requires_name: true

7. Change ownership of the data directory
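
Because es-2 and es-3 reuse the es-1 file with only node.name changed, the per-node files could also be generated from one template instead of being copied and edited by hand. A sketch in Python; `render` and `TEMPLATE` are hypothetical helpers, and the default for `masters` assumes the es-1 value of cluster.initial_master_nodes is used on every node.

```python
SEED_HOSTS = ("172.31.3.128", "172.31.3.129", "172.31.3.130")

TEMPLATE = """\
cluster.name: {cluster}
node.name: {node}
path.data: /data/esdata/data
path.logs: /data/esdata/logs
bootstrap.memory_lock: true
network.host: 0.0.0.0
http.port: 9200
discovery.seed_hosts: [{seeds}]
cluster.initial_master_nodes: [{masters}]
gateway.recover_after_nodes: 2
action.destructive_requires_name: true
"""


def _quoted(items):
    """Format items as a comma-separated list of double-quoted strings."""
    return ", ".join(f'"{item}"' for item in items)


def render(node, cluster="escluster1", seeds=SEED_HOSTS,
           masters=("172.31.3.130",)):
    """Render elasticsearch.yml content for one node."""
    return TEMPLATE.format(cluster=cluster, node=node,
                           seeds=_quoted(seeds), masters=_quoted(masters))


if __name__ == "__main__":
    for name in ("node-1", "node-2", "node-3"):
        print(f"--- {name} ---")
        print(render(name))
```

Generating the files this way keeps every shared setting identical across nodes by construction.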

10. Start the service on all three servers

root@es-1:/etc/elasticsearch# systemctl start elasticsearch

root@es-1:/etc/elasticsearch# systemctl status elasticsearch.service

● elasticsearch.service - Elasticsearch

Loaded: loaded (/lib/systemd/system/elasticsearch.service; disabled; vendor preset: enabled)

Active: active (running) since Wed 2022-03-16 16:25:08 CST; 6s ago

Docs: http://www.elastic.co

Main PID: 20156 (java)

Tasks: 40 (limit: 2245)

Memory: 1.2G

CGroup: /system.slice/elasticsearch.service

├─20156 /usr/share/elasticsearch/jdk/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+>

└─20259 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

Mar 16 16:24:20 es-1.xingyu.com systemd[1]: Starting Elasticsearch...

Mar 16 16:24:21 es-1.xingyu.com elasticsearch[20156]: OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version>

Mar 16 16:25:08 es-1.xingyu.com systemd[1]: Started Elasticsearch.
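
systemd reports the unit as started, but it can still be useful to confirm that the HTTP port accepts connections before moving on. A sketch in Python that polls for it, assuming the HTTP port 9200 from the configuration above; `port_open` and `wait_for_port` are illustrative helper names.

```python
import socket
import sys
import time


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_port(host, port, attempts=30, delay=2.0):
    """Poll host:port until it accepts a connection or attempts run out."""
    for _ in range(attempts):
        if port_open(host, port):
            return True
        time.sleep(delay)
    return False


# Usage: python3 wait_es.py 172.31.3.130
if __name__ == "__main__" and len(sys.argv) > 1:
    ok = wait_for_port(sys.argv[1], 9200)
    print("port 9200 is open" if ok else "port 9200 did not open in time")
```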

## I only had two virtual machines for this experiment, so I changed the configuration files as follows:

# Configuration on es-1

root@es-1:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

cluster.name: escluster1

node.name: node-1

path.data: /data/esdata/data

path.logs: /data/esdata/logs

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["172.31.3.129", "172.31.3.130"]

action.destructive_requires_name: true

# Configuration on es-2

root@es-2:/etc/elasticsearch# egrep -v "^#|^$" elasticsearch.yml

cluster.name: escluster1

node.name: node-2

path.data: /data/esdata/data

path.logs: /data/esdata/logs

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["172.31.3.129", "172.31.3.130"]

action.destructive_requires_name: true

# With the configuration above, both ES servers start normally

11. Access the nodes from a browser

http://172.31.3.130:9200/

{

"name" : "node-1",

"cluster_name" : "escluster1",

"cluster_uuid" : "_na_",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "deb",

"build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",

"build_date" : "2020-03-26T06:34:37.794943Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

http://172.31.3.129:9200/

{

"name" : "node-2",

"cluster_name" : "escluster1",

"cluster_uuid" : "_na_",

"version" : {

"number" : "7.6.2",

"build_flavor" : "default",

"build_type" : "deb",

"build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",

"build_date" : "2020-03-26T06:34:37.794943Z",

"build_snapshot" : false,

"lucene_version" : "8.4.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}
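
Both responses above report `"cluster_uuid" : "_na_"`. As far as I know, that is the placeholder a node returns before it has joined a cluster with an elected master, so it may indicate that the two nodes are running but have not yet formed a cluster (the two-VM configuration drops cluster.initial_master_nodes). A small Python sketch that checks for this; `cluster_formed` is a hypothetical helper that takes the JSON body returned by the root endpoint.

```python
import json


def cluster_formed(root_response: str) -> bool:
    """True if the root endpoint JSON carries a real cluster UUID."""
    info = json.loads(root_response)
    if not isinstance(info, dict):
        return False
    return info.get("cluster_uuid", "_na_") != "_na_"


if __name__ == "__main__":
    sample = '{"name": "node-1", "cluster_name": "escluster1", "cluster_uuid": "_na_"}'
    print("cluster formed:", cluster_formed(sample))
```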

To change the user the service runs as, edit the elasticsearch systemd unit file

vim /usr/lib/systemd/system/elasticsearch.service

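elasticsearch-head client:

1. Install the elasticsearch-head extension in Chrome

The extension connects directly to the ES server

2. Install elasticsearch-head on a server with Docker

Set up Docker first, then start the container with the command below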

docker run -d -p 9100:9100 mobz/elasticsearch-head:5

Once the container is running, open http://172.31.3.130:9100 in a browser

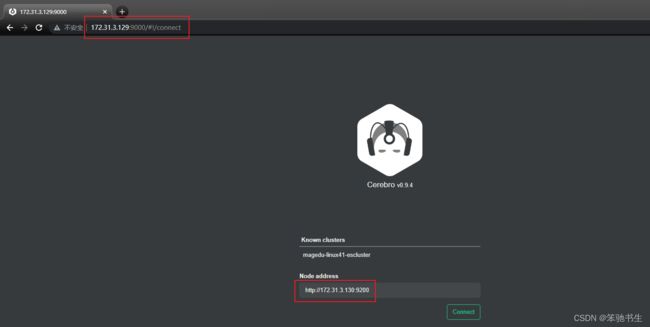

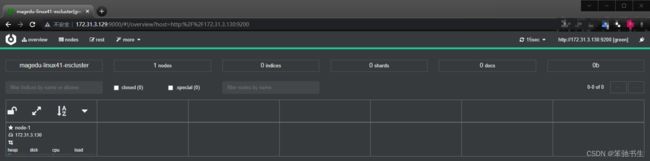

cerebro client:

https://github.com/lmenezes/cerebro

Download:

https://github.do/https://github.com/lmenezes/cerebro/releases/download/v0.9.4/cerebro-0.9.4.tgz

Installation:

A JDK is required: cerebro needs Java 11 or newer to run.

JDK download page:

Java Downloads | Oracle

JDK 11 download page:

Java Downloads | Oracle

Extract: cerebro-0.9.4.tgz

Configuration file: conf/application.conf

root@usera:~# apt install openjdk-11-jdk -y

root@usera:/apps/cerebro-0.9.4# java -version

openjdk version "11.0.14" 2022-01-18

OpenJDK Runtime Environment (build 11.0.14+9-Ubuntu-0ubuntu2.20.04)

OpenJDK 64-Bit Server VM (build 11.0.14+9-Ubuntu-0ubuntu2.20.04, mixed mode, sharing)

root@usera:~# mkdir /apps

root@usera:~# cd /apps/

root@usera:/apps# ll

total 55912

drwxr-xr-x 2 root root 4096 Mar 17 08:09 ./

drwxr-xr-x 21 root root 4096 Mar 17 08:08 ../

-rw-r--r-- 1 root root 57244792 Mar 17 08:09 cerebro-0.9.4.tgz

root@usera:/apps# tar zxf cerebro-0.9.4.tgz

root@usera:/apps# ll

total 55916

drwxr-xr-x 3 root root 4096 Mar 17 08:09 ./

drwxr-xr-x 21 root root 4096 Mar 17 08:08 ../

drwxr-xr-x 5 501 staff 4096 Apr 10 2021 cerebro-0.9.4/

-rw-r--r-- 1 root root 57244792 Mar 17 08:09 cerebro-0.9.4.tgz

root@usera:/apps# cd cerebro-0.9.4/

root@usera:/apps/cerebro-0.9.4# vim conf/application.conf

hosts = [

{

host = "http://172.31.3.130:9200"

name = "escluster1"

}

]

## Start the service

root@usera:/apps/cerebro-0.9.4# ./bin/cerebro

Open in a browser:

http://172.31.3.129:9000

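Monitoring ES cluster health:

Use Zabbix to monitor ES cluster health

Custom script

Use Python to monitor ES cluster health

python3 monitor_es.py

If the monitored ES servers form a cluster, the monitoring target can be set to a keepalived virtual IP or an nginx load balancer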
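
The contents of monitor_es.py are not shown here. As a rough idea of what such a script might look like, the sketch below queries the `_cluster/health` API and maps the status to a number that a Zabbix item could consume. The numeric mapping, the function names, and the command-line argument are all my assumptions, not the original script.

```python
import json
import sys
import urllib.request

# Assumed mapping for a Zabbix numeric item; 3 means unknown or unreachable.
STATUS_CODES = {"green": 0, "yellow": 1, "red": 2}


def parse_health(body: str) -> int:
    """Map the status field of a _cluster/health response to a number."""
    data = json.loads(body)
    if not isinstance(data, dict):
        return 3
    return STATUS_CODES.get(data.get("status"), 3)


def fetch_health(base_url: str, timeout: float = 5.0) -> str:
    """Fetch the raw _cluster/health JSON from base_url."""
    url = f"{base_url.rstrip('/')}/_cluster/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def check(base_url: str) -> int:
    """Return the numeric status, or 3 if the cluster cannot be queried."""
    try:
        return parse_health(fetch_health(base_url))
    except (OSError, ValueError):
        return 3


# Usage: python3 monitor_es.py http://172.31.3.130:9200
if __name__ == "__main__" and len(sys.argv) > 1:
    print(check(sys.argv[1]))
```

Passing the address of the keepalived virtual IP or the nginx load balancer as the argument keeps the check working when a single node is down.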