Running BART code

Problems I ran into when running BART from Hugging Face

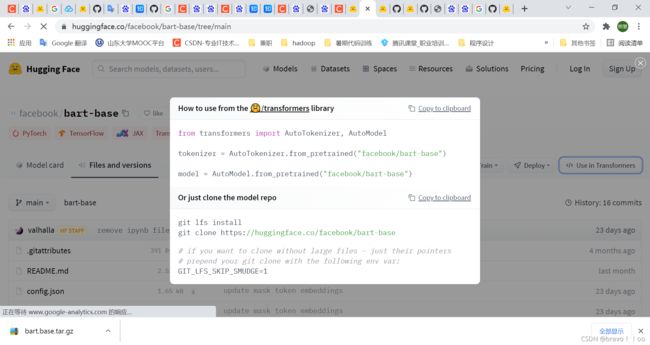

1. Cannot connect to Hugging Face

Solution 1:

Solution 2:

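Download the model files from the official site, upload them to the server, and change the model-loading path in the code to the local path.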

For the download process, see this blog post.
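A minimal sketch of loading from a local directory, assuming the code uses the transformers classes `BartTokenizer` and `BartForConditionalGeneration` (the notes do not say which BART class is used). `from_pretrained` accepts a local directory path in place of a Hub model name. The helper `load_local_bart` is hypothetical, and the check up front only exists because a missing directory would otherwise be treated as a Hub model name, which brings back the connection error.

```python
import os


def load_local_bart(model_dir: str):
    """Load a BART tokenizer and model from a local directory instead of the Hub.

    Assumes model_dir holds the files downloaded from the model page
    (config.json, the weight file, and the tokenizer's vocab.json / merges.txt).
    """
    if not os.path.isdir(model_dir):
        # Fail early: a missing directory would otherwise be looked up on the Hub.
        raise FileNotFoundError(f"model directory not found: {model_dir}")
    # Imported lazily so this helper can be defined even where transformers is absent.
    from transformers import BartForConditionalGeneration, BartTokenizer

    tokenizer = BartTokenizer.from_pretrained(model_dir)
    model = BartForConditionalGeneration.from_pretrained(model_dir)
    return tokenizer, model
```

Usage, with a hypothetical directory name: `tokenizer, model = load_local_bart("./bart-base")`.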

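I'm not sure why, but it ended up working with the path directly. That part doesn't matter: after some tinkering I can't quite reconstruct, it worked, the model loaded successfully, and then a different error appeared: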

2. NVIDIA driver version too old

RuntimeError: The NVIDIA driver on your system is too old (found version 10010). Please update your GPU driver by downloading and installing a new version from the URL: http://www.nvidia.com/Download/index.aspx Alternatively, go to: https://pytorch.org to install a PyTorch version that has been compiled with your version of the CUDA driver.

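It seems you can't just install the latest PyTorch, because it (probably) doesn't match the CUDA version, so I need to look up how to install a specific PyTorch version. Also: yesterday I seem to have accidentally wiped PyTorch from another environment that already had CUDA set up. Once I find out how to install PyTorch 1.1.0, remember to go back and reinstall it in that environment.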
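The `10010` in the error is CUDA's integer version encoding (1000 × major + 10 × minor), so this driver supports CUDA up to 10.1. A PyTorch build compiled against a newer CUDA would raise exactly this error. The sketch below shows that comparison. The helper names are made up, and the check is deliberately simplified: it ignores the minor-version compatibility that CUDA 11 introduced. In practice you would pass `torch.version.cuda` as `build_cuda`.

```python
def decode_cuda_version(v: int) -> tuple:
    """CUDA encodes versions as 1000*major + 10*minor, e.g. 10010 -> (10, 1)."""
    return v // 1000, (v % 1000) // 10


def driver_supports(build_cuda: str, driver_version: int) -> bool:
    """Rough check: is the CUDA version PyTorch was built with (e.g. "10.2")
    no newer than the version the driver reports?

    Simplification: ignores CUDA 11+ minor-version compatibility.
    """
    major, minor = (int(x) for x in build_cuda.split(".")[:2])
    return (major, minor) <= decode_cuda_version(driver_version)


print(decode_cuda_version(10010))      # (10, 1)
print(driver_supports("10.2", 10010))  # False: build is newer than the driver allows
print(driver_supports("10.1", 10010))  # True
```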

The PyTorch version BART needs should be >= 1.6.0.
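To check whether an environment meets that (approximate) >= 1.6.0 requirement, compare version tuples, not strings: as strings, "1.10.0" sorts below "1.6.0". This is a minimal sketch with hypothetical helper names. In practice you would pass `torch.__version__`, which may carry a local suffix such as `+cu101`.

```python
import re


def parse_version(v: str) -> tuple:
    """'1.6.0+cu101' -> (1, 6, 0): drop the local '+...' suffix and keep
    the leading digits of each dotted part."""
    release = v.split("+")[0]
    return tuple(int(re.match(r"\d+", part).group()) for part in release.split("."))


def meets_minimum(installed: str, minimum: str = "1.6.0") -> bool:
    return parse_version(installed) >= parse_version(minimum)


print(meets_minimum("1.1.0"))        # False
print(meets_minimum("1.6.0+cu101"))  # True
print(meets_minimum("1.10.0"))       # True (a plain string comparison would say False)
```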

The install commands for matching CUDA, PyTorch, and torchvision versions are listed on this page:

https://pytorch.org/get-started/previous-versions/#conda-3

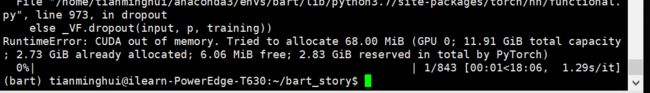

3. Not enough GPU memory

Check GPU usage with `nvidia-smi`, then pick a card in the code:

os.environ['CUDA_VISIBLE_DEVICES']='3'
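The two steps above can be automated roughly as follows. This is a sketch that assumes `nvidia-smi`'s CSV query mode (`--query-gpu=index,memory.free --format=csv,noheader,nounits`), and the helper names are hypothetical. `CUDA_VISIBLE_DEVICES` only takes effect if it is set before PyTorch initializes CUDA. Inside the process, the chosen card is renumbered as `cuda:0`, which is likely why the out-of-memory message further down reports "GPU 0". Free memory can change between the query and the moment training starts, so this only picks the freest card at query time.

```python
import os
import subprocess


def pick_freest_gpu(smi_csv: str) -> str:
    """Return the index of the GPU with the most free memory.

    smi_csv is the output of
        nvidia-smi --query-gpu=index,memory.free --format=csv,noheader,nounits
    i.e. one "index, free_MiB" line per card.
    """
    best_idx, best_free = None, -1
    for line in smi_csv.strip().splitlines():
        idx, free = (field.strip() for field in line.split(","))
        if int(free) > best_free:
            best_idx, best_free = idx, int(free)
    return best_idx


def select_freest_gpu() -> str:
    """Query nvidia-smi and expose only the freest card to this process.

    Must run before PyTorch initializes CUDA (safest: before `import torch`).
    """
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,memory.free",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    idx = pick_freest_gpu(out)
    os.environ["CUDA_VISIBLE_DEVICES"] = idx
    return idx


# Hypothetical sample output for a 4-card machine (free memory in MiB).
print(pick_freest_gpu("0, 120\n1, 9000\n2, 300\n3, 11500"))  # 3
```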

It still failed, though. Presumably none of the cards had enough free memory.

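This code already contains the statements that disable gradient computation, so there is nothing more to gain by adding them again. Blog posts on adding such gradient-disabling statements: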

https://www.cnblogs.com/dyc99/p/12664126.html

https://blog.csdn.net/weixin_43760844/article/details/113462431

I'll try again tomorrow morning once nobody is using the GPUs.

Got a free card, but it still fails. I'll read the paper for now.

load reference!

0%| | 0/843 [00:00<?, ?it/s]/home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/transformers/tokenization_utils_base.py:2217: FutureWarning: The `pad_to_max_length` argument is deprecated and will be removed in a future version, use `padding=True` or `padding='longest'` to pad to the longest sequence in the batch, or use `padding='max_length'` to pad to a max length. In this case, you can give a specific length with `max_length` (e.g. `max_length=45`) or leave max_length to None to pad to the maximal input size of the model (e.g. 512 for Bert).

FutureWarning,


epoch 1, step 0, loss 5.0370: 0%| | 1/843 [00:02<05:19, 2.63it/s]Traceback (most recent call last):

File "main.py", line 217, in <module>

train_one_epoch(config, train_dataloader, model, optimizer, criterion, epoch + 1)

File "main.py", line 85, in train_one_epoch

loss.backward()

File "/home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/tensor.py", line 185, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "/home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/autograd/__init__.py", line 127, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA out of memory. Tried to allocate 1.08 GiB (GPU 0; 11.91 GiB total capacity; 10.66 GiB already allocated; 377.06 MiB free; 10.95 GiB reserved in total by PyTorch)

Exception raised from malloc at /opt/conda/conda-bld/pytorch_1595629403081/work/c10/cuda/CUDACachingAllocator.cpp:272 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x4d (0x7f44c686d77d in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #1: + 0x20626 (0x7f44c6ac5626 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #2: + 0x214f4 (0x7f44c6ac64f4 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #3: + 0x21b81 (0x7f44c6ac6b81 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #4: at::native::empty_cuda(c10::ArrayRef, c10::TensorOptions const&, c10::optional) + 0x249 (0x7f44c99d5c79 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #5: + 0xd25dc9 (0x7f44c79f8dc9 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #6: + 0xd3fbf7 (0x7f44c7a12bf7 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #7: + 0xe450dd (0x7f44f9b2c0dd in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #8: + 0xe453f7 (0x7f44f9b2c3f7 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #9: at::empty(c10::ArrayRef, c10::TensorOptions const&, c10::optional) + 0xfa (0x7f44f9c36e7a in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #10: at::native::empty_like(at::Tensor const&, c10::TensorOptions const&, c10::optional) + 0x49e (0x7f44f98b509e in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #11: + 0xfe3521 (0x7f44f9cca521 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #12: + 0x101ecc3 (0x7f44f9d05cc3 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #13: at::empty_like(at::Tensor const&, c10::TensorOptions const&, c10::optional) + 0x101 (0x7f44f9c19f91 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #14: at::Tensor at::native::(anonymous namespace)::host_softmax_backward(at::Tensor const&, at::Tensor const&, long, bool) + 0x16c (0x7f44c9123eac in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #15: at::native::log_softmax_backward_cuda(at::Tensor const&, at::Tensor const&, long, at::Tensor const&) + 0x8d (0x7f44c90ff17d in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #16: + 0xd13a40 (0x7f44c79e6a40 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #17: + 0xe6f636 (0x7f44f9b56636 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #18: at::_log_softmax_backward_data(at::Tensor const&, at::Tensor const&, long, at::Tensor const&) + 0x119 (0x7f44f9be4aa9 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #19: + 0x2c217ff (0x7f44fb9087ff in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #20: + 0xe6f636 (0x7f44f9b56636 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #21: at::_log_softmax_backward_data(at::Tensor const&, at::Tensor const&, long, at::Tensor const&) + 0x119 (0x7f44f9be4aa9 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #22: torch::autograd::generated::LogSoftmaxBackward::apply(std::vector >&&) + 0x1d7 (0x7f44fb7844b7 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #23: + 0x30d1017 (0x7f44fbdb8017 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #24: torch::autograd::Engine::evaluate_function(std::shared_ptr&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr const&) + 0x1400 (0x7f44fbdb3860 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #25: torch::autograd::Engine::thread_main(std::shared_ptr const&) + 0x451 (0x7f44fbdb4401 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #26: torch::autograd::Engine::thread_init(int, std::shared_ptr const&, bool) + 0x89 (0x7f44fbdac579 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #27: torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr const&, bool) + 0x4a (0x7f45000db99a in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #28: + 0xc9039 (0x7f4502c0e039 in /home/tianminghui/anaconda3/envs/bart/lib/python3.7/site-packages/torch/lib/../../../.././libstdc++.so.6)

frame #29: + 0x76ba (0x7f4525bb76ba in /lib/x86_64-linux-gnu/libpthread.so.0)

frame #30: clone + 0x6d (0x7f45258ed41d in /lib/x86_64-linux-gnu/libc.so.6)

epoch 1, step 0, loss 5.0370: 0%| | 1/843 [00:03<48:19, 3.44s/it
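You can read the numbers straight off the out-of-memory line. Below is a small sketch that parses that message and computes the shortfall. The helper names are made up. One assumption is optimistic: it treats free memory plus reserved-but-unallocated cache as reusable, even though the caching allocator needs a single large-enough block. The "outside_pytorch" figure is presumably taken up by the CUDA context and any other processes on the card.

```python
import re

SIZE = r"([\d.]+) (KiB|MiB|GiB)"
TO_GIB = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0}


def _gib(msg: str, pattern: str) -> float:
    """Find the first size matching pattern and convert it to GiB."""
    m = re.search(pattern, msg)
    if m is None:
        raise ValueError(f"pattern not found: {pattern}")
    return float(m.group(1)) * TO_GIB[m.group(2)]


def oom_summary(msg: str) -> dict:
    """Break a PyTorch 'CUDA out of memory' message down into GiB figures."""
    requested = _gib(msg, r"Tried to allocate " + SIZE)
    total = _gib(msg, SIZE + r" total capacity")
    allocated = _gib(msg, SIZE + r" already allocated")
    free = _gib(msg, SIZE + r" free")
    reserved = _gib(msg, SIZE + r" reserved in total by PyTorch")
    cached_unused = reserved - allocated  # held in PyTorch's cache, not in use
    headroom = free + cached_unused       # optimistic upper bound on usable memory
    return {
        "requested": requested,
        "headroom": headroom,
        "shortfall": requested - headroom,
        "outside_pytorch": total - reserved - free,
    }


MSG = ("CUDA out of memory. Tried to allocate 1.08 GiB (GPU 0; 11.91 GiB total capacity; "
       "10.66 GiB already allocated; 377.06 MiB free; 10.95 GiB reserved in total by PyTorch)")

for key, value in oom_summary(MSG).items():
    print(f"{key}: {value:.2f} GiB")
# requested: 1.08, headroom: 0.66, shortfall: 0.42, outside_pytorch: 0.59
```

Read this way, the 1.08 GiB request made in the `log_softmax` backward pass (see the frames above) exceeded at best about 0.66 GiB of reusable memory. The FutureWarning in the same log suggests one possible lever. If the code pads every batch to a fixed maximum length, `padding='longest'` pads only to the longest sequence in each batch and may shrink that buffer. A smaller batch size is the other obvious knob.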