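Integrating Spring Boot with Kafka (Docker)

Integrating Spring Boot with Kafka

- Kafka in one article
- Deploying a Kafka cluster with Docker
  - Prerequisites
  - Pulling the images
  - Setting up the Kafka cluster
    - Writing docker-compose.yml
    - Starting the Kafka cluster
    - Checking that it is running
- Building a Spring Boot project that uses Kafka
  - Project overview
  - Creating the producer module `a-producter`
    - Create the module as an ordinary Spring project and pick the main Maven dependencies
    - Writing the producer configuration yml
    - Writing the config class
    - Writing the controller that sends messages
    - Package scanning in the main class
    - Testing that the producer sends messages
  - Creating the consumer module `b-customer`
    - Create the project the same way as the producer
    - Writing the consumer class that listens for messages
    - Package scanning
    - Starting the projects and testing
- Wrapping up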

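Kafka in one article

For Kafka concepts and theory, see the article 大白话 kafka 架构原理 (Kafka architecture explained in plain language).

Deploying a Kafka cluster with Docker

Prerequisites

Install docker and docker-compose.

Pulling the images

docker pull wurstmeister/kafka

docker pull zookeeper

Setting up the Kafka cluster

Writing docker-compose.yml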

version: '3'
services:
  zkwt01:
    image: zookeeper
    restart: always
    container_name: zkwt01
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zkwt01:2888:3888;2181 server.2=zkwt02:2888:3888;2181 server.3=zkwt03:2888:3888;2181 server.4=zkwt04:2888:3888:observer;2181
    networks:
      - kafka-network
  zkwt02:
    image: zookeeper
    restart: always
    container_name: zkwt02
    ports:
      - "2182:2181"
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zkwt01:2888:3888;2181 server.2=zkwt02:2888:3888;2181 server.3=zkwt03:2888:3888;2181 server.4=zkwt04:2888:3888:observer;2181
    networks:
      - kafka-network
  zkwt03:
    image: zookeeper
    restart: always
    container_name: zkwt03
    ports:
      - "2183:2181"
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zkwt01:2888:3888;2181 server.2=zkwt02:2888:3888;2181 server.3=zkwt03:2888:3888;2181 server.4=zkwt04:2888:3888:observer;2181
    networks:
      - kafka-network
  zkwt04:
    image: zookeeper
    restart: always
    container_name: zkwt04
    ports:
      - "2184:2181"
    environment:
      ZOO_MY_ID: 4
      PEER_TYPE: observer
      ZOO_SERVERS: server.1=zkwt01:2888:3888;2181 server.2=zkwt02:2888:3888;2181 server.3=zkwt03:2888:3888;2181 server.4=zkwt04:2888:3888:observer;2181
    networks:
      - kafka-network
  kafkawt01:
    image: wurstmeister/kafka
    restart: always
    container_name: kafkawt01
    depends_on:
      - zkwt01
      - zkwt02
      - zkwt03
      - zkwt04
    networks:
      - kafka-network
    ports:
      - "9092:9092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: zkwt01:2181,zkwt02:2181,zkwt03:2181,zkwt04:2181
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.205:9092 # address and port exposed on the host
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
  kafkawt02:
    image: wurstmeister/kafka
    restart: always
    container_name: kafkawt02
    depends_on:
      - zkwt01
      - zkwt02
      - zkwt03
      - zkwt04
    networks:
      - kafka-network
    ports:
      - "9093:9092"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: zkwt01:2181,zkwt02:2181,zkwt03:2181,zkwt04:2181
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.205:9093 # address and port exposed on the host
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
  kafkawt03:
    image: wurstmeister/kafka
    restart: always
    container_name: kafkawt03
    depends_on:
      - zkwt01
      - zkwt02
      - zkwt03
      - zkwt04
    networks:
      - kafka-network
    ports:
      - "9094:9092"
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: zkwt01:2181,zkwt02:2181,zkwt03:2181,zkwt04:2181
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.205:9094 # address and port exposed on the host
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
networks:
  kafka-network:
    driver: bridge

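- This file configures 4 ZooKeeper nodes (the fourth is an observer) and 3 Kafka brokers.

- image: the full name of the pulled image is docker.io/wurstmeister/kafka; the short form wurstmeister/kafka used above resolves to it.

- ZOO_SERVERS: ";2181" must be appended after port 3888 for every server. Otherwise ZooKeeper does not serve client connections and Kafka cannot connect to it.

- KAFKA_ADVERTISED_LISTENERS: the host address and port that clients use to reach the broker. If the host's LAN IP is 192.168.2.177, for example, change it to PLAINTEXT://192.168.2.177:9092. Adjust this to your environment, and use the same IP in the application's bootstrap-servers setting.

- For a single-node setup, see: springboot集成kafka(从部署到实践)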
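The ZOO_SERVERS value is identical on all four nodes and easy to mistype by hand. As a rough illustration of its layout, the sketch below rebuilds the string from host names. The class and method names are made up for this example; the ports 2888, 3888 and 2181 are taken from the compose file above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the original project.
final class ZooServersSketch {

    /**
     * Builds a ZOO_SERVERS value. Voting members come first, observers last,
     * and servers are numbered from 1 in that order.
     */
    static String build(List<String> participants, List<String> observers) {
        List<String> entries = new ArrayList<>();
        int id = 1;
        for (String host : participants) {
            // 2888 = quorum port, 3888 = election port, ";2181" = client port
            entries.add("server." + id++ + "=" + host + ":2888:3888;2181");
        }
        for (String host : observers) {
            // observers carry the ":observer" role before the client port
            entries.add("server." + id++ + "=" + host + ":2888:3888:observer;2181");
        }
        return String.join(" ", entries);
    }

    public static void main(String[] args) {
        System.out.println(build(List.of("zkwt01", "zkwt02", "zkwt03"), List.of("zkwt04")));
    }
}
```

Running `main` prints the same single-line value that appears four times in docker-compose.yml.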

启动kafka集群

在docker-compose.yml所有文件下使用命令窗口运行

docker-compose -f docker-compose.yml up -d

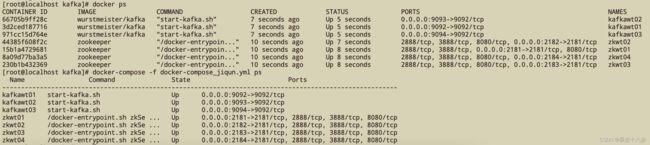

查看是否成功

查看所有

docker ps

只查看集群

docker-compose -f docker-compose.yml ps
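Besides listing containers, it can help to confirm that the published broker ports actually accept TCP connections from the machine that will run the Spring Boot applications. The following is a minimal sketch using only the JDK; the class name is invented here, and the host and ports in `main` are assumptions copied from the compose file. A successful connect only shows that the port is open, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper, not part of the original project.
final class BrokerPortProbe {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // refused, unreachable, or timed out
            return false;
        }
    }

    public static void main(String[] args) {
        String host = "192.168.0.205"; // assumption: the host IP used in docker-compose.yml
        for (int port : new int[] {9092, 9093, 9094}) {
            System.out.println(host + ":" + port + " open = " + isOpen(host, port, 1000));
        }
    }
}
```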

搭建springboot项目简单使用kafka

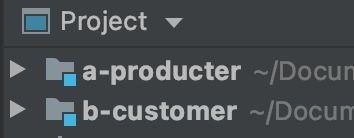

项目总揽

创建一个空项目,并排包含2个模块,分别是生产者模块与消费者模块,项目结构如下

创建生产者模块a-producter

按照普通spring项目创建模块,模块主要包含maven是

- messaging->spring for apache kafka

- web->spring web

编写消费者配置yml

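#### Kafka producer configuration: begin ####
spring:
  kafka:
    # Fill in the actual IP of your host here
    bootstrap-servers: 192.168.2.177:9092,192.168.2.177:9093,192.168.2.177:9094
    producer:
      # Number of retries when a write fails. When the leader fails, a replica takes over as leader,
      # and writes may fail in the meantime. With retries = 0 the producer does not resend.
      # With retries > 0 it resends, by which time the replica has become leader, so the message is not lost.
      retries: 0
      # Maximum size in bytes of one batch; the producer accumulates records per partition and sends them together
      batch-size: 16384
      # Total memory in bytes the producer may use to buffer records that are waiting to be sent
      buffer-memory: 33554432
      # Number of acknowledgments the producer requires the leader to have received before a request
      # is considered complete. This controls the durability of sent records. Possible values:
      # acks = 0    The producer does not wait for any acknowledgment from the server. The record is added to the
      #             socket buffer immediately and considered sent. There is no guarantee that the server received it,
      #             the retries setting has no effect (the client generally will not know about failures),
      #             and the offset returned for each record is always -1.
      # acks = 1    The leader writes the record to its local log and responds without waiting for full acknowledgment
      #             from all followers. If the leader fails right after acknowledging the record but before the
      #             followers have replicated it, the record is lost.
      # acks = all  The leader waits for the full set of in-sync replicas to acknowledge the record. The record is not
      #             lost as long as at least one in-sync replica remains alive. This is the strongest guarantee
      #             and is equivalent to acks = -1.
      # Allowed values: all, -1, 0, 1
      acks: 1
      # Serializers for the message key and the message value
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
#### Kafka producer configuration: end ####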
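Spring Boot translates these yml entries into the Kafka client's own property names. As a sketch of that mapping, the snippet below builds the equivalent settings with plain `java.util.Properties`. The class and method names are made up for illustration; the property keys are the Kafka producer configuration names, and the values are the ones from the yml above.

```java
import java.util.Properties;

// Hypothetical helper showing the Kafka-native names behind the yml keys.
final class ProducerPropsSketch {

    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers); // spring.kafka.bootstrap-servers
        props.setProperty("retries", "0");                        // producer.retries
        props.setProperty("batch.size", "16384");                 // producer.batch-size (bytes)
        props.setProperty("buffer.memory", "33554432");           // producer.buffer-memory (bytes)
        props.setProperty("acks", "1");                           // producer.acks
        props.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        Properties props = producerProps(
                "192.168.2.177:9092,192.168.2.177:9093,192.168.2.177:9094");
        props.forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

A `Properties` object like this is what one would hand to the Kafka client directly when not using Spring Boot's auto-configuration.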

Writing the config class

package com.example.aproducter.config;

import org.apache.kafka.clients.admin.NewTopic;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class KafkaInitialConfiguration {

    // Creates a topic named wtopic04 with 8 partitions and a replication factor of 2
    @Bean
    public NewTopic initialTopic() {
        System.out.println("begin to init initialTopic........................");
        return new NewTopic("wtopic04", 8, (short) 2);
    }

    // To change the partition count, change the configured value and restart the project.
    // Changing the partition count does not lose data, but the count can only grow, never shrink.
    // Note: this bean declares the same topic as initialTopic() and illustrates the updated value;
    // a real project would normally keep a single declaration per topic.
    @Bean
    public NewTopic updateTopic() {
        System.out.println("begin to init updateTopic........................");
        return new NewTopic("wtopic04", 10, (short) 2);
    }
}
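The two constraints behind this configuration can be stated as a small check: the partition count may only stay the same or grow, and the replication factor cannot exceed the number of brokers (3 in the compose file above). The sketch below is illustrative only; `TopicSpecCheck` and `verdict` are hypothetical names and are not part of Spring or Kafka.

```java
// Hypothetical pre-flight check for a topic change; not a Kafka or Spring API.
final class TopicSpecCheck {

    /** Returns "ok" or a message starting with "rejected" that explains the problem. */
    static String verdict(int currentPartitions, int requestedPartitions,
                          int replicationFactor, int brokerCount) {
        if (requestedPartitions < currentPartitions) {
            return "rejected: partitions can only increase (" + currentPartitions
                    + " -> " + requestedPartitions + ")";
        }
        if (replicationFactor > brokerCount) {
            return "rejected: replication factor " + replicationFactor
                    + " exceeds broker count " + brokerCount;
        }
        return "ok";
    }

    public static void main(String[] args) {
        System.out.println(verdict(8, 10, 2, 3)); // the change made in updateTopic()
        System.out.println(verdict(10, 8, 2, 3)); // shrinking is not allowed
        System.out.println(verdict(8, 10, 4, 3)); // more replicas than brokers
    }
}
```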

Writing the controller that sends messages

package com.example.aproducter.controller;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Controller;
import org.springframework.util.concurrent.ListenableFuture;
import org.springframework.util.concurrent.ListenableFutureCallback;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.ResponseBody;

/**
 * Producer controller
 */
@Controller
public class ProducterController {

    @Autowired
    private KafkaTemplate<String, Object> kafkaTemplate;

    // GET /send?message=... publishes the message to the topic ABCD
    @GetMapping("/send")
    @ResponseBody
    public boolean send(@RequestParam String message) throws Exception {
        // send() is asynchronous; with Spring for Apache Kafka 2.x it returns a ListenableFuture
        ListenableFuture<SendResult<String, Object>> future = kafkaTemplate.send("ABCD", message);
        future.addCallback(new ListenableFutureCallback<SendResult<String, Object>>() {
            @Override
            public void onFailure(Throwable throwable) {
                System.err.println("ABCD - producer failed to send message: " + throwable.getMessage());
            }

            @Override
            public void onSuccess(SendResult<String, Object> stringObjectSendResult) {
                System.out.println("ABCD - producer sent message: " + stringObjectSendResult.toString());
            }
        });
        // Returns immediately; the actual outcome is reported by the callback above
        return true;
    }
}
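In Spring for Apache Kafka 3.x, `KafkaTemplate.send` returns a `CompletableFuture` rather than a `ListenableFuture`, so the callback is attached differently. The following JDK-only sketch shows the same success/failure reporting on a `CompletableFuture`. It uses a plain `String` as a stand-in for `SendResult`, and the class and method names are invented for this example, so treat it as an outline of the pattern rather than the project's code.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical, JDK-only illustration of the callback pattern.
final class SendCallbackSketch {

    /** Turns the outcome of an asynchronous send into a log line. */
    static CompletableFuture<String> report(String topic, CompletableFuture<String> sendFuture) {
        return sendFuture.handle((result, error) ->
                error != null
                        ? topic + " - producer failed to send message: " + error.getMessage()
                        : topic + " - producer sent message: " + result);
    }

    public static void main(String[] args) {
        // A send that succeeded
        CompletableFuture<String> ok = CompletableFuture.completedFuture("hello");
        System.out.println(report("ABCD", ok).join());

        // A send that failed
        CompletableFuture<String> failed = new CompletableFuture<>();
        failed.completeExceptionally(new IllegalStateException("broker down"));
        System.out.println(report("ABCD", failed).join());
    }
}
```

As in the controller above, the caller is not blocked; the outcome is only known when the future completes.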

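Package scanning in the main class

package com.example.aproducter;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.ComponentScan;

// Scan the controller and config packages explicitly
@SpringBootApplication
@ComponentScan("com.example.aproducter.controller")
@ComponentScan("com.example.aproducter.config")
public class AProducterApplication {

    public static void main(String[] args) {
        SpringApplication.run(AProducterApplication.class, args);
    }
}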

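Testing that the producer sends messages

- Once a message has been sent successfully, you can enter a Kafka container to inspect it.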
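To trigger a send, call the controller's `/send` endpoint with a `message` query parameter. The sketch below only builds a correctly URL-encoded request address. The base URL `http://localhost:8080` assumes Spring Boot's default port and a locally running producer, and the class name is hypothetical.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for building the test URL.
final class SendUrlSketch {

    /** Builds the URL for the producer's /send endpoint, encoding the message. */
    static String sendUrl(String baseUrl, String message) {
        return baseUrl + "/send?message=" + URLEncoder.encode(message, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Assumption: the producer module runs locally on the default port 8080
        System.out.println(sendUrl("http://localhost:8080", "hello kafka"));
    }
}
```

Opening the printed address in a browser should return `true` from the controller while the callback logs the send result on the producer's console.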

Creating the consumer module b-customer

Create the project the same way as the producer.

Writing the consumer class that listens for messages

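package com.example.bcustomer.controller;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

/**
 * Consumer that listens for messages on the topic ABCD
 */
@Component
public class ConsumerController {

    // A consumer group id must be configured for this listener, for example through
    // spring.kafka.consumer.group-id in the consumer's yml or the groupId attribute here
    @KafkaListener(topics = "ABCD")
    public void onMessage(ConsumerRecord<?, ?> record) {
        // Print the record after consuming it
        System.out.println("Consumed: " + record.topic() + "-" + record.partition() + "-" + record.value());
    }
}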
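The consumer module's own configuration is not shown here. As a rough guide to what it has to provide, the sketch below lists the Kafka-native consumer settings with plain `java.util.Properties`. The group id `b-customer-group` and the `earliest` offset reset are assumptions chosen for illustration, as are the class and method names; the property keys are the Kafka consumer configuration names.

```java
import java.util.Properties;

// Hypothetical helper listing the settings a consumer needs.
final class ConsumerPropsSketch {

    static Properties consumerProps(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Consumers sharing a group id split the topic's partitions among themselves
        props.setProperty("group.id", groupId);
        // Assumption: start from the oldest record when the group has no committed offset
        props.setProperty("auto.offset.reset", "earliest");
        // Must mirror the producer's StringSerializer settings
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }

    public static void main(String[] args) {
        Properties props = consumerProps(
                "192.168.2.177:9092,192.168.2.177:9093,192.168.2.177:9094",
                "b-customer-group"); // hypothetical group name
        props.forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

In the Spring Boot yml these correspond to entries under `spring.kafka.consumer`, such as `group-id` and `auto-offset-reset`.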

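Package scanning

package com.example.bcustomer;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.ComponentScan;

// Scan the package that contains the Kafka listener
@SpringBootApplication
@ComponentScan("com.example.bcustomer.controller")
public class BCustomerApplication {

    public static void main(String[] args) {
        SpringApplication.run(BCustomerApplication.class, args);
    }
}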

Starting the projects and testing

Wrapping up

Reference: https://blog.csdn.net/qq_34533957/article/details/108350369

Source code: https://gitee.com/cschina/springboot-kafka