拟牛顿法

拟牛顿法

文章目录

- 拟牛顿法

-

- 差分近似微分的思想

- 秩一近似(SR1)

-

- 秩1近似的迭代流程

- SR1算法的优缺点

- 秩2算法(DFP)

-

- DFP算法的性质

- 优缺点:

- BFGS算法

-

- Sherman-Morrison Formula公式

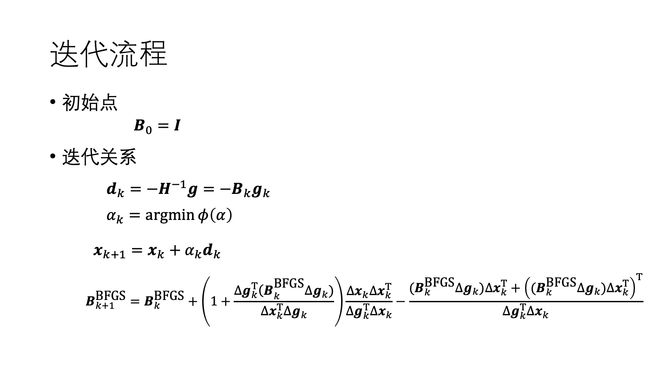

- 迭代过程

- BFGS的性质

- 优缺点

- 代码示例

-

- 代码总览

- 确定步长算法

- 迭代B算法(三种拟牛顿法)

- 检查输入

- 测试用例

- 声明

- 参考

拟牛顿法是除共轭方向法外,解决非二次型求极小值的另一种有效方法。

差分近似微分的思想

我们在二次型牛顿法中,为了不计算二阶导,减少计算量,我们采用了差分近似微分的思想,那么在多次方函数中,是否也可以推广这个思想呢?

f ′ ′ ( x k ) = f ′ ( x k ) − f ′ ( x k − 1 ) x k − x k − 1 ↓ H k + 1 ≈ F k + 1 = Δ g k Δ x k f^{''}(x_k)= \frac{f^{'}(x_{k})-f^{'}(x_{k-1})}{x_k-x_{k-1}}\\ \downarrow\\ H_{k+1}\approx F_{k+1} = \frac{\Delta g_k}{\Delta x_k} f′′(xk)=xk−xk−1f′(xk)−f′(xk−1)↓Hk+1≈Fk+1=ΔxkΔgk

由于 Δ g k 和 Δ x k \Delta g_k和\Delta x_k Δgk和Δxk都是向量,但是向量之间是没有除法的,所以做以下转换

F k + 1 Δ x k = Δ g k F_{k+1}\Delta x_k = \Delta g_k\\ Fk+1Δxk=Δgk

又因为我们多维牛顿法想要得到的并不是黑塞矩阵本身,而是黑塞矩阵的逆,所以我们做如下变换

F k + 1 − 1 Δ g k = Δ x k B k + 1 = F k + 1 − 1 B k + 1 Δ g k = Δ x k . . . ( 1 ) F_{k+1}^{-1}\Delta g_k = \Delta x_k\\ B_{k+1} = F_{k+1}^{-1}\\ B_{k+1}\Delta g_k = \Delta x_k ...(1) Fk+1−1Δgk=ΔxkBk+1=Fk+1−1Bk+1Δgk=Δxk...(1)

这样子我们就得到了一个差分近似需要满足的条件,那么我们如何构建B来满足这样子的条件呢。下面介绍其中一种方法

秩一近似(SR1)

首先假设如果我们有两个向量a和b,我们将 a ∗ b T a*b^T a∗bT的形式称为外积。

那么我们是否可以采用迭代的方式来生成B矩阵呢?

B k + 1 = B k + α k z k z k T . . . ( 2 ) B_{k+1} = B_k + \alpha_kz_kz_k^T...(2) Bk+1=Bk+αkzkzkT...(2)

并且假设z向量

z = [ a 1 a 2 a 3 ] ⟶ z ∗ z T = [ a 1 2 a 1 a 2 a 1 a 3 a 2 a 1 a 2 2 a 2 a 3 a 3 a 1 a 3 a 2 a 3 2 ] z = \begin{bmatrix} a_1\\a_2\\a_3 \end{bmatrix} \longrightarrow z*z^T = \begin{bmatrix} a_1^2 & a_1a_2 &a_1a_3\\ a_2a_1 & a_2^2 & a_2a_3\\ a_3a_1 & a_3a_2 & a_3^2 \end{bmatrix} z=⎣⎡a1a2a3⎦⎤⟶z∗zT=⎣⎡a12a2a1a3a1a1a2a22a3a2a1a3a2a3a32⎦⎤

发现 z ∗ z T z*z^T z∗zT得到的矩阵是对称并且正定的,符合H矩阵的要求,所以我们可以用外积的形式来迭代得到B矩阵

现在我们的任务就是求出 z k z_k zk。

将(2)代入(1)中可得

( B k + α k z k z k T ) Δ g k = Δ x k B k ∗ Δ g k + α k z k z k T Δ g k = Δ x k . . . ( 3 ) (B_k + \alpha_kz_kz_k^T)\Delta g_k = \Delta x_k\\ B_k* \Delta g_k + \alpha_kz_kz_k^T\Delta g_k = \Delta x_k...(3) (Bk+αkzkzkT)Δgk=ΔxkBk∗Δgk+αkzkzkTΔgk=Δxk...(3)

其中 z k T Δ g k z_k^T\Delta g_k zkTΔgk是一个标量,所以可以得到

z k = 1 α K Δ x k − B k ∗ Δ g k z k T Δ g k ↓ α k z k z k T = α k ( 1 α K z k T Δ g k ) 2 ( Δ x k − B k ∗ Δ g k ) ( Δ x k − B k ∗ Δ g k ) T ( 4 ) z_k =\frac1{\alpha_K}\frac{\Delta x_k - B_k* \Delta g_k}{z_k^T\Delta g_k}\\\downarrow\\\alpha_kz_kz^T_k = \alpha_k(\frac1{\alpha_Kz_k^T\Delta g_k})^2(\Delta x_k - B_k* \Delta g_k)(\Delta x_k - B_k* \Delta g_k)^T\\ (4) zk=αK1zkTΔgkΔxk−Bk∗Δgk↓αkzkzkT=αk(αKzkTΔgk1)2(Δxk−Bk∗Δgk)(Δxk−Bk∗Δgk)T(4)

由上面的(3)等式两边左乘以 Δ g k T \Delta g^T_k ΔgkT可以继续推导

B k ∗ Δ g k + α k z k z k T Δ g k = Δ x k Δ g k T ( B k ∗ Δ g k + α k z k z k T Δ g k ) = Δ g k T Δ x k Δ g k T B k ∗ Δ g k + α k Δ g k T z k z k T Δ g k = Δ g k T Δ x k B_k* \Delta g_k + \alpha_kz_kz_k^T\Delta g_k = \Delta x_k\\ \Delta g^T_k (B_k* \Delta g_k + \alpha_kz_kz_k^T\Delta g_k) =\Delta g^T_k \Delta x_k \\ \Delta g^T_k B_k* \Delta g_k +\alpha_k \Delta g^T_kz_kz_k^T\Delta g_k = \Delta g^T_k \Delta x_k Bk∗Δgk+αkzkzkTΔgk=ΔxkΔgkT(Bk∗Δgk+αkzkzkTΔgk)=ΔgkTΔxkΔgkTBk∗Δgk+αkΔgkTzkzkTΔgk=ΔgkTΔxk

因为 Δ g k T z k 和 z k T Δ g k \Delta g^T_kz_k和z_k^T\Delta g_k ΔgkTzk和zkTΔgk都是标量,所以:

Δ g k T B k ∗ Δ g k + α k ( z k T Δ g k ) 2 = Δ g k T Δ x k α k ( z k T Δ g k ) 2 = Δ g k T Δ x k − Δ g k T B k ∗ Δ g k \Delta g^T_k B_k* \Delta g_k + \alpha_k(z^T_k\Delta g_k)^2 = \Delta g^T_k \Delta x_k\\ \alpha_k(z^T_k\Delta g_k)^2 = \Delta g^T_k \Delta x_k - \Delta g^T_k B_k* \Delta g_k ΔgkTBk∗Δgk+αk(zkTΔgk)2=ΔgkTΔxkαk(zkTΔgk)2=ΔgkTΔxk−ΔgkTBk∗Δgk

将得到的式子代入(4)中,可以得到

B k + 1 = B k + ( Δ x k − B k ∗ Δ g k ) ( Δ x k − B k ∗ Δ g k ) T Δ g k T Δ x k − Δ g k T B k ∗ Δ g k B_{k+1} =B_k + \frac{(\Delta x_k - B_k* \Delta g_k)(\Delta x_k - B_k* \Delta g_k)^T}{\Delta g^T_k \Delta x_k - \Delta g^T_k B_k* \Delta g_k} Bk+1=Bk+ΔgkTΔxk−ΔgkTBk∗Δgk(Δxk−Bk∗Δgk)(Δxk−Bk∗Δgk)T

得到的这个式子就是B最后的迭代式啦,这个式子主需要计算一些矩阵和向量相乘,以及一些向量的外积,不用求黑塞矩阵,也不用求逆,计算量并不是很大。

秩1近似的迭代流程

-

初始点:B0 = I(单位矩阵)

-

迭代关系

d k = − H − 1 g = − B k g k α k = a r g m i n Φ ( α ) ( 一 维 线 性 搜 索 ) x k + 1 = x k + α k d k B k + 1 = B k + ( Δ x k − B k ∗ Δ g k ) ( Δ x k − B k ∗ Δ g k ) T Δ g k T Δ x k − Δ g k T B k ∗ Δ g k d_k = -H^{-1}g = -B_kg_k\\ \alpha_k = argmin\Phi(\alpha)(一维线性搜索)\\ x_{k+1} = x_k + \alpha_kd_k\\ B_{k+1} =B_k + \frac{(\Delta x_k - B_k* \Delta g_k)(\Delta x_k - B_k* \Delta g_k)^T}{\Delta g^T_k \Delta x_k - \Delta g^T_k B_k* \Delta g_k} dk=−H−1g=−Bkgkαk=argminΦ(α)(一维线性搜索)xk+1=xk+αkdkBk+1=Bk+ΔgkTΔxk−ΔgkTBk∗Δgk(Δxk−Bk∗Δgk)(Δxk−Bk∗Δgk)T

SR1算法的优缺点

- 优点:可以避免大量的计算

- 缺点:

- 迭代过程B不一定都是正定的

- 除0问题:分母可能接近0

秩2算法(DFP)

针对上面秩1矩阵的缺点,对秩1矩阵进行了改进。

B k + 1 = B k + Δ x k Δ x k T Δ g k T Δ x k − ( B k Δ g K ) ( B k Δ g K ) T Δ g k T B k Δ g k B_{k+1} = B_k + \frac{\Delta x_k \Delta x_k^T}{\Delta g_k^T\Delta x_k} -\frac{(B_k\Delta g_K)(B_k\Delta g_K)^T}{\Delta g_k^TB_k\Delta g_k} Bk+1=Bk+ΔgkTΔxkΔxkΔxkT−ΔgkTBkΔgk(BkΔgK)(BkΔgK)T

DFP算法的性质

-

DFP也是一个拟牛顿算法

证明:

-

DFP也是共轭方向法,即DFP找到的方向是共轭的

优缺点:

- 优点:迭代过程保持正定

- 缺点:不能避免奇异矩阵情形,如果出现奇异矩阵,导致算法无法继续前进

BFGS算法

B k + 1 = B k + Δ x k Δ x k T Δ g k T Δ x k − ( B k Δ g K ) ( B k Δ g K ) T Δ g k T B k Δ g k B_{k+1} = B_k + \frac{\Delta x_k \Delta x_k^T}{\Delta g_k^T\Delta x_k} -\frac{(B_k\Delta g_K)(B_k\Delta g_K)^T}{\Delta g_k^TB_k\Delta g_k} Bk+1=Bk+ΔgkTΔxkΔxkΔxkT−ΔgkTBkΔgk(BkΔgK)(BkΔgK)T

根据对偶定理可得

F k + 1 = F k + Δ g k Δ g k T Δ x k T Δ g k − ( F k Δ x K ) ( F k Δ x K ) T Δ x k T F k Δ x k . . . ( 5 ) F_{k+1} = F_k + \frac{\Delta g_k \Delta g_k^T}{\Delta x_k^T\Delta g_k} -\frac{(F_k\Delta x_K)(F_k\Delta x_K)^T}{\Delta x_k^TF_k\Delta x_k}...(5) Fk+1=Fk+ΔxkTΔgkΔgkΔgkT−ΔxkTFkΔxk(FkΔxK)(FkΔxK)T...(5)

其中 F = B − 1 F = B^{-1} F=B−1

Sherman-Morrison Formula公式

这是一个

( A + u v T ) − 1 = A − 1 − ( A − 1 u ) ( v T A − 1 ) 1 + v T A − 1 u (A + uv^T)^{-1} = A^{-1} - \frac{(A^{-1}u)(v^TA^{-1})}{1+v^TA^{-1}u} (A+uvT)−1=A−1−1+vTA−1u(A−1u)(vTA−1)

将Sherman-Morrison Formula公式应用到(5)中,可以得到

迭代过程

BFGS的性质

- 也是一个拟牛顿法

- 也是共轭方向法,寻找到的方向相互共轭

优缺点

- 优点:

- 无需计算精确的黑塞矩阵

- 逆牛顿法

- 符合共轭方向法

- B保持正定

- 步长计算精度不高时仍然稳健

- 无需计算精确的黑塞矩阵

代码示例

代码总览

using LinearAlgebra

using Gadfly

## 划界法

function inexact_alpha(f,g,xk,fk,gk,d;

α0 =1,ϵ=0.1,τ =0.5,

η = 0.5,ζ=2.0)

α = α0

Φ0 = d' * gk

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

# Armijo 不太大条件

while fn > fk + ϵ*α*Φ0

α = τ * α

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

end

# Wolfe 不太小条件

while d' *gn < η * Φ0

α = ζ*α

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

end

return α,δ,xn,fn,gn

end

##割线法搜索步长

function aecant_alpha(f,g,xk,fk,gk,d;α0=1,accuracy=0.001,maxIter = 128)

# _l是当前点,k-1

# _u是下一个点,k

αl = α0

αu = α0*1.1

xl = xk .+ αl .* d

xu = xk .+ αu .* d

gl = g(xl...)

gu = g(xu...)

Φl = d' * gl

Φu = d' * gu

for i in 1:maxIter

Δ = Φu - Φl

# 防止出现分母为0的数学错误

if Δ == 0.0

println("error:出现分母为0的数学错误")

return αu,αu.*d,xu,f(xu...),gu

else

Δ = Φu * (αu - αl)/ Δ

end

if abs(Δ) <= accuracy

return αu,αu.*d,xu,f(xu...),gu

end

αl = αu

xl = xu

gl = gu

Φl = Φu

αu = αu - Δ

xu = xk .+ αu .* d

gu = g(xu...)

Φu = d' * gu

end

return αu,αu.*d,xu,f(xu...),gu

end

## B矩阵迭代函数

function SR1(B,δ,z,δg)

B = B + (δ - z)*(δ - z)' ./ (δg' * δ - δg' * (B * δg))

return B

end

function DFR(B,δ,z,δg)

B = B + (δ * δ') ./ (δg' * δ) - (B*δg)*(B*δg)' ./ (δg' * (B * δg ))

return B

end

function BFGS(B,δ,z,δg)

## 算法会出现 δ * δg接近无限大的情况,算出来的de接近无线小

## 迭代结果就会变成NaN,所以加一个判断

# a = δg' * δ

# # println(a)

# if a > 10e200

# println("-----------WARN:B迭代中出现分母接近无限大情况,已经强制停止迭代---------")

# return "error"

# end

de = 1/(δg' * δ)

# println(de)

z2 = z * δ'

B = B .+ (1 + δg' * z * de) .* δ*δ' .* de .- (z2 + z2') .* de

return B

end

function QuasiNewton(f,

g,

x0;

B0 = I,

fα = aecant_alpha,

fB = SR1,

α0 = 4.0,

ϵ = 0.001,

ϵx = ϵ,

ϵf = ϵ,

ϵg = ϵ,

convergence_rule = x -> x[3],

convergence_abs = true,

iterations = 128,

debug = false,

plotStep = true)

# 检查输入是否符合要求

try

f(x0...)

g(x0...)

catch

println("error:请输入正确的x0")

end

## 初始步

xk = x0

fk = f(xk...)

gk = g(xk...)

## 实例化初始B矩阵

n = length(xk)

B = zeros(n,n) + B0

pts = []

push!(pts,xk)

for i in 1:iterations

d = -B * gk

α,δ,xn,fn,gn = fα(f,g,xk,fk,gk,d)

if plotStep

push!(pts,xn)

end

if debug

println(i,"\tx:",xn,"\tf:",fn,"\ng:",gn,"\tα:",α,"\tδ:",δ,"\tnorm(δ):",norm(δ))

end

## 判断结束条件

conditions = [norm(δ),abs(fn-fk),norm(gn)]

denominator = [max(1,norm(xk), max(1,fk), 1)]

if !convergence_abs

conditions = conditions ./ denominator

end

if convergence_rule(conditions .< [ϵx,ϵf,ϵg])

println("---------------达到迭代结束条件,迭代结束-------")

println("number of steps:",i)

println("norm of the last step size:",norm(δ))

println("norm of the last gradient:",norm(gn))

println("最后一步的f差值:",abs(fn-fk))

return xn, fn ,gn,pts

end

##updata

xn = xk + α .* d

δg = gn - gk

z = B * δg

B = fB(B,δ,z,δg)

if B == "error"

println("number of steps:",i)

println("norm of the last step size:",norm(δ))

println("norm of the last gradient:",norm(gn))

println("最后一步的f差值:",abs(fn-fk))

return xn, fn ,gn,pts

end

# println(i,"\t",δg' * δ)

xk = xn

fk = fn

gk = gn

end

println("---------------达到最大迭代步数,迭代结束-------")

return xn, fn ,gn,pts

end

## 测试用例

# 普通2次函数

f = (x1, x2) -> 2.5*x1^2 + 0.5*x2^2 +2*x1*x2-3*x1-x2

g = (x1,x2) -> [5x1 + 2x2-3,x2+2x1-1]

x0 =[100]

## 画图的范围,记得用小数

low = -100.0

up = 100.0

## Rosenbrock函数

# f = (x1,x2) -> (1-x1)^2 + 100*(x2-x1^2)^2

# g = (x1,x2) -> [-2*(1-x1) - 400*x1*(x2-x1^2), 200*(x2-x1^2)]

# x0 =[0,0]

#

# ## 画图的范围,记得用小数

# low = -1.0

# up = 2.0

## Powell

# f = (x1,x2,x3,x4) -> (x1 + 10*x2)^2 + 5(x3-x4)^2+(x2-2*x3)^4 +10*(x1-x4)^4

# g = (x1,x2,x3,x4) -> [2(x1+10x2)+40(x1-x4)^3,

# 20(x1+10x2) + 4(x2-2x3)^3,

# 10(x3-x4) - 8(x2-2x3)^3,

# -10(x3-x4)-40(x1-x4)^3]

# x0 = [3,-1,0,1]

xn, fn ,gn,pts = QuasiNewton(f,g,

x0,

debug = false,

fB = BFGS,

fα = inexact_alpha)

# println(pts)

# 画图

n = length(pts)

rosen = layer(f,low,up,low,up,Geom.contour())

steps = layer(

## convert()函数将数据转化成了浮点型,这样子才不会报错

## 最后一点不作为起点

x = convert(Vector{Float64},[pts[i][1] for i in 1:n-1]),

y = convert(Vector{Float64},[pts[i][2] for i in 1:n-1]),

## 第一点不作为终点

xend = convert(Vector{Float64},[pts[i][1] for i in 2:n]),

yend = convert(Vector{Float64},[pts[i][2] for i in 2:n]),

Geom.point,Geom.vector(filled = true))

plot(rosen,steps,Scale.x_continuous(minvalue = low,maxvalue = up),

Scale.y_continuous(maxvalue=up,minvalue=low))

写了这么多次代码可以发现,最优化算法的代码几乎都是一样的,不同的只是搜索方向的方法不同。最优化算法存在一定的套路,固定的流程永远是:确定步长-确定搜索方向-迭代到下一点-判断是否达到结束条件-下一次循环。

确定步长算法

这里确定步长的算法依旧是划界法或者非精确搜索算法

function inexact_alpha(f,g,xk,fk,gk,d;

α0 =1,ϵ=0.1,τ =0.5,

η = 0.5,ζ=2.0)

α = α0

Φ0 = d' * gk

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

# Armijo 不太大条件

while fn > fk + ϵ*α*Φ0

α = τ * α

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

end

# Wolfe 不太小条件

while d' *gn < η * Φ0

α = ζ*α

δ = α .* d

xn = xk .+ δ

fn = f(xn...)

gn = g(xn...)

end

return α,δ,xn,fn,gn

end

##划界法搜索步长

function aecant_alpha(f,g,xk,fk,gk,d;α0=1,accuracy=0.001,maxIter = 128)

# _l是当前点,k-1

# _u是下一个点,k

αl = α0

αu = α0*1.1

xl = xk .+ αl .* d

xu = xk .+ αu .* d

gl = g(xl...)

gu = g(xu...)

Φl = d' * gl

Φu = d' * gu

for i in 1:maxIter

Δ = Φu - Φl

# 防止出现分母为0的数学错误

if Δ == 0.0

println("error:出现分母为0的数学错误")

return αu,αu.*d,xu,f(xu...),gu

else

Δ = Φu * (αu - αl)/ Δ

end

if abs(Δ) <= accuracy

return αu,αu.*d,xu,f(xu...),gu

end

αl = αu

xl = xu

gl = gu

Φl = Φu

αu = αu - Δ

xu = xk .+ αu .* d

gu = g(xu...)

Φu = d' * gu

end

return αu,αu.*d,xu,f(xu...),gu

end

迭代B算法(三种拟牛顿法)

这里是定义分别实现SR1,DFP,BFGS算法的函数

## B矩阵迭代函数

function SR1(B,δ,z,δg)

B = B + (δ - z)*(δ - z)' ./ (δg' * δ - δg' * (B * δg))

return B

end

function DFR(B,δ,z,δg)

B = B + (δ * δ') ./ (δg' * δ) - (B*δg)*(B*δg)' ./ (δg' * (B * δg ))

return B

end

function BFGS(B,δ,z,δg)

## 算法会出现 δ * δg接近无限大的情况,算出来的de接近无线小

## 迭代结果就会变成NaN,所以加一个判断

# a = δg' * δ

# # println(a)

# if a > 10e200

# println("-----------WARN:B迭代中出现分母接近无限大情况,已经强制停止迭代---------")

# return "error"

# end

de = 1/(δg' * δ)

# println(de)

z2 = z * δ'

B = B .+ (1 + δg' * z * de) .* δ*δ' .* de .- (z2 + z2') .* de

return B

end

其中BFGS中的注释出现无限的情况我后来发现是我的迭代公式写错了导致的,但是觉得这个处理错误的方法还可以,值得暂时保留着,防止下次还碰上这种情况。

顺便一提,这个BFGS的公式真的特别长,特别容易抄错,如果程序报错在这一行,也不要盯着自己写的代码看了,大概率看不出错误在哪,直接重新抄一遍公式得了

检查输入

# 检查输入是否符合要求

try

f(x0...)

g(x0...)

catch

println("error:请输入正确的x0")

end

使用的是try…catch函数,如果try里面的程序块报错,就会实行catch中的程序块,这里如果输入的x0和f或者g的长度不符合,就会输出:error:请输入正确的x0。

虽然后面的程序依旧会因为x0的格式不对,但是起码能第一时间提醒自己是x0的输入出了错误。

测试用例

## 测试用例

# 普通2次函数

f = (x1, x2) -> 2.5*x1^2 + 0.5*x2^2 +2*x1*x2-3*x1-x2

g = (x1,x2) -> [5x1 + 2x2-3,x2+2x1-1]

x0 =[100,100]

## 画图的范围,记得用小数

low = -100.0

up = 100.0

## Rosenbrock函数

# f = (x1,x2) -> (1-x1)^2 + 100*(x2-x1^2)^2

# g = (x1,x2) -> [-2*(1-x1) - 400*x1*(x2-x1^2), 200*(x2-x1^2)]

# x0 =[0,0]

#

# ## 画图的范围,记得用小数

# low = -1.0

# up = 2.0

## Powell,这个函数不能画图,记得把画图的代码注释掉

# f = (x1,x2,x3,x4) -> (x1 + 10*x2)^2 + 5(x3-x4)^2+(x2-2*x3)^4 +10*(x1-x4)^4

# g = (x1,x2,x3,x4) -> [2(x1+10x2)+40(x1-x4)^3,

# 20(x1+10x2) + 4(x2-2x3)^3,

# 10(x3-x4) - 8(x2-2x3)^3,

# -10(x3-x4)-40(x1-x4)^3]

# x0 = [3,-1,0,1]

xn, fn ,gn,pts = QuasiNewton(f,g,

x0,

debug = false,

fB = BFGS,

fα = inexact_alpha)

# println(pts)

# 画图

n = length(pts)

rosen = layer(f,low,up,low,up,Geom.contour())

steps = layer(

## convert()函数将数据转化成了浮点型,这样子才不会报错

## 最后一点不作为起点

x = convert(Vector{Float64},[pts[i][1] for i in 1:n-1]),

y = convert(Vector{Float64},[pts[i][2] for i in 1:n-1]),

## 第一点不作为终点

xend = convert(Vector{Float64},[pts[i][1] for i in 2:n]),

yend = convert(Vector{Float64},[pts[i][2] for i in 2:n]),

Geom.point,Geom.vector(filled = true))

plot(rosen,steps,Scale.x_continuous(minvalue = low,maxvalue = up),

Scale.y_continuous(maxvalue=up,minvalue=low))

其他地方和共轭梯度法的算法其实没有太大的区别,如果对其他代码有什么疑问,可以看我写的共轭梯度法

声明

这个博客是博主根据博主的老师上传的视频整理得到的,仅供参考和学习,也欢迎大家和我交流讨论。

参考

SR1法

DFP法

BFGS法