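Collecting Kubernetes Logs with the ELK Stack

Challenges that containers pose for log collection

• Elastic scaling in K8s: Pods are created and removed dynamically, so the collection targets cannot be determined in advance.

• Container isolation: a container's filesystem is isolated from the host, so a log collector running on the host cannot read the container's log files directly.

Types of logs by how they are written

Application logs are written in one of two ways: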

• Standard output: written to the console and viewable with kubectl logs.

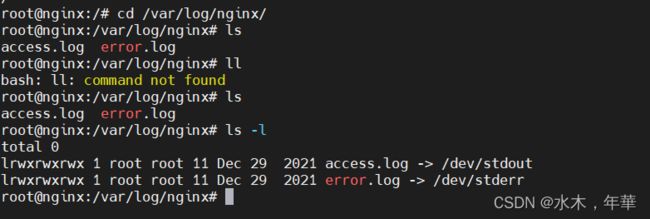

For example, the nginx container image sends its access log to standard output, so it can be viewed with kubectl logs.

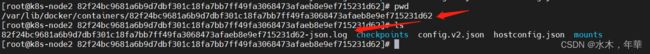

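Request path of kubectl logs: kubectl logs ==>> apiserver ==>> kubelet ==>> Docker API ==>> <container-id>-json.log (stored on the node under /var/lib/docker/containers/<container-id>/)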

• Log files: written to files inside the container's filesystem.
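The last hop of that path can be made concrete. With Docker's default json-file logging driver, each line that a container writes to stdout or stderr is stored on the node as one JSON object with `log`, `stream`, and `time` keys. The sketch below uses only the Python standard library and decodes such lines into roughly what kubectl logs prints. It assumes the json-file driver; runtimes such as containerd use a different on-disk format. The function name `read_json_log` and the sample lines are hypothetical.

```python
import json
from typing import Iterable, List


def read_json_log(lines: Iterable[str], stream: str = "") -> List[str]:
    """Decode Docker json-file log lines into plain messages.

    Each line is a JSON object such as
    {"log": "GET / 200\\n", "stream": "stdout", "time": "2020-10-01T08:00:00.000000000Z"}.
    If `stream` is given ("stdout" or "stderr"), only that stream is kept.
    """
    messages = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        entry = json.loads(raw)
        if stream and entry.get("stream") != stream:
            continue
        # The driver keeps the trailing newline the application wrote; drop it.
        messages.append(entry["log"].rstrip("\n"))
    return messages


if __name__ == "__main__":
    sample = [
        '{"log":"10.0.0.1 - - \\"GET / HTTP/1.1\\" 200\\n","stream":"stdout","time":"2020-10-01T08:00:00.000000000Z"}',
        '{"log":"worker process started\\n","stream":"stderr","time":"2020-10-01T08:00:01.000000000Z"}',
    ]
    for message in read_json_log(sample):
        print(message)
```

kubectl logs also interleaves stdout and stderr. Passing `stream="stderr"` keeps only error output.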

Collecting Kubernetes application logs

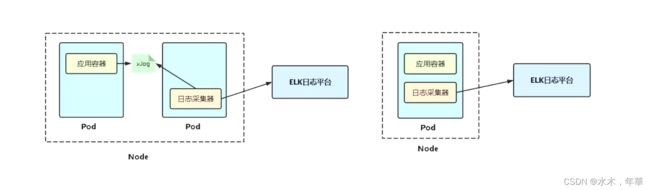

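For standard output: deploy a log collector on every Node as a DaemonSet and have it collect all container logs under /var/lib/docker/containers/.

For log files inside containers: add a log-collector container (a sidecar) to the Pod and share the log directory through an emptyDir volume so that the collector can read the log files.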

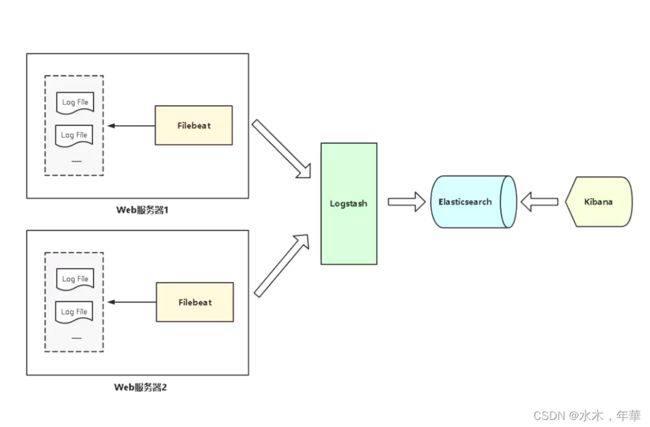
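The DaemonSet approach works because every container's stdout ends up as a file under a well-known directory on its node. One collector per node therefore only has to scan that directory. Below is a minimal Python sketch of that discovery step. It assumes Docker's json-file layout `/var/lib/docker/containers/<container-id>/<container-id>-json.log`. The function name `find_container_logs` is hypothetical, and Filebeat's real discovery logic is more involved.

```python
import glob
import os
from typing import Dict


def find_container_logs(root: str = "/var/lib/docker/containers") -> Dict[str, str]:
    """Map container ID -> json log path for every container directory under root."""
    logs = {}
    for path in glob.glob(os.path.join(root, "*", "*-json.log")):
        container_id = os.path.basename(os.path.dirname(path))
        # Only accept <id>/<id>-json.log, the layout used by the json-file driver.
        if os.path.basename(path) == f"{container_id}-json.log":
            logs[container_id] = path
    return logs


if __name__ == "__main__":
    # Prints nothing on a machine without Docker's container directory.
    for cid, path in sorted(find_container_logs().items()):
        print(cid[:12], path)
```

Because the scan runs on every node, new Pods are picked up automatically. This is what solves the "targets cannot be determined in advance" problem.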

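The ELK logging stack

ELK is an acronym for three open-source projects that together provide a complete, enterprise-grade logging platform.

They are:

• Elasticsearch: searches, analyzes, and stores data

• Logstash: collects, parses, and filters logs, then pushes the data to Elasticsearch for storage

• Kibana: data visualization

• Beats: a family of single-purpose data shippers that send data from edge machines to Logstash and Elasticsearch. The most widely used is Filebeat, a lightweight log shipper.

Lightweight alternatives: Graylog, Grafana Loki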

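Files for setting up the logging system (in this setup Filebeat ships logs directly to Elasticsearch; Logstash is not used):

• elasticsearch.yaml # Elasticsearch (log storage)

• kibana.yaml # visualization

Files for log collection:

• filebeat-kubernetes.yaml # collects the standard output of all containers

• app-log-stdout.yaml # test app that logs to standard output

• app-log-logfile.yaml # test app that logs to a file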

1. For standard output

Deploy Filebeat as a DaemonSet on every node to collect all container log files on that node, located at /var/lib/docker/containers/<container-id>/<container-id>-json.log.

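All of the manifests below use the ops namespace, and es-pvc expects an existing StorageClass named managed-nfs-storage. Create the namespace first, then apply the manifests:

kubectl create namespace ops

kubectl apply -f elasticsearch.yaml

kubectl apply -f kibana.yaml

kubectl apply -f filebeat-kubernetes.yaml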
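Elasticsearch needs some time to start before Kibana and Filebeat can use it. One way to check readiness is the Elasticsearch `_cluster/health` API, whose JSON response contains a `status` field (`green`, `yellow`, or `red`). The Python sketch below polls that API using only the standard library. The default URL `http://localhost:9200` assumes you have first forwarded the port, for example with `kubectl -n ops port-forward svc/elasticsearch 9200:9200`. The function names are hypothetical.

```python
import json
import time
import urllib.request


def is_ready(health_json: str, accept_yellow: bool = True) -> bool:
    """Return True if a _cluster/health response reports a usable status."""
    status = json.loads(health_json).get("status")
    return status == "green" or (accept_yellow and status == "yellow")


def wait_for_elasticsearch(url: str = "http://localhost:9200", timeout: float = 300.0) -> bool:
    """Poll GET <url>/_cluster/health until the cluster is ready or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{url}/_cluster/health", timeout=5) as resp:
                if is_ready(resp.read().decode("utf-8")):
                    return True
        except OSError:
            pass  # not reachable yet (Pod starting or port-forward not running)
        time.sleep(5)
    return False
```

Call `wait_for_elasticsearch()` while the port-forward is running. `yellow` is accepted by default because a single-node cluster cannot allocate replica shards, so any index configured with replicas stays yellow.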

#elasticsearch.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: ops
  labels:
    k8s-app: elasticsearch
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: elasticsearch
  template:
    metadata:
      labels:
        k8s-app: elasticsearch
    spec:
      containers:
      - image: elasticsearch:7.9.2
        name: elasticsearch
        resources:
          limits:
            cpu: 2
            memory: 3Gi
          requests:
            cpu: 0.5
            memory: 500Mi
        env:
        - name: "discovery.type"
          value: "single-node"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx2g"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-data
          mountPath: /usr/share/elasticsearch/data
      volumes:
      - name: elasticsearch-data
        persistentVolumeClaim:
          claimName: es-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-pvc
  namespace: ops
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: ops
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: 9200
  selector:
    k8s-app: elasticsearch

#kibana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: ops
  labels:
    k8s-app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.9.2
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 0.5
            memory: 500Mi
        env:
        - name: ELASTICSEARCH_HOSTS
          # Service DNS name; a Pod IP changes whenever the Pod is recreated
          value: http://elasticsearch.ops:9200
        - name: I18N_LOCALE
          # Chinese UI; remove this variable for the default English UI
          value: zh-CN
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: ops
spec:
  type: NodePort
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
    nodePort: 30601
  selector:
    k8s-app: kibana

#filebeat-kubernetes.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: ops
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false
    output.elasticsearch:
      hosts: ['elasticsearch.ops:9200']
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: ops
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
      - add_kubernetes_metadata:
          in_cluster: true
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: ops
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: elastic/filebeat:7.9.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: ops
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: ops
  labels:
    k8s-app: filebeat
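The `data` hostPath volume in the DaemonSet is what lets Filebeat resume where it left off. Filebeat keeps a registry of how far it has read each file, so a restarted Pod does not resend everything. The Python sketch below illustrates only that idea. It stores byte offsets in a small JSON file, and both the function name `read_new_lines` and the registry format are hypothetical; they do not match Filebeat's actual registry.

```python
import json
import os
from typing import List


def read_new_lines(log_path: str, registry_path: str) -> List[str]:
    """Return complete lines added to log_path since the offset saved in registry_path."""
    registry = {}
    if os.path.exists(registry_path):
        with open(registry_path, encoding="utf-8") as f:
            registry = json.load(f)

    offset = registry.get(log_path, 0)
    if os.path.getsize(log_path) < offset:
        offset = 0  # file was truncated in place: start over

    lines = []
    with open(log_path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # partial line still being written; read it next time
            lines.append(raw.decode("utf-8").rstrip("\n"))
            offset += len(raw)

    # Persist the new offset so a restart continues from here instead of from 0.
    registry[log_path] = offset
    with open(registry_path, "w", encoding="utf-8") as f:
        json.dump(registry, f)
    return lines
```

If the registry lived inside the container instead of on a hostPath, it would be lost on every restart and all logs would be shipped again. That is why the manifest mounts /var/lib/filebeat-data from the node.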

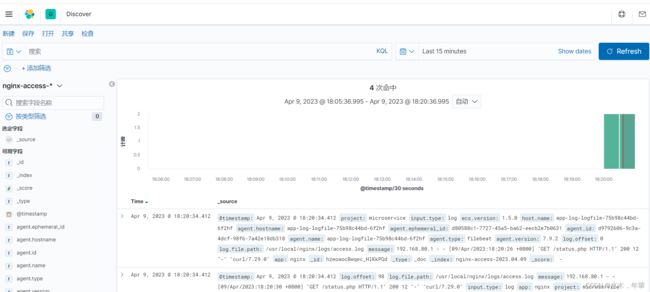

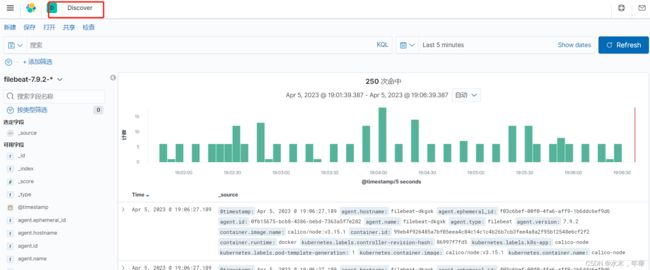

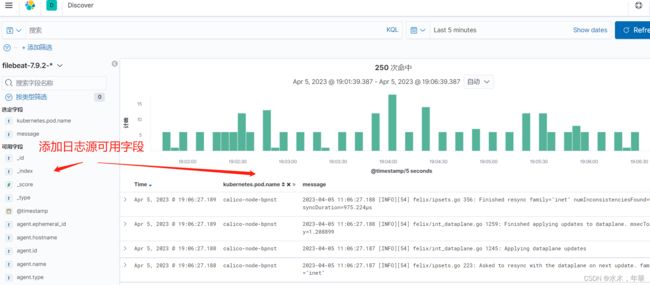

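Viewing logs in Kibana:

- View indices (collections of log records): Management -> Stack Management -> Index Management

- Link an index to Kibana: Index Patterns -> Create -> enter a matching pattern -> select the timestamp field

- In Discover, select the index pattern to view the logs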
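An index pattern is a wildcard expression matched against index names. A single pattern such as `nginx-access-*` therefore covers every daily index created under that prefix. The Python sketch below approximates this with `fnmatch.fnmatchcase`. Kibana's own matching also supports features this ignores, such as comma-separated and exclusion patterns. The sample index names and the function name `match_index_pattern` are made up for illustration.

```python
from fnmatch import fnmatchcase
from typing import List


def match_index_pattern(pattern: str, indices: List[str]) -> List[str]:
    """Return the indices a simple Kibana-style wildcard pattern would cover."""
    return sorted(name for name in indices if fnmatchcase(name, pattern))


if __name__ == "__main__":
    indices = [
        "filebeat-7.9.2-2020.10.01-000001",
        "nginx-access-2020.10.01",
        "nginx-access-2020.10.02",
        ".kibana_1",
    ]
    print(match_index_pattern("nginx-access-*", indices))
    print(match_index_pattern("filebeat-*", indices))
```

Keeping a stable prefix and putting the date at the end is what makes one pattern cover all days.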

Linking the index to Kibana

Selecting the index pattern in Discover to view logs

Filtering logs with query conditions

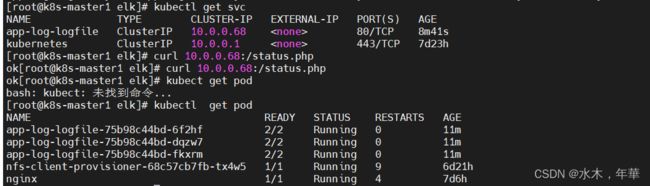

2. For log files inside containers

#app-log-logfile.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-log-logfile
spec:
  replicas: 3
  selector:
    matchLabels:
      project: microservice
      app: nginx-logfile
  template:
    metadata:
      labels:
        project: microservice
        app: nginx-logfile
    spec:
      containers:
      # Application container
      - name: nginx
        image: lizhenliang/nginx-php
        # Mount the shared volume at the nginx log directory
        volumeMounts:
        - name: nginx-logs
          mountPath: /usr/local/nginx/logs
      # Log collector container (Filebeat sidecar)
      - name: filebeat
        image: elastic/filebeat:7.9.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            memory: 500Mi
        securityContext:
          runAsUser: 0
        volumeMounts:
        # Mount the Filebeat configuration file
        - name: filebeat-config
          mountPath: /etc/filebeat.yml
          subPath: filebeat.yml
        # Mount the same shared volume at the log directory
        - name: nginx-logs
          mountPath: /usr/local/nginx/logs
      # Volumes: nginx-logs shares the log directory between the two containers
      volumes:
      - name: nginx-logs
        emptyDir: {}
      - name: filebeat-config
        configMap:
          name: filebeat-nginx-config
---
apiVersion: v1
kind: Service
metadata:
  name: app-log-logfile
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    project: microservice
    app: nginx-logfile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-nginx-config
data:
  # The Filebeat configuration file is stored in this ConfigMap
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      paths:
        - /usr/local/nginx/logs/access.log
      # tags: ["access"]
      fields_under_root: true
      fields:
        project: microservice  # project name
        app: nginx             # application name
    setup.ilm.enabled: false
    setup.template.name: "nginx-access"
    setup.template.pattern: "nginx-access-*"
    output.elasticsearch:
      hosts: ['elasticsearch.ops:9200']
      index: "nginx-access-%{+yyyy.MM.dd}"  # index name

kubectl apply -f app-log-logfile.yaml
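Two settings in filebeat-nginx-config decide what each access-log line becomes in Elasticsearch. First, `fields_under_root: true` puts the custom `project` and `app` fields at the top level of the event instead of under a `fields` key. Second, `index: "nginx-access-%{+yyyy.MM.dd}"` writes to one index per day, based on the event's `@timestamp`, which Filebeat records in UTC. The Python sketch below mimics both behaviours to show the resulting document and index name. It is an illustration under those assumptions, not Filebeat's code, and the function name `build_event` is hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, Tuple


def build_event(message: str, ts: datetime, fields: Dict[str, str],
                fields_under_root: bool = True) -> Tuple[str, Dict[str, object]]:
    """Return (index name, event document) for one log line."""
    ts_utc = ts.astimezone(timezone.utc)
    event: Dict[str, object] = {
        "@timestamp": ts_utc.isoformat(timespec="milliseconds").replace("+00:00", "Z"),
        "message": message,
    }
    if fields_under_root:
        event.update(fields)            # project/app become top-level fields
    else:
        event["fields"] = dict(fields)  # otherwise nested under "fields"
    # Equivalent of index: "nginx-access-%{+yyyy.MM.dd}", formatted in UTC.
    index = f"nginx-access-{ts_utc:%Y.%m.%d}"
    return index, event
```

Because the date is taken in UTC, a cluster in UTC+8 sends a line logged at 01:30 local time to the previous day's index. Top-level `project` and `app` fields can be filtered directly in Discover, for example with `app: nginx`.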