ECM Study Notes: Convolutional Cross-Component Intra Prediction Model (CCCM)

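The convolutional cross-component model (CCCM) follows the same basic idea as the CCLM mode: it builds a model between luma and chroma so that chroma samples can be predicted from reconstructed luma samples. As in CCLM, the reconstructed luma block is first downsampled to match the chroma block size before the chroma samples are predicted.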
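The downsampling step can be illustrated with a small C++ sketch. It assumes 4:2:0 content and uses the VVC CCLM 6-tap luma filter (the variant for chroma that is not vertically co-sited with luma). This text does not say which filter CCCM uses, so ECM's `xCccmGetLumaVal` may differ; the name `downsampleLuma420` and the flat row-major `std::vector` luma layout are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: returns the down-sampled luma value for chroma position (cx, cy).
// Assumes a 4:2:0 luma plane stored row-major with the given stride, and that luma
// rows 2*cy and 2*cy + 1 both exist. Uses the VVC CCLM 6-tap filter; ECM may differ.
int downsampleLuma420(const std::vector<int>& luma, int stride, int lumaWidth, int cx, int cy)
{
  assert(lumaWidth > 0 && stride >= lumaWidth);
  const int x  = 2 * cx;
  const int y  = 2 * cy;
  // Clamp the left/right taps at the picture border (edge replication).
  const int xl = x > 0 ? x - 1 : x;
  const int xr = x + 1 < lumaWidth ? x + 1 : x;
  const int* r0 = luma.data() + y * stride;  // luma row 2*cy
  const int* r1 = r0 + stride;               // luma row 2*cy + 1
  // Weights 1-2-1 horizontally on two rows; they sum to 8, hence the rounding shift by 3.
  return (2 * r0[x] + 2 * r1[x] + r0[xl] + r0[xr] + r1[xl] + r1[xr] + 4) >> 3;
}
```

Because the six weights sum to 8, a flat luma region maps to the same value after downsampling.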

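In addition, as with CCLM, either a single-model or a multi-model variant of CCCM can be selected. The multi-model variant uses two models: one for samples whose luma is above the average luma reference value, and one for the remaining samples (similar to MMLM). Multi-model CCCM mode is applied only to PUs that have at least 128 reference samples available.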
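A minimal sketch of the multi-model classification, assuming the threshold is the integer mean of the downsampled reference luma samples (the text only says "average"). Following the `modelId` checks in the ECM code below, samples at or below the threshold go to model 1 and the rest to model 2. `ModelSplit`, `splitByMeanLuma` and `multiModelAllowed` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Hypothetical result type: the threshold plus the sample indices assigned to each model.
struct ModelSplit {
  int threshold;
  std::vector<int> model1;  // indices of samples with luma <= threshold
  std::vector<int> model2;  // indices of samples with luma >  threshold
};

// Assumption: threshold = integer mean of the reference luma samples.
ModelSplit splitByMeanLuma(const std::vector<int>& refLuma)
{
  assert(!refLuma.empty());
  long long sum = 0;
  for (int v : refLuma) sum += v;
  ModelSplit s;
  s.threshold = static_cast<int>(sum / static_cast<long long>(refLuma.size()));
  for (int i = 0; i < static_cast<int>(refLuma.size()); i++) {
    (refLuma[i] <= s.threshold ? s.model1 : s.model2).push_back(i);
  }
  return s;
}

// Multi-model CCCM is only considered when at least 128 reference samples are available.
bool multiModelAllowed(int numRefSamples) { return numRefSamples >= 128; }
```

Each model is then fitted only on its own group of reference samples, and at prediction time each chroma sample uses the model whose luma range its collocated luma value falls into.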

1. Convolutional filter

The proposal applies a 7-tap convolutional filter to compute each chroma prediction sample:

$$\text{predChromaVal} = c_0 C + c_1 N + c_2 S + c_3 E + c_4 W + c_5 P + c_6 B$$

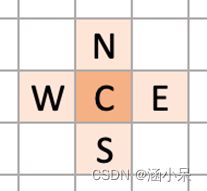

其中,C表示当前色度样本对应位置处的亮度样本,N、S、E、W分别为当前亮度样本的相邻样本,如下图所示:

The nonlinear term P:

$$P = (C \cdot C + \text{midVal}) \gg \text{bitDepth}$$

The bias term B:

$$B = \text{midVal}$$

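The bias term B represents a scalar offset between input and output (similar to the offset term in CCLM) and is set to the middle chroma value midVal (B = 512 for 10-bit video).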
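The filter equation can be sketched for a single chroma sample as follows. This is an illustration only: the coefficients are `double`s here, whereas ECM keeps them in fixed point, and `CrossNeighbourhood`, `cccmNonlinear` and `cccmPredict` are hypothetical names. P and B follow the definitions above (C++17 for `std::clamp`).

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical container for the plus-shaped (cross) down-sampled luma taps.
struct CrossNeighbourhood { int C, N, S, E, W; };

// Nonlinear term: P = (C*C + midVal) >> bitDepth, computed in 64 bits to avoid overflow.
int cccmNonlinear(int C, int bitDepth)
{
  const long long midVal = 1LL << (bitDepth - 1);
  return static_cast<int>((static_cast<long long>(C) * C + midVal) >> bitDepth);
}

// predChromaVal = c0*C + c1*N + c2*S + c3*E + c4*W + c5*P + c6*B, clipped to the sample range.
// Floating-point coefficients are an assumption made for readability.
int cccmPredict(const std::array<double, 7>& c, const CrossNeighbourhood& l, int bitDepth)
{
  assert(bitDepth >= 8 && bitDepth <= 16);
  const int midVal = 1 << (bitDepth - 1);  // B = midVal (512 for 10-bit)
  const int P = cccmNonlinear(l.C, bitDepth);
  const double v = c[0] * l.C + c[1] * l.N + c[2] * l.S + c[3] * l.E + c[4] * l.W
                 + c[5] * P + c[6] * midVal;
  const int maxVal = (1 << bitDepth) - 1;
  return std::clamp(static_cast<int>(std::lround(v)), 0, maxVal);
}
```

For example, with 10-bit input C = 512 the nonlinear term is (512·512 + 512) >> 10 = 256, and a coefficient vector that is 1 only in the bias position predicts 512 for every sample.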

2. Calculating the filter coefficients

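The filter coefficients $c_i$ are computed by minimizing the MSE between the predicted and the reconstructed chroma samples in the reference area.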

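As shown in the figure below, the reference area consists of 6 rows/columns of chroma samples above and to the left of the PU. It extends one PU width to the right of the PU and one PU height below the PU boundary, and it is trimmed so that it contains only available samples. The one-sample extension of the blue area supplies the "side samples" required by the plus-shaped spatial filter; these are filled by copying neighbouring reconstructed samples (green area).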
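To get a feel for the size of the reference area, the sketch below counts its samples assuming every neighbouring sample is available (in practice the area is trimmed to available samples, so real counts can be smaller). The PU size is given in chroma samples; `cccmRefSampleCount` is a hypothetical helper, and the L-shaped region it counts matches the `x >= refSizeX && y >= refSizeY` skip in `xCccmCalcModels` below.

```cpp
#include <cassert>

// Hypothetical helper: number of chroma samples in the L-shaped CCCM reference area,
// assuming full availability. `lines` rows above and columns to the left (6 in CCCM);
// the area extends one PU width to the right and one PU height below.
int cccmRefSampleCount(int puW, int puH, int lines = 6)
{
  assert(puW > 0 && puH > 0 && lines >= 0);
  const int areaWidth  = lines + 2 * puW;  // left columns + PU + right extension
  const int areaHeight = lines + 2 * puH;  // rows above + PU + below extension
  // Exclude the PU and the region to its right/below (x >= lines && y >= lines).
  return areaWidth * areaHeight - (areaWidth - lines) * (areaHeight - lines);
}
```

Under these assumptions a 4x4 chroma PU gives 14·14 − 8·8 = 132 reference samples, which would meet the 128-sample condition for multi-model CCCM stated above, while a 4x2 PU gives 108.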

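The MSE minimization is performed by computing the autocorrelation matrix of the reconstructed luma samples in the reference area and the cross-correlation vector between the reconstructed luma and chroma samples, as shown in the figure below.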

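The autocorrelation matrix is LDL-decomposed and the final filter coefficients are obtained by back-substitution. The procedure broadly follows the computation of ALF filter coefficients in ECM, but LDL decomposition is chosen instead of Cholesky decomposition to avoid square-root operations. The proposed method uses only integer arithmetic.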
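The LDL route can be illustrated in floating point. This is a simplified, swapped-in version: ECM's `CccmCovarianceInt` performs the decomposition in fixed-point integer arithmetic and has its own handling of failed decompositions, neither of which is reproduced here. The names echo ECM's `ldlDecompose`/`ldlSolve` only for readability; these signatures are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Mat = std::vector<std::vector<double>>;
using Vec = std::vector<double>;

// Factor a symmetric matrix as A = L * D * L^T (L unit lower triangular, D diagonal).
// Returns false if a pivot is numerically zero. No square roots are needed.
bool ldlDecompose(const Mat& A, Mat& L, Vec& D)
{
  const size_t n = A.size();
  L.assign(n, Vec(n, 0.0));
  D.assign(n, 0.0);
  for (size_t j = 0; j < n; j++) {
    double d = A[j][j];
    for (size_t k = 0; k < j; k++) d -= L[j][k] * L[j][k] * D[k];
    if (std::fabs(d) < 1e-12) return false;
    D[j] = d;
    L[j][j] = 1.0;
    for (size_t i = j + 1; i < n; i++) {
      double s = A[i][j];
      for (size_t k = 0; k < j; k++) s -= L[i][k] * L[j][k] * D[k];
      L[i][j] = s / d;
    }
  }
  return true;
}

// Solve L * D * L^T * x = b.
Vec ldlSolve(const Mat& L, const Vec& D, const Vec& b)
{
  const size_t n = b.size();
  assert(L.size() == n && D.size() == n);
  Vec z(b);
  for (size_t i = 0; i < n; i++)       // forward substitution: L z = b
    for (size_t k = 0; k < i; k++) z[i] -= L[i][k] * z[k];
  for (size_t i = 0; i < n; i++) z[i] /= D[i];  // diagonal solve: D w = z
  for (size_t i = n; i-- > 0;)         // back substitution: L^T x = w
    for (size_t k = i + 1; k < n; k++) z[i] -= L[k][i] * z[k];
  return z;
}
```

For the symmetric positive-definite system [[4,2,2],[2,5,3],[2,3,6]]·c = [14,21,26], the sketch finds D = (4, 4, 4) and returns c = (1, 2, 3). The pivots in D are only ever divided by, never square-rooted, which is the property that motivates LDL over Cholesky.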

3. ECM code implementation

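1) xCccmCreateLumaRef: fetches the reconstructed luma samples of the reference area and the current area, downsampling them at the same time

It first calls xCccmCalcRefArea to determine how many reference samples are available, then stores the size of the available reference area together with the position and size of the current area: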

```cpp
m_cccmRefArea = Area( columnsLeft, rowsAbove, refWidth, refHeight); // Position with respect to the PU

// where
refSizeX   = m_cccmRefArea.x;      // Reference lines available left and above
refSizeY   = m_cccmRefArea.y;
areaWidth  = m_cccmRefArea.width;  // Reference buffer size excluding paddings
areaHeight = m_cccmRefArea.height;
```

```cpp
void IntraPrediction::xCccmCreateLumaRef(const PredictionUnit& pu)
{
  const CPelBuf recoLuma   = pu.cs->picture->getRecoBuf(COMPONENT_Y);
  const int     maxPosPicX = pu.cs->picture->chromaSize().width  - 1;
  const int     maxPosPicY = pu.cs->picture->chromaSize().height - 1;

  xCccmCalcRefArea(pu); // Find the reference area

  int areaWidth, areaHeight, refSizeX, refSizeY, refPosPicX, refPosPicY;
  PelBuf refLuma = xCccmGetLumaRefBuf(pu, areaWidth, areaHeight, refSizeX, refSizeY, refPosPicX, refPosPicY);

  int puBorderX = refSizeX + pu.blocks[COMPONENT_Cb].width;
  int puBorderY = refSizeY + pu.blocks[COMPONENT_Cb].height;

  // Generate down-sampled luma for the area covering both the PU and the top/left reference areas (+ top and left paddings)
  for (int y = -CCCM_FILTER_PADDING; y < areaHeight; y++)
  {
    for (int x = -CCCM_FILTER_PADDING; x < areaWidth; x++)
    {
      if (( x >= puBorderX && y >= refSizeY ) ||
          ( y >= puBorderY && x >= refSizeX ))
      {
        continue;
      }

      int chromaPosPicX = refPosPicX + x;
      int chromaPosPicY = refPosPicY + y;

      chromaPosPicX = chromaPosPicX < 0 ? 0 : chromaPosPicX > maxPosPicX ? maxPosPicX : chromaPosPicX;
      chromaPosPicY = chromaPosPicY < 0 ? 0 : chromaPosPicY > maxPosPicY ? maxPosPicY : chromaPosPicY;

      refLuma.at( x, y ) = xCccmGetLumaVal(pu, recoLuma, chromaPosPicX, chromaPosPicY);
    }
  }

  CHECK( CCCM_FILTER_PADDING != 1, "Only padding with one sample implemented" );

  // Pad the reference area
  // Pad right of top reference area
  for (int y = -1; y < refSizeY; y++)
  {
    refLuma.at( areaWidth, y ) = refLuma.at( areaWidth - 1, y );
  }

  // Pad right of PU
  for (int y = refSizeY; y < puBorderY; y++)
  {
    refLuma.at( puBorderX, y ) = refLuma.at( puBorderX - 1, y );
  }

  // Pad right of left reference area
  for (int y = puBorderY; y < areaHeight; y++)
  {
    refLuma.at( refSizeX, y ) = refLuma.at( refSizeX - 1, y );
  }

  // Pad below left reference area
  for (int x = -1; x < refSizeX + 1; x++)
  {
    refLuma.at( x, areaHeight ) = refLuma.at( x, areaHeight - 1 );
  }

  // Pad below PU
  for (int x = refSizeX; x < puBorderX + 1; x++)
  {
    refLuma.at( x, puBorderY ) = refLuma.at( x, puBorderY - 1 );
  }

  // Pad below right reference area
  for (int x = puBorderX + 1; x < areaWidth + 1; x++)
  {
    refLuma.at( x, refSizeY ) = refLuma.at( x, refSizeY - 1 );
  }

  // In dualtree we can also use luma from the right and below (if not on CTU/picture boundary)
  if ( CS::isDualITree( *pu.cs ) )
  {
    int ctuWidth  = pu.cs->sps->getMaxCUWidth()  >> getComponentScaleX(COMPONENT_Cb, pu.chromaFormat);
    int ctuHeight = pu.cs->sps->getMaxCUHeight() >> getComponentScaleY(COMPONENT_Cb, pu.chromaFormat);

    // Samples right of top reference area
    int padPosPicX = refPosPicX + areaWidth;

    if ( padPosPicX <= maxPosPicX && (padPosPicX % ctuWidth) )
    {
      for (int y = -1; y < refSizeY; y++)
      {
        int chromaPosPicY = refPosPicY + y;
        chromaPosPicY = chromaPosPicY < 0 ? 0 : chromaPosPicY > maxPosPicY ? maxPosPicY : chromaPosPicY;

        refLuma.at( areaWidth, y ) = xCccmGetLumaVal(pu, recoLuma, padPosPicX, chromaPosPicY);
      }
    }

    // Samples right of PU
    padPosPicX = refPosPicX + puBorderX;

    if ( padPosPicX <= maxPosPicX && (padPosPicX % ctuWidth) )
    {
      for (int y = refSizeY; y < puBorderY; y++)
      {
        int chromaPosPicY = refPosPicY + y;
        chromaPosPicY = chromaPosPicY < 0 ? 0 : chromaPosPicY > maxPosPicY ? maxPosPicY : chromaPosPicY;

        refLuma.at( puBorderX, y ) = xCccmGetLumaVal(pu, recoLuma, padPosPicX, chromaPosPicY);
      }
    }

    // Samples right of left reference area
    padPosPicX = refPosPicX + refSizeX;

    if ( padPosPicX <= maxPosPicX )
    {
      for (int y = puBorderY; y < areaHeight; y++)
      {
        int chromaPosPicY = refPosPicY + y;
        chromaPosPicY = chromaPosPicY < 0 ? 0 : chromaPosPicY > maxPosPicY ? maxPosPicY : chromaPosPicY;

        refLuma.at( refSizeX, y ) = xCccmGetLumaVal(pu, recoLuma, padPosPicX, chromaPosPicY);
      }
    }

    // Samples below left reference area
    int padPosPicY = refPosPicY + areaHeight;

    if ( padPosPicY <= maxPosPicY && (padPosPicY % ctuHeight) )
    {
      for (int x = -1; x < refSizeX + 1; x++)
      {
        int chromaPosPicX = refPosPicX + x;
        chromaPosPicX = chromaPosPicX < 0 ? 0 : chromaPosPicX > maxPosPicX ? maxPosPicX : chromaPosPicX;

        refLuma.at( x, areaHeight ) = xCccmGetLumaVal(pu, recoLuma, chromaPosPicX, padPosPicY);
      }
    }

    // Samples below PU
    padPosPicY = refPosPicY + puBorderY;

    if ( padPosPicY <= maxPosPicY && (padPosPicY % ctuHeight) )
    {
      for (int x = refSizeX; x < puBorderX; x++) // Just go to PU border as the next sample may be out of CTU (and not needed anyways)
      {
        int chromaPosPicX = refPosPicX + x;
        chromaPosPicX = chromaPosPicX < 0 ? 0 : chromaPosPicX > maxPosPicX ? maxPosPicX : chromaPosPicX;

        refLuma.at( x, puBorderY ) = xCccmGetLumaVal(pu, recoLuma, chromaPosPicX, padPosPicY);
      }
    }

    // Samples below right reference area
    padPosPicY = refPosPicY + refSizeY;

    if ( padPosPicY <= maxPosPicY )
    {
      // Avoid going outside of right CTU border where these samples are not yet available
      int puPosPicX        = pu.blocks[COMPONENT_Cb].x;
      int ctuRightEdgeDist = ctuWidth - (puPosPicX % ctuWidth) + refSizeX;
      int lastPosX         = ctuRightEdgeDist < areaWidth ? ctuRightEdgeDist : areaWidth;

      for (int x = puBorderX + 1; x < lastPosX; x++) // Just go to ref area border as the next sample may be out of CTU (and not needed anyways)
      {
        int chromaPosPicX = refPosPicX + x;
        chromaPosPicX = chromaPosPicX < 0 ? 0 : chromaPosPicX > maxPosPicX ? maxPosPicX : chromaPosPicX;

        refLuma.at( x, refSizeY ) = xCccmGetLumaVal(pu, recoLuma, chromaPosPicX, padPosPicY);
      }
    }
  }
}
```

2) Computing the model parameters

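xCccmCalcModels computes the autocorrelation matrix of the reconstructed luma samples in the reference area and the cross-correlation vector between the reconstructed luma and chroma samples, then solves for the filter coefficients using LDL decomposition.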

```cpp
void IntraPrediction::xCccmCalcModels(const PredictionUnit& pu, CccmModel &cccmModelCb, CccmModel &cccmModelCr, int modelId, int modelThr) const
{
  int areaWidth, areaHeight, refSizeX, refSizeY, refPosPicX, refPosPicY;

  const CPelBuf recoCb  = pu.cs->picture->getRecoBuf(COMPONENT_Cb);
  const CPelBuf recoCr  = pu.cs->picture->getRecoBuf(COMPONENT_Cr);
  PelBuf        refLuma = xCccmGetLumaRefBuf(pu, areaWidth, areaHeight, refSizeX, refSizeY, refPosPicX, refPosPicY);

  int M = CCCM_NUM_PARAMS;

  int sampleNum = areaWidth * areaHeight - pu.blocks[COMPONENT_Cb].width * pu.blocks[COMPONENT_Cb].height;
  int sampleInd = 0;

  // Collect reference data to input matrix A and target vector Y
  static Pel A[CCCM_NUM_PARAMS][CCCM_MAX_REF_SAMPLES];
  static Pel YCb[CCCM_MAX_REF_SAMPLES];
  static Pel YCr[CCCM_MAX_REF_SAMPLES];

  for (int y = 0; y < areaHeight; y++)
  {
    for (int x = 0; x < areaWidth; x++)
    {
      if ( x >= refSizeX && y >= refSizeY )
      {
        continue;
      }

      if ( modelId == 1 && refLuma.at( x, y ) > modelThr ) // Model 1: Include only samples below or equal to the threshold
      {
        continue;
      }

      if ( modelId == 2 && refLuma.at( x, y ) <= modelThr) // Model 2: Include only samples above the threshold
      {
        continue;
      }

      // 7-tap cross
      A[0][sampleInd] = refLuma.at( x  , y   ); // C
      A[1][sampleInd] = refLuma.at( x  , y-1 ); // N
      A[2][sampleInd] = refLuma.at( x  , y+1 ); // S
      A[3][sampleInd] = refLuma.at( x-1, y   ); // W
      A[4][sampleInd] = refLuma.at( x+1, y   ); // E
      A[5][sampleInd] = cccmModelCb.nonlinear( refLuma.at( x, y) );
      A[6][sampleInd] = cccmModelCb.bias();

      YCb[sampleInd]   = recoCb.at(refPosPicX + x, refPosPicY + y);
      YCr[sampleInd++] = recoCr.at(refPosPicX + x, refPosPicY + y);
    }
  }

  if ( sampleInd == 0 ) // Number of samples can go to zero in the multimode case
  {
    cccmModelCb.clearModel(M);
    cccmModelCr.clearModel(M);
    return;
  }
  else
  {
    sampleNum = sampleInd;
  }

  // Calculate autocorrelation matrix and cross-correlation vector
  static CccmCovarianceInt::TE ATA;
  static CccmCovarianceInt::Ty ATYCb;
  static CccmCovarianceInt::Ty ATYCr;

  memset(ATA  , 0x00, sizeof(TCccmCoeff) * CCCM_NUM_PARAMS * CCCM_NUM_PARAMS);
  memset(ATYCb, 0x00, sizeof(TCccmCoeff) * CCCM_NUM_PARAMS);
  memset(ATYCr, 0x00, sizeof(TCccmCoeff) * CCCM_NUM_PARAMS);

  for (int coli0 = 0; coli0 < M; coli0++)
  {
    for (int coli1 = coli0; coli1 < M; coli1++)
    {
      Pel *col0 = A[coli0];
      Pel *col1 = A[coli1];

      for (int rowi = 0; rowi < sampleNum; rowi++)
      {
        ATA[coli0][coli1] += col0[rowi] * col1[rowi];
      }
    }
  }

  for (int coli = 0; coli < M; coli++)
  {
    Pel *col = A[coli];

    for (int rowi = 0; rowi < sampleNum; rowi++)
    {
      ATYCb[coli] += col[rowi] * YCb[rowi];
      ATYCr[coli] += col[rowi] * YCr[rowi];
    }
  }

  // Scale the matrix and vector to selected dynamic range
  int matrixShift = CCCM_MATRIX_BITS - 2 * pu.cu->cs->sps->getBitDepth(CHANNEL_TYPE_CHROMA) - ceilLog2(sampleNum);

  if ( matrixShift > 0 )
  {
    for (int coli0 = 0; coli0 < M; coli0++)
    {
      for (int coli1 = coli0; coli1 < M; coli1++)
      {
        ATA[coli0][coli1] <<= matrixShift;
      }
    }

    for (int coli = 0; coli < M; coli++)
    {
      ATYCb[coli] <<= matrixShift;
    }

    for (int coli = 0; coli < M; coli++)
    {
      ATYCr[coli] <<= matrixShift;
    }
  }
  else if ( matrixShift < 0 )
  {
    matrixShift = -matrixShift;

    for (int coli0 = 0; coli0 < M; coli0++)
    {
      for (int coli1 = coli0; coli1 < M; coli1++)
      {
        ATA[coli0][coli1] >>= matrixShift;
      }
    }

    for (int coli = 0; coli < M; coli++)
    {
      ATYCb[coli] >>= matrixShift;
    }

    for (int coli = 0; coli < M; coli++)
    {
      ATYCr[coli] >>= matrixShift;
    }
  }

  // Solve the filter coefficients using LDL decomposition
  CccmCovarianceInt    cccmSolver;
  CccmCovarianceInt::TE U;    // Upper triangular L' of ATA's LDL decomposition
  CccmCovarianceInt::Ty diag; // Diagonal of D

  bool decompOk = cccmSolver.ldlDecompose(ATA, U, diag, M);

  cccmSolver.ldlSolve(U, diag, ATYCb, cccmModelCb.params, M, decompOk);
  cccmSolver.ldlSolve(U, diag, ATYCr, cccmModelCr.params, M, decompOk);
}
```

3) Computing the predicted samples

The filter coefficients obtained from the LDL solve are applied to the downsampled luma to map luma to chroma:

```cpp
void IntraPrediction::xCccmApplyModel(const PredictionUnit& pu, const ComponentID compId, CccmModel &cccmModel, int modelId, int modelThr, PelBuf &piPred) const
{
  const ClpRng& clpRng(pu.cu->cs->slice->clpRng(compId));

  static Pel samples[CCCM_NUM_PARAMS];

  CPelBuf refLumaBlk = xCccmGetLumaPuBuf(pu);

  for (int y = 0; y < refLumaBlk.height; y++)
  {
    for (int x = 0; x < refLumaBlk.width; x++)
    {
      if ( modelId == 1 && refLumaBlk.at( x, y ) > modelThr ) // Model 1: Include only samples below or equal to the threshold
      {
        continue;
      }

      if ( modelId == 2 && refLumaBlk.at( x, y ) <= modelThr) // Model 2: Include only samples above the threshold
      {
        continue;
      }

      // 7-tap cross
      samples[0] = refLumaBlk.at( x  , y   ); // C
      samples[1] = refLumaBlk.at( x  , y-1 ); // N
      samples[2] = refLumaBlk.at( x  , y+1 ); // S
      samples[3] = refLumaBlk.at( x-1, y   ); // W
      samples[4] = refLumaBlk.at( x+1, y   ); // E
      samples[5] = cccmModel.nonlinear( refLumaBlk.at( x, y) );
      samples[6] = cccmModel.bias();

      piPred.at(x, y) = ClipPel( cccmModel.convolve(samples, CCCM_NUM_PARAMS), clpRng );
    }
  }
}
```