Scraping Airbnb listing information with Python

1. Required libraries

```python
import requests
import json
import urllib.parse  # "import urllib" alone does not guarantee urllib.parse is loaded
import pymysql  # for connecting to MySQL
from time import sleep
```

2. Preparation

2.1 Capturing requests in the browser

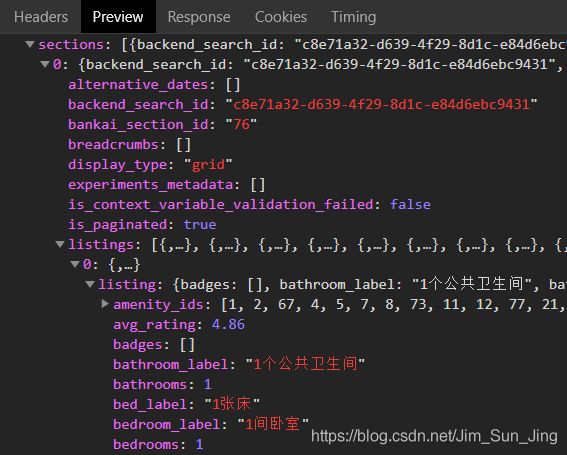

The listing data is delivered as JSON by Airbnb's API. Part of the request URL is shown below:

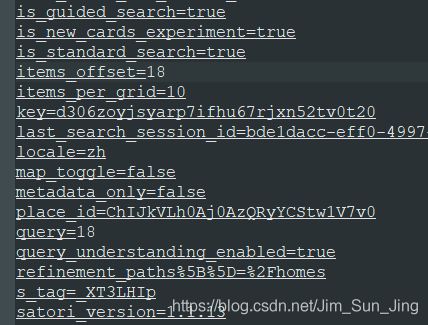

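Comparing the URLs of two consecutive pages shows that `items_offset` and `query` are the parameters that determine which data each request returns: `items_offset` is the index of the first listing on the page, and `query` is the search keyword.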

So the API URL can be turned into a template, with those two parameters filled in for each request:

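```python
# The full API URL is elided; items_offset and query are the two slots
# filled in by str.format() for each request. Adjacent string literals are
# used instead of a triple-quoted string so no newlines end up in the URL.
base_url = ('https://www.airbnb.cn/api/v2/...(omitted)'
            '&items_offset={}&items_per_grid=10&...(omitted)&'
            'query={}&...(omitted)')

# header0 is used as get_json's default below but is not shown in the
# original; a browser-like User-Agent is assumed here.
header0 = {'User-Agent': 'Mozilla/5.0'}
```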
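Because the real URL is elided, the sketch below uses a hypothetical stand-in template (`DEMO_TEMPLATE`, on an `example.com` host) only to show how the two slots are filled: `items_offset` advances by `items_per_grid` (10) per page, and the percent-encoded keyword goes into `query`. The helper `page_urls` is also hypothetical.

```python
from urllib.parse import quote

# Hypothetical stand-in for the elided Airbnb API URL: only the two
# placeholders that change between pages are kept.
DEMO_TEMPLATE = 'https://example.com/api?items_offset={}&items_per_grid=10&query={}'
ITEMS_PER_GRID = 10

def page_urls(template, key_word, pages):
    """Return one request URL per page, advancing the offset by 10 each time."""
    return [template.format(i * ITEMS_PER_GRID, quote(key_word))
            for i in range(pages)]

for url in page_urls(DEMO_TEMPLATE, '深圳', 3):
    print(url)
```

The three printed URLs differ only in `items_offset` (0, 10, 20), which matches the `offset = i*10` step used by the crawler.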

2.2 Encoding Chinese text

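The city keyword (the `query` parameter) cannot be passed as raw Chinese characters; it has to be percent-encoded with `urllib` first.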

```python
def quote_word(word):
    # Percent-encode the keyword (as UTF-8) so it can be used in a URL
    return urllib.parse.quote(word)
```

```python
>>> quote_word('深圳')
'%E6%B7%B1%E5%9C%B3'
```
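As an alternative to encoding only the keyword (not the author's approach), `urllib.parse.urlencode` can build a query string from a dict and encode every value in one step. Only the parameters visible in section 2.1 are used here; the rest of the real query string is elided in the original and left out.

```python
from urllib.parse import urlencode, unquote

# Only the parameters identified earlier; the real URL has more (elided)
params = {'items_offset': 10, 'items_per_grid': 10, 'query': '深圳'}
qs = urlencode(params)
print(qs)  # items_offset=10&items_per_grid=10&query=%E6%B7%B1%E5%9C%B3

# Decoding restores the original keyword
print(unquote('%E6%B7%B1%E5%9C%B3'))  # 深圳
```

`requests` performs the same encoding if the dict is passed as `requests.get(url, params=params)`, which avoids hand-assembling the query string.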

3. Writing the crawler

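Given a search keyword, `get_json` returns a list with one entry per page; each entry is the list of listing dicts found on that page.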

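```python
def get_json(key_word, pages=5, base=base_url, header=header0):
    '''
    key_word: search keyword
    pages:    number of pages to fetch
    base:     API URL template
    header:   HTTP request headers
    '''
    houses = []
    for i in range(pages):
        sleep(1.5)  # pause between requests so the API is not hammered
        print(f'page {i}')
        offset = i * 10  # items_per_grid=10, so each page starts 10 items later
        url = base.format(offset, quote_word(key_word))
        req = requests.get(url, headers=header)
        req.raise_for_status()  # stop on HTTP errors instead of parsing an error page
        dic = json.loads(req.content.decode('utf-8'))
        # The listings sit under explore_tabs[0] -> sections[0] -> listings
        house = dic['explore_tabs'][0]['sections'][0]['listings']
        houses.append(house)  # one list of listings per page
    return houses
```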
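To check the parsing logic without calling the API, the sketch below feeds a hand-made payload that mirrors only the path the crawler reads (`explore_tabs[0]['sections'][0]['listings']`); the listing contents are invented placeholders. Both helpers, `extract_listings` and `flatten_pages`, are hypothetical: the first returns an empty list when the path is missing, the second merges the per-page lists that `get_json` returns into a single list.

```python
def extract_listings(payload):
    """Return the listings from one API response, or [] if the path is missing."""
    try:
        return payload['explore_tabs'][0]['sections'][0]['listings']
    except (KeyError, IndexError, TypeError):
        return []

def flatten_pages(pages):
    """get_json returns one list per page; merge them into a single list."""
    return [listing for page in pages for listing in page]

# Invented sample payload with the same nesting as the real response
sample = {'explore_tabs': [{'sections': [{'listings': [
    {'listing': {'id': 1, 'name': 'A'}},
    {'listing': {'id': 2, 'name': 'B'}},
]}]}]}

pages = [extract_listings(sample), extract_listings({})]
all_listings = flatten_pages(pages)
print(len(all_listings))  # 2
```

Returning `[]` for a malformed page keeps the loop going instead of raising; whether the real API ever returns such pages is not shown in the original.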

4. Saving to MySQL

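First, we need an SQL statement that moves the data from the Python dicts into MySQL. This is a fairly basic approach; pandas could help with the data handling, but this article does not cover it.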

```python
# REPLACE INTO overwrites any existing row with the same primary/unique key.
# The %s placeholders let pymysql escape every value, so quotes in listing
# names no longer break the statement (and no longer need to be stripped).
SQL = """replace into house (airbnb_id, name, city, bathrooms, beds, bedrooms, superhost,
    is_new_listing, lat, lng, person_capacity, preview_amenities, property_type_id, reviews_count,
    room_and_property_type, room_type, tier_id, amenity_ids, can_instant_book, monthly_price_factor,
    price_string, star_rating_color, rating)
    values (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s,
            %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)"""

def insert(cursor, housedic, SQL=SQL):
    # housedic: the list of listing dicts for one page
    for item in housedic:
        listing = item['listing']
        pricing = item['pricing_quote']
        print(listing['name'])
        # Some listings have no preview tags; fall back to an empty rating
        tags = listing.get('preview_tags') or []
        params = (
            listing['id'],
            listing['name'],
            listing['city'],
            listing['bathrooms'],
            listing.get('beds', 0),      # missing on some listings
            listing.get('bedrooms', 0),  # missing on some listings
            int(listing['is_superhost']),
            int(listing['is_new_listing']),
            str(listing['lat']),
            str(listing['lng']),
            listing['person_capacity'],
            str(listing['preview_amenities']),
            listing['property_type_id'],
            listing['reviews_count'],
            listing['room_and_property_type'],
            listing['room_type'],
            listing['tier_id'],
            ','.join(map(str, listing['amenity_ids']))[:255],  # truncated to 255 chars
            int(pricing['can_instant_book']),
            pricing['monthly_price_factor'],
            pricing['price_string'],
            listing['star_rating_color'],
            tags[0]['name'] if tags else '',
        )
        try:
            cursor.execute(SQL, params)
        except Exception as e:
            # Log the error and move on to the next listing
            print(str(e).center(20, '='))
```
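With 23 columns, keeping the column list and the placeholder list in sync by hand is error-prone. A hypothetical helper, `build_replace`, can generate both from a dict of column name to value and return the SQL text plus the parameter tuple for `cursor.execute(sql, params)`. Column names are interpolated into the SQL text, so they must come from your own code, never from scraped data; the values travel separately and are escaped by the driver.

```python
def build_replace(table, row):
    """Build a parameterized REPLACE INTO statement from one dict.

    `row` maps column names to values. Only the values are parameterized;
    the table and column names are trusted strings from the program itself.
    """
    columns = list(row)
    placeholders = ', '.join(['%s'] * len(columns))
    sql = 'replace into {} ({}) values ({})'.format(
        table, ', '.join(columns), placeholders)
    return sql, tuple(row[c] for c in columns)

sql, params = build_replace('house', {'airbnb_id': 1, 'name': 'a "quoted" name'})
print(sql)     # replace into house (airbnb_id, name) values (%s, %s)
print(params)  # (1, 'a "quoted" name')
```

The result would then be passed as `cursor.execute(sql, params)`, exactly like the hand-written `SQL` above.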

Next, use pymysql to write the rows into MySQL.

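```python
def save_to_mysql(dic_list):
    # Connect to the database (replace the credentials with your own).
    # utf8mb4 is used so emoji in listing names can be stored, assuming the
    # table itself also uses utf8mb4.
    conn = pymysql.connect(host='localhost', user='root', password='your_password',
                           database='airbnb', port=3306, charset='utf8mb4')
    try:
        with conn.cursor() as cursor:
            for i, page in enumerate(dic_list):
                print(f'page{i}')
                insert(cursor, page)
        conn.commit()  # commit once after all pages are inserted
    finally:
        conn.close()
```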
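The connection above still hard-codes its credentials. One common alternative, sketched here as an assumption rather than the author's setup, is to read them from environment variables and pass them to `pymysql.connect` as keyword arguments. The function `db_config` and the variable names (`MYSQL_HOST`, `MYSQL_PASSWORD`, ...) are hypothetical.

```python
import os

def db_config():
    """Read MySQL connection settings from environment variables.

    The variable names are hypothetical; the defaults mirror the values
    used in save_to_mysql.
    """
    return {
        'host': os.environ.get('MYSQL_HOST', 'localhost'),
        'user': os.environ.get('MYSQL_USER', 'root'),
        'password': os.environ.get('MYSQL_PASSWORD', ''),
        'database': os.environ.get('MYSQL_DATABASE', 'airbnb'),
        'port': int(os.environ.get('MYSQL_PORT', '3306')),
        'charset': 'utf8mb4',
    }

# Usage: conn = pymysql.connect(**db_config())
```

This keeps the password out of the source file, so the script can be shared without editing it first.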

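```python
def main():
    # Search keyword: 深圳地铁 (Shenzhen Metro), 15 pages of results
    airbnb_list = get_json('深圳地铁', pages=15)
    save_to_mysql(airbnb_list)


if __name__ == '__main__':
    main()
```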