Deploying Tomcat in a k8s cluster with NodePort and Ingress access

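Table of contents

- I. Deploying Tomcat on k8s (NodePort)
- II. Deploying Tomcat on k8s (with an Ingress controller)
  - 1. Deploying Ingress-Nginx
  - 2. Setting up high availability for ingress-nginx
  - 3. Troubleshooting
    - 1. Fixing 404 errors when using Ingress
    - 2. Annotations explained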

I. Deploying Tomcat on k8s (NodePort)

1) k8s environment:

- kubelet version: 1.18.0

- docker version: 18.06.1-ce

- Cluster nodes:

master: 192.168.198.130

node1: 192.168.198.131

node2: 192.168.198.132

2) Deployment:

[root@master tomcat]# vim tomcat.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat9
  template:
    metadata:
      labels:
        app: tomcat9
    spec:
      containers:
      - name: mytomcat
        image: tomcat:9.0
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcatservice
spec:
  type: NodePort
  ports:
  - port: 8080
    targetPort: 8080
    nodePort: 30010
  selector:
    app: tomcat9

[root@master tomcat]# kubectl create -f tomcat.yaml

[root@master tomcat]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tomcat-deploy-b9dd9bbdb-8sdxp 1/1 Running 0 29m 10.244.2.58 node1 <none> <none>

tomcat-deploy-b9dd9bbdb-hfgg6 1/1 Running 0 29m 10.244.2.56 node1 <none> <none>

tomcat-deploy-b9dd9bbdb-sqhln 1/1 Running 0 29m 10.244.2.57 node1 <none> <none>

[root@master tomcat]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d8h <none>

nginx ClusterIP None <none> 8081/TCP 4d11h app=nginx

tomcatservice NodePort 10.106.222.108 <none> 8080:30010/TCP 29m app=tomcat9

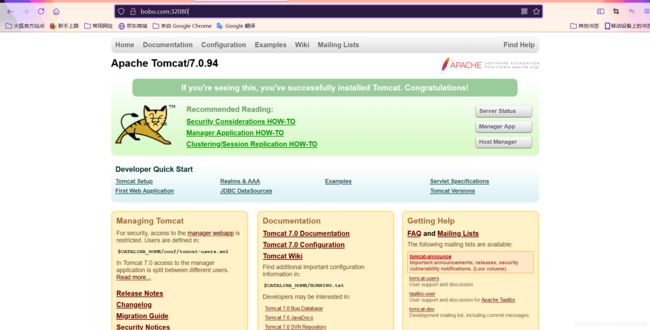

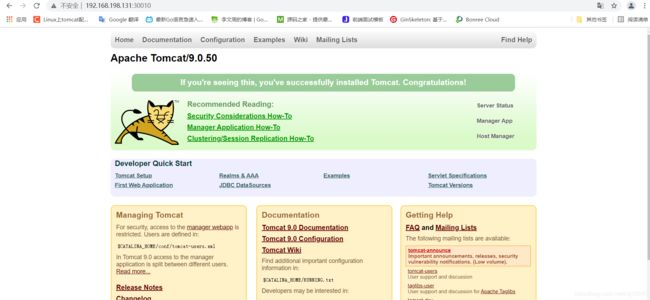

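The tomcat.yaml above exposes NodePort 30010, so the service can be reached on any node IP at port 30010.

The request, however, returns 404 Not Found, so the next step is to check the logs. (Checking the logs is an important step that resolves many problems.)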

[root@master tomcat]# kubectl logs tomcat-deploy-b9dd9bbdb-8sdxp

NOTE: Picked up JDK_JAVA_OPTIONS: --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.rmi/sun.rmi.transport=ALL-UNNAMED

17-Jul-2021 12:19:23.652 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version name: Apache Tomcat/9.0.50

17-Jul-2021 12:19:23.665 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server built: Jun 28 2021 08:46:44 UTC

17-Jul-2021 12:19:23.665 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version number: 9.0.50.0

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Name: Linux

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Version: 3.10.0-1160.31.1.el7.x86_64

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Architecture: amd64

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Java Home: /usr/local/openjdk-11

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Version: 11.0.11+9

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Vendor: Oracle Corporation

17-Jul-2021 12:19:23.666 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_BASE: /usr/local/tomcat

17-Jul-2021 12:19:23.667 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_HOME: /usr/local/tomcat

17-Jul-2021 12:19:23.768 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: --add-opens=java.base/java.lang=ALL-UNNAMED

17-Jul-2021 12:19:23.768 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: --add-opens=java.base/java.io=ALL-UNNAMED

17-Jul-2021 12:19:23.769 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: --add-opens=java.base/java.util=ALL-UNNAMED

17-Jul-2021 12:19:23.769 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: --add-opens=java.base/java.util.concurrent=ALL-UNNAMED

17-Jul-2021 12:19:23.769 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: --add-opens=java.rmi/sun.rmi.transport=ALL-UNNAMED

17-Jul-2021 12:19:23.769 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties

17-Jul-2021 12:19:23.769 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager

17-Jul-2021 12:19:23.770 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djdk.tls.ephemeralDHKeySize=2048

17-Jul-2021 12:19:23.770 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.protocol.handler.pkgs=org.apache.catalina.webresources

17-Jul-2021 12:19:23.770 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dorg.apache.catalina.security.SecurityListener.UMASK=0027

17-Jul-2021 12:19:23.770 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dignore.endorsed.dirs=

17-Jul-2021 12:19:23.770 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.base=/usr/local/tomcat

17-Jul-2021 12:19:23.771 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.home=/usr/local/tomcat

17-Jul-2021 12:19:23.771 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.io.tmpdir=/usr/local/tomcat/temp

17-Jul-2021 12:19:23.782 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent Loaded Apache Tomcat Native library [1.2.30] using APR version [1.6.5].

17-Jul-2021 12:19:23.782 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent APR capabilities: IPv6 [true], sendfile [true], accept filters [false], random [true], UDS [true].

17-Jul-2021 12:19:23.782 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent APR/OpenSSL configuration: useAprConnector [false], useOpenSSL [true]

17-Jul-2021 12:19:23.817 INFO [main] org.apache.catalina.core.AprLifecycleListener.initializeSSL OpenSSL successfully initialized [OpenSSL 1.1.1d 10 Sep 2019]

17-Jul-2021 12:19:27.069 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["http-nio-8080"]

17-Jul-2021 12:19:27.326 INFO [main] org.apache.catalina.startup.Catalina.load Server initialization in [5873] milliseconds

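The log shows that Tomcat started successfully. A 404 usually means the requested file was not found, so open a shell in the container and take a look.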

[root@master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

tomcat-deploy-b9dd9bbdb-8sdxp 1/1 Running 0 34m

tomcat-deploy-b9dd9bbdb-hfgg6 1/1 Running 0 34m

tomcat-deploy-b9dd9bbdb-sqhln 1/1 Running 0 34m

[root@master tomcat]# kubectl exec tomcat-deploy-b9dd9bbdb-8sdxp -it -- /bin/bash

root@tomcat-deploy-b9dd9bbdb-8sdxp:/usr/local/tomcat# ls

BUILDING.txt LICENSE README.md RUNNING.txt conf logs temp webapps.dist

CONTRIBUTING.md NOTICE RELEASE-NOTES bin lib native-jni-lib webapps work

root@tomcat-deploy-b9dd9bbdb-8sdxp:/usr/local/tomcat# cp -R webapps.dist/. webapps/

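Run this command to copy everything under webapps.dist into webapps; the page is then reachable.

This happens because the official image keeps the default web applications in webapps.dist and leaves webapps empty. The copy only affects this one pod and is lost when the pod is recreated, so building your own image is the more durable fix.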
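Short of building a custom image, one option is to do the copy at container start so that every replica is populated. The following is a minimal sketch, not the author's manifest: it assumes the official `tomcat:9.0` image keeps its default layout (`/usr/local/tomcat`, with `catalina.sh` on the `PATH`), and the `command`/`args` override is an addition for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat9
  template:
    metadata:
      labels:
        app: tomcat9
    spec:
      containers:
      - name: mytomcat
        image: tomcat:9.0
        # Assumption: replace the image's default start command so that
        # webapps/ is filled from webapps.dist/ before Tomcat starts.
        command: ["/bin/sh", "-c"]
        args:
        - "cp -R /usr/local/tomcat/webapps.dist/. /usr/local/tomcat/webapps/ && exec catalina.sh run"
        ports:
        - containerPort: 8080
```

Because the copy runs on every start, recreated pods come up with the default applications in place. A custom image remains the cleaner choice once a real application is deployed.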

II. Deploying Tomcat on k8s (with an Ingress controller)

Ingress-Nginx GitHub repository

Ingress-Nginx official website

1. Deploying Ingress-Nginx

[root@master ingress]# vi mandatory.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: default-http-backend
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        image: registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5 # pulling this image on the worker nodes in advance is recommended
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          # CPU and memory were adjusted here; the minimum a cluster accepts may differ, and the deployment failure message shows the reason
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: default-http-backend
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: nginx-ingress-controller
        image: suisrc/ingress-nginx:0.30.0 # pulling this image on the worker nodes in advance is recommended
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx
        - --annotations-prefix=nginx.ingress.kubernetes.io
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
          # www-data -> 101
          runAsUser: 101
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        - name: https
          containerPort: 443
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
    # HTTP
    nodePort: 32080
  - name: https
    port: 443
    targetPort: 443
    protocol: TCP
    # HTTPS
    nodePort: 32443
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

[root@master nodeport]# kubectl apply -f mandatory.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

deployment.apps/default-http-backend created

service/default-http-backend created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

limitrange/ingress-nginx created

service/ingress-nginx created

[root@master nodeport]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

default-http-backend-59c5fc7f59-7wjth 1/1 Running 0 107s

nginx-ingress-controller-5cbc696d9f-bft9g 1/1 Running 0 106s

nginx-ingress-controller-5cbc696d9f-scpjs 1/1 Running 0 106s

[root@master nodeport]# kubectl get svc -n ingress-nginx # check the ingress-nginx Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default-http-backend ClusterIP 10.99.172.167 <none> 80/TCP 51m

ingress-nginx NodePort 10.104.151.116 <none> 80:32080/TCP,443:32443/TCP 51m

The response at this point is expected: the ingress-nginx Service has no backend services behind it yet. The Ingress controller is now deployed successfully.

2) Deploying a Tomcat backend service to verify the Ingress

[root@master ingress]# vi tomcat-deploy.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
  namespace: default # the default namespace is fine
spec:
  selector:
    app: tomcat
    release: canary
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  - name: ajp
    port: 8009
    targetPort: 8009
---
apiVersion: apps/v1
kind: Deployment
metadata:
  # Note: this reuses the name of the Deployment from section I. A Deployment's selector is immutable,
  # so delete the earlier one first (kubectl delete -f tomcat.yaml) or choose a different name.
  name: tomcat-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat
      release: canary
  template:
    metadata:
      labels:
        app: tomcat
        release: canary
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5
        ports:
        - name: httpd
          containerPort: 8080
        - name: ajp
          containerPort: 8009

[root@master tomcat]# kubectl apply -f tomcat-deploy.yaml

[root@master tomcat]# kubectl get pod |grep tomcat-deploy # check that the pods are running

tomcat-deploy-b9dd9bbdb-8sdxp 1/1 Running 0 3h33m

tomcat-deploy-b9dd9bbdb-hfgg6 1/1 Running 0 3h33m

tomcat-deploy-b9dd9bbdb-sqhln 1/1 Running 0 3h33m

3) Verifying HTTP access

3.1 Add Tomcat to ingress-nginx

[root@master ingress]# vim ingress-tomcat.yaml

apiVersion: extensions/v1beta1 # if this version is not supported, try networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: bobo.com # map this domain to a node IP in the hosts file of the client machine
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat
          servicePort: 8080

[root@master http]# kubectl apply -f ingress-tomcat.yaml

ingress.networking.k8s.io/ingress-tomcat created

[root@master http]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-tomcat <none> bobo.com 10.104.151.116 80 9s
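The `extensions/v1beta1` and `networking.k8s.io/v1beta1` Ingress APIs are served by Kubernetes 1.18 but were removed in 1.22. For reference, the same rule in the `networking.k8s.io/v1` schema (available from 1.19) might look like the sketch below. It assumes a controller release that understands the v1 API and an IngressClass named `nginx`; neither is part of the setup in this article, which uses controller 0.30.0.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
spec:
  ingressClassName: nginx   # assumption: an IngressClass with this name exists
  rules:
  - host: bobo.com
    http:
      paths:
      - path: /
        pathType: Prefix    # required in v1; Prefix on "/" matches every path
        backend:
          service:
            name: tomcat    # replaces serviceName
            port:
              number: 8080  # replaces servicePort
```

The main differences are the mandatory `pathType`, the nested `service.name` / `service.port` backend, and `spec.ingressClassName` in place of the class annotation.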

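3.2 Add the domain to the hosts file of the client machine, for example 192.168.198.131 bobo.com, then open http://bobo.com:32080.

4) Verifying HTTPS access

4.1 Create a self-signed certificate and Secret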

[root@master https]# openssl genrsa -out tls.key 2048

Generating RSA private key, 2048 bit long modulus

...............................................................+++

..+++

e is 65537 (0x10001)

[root@master https]# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=GD/L=SZ/O=FSI/CN=bobo.com

# The CN must be the domain that serves the site. On success, tls.crt is created in the current directory.

[root@master https]# kubectl create secret tls tomcat-secret --cert=tls.crt --key=tls.key

secret/tomcat-secret created # a Secret named tomcat-secret has been created
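`kubectl create secret tls` produces a Secret of type `kubernetes.io/tls` holding the keys `tls.crt` and `tls.key`. If the Secret should live alongside the other manifests, a declarative equivalent might look like the sketch below. The two values are placeholders, not real data, and must be replaced with the base64-encoded contents of the files generated above.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: tomcat-secret
  namespace: default
type: kubernetes.io/tls
data:
  # Placeholders: substitute the base64-encoded file contents.
  tls.crt: <base64-encoded contents of tls.crt>
  tls.key: <base64-encoded contents of tls.key>
```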

4.2 Apply the certificate to the Tomcat service

[root@master https]# vi ingress-tomcat-tls.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat-tls
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  tls:
  - hosts:
    - bobo.com # must match the domain in the certificate
    secretName: tomcat-secret # name of the TLS Secret
  rules:
  - host: bobo.com
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat
          servicePort: 8080

[root@master https]# kubectl apply -f ingress-tomcat-tls.yaml

ingress.extensions/ingress-tomcat-tls created

[root@master https]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-tomcat <none> bobo.com 10.104.151.116 80 15m

ingress-tomcat-tls <none> bobo.com 10.104.151.116 80, 443 25s

[root@master https]# kubectl get svc -n ingress-nginx # check the NodePort used for HTTPS

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default-http-backend ClusterIP 10.99.172.167 <none> 80/TCP 107m

ingress-nginx NodePort 10.104.151.116 <none> 80:32080/TCP,443:32443/TCP 107m

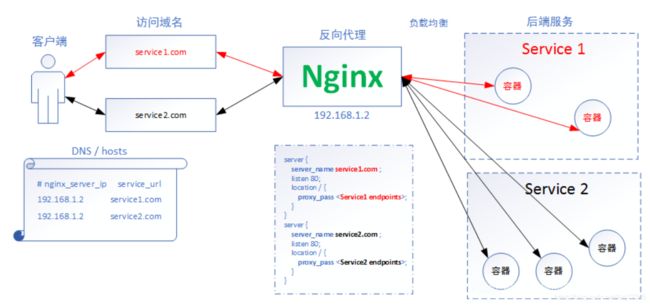

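2. Setting up high availability for ingress-nginx

Approach: ingress-nginx + nodeSelector (pins the controller pods to labeled nodes) + keepalived for high availability (master/backup).

Service official documentation

Ingress official documentation

Ingress installation guide

About keepalived

From the official site: https://www.keepalived.org/doc/introduction.html

Load balancing is a method of distributing IP traffic across a cluster of real servers, providing one or more highly available virtual services. When designing a load-balanced topology, it is important to account for the availability of the load balancer itself as well as the real servers behind it.

Keepalived provides frameworks for both load balancing and high availability. The load-balancing framework relies on the well-known and widely used Linux Virtual Server (IPVS) kernel module, which provides layer 4 load balancing. Keepalived implements a set of health checkers to dynamically and adaptively maintain and manage the load-balanced server pool according to its health. High availability is achieved through the Virtual Router Redundancy Protocol (VRRP), which is the foundation of router failover. In addition, keepalived implements a set of hooks into the VRRP finite state machine that provide low-level, high-speed protocol interactions. Each keepalived framework can be used independently or together with the others to build a resilient infrastructure.

- Installing keepalived: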

| Host | IP |
|---|---|
| node1 | 192.168.0.109 |
| node2 | 192.168.0.227 |

Label node1 and node2:

[root@ecs-431f-0001 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 57d v1.18.0

node1 Ready <none> 12d v1.18.0

node2 Ready <none> 12d v1.18.0

[root@ecs-431f-0001 ~]# kubectl label nodes node1 ingress=true

[root@ecs-431f-0001 ~]# kubectl label nodes node2 ingress=true

[root@ecs-431f-0001 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready master 57d v1.18.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ingress=true,kubernetes.io/arch=amd64,kubernetes.io/hostname=ecs-431f-0001,kubernetes.io/os=linux,node-role.kubernetes.io/master=

node1 Ready <none> 12d v1.18.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,`ingress=true`,kubernetes.io/arch=amd64,kubernetes.io/hostname=ecs-431f-0002,kubernetes.io/os=linux

node2 Ready <none> 12d v1.18.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,`ingress=true`,kubernetes.io/arch=amd64,kubernetes.io/hostname=ecs-431f-0003,kubernetes.io/os=linux

Install keepalived on both node1 and node2:

[root@ecs-431f-0001 ~]# yum install -y keepalived

Edit the configuration file so that node1 is MASTER and node2 is BACKUP.

On node1, open the file and modify it:

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 120
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
        192.168.200.17
        192.168.200.18
    }
}

...

On node2, open the file and modify it:

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 110
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
        192.168.200.17
        192.168.200.18
    }
}

...

Start keepalived: systemctl start keepalived

Enable it at boot: systemctl enable keepalived

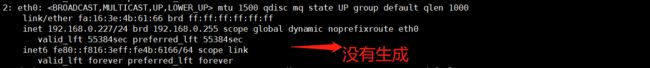

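- Verifying VIP failover

Run ip addr on node1 to check whether the VIP has been assigned.

Run ip addr on node2 to check whether the VIP has been assigned.

Stop the keepalived service on node1, then watch how node1 and node2 change.

Stop keepalived on node1 with systemctl stop keepalived and check the change on node1 (the VIP should no longer be listed).

On node2, the VIP has now been taken over.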

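- About Services

A Service is an abstraction over a group of Pods that provide the same function, and gives them a single entry point. With Services, applications get service discovery and load balancing and can be upgraded with zero downtime. A Service selects its backends by label, usually together with a Replication Controller or Deployment that keeps the backend containers running. The IPs and ports of the Pods matching the labels form the endpoints, and kube-proxy load-balances traffic for the Service IP across those endpoints.

- There are four Service types:

  - ClusterIP: the default type; automatically allocates a virtual IP that is reachable only from inside the cluster.

  - NodePort: builds on ClusterIP and binds a port on every node, so the Service can be reached at <NodeIP>:NodePort. If kube-proxy is started with --nodeport-addresses=10.240.0.0/16 (supported since v1.10), the NodePort is only served on node IPs within that range.

  - LoadBalancer: builds on NodePort and uses the cloud provider to create an external load balancer that forwards requests to <NodeIP>:NodePort.

  - ExternalName: maps the Service to an external domain name through a DNS CNAME record (set with spec.externalName). Requires kube-dns 1.7 or later.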
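ClusterIP and NodePort Services appear throughout this article, but ExternalName does not, so a minimal sketch may help. The Service name and the external hostname below are hypothetical and only illustrate the shape of the manifest.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db              # hypothetical Service name
  namespace: default
spec:
  type: ExternalName
  externalName: db.example.com   # hypothetical external hostname
```

Pods in the cluster that look up `external-db.default.svc.cluster.local` receive a CNAME for `db.example.com`. No selector, endpoints or proxying are involved.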

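- About Ingress

An Ingress acts as a gateway that exposes applications to users. The NodePort approach used earlier is comparatively hard to maintain because every Service needs its own port, which adds work. ingress-nginx solves this: only ingress-nginx itself has to be exposed on a port.

With hostNetwork, no Service is needed in front of the controller, which is the most efficient option, and layer 4 load balancing can be set up without changing the pod template. The one caveat is that a hostNetwork pod inherits the host's network settings, including its DNS, so lookups for Services go to the host's upstream DNS servers rather than to the cluster DNS server. Setting dnsPolicy: ClusterFirstWithHostNet on the pod resolves this.

- Deploying the Ingress controller

Download the ingress YAML file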

[root@ecs-431f-0001 ingress]# vi mandatory.yaml

# Create the ingress-nginx namespace
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
# Deployment replaced with DaemonSet; replicas is commented out
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  #replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true # with hostNetwork the pod uses the host's network interfaces, so the application is reachable on every interface of the host
      dnsPolicy: ClusterFirstWithHostNet # a hostNetwork pod otherwise uses the host's DNS and cannot resolve Service names in the cluster; this policy restores cluster DNS
      nodeSelector:
        ingress: "true" # node selection: schedule only onto the two worker nodes labeled above
      containers:
      - name: nginx-ingress-controller
        image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
        args:
        - /nginx-ingress-controller
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx
        - --annotations-prefix=nginx.ingress.kubernetes.io
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
          # www-data -> 101
          runAsUser: 101
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        - name: https
          containerPort: 443
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container
---
# Expose the ingress Service on NodePort 32080 (HTTP) and 32443 (HTTPS)
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
    # HTTP
    nodePort: 32080
  - name: https
    port: 443
    targetPort: 443
    protocol: TCP
    # HTTPS
    nodePort: 32443
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

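Apply the manifest with kubectl apply -f mandatory.yaml, then check the DaemonSet with kubectl get ds -n ingress-nginx. Because keepalived is already running in a master/backup arrangement as described above, this setup guards against a single node suddenly going down.

- Check the deployment

There is no backend service yet. Older ingress-nginx versions failed to start when the default backend Service could not be found; newer versions ship with a built-in 404 page.

- Create the Service and Deployment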

[root@ecs-431f-0001 test]# vi tomcat-deploy.yaml

apiVersion: v1

kind: Service

metadata:

name: tomcat

namespace: default #使用默认的名称空间即可

spec:

selector:

app: tomcat

release: canary

ports:

- name: http

port: 8080

targetPort: 8080

- name: ajp

port: 8009

targetPort: 8009

# 部署tomcat应用

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: tomcat-deploy

spec:

replicas: 1

selector:

matchLabels:

app: tomcat

release: canary

template:

metadata:

labels:

app: tomcat

release: canary

spec:

containers:

- name: tomcat

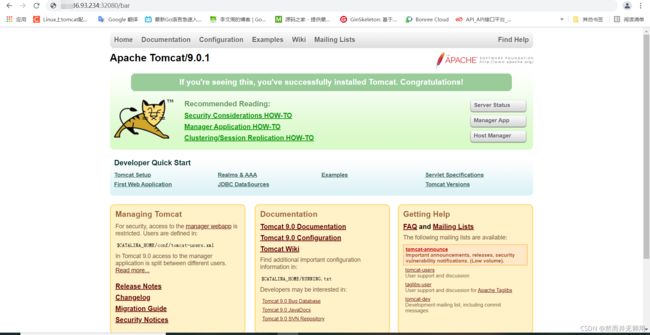

image: 39.106.254.42/harbor/tomcat:9.0 #因为已经将tomcat镜像push到了harbor仓库中

ports:

- name: httpd

containerPort: 8080

- name: ajp

containerPort: 8009

部署Service应用

[root@ecs-431f-0001 test]# kubectl apply -f tomcat-deploy.yaml

[root@ecs-431f-0001 test]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 57d

tomcat ClusterIP 10.108.222.238 <none> 8080/TCP,8009/TCP 36h

[root@ecs-431f-0001 test]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

tomcat-deploy 1/1 1 1 36h

- Create the Ingress resource

apiVersion: extensions/v1beta1 # if this version is not supported, try networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx" # selects ingress-nginx; the class must be nginx for ingress-nginx to handle this Ingress
    nginx.ingress.kubernetes.io/use-regex: "true" # match the paths of this virtual host as regular expressions in the Nginx location directive
    nginx.ingress.kubernetes.io/rewrite-target: /$2 # rewrite the path to the second capture group, so /foo/bar reaches the backend as /bar
    # nginx.ingress.kubernetes.io/configuration-snippet: |
    #   rewrite ^/wh-console/(.*)$ /bar/wh-console/$2 redirect;
    #   rewrite ^/static/(.*)$ /bar/static/$2 redirect;
    nginx.ingress.kubernetes.io/ssl-redirect: "false" # when this virtual host supports HTTPS, whether HTTP requests are forced over to HTTPS; the global default is true
    nginx.ingress.kubernetes.io/enable-cors: "true" # whether to enable CORS support; the default is false
spec:
  rules:
  - host: bobo.com # map this domain to a node IP in the hosts file of the client machine
    http:
      paths:
      - path: /foo(/|$)(.*)
        pathType: Prefix
        backend:
          serviceName: tomcat
          servicePort: 8080

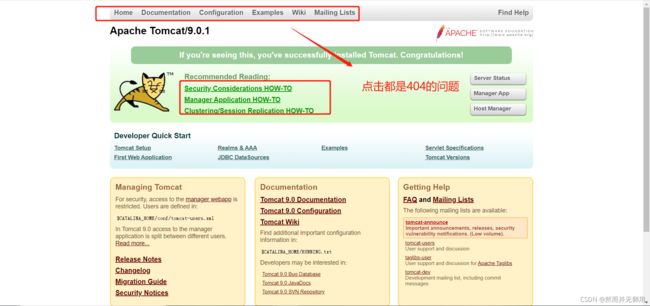

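3. Troubleshooting

1. Fixing 404 errors when using Ingress

Problem: the path in the request differs from the path the backend actually serves.

Fix: adjust the request path, or rewrite it in the Ingress (as the rewrite-target annotation above does) so that the two match.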