Hudi Learning 01 -- Introduction to Hudi, Building and Installing

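Table of Contents

- Introduction to Hudi
  - Hudi Overview
  - Hudi Features
  - Hudi Use Cases
- Building and Installing Hudi
  - Installing Maven
  - Building Hudi
    - Modifying the pom file
    - Patching the source for Hadoop 3 compatibility
    - Resolving the Spark module dependency conflict
    - Hudi build command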

Introduction to Hudi

Hudi Overview

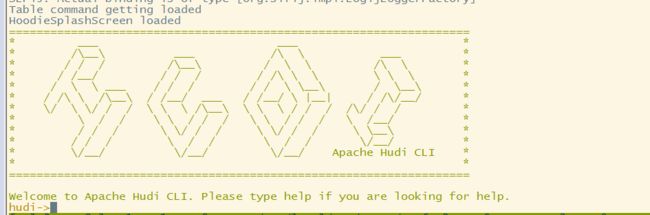

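Apache Hudi (Hadoop Upserts Deletes and Incrementals) is a next-generation streaming data lake platform. It brings core warehouse and database functionality directly to the data lake. Hudi provides tables, transactions, efficient upserts/deletes, advanced indexes, streaming ingestion services, data clustering/compaction optimizations, and concurrency, all while keeping the data in open-source file formats.

Apache Hudi is not only well suited to streaming workloads; it also lets you build efficient incremental batch pipelines. It integrates with execution engines such as Spark, Flink, Presto, Trino, and Hive.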

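Hudi Features

- Fast upsert/delete backed by a pluggable indexing mechanism
- Incremental pulls of table changes for downstream processing
- Transactional commits and rollbacks, with concurrency control
- SQL reads and writes from engines such as Spark, Flink, Presto, Trino, and Hive
- Automatic small-file management, data compaction, and cleaning
- Streaming ingestion, with built-in CDC sources and tools
- Built-in metadata tracking for scalable storage access
- Backward-compatible support for table schema changes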

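Hudi Use Cases

1. Near-real-time ingestion

- Reduces reliance on a patchwork of fragmented tools
- CDC-based incremental import of RDBMS data
- Keeps the size and number of small files in check

2. Near-real-time analytics

- Saves resources compared with second-level stores (Druid, OpenTSDB)
- Provides minute-level freshness and supports more efficient queries
- Hudi ships as a library, so it is very lightweight

3. Incremental pipelines

- Distinguishes arrival time from event time in order to handle late data
- Shorter scheduling intervals reduce end-to-end latency from hours to minutes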

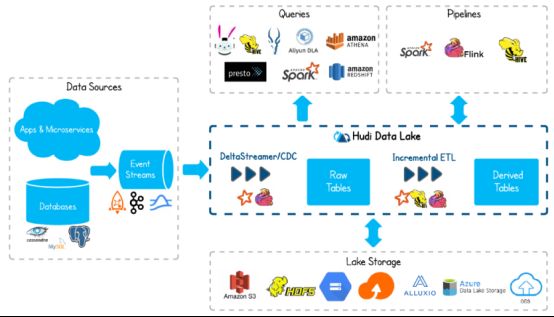

Building and Installing Hudi

The versions of the components involved are listed in the table below.

| Component | Version |
|---|---|
| Hadoop | 3.3.2 |
| Hive | 3.1.2 |
| Spark | 3.3.1 |
| Flink | 1.14.5 |
| Hudi | 0.12.0 |
| Maven | 3.6.3 |

Installing Maven

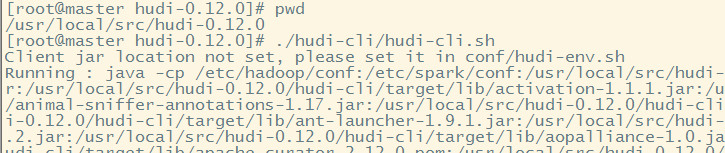

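Download the Maven package apache-maven-3.6.3-bin.tar.gz from the Tsinghua mirror and extract it on the server to /usr/local/src/apache-maven-3.6.3. Create a symlink named maven that points to it, create the local repository directory /usr/local/src/maven_repository, and configure the environment variables in /etc/profile. Then reload the profile and verify the installation: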

source /etc/profile

mvn -v
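The installation steps described above could be scripted roughly as follows. This is only a sketch: it assumes the tarball has already been downloaded, and the helper name `install_maven` and its three parameters are made up for illustration. The profile file is a parameter so the function can be tried against a scratch file before touching /etc/profile.

```shell
# Sketch only: install_maven is a hypothetical helper, not a Maven or Hudi tool.
#   $1: path to apache-maven-3.6.3-bin.tar.gz (assumed to be downloaded already)
#   $2: install prefix, e.g. /usr/local/src
#   $3: profile file that receives the environment variables, e.g. /etc/profile
install_maven() {
  # Create the prefix together with the local repository directory.
  mkdir -p "$2/maven_repository" || return 1
  # Unpack the distribution; the archive holds a top-level apache-maven-3.6.3 directory.
  tar -xzf "$1" -C "$2" || return 1
  # Create (or refresh) the "maven" symlink next to the unpacked directory.
  ln -sfn apache-maven-3.6.3 "$2/maven" || return 1
  # Append MAVEN_HOME and PATH; the second line is written literally, unexpanded.
  {
    printf 'export MAVEN_HOME=%s/maven\n' "$2"
    printf '%s\n' 'export PATH=$PATH:$MAVEN_HOME/bin'
  } >> "$3"
}

# Example (as root):
# install_maven apache-maven-3.6.3-bin.tar.gz /usr/local/src /etc/profile
```

Note that the function appends to the profile file on every call, so running it twice leaves duplicate export lines.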

Edit Maven's configuration file ${MAVEN_HOME}/conf/settings.xml to use the Aliyun mirror and to set the local repository path.

<settings>
  <localRepository>/usr/local/src/maven_repository</localRepository>
  <mirrors>
    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>central</mirrorOf>
      <name>Nexus aliyun</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </mirror>
  </mirrors>
</settings>

Building Hudi

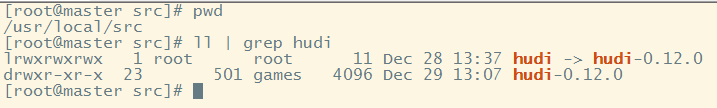

Download the Hudi 0.12.0 source package hudi-0.12.0.src.tgz from the Tsinghua mirror, upload it to the server, and extract it to /usr/local/src/hudi-0.12.0.

Modifying the pom file

The pom.xml file is located at /usr/local/src/hudi-0.12.0/pom.xml.

- Add the Aliyun repository to the Hudi project's pom.xml to speed up downloads

<repository>
  <id>nexus-aliyun</id>
  <name>nexus-aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
  <releases>
    <enabled>true</enabled>
  </releases>
  <snapshots>
    <enabled>false</enabled>
  </snapshots>
</repository>

- Change the versions of the dependent components

<hadoop.version>3.3.2</hadoop.version>
<hive.version>3.1.2</hive.version>
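If you prefer to script the version change rather than edit pom.xml by hand, something along these lines may work. It is a sketch under assumptions: `set_pom_property` is a made-up helper, and it rewrites every line on which the property element appears, so review the resulting diff before building.

```shell
# Sketch only: set_pom_property is a hypothetical helper.
#   $1: path to pom.xml   $2: property name   $3: new value
set_pom_property() {
  # Rewrite <name>old</name> to <name>new</name>. Output goes to a temp file
  # first, so a failing sed never truncates the original pom.xml.
  sed "s#<$2>[^<]*</$2>#<$2>$3</$2>#" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Example:
# set_pom_property /usr/local/src/hudi-0.12.0/pom.xml hadoop.version 3.3.2
# set_pom_property /usr/local/src/hudi-0.12.0/pom.xml hive.version 3.1.2
```

The temp-file-and-move approach is used instead of `sed -i` because the in-place flag behaves differently across sed implementations.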

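Patching the source for Hadoop 3 compatibility

In the Hudi project directory, the file ./hudi-common/src/main/java/org/apache/hudi/common/table/log/block/HoodieParquetDataBlock.java needs to be modified.

On line 110, the call originally takes a single argument; add null as a second argument.

Otherwise the build fails because of an incompatibility between Hadoop 2.x and 3.x (you can try building without this change to see the error).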
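Before editing, it can help to confirm that line 110 in your copy of the source really is the call in question, since line numbers shift between releases. A small sketch, with `show_lines` being a made-up helper name:

```shell
# Sketch only: show_lines is a hypothetical helper.
#   $1: file   $2: first line number   $3: last line number
show_lines() {
  # Print each line in the requested range, prefixed with its line number.
  awk -v s="$2" -v e="$3" 'NR >= s && NR <= e { printf "%d: %s\n", NR, $0 }' "$1"
}

# Example (from the Hudi project directory):
# show_lines ./hudi-common/src/main/java/org/apache/hudi/common/table/log/block/HoodieParquetDataBlock.java 108 112
```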

Resolving the Spark module dependency conflict

After changing the Hive version to 3.1.2, a dependency conflict appears: Hive 3.1.2 brings in Jetty 9.3.x, while Hudi itself uses Jetty 9.4.x.

1. Modify the hudi-spark-bundle pom file to exclude the older Jetty and add the Jetty version that Hudi specifies:

Edit /usr/local/src/hudi-0.12.0/packaging/hudi-spark-bundle/pom.xml: exclude the Jetty dependencies pulled in through Hive, then add the Jetty dependencies that Hudi uses.

Otherwise, writing data to a Hudi table with Spark later fails with the following error (try it if you are skeptical):

java.lang.NoSuchMethodError: org.apache.hudi.org.apache.jetty.server.session.SessionHandler.setHttpOnly(Z)V

<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.pentaho</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service-rpc</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-jdbc</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-metastore</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.datanucleus</groupId>
      <artifactId>datanucleus-core</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-common</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty.orbit</groupId>
      <artifactId>javax.servlet</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>

2. Modify the hudi-utilities-bundle pom file to exclude the older Jetty and add the Jetty version that Hudi specifies:

Edit /usr/local/src/hudi-0.12.0/packaging/hudi-utilities-bundle/pom.xml: exclude the Jetty dependencies pulled in through Hive and the Hudi modules, then add the Jetty dependencies that Hudi uses.

Otherwise, inserting data into a Hudi table with the DeltaStreamer tool later fails with the same kind of Jetty error.

<dependency>
  <groupId>org.apache.hudi</groupId>
  <artifactId>hudi-common</artifactId>
  <version>${project.version}</version>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>org.apache.hudi</groupId>
  <artifactId>hudi-client-common</artifactId>
  <version>${project.version}</version>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <artifactId>servlet-api</artifactId>
      <groupId>javax.servlet</groupId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.pentaho</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service-rpc</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-jdbc</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-metastore</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.datanucleus</groupId>
      <artifactId>datanucleus-core</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-common</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty.orbit</groupId>
      <artifactId>javax.servlet</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>
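One way to check which Jetty artifacts a bundle module still resolves after these pom changes is the Maven dependency plugin's `dependency:tree` goal with its `includes` filter. The wrapper below is only a sketch: `jetty_tree` and the `MVN` override variable are made-up names, and resolving a single module with `-pl` may require the sibling Hudi modules to be available in the local repository first.

```shell
# Sketch only: jetty_tree is a hypothetical wrapper around the Maven dependency plugin.
#   $1: Hudi source root   $2: module path relative to the root
#   remaining arguments are passed through to Maven (for example the build's -D flags)
# MVN may be set to override the Maven executable; it defaults to "mvn".
jetty_tree() {
  root="$1"; module="$2"; shift 2
  # Run in a subshell so the caller's working directory stays unchanged.
  ( cd "$root" && "${MVN:-mvn}" dependency:tree -pl "$module" -Dincludes=org.eclipse.jetty "$@" )
}

# Example:
# jetty_tree /usr/local/src/hudi-0.12.0 packaging/hudi-spark-bundle -Dspark3.3 -Dscala-2.12
# jetty_tree /usr/local/src/hudi-0.12.0 packaging/hudi-utilities-bundle -Dspark3.3 -Dscala-2.12
```

If the output lists only one Jetty version per artifact, the exclusions are probably doing their job; mixed 9.3.x and 9.4.x entries suggest an exclusion is still missing.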

Hudi build command

mvn clean package -DskipTests -Dspark3.3 -Dflink1.14 -Dscala-2.12 -Dhadoop.version=3.3.2 -Pflink-bundle-shade-hive3
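Once the build finishes, the bundle jars should end up under the target directories of the packaging modules. A small sketch for listing them follows; `list_bundles` is a made-up helper, and the exact jar names depend on the Spark, Flink, and Scala flags used for the build.

```shell
# Sketch only: list_bundles is a hypothetical helper.
#   $1: Hudi source root
list_bundles() {
  # Look only inside target/ directories below packaging/ and print the bundle jars sorted.
  find "$1/packaging" -path '*/target/*' -name 'hudi-*bundle*.jar' | sort
}

# Example:
# list_bundles /usr/local/src/hudi-0.12.0
```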