huggingface transformer模型库使用(pytorch)

参考: https://huggingface.co/docs

transformer库 介绍

使用群体:

- 寻找使用、研究或者继承大规模的Tranformer模型的机器学习研究者和教育者

- 想微调模型服务于他们产品的动手实践就业人员

- 想去下载预训练模型,解决特定机器学习任务的工程师

两个主要目标:

- 尽可能见到迅速上手(只有3个标准类,配置,模型,预处理类。两个API,pipeline使用模型,trainer训练和微调模型,这个库不是用来建立神经网络的模块库,你可以用Pytorch,Python,TensorFlow,Kera模块继承基础类复用模型加载和保存功能)

- 提供最先进,性能最接近原始模型(每种架构至少一个例子复现原作者产生的结果,代码尽可能接近原作者,所以可能不是pytorchic)

其他目标

- 尽可能连续公开模型内部(API,标准化)

- 同一个主观选择的,有前景的工具微调,探讨模型

- 容易在 PyTorch, TensorFlow 2.0 ,Flax互相转换,可以在一个框架上训练,另一个框架上推理

主要理念

这个库基于3种类型的类建立

- Model classes

- Configuration classes

- Preprocessing classes将原始数据转化为模型可以接收的格式

所有类可以从预训练实例种初始化,本地报错,分享到Hub上.from_pretrained(),save_pretrained(),push_to_hub()

transformers 历史

Transformer是一种用于自然语言处理的神经网络模型,由Google在2017年提出,被认为是自然语言处理领域的一次重大突破。它是一种基于注意力机制的序列到序列模型,可以用于机器翻译、文本摘要、语音识别等任务。

Transformer模型的核心思想是自注意力机制。传统的RNN和LSTM等模型,需要将上下文信息通过循环神经网络逐步传递,存在信息流失和计算效率低下的问题。而Transformer模型采用自注意力机制,可以同时考虑整个序列的上下文信息,不需要依赖于序列的顺序,从而避免了信息流失和复杂的计算。

Transformer模型由编码器和解码器两部分组成,其中编码器用于将输入序列转换为抽象的上下文向量,解码器则将上下文向量转换为目标序列。Transformer模型的每一层都由多头自注意力机制和前馈神经网络组成,使得模型具有充分的表达能力和高效的计算效率。

Transformer模型在机器翻译、文本摘要、语音识别等任务上取得了很好的效果,被广泛应用于自然语言处理领域。

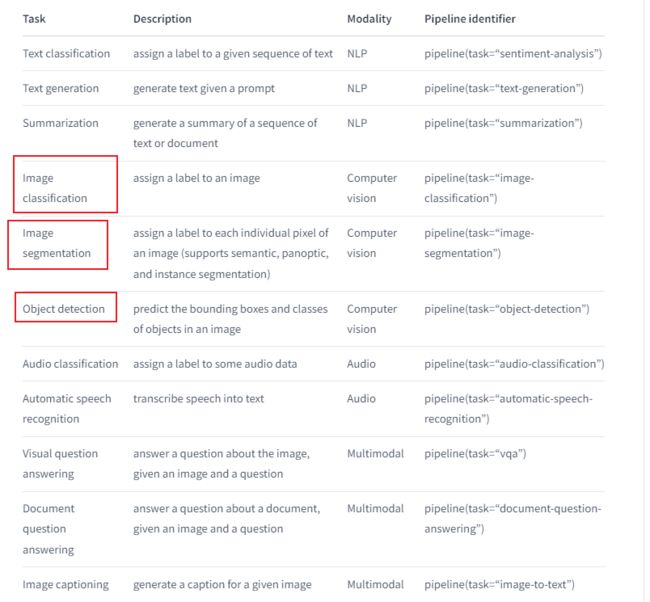

transformer 能力

是NLP,CV,audio,speech processing 任务的库,也包含了非Transformer模型

CV任务可以分成两类,使用卷积去学习图像的层次特征(从低级到高级)

把一张图像分成多块,使用一个transformer组件学习每一块之间的联系。

Audio

音频和语音处理

输入是连续信号,音频不能像文本一样(分割成词)被切割成离散的块,音频通常是在规则间断采样,采样频率越高,就越接近原始音频源。过去的处理方法是抽取特征,现在是直接把全部数据扔进特征encoder去抽取音频表示,简化了预处理步骤。

音频分类

场景分类(办公司,沙滩,体育场)

事件检测(鲸啸,玻璃碎掉,撞击)

标记(包含多个声音,鸟叫,会议中说话者识别?)

音乐分类(重金属,hip-hop,乡村)

自动化语音识别

ASR将语音转文字,在语音任务中很常见,受人类交流方式影响。ASR常嵌入到智能技术产品,电话,汽车,我们可以用虚拟助手播放音乐,设置备忘录等

transformer架构能帮助低资源的语言。通过在大量语音数据上预训练,再在只有一个小时打标低资源数据上微调,可以获取较好的结果(与过去100倍训练数据相比)

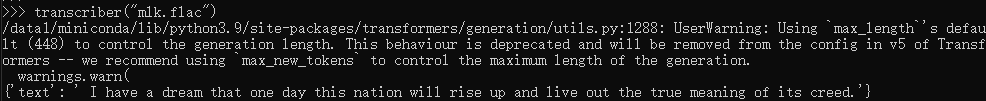

from transformers import pipeline

transcriber = pipeline(task="automatic-speech-recognition", model="openai/whisper-small")

transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

raise KeyError(key)

KeyError: 'whisper'

transformers 版本太低,需要更新版本到4.23.1

参考:https://discuss.huggingface.co/t/deploying-open-ais-whisper-on-sagemaker/24761/2

报错

raise ValueError("ffmpeg was not found but is required to load audio files from filename") from error

ValueError: ffmpeg was not found but is required to load audio files from filename

参考:https://discuss.huggingface.co/t/audio-classification-pipeline-valueerror-ffmpeg-was-not-found-but-is-required-to-load-audio-files-from-filename/16137/2

需要下载Download FFmepeg(FFmpeg是一套可以用来记录、转换数字音频、视频,并能将其转化为流的开源计算机程序。)

centos安装FFmpeg

sudo yum localinstall --nogpgcheck https://download1.rpmfusion.org/free/el/rpmfusion-free-release-7.noarch.rpm

yum install ffmpeg ffmpeg-devel

ffmpeg -version

Computer Vision

图像分类

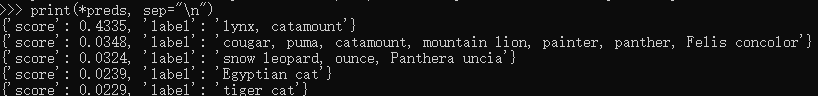

from transformers import pipeline

classifier = pipeline(task="image-classification")

preds = classifier(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

)

preds = [{"score": round(pred["score"], 4), "label": pred["label"]} for pred in preds]

print(*preds, sep="\n")

目标检测

from transformers import pipeline

detector = pipeline(task="object-detection")

preds = detector(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

)

preds = [{"score": round(pred["score"], 4), "label": pred["label"], "box": pred["box"]} for pred in preds]

preds

执行时报错,记得安装trim

![]()

https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-rsb-weights/resnet50_a1_0-14fe96d1.pth可能出现网络问题。可以通过其他渠道将模型下载到/root/.cache/torch/hub/checkpoints

像素级别分类 (“dog-1”, “dog-2”),有实例分割和全景分割

from transformers import pipeline

segmenter = pipeline(task="image-segmentation")

preds = segmenter(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

)

preds = [{"score": round(pred["score"], 4), "label": pred["label"]} for pred in preds]

print(*preds, sep="\n")

深度估算(报错KeyError: "Unknown task depth-estimation,,没有这个任务 待解决)

预测每一个像素距离照相机的距离,对于场景理解和重建很重远哦,如自动驾驶方面,生物或者建筑领域中2维图像转3D.

from transformers import pipeline

depth_estimator = pipeline(task="depth-estimation")

preds = depth_estimator(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"

)

preds

Natural language processing

文本分类

情感分析(政治、金融、市场的决策),领域分类(天气、运动、金融)

from transformers import pipeline

classifier = pipeline(task="sentiment-analysis")

preds = classifier("Hugging Face is fun to learn")

preds = [{"score": round(pred["score"], 4), "label": pred["label"]} for pred in preds]

preds

![]()

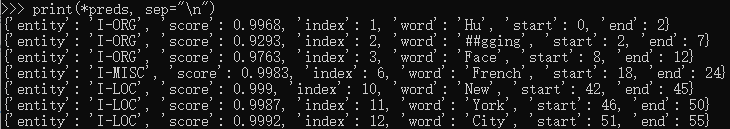

token分类(文本被分割成词或者subwords,被称作token)

- NER实体识别 (将实体打标签,组织,人,位置,日期),在医疗领域很广泛,给基因 蛋白质 药品名称打标签

- POS词性标注(动词,名词,形容词)翻译领域中识别同一个词不同场景下词性差异(bank 做名词和动词的差异)

from transformers import pipeline

classifier = pipeline(task="ner")

preds = classifier("Hugging Face is a French company based in New York City.")

preds = [

{

"entity": pred["entity"],

"score": round(pred["score"], 4),

"index": pred["index"],

"word": pred["word"],

"start": pred["start"],

"end": pred["end"],

}

for pred in preds

]

print(*preds, sep="\n")

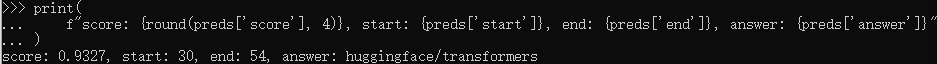

回答问题

有直接从文本中抽取答案,有从文本中生成答案

from transformers import pipeline

question_answerer = pipeline(task="question-answering")

preds = question_answerer(

question="What is the name of the repository?",

context="The name of the repository is huggingface/transformers",

)

print(

f"score: {round(preds['score'], 4)}, start: {preds['start']}, end: {preds['end']}, answer: {preds['answer']}"

)

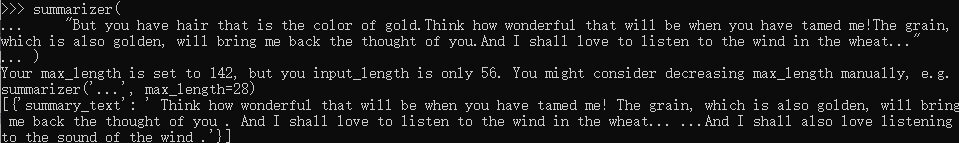

总结

跟回答问题一样,直接从原始文本抽取,或者生成总结(可能包含并不在输入文本的词语)

from transformers import pipeline

summarizer = pipeline(task="summarization")

summarizer(

"But you have hair that is the color of gold.Think how wonderful that will be when you have tamed me!The grain, which is also golden, will bring me back the thought of you.And I shall love to listen to the wind in the wheat..."

)

翻译

早前,翻译通常是一对一,现在增加了很多多语言翻译

from transformers import pipeline

text = "translate English to French: Hugging Face is a community-based open-source platform for machine learning."

translator = pipeline(task="translation", model="t5-small")

translator(text)

语言模型

预测文本中的词,NLP领域中应用很广,因为一个预训练模型可以有很多下游任务。最近,大型语言模型LLMs(只需要zero-shot学习)有受到很多关注,意味着可以解决并非它训练目的的模型。语言模型可以用于生成流利可信的文本,但可能文本真实性不太准确

有两种类型

预测下一个词(那个词之后的词会被遮盖)

from transformers import pipeline

prompt = "Hugging Face is a community-based open-source platform for machine learning."

generator = pipeline(task="text-generation")

generator(prompt) # doctest: +SKIP

- 预测需要填空的那个词 ,上下文已知(masked: the model’s objective is to predict a masked token in a sequence with full access to the tokens in the sequence)

text = "Hugging Face is a community-based open-source for machine learning."

fill_mask = pipeline(task="fill-mask")

preds = fill_mask(text, top_k=1)

preds = [

{

"score": round(pred["score"], 4),

"token": pred["token"],

"token_str": pred["token_str"],

"sequence": pred["sequence"],

}

for pred in preds

]

preds

transformer是怎么解决问题的

任务

!pip install transformers datasets

情感分析

from transformers import pipeline

classifier = pipeline("sentiment-analysis") #缓存一个默认模型

classifier("We are very happy to show you the Transformers library.")

# [{'label': 'POSITIVE', 'score': 0.9998}]

# 多个输入也可以使用列表

results = classifier(["We are very happy to show you the Transformers library.", "We hope you don't hate it."])

for result in results:

print(f"label: {result['label']}, with score: {round(result['score'], 4)}")

语音翻译

import torch

from transformers import pipeline

from datasets import load_dataset, Audio

speech_recognizer = pipeline("automatic-speech-recognition", model="facebook/wav2vec2-base-960h")

dataset = load_dataset("PolyAI/minds14", name="en-US", split="train")

# 确保sampling_rate匹配采样的模型,即也是16khz

# 模型详情https://huggingface.co/facebook/wav2vec2-base-960h

dataset = dataset.cast_column("audio", Audio(sampling_rate=speech_recognizer.feature_extractor.sampling_rate))

result = speech_recognizer(dataset[:4]["audio"])

print([d["text"] for d in result])

# ['I WOULD LIKE TO SET UP A JOINT ACCOUNT WITH MY PARTNER HOW DO I PROCEED WITH DOING THAT', "FONDERING HOW I'D SET UP A JOIN TO HELL T WITH MY WIFE AND WHERE THE AP MIGHT BE", "I I'D LIKE TOY SET UP A JOINT ACCOUNT WITH MY PARTNER I'M NOT SEEING THE OPTION TO DO IT ON THE APSO I CALLED IN TO GET SOME HELP CAN I JUST DO IT OVER THE PHONE WITH YOU AND GIVE YOU THE INFORMATION OR SHOULD I DO IT IN THE AP AN I'M MISSING SOMETHING UQUETTE HAD PREFERRED TO JUST DO IT OVER THE PHONE OF POSSIBLE THINGS", 'HOW DO I FURN A JOINA COUT']

注意:load_dataset下载数据集链接指向https://drive.google.com/uc?export=download&id=1-i7GQghI0bkXwQEhKN6IDlWjJbHIZS2s,需要翻

如果输入很大,使用生成器而不是列表

transformer入门

Pipeline

pipeline可以容纳Hub的任何模型,使用tags过滤合适的模型

from transformers import AutoTokenizer, AutoModelForSequenceClassification

model_name = "nlptown/bert-base-multilingual-uncased-sentiment"

model = AutoModelForSequenceClassification.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

classifier = pipeline("sentiment-analysis", model=model, tokenizer=tokenizer)

lassifier("Nous sommes très heureux de vous présenter la bibliothèque Transformers.")

# [{'label': '5 stars', 'score': 0.7273}]

AutoClass

AutoTokenizer

tokenizer负责将文本转数组作为输入输给模型。tokenizaton化过程有很多规则。包括切分词的程度,切分到什么层次。

最重要的事:需要实例化tokenizer的模型名字需要同预训练模型相同的tokenizer

from transformers import AutoTokenizer

model_name = "nlptown/bert-base-multilingual-uncased-sentiment"

tokenizer = AutoTokenizer.from_pretrained(model_name)

encoding = tokenizer("Mind your own business ")

print(encoding)

![]()

tokenizer返回一个字典包含:inpurt_id,attention_mask

(attention mask是二值化tensor向量,padding的对应位置是0,这样模型不用关注padding

输入为列表,补全和截断,返回同样大小的一个批次

pt_batch = tokenizer(

["We are very happy to show you the Transformers library.", "We hope you don't hate it."],

padding=True,

truncation=True,

max_length=512,

return_tensors="pt",

)

AutoModel

Transformers提供了一个简单统一的方式加载预训练实例,可以加载一个AutoModel,跟加载AutoTokenizer一样的方式。唯一不同就是选择对于AutoModel正确的任务.比如对于文本和序列分类任务,需要加载AutoModelForSequenceClassification

from torch import nn

from transformers import AutoModelForSequenceClassification

model_name = "nlptown/bert-base-multilingual-uncased-sentiment"

pt_model = AutoModelForSequenceClassification.from_pretrained(model_name)

# 预处理的批次输入

pt_outputs = pt_model(**pt_batch)

pt_predictions = nn.functional.softmax(pt_outputs.logits, dim=-1)

print(pt_predictions)

输出最后一层logits属性。应用softmax函数到logits上获取概率

保存模型

pt_save_directory = "./pt_save_pretrained"

tokenizer.save_pretrained(pt_save_directory)

pt_model.save_pretrained(pt_save_directory)

transformer模型特性之一是保存和加载模型,既可以用pytorch框架,也可以用TensorFlow模型

tf_save_directory=''

from transformers import AutoModel

from transformers import AutoTokenizer

from transformers import AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained(tf_save_directory)

pt_model = AutoModelForSequenceClassification.from_pretrained(tf_save_directory, from_tf=True)

Custom model builds 模型编译

通过修改模型的配置类,改变一个模型是如何编译,引入AutoConfig,加载想修改的预训练模型,使用AutoConfig.from_pretrained()方法,指定想修改的属性,如attention 头的数量

from transformers import AutoConfig

my_config = AutoConfig.from_pretrained("distilbert-base-uncased", n_heads=12)

from transformers import AutoModel

my_model = AutoModel.from_config(my_config)

训练器-一个PyTorch优化后的训练环节

所有模型都是一个标准的 torch.nn.Module 。

# 1.预训练模型

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased")

# 2.训练参数包括模型超参数,学习率,批次大小,训练轮数,如果不指定使用默认参数

from transformers import TrainingArguments

training_args = TrainingArguments(

output_dir="path/to/save/folder/",

learning_rate=2e-5,

per_device_train_batch_size=8,

per_device_eval_batch_size=8,

num_train_epochs=2,

)

# 3.预处理类,有tokenizer,image processor,feature extractor,processor

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

# 4.加载数据集

from datasets import load_dataset

dataset = load_dataset("rotten_tomatoes") # doctest: +IGNORE_RESULT

# 5.tokenizer数据集

def tokenize_dataset(dataset):

return tokenizer(dataset["text"])

dataset = dataset.map(tokenize_dataset, batched=True)

# 6.对数据集分批次

from transformers import DataCollatorWithPadding

data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

最后,聚集Trainer所有类

from transformers import Trainer

trainer = Trainer(

model=model,

args=training_args,

train_dataset=dataset["train"],

eval_dataset=dataset["test"],

tokenizer=tokenizer,

data_collator=data_collator,

) # doctest: +SKIP

trainer.train()

可以自定义训练过程的行为,通过继承Trainer内部方法,可以自定义特征:损失函数,优化器,定时器。也可以用Callback和其他库继承来监察训练过程,报告过程或者过早停止训练

其他

更改huggingface 缓存目录

参考:https://huggingface.co/docs/transformers/installation

预训练默认下载到 ~/.cache/huggingface/hub

1.查看空间

du -h /root/.cache/

2.vim /etc/profile

export HF_HOME='' # HUGGINGFACE_HUB_CACHE/TRANSFORMERS_CACHE

3.source /etc/profile

或者加载模型,指定路径

安装

源码安装

pip install git+https://github.com/huggingface/transformers

会安装最新版本,但不是稳定版本

可编辑安装

git clone https://github.com/huggingface/transformers.git

cd transformers

pip install -e .

链接文件夹到python库

离线使用

设置环境变量TRANSFORMERS_OFFLINE=1 HF_DATASETS_OFFLINE=1

HF_DATASETS_OFFLINE=1 TRANSFORMERS_OFFLINE=1 \

python examples/pytorch/translation/run_translation.py --model_name_or_path t5-small --dataset_name wmt16 --dataset_config ro-en ...

拉取模型和tokenizers 离线使用

PreTrainedModel.from_pretrained() ,PreTrainedModel.save_pretrained()

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("bigscience/T0_3B")

model = AutoModelForSeq2SeqLM.from_pretrained("bigscience/T0_3B")

tokenizer.save_pretrained("./your/path/bigscience_t0")

model.save_pretrained("./your/path/bigscience_t0")

离线

tokenizer = AutoTokenizer.from_pretrained("./your/path/bigscience_t0")

model = AutoModel.from_pretrained("./your/path/bigscience_t0")

编程化下载库

python -m pip install huggingface_hub

from huggingface_hub import hf_hub_download

hf_hub_download(repo_id="bigscience/T0_3B", filename="config.json", cache_dir="./your/path/bigscience_t0")

from transformers import AutoConfig

config = AutoConfig.from_pretrained("./your/path/bigscience_t0/config.json")

注意下载到的位置是根目录下