HDFS Capacity Expansion

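This article has two parts. The first covers how to format a newly installed disk and mount it on the server. Once the disk is mounted, the second part walks through expanding HDFS onto it.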

1. Formatting and Adding a New Disk

(1) Partitioning the disk

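Note: this step partitions the disk being added. If you are simply adding a whole disk as a data disk, skip this step and go straight to step (2), formatting and mounting.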

[root@node1 kafka-broker]# fdisk -l

Disk /dev/sda: 1000.2 GB, 1000204886016 bytes, 1953525168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk label type: dos

Disk identifier: 0x0000e699

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 1026047 512000 83 Linux

/dev/sda2 1026048 1953523711 976248832 8e Linux LVM

Disk /dev/mapper/centos-root: 248.1 GB, 248055332864 bytes, 484483072 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/mapper/centos-var: 107.4 GB, 107374182400 bytes, 209715200 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/mapper/centos-opt: 644.2 GB, 644240900096 bytes, 1258283008 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes, 1953525168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

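Listing the disks on the system shows that the new disk /dev/sdb is present but has no partition table, and has not been formatted or mounted.

Now partition it with fdisk: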

[root@node1 kafka-broker]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xf3238b2d.

The device presents a logical sector size that is smaller than

the physical sector size. Aligning to a physical sector (or optimal

I/O) size boundary is recommended, or performance may be impacted.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-1953525167, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-1953525167, default 1953525167):

Using default value 1953525167

Partition 1 of type Linux and of size 931.5 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

Now list the disks again:

[root@node1 kafka-broker]# fdisk -l

Disk /dev/sda: 1000.2 GB, 1000204886016 bytes, 1953525168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk label type: dos

Disk identifier: 0x0000e699

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 1026047 512000 83 Linux

/dev/sda2 1026048 1953523711 976248832 8e Linux LVM

Disk /dev/mapper/centos-root: 248.1 GB, 248055332864 bytes, 484483072 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/mapper/centos-var: 107.4 GB, 107374182400 bytes, 209715200 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/mapper/centos-opt: 644.2 GB, 644240900096 bytes, 1258283008 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes, 1953525168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk label type: dos

Disk identifier: 0xf3238b2d

Device Boot Start End Blocks Id System

/dev/sdb1 2048 1953525167 976761560 83 Linux

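(2) Formatting and mounting the disk

Next, format the partition:

[root@node1 kafka-broker]# mkfs.xfs /dev/sdb1

Note: if you skipped the partitioning in step (1), use /dev/sdb instead of /dev/sdb1, so the full command becomes:

[root@node1 kafka-broker]# mkfs.xfs /dev/sdb

Apply the same substitution wherever /dev/sdb1 appears in the rest of this part. The output for /dev/sdb1 looks like this: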

meta-data=/dev/sdb1 isize=256 agcount=4, agsize=61047598 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=244190390, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=119233, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Create a mount point and mount the partition:

[root@node1 kafka-broker]# mkdir /mnt/str_sdb1

[root@node1 kafka-broker]# mount -t xfs /dev/sdb1 /mnt/str_sdb1

[root@node1 kafka-broker]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 231G 3.2G 228G 2% /

devtmpfs devtmpfs 32G 0 32G 0% /dev

tmpfs tmpfs 32G 0 32G 0% /dev/shm

tmpfs tmpfs 32G 369M 32G 2% /run

tmpfs tmpfs 32G 0 32G 0% /sys/fs/cgroup

/dev/sda1 xfs 494M 130M 364M 27% /boot

/dev/mapper/centos-var xfs 100G 88G 13G 88% /var

/dev/mapper/centos-opt xfs 600G 353G 248G 59% /opt

tmpfs tmpfs 6.3G 0 6.3G 0% /run/user/0

/dev/sdb1 xfs 932G 33M 932G 1% /mnt/str_sdb1
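The 1000.2 GB reported by fdisk and the 932G reported by df describe the same disk in different units: the GB figure is decimal (10^9 bytes), while df -h counts in powers of 1024. A small sketch of the arithmetic, using the sector numbers from the fdisk output in this article; the function names are illustrative only:

```shell
#!/bin/sh
# Convert a sector count to bytes (sector size defaults to 512, as fdisk reports).
sectors_to_bytes() {
  echo $(( $1 * ${2:-512} ))
}

# Convert bytes to whole GiB (2^30 bytes), rounding down.
bytes_to_gib() {
  echo $(( $1 / 1073741824 ))
}

# /dev/sdb has 1953525168 sectors of 512 bytes.
sectors_to_bytes 1953525168      # prints 1000204886016

# /dev/sdb1 spans sectors 2048..1953525167.
part_sectors=$(( 1953525167 - 2048 + 1 ))
bytes_to_gib "$(sectors_to_bytes "$part_sectors")"   # prints 931
```

The integer division rounds down; fdisk shows the same partition as 931.5 GiB and df rounds it up to 932G.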

Configure automatic mounting at boot by adding the new partition as the last line of /etc/fstab:

[root@node1 str_sdb1]# vi /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Jul 5 16:24:18 2016

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=010ecc3a-17c7-4b26-8005-f141c7db61d9 /boot xfs defaults 0 0

/dev/mapper/centos-opt /opt xfs defaults 0 0

/dev/mapper/centos-var /var xfs defaults 0 0

/dev/sdb1 /mnt/str_sdb1 xfs defaults 0 0
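Device names such as /dev/sdb1 can change when disks are added or reordered, so referencing the filesystem by UUID is a common alternative; the /boot entry above already uses that form. A hedged sketch of building an equivalent line for the new partition follows. fstab_line is a hypothetical helper, and the UUID would come from blkid on your own machine:

```shell
# Print an fstab line that mounts a filesystem by UUID.
# Arguments: UUID, mount point, filesystem type (defaults to xfs).
fstab_line() {
  printf 'UUID=%s %s %s defaults 0 0\n' "$1" "$2" "${3:-xfs}"
}

# Typical use, run as root (commented out so the sketch has no side effects):
#   uuid=$(blkid -s UUID -o value /dev/sdb1)
#   fstab_line "$uuid" /mnt/str_sdb1 >> /etc/fstab
#   mount -a    # mounts everything listed in fstab; an error here points at a bad entry
```

Running mount -a before rebooting is a cheap way to catch a typo in the new entry while the machine is still reachable.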

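2. HDFS Expansion and Related Issues

First, the steps to watch when reinstalling the Hadoop services with Ambari:

Stop the Ambari service with ambari-server stop.

Then clean up the environment with the ambari_clear.sh script (the script may not be thorough, so check the relevant directories yourself to confirm they were actually cleaned).

After that, install by following the earlier Ambari installation document.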

2.1 Updating the NameNode and DataNode directories

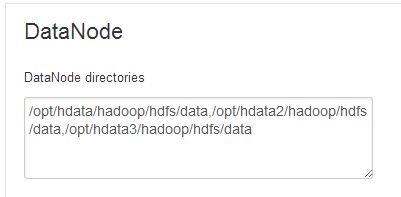

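As shown in the figure, we moved the NameNode and DataNode directories from the default location under /var/hadoop to the newly mounted disk. Note the following:

First, manually create the /mnt/str_sdb1/hadoop/hdfs/namenode and /mnt/str_sdb1/hadoop/hdfs/datanode directories. A new namenode directory contains data only on the NameNode host, and only after hdfs namenode -format has been run there. If several namenode directories are configured on the NameNode host, each one holds an identical copy of the data, with the extra copies serving as backups. On hosts that are not the NameNode, the namenode directory stays empty.

On the NameNode host, also change the permissions: chmod 777 -R /mnt/str_sdb1.

If you configure multiple directories, that is, add new directories alongside the existing ones, separate them with a comma (",").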
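To illustrate the comma-separated form, here is a small sketch that joins several directories into a single value of the kind entered in the DataNode directories field. join_dirs is a hypothetical helper, and the three paths are examples drawn from the directories mentioned in this article rather than a required layout:

```shell
# Join all arguments with commas and no spaces.
# The function body runs in a subshell, so changing IFS does not leak out.
join_dirs() (
  IFS=,
  printf '%s\n' "$*"
)

join_dirs /opt/hdata/hadoop/hdfs/data \
          /opt/hdata2/hadoop/hdfs/data \
          /mnt/str_sdb1/hadoop/hdfs/datanode
# prints /opt/hdata/hadoop/hdfs/data,/opt/hdata2/hadoop/hdfs/data,/mnt/str_sdb1/hadoop/hdfs/datanode
```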

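On a first deployment, after changing the configured directories, run bin/hdfs namenode -format from the Hadoop directory to format HDFS. This creates the corresponding directories and files under the namenode directory. (Note: after formatting and restarting HDFS, the existing data is not deleted from disk.) Do not format casually: formatting writes a new clusterID into the NameNode's VERSION file, which then no longer matches the clusterID recorded on the DataNodes and causes errors. If you are only expanding capacity and need to keep the existing data, do not format at all; just add the new directories and restart HDFS.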
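One way to check whether a format has left the NameNode and a DataNode with different cluster IDs is to compare the clusterID lines of their VERSION files. The sketch below assumes the usual key=value layout of VERSION; cluster_id and same_cluster are hypothetical helper names, and the paths in the usage comment are examples based on the directories used in this article:

```shell
# Print the clusterID recorded in a VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Succeed only if both VERSION files carry the same, non-empty clusterID.
same_cluster() {
  a=$(cluster_id "$1")
  b=$(cluster_id "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example (paths are illustrative):
#   same_cluster /opt/hdata/hadoop/hdfs/namenode/current/VERSION \
#                /opt/hdata/hadoop/hdfs/data/current/VERSION \
#     || echo "clusterID mismatch between NameNode and DataNode"
```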

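On the NameNode host, remember to change the directory permissions: chmod 777 -R /opt/hdata*.

Finally, restart all related services from the Ambari web UI. (If some DataNode directories are not initialized after the restart, change the owner of the DataNode directory from root to hdfs on every node with chown -R hdfs /opt/hdata2/hadoop/hdfs/data/. Section 2.2 covers this in detail.)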

Tips (for the NameNode host):

Before running hdfs namenode -format:

[root@node1 ~]# ll -R /opt/hdata/hadoop/hdfs/

/opt/hdata/hadoop/hdfs/:

total 0

drwxr-x--- 2 hdfs hadoop 6 Aug 27 21:26 data

drwxr-xr-x 2 hdfs hadoop 6 Aug 27 21:37 namenode

/opt/hdata/hadoop/hdfs/data:

total 0

/opt/hdata/hadoop/hdfs/namenode:

total 0

After running hdfs namenode -format:

[root@node1 ~]# ll -R /opt/hdata/hadoop/hdfs/

/opt/hdata/hadoop/hdfs/:

total 0

drwxr-x--- 2 hdfs hadoop 6 Aug 27 21:26 data

drwxr-xr-x 3 hdfs hadoop 20 Aug 27 21:37 namenode

/opt/hdata/hadoop/hdfs/data:

total 0

/opt/hdata/hadoop/hdfs/namenode:

total 0

drwxr-xr-x 2 root hadoop 108 Aug 27 21:37 current

/opt/hdata/hadoop/hdfs/namenode/current:

total 16

-rw-r--r-- 1 root hadoop 345 Aug 27 21:37 fsimage_0000000000000000000

-rw-r--r-- 1 root hadoop 62 Aug 27 21:37 fsimage_0000000000000000000.md5

-rw-r--r-- 1 root hadoop 2 Aug 27 21:37 seen_txid

-rw-r--r-- 1 root hadoop 202 Aug 27 21:37 VERSION

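2.2 DataNode directory not taking effect on a node

As shown in the figure, three DataNode directories were configured. In practice, the /opt/hdata2/hadoop/hdfs/data directory on one machine was not initialized correctly and held no data, even though every directory setting was correct. A closer look showed: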

[root@node4 opt]# ls -l hdata2/hadoop/hdfs/

total 0

drwxrwxrwx 2 root hadoop 6 Aug 26 15:37 data

drwxrwxrwx 3 root hadoop 20 Aug 26 18:46 namenode

The owner of the data directory is wrong. The correct state looks like this:

[root@node4 opt]# ls /opt/hdata2/hadoop/hdfs/ -l

total 0

drwxr-x--- 3 hdfs hadoop 38 Aug 26 20:02 data

drwxrwxrwx 3 root hadoop 20 Aug 26 18:46 namenode

Change the owner of the directory from root to hdfs:

chown -R hdfs /opt/hdata2/hadoop/hdfs/data/

After restarting the HDFS service from the Ambari UI:

[root@node4 opt]# ls /opt/hdata2/hadoop/hdfs/data/ -l

total 4

drwxr-xr-x 3 hdfs hadoop 66 Aug 26 20:02 current

-rw-r--r-- 1 hdfs hadoop 16 Aug 26 20:02 in_use.lock

The DataNode directory is now initialized correctly.
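Because the same ownership problem can affect any DataNode directory, a quick check before restarting can save a round trip through Ambari. This is a minimal sketch assuming GNU stat (as shipped on CentOS); owned_by is a hypothetical helper:

```shell
# Succeed if the file or directory $1 is owned by user $2.
owned_by() {
  [ "$(stat -c '%U' "$1")" = "$2" ]
}

# Example: report every data directory not owned by hdfs
# (commented out because the paths exist only on the cluster nodes).
# for d in /opt/hdata*/hadoop/hdfs/data; do
#   owned_by "$d" hdfs || echo "wrong owner: $d"
# done
```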

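2.3 HBase rootdir usage notes

All HBase data is stored in HDFS under /apps/hbase (the directory configured as hbase.rootdir in hbase-site.xml). If this directory is deleted, restarting HBase creates a new one, but the original data is lost.

If the data is unimportant and HDFS needs to be rebuilt, delete everything under the NameNode and DataNode directories (dfs.namenode.name.dir and dfs.datanode.data.dir, here /opt/hdata*/hadoop/hdfs/namenode and /opt/hdata*/hadoop/hdfs/data) and run hdfs namenode -format to create a brand-new HDFS.

Normally, after an HBase table is disabled and then dropped, all of the data it stored in HDFS is removed.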
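For reference, the disable-then-drop sequence can be sketched as below. drop_table_script is a hypothetical helper that only prints the two HBase shell statements; piping them into hbase shell is left as a commented example, and my_table is a placeholder name:

```shell
# Print the HBase shell statements that disable and then drop a table.
drop_table_script() {
  printf "disable '%s'\ndrop '%s'\n" "$1" "$1"
}

drop_table_script my_table

# To actually run it (destructive, so commented out):
#   drop_table_script my_table | hbase shell
```

Printing the statements first makes it easy to review exactly what will be dropped before anything is sent to HBase.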