Breaking Anti-Scraping Measures: Baidu Translate

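I recently spent some time studying Baidu Translate's anti-scraping measures and found them quite interesting, so here is what I learned.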

Analysis

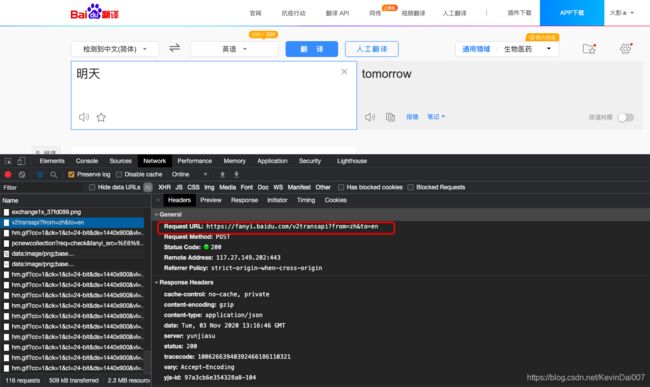

First open the browser's developer tools (Alt+Command+I on macOS), go to https://fanyi.baidu.com/ and translate any word. In the Network tab you can easily find the translation endpoint.

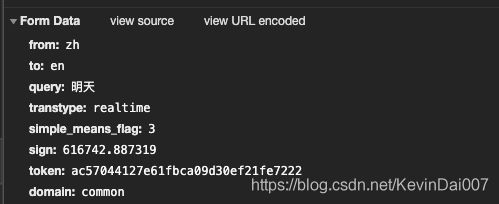

Look at the parameters of this POST request:

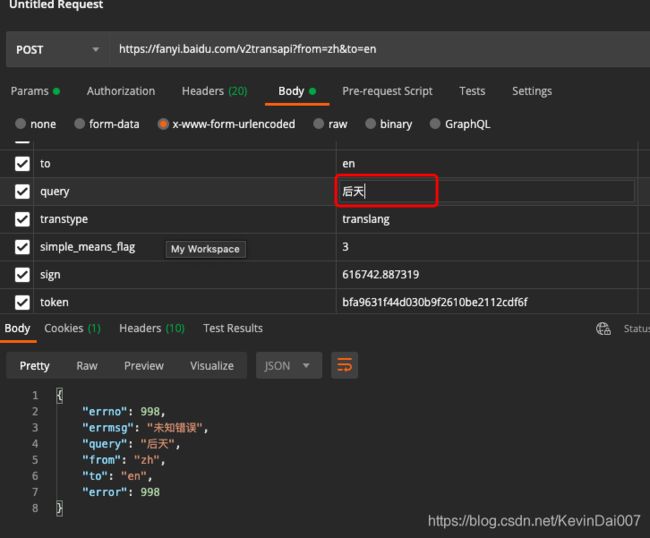
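Judging from the captured request (and the field list in the request code further down), the body is an ordinary application/x-www-form-urlencoded form with the fields from, to, query, transtype, simple_means_flag, sign, domain and token. A JDK-only sketch of how such a body is encoded; TransApiForm is a hypothetical name, and the fixed values are those of the Chinese-to-English query used throughout this article. URLEncoder.encode(String, Charset) needs Java 10 or later.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper: encodes the v2transapi form body for a zh -> en query. */
final class TransApiForm {
    static String body(String query, String sign, String token) {
        Map<String, String> fields = new LinkedHashMap<>(); // keeps insertion order
        fields.put("from", "zh");
        fields.put("to", "en");
        fields.put("query", query);
        fields.put("transtype", "translang");
        fields.put("simple_means_flag", "3");
        fields.put("sign", sign);
        fields.put("domain", "common");
        fields.put("token", token);
        // Chinese text must be percent-encoded as UTF-8, regardless of the platform charset
        return fields.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                        + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }
}
```

Only query, sign and token change from word to word; everything else is fixed for a given language pair.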

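Replaying this request in Postman succeeds (note that you must also copy the page's cookie, otherwise it fails); however, as soon as you change the text to translate, the request fails.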

So some parameters must be computed. Of them, sign and token look the most likely to be dynamic, so let's find out how these two are generated.

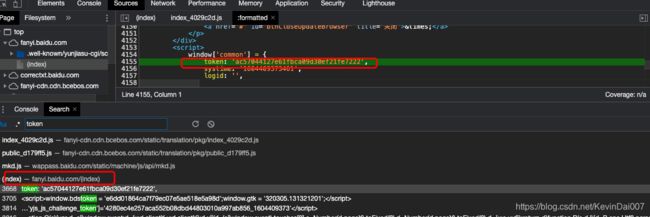

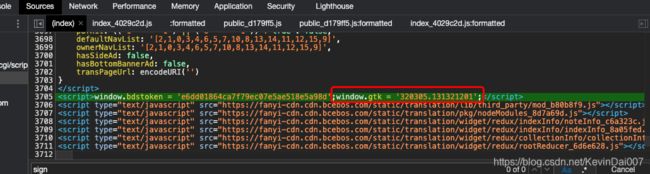

A global search for token finds its value in the page https://fanyi.baidu.com/, and the value is exactly the one sent in the request.

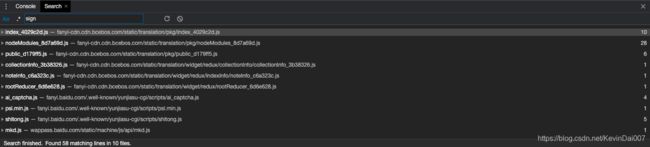
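The token can then be pulled out of the page HTML with a regular expression. A JDK-only sketch; PageToken is a hypothetical name, and it assumes the page embeds the value as token: '...', the same delimiters the initToken method later in this article relies on.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: extracts the token embedded in the fanyi.baidu.com page. */
final class PageToken {
    // Assumed page format:  token: '0123abcd...',
    private static final Pattern TOKEN = Pattern.compile("token:\\s*'([^']*)'");

    static Optional<String> extract(String html) {
        Matcher m = TOKEN.matcher(html);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }
}
```

Returning Optional makes the "page had no token" case explicit instead of silently yielding null.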

Next, look for sign, again with a global search. It seems to be used in quite a few places.

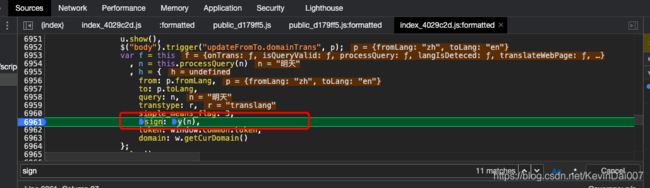

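Going through them one by one, most turn out to be assign; only a few places really use sign. Set breakpoints on all of them and click the translate button on the page. The breakpoint shows where sign is assigned: n is the word being translated, and sign is the value computed by the function y.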

Hover over y and step into it; the following three functions turn out to be the key to generating sign:

```javascript
// The three functions as they appear in Baidu Translate's bundle (minified names kept)
function a(r) {
    if (Array.isArray(r)) {
        for (var o = 0, t = Array(r.length); o < r.length; o++)
            t[o] = r[o];
        return t
    }
    return Array.from(r)
}

function n(r, o) {
    for (var t = 0; t < o.length - 2; t += 3) {
        var a = o.charAt(t + 2);
        a = a >= "a" ? a.charCodeAt(0) - 87 : Number(a),
        a = "+" === o.charAt(t + 1) ? r >>> a : r << a,
        r = "+" === o.charAt(t) ? r + a & 4294967295 : r ^ a
    }
    return r
}

function e(r) {
    var o = r.match(/[\uD800-\uDBFF][\uDC00-\uDFFF]/g);
    if (null === o) {
        var t = r.length;
        t > 30 && (r = "" + r.substr(0, 10) + r.substr(Math.floor(t / 2) - 5, 10) + r.substr(-10, 10))
    } else {
        for (var e = r.split(/[\uD800-\uDBFF][\uDC00-\uDFFF]/), C = 0, h = e.length, f = []; h > C; C++)
            "" !== e[C] && f.push.apply(f, a(e[C].split(""))),
            C !== h - 1 && f.push(o[C]);
        var g = f.length;
        g > 30 && (r = f.slice(0, 10).join("") + f.slice(Math.floor(g / 2) - 5, Math.floor(g / 2) + 5).join("") + f.slice(-10).join(""))
    }
    var u = void 0
      , l = "" + String.fromCharCode(103) + String.fromCharCode(116) + String.fromCharCode(107);
    u = null !== i ? i : (i = window[l] || "") || "";
    for (var d = u.split("."), m = Number(d[0]) || 0, s = Number(d[1]) || 0, S = [], c = 0, v = 0; v < r.length; v++) {
        var A = r.charCodeAt(v);
        128 > A ? S[c++] = A : (2048 > A ? S[c++] = A >> 6 | 192 : (55296 === (64512 & A) && v + 1 < r.length && 56320 === (64512 & r.charCodeAt(v + 1)) ? (A = 65536 + ((1023 & A) << 10) + (1023 & r.charCodeAt(++v)),
        S[c++] = A >> 18 | 240,
        S[c++] = A >> 12 & 63 | 128) : S[c++] = A >> 12 | 224,
        S[c++] = A >> 6 & 63 | 128),
        S[c++] = 63 & A | 128)
    }
    for (var p = m, F = "" + String.fromCharCode(43) + String.fromCharCode(45) + String.fromCharCode(97) + ("" + String.fromCharCode(94) + String.fromCharCode(43) + String.fromCharCode(54)), D = "" + String.fromCharCode(43) + String.fromCharCode(45) + String.fromCharCode(51) + ("" + String.fromCharCode(94) + String.fromCharCode(43) + String.fromCharCode(98)) + ("" + String.fromCharCode(43) + String.fromCharCode(45) + String.fromCharCode(102)), b = 0; b < S.length; b++)
        p += S[b],
        p = n(p, F);
    return p = n(p, D),
    p ^= s,
    0 > p && (p = (2147483647 & p) + 2147483648),
    p %= 1e6,
    p.toString() + "." + (p ^ m)
}

var i = null; // cache for window.gtk, filled lazily by e()
// t.exports = e  (module export inside the bundle; t is undefined once the code is copied out, so keep it commented)
```
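If you would rather not run JavaScript at all (the Nashorn engine behind ScriptEngineManager's "JavaScript" was removed from the JDK in Java 15), the functions can be ported to Java. The sketch below is a hypothetical port (BaiduSign, sign, utf8 and parseOr0 are our own names): where JS applies ToInt32 it casts to int, and where it uses >>> it masks to an unsigned 32-bit value; the helper a() is only an array copy and is not needed. It mirrors the structure of the code above but has not been checked against live requests, so compare its output with a sign captured in the Network tab before relying on it. gtk is passed in explicitly, for example "320305.131321201".

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical Java port of n() and e() above. */
final class BaiduSign {
    private static final String F = "+-a^+6";    // decoded from the String.fromCharCode(...) calls
    private static final String D = "+-3^+b+-f";

    // n(r, o): each 3-char group is (add or xor, shift direction, shift amount)
    private static long n(long r, String o) {
        for (int t = 0; t < o.length() - 2; t += 3) {
            char c = o.charAt(t + 2);
            int amount = c >= 'a' ? c - 87 : c - '0';          // 'a' -> 10, 'f' -> 15
            long shifted = o.charAt(t + 1) == '+'
                    ? (r & 0xFFFFFFFFL) >>> amount               // JS r >>> a (unsigned)
                    : (long) ((int) r << amount);                // JS r << a (int32)
            r = o.charAt(t) == '+'
                    ? (long) (int) (r + shifted)                 // (r + a) & 4294967295
                    : (long) ((int) r ^ (int) shifted);          // r ^ a
        }
        return r;
    }

    // Number(x) || 0: missing or non-numeric parts count as 0
    private static long parseOr0(String[] parts, int index) {
        if (index >= parts.length) {
            return 0;
        }
        try {
            return Long.parseLong(parts[index].trim());
        } catch (NumberFormatException e) {
            return 0;
        }
    }

    // Manual UTF-8 encoding mirroring the JS loop (a lone surrogate becomes 3 bytes)
    private static List<Integer> utf8(String r) {
        List<Integer> out = new ArrayList<>();
        for (int v = 0; v < r.length(); v++) {
            int a = r.charAt(v);
            if (a < 128) {
                out.add(a);
                continue;
            }
            if (a < 2048) {
                out.add(a >> 6 | 192);
            } else {
                if ((a & 0xFC00) == 0xD800 && v + 1 < r.length() && (r.charAt(v + 1) & 0xFC00) == 0xDC00) {
                    a = 0x10000 + ((a & 1023) << 10) + (r.charAt(++v) & 1023);
                    out.add(a >> 18 | 240);
                    out.add(a >> 12 & 63 | 128);
                } else {
                    out.add(a >> 12 | 224);
                }
                out.add(a >> 6 & 63 | 128);
            }
            out.add(a & 63 | 128);
        }
        return out;
    }

    static String sign(String text, String gtk) {
        int[] cps = text.codePoints().toArray();
        if (cps.length > 30) { // keep the first 10, middle 10 and last 10 code points
            int mid = cps.length / 2;
            text = new String(cps, 0, 10) + new String(cps, mid - 5, 10) + new String(cps, cps.length - 10, 10);
        }
        String[] d = gtk.split("\\.");
        long m = parseOr0(d, 0);
        long s = parseOr0(d, 1);
        long p = m;
        for (int b : utf8(text)) {
            p += b;
            p = n(p, F);
        }
        p = n(p, D);
        p = (int) p ^ (int) s;                           // JS ^ operates on int32
        if (p < 0) {
            p = (p & 0x7FFFFFFFL) + 0x80000000L;        // reinterpret as unsigned 32-bit
        }
        p %= 1_000_000;
        return p + "." + ((int) p ^ (int) m);
    }
}
```

Usage: BaiduSign.sign("明天", "320305.131321201") returns a string of the form "x.y", where y is x XOR the first half of gtk.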

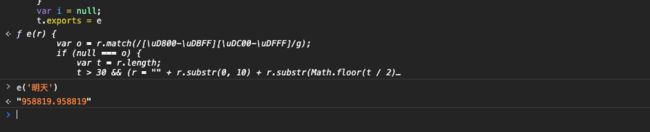

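Now open any other web page, paste these three functions into its console and run e('明天') ("tomorrow"). This returns a sign value that looks correctly formatted, but replaying the request in Postman shows that it is wrong.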

So something in these three functions must be off. A close look reveals one odd line:

```javascript
u = null !== i ? i : (i = window[l] || "") || "";
```

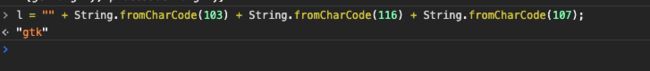

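This line reads a global variable, window[l], which the arbitrary page we used certainly does not have; that is probably why our result is wrong. In the function we can find the definition of l: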

```javascript
l = "" + String.fromCharCode(103) + String.fromCharCode(116) + String.fromCharCode(107);
```
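The expression is nothing more than character codes spelled out one by one; the same trick hides the F and D strings that drive n() inside e(). A few lines of Java decode them (CharCodes.fromCharCodes is a hypothetical name), mimicking "" + String.fromCharCode(c1) + String.fromCharCode(c2) + ...

```java
/** Hypothetical helper: the Java equivalent of concatenating String.fromCharCode(...) calls. */
final class CharCodes {
    static String fromCharCodes(int... codes) {
        StringBuilder sb = new StringBuilder();
        for (int code : codes) {
            sb.append((char) code); // every code used here is a single UTF-16 unit
        }
        return sb.toString();
    }
}
```

Applied to the codes in e(), this yields "gtk" for l, "+-a^+6" for F and "+-3^+b+-f" for D.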

So window[l] is window['gtk']. A global search for 'gtk' then turns up its value in the https://fanyi.baidu.com/ page.

Now replace i in the function with "320305.131321201" and run e('明天') again.


The result now matches the sign parameter shown in the Network tab. Further analysis shows that gtk is in fact a constant, so we can simply hard-code it.

So can we now happily use the Baidu Translate endpoint? No, no, no: Baidu has one more small trick up its sleeve.

1. Remember that, as mentioned earlier, requests to https://fanyi.baidu.com/v2transapi?from=zh&to=en must carry the cookie? How should we handle that?

2. Is the token value really reliable?

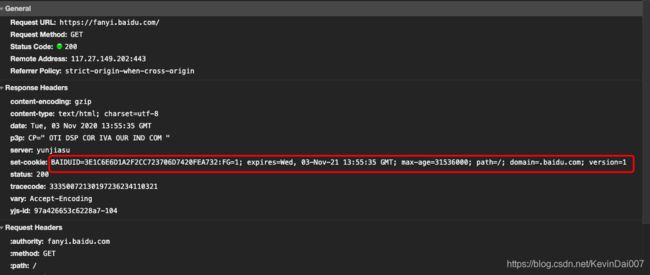

The first question seems easy: just request the home page https://fanyi.baidu.com/ once and take the cookie from the response.

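The second one is more interesting. On closer inspection, when we request the Baidu Translate page for the first time (that is, with an empty cookie), the token returned in the page is invalid: requests that use it fail. You must first request https://fanyi.baidu.com/ to obtain the Set-Cookie header, then request https://fanyi.baidu.com/ again with that cookie; only the token returned by this second request works. It is, admittedly, a trick that can easily mislead you.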
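The handshake can be expressed independently of any HTTP library. In this sketch one GET of the home page is abstracted as a function from the Cookie header value to a page (Page, Session and TokenHandshake.open are hypothetical names), so the logic can be exercised with a fake; in real code that function would wrap the HttpClient calls. The token pattern assumes the token: '...' format used by the article's own code.

```java
import java.util.Optional;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TokenHandshake {
    /** One GET of the home page: the HTML plus the raw Set-Cookie value (null if absent). */
    static final class Page {
        final String html;
        final String setCookie;
        Page(String html, String setCookie) {
            this.html = html;
            this.setCookie = setCookie;
        }
    }

    /** The cookie to send with v2transapi and the token that belongs to it. */
    static final class Session {
        final String cookie;
        final String token;
        Session(String cookie, String token) {
            this.cookie = cookie;
            this.token = token;
        }
    }

    private static final Pattern TOKEN = Pattern.compile("token:\\s*'([^']*)'");

    /** "BAIDUID=...; expires=...; path=/" -> "BAIDUID=..." (attributes are not sent back). */
    static Optional<String> baiduidCookie(String setCookie) {
        if (setCookie == null) {
            return Optional.empty();
        }
        String pair = setCookie.split(";", 2)[0].trim();
        return pair.startsWith("BAIDUID=") ? Optional.of(pair) : Optional.empty();
    }

    /** fetch maps a Cookie header value ("" = none) to a page; the first page's token is ignored. */
    static Session open(Function<String, Page> fetch) {
        Page first = fetch.apply("");
        String cookie = baiduidCookie(first.setCookie)
                .orElseThrow(() -> new IllegalStateException("first response set no BAIDUID"));
        Page second = fetch.apply(cookie);
        Matcher m = TOKEN.matcher(second.html);
        if (!m.find()) {
            throw new IllegalStateException("no token in second page");
        }
        return new Session(cookie, m.group(1));
    }
}
```

The important design point is that the token is read only from the second response, the one requested with the cookie.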

Putting it together

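1. Request https://fanyi.baidu.com/ and take the value from Set-Cookie.

2. Request https://fanyi.baidu.com/ again with that cookie and parse the token and gtk from the page (gtk is a constant and can be hard-coded).

3. Pass gtk into the three functions above to compute the sign of the text to translate.

4. Build the request from the token, sign and cookie.

5. Parse the response to get the translation.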

Coding

The first two steps are almost identical, so one method can handle both:

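```java
// Apache HttpClient 4.x and commons-lang3; HttpClientManager and HttpResponseUtil are the author's own helpers (not shown)
import org.apache.commons.lang3.StringUtils;
import org.apache.http.Header;
import org.apache.http.HttpResponse;
import org.apache.http.HttpStatus;
import org.apache.http.client.methods.HttpGet;

private void initToken() {
    try {
        // Request the home page twice: the first response only sets the BAIDUID cookie,
        // the second one (sent with that cookie) contains a usable token
        for (int i = 0; i < 2; i++) {
            HttpGet get = new HttpGet("https://fanyi.baidu.com/");
            get.setHeader("user-agent", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36");
            // Assumption: this.baiduid starts out as the template "BAIDUID=%s" (field declaration not shown)
            get.setHeader("cookie", this.baiduid);
            HttpResponse httpResponse = HttpClientManager.get(get);
            if (httpResponse.getStatusLine().getStatusCode() == HttpStatus.SC_OK) {
                String s = HttpResponseUtil.gainFromResponse(httpResponse);
                // The page embeds the token as  token: '...',
                this.token = StringUtils.substringBetween(s, "token: '", "',");
                Header firstHeader = httpResponse.getFirstHeader("set-cookie");
                if (firstHeader != null) {
                    String baiduid = StringUtils.substringBetween(firstHeader.getValue(), "BAIDUID=", ";");
                    // Fills the %s placeholder of the template with the BAIDUID value
                    this.baiduid = String.format(this.baiduid, baiduid);
                }
            }
        }
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```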

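Compute the sign for each target word. Here script is the source of the three functions above, with i replaced by the gtk value as described earlier.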

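```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import javax.script.Invocable;
import javax.script.ScriptEngine;
import javax.script.ScriptEngineManager;
import org.apache.commons.lang3.StringUtils;

private Map<String, String> executeJS(String script, List<String> words) {
    Map<String, String> result = new HashMap<>();
    try {
        // "JavaScript" resolves to Nashorn on JDK 8-14; Nashorn was removed in JDK 15, where
        // getEngineByName returns null unless a JavaScript engine is added to the classpath
        ScriptEngine engine = new ScriptEngineManager().getEngineByName("JavaScript");
        if (engine == null) {
            throw new IllegalStateException("No JavaScript ScriptEngine available");
        }
        engine.eval(script);
        Invocable inv = (Invocable) engine;
        for (String word : words) {
            if (StringUtils.isBlank(word)) {
                continue; // blank words are skipped
            }
            String sign = String.valueOf(inv.invokeFunction("e", word));
            result.put(word, sign);
        }
    } catch (Exception e) {
        e.printStackTrace();
    }
    return result;
}
```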
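executeJS expects script to be ready to evaluate outside the browser: i must already hold gtk (the edit made by hand in the console earlier), and a leftover t.exports = e line must not remain, because t is undefined in a ScriptEngine and the resulting ReferenceError would abort eval. A small, hypothetical helper (SignScript.prepare) that applies both edits to the copied source with regular expressions; the spellings var i = null; and t.exports = e are taken from the listing above, and the gtk format check is our own assumption.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: turns the copied bundle code into a standalone script. */
final class SignScript {
    private static final Pattern GTK_SLOT = Pattern.compile("var\\s+i\\s*=\\s*null\\s*;");
    // A bare "t.exports = e" line (a commented-out one is left alone)
    private static final Pattern MODULE_EXPORT =
            Pattern.compile("(?m)^[ \\t]*t\\.exports[ \\t]*=[ \\t]*e[ \\t]*;?[ \\t]*$");

    static String prepare(String raw, String gtk) {
        if (!gtk.matches("\\d+\\.\\d+")) { // expected shape, e.g. 320305.131321201
            throw new IllegalArgumentException("unexpected gtk: " + gtk);
        }
        String withGtk = GTK_SLOT.matcher(raw)
                .replaceFirst(Matcher.quoteReplacement("var i = \"" + gtk + "\";"));
        return MODULE_EXPORT.matcher(withGtk).replaceAll("");
    }
}
```

The result can be passed straight to executeJS as its script argument.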

Send the requests and collect the translations:

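```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.TimeUnit;
import org.apache.commons.lang3.StringUtils;
import org.apache.http.Header;
import org.apache.http.HttpResponse;
import org.apache.http.HttpStatus;
import org.apache.http.NameValuePair;
import org.apache.http.client.entity.UrlEncodedFormEntity;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.message.BasicNameValuePair;
import com.alibaba.fastjson.JSON;       // assumption: JSON here is fastjson
import com.alibaba.fastjson.JSONObject;

private Map<String, String> sendRequest(Map<String, String> wordSignMap) {
    // Separate result map, so words that fail are not left holding their sign value
    Map<String, String> translations = new HashMap<>();
    for (Map.Entry<String, String> entry : wordSignMap.entrySet()) {
        try {
            HttpPost post = new HttpPost("https://fanyi.baidu.com/v2transapi?from=zh&to=en");
            post.setHeader("cookie", this.baiduid);
            post.setHeader("user-agent", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36");
            List<NameValuePair> list = new ArrayList<>();
            list.add(new BasicNameValuePair("from", "zh"));
            list.add(new BasicNameValuePair("to", "en"));
            list.add(new BasicNameValuePair("query", entry.getKey()));
            list.add(new BasicNameValuePair("transtype", "translang"));
            list.add(new BasicNameValuePair("simple_means_flag", "3"));
            list.add(new BasicNameValuePair("sign", entry.getValue()));
            list.add(new BasicNameValuePair("domain", "common"));
            list.add(new BasicNameValuePair("token", this.token));
            // Encode as UTF-8 explicitly: the platform default may not be UTF-8 (e.g. GBK on Chinese Windows)
            post.setEntity(new UrlEncodedFormEntity(list, StandardCharsets.UTF_8));
            HttpResponse httpResponse = HttpClientManager.get(post);
            TimeUnit.SECONDS.sleep(1); // slow down to avoid triggering rate limiting
            int status = httpResponse.getStatusLine().getStatusCode();
            if (status == HttpStatus.SC_OK) {
                JSONObject json = JSON.parseObject(HttpResponseUtil.gainFromResponse(httpResponse));
                // A response carrying errno is treated as a failure and skipped
                if (StringUtils.isNotBlank(json.getString("errno"))) {
                    continue;
                }
                Header cookie = httpResponse.getFirstHeader("Set-Cookie");
                if (cookie != null) {
                    // Keep only name=value; attributes such as path or expires are not cookies
                    this.baiduid = this.baiduid + "; " + cookie.getValue().split(";", 2)[0];
                }
                String translation = json.getJSONObject("trans_result").getJSONArray("data")
                        .getJSONObject(0).getJSONArray("result").getJSONArray(0).getString(1);
                translations.put(entry.getKey(), translation);
            } else if (status == HttpStatus.SC_UNAUTHORIZED) {
                initToken(); // token rejected: refresh it (this word is skipped)
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
    return translations;
}
```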
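The request loop sleeps a fixed second after every request to avoid rate limiting. If the goal is simply to keep requests at least a fixed interval apart, a small throttle can be called before each request instead. This is a hypothetical helper, not part of the original code, and the one-second interval is only the empirical value used above.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical helper: spaces successive acquire() calls at least one interval apart. */
final class Throttle {
    private final long intervalNanos;
    private long next; // earliest System.nanoTime() at which the next call may proceed

    Throttle(long interval, TimeUnit unit) {
        this.intervalNanos = unit.toNanos(interval);
        this.next = System.nanoTime(); // the first call is not delayed
    }

    /** Blocks until one interval has passed since the previous acquire(). */
    synchronized void acquire() {
        long wait;
        while ((wait = next - System.nanoTime()) > 0) {
            try {
                TimeUnit.NANOSECONDS.sleep(wait);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the flag and stop waiting
                return;
            }
        }
        next = System.nanoTime() + intervalNanos;
    }
}
```

Usage: create one new Throttle(1, TimeUnit.SECONDS) per client and call acquire() right before HttpClientManager.get(post); time already spent waiting for a slow response then counts toward the interval.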

I translated more than a hundred randomly chosen words, and all of them worked:

```json
{"感恋":"Feeling in love","思越":"Thinking more","是非":"Right and wrong","孤独":"lonely","抚摩":"Caress","欢快":"Happy","凝恋":"Love","真假":"True or false","珍贵":"precious","淡雅":"elegant and quiet","思玄":"Sixuan","愉快":"cheerful","零落":"Scattered","柔软":"soft","祸福":"misfortune and fortune","含苞":"in bud","思咏":"Thinking and singing","留恋":"Nostalgia","落莫":"desolate","思纬":"Synovate ","迷恋":"Infatuation","流恋":"Love flow","婉恋":"Wanlian love","情恋":"Love","寒冷":"cold","失恋":"Lovelorn","生死":"life and death","积恋":"Accumulate love","思莼":"Ulva lactuca","恳恋":"Sincere love","爱恋":"be in love with","呼吸":"breathing","反正":"anyway","多少":"How many?","思议":"conceive","破旧":"dilapidated","虚假":"false","特殊":"special","醉人":"Intoxicating","花瓣":"petal","思秋":"Miss autumn","依恋":"to feel attachment to someone","伶仃":"Lingding","思忆":"cherish the memory of","怀恋":"Nostalgia","遥望":"Looking from afar","畅快":"Happy","微弱":"weak","宽敞":"spacious","褒贬":"Praises and demerits","忆恋":"Recalling love","曲直":"Straight","整齐":"neat","丑陋":"ugly","落寞":"Lonely","沉寂":"quiet","孤寂寂寞":"Lonely and lonely","神韵":"romantic charm","兴奋":"excitement","相恋":"Fall in love","思绎":"Thoughts","怒放":"In full bloom","朴素":"simple","颤抖":"tremble","恬静":"Quiet","彼此":"each other","寂寥":"Solitude","思域":"civic ","前后":"around","恋恋":"passionately attached","昼夜":"Day and night","娇美":"Delicate and beautiful","明亮":"bright","欢畅":"Happy","思惟":"thought","思凡":"Think of the world","贪恋":"Greed","兴衰":"Rise and fall","寥寂":"Lonely","开关":"switch","善恶":"Good and evil","首尾":"Head and tail","孤单":"alone","思致":"power of thinking","喜悦":"Joy","欣恋":"Love","思愆":"Thinking wrongly","思理":"Thinking","鲜花":"flower","祝愿":"wish","思言":"Thinking and speaking","思怀":"Thinking","欢腾":"Exultation","疼痛":"pain","舒展":"stretch","虚实":"Deficiency and excess","朝夕":"Day and night","飘散":"thin out","慕恋":"Adoration","恐惧":"fear","单恋":"Single love","思服":"Thinking and serving","早晚":"Sooner or later","顾恋":"yearn for","耽恋":"Be in love","漂浮":"float","奔腾":"Galloping","猜测":"guess","花蕊":"Stamen","嫪恋":"Love","南北":"north and south","素雅":"simple but elegant","幽香":"delicate fragrance","自恋":"narcissism","僻静":"Secluded","长久":"Long term","欢喜":"happy","思永":"Siyong","吞吐":"Throughput","缠绕":"Entanglement","动静":"movement","悲欢":"Joys and sorrows","绻恋":"Love","寂然":"Silence","甘苦":"Sweet and bitter","思恋":"Nostalgia","初恋":"first love","左右":"about","奇怪":"strange","娇嫩":"delicate","拉扯":"Pull","始末":"whole story","思元":"Thinking yuan","快乐":"happy","欣喜":"delighted","悲恋":"Sad love","寂静":"silent","黑白":"black and white","馨香":"Fragrance","花粉":"pollen","消灭":"eliminate","安恋":"Love","遐恋":"Reverie love","东西":"thing","固定":"fixed","孤立":"isolated","热恋":"be passionately in love","宁静":"quiet","模仿":"imitate","黑暗":"dark","清静":"quiet","眷恋":"Attachment","安静":"be quiet","取舍":"Trade off","沉静":"quiet","思潮":"thought"}
```

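OK, that completes the code. It can translate any word and, unlike Baidu Translate's official API, is not subject to a usage quota, which makes it more convenient and cheaper (requests still have to be spaced out, hence the one-second sleep in the request loop, to avoid rate limiting). If you come across a site whose encryption you find interesting, feel free to send it to me to take a look.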