Using Spark to clean a Hadoop NameNode log file and store the result in MySQL

1. Locate the file to clean

1.1 Find where the Hadoop NameNode log file is located

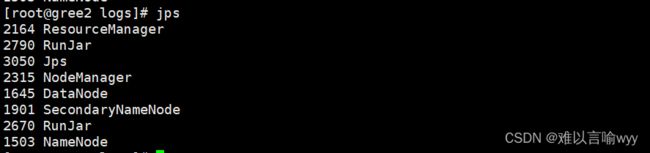

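1.2 Start the Hadoop cluster, the Hive metastore, and the Hive remote connection

For details on starting these services, see my earlier post "SparkSQL-liunx系统Spark连接Hive" (connecting Spark to Hive on a Linux system) on 难以言喻wyy's CSDN blog.

1.3 Upload the file to HDFS: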

hdfs dfs -put hadoop-root-namenode-gree2.log /tmp/hadoopNamenodeLogs/hadooplogs/hadoop-root-namenode-gree2.log

2. Log analysis

Take a few lines from the log for analysis:

2023-02-10 16:55:33,123 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2023-02-10 16:55:33,195 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: createNameNode []

2023-02-10 16:55:33,296 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2023-02-10 16:55:33,409 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

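Each line has the log level (INFO here) in the middle, so the level can serve as a delimiter: use indexOf to find its position, then use substring to cut the line into fields and pull out the cleaned data.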
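To make the indexOf and substring idea concrete, here is a minimal sketch that splits a single log line in plain Scala, without Spark. The object name `LogLineSketch` and the function `parseInfo` are hypothetical names chosen for illustration, and the sketch only handles the INFO level.

```scala
object LogLineSketch {
  // Splits one log line into (timestamp, millis, level, logging class, message).
  // Returns None when the " INFO " marker, the comma after the timestamp,
  // or the colon after the class name is missing.
  def parseInfo(line: String): Option[(String, String, String, String, String)] = {
    val marker = " INFO "
    val levelAt = line.indexOf(marker)
    val commaAt = line.indexOf(",")
    // Search for the colon only after the level, so the colons in the timestamp are skipped.
    val colonAt = if (levelAt == -1) -1 else line.indexOf(":", levelAt + marker.length)
    if (levelAt == -1 || commaAt == -1 || commaAt > levelAt || colonAt == -1) None
    else Some((
      line.substring(0, commaAt),                      // timestamp up to seconds
      line.substring(commaAt + 1, levelAt),            // milliseconds
      "INFO",                                          // log level
      line.substring(levelAt + marker.length, colonAt), // logging class
      line.substring(colonAt + 1).trim                 // message
    ))
  }
}
```

Calling `LogLineSketch.parseInfo` on the second sample line above yields the five fields `2023-02-10 16:55:33`, `195`, `INFO`, `org.apache.hadoop.hdfs.server.namenode.NameNode`, and `createNameNode []`.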

3. Implementation

3.1 Clean the data

object hadoopDemo {

def main(args: Array[String]): Unit = {

val spark: SparkSession = SparkSession.builder().master("local[*]").appName("HadoopLogsEtlDemo").getOrCreate()

val sc: SparkContext = spark.sparkContext

import spark.implicits._

import org.apache.spark.sql.functions._

// TODO 根据INFO这个字段来对数据进行封装到Row中。

val row: RDD[Row] = sc.textFile("hdfs://192.168.61.146:9000/tmp/hadoopNamenodeLogs/hadooplogs/hadoop-root-namenode-gree2.log")

.filter(x => {

x.startsWith("2023")

})

.map(x => {

val strings: Array[String] = x.split(",")

val num1: Int = strings(1).indexOf(" INFO ")

val num2: Int = strings(1).indexOf(":")

if(num1!=(-1)){

val str1: String = strings(1).substring(0, num1)

val str2: String = strings(1).substring(num1 + 5, num2)

val str3: String = strings(1).substring(num2 + 1, strings(1).length)

Row(strings(0), str1, "INFO",str2, str3)

}

else {

val num3: Int = strings(1).indexOf(" WARN ")

val num4: Int = strings(1).indexOf(" ERROR ")

if(num3!=(-1)&&num4==(-1)){

val str1: String = strings(1).substring(0, num3)

val str2: String = strings(1).substring(num3 + 5, num2)

val str3: String = strings(1).substring(num2 + 1, strings(1).length)

Row(strings(0), str1,"WARN", str2, str3)}else{

val str1: String = strings(1).substring(0, num4)

val str2: String = strings(1).substring(num4 + 6, num2)

val str3: String = strings(1).substring(num2 + 1, strings(1).length)

Row(strings(0), str1,"ERROR", str2, str3)

}

}

})

val schema: StructType = StructType(

Array(

StructField("event_time", StringType),

StructField("number", StringType),

StructField("status", StringType),

StructField("util", StringType),

StructField("info", StringType),

)

)

val frame: DataFrame = spark.createDataFrame(row, schema)

frame.show(80,false)

}

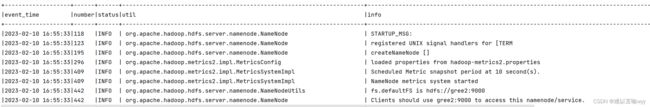

}清洗后的效果图:
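As an alternative to locating each level with indexOf, the same five fields can be captured with one regular expression. This is only a sketch of a swapped-in technique, not the method used in this post: the object name `LogRegexSketch` and the pattern are assumptions based on the sample lines in section 2, and the pattern accepts any level name rather than only INFO, WARN, and ERROR.

```scala
object LogRegexSketch {
  // Layout assumed from the sample lines:
  // "yyyy-MM-dd HH:mm:ss,SSS LEVEL logging.class: message"
  private val LinePattern =
    """^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),(\d{3}) (\w+) ([^:\s]+): ?(.*)$""".r

  // Returns (timestamp, millis, level, logging class, message),
  // or None for lines that do not match, such as stack-trace lines.
  def parse(line: String): Option[(String, String, String, String, String)] =
    line match {
      case LinePattern(ts, ms, level, cls, msg) => Some((ts, ms, level, cls, msg))
      case _                                    => None
    }
}
```

Because the message is captured as the rest of the line, commas inside it (for example `[TERM, HUP, INT]`) stay intact. Inside the Spark job, a function like this could be applied with `flatMap` in place of the indexOf logic.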

3.2 Create jdbcUtils to load the data into the database:

import java.util.Properties
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

object jdbcUtils {
  // Connection settings
  val url = "jdbc:mysql://192.168.61.141:3306/jsondemo?createDatabaseIfNotExist=true"
  val driver = "com.mysql.cj.jdbc.Driver"
  val user = "root"
  val password = "root"

  // Table names
  val table_access_logs: String = "access_logs"
  val table_full_access_logs: String = "full_access_logs"
  val table_day_active: String = "table_day_active"
  val table_retention: String = "retention"
  val table_loading_json = "loading_json"
  val table_ad_json = "ad_json"
  val table_notification_json = "notification_json"
  val table_active_background_json = "active_background_json"
  val table_comment_json = "comment_json"
  val table_praise_json = "praise_json"
  val table_teacher_json = "teacher_json"

  // Connection properties passed to Spark's JDBC reader and writer.
  val properties = new Properties()
  properties.setProperty("user", jdbcUtils.user)
  properties.setProperty("password", jdbcUtils.password)
  properties.setProperty("driver", jdbcUtils.driver)

  // Write a DataFrame to MySQL: op = 0 appends, any other value (default 1) overwrites the table.
  def dataFrameToMysql(df: DataFrame, table: String, op: Int = 1): Unit = {
    if (op == 0) {
      df.write.mode(SaveMode.Append).jdbc(jdbcUtils.url, table, properties)
    } else {
      df.write.mode(SaveMode.Overwrite).jdbc(jdbcUtils.url, table, properties)
    }
  }

  // Read a MySQL table into a DataFrame.
  def getDataFtameByTableName(spark: SparkSession, table: String): DataFrame = {
    val frame: DataFrame = spark.read.jdbc(jdbcUtils.url, table, jdbcUtils.properties)
    frame
  }
}

3.3 Load the data

// Call this inside main, after frame has been created; op = 1 overwrites the target table.
jdbcUtils.dataFrameToMysql(frame, jdbcUtils.table_day_active, 1)
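The meaning of the numeric op argument is not obvious at the call site, so the following sketch spells out the mapping used by dataFrameToMysql. The object `WriteModeSketch` and the function `saveModeName` are hypothetical helpers, not part of the code above; the strings they return are save-mode names that `DataFrameWriter.mode(String)` accepts.

```scala
object WriteModeSketch {
  // Mirrors the branch in dataFrameToMysql:
  // 0 appends to the table, any other value overwrites it.
  def saveModeName(op: Int): String =
    if (op == 0) "append" else "overwrite"
}
```

With this helper, a write could be expressed as `df.write.mode(WriteModeSketch.saveModeName(op)).jdbc(jdbcUtils.url, table, jdbcUtils.properties)`. Keep in mind that overwriting through JDBC drops and recreates the target table unless the `truncate` option is set, so append (op = 0) is the safer choice when the table already holds data you want to keep.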