openAI API简易使用教程

准备

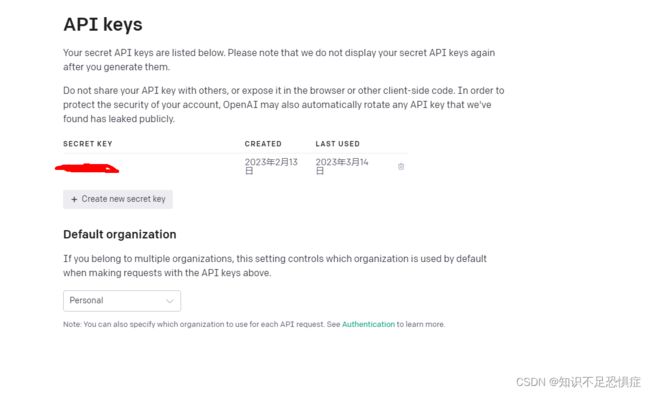

- 创建openAI 账号(https://platform.openai.com/overview),右上角personal,创建API key。

pip install openai

pip install --upgrade tiktoken

tiktoken 是用来计算每次查询时的token数,因为openAI是根据token数计费,不是必须安装。

API调用

api key 可以直接明文写在代码中,也可以通过环境变量方式获取

import os

import openai

# OPENAI_API_KEY是自己设定的环境变量名

openai.api_key = os.getenv("OPENAI_API_KEY")

# 明文

openai.api_key = *************

openAI提供了几种不同场景的模型,主要有text completion、code completion、chat completion、image completion,例如chat completion,则调用方式为

openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "Who won the world series in 2020?"},

{"role": "assistant", "content": "The Los Angeles Dodgers won the World Series in 2020."},

{"role": "user", "content": "Where was it played?"}

]

)

其中

model 是具体的模型,gpt-3.5-turbo是openAI最先进的语言模型,当然也可以用其他模型。

role,三种固定值,

system:类似一种前提,表示后续的对话以此情景为基础;

user:提问者;

assistant:当对话需要结合上下文时,通过它让模型知道之前的对话内容。

content是具体的对话内容

发起一次请求到响应会存在几秒钟的延迟,response 格式如下所示

{

'id': 'chatcmpl-6p9XYPYSTTRi0xEviKjjilqrWU2Ve',

'object': 'chat.completion',

'created': 1677649420,

'model': 'gpt-3.5-turbo',

'usage': {'prompt_tokens': 56, 'completion_tokens': 31, 'total_tokens': 87},

'choices': [

{

'message': {

'role': 'assistant',

'content': 'The 2020 World Series was played in Arlington, Texas at the Globe Life Field, which was the new home stadium for the Texas Rangers.'},

'finish_reason': 'stop',

'index': 0

}

]

}

提取回复内容response['choices'][0]['message']['content']

每次response中不同finish-reason值代表不同状态:

- stop:API返回完整内容

- length:由于max_token限制,回答不完整。

- content_filter:回复被过滤

- null:API还在思考答案

计算token数量

openAI的gpt-3.5-turbo-0301模型token最多4096,超过限制只能缩短请求内容。

而且请求的token和回复的token数会被加一起计费,例如说输入了10个token,openAI回复了20个token,那么最终收费是按照30个token进行收费。

import tiktoken

encoding = tiktoken.encoding_for_model("gpt-3.5-turbo")

def num_tokens_from_string(string: str, encoding_name: str) -> int:

"""Returns the number of tokens in a text string."""

encoding = tiktoken.get_encoding(encoding_name)

num_tokens = len(encoding.encode(string))

return num_tokens

num_tokens_from_string("tiktoken is great!", "cl100k_base")

how to count tokens with tiktoken

示例

- 中文请求

# 中文

Reponse = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "user", "content": "上海在哪里"},

]

)

回复

{

"choices": [

{

"finish_reason": "stop",

"index": 0,

"message": {

"content": "\n\n\u4e0a\u6d77\u4f4d\u4e8e\u4e2d\u56fd\u4e1c\u90e8\u6cbf\u6d77\u5730\u5e26\uff0c\u6bd7\u90bb\u6c5f\u82cf\u548c\u6d59\u6c5f\u4e24\u7701\uff0c\u5904\u4e8e\u957f\u6c5f\u53e3\u548c\u676d\u5dde\u6e7e\u4e4b\u95f4\uff0c\u5730\u7406\u5750\u6807\u4e3a31.23\u00b0N, 121.47\u00b0E\u3002",

"role": "assistant"

}

}

],

"created": 1678794854,

"id": "chatcmpl-6txWIqBPu6GIbaN7fwnosvKzfkLEE",

"model": "gpt-3.5-turbo-0301",

"object": "chat.completion",

"usage": {

"completion_tokens": 63,

"prompt_tokens": 13,

"total_tokens": 76

}

}

- 翻译

reponse = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "You are a helpful assistant that translates English to French."},

{"role": "user", "content": 'Translate the following English text to French: "{text}"'}

]

)

回复

{

"choices": [

{

"finish_reason": "stop",

"index": 0,

"message": {

"content": "Je suis d\u00e9sol\u00e9, je ne peux pas traduire cette demande car il n'y a pas de texte fourni entre les accolades. Veuillez ajouter du texte \u00e0 traduire.",

"role": "assistant"

}

}

],

"created": 1678798886,

"id": "chatcmpl-6tyZKMOnfUUeRIszSmgovSKvzsNfA",

"model": "gpt-3.5-turbo-0301",

"object": "chat.completion",

"usage": {

"completion_tokens": 41,

"prompt_tokens": 34,

"total_tokens": 75

}

}

也可以不加system

reponse = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "user", "content": 'Translate the following English text to French: "{text}"'}

]

)

回复:

{

"choices": [

{

"finish_reason": "stop",

"index": 0,

"message": {

"content": "\n\n\"{text}\" is already in English and does not need to be translated.",

"role": "assistant"

}

}

],

"created": 1678799017,

"id": "chatcmpl-6tybRxkYc92IE1mXELPuWnw2OFmgE",

"model": "gpt-3.5-turbo-0301",

"object": "chat.completion",

"usage": {

"completion_tokens": 17,

"prompt_tokens": 18,

"total_tokens": 35

}

}