Nacos Source Code Analysis - Registry - Distro

Nacos AP Mode

In AP mode, Nacos servers synchronize data directly with one another; this is how data is synchronized and replicated across the cluster.

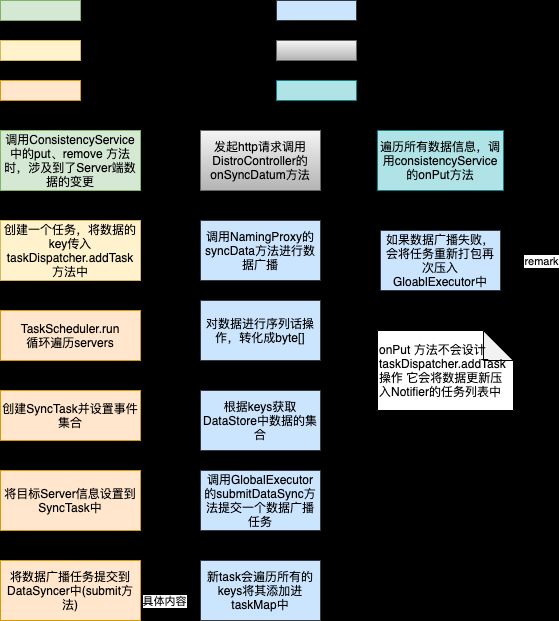

Triggering the Data Broadcast

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

@Override

public void put(String key, Record value) throws NacosException {

onPut(key, value);

taskDispatcher.addTask(key);

}

}

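Calling the put or remove method defined in ConsistencyService changes data on the server. When that happens, a task is created: the key of the changed data is passed to taskDispatcher.addTask so that the data can be looked up later, when the change is broadcast.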

public class TaskDispatcher {

public void addTask(String key) {

taskSchedulerList.get(UtilsAndCommons.shakeUp(key, cpuCoreCount)).addTask(key);

}

}

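One method deserves attention here: shakeUp. Judging by the comments in the official code, it routes each key (a key can be regarded as one data change event) evenly across the TaskScheduler objects, so that every TaskScheduler carries roughly the same share of tasks.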
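To make the routing idea concrete, here is a minimal sketch of how a key could be mapped to a scheduler index. It assumes a plain hash-modulo scheme; the class `ShakeUpSketch`, its `shakeUp` method, and the key format are illustrative stand-ins and are not quoted from `UtilsAndCommons`.

```java
import java.util.HashMap;
import java.util.Map;

final class ShakeUpSketch {

    // Hypothetical stand-in for UtilsAndCommons.shakeUp:
    // maps a key to a bucket index in the range [0, upperLimit).
    static int shakeUp(String key, int upperLimit) {
        // Math.floorMod never returns a negative value for a positive modulus,
        // even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), upperLimit);
    }

    public static void main(String[] args) {
        int schedulerCount = 4; // plays the role of cpuCoreCount
        Map<Integer, Integer> load = new HashMap<>();
        for (int i = 0; i < 1000; i++) {
            String key = "example.ephemeral.service-" + i; // made-up key format
            load.merge(shakeUp(key, schedulerCount), 1, Integer::sum);
        }
        // Shows how many of the 1000 keys landed on each scheduler index.
        System.out.println(load);
    }
}
```

Because the index depends only on the key, repeated changes to the same key always land on the same scheduler, which keeps them in the same queue.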

public class TaskScheduler implements Runnable {

....

private BlockingQueue<String> queue = new LinkedBlockingQueue<>(128 * 1024);

....

public void addTask(String key) {

queue.offer(key);

}

@Override

public void run() {

List<String> keys = new ArrayList<>();

while (true) {

try {

String key = queue.poll(partitionConfig.getTaskDispatchPeriod(), TimeUnit.MILLISECONDS);

if (Loggers.DISTRO.isDebugEnabled() && StringUtils.isNotBlank(key)) {

Loggers.DISTRO.debug("got key: {}", key);

}

if (dataSyncer.getServers() == null || dataSyncer.getServers().isEmpty()) {

continue;

}

if (StringUtils.isBlank(key)) {

continue;

}

if (dataSize == 0) {

keys = new ArrayList<>();

}

keys.add(key);

dataSize++;

if (dataSize == partitionConfig.getBatchSyncKeyCount()

|| (System.currentTimeMillis() - lastDispatchTime) > partitionConfig

.getTaskDispatchPeriod()) {

// bookmark core logic: broadcast the data to the other Nacos servers

for (Member member : dataSyncer.getServers()) {

// bookmark skip the local server; it does not need the broadcast

if (NetUtils.localServer().equals(member.getAddress())) {

continue;

}

// bookmark create the SyncTask

SyncTask syncTask = new SyncTask();

// bookmark set the event set (the keys collection)

syncTask.setKeys(keys);

// bookmark set the target server on the SyncTask

syncTask.setTargetServer(member.getAddress());

if (Loggers.DISTRO.isDebugEnabled() && StringUtils.isNotBlank(key)) {

Loggers.DISTRO.debug("add sync task: {}", JacksonUtils.toJson(syncTask));

}

// bookmark submit the data broadcast task to DataSyncer

dataSyncer.submit(syncTask, 0);

}

lastDispatchTime = System.currentTimeMillis();

dataSize = 0;

}

} catch (Exception e) {

Loggers.DISTRO.error("dispatch sync task failed.", e);

}

}

}

}

The core logic is the body of the for (Member member : dataSyncer.getServers()) {..} loop, which broadcasts the data to the other Nacos servers. The steps are:

- Create a SyncTask and set its event set (the key set)
- Set the target server on the SyncTask: syncTask.setTargetServer(member.getAddress())
- Submit the data broadcast task to DataSyncer
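The three steps above can be sketched in isolation as a small fan-out routine. This is a simplified illustration, not the Nacos implementation: `FanOutSketch`, its nested `Task` class, and the server addresses are hypothetical, and servers are represented as plain address strings.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class FanOutSketch {

    // Hypothetical, trimmed-down counterpart of SyncTask.
    static final class Task {
        final List<String> keys;
        final String targetServer;

        Task(List<String> keys, String targetServer) {
            this.keys = keys;
            this.targetServer = targetServer;
        }
    }

    // Builds one task per remote server; the local server is skipped.
    static List<Task> fanOut(List<String> keys, List<String> servers, String localServer) {
        List<Task> tasks = new ArrayList<>();
        for (String server : servers) {
            if (localServer.equals(server)) {
                continue; // no need to broadcast to ourselves
            }
            // Each task gets its own copy of the batch. The copy is a choice of
            // this sketch so that tasks cannot affect one another through a shared list.
            tasks.add(new Task(new ArrayList<>(keys), server));
        }
        return tasks;
    }

    public static void main(String[] args) {
        List<String> servers = Arrays.asList("10.0.0.1:8848", "10.0.0.2:8848", "10.0.0.3:8848");
        List<Task> tasks = fanOut(Arrays.asList("key-a", "key-b"), servers, "10.0.0.1:8848");
        for (Task task : tasks) {
            System.out.println(task.targetServer + " <- " + task.keys);
        }
    }
}
```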

Executing the Data Broadcast: DataSyncer

public class DataSyncer {

public void submit(SyncTask task, long delay) {

// If it's a new task:

// bookmark check whether this is a new task

if (task.getRetryCount() == 0) {

// bookmark iterate over all the keys

Iterator<String> iterator = task.getKeys().iterator();

while (iterator.hasNext()) {

String key = iterator.next();

// bookmark add the key to taskMap

if (StringUtils.isNotBlank(taskMap.putIfAbsent(buildKey(key, task.getTargetServer()), key))) {

// associated key already exist:

if (Loggers.DISTRO.isDebugEnabled()) {

Loggers.DISTRO.debug("sync already in process, key: {}", key);

}

iterator.remove();

}

}

}

if (task.getKeys().isEmpty()) {

// all keys are removed:

return;

}

// bookmark submit a data broadcast task

GlobalExecutor.submitDataSync(() -> {

// 1. check the server

if (getServers() == null || getServers().isEmpty()) {

Loggers.SRV_LOG.warn("try to sync data but server list is empty.");

return;

}

List<String> keys = task.getKeys();

if (Loggers.SRV_LOG.isDebugEnabled()) {

Loggers.SRV_LOG.debug("try to sync data for this keys {}.", keys);

}

// bookmark use the keys in the SyncTask to look up the corresponding data in DataStore

// 2. get the datums by keys and check the datum is empty or not

Map<String, Datum> datumMap = dataStore.batchGet(keys);

if (datumMap == null || datumMap.isEmpty()) {

// clear all flags of this task:

for (String key : keys) {

taskMap.remove(buildKey(key, task.getTargetServer()));

}

return;

}

// bookmark serialize the data into a byte[] array

byte[] data = serializer.serialize(datumMap);

long timestamp = System.currentTimeMillis();

// bookmark this call sends an HTTP request internally to broadcast the data

boolean success = NamingProxy.syncData(data, task.getTargetServer());

if (!success) {

// bookmark if the data broadcast failed,

// bookmark repackage the task and push it into GlobalExecutor again

SyncTask syncTask = new SyncTask();

syncTask.setKeys(task.getKeys());

syncTask.setRetryCount(task.getRetryCount() + 1);

syncTask.setLastExecuteTime(timestamp);

syncTask.setTargetServer(task.getTargetServer());

retrySync(syncTask);

} else {

// clear all flags of this task:

for (String key : task.getKeys()) {

taskMap.remove(buildKey(key, task.getTargetServer()));

}

}

}, delay);

}

}

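GlobalExecutor.submitDataSync(runnable, delay) submits a data broadcast task. The task first uses the key set in the SyncTask to query DataStore for the corresponding data, then serializes that data into a byte[] array and sends it with an HTTP request: NamingProxy.syncData(data, task.getTargetServer()). If the broadcast fails, the task is repackaged with an incremented retry count and pushed into GlobalExecutor again.

The NamingProxy.syncData method: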
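Before the task is submitted, the `taskMap.putIfAbsent` check in `submit` drops keys that are already being synced to the same target server, and the markers are cleared once the sync succeeds. The sketch below isolates that bookkeeping. The class `InFlightDedupSketch`, its `claim` and `release` methods, and the key format used in `buildKey` are assumptions made for illustration, not Nacos APIs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class InFlightDedupSketch {

    // One marker per (key, target server) pair that is currently being synced.
    private final ConcurrentMap<String, String> taskMap = new ConcurrentHashMap<>();

    // Hypothetical marker format; the real buildKey is not shown in this article.
    static String buildKey(String key, String targetServer) {
        return key + "@" + targetServer;
    }

    // Returns the keys that still need to be sent and marks them as in flight.
    List<String> claim(List<String> keys, String targetServer) {
        List<String> toSend = new ArrayList<>(keys);
        Iterator<String> iterator = toSend.iterator();
        while (iterator.hasNext()) {
            String key = iterator.next();
            // putIfAbsent returns null only when this call inserted the marker.
            if (taskMap.putIfAbsent(buildKey(key, targetServer), key) != null) {
                iterator.remove(); // a sync for this key and server is already running
            }
        }
        return toSend;
    }

    // Clears the markers, for example after a successful sync.
    void release(List<String> keys, String targetServer) {
        for (String key : keys) {
            taskMap.remove(buildKey(key, targetServer));
        }
    }

    public static void main(String[] args) {
        InFlightDedupSketch syncer = new InFlightDedupSketch();
        List<String> first = syncer.claim(Arrays.asList("k1", "k2"), "10.0.0.2:8848");
        List<String> second = syncer.claim(Arrays.asList("k2", "k3"), "10.0.0.2:8848");
        System.out.println("first batch:  " + first);
        System.out.println("second batch: " + second);
        syncer.release(first, "10.0.0.2:8848");
    }
}
```

The markers are scoped per target server, so a key that is in flight to one server can still be sent to another.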

public class NamingProxy {

public static boolean syncData(byte[] data, String curServer) {

Map<String, String> headers = new HashMap<>(128);

headers.put(HttpHeaderConsts.CLIENT_VERSION_HEADER, VersionUtils.version);

headers.put(HttpHeaderConsts.USER_AGENT_HEADER, UtilsAndCommons.SERVER_VERSION);

headers.put("Accept-Encoding", "gzip,deflate,sdch");

headers.put("Connection", "Keep-Alive");

headers.put("Content-Encoding", "gzip");

try {

/** bookmark PUT http://ip:port/nacos/v1/ns//distro/datum; this URL is handled by {@link com.alibaba.nacos.naming.controllers.DistroController#onSyncDatum(Map)} */

HttpClient.HttpResult result = HttpClient.httpPutLarge(

"http://" + curServer + ApplicationUtils.getContextPath() + UtilsAndCommons.NACOS_NAMING_CONTEXT

+ DATA_ON_SYNC_URL, headers, data);

if (HttpURLConnection.HTTP_OK == result.code) {

return true;

}

if (HttpURLConnection.HTTP_NOT_MODIFIED == result.code) {

return true;

}

throw new IOException("failed to req API:" + "http://" + curServer + ApplicationUtils.getContextPath()

+ UtilsAndCommons.NACOS_NAMING_CONTEXT + DATA_ON_SYNC_URL + ". code:" + result.code + " msg: "

+ result.content);

} catch (Exception e) {

Loggers.SRV_LOG.warn("NamingProxy", e);

}

return false;

}

}

The data is submitted with PUT http://ip:port/nacos/v1/ns//distro/datum, and that URL is handled by com.alibaba.nacos.naming.controllers.DistroController#onSyncDatum(Map).

public class DistroController {

@PutMapping("/datum")

public ResponseEntity onSyncDatum(@RequestBody Map<String, Datum<Instances>> dataMap) throws Exception {

if (dataMap.isEmpty()) {

Loggers.DISTRO.error("[onSync] receive empty entity!");

throw new NacosException(NacosException.INVALID_PARAM, "receive empty entity!");

}

for (Map.Entry<String, Datum<Instances>> entry : dataMap.entrySet()) {

if (KeyBuilder.matchEphemeralInstanceListKey(entry.getKey())) {

String namespaceId = KeyBuilder.getNamespace(entry.getKey());

String serviceName = KeyBuilder.getServiceName(entry.getKey());

if (!serviceManager.containService(namespaceId, serviceName) && switchDomain

.isDefaultInstanceEphemeral()) {

serviceManager.createEmptyService(namespaceId, serviceName, true);

}

// bookmark update the data; onPut does not call taskDispatcher.addTask, it pushes the data update onto the Notifier's task list instead

consistencyService.onPut(entry.getKey(), entry.getValue().value);

}

}

return ResponseEntity.ok("ok");

}

}

public class DistroConsistencyServiceImpl implements EphemeralConsistencyService {

public void onPut(String key, Record value) {

if (KeyBuilder.matchEphemeralInstanceListKey(key)) {

Datum<Instances> datum = new Datum<>();

datum.value = (Instances) value;

datum.key = key;

datum.timestamp.incrementAndGet();

dataStore.put(key, datum);

}

if (!listeners.containsKey(key)) {

return;

}

notifier.addTask(key, ApplyAction.CHANGE);

}

}
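The split between put (store, then schedule a broadcast) and onPut (store only) is what keeps a replicated write from being broadcast again by the receiver. The toy model below illustrates this under strong simplifications: `BroadcastLoopSketch` and its `Node` class are hypothetical, the broadcast is a direct synchronous method call rather than a queued HTTP request, and values are plain strings.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class BroadcastLoopSketch {

    // Hypothetical in-memory stand-in for one server in the cluster.
    static final class Node {
        final String name;
        final Map<String, String> store = new HashMap<>();
        final List<Node> peers = new ArrayList<>();
        int broadcastsSent = 0;

        Node(String name) {
            this.name = name;
        }

        // Local write: store the value, then broadcast it to every peer.
        // Stands in for put -> onPut + taskDispatcher.addTask.
        void put(String key, String value) {
            onPut(key, value);
            for (Node peer : peers) {
                broadcastsSent++;
                peer.onPut(key, value); // stands in for the PUT handled by onSyncDatum
            }
        }

        // Replicated write: store only, never broadcast again.
        void onPut(String key, String value) {
            store.put(key, value);
        }
    }

    public static void main(String[] args) {
        Node a = new Node("a");
        Node b = new Node("b");
        Node c = new Node("c");
        a.peers.add(b);
        a.peers.add(c);
        b.peers.add(a);
        b.peers.add(c);
        c.peers.add(a);
        c.peers.add(b);

        a.put("key-1", "instances-v1");
        System.out.println("b has key-1: " + b.store.containsKey("key-1"));
        System.out.println("c has key-1: " + c.store.containsKey("key-1"));
        System.out.println("broadcasts sent by b: " + b.broadcastsSent);
    }
}
```

If the receiving side called put instead of onPut, every replicated write would trigger a further round of broadcasts between the peers.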