Netty Source Code Analysis: How the EventLoop Works

Preface

The previous article analyzed how a Netty server starts up. This one walks through what the server does once it is running.

NioEventLoop

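NioEventLoop is a central class in Netty's threading model. If you picture a Netty server as a factory, the NioEventLoop in bossGroup hands out the work, while the NioEventLoops in workerGroup are the workers that carry it out, that is, they handle read and write events.

As the previous article showed, the NioEventLoop in bossGroup starts working as soon as the server is up. We begin the analysis with NioEventLoop's run method: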

protected void run() {

// main flow of the event loop

for (;;) {

try {

try {

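// Select strategy: if this loop's task queue is not empty, call selectNow() so that select returns immediately;

// otherwise we reach SelectStrategy.SELECT and make the normal call select(wakenUp.getAndSet(false)).

// This makes sense: queued tasks need to run, so we do a non-blocking select and move straight on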

switch (selectStrategy.calculateStrategy(selectNowSupplier, hasTasks())) {

case SelectStrategy.CONTINUE:

continue;

case SelectStrategy.BUSY_WAIT:

// fall-through to SELECT since the busy-wait is not supported with NIO

// this enters another endless select loop; the details are covered below

case SelectStrategy.SELECT:

select(wakenUp.getAndSet(false));

if (wakenUp.get()) {

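// If wakenUp is true, make selector.select return immediately.

// The main concern is that another thread can change wakenUp in the meantime (through wakeup(...), for example when it submits a task).

// The call below makes the next select return right after selecting; see select(wakenUp.getAndSet(false)) for the details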

selector.wakeup();

}

// fall through

default:

}

} catch (IOException e) {

rebuildSelector0();

handleLoopException(e);

continue;

}

cancelledKeys = 0;

needsToSelectAgain = false;

final int ioRatio = this.ioRatio;

// ioRatio defaults to 50, meaning the event loop spends roughly equal time on I/O and non-I/O tasks

if (ioRatio == 100) {

try {

processSelectedKeys();

} finally {

// Ensure we always run tasks.

runAllTasks();

}

} else {

final long ioStartTime = System.nanoTime();

try {

// handle socket events

processSelectedKeys();

} finally {

// Ensure we always run tasks.

final long ioTime = System.nanoTime() - ioStartTime;

// run the tasks waiting in the queue

runAllTasks(ioTime * (100 - ioRatio) / ioRatio);

}

}

} catch (Throwable t) {

handleLoopException(t);

}

// Always handle shutdown even if the loop processing threw an exception.

try {

if (isShuttingDown()) {

closeAll();

if (confirmShutdown()) {

return;

}

}

} catch (Throwable t) {

handleLoopException(t);

}

}

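}

Let's go through the main methods, starting with select(wakenUp.getAndSet(false)). The call passes in the old value of wakenUp and resets the field to false. As for the flag itself: when wakenUp is false, the next select blocks until it times out or an event arrives; when it is true, select returns immediately. Other threads can also change this value explicitly: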

private void select(boolean oldWakenUp) throws IOException {

Selector selector = this.selector;

try {

int selectCnt = 0;

long currentTimeNanos = System.nanoTime();

long selectDeadLineNanos = currentTimeNanos + delayNanos(currentTimeNanos);

for (;;) {

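// Compute the timeout: the event loop also accepts scheduled tasks, so the deadline is the due time of the next one (delayNanos falls back to 1 second when there is none).

// The extra 500000 ns (0.5 ms) rounds the result to the nearest millisecond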

long timeoutMillis = (selectDeadLineNanos - currentTimeNanos + 500000L) / 1000000L;

// if the timeout is not positive, call selectNow() and leave the loop

if (timeoutMillis <= 0) {

if (selectCnt == 0) {

selector.selectNow();

selectCnt = 1;

}

break;

}

// If tasks are queued, call selectNow() and return.

// This branch is skipped when wakenUp is already true, because in that case the selector.select(timeoutMillis) below returns immediately anyway

if (hasTasks() && wakenUp.compareAndSet(false, true)) {

selector.selectNow();

selectCnt = 1;

break;

}

// the NIO select call; returns when the timeout expires

int selectedKeys = selector.select(timeoutMillis);

selectCnt ++;

if (selectedKeys != 0 || oldWakenUp || wakenUp.get() || hasTasks() || hasScheduledTasks()) {

// return if there are ready events or queued tasks, or if either the old or the new wakenUp value is true

break;

}

if (Thread.interrupted()) {

if (logger.isDebugEnabled()) {

logger.debug("Selector.select() returned prematurely because " +

"Thread.currentThread().interrupt() was called. Use " +

"NioEventLoop.shutdownGracefully() to shutdown the NioEventLoop.");

}

selectCnt = 1;

break;

}

long time = System.nanoTime();

if (time - TimeUnit.MILLISECONDS.toNanos(timeoutMillis) >= currentTimeNanos) {

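// Check the elapsed time: if select really waited out its timeout, reset the counter.

// Why this odd-looking bookkeeping exists, together with the else branch below, is explained after the method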

selectCnt = 1;

} else if (SELECTOR_AUTO_REBUILD_THRESHOLD > 0 &&

selectCnt >= SELECTOR_AUTO_REBUILD_THRESHOLD) {

selector = selectRebuildSelector(selectCnt);

selectCnt = 1;

break;

}

currentTimeNanos = time;

}

if (selectCnt > MIN_PREMATURE_SELECTOR_RETURNS) {

if (logger.isDebugEnabled()) {

logger.debug("Selector.select() returned prematurely {} times in a row for Selector {}.",

selectCnt - 1, selector);

}

}

} catch (CancelledKeyException e) {

if (logger.isDebugEnabled()) {

logger.debug(CancelledKeyException.class.getSimpleName() + " raised by a Selector {} - JDK bug?",

selector, e);

}

}

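}

The selectCnt variable above is incremented after every select and then reset to 1 in several places. This works around the empty-polling bug in Java NIO, where select returns immediately without waiting for its timeout, so the loop spins and CPU usage shoots up. Netty uses selectCnt to detect this: once the counter reaches a threshold (SELECTOR_AUTO_REBUILD_THRESHOLD), Netty assumes the bug has been triggered and builds a new selector, which is what the else branch does. Interested readers can step into selectRebuildSelector for the details.

Now suppose a client initiates a connection and select returns normally. Execution then reaches processSelectedKeys():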
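The detect-and-rebuild idea can be sketched with plain JDK NIO. This is a minimal illustration, not Netty's code: the class name, the recordSelect helper and the threshold value are assumptions made for the example, and unlike Netty the counter here resets to 0 rather than 1.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;

// Hypothetical sketch of the empty-polling workaround; not Netty's implementation.
final class SelectorRebuildSketch {
    // Assumed threshold for this sketch.
    static final int REBUILD_THRESHOLD = 512;

    private int prematureReturns;

    /** Records one select() result; returns true once a rebuild looks warranted. */
    boolean recordSelect(int selectedKeys, long elapsedNanos, long timeoutNanos) {
        if (selectedKeys != 0 || elapsedNanos >= timeoutNanos) {
            prematureReturns = 0; // events arrived or the timeout really elapsed
            return false;
        }
        return ++prematureReturns >= REBUILD_THRESHOLD;
    }

    /** Moves every valid registration to a fresh selector and closes the old one. */
    static Selector rebuild(Selector oldSelector) throws IOException {
        Selector newSelector = Selector.open();
        for (SelectionKey key : oldSelector.keys()) {
            if (!key.isValid()) {
                continue;
            }
            int ops = key.interestOps(); // read before cancel(), which invalidates the key
            Object attachment = key.attachment();
            SelectableChannel channel = key.channel();
            key.cancel();
            channel.register(newSelector, ops, attachment);
        }
        oldSelector.close();
        return newSelector;
    }

    /** Registers one channel, rebuilds, and returns the key count on the new selector. */
    static int demo() {
        try (ServerSocketChannel channel = ServerSocketChannel.open()) {
            channel.configureBlocking(false);
            Selector old = Selector.open();
            channel.register(old, SelectionKey.OP_ACCEPT, "attachment");
            Selector fresh = rebuild(old);
            try {
                return fresh.keys().size();
            } finally {
                fresh.close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("keys on rebuilt selector: " + demo());
    }
}
```

Keeping the counting separate from the rebuild makes the heuristic easy to reason about: only a select that returns no keys before its timeout has elapsed counts as suspicious.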

private void processSelectedKeys() {

if (selectedKeys != null) {

processSelectedKeysOptimized();

} else {

processSelectedKeysPlain(selector.selectedKeys());

}

}

private void processSelectedKeysOptimized() {

// iterate over selectedKeys

for (int i = 0; i < selectedKeys.size; ++i) {

final SelectionKey k = selectedKeys.keys[i];

selectedKeys.keys[i] = null;

// get the attachment of the SelectionKey, which here is the NioServerSocketChannel

final Object a = k.attachment();

if (a instanceof AbstractNioChannel) {

// handle the event

processSelectedKey(k, (AbstractNioChannel) a);

} else {

@SuppressWarnings("unchecked")

NioTask task = (NioTask) a;

processSelectedKey(k, task);

}

if (needsToSelectAgain) {

selectedKeys.reset(i + 1);

selectAgain();

i = -1;

}

}

}

private void processSelectedKey(SelectionKey k, AbstractNioChannel ch) {

final AbstractNioChannel.NioUnsafe unsafe = ch.unsafe();

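// Validity checks: if the key is invalid and the channel does not belong to this event loop, return without closing it, since the channel may already have been deregistered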

if (!k.isValid()) {

final EventLoop eventLoop;

try {

eventLoop = ch.eventLoop();

} catch (Throwable ignored) {

return;

}

if (eventLoop != this || eventLoop == null) {

return;

}

unsafe.close(unsafe.voidPromise());

return;

}

try {

int readyOps = k.readyOps();

if ((readyOps & SelectionKey.OP_CONNECT) != 0) {

int ops = k.interestOps();

ops &= ~SelectionKey.OP_CONNECT;

k.interestOps(ops);

unsafe.finishConnect();

}

if ((readyOps & SelectionKey.OP_WRITE) != 0) {

ch.unsafe().forceFlush();

}

// Also check for readOps of 0 to workaround possible JDK bug which may otherwise lead

// to a spin loop

if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {

unsafe.read();

}

} catch (CancelledKeyException ignored) {

unsafe.close(unsafe.voidPromise());

}

}

A client is connecting, so this is an accept event. Focus on this if:

if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {

unsafe.read();

}
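To see which readyOps values pass this check, the condition can be pulled into a small helper and tried against the java.nio.channels.SelectionKey constants. The class and method names are made up for the illustration.

```java
import java.nio.channels.SelectionKey;

// Hypothetical helper that isolates the condition quoted above.
final class ReadyOpsSketch {
    /** True when the key is readable or acceptable, or reports no ready ops at all. */
    static boolean shouldRead(int readyOps) {
        return (readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0;
    }

    public static void main(String[] args) {
        System.out.println("OP_ACCEPT -> " + shouldRead(SelectionKey.OP_ACCEPT));
        System.out.println("OP_READ   -> " + shouldRead(SelectionKey.OP_READ));
        System.out.println("OP_WRITE  -> " + shouldRead(SelectionKey.OP_WRITE));
        System.out.println("0         -> " + shouldRead(0));
    }
}
```

Because the ops are bit flags, a single mask test covers both the boss thread's accept events and the worker threads' read events, which is why both end up in unsafe.read().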

The accept event is handled by Netty's own unsafe. This is the server channel's unsafe, so the call goes to the NioMessageUnsafe subclass:

public void read() {

assert eventLoop().inEventLoop();

final ChannelConfig config = config();

final ChannelPipeline pipeline = pipeline();

// receive buffer allocator handle

final RecvByteBufAllocator.Handle allocHandle = unsafe().recvBufAllocHandle();

allocHandle.reset(config);

boolean closed = false;

Throwable exception = null;

try {

try {

do {

// read the data; this is done repeatedly in a loop

int localRead = doReadMessages(readBuf);

if (localRead == 0) {

break;

}

if (localRead < 0) {

closed = true;

break;

}

allocHandle.incMessagesRead(localRead);

} while (allocHandle.continueReading());

} catch (Throwable t) {

exception = t;

}

int size = readBuf.size();

for (int i = 0; i < size; i ++) {

readPending = false;

// for each client connection, call channelRead on the server-side handler

pipeline.fireChannelRead(readBuf.get(i));

}

readBuf.clear();

allocHandle.readComplete();

// call channelReadComplete on the server-side handler

pipeline.fireChannelReadComplete();

...

} finally {

if (!readPending && !config.isAutoRead()) {

removeReadOp();

}

}

}

Next, look at the method that reads the data, doReadMessages(readBuf):

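protected int doReadMessages(List<Object> buf) throws Exception {

...

}

After the data is read, execution reaches pipeline.fireChannelRead(readBuf.get(i)), which triggers the channelRead method of the server-side handler. Readers of the previous article will know that this handler is ServerBootstrapAcceptor, so we go straight to ServerBootstrapAcceptor's channelRead. Its main job is to register the client's SocketChannel with the selector of one of the eventLoops in workerGroup: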

public void channelRead(ChannelHandlerContext ctx, Object msg) {

final Channel child = (Channel) msg;

// add the handler to the channel's pipeline; childHandler is the handler the user wrote to process read events

child.pipeline().addLast(childHandler);

// apply the channel options

setChannelOptions(child, childOptions, logger);

for (Entry<AttributeKey<?>, Object> e: childAttrs) {

child.attr((AttributeKey<Object>) e.getKey()).set(e.getValue());

}

...

}

Registering the channel leads to MultithreadEventLoopGroup#register:

public ChannelFuture register(Channel channel) {

return next().register(channel);

}

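The next method picks an eventLoop in round-robin fashion to handle the registration. The registration logic itself runs when that eventLoop processes its task queue, and once registration completes, the handler lifecycle methods are invoked. The process is the same as registering the server-side NioServerSocketChannel, which the previous article on startup covered in detail, so it is not repeated here.

After registration, each eventLoop in workerGroup starts from its run method and loops over I/O and non-I/O tasks, which here means client read/write events and channel registration tasks.

Where a worker thread differs from the server's dispatching thread is in how it reads data. We arrive once more at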
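Round-robin selection of this kind can be sketched in a few lines. This is a generic illustration under the assumption of a fixed list of loops; the class name is hypothetical and the index arithmetic is not claimed to match Netty's own chooser.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin chooser; not Netty's implementation.
final class RoundRobinChooser<T> {
    private final List<T> items;
    private final AtomicInteger index = new AtomicInteger();

    RoundRobinChooser(List<T> items) {
        if (items.isEmpty()) {
            throw new IllegalArgumentException("items must not be empty");
        }
        this.items = new ArrayList<>(items);
    }

    /** Returns the next item in turn; floorMod keeps the index valid after int overflow. */
    T next() {
        return items.get(Math.floorMod(index.getAndIncrement(), items.size()));
    }

    public static void main(String[] args) {
        RoundRobinChooser<String> chooser =
                new RoundRobinChooser<>(Arrays.asList("loop-0", "loop-1", "loop-2"));
        for (int i = 0; i < 4; i++) {
            System.out.println(chooser.next());
        }
    }
}
```

The atomic counter lets several threads call next() concurrently while still spreading channels evenly across the loops.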

if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {

unsafe.read();

}

A worker thread that gets here enters NioByteUnsafe#read. The logic is much like the dispatching thread's read, except that it now reads bytes:

public final void read() {

// omitted...

try {

do {

byteBuf = allocHandle.allocate(allocator);

// read the data into the byte buffer byteBuf

allocHandle.lastBytesRead(doReadBytes(byteBuf));

if (allocHandle.lastBytesRead() <= 0) {

byteBuf.release();

byteBuf = null;

close = allocHandle.lastBytesRead() < 0;

if (close) {

readPending = false;

}

break;

}

allocHandle.incMessagesRead(1);

readPending = false;

pipeline.fireChannelRead(byteBuf);

byteBuf = null;

} while (allocHandle.continueReading());

allocHandle.readComplete();

pipeline.fireChannelReadComplete();

if (close) {

closeOnRead(pipeline);

}

} catch (Throwable t) {

handleReadException(pipeline, byteBuf, t, close, allocHandle);

} finally {

if (!readPending && !config.isAutoRead()) {

removeReadOp();

}

}

}

In the end, fireChannelRead is again what invokes the handler's channelRead method.

One thing remains: how each eventLoop runs the tasks in its queue:

protected boolean runAllTasks(long timeoutNanos) {

fetchFromScheduledTaskQueue();

Runnable task = pollTask();

if (task == null) {

afterRunningAllTasks();

return false;

}

// compute the deadline

final long deadline = ScheduledFutureTask.nanoTime() + timeoutNanos;

long runTasks = 0;

long lastExecutionTime;

for (;;) {

// run the queued task

safeExecute(task);

runTasks ++;

// check the deadline once every 64 tasks

if ((runTasks & 0x3F) == 0) {

lastExecutionTime = ScheduledFutureTask.nanoTime();

if (lastExecutionTime >= deadline) {

// if the time budget is used up, leave the method; the remaining tasks wait for the next iteration

break;

}

}

task = pollTask();

if (task == null) {

lastExecutionTime = ScheduledFutureTask.nanoTime();

break;

}

}

afterRunningAllTasks();

this.lastExecutionTime = lastExecutionTime;

return true;

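}

The argument passed to this method is ioTime * (100 - ioRatio) / ioRatio: the time spent on I/O multiplied by the ratio of the non-I/O time share to the I/O time share. The result is the amount of time runAllTasks is allowed to use.

That more or less completes Netty's working flow.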
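The formula ioTime * (100 - ioRatio) / ioRatio is easy to check with a few numbers. The helper below is a hypothetical stand-alone version of that expression; it rejects 100 because run() handles that case separately by calling runAllTasks() with no deadline.

```java
// Hypothetical helper reproducing the time-budget expression from run().
final class TaskBudgetSketch {
    /** Nanoseconds that non-I/O tasks may use, given the time just spent on I/O. */
    static long taskBudgetNanos(long ioTimeNanos, int ioRatio) {
        if (ioRatio <= 0 || ioRatio >= 100) {
            throw new IllegalArgumentException("ioRatio must be between 1 and 99 here");
        }
        return ioTimeNanos * (100 - ioRatio) / ioRatio;
    }

    public static void main(String[] args) {
        long ioTime = 2_000_000L; // assume 2 ms were spent in processSelectedKeys()
        System.out.println("ioRatio=50 -> " + taskBudgetNanos(ioTime, 50) + " ns");
        System.out.println("ioRatio=80 -> " + taskBudgetNanos(ioTime, 80) + " ns");
        System.out.println("ioRatio=20 -> " + taskBudgetNanos(ioTime, 20) + " ns");
    }
}
```

With the default of 50 the task budget equals the I/O time; a higher ioRatio shrinks it and a lower one grows it.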

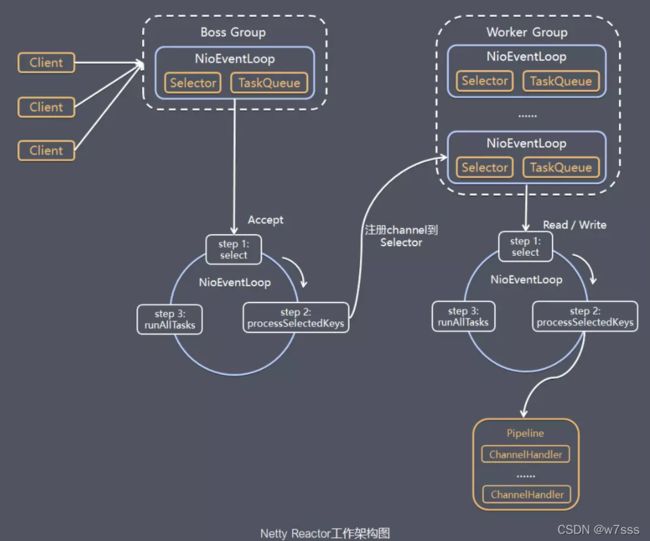

盗个图总结一下:

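The key to Netty is understanding the NioEventLoop working model. It is the thread that does the work in Netty, and because it is a single thread running tasks in a loop, it is called an event loop thread. The diagram above illustrates this well.

There is much more to Netty, such as how the Pipeline works, Netty's own off-heap memory management, zero-copy, the heartbeat handler and so on. Those are topics for another time.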
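The "one thread running tasks in a loop" idea can be reduced to a very small sketch. This is not NioEventLoop: the class is hypothetical, and a timed poll on a queue stands in for selector.select(timeout), so there is no real I/O here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical illustration only; not Netty's NioEventLoop.
final class MiniEventLoop implements Runnable {
    private final BlockingQueue<Runnable> tasks = new LinkedBlockingQueue<>();
    private final Thread thread = new Thread(this, "mini-event-loop");
    private volatile boolean shuttingDown;

    void start() {
        thread.start();
    }

    /** Queues a task; it runs later on the loop thread, in submission order. */
    void execute(Runnable task) {
        tasks.add(task);
    }

    /** Asks the loop to stop once the queue is drained, then waits for it. */
    void shutdownAndJoin() throws InterruptedException {
        shuttingDown = true;
        thread.join();
    }

    @Override
    public void run() {
        while (!shuttingDown || !tasks.isEmpty()) {
            try {
                // The timed poll stands in for selector.select(timeout).
                Runnable task = tasks.poll(10, TimeUnit.MILLISECONDS);
                if (task != null) {
                    task.run();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            } catch (RuntimeException e) {
                // A failing task must not kill the loop.
                System.err.println("task failed: " + e);
            }
        }
    }

    /** Runs three tasks on the loop and returns the order in which they executed. */
    static List<String> demo() {
        List<String> order = new ArrayList<>();
        MiniEventLoop loop = new MiniEventLoop();
        loop.start();
        for (int i = 0; i < 3; i++) {
            String name = "task-" + i;
            loop.execute(() -> order.add(name));
        }
        try {
            loop.shutdownAndJoin();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Because every task runs on the same thread, tasks never race with each other, which is the property that lets handlers bound to one event loop avoid locking.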