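Deploying the Pulsar message queue on Kubernetes

This article only covers how to deploy Pulsar quickly. It does not explain Pulsar usage or its concepts in detail.

For more information about Pulsar, see:

Official documentation: https://pulsar.apache.org/docs/2.10.x/

Introduction to Pulsar: https://blog.csdn.net/Tencent_TEG/article/details/122551505

1. Deploying with Docker

1.1 Deploy Pulsar standalone

Mostly used for development or test environments.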

docker run -it --restart=always \
  --name pulsar-standalone \
  -p 6650:6650 \
  -p 8080:8080 \
  -v /data2/pulsar/data:/pulsar/data \
  -d apachepulsar/pulsar:2.10.1 \
  sh -c "sed -i '/^advertisedAddress=/ s|.*|advertisedAddress=192.168.0.3|' /pulsar/conf/standalone.conf && \
    bin/pulsar standalone > pulsar.log 2>&1 & \
    sleep 80 && bin/pulsar-admin clusters update standalone \
      --url http://pulsar-standalone:8080 \
      --broker-url pulsar://pulsar-standalone:6650 & \
    tail -F pulsar.log"

Note: advertisedAddress must be set to the IP address of the host machine (192.168.0.3 in this example); otherwise an error is reported.
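The fixed `sleep 80` in the command above is a guess at how long the broker takes to start. As a minimal sketch of an alternative, the loop below polls a probe command until it succeeds. `wait_until` is a hypothetical helper name (not part of Pulsar), and using `curl` against `/admin/v2/clusters` as the readiness probe is an assumption; `curl` may not be present in every image.

```shell
#!/usr/bin/env bash
# wait_until <timeout_seconds> <command...>
# Re-runs the command once per second until it succeeds or the timeout expires.
# Returns 0 on success and 1 on timeout. Hypothetical helper for illustration.
wait_until() {
  local timeout="$1"; shift
  local elapsed=0
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 0
}

# Example usage inside the container (assumes curl is available there):
#   wait_until 120 curl -fsS http://localhost:8080/admin/v2/clusters \
#     && bin/pulsar-admin clusters update standalone \
#          --url http://pulsar-standalone:8080 \
#          --broker-url pulsar://pulsar-standalone:6650
```

Polling keeps the start-up short on fast machines and avoids running `clusters update` too early on slow ones.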

The image contains: ZooKeeper, broker, BookKeeper, and proxy (not used).

To restart Pulsar later, rerun the command without the initialization step (the clusters update call); otherwise Pulsar fails to start:

docker run -it --restart=always \
  --name pulsar-standalone \
  -p 6650:6650 \
  -p 8080:8080 \
  -v /data2/pulsar/data:/pulsar/data \
  -d apachepulsar/pulsar:2.10.1 \
  sh -c "sed -i '/^advertisedAddress=/ s|.*|advertisedAddress=192.168.0.3|' /pulsar/conf/standalone.conf && \
    bin/pulsar standalone > pulsar.log 2>&1 & tail -F pulsar.log"

1.2 Deploy pulsar-manager

docker run -it --restart=always \
  --name pulsar-manager \
  -p 9527:9527 -p 7750:7750 \
  -e SPRING_CONFIGURATION_FILE=/pulsar-manager/pulsar-manager/application.properties \
  --link pulsar-standalone \
  --entrypoint="" \
  -v /data2/pulsar-manager/dbdata:/pulsar-manager/pulsar-manager/dbdata \
  -d apachepulsar/pulsar-manager:v0.2.0 \
  sh -c "sed -i '/^default.environment.name/ s|.*|default.environment.name=pulsar-standalone|' /pulsar-manager/pulsar-manager/application.properties; \
    sed -i '/^default.environment.service_url/ s|.*|default.environment.service_url=http://pulsar-standalone:8080|' /pulsar-manager/pulsar-manager/application.properties; \
    /pulsar-manager/entrypoint.sh & \
    tail -F /pulsar-manager/pulsar-manager/pulsar-manager.log"

The image contains pulsar-manager and postgres. It uses the postgres database by default, and the database files are stored under /pulsar-manager/pulsar-manager/dbdata.

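1.3 Initialize the account used to log in to pulsar-manager

# Fetch the CSRF token
CSRF_TOKEN=$(curl http://192.168.0.3:7750/pulsar-manager/csrf-token)

# Set the administrator password
curl \
  -H "X-XSRF-TOKEN: $CSRF_TOKEN" \
  -H "Cookie: XSRF-TOKEN=$CSRF_TOKEN;" \
  -H 'Content-Type: application/json' \
  -X PUT http://192.168.0.3:7750/pulsar-manager/users/superuser \
  -d '{"name": "admin", "password": "pulsar", "description": "test", "email": "username@test.org"}'

1.4 Log in and configure access

Set an environment name and add the Pulsar web service address that pulsar-manager uses to reach Pulsar (port 8080). Port 6650 is the broker's binary protocol port, which clients use to produce and consume messages. pulsar-manager talks to Pulsar through the web port to obtain cluster information; applications can likewise use the web port for lookups and then exchange data over port 6650.

The page after logging in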

2. Deploying single-node Pulsar on a Kubernetes cluster

The Kubernetes environment used here:

Kubernetes version: v1.23.1

Helm version: v3.9.4

2.1 Create the headless Service for Pulsar

apiVersion: v1
kind: Service
metadata:
  name: pulsar-standalone-service
  namespace: pulsar
  labels:
    app: pulsar-standalone-service
spec:
  type: ClusterIP
  clusterIP: None
  ports:
    - port: 6650
      name: bookiesport
      targetPort: 6650
    - port: 8080
      name: webport
      targetPort: 8080
  selector:
    app: pulsar-standalone

2.2 Create the StatefulSet

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: pulsar-standalone
  # The pod hostname becomes pulsar-standalone-depl-0, which the args below rely on
  name: pulsar-standalone-depl
  namespace: pulsar
spec:
  serviceName: pulsar-standalone-service
  replicas: 1
  selector:
    matchLabels:
      app: pulsar-standalone
  template:
    metadata:
      labels:
        app: pulsar-standalone
    spec:
      containers:
        - name: pulsar-standalone
          image: apachepulsar/pulsar:2.10.1
          imagePullPolicy: Always
          command: ["/bin/bash"]
          # Pulsar runs in the background (&) so that the cluster update and tail can run afterwards
          args: ["-c", "sed -i '/^advertisedAddress=/ s|.*|advertisedAddress=pulsar-standalone-depl-0.pulsar-standalone-service.pulsar.svc.cluster.local|' conf/standalone.conf && bin/pulsar standalone > pulsar.log 2>&1 & sleep 60 && bin/pulsar-admin clusters update standalone --url http://pulsar-standalone-depl-0.pulsar-standalone-service.pulsar.svc.cluster.local:8080 --broker-url pulsar://pulsar-standalone-depl-0.pulsar-standalone-service.pulsar.svc.cluster.local:6650 & tail -F pulsar.log"]
          ports:
            - name: pulsarport
              containerPort: 6650
              protocol: TCP
            - name: webport
              containerPort: 8080
              protocol: TCP
          volumeMounts:
            # Persistent storage for Pulsar data
            - name: pulsar
              mountPath: /pulsar/data
              subPath: pulsardata
  volumeClaimTemplates:
    - metadata:
        name: pulsar
      spec:
        accessModes: [ "ReadWriteMany" ]
        # Change to the StorageClass used in your cluster
        storageClassName: managed-nfs-storage
        resources:
          requests:
            storage: 10Gi

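Note: advertisedAddress in conf/standalone.conf is set to the address of the Pulsar pod, here its stable DNS name. If Pulsar reports an error after a restart, try changing containers.args in the YAML file to the following and apply it again:

args: ["-c", "sed -i '/^advertisedAddress=/ s|.*|advertisedAddress=pulsar-standalone-depl-0.pulsar-standalone-service.pulsar.svc.cluster.local|' conf/standalone.conf && bin/pulsar standalone > pulsar.log 2>&1 & tail -F pulsar.log"]

The hostname follows the pattern <statefulset-name>-<ordinal>.<service-name>.<namespace>.svc.cluster.local. The original deployment used the namespace kube-ops and the StatefulSet name pulsar-standalone-depl; if your namespace or StatefulSet name differs, adjust the hostname accordingly.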
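The hostname passed to advertisedAddress is the stable DNS name that Kubernetes gives a StatefulSet pod behind a headless Service. As a small sketch, the function below assembles that name from its parts so it can be checked against the manifests. `sts_pod_fqdn` is a hypothetical helper, and `cluster.local` is assumed as the cluster domain (the common default, but configurable per cluster).

```shell
#!/usr/bin/env bash
# sts_pod_fqdn <statefulset> <ordinal> <headless-service> <namespace> [cluster-domain]
# Prints the stable DNS name of a StatefulSet pod.
# Hypothetical helper; the cluster domain defaults to cluster.local (assumption).
sts_pod_fqdn() {
  local sts="$1" ordinal="$2" svc="$3" ns="$4" domain="${5:-cluster.local}"
  printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$ordinal" "$svc" "$ns" "$domain"
}

# Name used in the args above:
sts_pod_fqdn pulsar-standalone-depl 0 pulsar-standalone-service pulsar
```

If the StatefulSet name, Service name, or namespace in your manifests differs, the same call with your values gives the hostname to use in the args.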

2.3 Create the Service for pulsar-manager

apiVersion: v1
kind: Service
metadata:
  name: pulsar-mng-svc
  namespace: pulsar
  labels:
    app: pulsar-mng-svc
spec:
  type: NodePort
  ports:
    - port: 9527
      name: pulsarmng
      targetPort: 9527
      nodePort: 30527
    - port: 7750
      name: bookkeeper
      targetPort: 7750
      nodePort: 30750
  selector:
    app: pulsar-manager

2.4 Create the PVC for pulsar-manager (persists the postgres data directory)

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pulsar-manager-pvc
  namespace: pulsar
spec:
  # Change to the StorageClass used in your cluster
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi

2.6 Deployment manifest

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: pulsar-manager
  name: pulsar-manager-depl
  namespace: pulsar
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pulsar-manager
  template:
    metadata:
      labels:
        app: pulsar-manager
    spec:
      containers:
        - name: pulsar-manager
          image: apachepulsar/pulsar-manager:v0.2.0
          imagePullPolicy: Always
          command: ["/bin/sh"]
          # service_url must point at the Pulsar pod's DNS name in the namespace where Pulsar runs (pulsar here)
          args: ["-c", "sed -i '/^default.environment.name/ s|.*|default.environment.name=pulsar-standalone|' /pulsar-manager/pulsar-manager/application.properties; sed -i '/^default.environment.service_url/ s|.*|default.environment.service_url=http://pulsar-standalone-depl-0.pulsar-standalone-service.pulsar.svc.cluster.local:8080|' /pulsar-manager/pulsar-manager/application.properties; /pulsar-manager/entrypoint.sh & tail -F /pulsar-manager/pulsar-manager/pulsar-manager.log"]
          ports:
            - name: pulsarmng
              containerPort: 9527
              protocol: TCP
            - name: bookkeeper
              containerPort: 7750
              protocol: TCP
          env:
            - name: SPRING_CONFIGURATION_FILE
              value: "/pulsar-manager/pulsar-manager/application.properties"
          volumeMounts:
            # postgres database storage directory
            - mountPath: /pulsar-manager/pulsar-manager/dbdata
              subPath: dbdata
              name: dbdata
      restartPolicy: Always
      volumes:
        - name: dbdata
          persistentVolumeClaim:
            claimName: pulsar-manager-pvc

2.7 Create the Ingress for accessing pulsar-manager

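apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pulsar-ingress
  # An Ingress must be in the same namespace as its backend Service (pulsar-mng-svc is in pulsar)
  namespace: pulsar
spec:
  rules:
    - host: pulsar-mng.local
      http:
        paths:
          - backend:
              service:
                name: pulsar-mng-svc
                port:
                  number: 9527
            path: /
            pathType: Prefix

After it starts successfully, bind pulsar-mng.local in your local hosts file, open it in a browser, and then configure the environment in pulsar-manager.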

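3. Deploying a Pulsar cluster on a Kubernetes cluster

Deployed with Helm. Reference article: https://mp.weixin.qq.com/s/1o13M5uxEiLqPZa08YyJdQ

3.1 Preparation

3.1.1 Install Helm

Download page: https://github.com/helm/helm/releases (pick the version that matches your environment)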

wget https://get.helm.sh/helm-v3.9.4-linux-amd64.tar.gz
tar xf helm-v3.9.4-linux-amd64.tar.gz
cd linux-amd64
mv helm /usr/bin/
helm version

# Add some commonly used chart repositories first
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo add aliyuncs https://apphub.aliyuncs.com
helm repo add stable http://mirror.azure.cn/kubernetes/charts/

3.1.2 Prepare the images

A Pulsar cluster deployment needs these components: proxy, broker, bookie, zookeeper, recovery, toolset, and pulsar-manager.

The official container image for proxy, broker, bookie, zookeeper, recovery, and toolset is apachepulsar/pulsar-all. The official image for pulsar-manager is apachepulsar/pulsar-manager.

This article uses the official Pulsar Helm chart: https://github.com/apache/pulsar-helm-chart/releases

git clone -b pulsar-2.9.4 --depth 1 https://github.com/apache/pulsar-helm-chart.git
cd pulsar-helm-chart

The pulsar-helm-chart version is 2.9.4. In this version Pulsar is 2.10.2 and pulsar-manager is v0.3.0.

Note: pulsar-manager v0.3.0 kept failing during deployment and the errors could not be resolved, so v0.2.0 is used instead.

Main images:

apachepulsar/pulsar-all:2.10.2

apachepulsar/pulsar-manager:v0.2.0

To make deployment easier, pull the images first and push them to the internal Harbor registry:

docker pull apachepulsar/pulsar-all:2.10.2
docker tag apachepulsar/pulsar-all:2.10.2 harbor.local.gtland/ops/pulsar-all:2.10.2
docker push harbor.local.gtland/ops/pulsar-all:2.10.2

docker pull apachepulsar/pulsar-manager:v0.2.0
docker tag apachepulsar/pulsar-manager:v0.2.0 harbor.local.gtland/ops/pulsar-manager:v0.2.0
docker push harbor.local.gtland/ops/pulsar-manager:v0.2.0

3.1.3 Create the Secrets required for JWT authentication

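The Pulsar cluster is deployed with JWT authentication enabled for security. JWT supports two kinds of keys for generating and validating tokens:

Symmetric key:

A single secret key is used to both generate and validate tokens

Asymmetric key: a key pair consisting of a private key and a public key

The private key is used to generate tokens

The public key is used to validate tokens

The asymmetric approach is recommended: generate the key pair first, then use the private key to generate tokens. Because Pulsar is deployed in a Kubernetes cluster, the best place to store these keys and tokens is in Kubernetes Secrets.

pulsar-helm-chart provides a dedicated script, prepare_helm_release.sh, that can generate these Secrets.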

cd pulsar-helm-chart
./scripts/pulsar/prepare_helm_release.sh \
  -n pulsar \
  -k pulsar \
  -l

-n: the namespace to use

-k: the name of the Helm release used for the deployment

-l: print the generated content to the output

Output:

apiVersion: v1
data:
  PRIVATEKEY: <...>
  PUBLICKEY: <...>
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-asymmetric-key
  namespace: pulsar
#generate the tokens for the super-users: proxy-admin,broker-admin,admin
#generate the token for proxy-admin
---
#pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-proxy-admin
  namespace: pulsar
#generate the token for broker-admin
---
#pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-broker-admin
  namespace: pulsar
#generate the token for admin
---
#pulsar-token-asymmetric-key
apiVersion: v1
data:
  TOKEN: <...>
  TYPE: YXN5bW1ldHJpYw==
kind: Secret
metadata:
  creationTimestamp: null
  name: pulsar-token-admin
  namespace: pulsar
#-------------------------------------
#
#The jwt token secret keys are generated under:
# - 'pulsar-token-asymmetric-key'
#
#The jwt tokens for superusers are generated and stored as below:
# - 'proxy-admin':secret('pulsar-token-proxy-admin')
# - 'broker-admin':secret('pulsar-token-broker-admin')
# - 'admin':secret('pulsar-token-admin')

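Note: remove the unneeded comment lines from the output, save the rest to a YAML file, and apply it to create the corresponding Secrets.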
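Removing the comment lines can be scripted. The sketch below is one way to do it, assuming the only unwanted lines are the ones starting with `#` as in the output shown above. `strip_comment_lines` is a hypothetical helper name, and piping straight into `kubectl apply` is an assumption about your workflow.

```shell
#!/usr/bin/env bash
# strip_comment_lines: reads text on stdin and drops every line whose first
# non-blank character is '#'. YAML document separators (---) are kept.
# Hypothetical helper for illustration.
strip_comment_lines() {
  sed '/^[[:space:]]*#/d'
}

# Example usage (run from the pulsar-helm-chart directory):
#   ./scripts/pulsar/prepare_helm_release.sh -n pulsar -k pulsar -l \
#     | strip_comment_lines > pulsar-secrets.yaml
#   kubectl apply -f pulsar-secrets.yaml
```

Writing to a file first makes it easy to review the Secrets before applying them.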

The pulsar-token-asymmetric-key Secret holds the private key and public key used to generate and validate tokens

The pulsar-token-proxy-admin Secret holds the superuser role token for the proxy

The pulsar-token-broker-admin Secret holds the superuser role token for the broker

The pulsar-token-admin Secret holds the superuser role token for admin clients
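To confirm which role a generated token carries, the payload segment of the JWT can be decoded locally. This is a minimal sketch: `jwt_payload` is a hypothetical helper, it does not verify the signature, and it assumes the role is stored in the `sub` claim (Pulsar's default token claim) and that the Secret key is named `TOKEN` as in the output above.

```shell
#!/usr/bin/env bash
# jwt_payload <token>
# Prints the decoded JSON payload (second segment) of a JWT.
# Only decodes; it does NOT verify the signature. Hypothetical helper.
jwt_payload() {
  local seg pad
  # Take the middle segment and map base64url characters to standard base64
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # Restore the '=' padding that base64url omits
  pad=$(( (4 - ${#seg} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    seg="${seg}="
    pad=$((pad - 1))
  done
  printf '%s' "$seg" | base64 -d
}

# Example usage (assumes kubectl access to the pulsar namespace):
#   token="$(kubectl -n pulsar get secret pulsar-token-admin \
#              -o jsonpath='{.data.TOKEN}' | base64 -d)"
#   jwt_payload "$token"
```

For the admin token the payload is expected to name the admin role, but check the actual output rather than relying on that.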

3.1.4 Modify values.yaml in the Pulsar Helm chart

Download the Pulsar Helm chart package:

wget https://github.com/apache/pulsar-helm-chart/releases/download/pulsar-2.9.4/pulsar-2.9.4.tgz
tar xf pulsar-2.9.4.tgz
cd pulsar
cp values.yaml values.yaml_bak

Edit values.yaml:

# Change 1
namespace: "pulsar"
# Set to false if the namespace already exists, true otherwise
namespaceCreate: false

# Change 2: use persistent storage
persistence: true
## Volume settings
volumes:
  persistence: true
  # configure the components to use local persistent volume
  # the local provisioner should be installed prior to enable local persistent volume
  local_storage: false

# Change 3
components:
  # zookeeper
  zookeeper: true
  # bookkeeper
  bookkeeper: true
  # bookkeeper - autorecovery
  autorecovery: true
  # broker
  broker: true
  # functions
  functions: true
  # proxy
  proxy: true
  # toolset
  toolset: true
  # pulsar manager: deployed separately because it uses our own postgres
  pulsar_manager: false

# Change 4
monitoring:
  # monitoring - prometheus
  prometheus: false
  # monitoring - grafana
  grafana: false
  # monitoring - node_exporter
  node_exporter: false
  # alerting - alert-manager
  alert_manager: false

# Change 5
images:
  zookeeper:
    # Use the address of the registry the images were pushed to
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent
  bookie:
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent
  autorecovery:
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent
  broker:
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent
  proxy:
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent
  functions:
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2

# Change 6
# Enable or disable broker authentication and authorization.
auth:
  authentication:
    # Change to true
    enabled: true
    provider: "jwt"
    jwt:
      # Enable JWT authentication
      # If the token is generated by a secret key, set the usingSecretKey as true.
      # If the token is generated by a private key, set the usingSecretKey as false.
      usingSecretKey: false
  authorization:
    # Change to true
    enabled: true

zookeeper:
  # ...
  volumes:
    # use a persistent volume or emptyDir
    # Use persistent storage
    persistence: true
    data:
      name: data
      size: 20Gi
      local_storage: true
      # Change to the StorageClass used in your cluster (it must already exist)
      storageClassName: "managed-nfs-storage"

bookkeeper:
  # ...
  volumes:
    # use a persistent volume or emptyDir
    persistence: true
    journal:
      name: journal
      size: 10Gi
      local_storage: true
      storageClassName: managed-nfs-storage
      useMultiVolumes: false
      multiVolumes:
        - name: journal0
          size: 10Gi
          storageClassName: managed-nfs-storage
          mountPath: /pulsar/data/bookkeeper/journal0
        - name: journal1
          size: 10Gi
          storageClassName: managed-nfs-storage
          mountPath: /pulsar/data/bookkeeper/journal1
    ledgers:
      name: ledgers
      size: 50Gi
      storageClassName: managed-nfs-storage
      useMultiVolumes: false
      multiVolumes:
        - name: ledgers0
          size: 10Gi
          storageClassName: managed-nfs-storage
          mountPath: /pulsar/data/bookkeeper/ledgers0
        - name: ledgers1
          size: 10Gi
          storageClassName: managed-nfs-storage
          mountPath: /pulsar/data/bookkeeper/ledgers1
    ## use a single common volume for both journal and ledgers
    useSingleCommonVolume: false
    common:
      name: common
      size: 60Gi
      local_storage: true
      storageClassName: "managed-nfs-storage"

# Change 7
pulsar_metadata:
  component: pulsar-init
  image:
    # the image used for running `pulsar-cluster-initialize` job
    repository: harbor.yfyun.gtland/ops/pulsar-all
    tag: 2.10.2
    pullPolicy: IfNotPresent

# Change 8 (these keys sit under the proxy: section of values.yaml)
## Proxy service
## templates/proxy-service.yaml
##
proxy:
  # ...
  ports:
    http: 80
    https: 443
    pulsar: 6650
    pulsarssl: 6651
  service:
    annotations: {}
    # Originally LoadBalancer; changed to NodePort
    type: NodePort

3.2 Deploy Pulsar with Helm

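helm install \
  --values ./pulsar/values.yaml \
  --set initialize=true \
  --namespace pulsar \
  pulsar pulsar-2.9.4.tgz

After the command succeeds, the pods can be listed in the pulsar namespace.

Note: once the release is finished, the pods pulsar-bookie-init-9r6ss and pulsar-pulsar-init-74pzl are in the Completed state.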
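Checking pod states by eye gets tedious while the cluster is starting. The sketch below filters the output of `kubectl get pods --no-headers` and prints only the pods that are neither Running nor Completed. `pods_not_ready` is a hypothetical helper; it assumes the default column layout (NAME, READY, STATUS, ...) and looks only at the STATUS column, not at the READY count.

```shell
#!/usr/bin/env bash
# pods_not_ready: reads `kubectl get pods --no-headers` output on stdin and
# prints the names of pods whose STATUS column (3rd field) is neither
# Running nor Completed. Hypothetical helper for illustration.
pods_not_ready() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Example usage (assumes kubectl access to the pulsar namespace):
#   kubectl -n pulsar get pods --no-headers | pods_not_ready
```

An empty result means every pod has reached Running or Completed; init jobs such as pulsar-bookie-init should end up Completed.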

If values.yaml is changed later, apply the changes with:

helm upgrade pulsar pulsar-2.9.4.tgz \
  --namespace pulsar \
  -f ./pulsar/values.yaml

3.2.1 Verify that tenants, namespaces, and topics can be created

k8sadm@m10166:~/pulsar-proxy$ kubectl -n pulsar exec -it pulsar-toolset-0 -- bash
# Create a tenant named test-tenant
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin tenants create test-tenant
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin tenants list
public
pulsar
test-tenant
# Create a namespace named test-ns
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin namespaces create test-tenant/test-ns
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin namespaces list test-tenant
test-tenant/test-ns
# Create a partitioned topic with 3 partitions
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin topics create-partitioned-topic test-tenant/test-ns/test-topic -p 3
I have no name!@pulsar-toolset-0:/pulsar$ bin/pulsar-admin topics list-partitioned-topics test-tenant/test-ns
persistent://test-tenant/test-ns/test-topic

Creation works as expected.
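Beyond creating the topic, a quick send and receive confirms that messages actually flow. The sketch below only builds the full topic name; the `bin/pulsar-client` calls are shown as commented usage to run inside the toolset pod. `topic_name` is a hypothetical helper, and the subscription name `test-sub` is made up for this example.

```shell
#!/usr/bin/env bash
# topic_name <tenant> <namespace> <topic> [domain]
# Prints the fully qualified Pulsar topic name. The domain defaults to
# "persistent". Hypothetical helper for illustration.
topic_name() {
  local tenant="$1" ns="$2" topic="$3" domain="${4:-persistent}"
  printf '%s://%s/%s/%s\n' "$domain" "$tenant" "$ns" "$topic"
}

# Example usage inside pulsar-toolset-0 (start the consumer first, in a
# second terminal, so that it is subscribed before the message is sent):
#   bin/pulsar-client consume "$(topic_name test-tenant test-ns test-topic)" -s test-sub -n 1
#   bin/pulsar-client produce "$(topic_name test-tenant test-ns test-topic)" -m "hello" -n 1
```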

3.3 Deploy postgres

3.3.1 Create the Service for postgres

apiVersion: v1
kind: Service
metadata:
  name: postgres-service
  namespace: pulsar
  labels:
    app: postgres
spec:
  selector:
    app: postgres
  ports:
    - name: pgport
      port: 5432
  clusterIP: None

3.3.2 StatefulSet for postgres

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgres
  namespace: pulsar
spec:
  selector:
    matchLabels:
      app: postgres
  serviceName: "postgres-service"
  template:
    metadata:
      labels:
        app: postgres
    spec:
      terminationGracePeriodSeconds: 10
      nodeSelector:
        public: ops
      containers:
        - name: postgres
          image: postgres
          imagePullPolicy: IfNotPresent
          env:
            # Password for the postgres user
            - name: POSTGRES_PASSWORD
              value: "pulsar"
            - name: ALLOW_IP_RANGE
              value: "0.0.0.0/0"
          ports:
            - name: pgport
              protocol: TCP
              containerPort: 5432
          #resources:
          #  limits:
          #    cpu: 8000m
          #    memory: 8Gi
          #  requests:
          #    cpu: 1000m
          #    memory: 1Gi
          volumeMounts:
            - name: postgresdata
              mountPath: /var/lib/postgresql
      # fsGroup is a pod-level setting, so securityContext sits at the pod spec level
      securityContext:
        runAsUser: 999
        fsGroup: 999
  volumeClaimTemplates:
    - metadata:
        name: postgresdata
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 50Gi

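After applying, check that the deployment succeeded.

Enter the pod to run the SQL:

kubectl -n pulsar exec -it postgres-0 -- bash

Run the following SQL:

CREATE DATABASE pulsar_manager;

-- Connect to the new database; if prompted, the password is also pulsar
\c pulsar_manager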

CREATE TABLE IF NOT EXISTS environments (

name varchar(256) NOT NULL,

broker varchar(1024) NOT NULL,

CONSTRAINT PK_name PRIMARY KEY (name),

UNIQUE (broker)

);

CREATE TABLE IF NOT EXISTS topics_stats (

topic_stats_id BIGSERIAL PRIMARY KEY,

environment varchar(255) NOT NULL,

cluster varchar(255) NOT NULL,

broker varchar(255) NOT NULL,

tenant varchar(255) NOT NULL,

namespace varchar(255) NOT NULL,

bundle varchar(255) NOT NULL,

persistent varchar(36) NOT NULL,

topic varchar(255) NOT NULL,

producer_count BIGINT,

subscription_count BIGINT,

msg_rate_in double precision ,

msg_throughput_in double precision ,

msg_rate_out double precision ,

msg_throughput_out double precision ,

average_msg_size double precision ,

storage_size double precision ,

time_stamp BIGINT

);

CREATE TABLE IF NOT EXISTS publishers_stats (

publisher_stats_id BIGSERIAL PRIMARY KEY,

producer_id BIGINT,

topic_stats_id BIGINT NOT NULL,

producer_name varchar(255) NOT NULL,

msg_rate_in double precision ,

msg_throughput_in double precision ,

average_msg_size double precision ,

address varchar(255),

connected_since varchar(128),

client_version varchar(36),

metadata text,

time_stamp BIGINT,

CONSTRAINT fk_publishers_stats_topic_stats_id FOREIGN KEY (topic_stats_id) References topics_stats(topic_stats_id)

);

CREATE TABLE IF NOT EXISTS replications_stats (

replication_stats_id BIGSERIAL PRIMARY KEY,

topic_stats_id BIGINT NOT NULL,

cluster varchar(255) NOT NULL,

connected BOOLEAN,

msg_rate_in double precision ,

msg_rate_out double precision ,

msg_rate_expired double precision ,

msg_throughput_in double precision ,

msg_throughput_out double precision ,

msg_rate_redeliver double precision ,

replication_backlog BIGINT,

replication_delay_in_seconds BIGINT,

inbound_connection varchar(255),

inbound_connected_since varchar(255),

outbound_connection varchar(255),

outbound_connected_since varchar(255),

time_stamp BIGINT,

CONSTRAINT FK_replications_stats_topic_stats_id FOREIGN KEY (topic_stats_id) References topics_stats(topic_stats_id)

);

CREATE TABLE IF NOT EXISTS subscriptions_stats (

subscription_stats_id BIGSERIAL PRIMARY KEY,

topic_stats_id BIGINT NOT NULL,

subscription varchar(255) NULL,

msg_backlog BIGINT,

msg_rate_expired double precision ,

msg_rate_out double precision ,

msg_throughput_out double precision ,

msg_rate_redeliver double precision ,

number_of_entries_since_first_not_acked_message BIGINT,

total_non_contiguous_deleted_messages_range BIGINT,

subscription_type varchar(16),

blocked_subscription_on_unacked_msgs BOOLEAN,

time_stamp BIGINT,

UNIQUE (topic_stats_id, subscription),

CONSTRAINT FK_subscriptions_stats_topic_stats_id FOREIGN KEY (topic_stats_id) References topics_stats(topic_stats_id)

);

CREATE TABLE IF NOT EXISTS consumers_stats (

consumer_stats_id BIGSERIAL PRIMARY KEY,

consumer varchar(255) NOT NULL,

topic_stats_id BIGINT NOT NULL,

replication_stats_id BIGINT,

subscription_stats_id BIGINT,

address varchar(255),

available_permits BIGINT,

connected_since varchar(255),

msg_rate_out double precision ,

msg_throughput_out double precision ,

msg_rate_redeliver double precision ,

client_version varchar(36),

time_stamp BIGINT,

metadata text

);

CREATE TABLE IF NOT EXISTS tokens (

token_id BIGSERIAL PRIMARY KEY,

role varchar(256) NOT NULL,

description varchar(128),

token varchar(1024) NOT NULL,

UNIQUE (role)

);

CREATE TABLE IF NOT EXISTS users (

user_id BIGSERIAL PRIMARY KEY,

access_token varchar(256),

name varchar(256) NOT NULL,

description varchar(128),

email varchar(256),

phone_number varchar(48),

location varchar(256),

company varchar(256),

expire BIGINT,

password varchar(256),

UNIQUE (name)

);

CREATE TABLE IF NOT EXISTS roles (

role_id BIGSERIAL PRIMARY KEY,

role_name varchar(256) NOT NULL,

role_source varchar(256) NOT NULL,

description varchar(128),

resource_id BIGINT NOT NULL,

resource_type varchar(48) NOT NULL,

resource_name varchar(48) NOT NULL,

resource_verbs varchar(256) NOT NULL,

flag INT NOT NULL

);

CREATE TABLE IF NOT EXISTS tenants (

tenant_id BIGSERIAL PRIMARY KEY,

tenant varchar(255) NOT NULL,

admin_roles varchar(255),

allowed_clusters varchar(255),

environment_name varchar(255),

UNIQUE(tenant)

);

CREATE TABLE IF NOT EXISTS namespaces (

namespace_id BIGSERIAL PRIMARY KEY,

tenant varchar(255) NOT NULL,

namespace varchar(255) NOT NULL,

UNIQUE(tenant, namespace)

);

CREATE TABLE IF NOT EXISTS role_binding(

role_binding_id BIGSERIAL PRIMARY KEY,

name varchar(256) NOT NULL,

description varchar(256),

role_id BIGINT NOT NULL,

user_id BIGINT NOT NULL

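);

Note: to log in to postgres inside the pod, first switch to the postgres user (do this before running the SQL above):

su postgres
psql -U postgres -W

Enter the password: pulsar

Before the database is created

After running the SQL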

4. Deploy pulsar-manager

4.1 Create the PVC for pulsar-manager persistence

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pulsar-manager2-pvc
  namespace: pulsar
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi

4.2 Create the Service

apiVersion: v1
kind: Service
metadata:
  name: pulsar-manager
  namespace: pulsar
  labels:
    app: pulsar-manager
  annotations:
    pulsar-manager.io/scrape: 'true'
spec:
  selector:
    app: pulsar-manager
  ports:
    - name: sport
      port: 7750
      protocol: TCP
      targetPort: 7750
    - name: nport
      port: 9527
      protocol: TCP
      targetPort: 9527
      nodePort: 32527
  type: NodePort

4.3 Deployment manifest

apiVersion: apps/v1
kind: Deployment
metadata:
  name: pulsar-manager
  namespace: pulsar
  labels:
    app: pulsar-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pulsar-manager
  template:
    metadata:
      name: pulsar-manager
      labels:
        app: pulsar-manager
    spec:
      containers:
        - name: pulsar-manager
          image: apachepulsar/pulsar-manager:v0.2.0
          imagePullPolicy: IfNotPresent
          ports:
            - name: sport
              containerPort: 7750
            - name: nport
              containerPort: 9527
          env:
            - name: REDIRECT_HOST
              value: "http://127.0.0.1"
            - name: REDIRECT_PORT
              value: "9527"
            - name: DRIVER_CLASS_NAME
              value: "org.postgresql.Driver"
            - name: URL
              value: "jdbc:postgresql://postgres-service.pulsar.svc:5432/pulsar_manager"
            - name: USERNAME
              value: "postgres"
            - name: PASSWORD
              value: "pulsar"
            - name: LOG_LEVEL
              value: "DEBUG"
            # With JWT authentication enabled, set this to the JWT token stored in the pulsar-token-admin Secret
            - name: JWT_TOKEN
              value: ""
          volumeMounts:
            - name: data
              mountPath: /data
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: pulsar-manager2-pvc

The JWT_TOKEN stored in the Secret is base64-encoded. Decode it with base64 -d and write the decoded token into value.
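Values under `data` in a Secret are base64-encoded, which is why the token has to be decoded before it goes into JWT_TOKEN. The sketch below shows the decoding step on its own. `b64_decode` is a hypothetical wrapper, and the Secret key name `TOKEN` is taken from the script output shown earlier in this article.

```shell
#!/usr/bin/env bash
# b64_decode: decodes base64 text read from stdin.
# Thin wrapper around `base64 -d`; hypothetical helper for illustration.
b64_decode() {
  base64 -d
}

# The TYPE field from the generated Secrets decodes to a readable string:
printf 'YXN5bW1ldHJpYw==' | b64_decode
echo

# Example usage for the admin token (assumes kubectl access):
#   kubectl -n pulsar get secret pulsar-token-admin \
#     -o jsonpath='{.data.TOKEN}' | b64_decode
```

The decoded token is a credential, so avoid committing a manifest that contains it to version control.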

执行启动

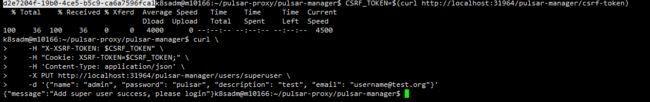

启动后需要添加admin用户

CSRF_TOKEN=$(curl http://localhost:31964/pulsar-manager/csrf-token)

curl \
  -H "X-XSRF-TOKEN: $CSRF_TOKEN" \
  -H "Cookie: XSRF-TOKEN=$CSRF_TOKEN;" \
  -H 'Content-Type: application/json' \
  -X PUT http://localhost:31964/pulsar-manager/users/superuser \
  -d '{"name": "admin", "password": "pulsar", "description": "test", "email": "username@test.org"}'
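Because the CSRF token request and the superuser request must target the same host and port, it can help to take the base URL as a single parameter. The following is a minimal sketch of the same two calls wrapped in functions. `superuser_payload` and `create_superuser` are hypothetical names, and the payload builder does no JSON escaping, so the values must not contain quotes or backslashes.

```shell
#!/usr/bin/env bash
# superuser_payload <name> <password> <description> <email>
# Prints the JSON body for the superuser request. No JSON escaping is done,
# so the arguments must not contain quotes or backslashes.
superuser_payload() {
  printf '{"name": "%s", "password": "%s", "description": "%s", "email": "%s"}' \
    "$1" "$2" "$3" "$4"
}

# create_superuser <base_url>
# Fetches a CSRF token and creates the superuser against the same base URL.
create_superuser() {
  local base_url="$1" token
  token="$(curl -fsS "${base_url}/pulsar-manager/csrf-token")" || return 1
  curl -fsS \
    -H "X-XSRF-TOKEN: ${token}" \
    -H "Cookie: XSRF-TOKEN=${token};" \
    -H 'Content-Type: application/json' \
    -X PUT "${base_url}/pulsar-manager/users/superuser" \
    -d "$(superuser_payload admin pulsar test username@test.org)"
}

# Example usage:
#   create_superuser http://localhost:31964
```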

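The user is created successfully.

Test the login at http://IP:32527

Add an environment

Check that the number of clusters matches

View the tenants, namespaces, and topics; the ones created earlier from the command line are listed

Tenants

Namespaces

Topics

Done!

References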

https://mp.weixin.qq.com/s/1o13M5uxEiLqPZa08YyJdQ

https://blog.csdn.net/u010743471/article/details/127789231

https://blog.csdn.net/hll19950830/article/details/122666693

https://blog.csdn.net/yinjl123456/article/details/120926819