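Android MTK Camera HAL3 Study Notes

MTK Camera: MtkCam3 Architecture Study

Standing on the shoulders of giants, with some additions of my own!

The flow charts are dated and I have not had time to redraw them, but the overall framework is unchanged.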

What each MTK HAL3 component does

1. The interfaces through which MtkCam3 connects to Android: ICameraProvider, ICameraDevice, ICameraDeviceSession, ICameraDeviceCallback

(1) CameraProviderImpl, the implementation of ICameraProvider, is just an adapter wrapped around the Camera Device Manager that adapts different versions of the camera device interface. Camera Service obtains an ICameraDevice through ICameraProvider.

(2) CameraDevice3Impl and CameraDevice3SessionImpl implement ICameraDevice and ICameraDeviceSession. Camera Service uses them to operate each camera, for example: open, close, configureStreams, processCaptureRequest.

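(3) ICameraDeviceCallback is handled mainly in AppStreamMgr and CameraDeviceManager, which deliver data back to the upper layer through callbacks. ICameraDeviceManager manages the logical devices that HAL1/HAL3 expose to the app; each has an instance name of the form device@<version>/internal/<id>, such as the device@3.6/internal/0 entries in the enumeration log further down.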

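2. AppStreamManager sits between the framework and the pipeline. It has three main responsibilities:

(1) Parse the camera request sent down from the upper layer

(2) Register callbacks; data called back from the lower layers is packaged mainly by AppStreamMgr

(3) Convert framework streams into streams the HAL can recognize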

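3. The role of IPipelineModel:

(1) In the open/close stage, power the sensor on/off;

(2) In the config stage, use the surface list passed down by the app's createCaptureSession to infer the outputs, then work through the topology to decide which pipeline nodes need to be created and which I/O buffers each node has, and build the whole PipelineModel;

(3) In the request stage, take the request queued from the upper layer, convert it into the pipeline's unified IPipelineFrame, and decide the request's I/O buffers, topology, sub frames, dummy frames, feature set and so on;

4. HWNodes are the large nodes; third-party algorithms are mounted inside them as small nodes:

(1) P1Node outputs raw images; P2A converts raw images to YUV; P2CaptureNode mainly processes still-capture frames; P2StreamingNode mainly processes recording and preview data; JpegNode takes the main YUV, thumbnail YUV and metadata as input and outputs the JPEG and App metadata.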

5. Android Camera HAL3 standard API

(1)camera_module_t->common.methods->open()

(2)camera3_device_t->ops->initialize()

(3)camera3_device_t->ops->configure_streams()

(4)camera3_device_t->ops->construct_default_request_settings()

(5)camera3_device_t->ops->process_capture_request()

(6)camera3_callback_ops_t->notify()

(7)camera3_callback_ops_t->process_capture_result()

(8)camera3_device_t->ops->flush()

(9)camera3_device_t->common.close()
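The order in which the framework drives these entry points can be pictured as a small state machine. The sketch below is only an illustration: `Hal3CallOrder` and `HalState` are hypothetical names that do not exist in `camera3.h`, and the transitions encode the listing order above plus the assumption that `configure_streams()` may be repeated to reconfigure a session.

```cpp
#include <cassert>

// Hypothetical lifecycle states for illustration; not defined by camera3.h.
enum class HalState { Closed, Opened, Initialized, Configured, Streaming };

// Tracks which HAL3 entry points are legal in the current state.
class Hal3CallOrder {
public:
    bool open()       { return advance(HalState::Closed, HalState::Opened); }
    bool initialize() { return advance(HalState::Opened, HalState::Initialized); }

    // Assumed to be repeatable: a session may be reconfigured.
    bool configureStreams() {
        if (state_ == HalState::Closed || state_ == HalState::Opened) return false;
        state_ = HalState::Configured;
        return true;
    }

    // Requests are accepted only after streams have been configured.
    bool processCaptureRequest() {
        if (state_ != HalState::Configured && state_ != HalState::Streaming) return false;
        state_ = HalState::Streaming;
        return true;
    }

    // flush() drops in-flight work but keeps the stream configuration.
    bool flush() {
        if (state_ != HalState::Configured && state_ != HalState::Streaming) return false;
        state_ = HalState::Configured;
        return true;
    }

    bool close() {
        if (state_ == HalState::Closed) return false;
        state_ = HalState::Closed;
        return true;
    }

    HalState state() const { return state_; }

private:
    bool advance(HalState from, HalState to) {
        if (state_ != from) return false;
        state_ = to;
        return true;
    }
    HalState state_ = HalState::Closed;
};

// Usage: the happy path from the list above, plus one out-of-order call.
inline void demoHal3CallOrder() {
    Hal3CallOrder hal;
    assert(!hal.processCaptureRequest());  // rejected: nothing is open yet
    assert(hal.open() && hal.initialize() && hal.configureStreams());
    assert(hal.processCaptureRequest());
    assert(hal.flush() && hal.close());
}
```

notify() and process_capture_result() are callbacks from the HAL to the framework, so they are left out of this caller-side model.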

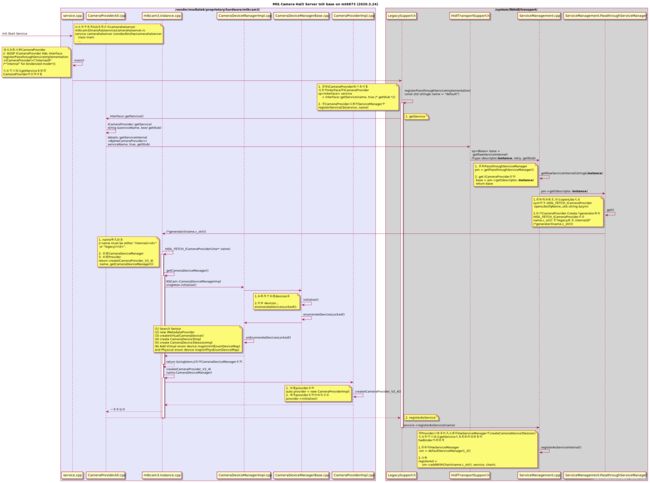

MTK HAL3 Init

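The init process starts camerahalserver from its rc file. camerahalserver registers CameraProvider through registerPassthroughServiceImplementation, which then calls getService, opens the camera provider implementation library, obtains the CameraProvider instance through the HIDL entry point HIDL_FETCH_ICameraProvider, and registers it as a service;

Call enumerateDevicesLocked to enumerate the camera devices (CameraDeviceManagerBase.cpp → CameraDeviceManagerImpl.cpp → imgsensor_drv.cpp → sensorlist.cpp → imgsensor.c → imgsensor_sensor_list.c / imgsensor_hw.c);

After enumerating sensorNum sensors, build mPhysEnumDeviceMap and create an IMetadataProvider for each device id to obtain its static metadata (staticMetadata);

Call addVirtualDevicesLocked to wrap each created CameraDevice3Impl in a VirtEnumDevice and store it in mVirtEnumDeviceMap. A CameraDevice3SessionImpl is constructed at the same time; together the two provide the session interface later used to operate each camera, and they hold the low-level configuration information mStaticInfo;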

I mtkcam-devicemgr: pHalDeviceList:0xb400007cf3be5c60 searchDevices:4 queryNumberOfDevices:4

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [0x00] vid: 0x00 isHidden:0 IMetadataProvider:0xb400007d53be4810 sensor:SENSOR_DRVNAME_HI4821Q_MIPI_RAW

D mtkcam-devicemgr: [operator()] Camera device@3.6/internal/0, hidden: 0

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [0x01] vid: 0x01 isHidden:0 IMetadataProvider:0xb400007d53be1e10 sensor:SENSOR_DRVNAME_HI1336_MIPI_RAW

D mtkcam-devicemgr: [operator()] Camera device@3.6/internal/1, hidden: 0

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [0x02] vid: 0x02 isHidden:0 IMetadataProvider:0xb400007d53be4b90 sensor:SENSOR_DRVNAME_GC02M1B_MIPI_MONO

D mtkcam-devicemgr: [operator()] Camera device@3.6/internal/2, hidden: 0

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [0x03] vid: 0x03 isHidden:0 IMetadataProvider:0xb400007d53be3a10 sensor:SENSOR_DRVNAME_HI4821Q_MIPI_RAW_bayermono

D mtkcam-devicemgr: [operator()] Camera device@3.6/internal/3, hidden: 0

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [addSensorSizeToCompare] Sensor 0, 8032x6032

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [addSensorSizeToCompare] Sensor 1, 4224x3136

D mtkcam-devicemgr: [onEnumerateDevicesLocked] [addSensorSizeToCompare] Sensor 2, 1600x1200

I mtkcam-devicemgr: [logLocked] Physical Devices: # 3

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [00] -> orientation(wanted/setup)=( 90/90 ) BACK hasFlashUnit:1 SENSOR_DRVNAME_HI4821Q_MIPI_RAW [PhysEnumDevice:0xb400007cd3c00650]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [01] -> orientation(wanted/setup)=(270/270) FRONT hasFlashUnit:0 SENSOR_DRVNAME_HI1336_MIPI_RAW [PhysEnumDevice:0xb400007cd3c001d0]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [02] -> orientation(wanted/setup)=(270/90 ) BACK hasFlashUnit:1 SENSOR_DRVNAME_GC02M1B_MIPI_MONO [PhysEnumDevice:0xb400007cd3bff4b0]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] Virtual Devices: # 4

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [device@3.6/internal/0] -> 00 torchModeStatus:AVAILABLE_OFF hasFlashUnit:1 [VirtEnumDevice:0xb400007cb3c0e3d0 IVirtualDevice:0xb400007d03bd7140]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [device@3.6/internal/1] -> 01 torchModeStatus:NOT_AVAILABLE hasFlashUnit:0 [VirtEnumDevice:0xb400007cb3c06450 IVirtualDevice:0xb400007d03bd7400]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [device@3.6/internal/2] -> 02 torchModeStatus:AVAILABLE_OFF hasFlashUnit:1 [VirtEnumDevice:0xb400007cb3c069d0 IVirtualDevice:0xb400007d03bd7ae0]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] [device@3.6/internal/3] -> 03 torchModeStatus:AVAILABLE_OFF hasFlashUnit:1 [VirtEnumDevice:0xb400007cb3c04f50 IVirtualDevice:0xb400007d03bd6e80]

I mtkcam-devicemgr: [logLocked] --

I mtkcam-devicemgr: [logLocked] Open Devices: # 0 (multi-opened maximum: # 1)
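The log above lists three physical devices and four virtual devices. A minimal sketch of the two-map bookkeeping follows. `DeviceRegistry`, `PhysDevice` and `VirtDevice` are hypothetical simplifications of mPhysEnumDeviceMap / mVirtEnumDeviceMap, not MTK types, and letting one virtual device be backed by several sensors is an assumption made for illustration (the log does not say how device 3 maps onto the sensors).

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified stand-ins for PhysEnumDevice / VirtEnumDevice.
struct PhysDevice {
    int sensorId;
    std::string driverName;
};

struct VirtDevice {
    std::string instanceName;      // e.g. "device@3.6/internal/0"
    std::vector<int> physicalIds;  // sensors backing this logical device
};

// Builds the instance-name format seen in the log.
inline std::string makeInstanceName(const std::string& version, int id) {
    return "device@" + version + "/internal/" + std::to_string(id);
}

class DeviceRegistry {
public:
    void addPhysical(int sensorId, const std::string& driverName) {
        assert(sensorId >= 0);
        phys_[sensorId] = PhysDevice{sensorId, driverName};
    }

    // Registers a logical device; fails if any backing sensor is unknown.
    bool addVirtual(int id, const std::string& version,
                    const std::vector<int>& physicalIds) {
        if (physicalIds.empty()) return false;
        for (int pid : physicalIds) {
            if (phys_.count(pid) == 0) return false;
        }
        const std::string name = makeInstanceName(version, id);
        virt_[name] = VirtDevice{name, physicalIds};
        return true;
    }

    // Looks up a logical device by instance name; nullptr when absent.
    const VirtDevice* find(const std::string& instanceName) const {
        auto it = virt_.find(instanceName);
        return it == virt_.end() ? nullptr : &it->second;
    }

    std::size_t physicalCount() const { return phys_.size(); }
    std::size_t virtualCount() const { return virt_.size(); }

private:
    std::map<int, PhysDevice> phys_;
    std::map<std::string, VirtDevice> virt_;
};
```

Keying the virtual map by instance name mirrors how the framework addresses a device, while the physical map stays keyed by sensor index.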

OpenCamera

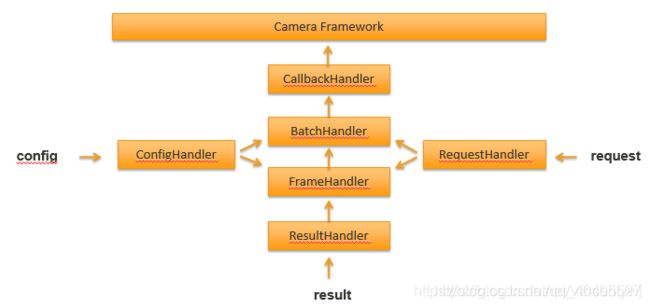

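The open flow runs through CameraDevice3Impl and CameraDevice3SessionImpl and calls onOpenLocked. This creates the IAppStreamMgr object, whose initialization creates the CallbackHandler, BatchHandler, FrameHandler, ResultHandler, RequestHandler and ConfigHandler objects. Each object handles a different type of event, which keeps blocking to a minimum.

AppStreamMgr architecture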

/ ************************************************************************************************************************************** /

Handles AppStreamMgr callbacks

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.CallbackHandler.cpp

Handles AppStreamMgr frame processing

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.FrameHandler.cpp

Handles AppStreamMgr batch processing

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.BatchHandler.cpp

Handles AppStreamMgr request processing

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.RequestHandler.cpp

Handles returning AppStreamMgr results

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.ResultHandler.cpp

Handles AppStreamMgr config processing

alps/vendor/mediatek/proprietary/hardware/mtkcam3/main/hal/device/3.x/app/AppStreamMgr.ConfigHandler.cpp

/ *************************************************************************************************************************************** /

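Build the IPipelineModelManager, obtain the IPipelineModel instance, and call its open() method, which calls mHalDeviceAdapter->open/powerOn. powerOn starts a separate thread to operate the sensor; once the sensor has finished powering up, 3A is initialized and powered on. (3A powerOn seems only to synchronize the 2A settings for AF and AE, because the AE algorithm also needs fFum and fFocusLength.)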

ConfigureStream

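AppStreamMgr.ConfigHandler handles AppStreamMgr config processing and calls beginConfigureStreams to start stream configuration.

checkStreams rules: at most one input stream; at least one output stream, and the formats must not be the same.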
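The counting part of this rule can be sketched as follows. `StreamDesc`, `StreamDir` and `checkStreamCounts` are hypothetical names for illustration, not the MTK implementation. The format condition is left out because its exact meaning is not clear from the text (the log that follows lists several NV21 streams in one accepted configuration).

```cpp
#include <cassert>
#include <vector>

enum class StreamDir { Input, Output };

// Hypothetical, reduced description of one configured stream.
struct StreamDesc {
    int id;
    int width;
    int height;
    StreamDir dir;
    int format;  // e.g. 0x11 (NV21) or 0x2300 (JPEG), as printed in the log
};

// Returns true when the list has at most one input and at least one output.
inline bool checkStreamCounts(const std::vector<StreamDesc>& streams) {
    int inputs = 0;
    int outputs = 0;
    for (const StreamDesc& s : streams) {
        if (s.dir == StreamDir::Input) {
            ++inputs;
        } else {
            ++outputs;
        }
    }
    return inputs <= 1 && outputs >= 1;
}

// The four streams printed by [AppMgr-configureStreams] in the log.
inline std::vector<StreamDesc> loggedConfiguration() {
    return {
        {0x0, 4000, 3000, StreamDir::Input,  0x11},
        {0x1,  960,  720, StreamDir::Output, 0x11},
        {0x2, 4000, 3000, StreamDir::Output, 0x2300},
        {0x3, 4000, 3000, StreamDir::Output, 0x11},
    };
}

// Usage: the logged configuration passes the counting rule.
inline void demoCheckStreams() {
    assert(checkStreamCounts(loggedConfiguration()));
}
```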

D mtkcam-AppStreamMgr: [AppMgr-configureStreams] 0 4000x3000 IN ImgFormat:0x11(NV21) ...

D mtkcam-AppStreamMgr: [AppMgr-configureStreams] 0x1 960x720 OUT ImgFormat:0x11(NV21) ...

D mtkcam-AppStreamMgr: [AppMgr-configureStreams] 0x2 4000x3000 OUT ImgFormat:0x2300(JPEG) ...

D mtkcam-AppStreamMgr: [AppMgr-configureStreams] 0x3 4000x3000 OUT ImgFormat:0x11(NV21) ...

After setting up sessionCfgParams, PipelineModelImpl::configure calls createPipelineModelSession to create the session:

(1) Validate the supplied sessionCfgParams (check for nullptr)

(2) Call convertToUserConfiguration to convert the UserConfiguration into the pParsedAppConfiguration object (std::make_shared returns a std::shared_ptr)

D mtkcam-PipelineModelSession-Factory: [parseAppStreamInfo] ispTuningDataEnable(0) StreamId(0x1)

ConsumerUsage(512) size(960,720) yuv_isp_tuning_data_size(1280,720) raw_isp_tuning_data_size(2560,1920)

D mtkcam-PipelineModelSession-Factory: [parseAppStreamInfo] ispTuningDataEnable(0) StreamId(0x3)

ConsumerUsage(131075) size(4080,3072) yuv_isp_tuning_data_size(1280,720) raw_isp_tuning_data_size(2560,1920)

D mtkcam-PipelineModelSession-Factory: [decidePipelineModelSession] Default

(3) Create the PipelineSettingPolicy; call decidePipelineModelSession to evaluate Type3 PD, remosaic and SMVR and decide which session to create

D mtkcam-PipelineSettingPolicyFactory: [decidePolicyAndMake] Neither SMVRConstraint or SMVRBatch policy used,

pSMVRBatchInfo=0x0

When integrating a third-party super night algorithm, you can see isSuperNightMode in decidePipelineModelSession; opMode can also be used to customize control of third-party algorithms.

PipelineModelSessionFactory.cpp

static auto decidePipelineModelSession(IPipelineModelSessionFactory::CreateParams const& creationParams,

std::shared_ptr<PipelineUserConfiguration>const& pUserConfiguration,

std::shared_ptr<PipelineUserConfiguration2>const& pUserConfiguration2,

std::shared_ptr<IPipelineSettingPolicy>const& pSettingPolicy){

...

auto const& pParsedAppConfiguration = pUserConfiguration->pParsedAppConfiguration;

if(pParsedAppConfiguration->operationMode == 0x0001){ // custom operation mode

// custom handling goes here

}

if (pUserConfiguration->pParsedAppConfiguration->isSuperNightMode){

CAM_ULOGMI("[%s] Super Night Mode using Default Session", __FUNCTION__);

return PipelineModelSessionDefault::makeInstance(

"Default+SuperNightMode/", convertTo_CtorParams(),

PipelineModelSessionDefault::ExtCtorParams{

.pAppRaw16Reprocessor =

makeAppRaw16Reprocessor(

creationParams, pUserConfiguration,

"superNightMode"

),

}

);

}

...

}

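(4) Create the pipeline session, which manages flow control. The session policy decides which session to use; special modes use special sessions. Steps (3) and (4) together determine the pipelineContext (the topology graph that links the hw nodes into one pipeline).

Request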

D mtkcam-dev3: [0-session::processCaptureRequest] -> 1st request - OK

D mtkcam-AppStreamMgr: [0-FrameHandler::registerFrame] zslStillCapture=1, reprocessing=0, requestType=1

I mtkcam-PipelineModelSession: [submitRequest]<Default/0> requestNo:81 {repeating:0 control.aeTargetFpsRange:5,30

control.captureIntent:2 control.enableZsl:1 control.processRawEn:0 control.mode:1

control.sceneMode:0 control.videoStabilizationMode:0 edge.mode:2 }

D mtkcam-PipelineModelSessionBasic: [processEvaluatedFrame] [requestNo:81] multi-frame request: sub:#3 preSub:#0

preDummy:#0 postDummy:#3 needZslFlow:1

D mtkcam-PipelineModelSessionDefault: [onProcessEvaluatedFrame] [requestNo:81] submit Zsl Request

D mtkcam-PipelineModelSessionDefault: [operator()] ZSL frame no : 81

I mtkcam-PipelineModelSessionDefault: [operator()] zsl requestNo:81 new frames: [F81] [F82] [F83] [F84]

I MtkCam/P1NodeImp: [acceptRequest] [Cam::0] Num[F:81,R:81] - BypassFrame

D MtkCam/P1NodeImp: [verifyAct] [Cam::0] send the ZSL request and try to trigger

I MtkCam/P1NodeImp: [releaseAction] [Cam::0 R82 S82 E82 D79 O79 #0] [P1::DEL][Num Q:83 M:0 F:81 R:81 @0][Type:7

I MtkCam/P1NodeImp: [runQueue] [Cam::0 R82 S82 E82 D79 O79 #0] [P1::REQ][Num Q:83 M:0 F:81 R:81 @0][Type:7

I MtkCam/P1NodeImp: [releaseFrame] [Cam::0 R82 S82 E82 D79 O79 #0] [P1::OUT][Num Q:83 M:0 F:81 R:81 @0][Type:7

D MtkCam/ppl_context: [sendFrameToRootNodes] r81 frameNo:81 (X) -> 0x1 success; erase record

D MtkCam/ppl_context: [onDispatchFrame] r81 frameNo:81 0x1 -> 0x14 READY(1/1)

...// In practice there is a frame gap between sendFrameToRootNodes and onDispatchFrame; ZSL mainly bypasses frames

...// [F81] [F82] [F83] [F84] are bypassed, so after the dummy is queued, onDispatchFrame reports frameNo:78

I mtkcam-PipelineModelSession: [submitRequest]<Default/0> requestNo:82 {repeating:0 control.aeTargetFpsRange:5,30

I MtkCam/P1NodeImp: [requireJob] [Cam::0] Roll[0] < (1)

I MtkCam/P1NodeImp: [fetchJob] [Cam::0] using-dummy-request

I MtkCam/P1NodeImp: [createAction] [Cam::0 R82 S82 E82 D80 O79 #0] [P1::REQ][Num Q:87 M:0 F:-1 R:-1 @0][Type:3

I MtkCam/P1NodeImp: [setRequest] [Cam::0 R83 S83 E82 D80 O79 #0] [P1::SET][Num Q:87 M:83 F:-1 R:-1 @0][Type:3

I MtkCam/P1NodeImp: [runQueue] [Cam::0 R83 S83 E82 D80 O79 #1] [P1::REQ][Num Q:88 M:0 F:85 R:82 @0][Type:1

D MtkCam/ppl_context: [sendFrameToRootNodes] r82 frameNo:85 (X) -> 0x1 success; erase record

I MtkCam/P1NodeImp: [releaseAction] [Cam::0 R83 S83 E82 D80 O79 #1][P1::DEQ][Num Q:80 M:80 F:78 R:78 @82][Type:1

I MtkCam/P1NodeImp: [releaseFrame] [Cam::0 R83 S83 E83 D81 O81 #1] [P1::OUT][Num Q:81 M:81 F:79 R:79 @83][Type:1

D MtkCam/ppl_context: [onDispatchFrame] r78 frameNo:78 0x1 -> 0x15 READY(1/1)
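In the log above, request 81 (sub:#3) expands into frames F81 to F84, and request 82 is then sent with frameNo:85. The sketch below reproduces only that numbering. `FrameNumberAllocator` is a hypothetical helper, not an MTK class, and it assumes that dummy frames do not consume frame numbers, which is inferred from the dummy lines carrying F:-1 rather than stated anywhere.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hands out consecutive frame numbers: one request may cover several frames.
class FrameNumberAllocator {
public:
    explicit FrameNumberAllocator(std::uint32_t firstFrameNo) : next_(firstFrameNo) {}

    // Returns the main frame plus subFrameCount sub frames, numbered in order.
    std::vector<std::uint32_t> allocate(std::uint32_t subFrameCount) {
        std::vector<std::uint32_t> frames;
        for (std::uint32_t i = 0; i <= subFrameCount; ++i) {
            frames.push_back(next_++);
        }
        return frames;
    }

private:
    std::uint32_t next_;
};

// Usage: mirrors requestNo 81 (three sub frames) followed by requestNo 82.
inline void demoFrameNumbers() {
    FrameNumberAllocator alloc(81);
    assert((alloc.allocate(3) == std::vector<std::uint32_t>{81, 82, 83, 84}));
    assert((alloc.allocate(0) == std::vector<std::uint32_t>{85}));
}
```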

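(1) When CameraDevice3Session executes a capture request, it first converts the types passed down by the framework into an appRequest the HAL can recognize, and sends it into AppStreamMgr through pAppStreamManager->submitRequest (captureIntent: 4 is record+capture, 3 is record, 2 is capture, 1 is preview). AppStreamMgr eventually calls registerFrames, which mainly fills the appRequest into AppStreamMgr's structures for use by later callbacks.

(2) Create feature or customization PipelineRequests from the appRequest and hand them to pPipelineModel->submitRequest, which uses the IPipelineModelSession created earlier to call submitRequest. (When the pipeline has finished its work it calls UpdateResult to call back into AppStreamMgr.)

(3) PipelineModelSession first converts UserRequestParams into a ParsedAppRequest; submitOneRequest then calls onRequest_EvaluateRequest, onRequest_Reconfiguration, onRequest_ProcessEvaluatedFrame and onRequest_Ending in turn.

(4) onRequest_EvaluateRequest eventually calls evaluateRequest in P2NodeDecisionPolicy, which decides whether the P2 capture (p2c) node is needed. (I once hit a case where photos taken in macro professional mode had no EXIF information because needP2CaptureNode was not set; the root cause was that minDuration made the capture size equal to the rrzo size.)

(5) onRequest_ProcessEvaluatedFrame calls onProcessEvaluatedFrame, which processes the request according to whether it is in ZSL mode (non-zsl, zsl streaming / normal capture). At the end the function calls pipelineContext->queue to send the IPipelineFrame to each hw node for processing, starting with P1:queue on P1Node.

(6) For a zsl capture, P1Node bypasses the frame directly and does not set it to the hal3 side. Because this is the zsl flow, P1Node also pads one frame while producing the zsl frame, which the underlying hal3 sees as a dummy frame;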
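The captureIntent values quoted in step (1) match the standard Android control.captureIntent values PREVIEW (1), STILL_CAPTURE (2), VIDEO_RECORD (3) and VIDEO_SNAPSHOT (4). A small lookup helper makes logs such as `control.captureIntent:2` easier to read. `captureIntentName` is a hypothetical helper written for this note, not a function from the MTK or Android sources.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Maps the captureIntent values named in the text to readable labels.
// Values outside 1..4 are reported as "other" rather than guessed.
inline const char* captureIntentName(std::int32_t intent) {
    switch (intent) {
        case 1: return "preview";
        case 2: return "capture";
        case 3: return "record";
        case 4: return "record+capture";
        default: return "other";
    }
}

// Usage: the request log above carries control.captureIntent:2.
inline void demoCaptureIntent() {
    assert(std::strcmp(captureIntentName(2), "capture") == 0);
}
```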

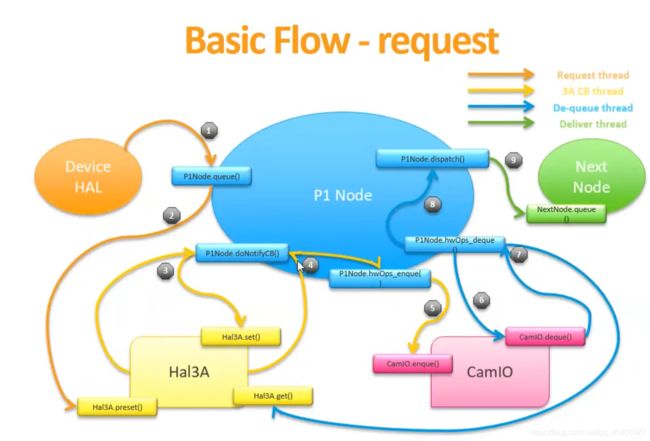

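P1Node gets metadata from Hal3A and images from the P1Driver (CamIO). Take `Num Q:80 M:80 F:78 R:78 @82` from the log: Q is the magic number, used mainly on the 3A side (3A is essentially a set of threads that run during the P1Node stage); F is the frame number, which tracks the action for each frame; R is the request number, which tracks the request (one request can map to several frame numbers, for example in multi-frame capture); @82 is the SOF (start of frame) index of the sensor output. P1Node's main outputs are the full-size imgo (pure raw) and the smaller RRZO (processed raw), among others. When it finishes it calls onDispatchFrame to pass the frame to the next node.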
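When reading long P1Node logs it helps to pull these counters out mechanically. The following is a minimal sketch: `P1Numbers` and `parseP1Numbers` are hypothetical names, the field comments repeat the reading given above, and the meaning of `M` is left open because the text does not explain it.

```cpp
#include <cassert>
#include <cstdio>
#include <cstring>

// Counters from a "[Num Q:.. M:.. F:.. R:.. @..]" group in a P1Node log line.
struct P1Numbers {
    int q;    // Q: magic number used on the 3A side (per the text)
    int m;    // M: meaning not given in the text
    int f;    // F: frame number (-1 on the dummy lines in the log)
    int r;    // R: request number
    int sof;  // @: sensor start-of-frame index
};

// Finds the "Num Q:" group in a log line and fills `out`.
// Returns false when the group is missing or incomplete.
inline bool parseP1Numbers(const char* line, P1Numbers* out) {
    if (line == nullptr || out == nullptr) return false;
    const char* p = std::strstr(line, "Num Q:");
    if (p == nullptr) return false;
    P1Numbers n{};
    const int matched = std::sscanf(p, "Num Q:%d M:%d F:%d R:%d @%d",
                                    &n.q, &n.m, &n.f, &n.r, &n.sof);
    if (matched != 5) return false;
    *out = n;
    return true;
}

// Usage: the DEQ line quoted in the text.
inline void demoParseP1Numbers() {
    P1Numbers n{};
    assert(parseP1Numbers("[P1::DEQ][Num Q:80 M:80 F:78 R:78 @82][Type:1", &n));
    assert(n.q == 80 && n.f == 78 && n.r == 78 && n.sof == 82);
}
```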

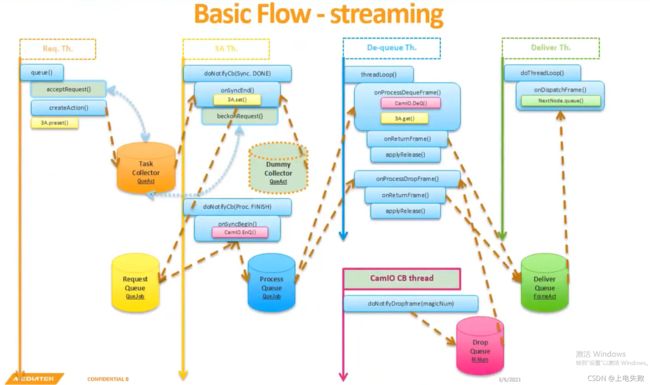

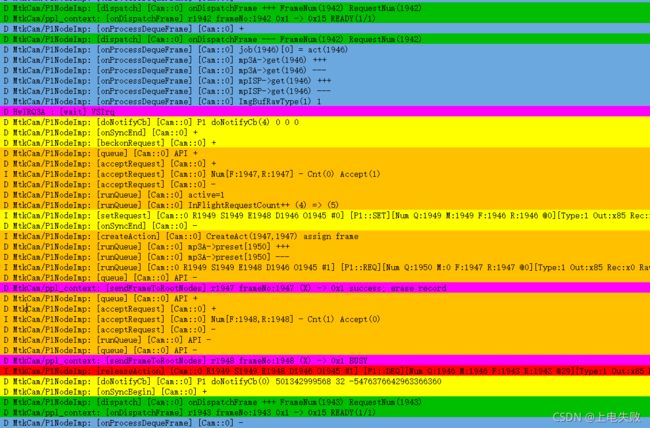

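P1Node mainly runs five threads: Req Th, 3A Th, De-queue Th, Deliver Th and P1 Driver Th.

Req Th: acceptRequest checks whether the task is empty; if it is, createAction creates an act.

3A Th: after the sensor raises a Vsync down signal, P1Node calls OnSyncEnd to set the corresponding metadata. If the task is not empty, the act is taken out and sent to the Request Queue. Once the 3A HAL receives the set it starts computing, and when it finishes it returns a ProcFinish. OnSyncBegin then enqueues a frame buffer into the P1 Driver and moves the act from the Request Queue to the Process Queue. If the task is empty, a dummy is padded in to keep the flow running. beckonRequest notifies Req Th that it can send down a new act.

De-queue Th: onProcessDequeFrame waits for the frame buffer from the P1 Driver and gets the metadata set by 3A; once both are available they are passed to Deliver Th, where onDispatchFrame sends them to the next node.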
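The dummy padding described for 3A Th can be sketched with a plain queue. This is a single-threaded illustration under stated assumptions: `P1RequestQueueSketch` and `Act` are hypothetical names, the real P1Node uses separate threads and queues, and a dummy is represented with request number -1 to mirror the `F:-1 R:-1` lines in the log.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// One unit of work for a sensor frame.
struct Act {
    int requestNo;  // -1 for a dummy, mirroring "F:-1 R:-1" in the log
    bool dummy;
};

class P1RequestQueueSketch {
public:
    // Req Th side: a new request arrives and becomes a pending act.
    void acceptRequest(int requestNo) {
        pending_.push_back(Act{requestNo, false});
    }

    // 3A Th side: called once per sensor frame. Returns the act to set for
    // this frame, padding with a dummy when nothing is pending so that the
    // per-frame flow never stalls.
    Act onFrameSync() {
        if (pending_.empty()) {
            ++dummyCount_;
            return Act{-1, true};
        }
        Act act = pending_.front();
        pending_.pop_front();
        return act;
    }

    std::size_t pendingCount() const { return pending_.size(); }
    std::size_t dummyCount() const { return dummyCount_; }

private:
    std::deque<Act> pending_;
    std::size_t dummyCount_ = 0;
};

// Usage: one real request followed by a frame with nothing queued.
inline void demoDummyPadding() {
    P1RequestQueueSketch q;
    q.acceptRequest(82);
    assert(!q.onFrameSync().dummy);  // the real act is consumed first
    assert(q.onFrameSync().dummy);   // nothing pending: a dummy is padded
}
```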