Bonding Two NICs with team on CentOS 7 (Active-Backup Mode): A Worked Example

I. Introduction

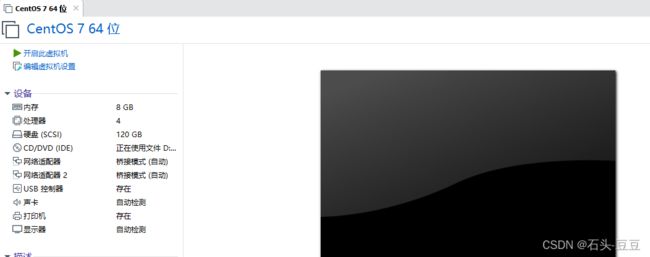

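A CentOS 7 virtual machine was prepared, with two extra NICs added alongside the management interface for the active-backup test.

Goal: make the network highly available, so that a single failed cable or switch does not cut the host off from the network.

Four team modes (runners):

broadcast (broadcast mode)

activebackup (active-backup mode)

roundrobin (round-robin mode)

loadbalance (load-balancing mode; LACP is provided by a separate runner named lacp)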
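Each mode is selected by passing a small JSON document with the runner name to teamd, as the nmcli command in section II does. A minimal sketch of building that JSON follows; the helper name team_config is hypothetical and not part of any tool.

```shell
# Print the minimal teamd JSON config for a given runner name.
# team_config is a hypothetical helper for illustration only.
team_config() {
    case "$1" in
        broadcast|roundrobin|activebackup|loadbalance|lacp)
            printf '{"runner":{"name":"%s"}}\n' "$1" ;;
        *)
            echo "unknown runner: $1" >&2
            return 1 ;;
    esac
}
```

It could be used as: nmcli connection add type team ifname team0 con-name team0 config "$(team_config activebackup)"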

The two NICs, ens37 and ens38, are bound together as team0.

[root@localhost ~]# ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:68:41:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.111/24 brd 192.168.0.255 scope global noprefixroute dynamic ens33

valid_lft 7160sec preferred_lft 7160sec

inet6 fe80::b6d4:b92a:41f6:6aaa/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2b:ba:f5 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.108/24 brd 192.168.0.255 scope global noprefixroute dynamic ens37

valid_lft 7160sec preferred_lft 7160sec

inet6 fe80::40a5:becd:a073:dc24/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: ens38: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2b:ba:ff brd ff:ff:ff:ff:ff:ff

inet 192.168.0.109/24 brd 192.168.0.255 scope global noprefixroute dynamic ens38

valid_lft 7160sec preferred_lft 7160sec

inet6 fe80::82ac:b478:4978:d539/64 scope link noprefixroute

valid_lft forever preferred_lft forever

II. Testing team in active-backup mode

① Create the team0 interface

[root@localhost ~]# nmcli connection add type team ifname team0 con-name team0 config '{"runner":{"name":"activebackup"}}'

Connection 'team0' (989fbffa-79dd-4fe7-8da8-88002ae9dcfe) successfully added.

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

runner:

active port:

② Attach ens37 and ens38 to team0

[root@localhost ~]# nmcli connection add ifname ens37 con-name team0-port1 type team-slave master team0

Connection 'team0-port1' (50f80466-7d87-4b1a-a5fa-98cd69e4f309) successfully added.

[root@localhost ~]# nmcli connection add ifname ens38 con-name team0-port2 type team-slave master team0

Connection 'team0-port2' (8fefd4e8-b0ee-4119-b51f-b6a38adda3fa) successfully added.

③ Check the team0 state again

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

runner:

active port:
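The state still lists no ports at this point. One possible reason (an assumption, not confirmed by the transcript) is that ens37 and ens38 are still held by their earlier DHCP profiles, so the new team-slave profiles have not been activated. A sketch of activating the port profiles explicitly is shown here; run and DRY_RUN are hypothetical helpers of this sketch that print the commands instead of executing them.

```shell
# Run a command, or only print it when DRY_RUN is set (hypothetical switch).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Bring up each port profile first, then the team profile itself.
# Usage: activate_team <team-profile> <port-profile>...
activate_team() {
    local team="$1" port
    shift
    for port in "$@"; do
        run nmcli connection up "$port"
    done
    run nmcli connection up "$team"
}
```

With the profile names used in this article, the call would be: activate_team team0 team0-port1 team0-port2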

④ Restart the team0 connection and the network and NetworkManager services

[root@localhost ~]# nmcli connection down team0 && nmcli connection up team0

Connection 'team0' successfully deactivated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/4)

(process:1765): GLib-GIO-WARNING **: 22:58:37.908: gdbusobjectmanagerclient.c:1589: Processing InterfaceRemoved signal for path /org/freedesktop/NetworkManager/ActiveConnection/4 but no object proxy exists

Connection successfully activated (master waiting for slaves) (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/5)

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

runner:

active port:

[root@localhost ~]# systemctl restart NetworkManager

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

runner:

active port:

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

runner:

active port:

[root@localhost ~]# nmcli connection down team0 && nmcli connection up team0

[root@localhost ~]# systemctl restart network

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

ports:

ens37

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

ens38

link watches:

link summary: down

instance[link_watch_0]:

name: ethtool

link: down

down count: 0

runner:

active port: ens37
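Reading the state dump by eye gets tedious when repeated. The following sketch extracts the active port and each port's link summary from the text printed by teamdctl; the function names active_port and port_links are hypothetical, and the parsing assumes the output layout shown above.

```shell
# Print the active port from `teamdctl <team> state` text on stdin.
# Prints an empty line when no port is active yet.
active_port() {
    sed -n 's/^[[:space:]]*active port:[[:space:]]*//p'
}

# Print "<port> <link summary>" for every port in the state text on stdin.
port_links() {
    awk '
        NF == 1 && $1 == "ports:"  { in_ports = 1; next }
        NF == 1 && $1 == "runner:" { in_ports = 0 }
        in_ports && NF == 1 && $1 !~ /:$/ { port = $1 }
        in_ports && /link summary:/ { print port, $NF }
    '
}
```

For example: teamdctl team0 state | port_links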

⑤ Check the IP address obtained by team0

team0 obtained 192.168.0.108 via DHCP.

[root@localhost ~]# ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:68:41:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.111/24 brd 192.168.0.255 scope global noprefixroute dynamic ens33

valid_lft 7150sec preferred_lft 7150sec

inet6 fe80::b6d4:b92a:41f6:6aaa/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: mtu 1500 qdisc pfifo_fast master team0 state UP group default qlen 1000

link/ether 00:0c:29:2b:ba:f5 brd ff:ff:ff:ff:ff:ff

4: ens38: mtu 1500 qdisc pfifo_fast master team0 state UP group default qlen 1000

link/ether 00:0c:29:2b:ba:f5 brd ff:ff:ff:ff:ff:ff

7: team0: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:2b:ba:f5 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.108/24 brd 192.168.0.255 scope global noprefixroute dynamic team0

valid_lft 7150sec preferred_lft 7150sec

inet6 fe80::43c3:b672:e22b:1ec8/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

ports:

ens37

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

ens38

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

runner:

active port: ens37 # currently active port
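To pull just the team's IPv4 address out of the ip output, the one-line format (ip -o) is easier to parse. A small sketch, with team_ipv4 as a hypothetical helper name:

```shell
# Print the IPv4 address/prefix from `ip -o -4 addr show dev <dev>` on stdin.
# In the one-line format, field 3 is the family and field 4 the address.
team_ipv4() {
    awk '$3 == "inet" { print $4 }'
}
```

Usage: ip -o -4 addr show dev team0 | team_ipv4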

⑥ Simulate a failure of ens37

A continuous ping from another machine lost two packets, after which connectivity recovered.

C:\Users\Administrator>ping 192.168.0.108 -t

Pinging 192.168.0.108 with 32 bytes of data:

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time=1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time=1ms TTL=64

Reply from 192.168.0.108: bytes=32 time=1ms TTL=64

Request timed out.

Request timed out.

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time=1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

Reply from 192.168.0.108: bytes=32 time<1ms TTL=64

The active port is now ens38:

[root@localhost ~]# teamdctl team0 state

setup:

runner: activebackup

ports:

ens37

link watches:

link summary: down

instance[link_watch_0]:

name: ethtool

link: down

down count: 1

ens38

link watches:

link summary: up

instance[link_watch_0]:

name: ethtool

link: up

down count: 0

runner:

active port: ens38
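The failover check above can also be scripted. The sketch below polls teamdctl until the expected port becomes active; wait_for_active_port is a hypothetical helper, and the one-second polling interval is an arbitrary choice.

```shell
# Poll `teamdctl <team> state` until the active port equals <expected>.
# Returns 0 on success, 1 if it did not happen within <tries> polls.
# Usage: wait_for_active_port <team> <expected-port> [tries]
wait_for_active_port() {
    local team="$1" expected="$2" tries="${3:-10}" current
    while [ "$tries" -gt 0 ]; do
        current=$(teamdctl "$team" state |
            sed -n 's/^[[:space:]]*active port:[[:space:]]*//p')
        [ "$current" = "$expected" ] && return 0
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}
```

After taking ens37 down, a call such as wait_for_active_port team0 ens38 && echo "failover OK" would confirm that the backup port took over.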

III. Miscellaneous

① Assign a static IP address to team0

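[root@localhost ~]# nmcli connection modify team0 ipv4.addresses 192.168.1.7/24 ipv4.gateway 192.168.1.1 ipv4.dns 8.8.8.8 ipv4.method manual

The gateway 192.168.1.1 is an example value on the same subnet as the address; substitute the real gateway of your network.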
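With a manual configuration, the gateway normally has to be reachable on the same subnet as the assigned address. A minimal sketch of checking that before applying the settings follows; ip_to_int and same_subnet are hypothetical helpers written in plain shell arithmetic.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    # Subshell keeps the IFS change and positional parameters local.
    ( IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}

# Succeed if <gateway> lies in the network of <address/prefix>.
# Usage: same_subnet <address/prefix> <gateway>
same_subnet() (
    addr=${1%/*}
    prefix=${1#*/}
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    [ $(( $(ip_to_int "$addr") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
)
```

For example, same_subnet 192.168.1.7/24 8.8.8.8 fails, because 8.8.8.8 is a public DNS server rather than a host on 192.168.1.0/24.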

② Configure team0 in load-balancing mode

1. Create the team with the desired runner

nmcli con add type team con-name team0 ifname team0 config '{"runner":{"name":"loadbalance"}}'   # load-balancing mode; use "roundrobin" for round-robin

nmcli con show

2. Create the team-slave connections to attach the NICs

nmcli con add type team-slave con-name team0-port1 ifname em1 master team0

nmcli con add type team-slave con-name team0-port2 ifname em2 master team0

3. Configure the IPv4 address

nmcli con mod team0 ipv4.addresses "171.16.41.x/24"

nmcli con mod team0 ipv4.gateway "171.16.41.x"

nmcli con mod team0 ipv4.method manual

4. Bring up team0

nmcli con up team0

5. Restart the network service

systemctl restart network

6. Check the team state

teamdctl team0 state

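7. Verify that the team behaves as expected

nmcli dev dis em1   # disconnect em1 from the team to simulate a port failure

nmcli dev con em1   # reconnect em1 to restore the port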
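Steps 1 to 4 can be gathered into one function. This is only a sketch under stated assumptions: setup_team, run and DRY_RUN are hypothetical names, the port profiles are named <team>-port1, <team>-port2 and so on, and setting DRY_RUN prints the nmcli commands instead of running them so the sequence can be reviewed first.

```shell
# Run a command, or only print it when DRY_RUN is set (hypothetical switch).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Create a team, attach ports, assign a static address and bring it up.
# Usage: setup_team <team> <runner> <address/prefix> <gateway> <port>...
setup_team() {
    local team="$1" runner="$2" addr="$3" gw="$4" i=1 port
    shift 4
    run nmcli con add type team con-name "$team" ifname "$team" \
        config "{\"runner\":{\"name\":\"$runner\"}}"
    for port in "$@"; do
        run nmcli con add type team-slave con-name "${team}-port${i}" \
            ifname "$port" master "$team"
        i=$((i + 1))
    done
    run nmcli con mod "$team" ipv4.addresses "$addr" ipv4.gateway "$gw" \
        ipv4.method manual
    run nmcli con up "$team"
}
```

A dry run might look like this, where 192.0.2.10/24 and 192.0.2.1 are documentation-range placeholders rather than values from this article: DRY_RUN=1 setup_team team0 loadbalance 192.0.2.10/24 192.0.2.1 em1 em2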