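High-availability web load balancing with keepalived + haproxy in a dual-master model (reposted)

1. Architecture diagram for this article:

Role of each server:

1. haproxy provides the load balancing in this architecture

2. keepalived makes haproxy highly available

3. apache static serves the static pages

4. apache dynamic serves the dynamic pages; the diagram shows two of them, which are load balanced

Configuring each component:

I. Configuring haproxy and keepalived

What to verify:

1. When one keepalived goes down, does the VIP move to the other server?

2. When the haproxy service on one server fails, does the VIP move to the other server?

Note:

If keepalived goes down while haproxy is still running normally, should the other server take over the VIP?

In theory it is better not to. However, it is the check script inside keepalived that monitors the haproxy process. Once keepalived itself is down, nothing on that node knows whether haproxy is healthy, and nothing can decide whether to lower the node's priority. So although avoiding the takeover would be preferable in theory, keepalived alone cannot achieve this in practice.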
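The health check used in this article's keepalived configuration is `killall -0 haproxy`. Signal 0 delivers nothing to the process; it only reports whether a matching process exists and may be signalled. A minimal sketch of the same idea for a single PID follows. The function name `pid_alive` is made up for illustration and is not part of keepalived or haproxy.

```shell
#!/bin/sh
# pid_alive PID
# Succeeds (exit status 0) if a process with that PID exists and we are
# allowed to signal it. Signal 0 performs the check without sending anything.
pid_alive() {
    kill -0 "$1" 2>/dev/null
}
```

For example, `pid_alive $$ && echo "this shell is running"` prints the message, because `$$` is the PID of the current shell.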

Configuration:

1. Install keepalived on both servers

    [root@station139 ~]# yum -y install keepalived

2. Configure keepalived

    [root@node2 ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost                  # address that receives mail when the service state changes
        }
        notification_email_from kaadmin@localhost
        smtp_server 127.0.0.1               # SMTP server used to send the mail
        smtp_connect_timeout 30             # time out if the SMTP server cannot be reached within 30 seconds
        router_id LVS_DEVEL
    }

    vrrp_script chk_haproxy {               # checks the health of the haproxy service on this server
        script "killall -0 haproxy"
        interval 1
        weight -2
    }

    vrrp_instance VI_1 {
        state MASTER                        # this server is the master for this instance
        interface eth0                      # VRRP advertisements go out through eth0
        virtual_router_id 200               # change this if several keepalived clusters share one LAN
        priority 100                        # priority
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 11112222
        }
        track_script {
            chk_haproxy
        }
        virtual_ipaddress {
            192.168.1.200                   # virtual IP of this node
        }
        notify_master "/etc/keepalived/notify.sh master"    # scripts run in each state
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

    vrrp_instance VI_2 {                    # backup for the instance mastered by the other server
        state BACKUP
        interface eth0
        virtual_router_id 57
        priority 99                         # must be lower than the master's priority on the other server
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        track_script {
            chk_mantaince_down              # not defined in the file as shown; it needs its own vrrp_script block
        }
        virtual_ipaddress {
            192.168.1.201
        }
    }
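The failover behaviour of this configuration comes down to simple arithmetic: when a tracked script with a negative weight fails, keepalived lowers the instance's priority by that amount, and the peer with the higher priority takes the VIP. The sketch below models only that case, using the values from the config above (priority 100 on the master, 99 on the backup, weight -2). The function names are invented for illustration, and ties, which VRRP resolves by IP address, are not modelled.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_STATUS
# CHECK_STATUS is the exit status of the tracked script (0 = healthy).
# Only negative weights are modelled: a failing check lowers the priority.
effective_priority() {
    if [ "$3" -ne 0 ] && [ "$2" -lt 0 ]; then
        echo $(( $1 + $2 ))
    else
        echo "$1"
    fi
}

# vip_holder MASTER_PRIORITY BACKUP_PRIORITY
# Prints which side should hold the VIP; equal priorities are reported as "tie".
vip_holder() {
    if [ "$1" -gt "$2" ]; then
        echo master
    elif [ "$1" -lt "$2" ]; then
        echo backup
    else
        echo tie
    fi
}
```

With haproxy healthy, `effective_priority 100 -2 0` gives 100, and the master keeps the VIP against a backup at 99. With haproxy down, `effective_priority 100 -2 1` gives 98, which is below 99, so the backup takes over. This is why the weight must be larger in magnitude than the gap between the two priorities.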

3. Write the script that keepalived runs in each state

    #!/bin/bash
    # Author: MageEdu <[email protected]>
    # description: An example of notify script
    #

    vip=192.168.1.200
    contact='root@localhost'

    # Mail the administrator about the state transition
    notify() {
        mailsubject="`hostname` to be $1: $vip floating"
        mailbody="`date '+%F %H:%M:%S'`: vrrp transition, `hostname` changed to be $1"
        echo "$mailbody" | mail -s "$mailsubject" "$contact"
    }

    case "$1" in
        master)
            notify master
            /etc/rc.d/init.d/haproxy start
            exit 0
        ;;
        backup)
            notify backup
            /etc/rc.d/init.d/haproxy stop
            exit 0
        ;;
        fault)
            notify fault
            /etc/rc.d/init.d/haproxy stop
            exit 0
        ;;
        *)
            # Double quotes so that `basename $0` is actually expanded
            echo "Usage: `basename $0` {master|backup|fault}"
            exit 1
        ;;
    esac

Make the script executable:

    chmod +x /etc/keepalived/notify.sh

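4. Configure haproxy

Because we want to separate static and dynamic content, the configuration file has to route static and dynamic resources to different backend servers.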

    [root@node2 ~]# yum -y install haproxy    # install haproxy
    [root@node2 ~]# vim /etc/haproxy/haproxy.cfg

    global
        #
        log         127.0.0.1 local2
        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon
        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http    # run haproxy in HTTP mode
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close        # close the server-side connection after each response; client-side keep-alive stays possible
        option forwardfor       except 127.0.0.0/8    # add an X-Forwarded-For header to requests sent to the backends
        option                  redispatch    # usually added with cookie-based persistence: if a backend server goes down, its sessions are redispatched to the other servers
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000

    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend  main *:80                 # front-end proxy
        acl url_static       path_beg       -i /static /images /javascript /stylesheets
        acl url_static       path_end       -i .jpg .gif .png .css .js
        acl url_dynamic      path_end       -i .php
        use_backend static          if url_static
        default_backend             dynamic

    #---------------------------------------------------------------------
    # static backend for serving up images, stylesheets and such
    #---------------------------------------------------------------------
    backend static                      # backend that answers static requests
        balance     roundrobin
        server      static 192.168.1.100:80 inter 3000 rise 2 fall 3 check maxconn 5000

    #---------------------------------------------------------------------
    # round robin balancing between the various backends
    #---------------------------------------------------------------------
    backend dynamic                     # backend that answers dynamic requests
        balance     roundrobin
        server      dynamic1 192.168.1.101:80 inter 3000 rise 2 fall 3 check maxconn 5000
        server      dynamic2 192.168.1.102:80 inter 3000 rise 2 fall 3 check maxconn 5000

    listen statistics
        mode http
        bind *:8080
        stats enable
        stats auth admin:admin
        stats uri /admin?stats          # URI of the stats page
        stats admin if TRUE
        stats hide-version
        stats refresh 5s
        acl allow src 192.168.0.0/24    # access control list
        tcp-request content accept if allow
        tcp-request content reject
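The routing decision made by the frontend can be sketched as a small shell function. It mirrors the two `url_static` ACLs (a case-insensitive prefix match and a case-insensitive suffix match, which haproxy ORs together because they share a name) and falls back to the dynamic backend, as `default_backend` does. This is only an illustration of the matching rules, not how haproxy implements them, and `pick_backend` is a made-up name. Note that the `url_dynamic` ACL is defined in the config but never referenced: `.php` requests reach the dynamic backend through the default.

```shell
#!/bin/sh
# pick_backend PATH
# Prints "static" or "dynamic" for a URL path (without query string),
# following the url_static ACLs of the frontend.
pick_backend() {
    # -i in the ACLs means case-insensitive, so compare in lower case
    path=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$path" in
        # path_beg: the path starts with one of these prefixes
        /static*|/images*|/javascript*|/stylesheets*) echo static ;;
        # path_end: the path ends with one of these suffixes
        *.jpg|*.gif|*.png|*.css|*.js) echo static ;;
        # everything else goes to default_backend
        *) echo dynamic ;;
    esac
}
```

For instance, `pick_backend /1.png` prints `static` and `pick_backend /index.php` prints `dynamic`.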

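5. Configure the other haproxy server

Because the two servers are configured almost identically, we simply copy the configuration files and the script prepared above to the other haproxy server and make a few changes there.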

    [root@node2 ~]# scp /etc/keepalived/keepalived.conf root@192.168.1.121:/etc/keepalived/
    root@192.168.1.121's password:
    keepalived.conf                100% 4546     4.4KB/s   00:00
    [root@node2 ~]# scp /etc/keepalived/notify.sh root@192.168.1.121:/etc/keepalived/
    root@192.168.1.121's password:
    notify.sh                      100%  751     0.7KB/s   00:00
    [root@node2 ~]# scp /etc/haproxy/haproxy.cfg root@192.168.1.121:/etc/haproxy/
    root@192.168.1.121's password:
    haproxy.cfg                    100% 3529     3.5KB/s   00:00

With the transfer done, edit /etc/keepalived/keepalived.conf on the second node. /etc/haproxy/haproxy.cfg is identical on both nodes and needs no changes.

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from kaadmin@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
    }

    vrrp_script chk_haproxy {
        script "killall -0 haproxy"
        interval 1
        weight -2
    }

    vrrp_instance VI_1 {
        state BACKUP                        # on this node, change MASTER to BACKUP
        interface eth0
        virtual_router_id 200
        priority 99                         # lower than on the first node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 11112222
        }
        track_script {
            chk_haproxy
        }
        virtual_ipaddress {
            192.168.1.200
        }
    }

    vrrp_instance VI_2 {
        state MASTER                        # this node is MASTER here; the first node is BACKUP
        interface eth0
        virtual_router_id 57
        priority 100                        # this priority must also be higher than on the first node
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.1.201
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

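Note:

    notify_master "/etc/keepalived/notify.sh master"
    notify_backup "/etc/keepalived/notify.sh backup"
    notify_fault "/etc/keepalived/notify.sh fault"

These three per-state scripts may only be placed in the instance for which the node is MASTER. The reason is that the two nodes are master and backup for each other: each node is master of one instance and backup of the other. If the three notify scripts were also written into the BACKUP instance, that instance would run the script when it enters the backup state and stop haproxy, the very program we are making highly available. The result would be that both VIPs move to one of the servers.

Let's verify whether the VIP moves when keepalived and haproxy each go down:

Start the keepalived and haproxy services on both nodes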

    [root@node2 ~]# service haproxy start
    Starting haproxy:                                          [  OK  ]
    [root@node2 ~]# service keepalived start
    Starting keepalived:                                       [  OK  ]

The normal state looks like this:

keepalived 1:

keepalived 2:

Now let's simulate stopping the first haproxy and see whether both VIPs end up on keepalived 2:

    [root@node2 ~]# service haproxy stop
    Stopping haproxy:                                          [  OK  ]

Check keepalived 1 and keepalived 2

As you can see, both VIPs have moved over.
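One way to check from the command line which node currently holds a VIP is to look for the address in the output of `ip addr`. The helper below is a small sketch that reads `ip -4 -o addr show` output on standard input; the name `has_vip` is made up for illustration, and the interface `eth0` in the usage line is taken from the keepalived configuration in this article.

```shell
#!/bin/sh
# has_vip ADDRESS
# Reads `ip -4 -o addr show` output on stdin and succeeds if ADDRESS is
# configured. The trailing "/" keeps 192.168.1.20 from matching 192.168.1.200.
has_vip() {
    grep -qF "inet $1/"
}
```

Typical use on either node: `ip -4 -o addr show dev eth0 | has_vip 192.168.1.200 && echo "VIP 192.168.1.200 is on this node"`. After haproxy is stopped on the first node, the check for both 192.168.1.200 and 192.168.1.201 should succeed on the second node only.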

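Verifying load balancing and static/dynamic separation

We give the three web servers different pages:

1. Give apache static a static file, to verify that requests matching the url_static ACL are directed to this server

2. Give apache dynamic 1 and 2 an index.php each, to load-balance the dynamic pages

For apache static we need a file that matches -i .jpg .gif .png .css .js, so let's just use an image.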

apache static

    scp 1.png root@192.168.1.100:/var/www/html

apache dynamic 1

    vim /var/www/html/index.php

    192.168.1.101
    <?php
    phpinfo();
    ?>

apache dynamic 2

    vim /var/www/html/index.php

    192.168.1.102
    <?php
    phpinfo();
    ?>

1. Request the static file 1.png

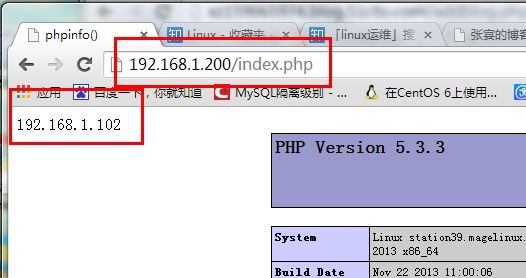

2. Request a page ending in .php

As we can see, dynamic pages ending in .php are now load balanced.
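With `balance roundrobin` and two equally weighted servers, successive requests alternate between dynamic1 and dynamic2. The sketch below is a deliberately simplified model of that rotation (no weights, no health checks, no connection limits), not haproxy's actual scheduler; `round_robin_server` is a made-up name.

```shell
#!/bin/sh
# round_robin_server INDEX SERVER...
# Prints the server that request number INDEX (starting at 0) would reach
# under plain round robin with equal weights.
round_robin_server() {
    index=$1
    shift
    # $# is now the number of servers; skip (index mod count) of them
    shift $(( index % $# ))
    printf '%s\n' "$1"
}
```

For example, `round_robin_server 0 192.168.1.101 192.168.1.102` prints `192.168.1.101`, and request index 1 goes to `192.168.1.102`. This is why refreshing index.php shows the two backend addresses in turn.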

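Now let's try accessing the site through the virtual IP 192.168.1.201:

This shows that the dual-master model works as well: both haproxy instances can serve requests at the same time.

3. Let's look at the stats page

This article comes from the "linux运维" blog; please keep this attribution: http://xz159065974.blog.51cto.com/8618592/1405812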