MapReduce WordCount 案例实操

MapReduce WordCount 案例实操

需求:

在给定的文本文件中统计输出每一个单词出现的次数

(1)输入数据 hello.txt

(2)期望输出数据

jinghang 2

banzhang 1

cls 2

hadoop 1

jiao 1

…

步骤分析:

根据MapReduce 编程规范,分别编写 Mapper、 Reducer、Driver

1.准备 输入数据 hello.txt

hello jinghang jinghang

ss jiao

banzhang

hadoop hadoop

idea Idea

java math

machine machinr

machine

2.预想输出数据效果

hello 1

jinghang 2

.....

3.Mapper

- 将 MapTask 传给我们的文本内容先转换成 String

2)根据空格将一行内容进行切分

3)将单词输出为 <单词 , 1>

如:jinghang , 1

jinghang , 1

4 Reducer

1)汇总各个key 的个数

jinghang , 1

jinghang , 1

2)输出该 key 的总次数

jinghang, 2

5 Driver

1) 指定配置信息,获取 job 对象实例

2)制定本程序的 jar 包所在的本地路径

3)关联 Mapper 和 Reducer 业务类

4)指定 Mapper 输出数据的 K ,V 类型

5)指定 最终输出数据的 K ,V 类型

6) 指定 job 的输入原始文件所在的目录

7)指定 job 的输出结果所在的目录

8)提交作业

实施步骤:

1.使用 IDEA 创建 maven 工程

2.在poem.xml 文件中添加依赖

<dependencies>

<dependency>

<groupId>junitgroupId>

<artifactId>junitartifactId>

<version>RELEASEversion>

dependency>

<dependency>

<groupId>org.apache.logging.log4jgroupId>

<artifactId>log4j-coreartifactId>

<version>2.8.2version>

dependency>

<dependency>

<groupId>org.apache.hadoopgroupId>

<artifactId>hadoop-commonartifactId>

<version>2.7.2version>

dependency>

<dependency>

<groupId>org.apache.hadoopgroupId>

<artifactId>hadoop-clientartifactId>

<version>2.7.2version>

dependency>

<dependency>

<groupId>org.apache.hadoopgroupId>

<artifactId>hadoop-hdfsartifactId>

<version>2.7.2version>

dependency>

dependencies>

3 在项目的src/main/resources目录下,新建一个文件,命名为“log4j.properties”,在文件中填入。

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

4 编写程序

(1) 编写 Mapper类

package com.jinghang.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/*

KEYIN maptask 输入的key值类型 (LongWritable)

//行首在文件中的偏移量(距离文件中的最开始差多少,换行算一个),这一行的内容,

VALUEIN maptask 输入的value值类型 (Text)

KEYOUT maptask 输出的key值类型 (Text)

VALUEOUT maptask 输出的value值类型 (IntWritable)

*/

public class WCMapper extends Mapper<LongWritable, Text,Text, IntWritable> {

private Text outKey = new Text();

//单词每出现一次,标记为1

private IntWritable outValue = new IntWritable(1);

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

//参数中的Context 代表任务

//拿到这一行数据

String line = value.toString();

//按照空格切分数据

String[] words = line.split(" ");

//遍历数组,把单词变成(word ,1)的形式交给框架

// for (String word : words) {

// context.write(new Text(word),new IntWritable(1));

//在遍历数组中大量 new 出新对象,浪费资源,使得垃圾回收线程一直在工作,程序运行较慢,因此设置 私有变量outKey和 outValue

// }

for (String word : words) {

outKey.set(word);

context.write(outKey,outValue);

}

}

}

(2 )编写 Reducer 类

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class WCReduce extends Reducer<Text, IntWritable,Text,IntWritable> {

private IntWritable total = new IntWritable();

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

//做累加

int sum = 0;

for (IntWritable value : values) {

sum += value.get();

}

//包装结果并输出

total.set(sum);

context.write(key,total);

}

}

(3)编写 Driver 类

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class WCDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

//1.获取配置信息-->获取job对象实例

Job job = Job.getInstance(new Configuration());

//2.制定本程序的 jar 包所在的本地路径(Driver类)

job.setJarByClass(WCDriver.class);

//3.关联Mapper 、Reducer 类

job.setMapperClass(WCMapper.class);

job.setReducerClass(WCReducer.class);

//4.指定 Mapper 输出数据的 键、值 类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

//5.指定 最终输出数据的 键、值 类型

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

//6.job 输入文件所在原始目录

FileInputFormat.setInputPaths(job,new Path(args[0]));

7.job 输出文件所在原始目录

FileOutputFormat.setOutputPath(job,new Path(args[1]));

//8 提交

boolean result = job.waitForCompletion(true);

System.exit(result?0:1);

}

}

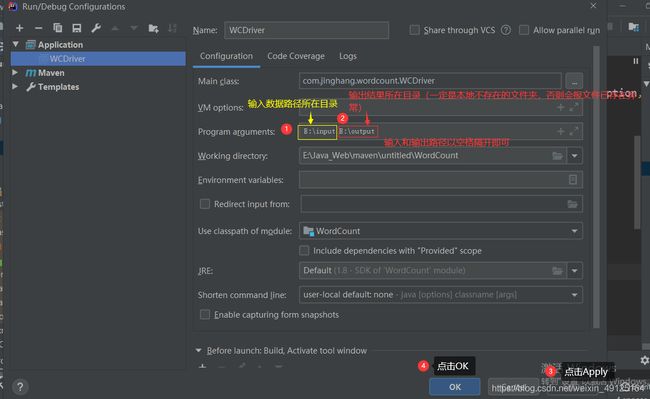

5 在本地上运行

(1)传入参数(输入数据所在目录,输出结果所要存放的目录)

输出结果所在文件夹或目录一定要是不存在的,否则会 出现文件已存在异常

(2)运行程序

(3)查看结果

Idea 1

banzhang 1

hadoop 2

hello 1

idea 1

java 1

jiao 1

jinghang 2

machine 2

machinr 1

math 1

ss 1

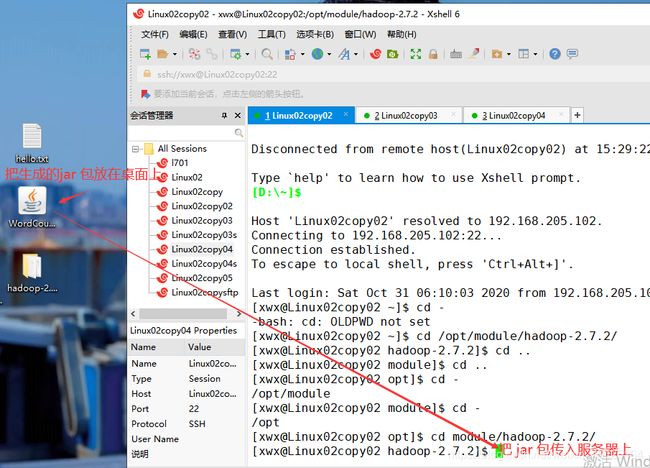

6 集群上测试

生成jar 包,如图:

将生成的jar 包放入服务器:

在集群上运行:

[xwx@Linux02copy02 hadoop-2.7.2]$ hadoop jar WordCount-1.0-SNAPSHOT.jar com.jinghang.wordcount.WCDriver /input /out

# hadoop jar 运行的jar 包包名 Driver类的全类名 输入数据目录 输出结果地址

查看结果:

[xwx@Linux02copy02 hadoop-2.7.2]$ bin/hdfs dfs -cat /out/*

I 1

hadoop 1

hdfs 1

hodoop 1

miss 3

tear 1

you 3

$ hadoop jar WordCount-1.0-SNAPSHOT.jar com.jinghang.wordcount.WCDriver /input /out

hadoop jar 运行的jar 包包名 Driver类的全类名 输入数据目录 输出结果地址

查看结果:

[xwx@Linux02copy02 hadoop-2.7.2]$ bin/hdfs dfs -cat /out/*

I 1

hadoop 1

hdfs 1

hodoop 1

miss 3

tear 1

you 3