Docker Swarm Cluster: An Enterprise Hands-On Case Study

1. Docker Swarm Cluster: Enterprise Hands-On Case Study

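- Like Docker Compose, Docker Swarm is an official Docker container orchestration project. The difference is that Docker Compose creates multiple containers on a single server or host, whereas Docker Swarm creates clustered container services across multiple servers or hosts. That makes Docker Swarm the better fit for deploying microservices.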

1.1. Swarm概念剖析

- Swarm是Docker公司自主研发的容器集群管理系统, Swarm在早期是作为一个独立服务存在, 在Docker Engine v1.12中集成了Swarm的集群管理和编排功能。可以通过初始化Swarm或加入现有Swarm来启用Docker引擎的Swarm模式。

- Docker Engine CLI和API包括了管理Swarm节点命令,比如添加、删除节点,以及在Swarm中部署和编排服务。也增加了服务栈(Stack)、服务(Service)、任务(Task)概念。

- Swarm集群管理和任务编排功能已经集成到了Docker引擎中,通过使用swarmkit。swarmkit是一个独立的,专门用于Docker容器编排的项目,可以直接在Docker上使用。

- Swarm集群是由多个运行swarm模式的Docker主机组成,关键的是,Docker默认集成了swarm mode。swarm集群中有manager(管理成员关系和选举)、worker(运行swarm service)。

- 一个Docker主机可以是Manager,也可以是Worker角色,当然,也可以既是Manager,同时也是Worker。

- 当你创建一个service时,你定义了它的理想状态(副本数、网络、存储资源、对外暴露的端口等)。Docker会维持它的状态,例如,如果一个worker node不可用了,Docker会调度不可用node的task到其他nodes上。

- 运行在容器中的一个task,是swarm service的一部分,且通过swarm manager进行管理和调度,和独立的容器是截然不同的。

- Swarm service相比单容器的一个最大优势就是,你能够修改一个服务的配置:包括网络、数据卷,不需要手工重启服务。Docker将会更新配置,把过期配置的task停掉,重新创建一个新配置的容器。

- 当然,也许你会觉得docker Compose也能做swarm类似的事情,某些场景下是可以。但是Swarm相比docker compose,功能更加丰富,比如说自动扩容、缩容,分配至Task到不同的Nodes等。

- 一个node是Swarm集群中的一个Docker引擎实例。你也可以认为这就是一个docker节点。你可以运行一个或多个节点在单台物理机或云服务器上,但是生产环境上,典型的部署方式是:Docker节点交叉分布式部署在多台物理机或云主机上。

- 通过Swarm部署一个应用,你向manager节点提交一个service,然后manager节点分发工作(task)给worker node。Manager节点同时也会容器编排和集群管理功能,它会选举出一个leader来指挥编排任务。worker nodes接受和执行从manager分发过来的task

- 一般地,Manager节点同时也是worker节点,但是,你也可以将manager节点配置成只进行管理的功能。Agent则运行在每个worker节点上,时刻等待着接受任务。worker node会上报manager node,分配给他的任务当前状态,这样manager node才能维持每个worker的工作状态。

- Service就是在manager或woker节点上定义的tasks。service是swarm系统最核心的架构,同时也是和swarm最主要的交互者。当你创建一个service,你指定容器镜像以及在容器内部运行的命令。

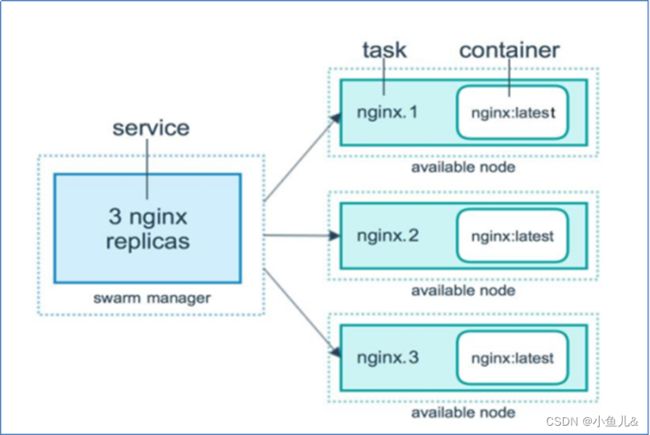

- 在副本集模式下,swarm manager将会基于你需要扩容的需求,把task分发到各个节点。对于全局service,swarm会在每个可用节点上运行一个task。

- Task携带Docker引擎和一组命令让其运行在容器中。它是swarm的原子调度单元。manager节点在扩容的时候回交叉分配task到各个节点上,一旦一个task分配到一个node,它就不能移动到其他node。
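The two service modes described above correspond to flags of `docker service create`. The following is a minimal sketch of that mapping. The service names used with it are hypothetical, and the `DOCKER` variable is an assumption added for illustration: it defaults to the real CLI, and setting `DOCKER=echo` turns each function into a dry run that only prints the arguments it would pass.

```shell
#!/usr/bin/env bash
# Minimal sketch of the replicated and global service modes.
# DOCKER defaults to the real CLI; export DOCKER=echo for a dry run.
DOCKER=${DOCKER:-docker}

# Replicated mode: the manager keeps exactly <replicas> tasks running
# somewhere in the swarm and reschedules them if a node fails.
create_replicated() {   # usage: create_replicated <name> <replicas> <image>
  "$DOCKER" service create --name "$1" --replicas "$2" "$3"
}

# Global mode: one task on every available node, including nodes that
# join later (useful for per-node agents such as log shippers).
create_global() {       # usage: create_global <name> <image>
  "$DOCKER" service create --name "$1" --mode global "$2"
}
```

For example, `create_replicated web 3 nginx:latest` keeps three nginx tasks running, and `create_global agent nginx:latest` places one task on every node.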

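1.2. Advantages of Docker Swarm

- Cluster management integrated into Docker Engine: use the Docker Engine CLI to create a swarm of Docker Engines and deploy application services to it.

- Decentralized design: nodes take the manager or worker role, and a manager failure does not interrupt applications that are already running.

- Scaling: you declare the number of tasks each service should run. When you scale up or down, the manager automatically adds or removes tasks to maintain the desired state.

- Desired state reconciliation: manager nodes constantly monitor the cluster state and reconcile any differences between the actual state and the desired state.

- Multi-host networking: you can specify an overlay network for your services. When it initializes or updates the application, the swarm manager automatically assigns addresses to the containers on the overlay network.

- Service discovery: manager nodes assign each service in the swarm a unique DNS name and a load-balanced virtual IP (VIP). You can query every container running in the swarm through the DNS server embedded in the swarm.

- Load balancing: requests are balanced across service replicas, and an ingress entry point provides external access.

- Secure transport: each node in the swarm uses mutual TLS authentication and encryption to secure its communication with the other nodes.

- Rolling updates: updates are rolled out to nodes incrementally. If something goes wrong, you can roll the tasks back to the previous version.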

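2. Swarm Load Balancing

- The swarm manager uses ingress load balancing to expose the services you want to make available outside the swarm. The manager can assign a published port to a service automatically, or you can configure one yourself using any unused port. If you do not specify a port, the manager assigns the service a port in the 30000-32767 range.

- Swarm mode has an internal DNS component that automatically assigns each service in the swarm a DNS entry. The swarm manager uses internal load balancing, based on each service's DNS name, to distribute requests among the services within the cluster.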

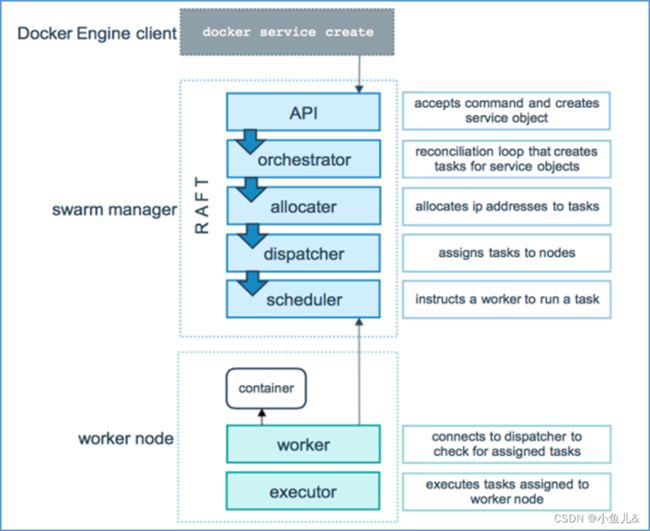
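To make the load-balancing description concrete, here is a sketch that does three things: it creates an overlay network, publishes a service through the ingress routing mesh, and reads back the published port. The network name `app-net`, the service name `web`, port 8080, and the function names are all hypothetical. `DOCKER=echo` again gives a dry run.

```shell
#!/usr/bin/env bash
# Sketch: overlay network + ingress-published service + published-port lookup.
DOCKER=${DOCKER:-docker}

# Overlay networks span every node in the swarm; services attached to the
# same overlay network reach each other by service name via the built-in DNS.
create_overlay() {      # usage: create_overlay <network>
  "$DOCKER" network create --driver overlay "$1"
}

# Publish container port 80 on port <published> of EVERY node (routing mesh);
# the ingress load balancer forwards each request to one of the replicas.
create_published() {    # usage: create_published <name> <network> <published> <image>
  "$DOCKER" service create --name "$1" --network "$2" --replicas 3 \
    --publish "published=$3,target=80" "$4"
}

# Print the published port(s). When only target=80 is given, the manager
# picks a port from the 30000-32767 range.
published_ports() {     # usage: published_ports <name>
  "$DOCKER" service inspect --format '{{range .Endpoint.Ports}}{{.PublishedPort}} {{end}}' "$1"
}
```

After `create_overlay app-net` and `create_published web app-net 8080 nginx:latest`, requests to port 8080 on any node's IP reach one of the three nginx replicas.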

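2.1. Swarm Architecture

Manager node:

- API: accepts commands from the client and creates the service object (API input)

- orchestrator: runs the reconciliation loop for service objects and creates their tasks (orchestration)

- allocator: allocates IP addresses to tasks (IP allocation)

- dispatcher: assigns tasks to nodes (task dispatch)

- scheduler: instructs a worker to run a task (task execution)

Worker node:

- worker: connects to the dispatcher to check for assigned tasks

- executor: executes the tasks assigned to the worker node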

2.2. Swarm Node Plan and Firewall Settings

| Cluster role | Host IP address | Host OS | Docker version |
|---|---|---|---|
| manager | 192.168.2.10 | CentOS 7.9 | 23.0.3 |
| node1 | 192.168.2.20 | CentOS 7.9 | 23.0.3 |
| node2 | 192.168.2.30 | CentOS 7.9 | 23.0.3 |

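- Perform the following configuration on manager, node1, and node2:

```shell
# Add hostname resolution: open /etc/hosts...
[root@localhost ~]# vim /etc/hosts
# ...and append the following lines:
192.168.2.10 manager
192.168.2.20 node1
192.168.2.30 node2
```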

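```shell
# Disable SELinux: setenforce 0 takes effect immediately, and the config edit keeps it
# disabled after a reboot. Edit /etc/selinux/config directly: on CentOS 7,
# /etc/sysconfig/selinux is a symlink, and sed -i would replace the link with a plain
# file instead of changing the real configuration.
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
setenforce 0
# Stop the firewall now and keep it from starting at boot
systemctl stop firewalld.service
systemctl disable firewalld.service
```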
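Disabling firewalld is the quickest route in a lab. To keep it running instead, open the ports Docker Swarm uses between nodes: TCP 2377 for cluster management, TCP and UDP 7946 for node communication, and UDP 4789 for overlay network traffic. The sketch below assumes firewalld is active. The `FIREWALL_CMD` variable is a hypothetical addition: setting `FIREWALL_CMD=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: open the ports Docker Swarm needs instead of disabling firewalld.
FIREWALL_CMD=${FIREWALL_CMD:-firewall-cmd}

SWARM_PORTS="2377/tcp 7946/tcp 7946/udp 4789/udp"

open_swarm_ports() {
  local p
  for p in $SWARM_PORTS; do
    "$FIREWALL_CMD" --permanent --add-port="$p"
  done
  "$FIREWALL_CMD" --reload   # load the permanent rules into the running firewall
}
```

Run `open_swarm_ports` as root on every node.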

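```shell
# Synchronize the time on every node
yum install ntpdate -y
ntpdate pool.ntp.org
# Set the hostname that matches this node's /etc/hosts entry (hostnamectl makes it
# persistent across reboots). Use node1 / node2 on the other hosts; su opens a new
# shell so the prompt shows the new name.
hostnamectl set-hostname manager;su
```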
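You can also derive each node's hostname automatically from /etc/hosts instead of typing it per node. In that case the lookup must compare the IP address exactly, because a substring grep for 192.168.2.1 would also match 192.168.2.10. The sketch below assumes `hostname -I` lists the host's addresses. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the /etc/hosts name that belongs to one of the given IPs.
lookup_hostname() {     # usage: lookup_hostname "<ip list>" [hosts-file]
  local ips="$1" hosts_file="${2:-/etc/hosts}" ip
  for ip in $ips; do
    # Compare the whole first column, so 192.168.2.1 never matches 192.168.2.10.
    awk -v ip="$ip" '$1 == ip { print $2; exit }' "$hosts_file"
  done | head -n 1
}
```

Usage on a node: `hostnamectl set-hostname "$(lookup_hostname "$(hostname -I)")"`.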

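```shell
# Disable swap now (not strictly required by Swarm, but a common setting on container hosts)
[root@manager ~]# swapoff -a
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
sed -ri 's/.*swap.*/#&/g' /etc/fstab
```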

3. Installing Docker

- Perform the following on manager, node1, and node2:

```shell
# Install dependency packages
yum -y install yum-utils device-mapper-persistent-data lvm2
# Configure the Docker CE repository (Aliyun mirror)
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install Docker
yum -y install docker-ce
```

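```shell
# Edit the Docker daemon configuration file
[root@manager ~]# mkdir -p /etc/docker
[root@manager ~]# vim /etc/docker/daemon.json
```

Add the following content:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": ["https://uyah70su.mirror.aliyuncs.com"]
}
```

The overlay2.override_kernel_check storage option is left out. It has been deprecated since Docker 19.03 and was removed in later releases.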

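Note: pulling images from inside mainland China is slow, so the configuration ends with registry-mirrors. The address above is an account-specific Aliyun accelerator, so substitute your own.

```shell
mkdir -p /etc/systemd/system/docker.service.d
# Optional: also expose the Docker API on TCP port 2375. Swarm itself does not need this
# (cluster management uses port 2377). The port has no authentication, so anyone who can
# reach it can control the host as root. Only enable it on a trusted network.
sed -i '/^ExecStart/s/dockerd/dockerd -H tcp:\/\/0.0.0.0:2375/g' /usr/lib/systemd/system/docker.service
```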
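Editing /usr/lib/systemd/system/docker.service works, but a docker-ce package upgrade overwrites that file, and the docker.service.d directory created above stays unused. The sketch below shows the drop-in alternative. It assumes the stock docker-ce ExecStart line, so confirm yours with `systemctl cat docker`. The function name is hypothetical, and the warning about the unauthenticated TCP port still applies.

```shell
#!/usr/bin/env bash
# Sketch: add an extra -H listener through a systemd drop-in instead of
# editing the packaged unit file.
write_docker_dropin() {   # usage: write_docker_dropin <dropin-dir> <tcp-address>
  local dir="$1" addr="$2"
  mkdir -p "$dir"
  cat > "$dir/override.conf" <<EOF
[Service]
# An empty ExecStart= clears the packaged command before redefining it.
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// -H ${addr} --containerd=/run/containerd/containerd.sock
EOF
}
```

Usage: `write_docker_dropin /etc/systemd/system/docker.service.d tcp://0.0.0.0:2375`, then `systemctl daemon-reload && systemctl restart docker`.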

```shell
# Restart Docker
[root@manager ~]# systemctl daemon-reload
[root@manager ~]# systemctl enable docker
[root@manager ~]# systemctl restart docker
# Confirm that the daemon is running
ps -ef|grep -aiE docker
```

4. Deploying the Swarm Cluster

- With the Docker platform ready, deploy the swarm cluster. Initialize the cluster on the manager node:

```shell
[root@manager ~]# docker swarm init --advertise-addr 192.168.2.10
Swarm initialized: current node (44bghdfflwgqhzywunoqfqgqf) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-1ll2gomc2khm5b5yjah2mmr8llpqn7jlb68rb7kudfbtpj0coo-3ws55l597bu7144wg6xddweyj 192.168.2.10:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```
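When the initialization is scripted, the worker join command can be pulled out of the saved `docker swarm init` output rather than copied by hand. The sketch below does that (function name hypothetical). Alternatively, running `docker swarm join-token worker` on any manager prints the command again, and `docker swarm join-token -q worker` prints only the token.

```shell
#!/usr/bin/env bash
# Sketch: extract the worker join command from saved `docker swarm init` output.
extract_join_cmd() {    # usage: extract_join_cmd < init-output.txt
  # The real command line starts with "docker swarm join --token"; the hint
  # for managers ("run 'docker swarm join-token manager' ...") does not.
  grep -m 1 -E '^[[:space:]]*docker swarm join --token ' | sed -E 's/^[[:space:]]+//'
}
```

Usage: `docker swarm init --advertise-addr 192.168.2.10 | tee init.log`, then `extract_join_cmd < init.log`.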

4.1. Join node1 to the swarm:

```shell
# Run on node1
[root@node1 ~]# docker swarm join --token SWMTKN-1-1ll2gomc2khm5b5yjah2mmr8llpqn7jlb68rb7kudfbtpj0coo-3ws55l597bu7144wg6xddweyj 192.168.2.10:2377
```

4.2. Join node2 to the swarm:

```shell
# Run on node2
[root@node2 ~]# docker swarm join --token SWMTKN-1-1ll2gomc2khm5b5yjah2mmr8llpqn7jlb68rb7kudfbtpj0coo-3ws55l597bu7144wg6xddweyj 192.168.2.10:2377
This node joined a swarm as a worker.
```

4.3. Check the status of the swarm nodes:

```shell
[root@manager ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
44bghdfflwgqhzywunoqfqgqf *   manager    Ready     Active         Leader           23.0.3
heavfkcv59ed5bkikbxot7mus     node1      Ready     Active                          23.0.3
k9a71k4kzhl9nw53ijo6lx4y5     node2      Ready     Active                          23.0.3
```
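For scripted health checks, `docker node ls --format` prints just the fields you need. The sketch below reads that output, reports the leader, and fails if any node is not Ready. The function name and the choice of fields are assumptions about what you want to check.

```shell
#!/usr/bin/env bash
# Sketch: verify swarm nodes from the output of
#   docker node ls --format '{{.Hostname}} {{.Status}} {{.ManagerStatus}}'
# Prints the leader's hostname; returns 1 if any node is not Ready.
check_nodes() {
  awk '
    $2 != "Ready"  { bad = 1; print "not ready: " $1 > "/dev/stderr" }
    $3 == "Leader" { leader = $1 }
    END            { if (leader != "") print leader; exit (bad ? 1 : 0) }
  '
}
```

Usage: `docker node ls --format '{{.Hostname}} {{.Status}} {{.ManagerStatus}}' | check_nodes`.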

5. Deploying an Nginx Service with Swarm

5.1. Create an Nginx web service on the swarm:

```shell
[root@manager ~]# docker service create --replicas 1 --name nginx-web nginx:latest
```

- --replicas: number of replicas

- --name: service name

5.2. View the Nginx service:

```shell
[root@manager ~]# docker service ps nginx-web
ID             NAME          IMAGE          NODE      DESIRED STATE   CURRENT STATE           ERROR     PORTS
ixh4h670h4ws   nginx-web.1   nginx:latest   node1     Running         Running 2 minutes ago
```

5.3. Show the service details in human-readable form:

```shell
[root@manager ~]# docker service inspect --pretty nginx-web

ID:             st3o1iyv7whmn8eds87m35ozx
Name:           nginx-web
Service Mode:   Replicated
 Replicas:      1
Placement:
UpdateConfig:
 Parallelism:   1
 On failure:    pause
 Monitoring Period: 5s
 Max failure ratio: 0
 Update order:      stop-first
RollbackConfig:
 Parallelism:   1
 On failure:    pause
 Monitoring Period: 5s
 Max failure ratio: 0
 Rollback order:    stop-first
ContainerSpec:
 Image:         nginx:latest@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31
 Init:          false
Resources:
Endpoint Mode:  vip
```

5.4. Show the full service details in JSON format:

```shell
[root@manager ~]# docker service inspect nginx-web
[
    {
        "ID": "st3o1iyv7whmn8eds87m35ozx",
        "Version": {
            "Index": 21
        },
        "CreatedAt": "2023-04-14T08:49:13.137753329Z",
        "UpdatedAt": "2023-04-14T08:49:13.137753329Z",
        "Spec": {
            "Name": "nginx-web",
            "Labels": {},
            "TaskTemplate": {
                "ContainerSpec": {
                    "Image": "nginx:latest@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31",
                    "Init": false,
                    "StopGracePeriod": 10000000000,
                    "DNSConfig": {},
                    "Isolation": "default"
                },
                "Resources": {
                    "Limits": {},
                    "Reservations": {}
                },
                "RestartPolicy": {
                    "Condition": "any",
                    "Delay": 5000000000,
                    "MaxAttempts": 0
                },
                "Placement": {
                    "Platforms": [
                        {
                            "Architecture": "amd64",
                            "OS": "linux"
                        },
                        {
                            "OS": "linux"
                        },
                        {
                            "OS": "linux"
                        },
                        {
                            "Architecture": "arm64",
                            "OS": "linux"
                        },
                        {
                            "Architecture": "386",
                            "OS": "linux"
                        },
                        {
                            "Architecture": "mips64le",
                            "OS": "linux"
                        },
                        {
                            "Architecture": "ppc64le",
                            "OS": "linux"
                        },
                        {
                            "Architecture": "s390x",
                            "OS": "linux"
                        }
                    ]
                },
                "ForceUpdate": 0,
                "Runtime": "container"
            },
            "Mode": {
                "Replicated": {
                    "Replicas": 1
                }
            },
            "UpdateConfig": {
                "Parallelism": 1,
                "FailureAction": "pause",
                "Monitor": 5000000000,
                "MaxFailureRatio": 0,
                "Order": "stop-first"
            },
            "RollbackConfig": {
                "Parallelism": 1,
                "FailureAction": "pause",
                "Monitor": 5000000000,
                "MaxFailureRatio": 0,
                "Order": "stop-first"
            },
            "EndpointSpec": {
                "Mode": "vip"
            }
        },
        "Endpoint": {
            "Spec": {}
        }
    }
]
```

6. Scaling and Updating Swarm Services

6.1. Scale the Nginx service out or in. After scaling to 3 replicas, each node runs one Nginx container:

```shell
[root@manager ~]# docker service scale nginx-web=3
nginx-web scaled to 3
overall progress: 3 out of 3 tasks
1/3: running   [==================================================>]
2/3: running   [==================================================>]
3/3: running   [==================================================>]
verify: Service converged
[root@manager ~]# docker service ls
ID             NAME        MODE         REPLICAS   IMAGE          PORTS
st3o1iyv7whm   nginx-web   replicated   3/3        nginx:latest
[root@manager ~]# docker service ps nginx-web
ID             NAME          IMAGE          NODE      DESIRED STATE   CURRENT STATE            ERROR     PORTS
ixh4h670h4ws   nginx-web.1   nginx:latest   node1     Running         Running 38 minutes ago
l0dobbkogr1l   nginx-web.2   nginx:latest   manager   Running         Running 57 seconds ago
oyh7urhu955q   nginx-web.3   nginx:latest   node2     Running         Running 57 seconds ago
```
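`docker service scale` waits interactively until the service converges. A script may want an explicit check instead. The polling sketch below counts the tasks whose CurrentState starts with "Running". The function name, the default of 30 tries, and the 2-second interval are arbitrary choices. `DOCKER` can point at a stub for testing.

```shell
#!/usr/bin/env bash
# Sketch: poll until <want> tasks of <service> are running.
DOCKER=${DOCKER:-docker}

wait_for_running() {    # usage: wait_for_running <service> <want> [tries]
  local svc="$1" want="$2" tries="${3:-30}" n
  while [ "$tries" -gt 0 ]; do
    # grep -c prints 0 (and exits 1) when nothing matches; || true keeps the 0.
    n=$("$DOCKER" service ps "$svc" --filter desired-state=running \
          --format '{{.CurrentState}}' | grep -c '^Running' || true)
    [ "$n" -eq "$want" ] && return 0
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] && sleep 2
  done
  return 1
}
```

Usage: `docker service scale -d nginx-web=3 && wait_for_running nginx-web 3`.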

6.2. Rolling update of the service. Switching the image to tomcat only demonstrates an image change; the service keeps the name nginx-web.

```shell
[root@manager ~]# docker service update --image tomcat nginx-web
[root@manager ~]# docker service ps -f 'DESIRED-STATE=running' nginx-web
ID             NAME          IMAGE           NODE      DESIRED STATE   CURRENT STATE           ERROR     PORTS
o9f4petj2bag   nginx-web.1   tomcat:latest   node1     Running         Running 9 minutes ago
xyotaj1ifj5b   nginx-web.2   tomcat:latest   manager   Running         Running 6 minutes ago
i92vie0nkps8   nginx-web.3   tomcat:latest   node2     Running         Running 4 minutes ago
```

6.3. Manual rollback

```shell
[root@manager ~]# docker service update --rollback nginx-web
[root@manager ~]# docker service ps -f 'DESIRED-STATE=running' nginx-web
ID             NAME          IMAGE          NODE      DESIRED STATE   CURRENT STATE           ERROR     PORTS
worhbabalj5c   nginx-web.1   nginx:latest   node1     Running         Running 2 minutes ago
ldgv6sagqa8u   nginx-web.2   nginx:latest   manager   Running         Running 2 minutes ago
qq7j0f2rks3i   nginx-web.3   nginx:latest   node2     Running         Running 2 minutes ago
```
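The update above relies on the service's default update policy, which `docker service inspect --pretty` showed as parallelism 1, stop-first, and pause on failure. The sketch below sets these policies explicitly and lets Swarm roll back on its own if new tasks fail. The 10-second delay, the target image, and the function name are assumptions. `docker service rollback <service>` is an equivalent shorthand for the manual `--rollback`.

```shell
#!/usr/bin/env bash
# Sketch: a rolling update with explicit update policy and automatic rollback.
DOCKER=${DOCKER:-docker}

safe_update() {         # usage: safe_update <service> <image>
  # --update-parallelism 1     replace one task at a time
  # --update-delay 10s         wait between batches
  # --update-failure-action    roll back automatically if a new task fails
  # --update-order start-first start the new task before stopping the old one
  "$DOCKER" service update \
    --update-parallelism 1 \
    --update-delay 10s \
    --update-failure-action rollback \
    --update-order start-first \
    --image "$2" "$1"
}
```

Usage: `safe_update nginx-web nginx:1.25`.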

7. Switching Manager and Worker Roles

7.1. Check the node status before switching roles:

```shell
[root@manager ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
44bghdfflwgqhzywunoqfqgqf *   manager    Ready     Active         Leader           23.0.3
heavfkcv59ed5bkikbxot7mus     node1      Ready     Active                          23.0.3
k9a71k4kzhl9nw53ijo6lx4y5     node2      Ready     Active                          23.0.3
```

7.2. Switch roles: promote node1 to manager, then stop the Docker engine on the current manager:

```shell
# Promote node1 to manager
[root@manager ~]# docker node promote node1
Node node1 promoted to a manager in the swarm.
# Stop Docker on manager (docker.service requires docker.socket, so it stops as well)
[root@manager ~]# systemctl stop docker.socket
# Check the node status from node1
[root@node1 ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
44bghdfflwgqhzywunoqfqgqf     manager    Ready     Active         Unreachable      23.0.3
heavfkcv59ed5bkikbxot7mus *   node1      Ready     Active         Leader           23.0.3
k9a71k4kzhl9nw53ijo6lx4y5     node2      Ready     Active                          23.0.3
```
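Whether a swarm survives the loss of a manager follows from Raft's majority rule. With n managers, floor(n/2)+1 of them must be available. Two managers therefore tolerate no failure, and three tolerate one, which is why an odd number of managers (3 or 5) is the usual production choice. A small sketch of the arithmetic follows (function names hypothetical).

```shell
#!/usr/bin/env bash
# Sketch: Raft quorum arithmetic for swarm managers.
# quorum    = floor(n/2) + 1  managers that must stay reachable
# tolerance = n - quorum = floor((n-1)/2) managers that may fail
raft_quorum()    { echo $(( $1 / 2 + 1 )); }
raft_tolerance() { echo $(( ($1 - 1) / 2 )); }
```

Against a live cluster: `raft_tolerance "$(docker node ls --filter role=manager -q | wc -l)"`.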

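8. Swarm Data Management: Volumes

- Docker Swarm offers several ways to manage data. Volumes are a common choice and are relatively simple to configure. A volume is created on the host, by default under /var/lib/docker/volumes/your_custom_volume/_data.

- A directory inside the container, such as the website data directory, is mapped to the volume on the host. The data therefore remains in the host's volume even if the container dies.

```shell
# Create an Nginx service with a volume mount
[root@node1 ~]# docker service create --replicas 1 --mount type=volume,src=nginx_data,dst=/usr/share/nginx/html --name nginx-www nginx:latest
# Find out which node runs the nginx-www task; the volume is created on that node
[root@node1 ~]# docker service ps nginx-www
# Inspect the volume on that node (here: manager)
[root@manager ~]# ls /var/lib/docker/volumes/
backingFsBlockDev  metadata.db  nginx_data
[root@manager ~]# ls -l /var/lib/docker/volumes/nginx_data/_data/
total 8
-rw-r--r-- 1 root root 497 Dec 28  2021 50x.html
-rw-r--r-- 1 root root 615 Dec 28  2021 index.html
```

- The nginx data directory inside the container is now mounted on the host's nginx_data volume.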
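A local volume lives on whichever node runs the task. To inspect it, first find that node, then look up the volume's mount point there. The sketch below uses Go-template `--format` output for both steps. The function names are hypothetical, and `DOCKER` can point at a stub.

```shell
#!/usr/bin/env bash
# Sketch: locate a service's tasks and a local volume's host directory.
DOCKER=${DOCKER:-docker}

# Nodes currently running tasks of <service>, one per line, de-duplicated.
service_nodes() {       # usage: service_nodes <service>
  "$DOCKER" service ps "$1" --filter desired-state=running --format '{{.Node}}' | sort -u
}

# Host directory behind a local volume (run this on a node printed above).
volume_path() {         # usage: volume_path <volume>
  "$DOCKER" volume inspect --format '{{ .Mountpoint }}' "$1"
}
```

Usage: `service_nodes nginx-www` on a manager, then `ls "$(volume_path nginx_data)"` on the node it prints.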

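9. Swarm Data Management: Bind Mounts

- How bind mounts work: a directory on the host is mapped into the Docker container. This suits websites well. If that host directory is also a Git working copy, every code update shows up in the container immediately.

- Create the website directory on manager, node1, and node2. The bind-mount source must exist on every node where the task can be scheduled; otherwise the task fails to start.

```shell
mkdir -p /data/webapps/www/
```

- Create the service:

```shell
[root@node1 ~]# docker service create --replicas 1 --mount type=bind,src=/data/webapps/www,dst=/usr/share/nginx/html --name nginx-v1 nginx:latest
```

- Inspect the new nginx-v1 service:

```shell
docker service ps nginx-v1
docker service inspect nginx-v1
```

- Verify that the host directory is mapped into the container:

```shell
[root@node1 ~]# ls /data/webapps/www/
[root@node1 ~]# echo www.sxy.com Test Pages >>/data/webapps/www/index.html
```

- Enter the container and check its content (on the node that runs the task):

```shell
[root@node1 ~]# docker exec -it nginx-v1.1.eq7qn5670ui64wab6u87mx5x1 /bin/bash
root@6df12eda8415:/# cat /usr/share/nginx/html/index.html
www.sxy.com Test Pages
```

- The index.html created on the host is visible inside the container.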
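Task container names include a generated task ID (for example `nginx-v1.1.eq7qn5670ui64wab6u87mx5x1`), so typing them by hand is error-prone. Swarm labels each task container with its service name, and `docker ps` can filter on that label. A sketch follows (function name hypothetical). Run it on the node that hosts the task.

```shell
#!/usr/bin/env bash
# Sketch: find the local container that runs a task of <service>.
DOCKER=${DOCKER:-docker}

local_task_container() {  # usage: local_task_container <service>
  # Swarm sets the com.docker.swarm.service.name label on every task container.
  "$DOCKER" ps -q --filter "label=com.docker.swarm.service.name=$1" | head -n 1
}
```

Usage: `docker exec -it "$(local_task_container nginx-v1)" cat /usr/share/nginx/html/index.html`.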

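10. Swarm Data Management: NFS

- Both approaches above share data on a single Docker host only. They no longer work in a cluster, where a task may run on any node, so shared or network storage is required. NFS is used for this test.

10.1. Install the NFS server (on manager)

```shell
[root@manager ~]# yum -y install nfs-utils.x86_64 rpcbind
```

10.2. Configure the shared directory and its permissions

```shell
[root@manager ~]# vim /etc/exports
# Export /data read-write to the cluster subnet. no_root_squash lets root on every
# client act as root on /data: acceptable in a lab, risky in production.
/data/ 192.168.2.0/24(rw,sync,insecure,anonuid=1000,anongid=1000,no_root_squash)
```

10.3. Start the NFS service

```shell
[root@manager ~]# systemctl enable rpcbind
[root@manager ~]# systemctl start rpcbind
[root@manager ~]# systemctl enable nfs-server
[root@manager ~]# systemctl start nfs-server
[root@manager ~]# exportfs -rv
```

10.4. Install the NFS client on all nodes

```shell
yum -y install nfs-utils.x86_64
```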

10.5. Create the NFS-backed volume volume_test. The local volume driver creates the volume only on the node where the command runs, so repeat this on every node that may run the service's tasks (manager, node1, node2):

```shell
[root@node1 ~]# docker volume create --driver local --opt type=nfs --opt o=addr=192.168.2.10,rw --opt device=:/data volume_test
volume_test
```

10.6. Create the Nginx service with the volume_test NFS volume:

```shell
[root@node1 ~]# docker service create --mount type=volume,source=volume_test,destination=/usr/share/nginx/html/ --replicas 3 --name nginx-test --publish 88:80 nginx:latest
zklzy25i5acr3srdcegi0b61e
overall progress: 3 out of 3 tasks
1/3: running   [==================================================>]
2/3: running   [==================================================>]
3/3: running   [==================================================>]
verify: Service converged
```
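Instead of creating the volume by hand on every node, you can put the NFS options into `--mount`, so each node creates an identical NFS-backed volume on demand. Without this, a task that mounts a named volume missing on its node gets an empty local volume of that name. This follows the pattern in Docker's volume documentation. The service name `nginx-nfs`, volume name `nfs_html`, port 89, and the function name are hypothetical. The inner double quotes are required because the `o=` value itself contains a comma.

```shell
#!/usr/bin/env bash
# Sketch: let every node create the NFS volume itself via --mount volume options.
DOCKER=${DOCKER:-docker}

create_nfs_service() {    # usage: create_nfs_service <nfs-server> <export-path>
  local server="$1" export_path="$2"
  # The --mount value is parsed as CSV, so the o=... option is wrapped in
  # literal double quotes because "addr=...,rw" contains a comma.
  "$DOCKER" service create --name nginx-nfs --replicas 3 --publish 89:80 \
    --mount "type=volume,source=nfs_html,destination=/usr/share/nginx/html,volume-driver=local,volume-opt=type=nfs,volume-opt=device=:${export_path},\"volume-opt=o=addr=${server},rw\"" \
    nginx:latest
}
```

Usage: `create_nfs_service 192.168.2.10 /data`.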

10.7. The NFS export is now mounted into the service's containers. Enter a container to inspect and create content:

```shell
# See which nodes run the nginx-test tasks
[root@node1 ~]# docker service ps nginx-test
ID             NAME           IMAGE          NODE      DESIRED STATE   CURRENT STATE           ERROR     PORTS
m8mspt13wmfi   nginx-test.1   nginx:latest   node1     Running         Running 8 minutes ago
kfgvwn8j0vtk   nginx-test.2   nginx:latest   manager   Running         Running 8 minutes ago
kgpy17zjz865   nginx-test.3   nginx:latest   node2     Running         Running 8 minutes ago
# docker exec only works on the node that hosts the container; nginx-test.1 runs on node1
[root@node1 ~]# docker exec -it nginx-test.1.qv3g2fm8f73byv7mtcmn1zq55 /bin/bash
root@a38779c38ccc:/# cd /usr/share/nginx/html/
root@a38779c38ccc:/usr/share/nginx/html# ls
50x.html  index.html
root@a38779c38ccc:/usr/share/nginx/html# echo "wo shi ys" >> index.htm
root@a38779c38ccc:/usr/share/nginx/html# ls
50x.html  index.htm  index.html
```

10.8. After creating content in the Nginx container, exit it, log in to the NFS server host, change to /data/, and check the content:

```shell
[root@manager ~]# cd /data/
[root@manager data]# ls
50x.html  index.htm  index.html
[root@manager data]# cat index.htm
wo shi ys
```

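11. Adding a Node to Docker Swarm

- As the business grows quickly, a production swarm eventually needs more nodes. An operations engineer can scale it out as follows:

- Add a new server with IP address 192.168.2.40.

11.1. Add the node3 entry to /etc/hosts on every server:

```shell
vim /etc/hosts
192.168.2.10 manager
192.168.2.20 node1
192.168.2.30 node2
192.168.2.40 node3
```

Note: node3 needs all of the entries above; the existing hosts only need the node3 line.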

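11.2. Configure node3:

```shell
# Disable SELinux: setenforce 0 applies now; editing /etc/selinux/config (the target of
# the /etc/sysconfig/selinux symlink) keeps it disabled after a reboot
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
setenforce 0
# Stop the firewall and keep it from starting at boot
systemctl stop firewalld.service
systemctl disable firewalld.service
# Synchronize the time
yum install ntpdate -y
ntpdate pool.ntp.org
# Set the hostname persistently and open a new shell
hostnamectl set-hostname node3;su
# Disable swap now...
swapoff -a
# ...and comment out the swap entries in /etc/fstab so it stays off after a reboot
sed -ri 's/.*swap.*/#&/g' /etc/fstab
```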

11.3. Deploy the Docker engine on the new node3

```shell
# Install dependency packages
yum -y install yum-utils device-mapper-persistent-data lvm2
# Configure the Docker CE repository (Aliyun mirror)
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install Docker
yum -y install docker-ce
```

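```shell
# Edit the Docker daemon configuration file
[root@node3 ~]# mkdir -p /etc/docker
[root@node3 ~]# vim /etc/docker/daemon.json
```

Add the following content:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": ["https://uyah70su.mirror.aliyuncs.com"]
}
```

The overlay2.override_kernel_check storage option is left out. It has been deprecated since Docker 19.03 and was removed in later releases.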

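Note: pulling images from inside mainland China is slow, so the configuration ends with registry-mirrors. The address above is an account-specific Aliyun accelerator, so substitute your own.

```shell
mkdir -p /etc/systemd/system/docker.service.d
# Optional: also expose the unauthenticated Docker API on TCP port 2375. Swarm does not
# need it (cluster management uses port 2377); only enable it on a trusted network.
sed -i '/^ExecStart/s/dockerd/dockerd -H tcp:\/\/0.0.0.0:2375/g' /usr/lib/systemd/system/docker.service
```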

```shell
# Restart Docker
[root@node3 ~]# systemctl daemon-reload
[root@node3 ~]# systemctl enable docker
[root@node3 ~]# systemctl restart docker
# Confirm that the daemon is running
ps -ef|grep -aiE docker
```

11.4. On a manager (here node1, the current leader), print the command that joins the swarm as a manager. To add the node as a worker instead, use docker swarm join-token worker.

```shell
[root@node1 ~]# docker swarm join-token manager
To add a manager to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-1ll2gomc2khm5b5yjah2mmr8llpqn7jlb68rb7kudfbtpj0coo-anqgw5qli4j1n6o3c9rmgylgp 192.168.2.20:2377
```

11.5. Copy the command and run it on the new node3:

```shell
[root@node3 ~]# docker swarm join --token SWMTKN-1-1ll2gomc2khm5b5yjah2mmr8llpqn7jlb68rb7kudfbtpj0coo-anqgw5qli4j1n6o3c9rmgylgp 192.168.2.20:2377
```

11.6. Check that the node joined the cluster:

```shell
[root@node1 ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
44bghdfflwgqhzywunoqfqgqf     manager    Ready     Active         Reachable        23.0.3
heavfkcv59ed5bkikbxot7mus *   node1      Ready     Active         Leader           23.0.3
k9a71k4kzhl9nw53ijo6lx4y5     node2      Ready     Active                          23.0.3
s2tjb3vmazfk2cj5oy8fj90ca     node3      Ready     Active         Reachable        23.0.3
```

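- The MANAGER STATUS column shows whether a node takes part in Raft consensus. An empty value means the node is a worker.

  - Leader: the primary manager node, which makes all swarm management and orchestration decisions.

  - Reachable: a manager node that participates in Raft consensus. If the leader becomes unavailable, this node is eligible to be elected as the new leader.

  - Unavailable (displayed as Unreachable by docker node ls): a manager that cannot communicate with the other managers. If a manager becomes unavailable, join a new manager node to the swarm or promote a worker node to manager.

- The AVAILABILITY column shows whether the scheduler can assign tasks to the node:

  - Active: the scheduler can assign tasks to the node.

  - Pause: the scheduler does not assign new tasks to the node, but existing tasks keep running.

  - Drain: the scheduler does not assign new tasks to the node. It shuts down all existing tasks there and reschedules them on available nodes.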

12. Removing a Node from Docker Swarm

- Over time, servers in a production swarm go out of warranty and are retired, and their swarm nodes must then be removed. An operations engineer can do this as follows:

12.1. Drain node3. Its containers are rescheduled onto the other nodes:

```shell
[root@node1 ~]# docker node update --availability drain node3
```

12.2. Stop the Docker service on node3. The node's Docker service must be stopped before the node can be removed:

```shell
[root@node3 ~]# systemctl stop docker.socket
```

12.3. On a manager, demote node3 to a worker and then remove it. Only worker-level nodes can be removed.

```shell
[root@node1 ~]# docker node demote node3
Manager node3 demoted in the swarm.
[root@node1 ~]# docker node rm node3
node3
[root@node1 ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
44bghdfflwgqhzywunoqfqgqf     manager    Ready     Active         Reachable        23.0.3
heavfkcv59ed5bkikbxot7mus *   node1      Ready     Active         Leader           23.0.3
k9a71k4kzhl9nw53ijo6lx4y5     node2      Ready     Active                          23.0.3
```
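`docker node rm` refuses a node that the managers still see as up unless `--force` is given, and a manager must be demoted before removal. After Docker stops on the node, its status can take a few seconds to change to Down. The sketch below waits for that before removing the node. The retry count, interval, and function name are arbitrary, and `DOCKER` can point at a stub. Alternatively, running `docker swarm leave` on the node itself (after demotion) marks it Down right away.

```shell
#!/usr/bin/env bash
# Sketch: remove <node> once the managers report its state as "down".
DOCKER=${DOCKER:-docker}

remove_when_down() {    # usage: remove_when_down <node> [tries]
  local node="$1" tries="${2:-30}"
  until [ "$("$DOCKER" node inspect --format '{{.Status.State}}' "$node")" = "down" ]; do
    tries=$((tries - 1))
    [ "$tries" -le 0 ] && return 1   # give up; the node still looks up
    sleep 2
  done
  "$DOCKER" node rm "$node"
}
```

Usage on a manager, after demoting node3 and stopping its Docker service: `remove_when_down node3`.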