Elasticsearch Basics Tutorial

1.Concepts

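Elasticsearch is a highly scalable, open-source full-text search and analytics engine. It lets you store, search, and analyze large volumes of data quickly and in near real time.

What can ES do?

Full-text search (across all fields), fuzzy queries (search), and data analysis (through an analysis syntax such as aggregations).

Full-text search: searches all fields rather than a single field.

Fuzzy queries: can also query across all fields.

Reference: https://www.elastic.co/guide/en/elasticsearch/reference/6.0/getting-started.html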

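2.Use cases:

1.Search engines

2.ELK: collecting and analyzing logs

3.Suppose you run a price alerting platform that lets price-savvy customers specify a rule such as "I am interested in buying a specific electronic gadget, and I want to be notified if the price of the gadget falls below $X from any vendor within the next month". In this case you can scrape vendor prices, push them into Elasticsearch, and use its reverse-search (Percolator) capability to match price movements against customer queries, finally pushing alerts out to customers once matches are found.

4.BI analytics: quickly investigating, analyzing, visualizing, and asking ad hoc questions of large amounts of data (think millions or billions of records).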

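3.Basic concepts

1.NRT

Elasticsearch is a near-real-time (NRT) search platform: there is a slight latency (normally one second) between indexing a document and the moment it becomes searchable.

2.Cluster

A cluster is identified by a unique name, "elasticsearch" by default, and nodes use that name to discover and join the cluster. Give each environment its own name, for example logging-dev, logging-stage, and logging-prod for the development, staging, and production clusters. A cluster is made up of nodes.

3.Node

A node is identified by its name, which by default is a random universally unique identifier (UUID) assigned at startup; you can also set a custom name.

4.Index

Roughly comparable to a database. Index names must be all lowercase, and there is no limit on the number of indices.

5.Type

Deprecated in 6.0.0.

6.Document

A document is the basic unit of information that can be indexed. It is expressed in JSON (JavaScript Object Notation). Within an index, a document must be assigned to a type.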

7.Shards & Replicas

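Shards:

1.Solve the problem of distributed storage;

2.Let searches over large amounts of data run in parallel across shards, increasing throughput;

3.Allow the cluster to scale out and in horizontally.

4.How shards are distributed, and how their documents are combined into search results, is transparent to (hidden from) the user.

Replicas:

1.Provide high availability when a shard or node fails; for this reason a replica shard is never allocated on the same node as its primary shard;

2.Searches can execute on replicas in parallel, increasing throughput.

Notes:

1.You can define the number of shards and replicas when you create an index. You can change the number of replicas at any time, but not the number of primary shards;

2.By default (in 6.x) each index has 5 primary shards and 1 replica, 10 shards in total, so at least two nodes are needed to allocate all of them;

3.Each Elasticsearch shard is a Lucene index, and a single Lucene index can hold at most Integer.MAX_VALUE - 128 documents (see LUCENE-5843);

4.Use the _cat/shards API to monitor shard sizes.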
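The shard arithmetic in the notes above, and the reason the number of primary shards is fixed, can be sketched in Python. This is a minimal illustration, not Elasticsearch code: in 6.x a document is routed with shard = hash(_routing) % number_of_primary_shards, where _routing defaults to the document ID and the real hash is Murmur3. The hypothetical pick_shard helper substitutes zlib.crc32 purely to stay dependency-free, so its shard numbers will not match a real cluster.

```python
import zlib


def total_shard_copies(primaries: int, replicas: int) -> int:
    """Every primary shard has `replicas` additional copies."""
    return primaries * (1 + replicas)


def min_nodes_for_green(replicas: int) -> int:
    """A replica is never allocated on the same node as its primary,
    so each copy of a shard needs a separate node."""
    return 1 + replicas


def pick_shard(routing: str, primaries: int) -> int:
    """Illustrative routing: hash(_routing) % number_of_primary_shards.

    Assumption: zlib.crc32 stands in for the Murmur3 hash that
    Elasticsearch actually uses.
    """
    return zlib.crc32(routing.encode("utf-8")) % primaries


if __name__ == "__main__":
    print(total_shard_copies(5, 1))  # 10 with the 6.x defaults
    print(min_nodes_for_green(1))    # 2
    # The same ID can land on a different shard once the primary count
    # changes, so existing documents could no longer be found by routing.
    for doc_id in ["1", "2", "3"]:
        print(doc_id, pick_shard(doc_id, 5), pick_shard(doc_id, 6))
```

This is why the replica count is a live setting while the primary count is fixed at index creation: replicas only add copies, whereas primaries are part of the routing formula.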

4.Installation and configuration

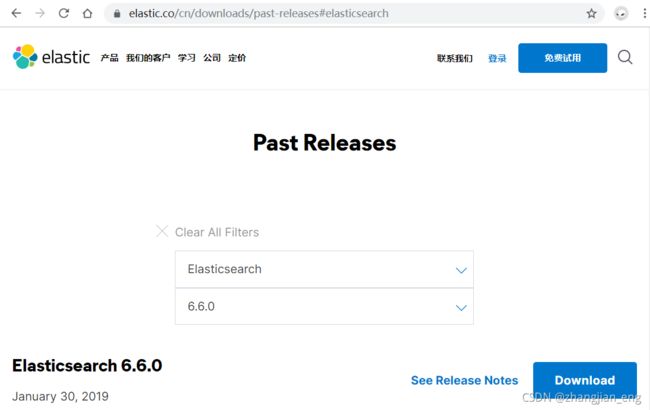

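1.Elasticsearch requires Java 8 or later. Download it from [www.elastic.co/downloads].

2.Every setting in config/elasticsearch.yml is commented out by default, which is only good enough for a learning environment. In production you must set path.data, path.logs, network.host, and so on. For example:

# Cluster name, defaults to elasticsearch; nodes with the same cluster name discover each other

cluster.name: my-application

# Node name

node.name: node-104

# Data directory; don't use the default

path.data: /opt/module/elasticsearch/data

# Log directory; don't use the default

path.logs: /opt/module/elasticsearch/logs

# Memory lock: when true, the process memory is locked so it is never swapped out, even when memory runs low

bootstrap.memory_lock: true

# Do not install the system call filters (seccomp); typically needed on kernels without seccomp support, such as CentOS 6, where the bootstrap check would otherwise fail

bootstrap.system_call_filter: false

# Host or IP address exposed to other nodes and clients

network.host: hadoop104

# Seed hosts used for discovery

discovery.zen.ping.unicast.hosts: ["192.168.253.102"]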

3.The default configuration file

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

#cluster.name: my-application

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

#node.name: node-1

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

#path.data: /path/to/data

#

# Path to log files:

#

#path.logs: /path/to/logs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

#network.host: 192.168.0.1

#

# Set a custom port for HTTP:

#

#http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when new node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.zen.ping.unicast.hosts: ["host1", "host2"]

#

# Prevent the "split brain" by configuring the majority of nodes (total number of master-eligible nodes / 2 + 1):

#

#discovery.zen.minimum_master_nodes:

#

# For more information, consult the zen discovery module documentation.

#

# ---------------------------------- Gateway -----------------------------------

#

# Block initial recovery after a full cluster restart until N nodes are started:

#

#gateway.recover_after_nodes: 3

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

#

# Require explicit names when deleting indices:

#

#action.destructive_requires_name: true

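4.Installation steps

# Download (wget or an offline copy also works)

curl -L -O https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.1.tar.gz

# Extract

tar -xvf elasticsearch-6.0.1.tar.gz

# Change into the bin directory

cd elasticsearch-6.0.1/bin

# Start in the foreground

./elasticsearch

# Start in the background (as a daemon)

./elasticsearch -d

# Start in the background and write the process ID to a file named pid

./elasticsearch -p pid -d

# Start with a specific cluster name and node name

./elasticsearch -Ecluster.name=my_cluster_name -Enode.name=my_node_name

# Stop the node: find its PID, then send SIGTERM

jps | grep Elasticsearch

kill -SIGTERM 15516

# Or, if it was started with -p pid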

kill `cat pid`

5.Check that the node is running

curl -X GET "localhost:9200/?pretty"

# Kibana

GET /

Result:

{

"name" : "Cp8oag6",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "AT69_T_DTp-1qgIJlatQqA",

"version" : {

"number" : "6.0.1",

"build_hash" : "f27399d",

"build_date" : "2016-03-30T09:51:41.449Z",

"build_snapshot" : false,

"lucene_version" : "7.0.1",

"minimum_wire_compatibility_version" : "1.2.3",

"minimum_index_compatibility_version" : "1.2.3"

},

"tagline" : "You Know, for Search"

}

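6.Configuration

Elasticsearch has three configuration files:

- elasticsearch.yml for configuring Elasticsearch
- jvm.options for configuring the Elasticsearch JVM settings
- log4j2.properties for configuring Elasticsearch logging

In production, you must configure the following:

- path.data and path.logs
- cluster.name
- node.name
- bootstrap.memory_lock
- network.host
- discovery.zen.ping.unicast.hosts
- discovery.zen.minimum_master_nodes
- JVM heap dump path

path.data can be set to multiple paths, in which case all of them are used to store data (although all files belonging to a single shard are stored on the same data path).

bootstrap.memory_lock: it is vital to the health of a node that none of the JVM is ever swapped out to disk. One way to achieve this is to set bootstrap.memory_lock to true.

As soon as you set a custom network.host, Elasticsearch assumes that you are moving from development mode to production mode, and upgrades a number of system startup checks from warnings to exceptions.

discovery.zen.ping.unicast.hosts: to form a cluster with nodes on other servers, you must provide a seed list of other nodes in the cluster that are likely to be live and contactable.

JVM heap dump path: in jvm.options, uncomment #-XX:HeapDumpPath=/heap/dump/path and point it at a specific path.

discovery.zen.minimum_master_nodes: the minimum number of master-eligible nodes a node must see to form a cluster. To avoid split brain, it must be greater than half the number of master-eligible nodes, i.e. (master_eligible_nodes / 2) + 1.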
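The quorum rule for discovery.zen.minimum_master_nodes is plain integer arithmetic. Here is a small Python sketch (the function name is hypothetical) that computes the value to put in elasticsearch.yml for a given number of master-eligible nodes.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Quorum of master-eligible nodes: (master_eligible // 2) + 1."""
    if master_eligible < 1:
        raise ValueError("a cluster needs at least one master-eligible node")
    return master_eligible // 2 + 1


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"{n} master-eligible -> discovery.zen.minimum_master_nodes: {minimum_master_nodes(n)}")
```

Note the two-node case: the quorum is 2, so losing either node blocks master election. That is why three master-eligible nodes are the usual minimum, since they tolerate the loss of one.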

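1.Cluster health

# Check cluster health

curl -X GET "hadoop102:9200/_cat/health?v&pretty"

# Kibana

GET /_cat/health?v

The health status is one of:

- Green: everything is good (the cluster is fully functional)
- Yellow: all data is available, but some replicas are not yet allocated (the cluster is fully functional)
- Red: some data is not available for whatever reason (the cluster is partially functional)

**Note:** When a cluster is red, it continues to serve search requests from the available shards, but you will likely need to fix it as soon as possible because there are unassigned shards.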

# List nodes

curl -X GET "hadoop102:9200/_cat/nodes?v&pretty"

# Kibana

GET /_cat/nodes?v

2.List all indices

# List indices

curl -X GET "hadoop102:9200/_cat/indices?v&pretty"

# Kibana

GET /_cat/indices?v
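With ?v, the _cat APIs return a plain-text table whose first line is a header. A minimal Python sketch of turning that table into dictionaries, using the _cat/indices output shown later in this tutorial as sample input. It assumes no column value contains spaces, and parse_cat is a hypothetical helper name.

```python
def parse_cat(text: str) -> list[dict[str, str]]:
    """Parse `_cat/...?v` output (header line + rows) into dicts.

    Assumes whitespace-separated columns whose values contain no spaces.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


SAMPLE = """\
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open accounts zTkxOW6dQB6RUJP_n-2hvg 5 1 1000 0 973kb 482.4kb
"""

if __name__ == "__main__":
    for row in parse_cat(SAMPLE):
        print(row["index"], row["health"], row["docs.count"])
```

The same parser works for _cat/health and _cat/nodes, since they share the header-plus-rows layout. For machine consumption, the _cat APIs also accept format=json, which avoids text parsing altogether.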

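3.Create an index

Create an index named customer:

PUT /customer?pretty

curl -X PUT "hadoop102:9200/customer?pretty"

GET /_cat/indices?v

curl -X GET "hadoop102:9200/_cat/indices?v&pretty"

# There is 1 index named customer with 5 primary shards and 1 replica (the defaults), containing 0 documents.

# Its health is yellow because some replicas have not been allocated yet: there is only one node.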
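The index above gets the default settings. As a sketch of setting them explicitly, the Python snippet below builds (but does not send) a PUT request whose body uses the index settings number_of_shards and number_of_replicas. It uses only the standard library's urllib.request. The host hadoop102:9200 is the one assumed throughout this tutorial, and create_index_request is a hypothetical helper.

```python
import json
import urllib.request


def create_index_request(host: str, index: str, shards: int = 5,
                         replicas: int = 1) -> urllib.request.Request:
    """Build a PUT /<index> request with explicit shard and replica counts."""
    if index != index.lower():
        raise ValueError("index names must be lowercase")
    body = {"settings": {"number_of_shards": shards, "number_of_replicas": replicas}}
    return urllib.request.Request(
        url=f"http://{host}/{index}?pretty",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = create_index_request("hadoop102:9200", "customer", shards=5, replicas=0)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it (requires a running node):
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode("utf-8"))
```

On a single-node test machine, number_of_replicas set to 0 keeps the new index green, because there is no second node to hold replicas.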

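4.Index and query a document

If the index does not exist, it is created automatically.

If you do not specify an ID, Elasticsearch generates one for the document; in that case use POST instead of PUT (for example POST /customer/doc).

curl -X PUT "hadoop102:9200/customer/doc/1?pretty" -H 'Content-Type: application/json' -d'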

{

"name": "John Doe"

}

'

# Create it in Kibana

PUT /customer/doc/1?pretty

{

"name": "John Doe"

}

response:

{

"_index" : "customer",

"_type" : "doc",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 1,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

Retrieve the document:

curl -X GET "hadoop102:9200/customer/doc/1?pretty"

# Kibana

GET /customer/doc/1?pretty

# Result:

{

"_index" : "customer",

"_type" : "doc",

"_id" : "1",

"_version" : 1,

"found" : true,

"_source" : { "name": "John Doe" }

}

5.Delete an index

curl -X DELETE "hadoop102:9200/customer?pretty"

curl -X GET "hadoop102:9200/_cat/indices?v&pretty"

# Kibana

DELETE /customer?pretty

GET /_cat/indices?v

6.Summary:

The pattern for accessing data through the REST API (as used in Kibana):

<REST Verb> /<Index>/<Type>/<ID>

PUT /customer

PUT /customer/doc/1

{ "name" : "John Doe" }

GET /customer/doc/1

DELETE /customer
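The <REST Verb> /<Index>/<Type>/<ID> pattern can be expressed as a tiny helper. This is a hypothetical sketch that also encodes the rule from the indexing section: a document without an ID is indexed with POST so that Elasticsearch can generate the ID.

```python
from typing import Optional


def rest_path(index: str, doc_type: Optional[str] = None,
              doc_id: Optional[object] = None) -> str:
    """Build /<Index>[/<Type>[/<ID>]]."""
    if doc_id is not None and doc_type is None:
        raise ValueError("a document ID needs a type in 6.x paths")
    parts = [index] + [str(p) for p in (doc_type, doc_id) if p is not None]
    return "/" + "/".join(parts)


def index_request_line(index: str, doc_type: str, doc_id: Optional[object] = None) -> str:
    """PUT with an explicit ID, POST to let Elasticsearch generate one."""
    verb = "PUT" if doc_id is not None else "POST"
    return f"{verb} {rest_path(index, doc_type, doc_id)}"


if __name__ == "__main__":
    print("PUT " + rest_path("customer"))
    print(index_request_line("customer", "doc", 1))
    print(index_request_line("customer", "doc"))
    print("GET " + rest_path("customer", "doc", 1))
    print("DELETE " + rest_path("customer"))
```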

7.Replace a document (reindexing)

Indexing a document again with the same ID replaces the existing document:

curl -X PUT "hadoop102:9200/customer/doc/1?pretty" -H 'Content-Type: application/json' -d'

{

"name": "Jane Doe"

}

'

# Kibana

PUT /customer/doc/1?pretty

{

"name": "Jane Doe"

}

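8.Update a document

Elasticsearch does not actually update a document in place: it deletes the old document and indexes a new one. The partial document passed in doc is merged into the existing fields.

curl -X POST "hadoop102:9200/customer/doc/1/_update?pretty" -H 'Content-Type: application/json' -d'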

{

"doc": { "name": "Jane Doe" }

}

'

# Kibana

POST /customer/doc/1/_update?pretty

{

"doc": { "name": "Jane Doe" }

}

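You can also use a script, here one that adds 5 to age (it assumes the document already has a numeric age field). This updates a single document; to update every document that matches a condition, like a SQL UPDATE-WHERE statement, use the _update_by_query API.

curl -X POST "hadoop102:9200/customer/doc/1/_update?pretty" -H 'Content-Type: application/json' -d'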

{

"script" : "ctx._source.age += 5"

}

'

# Kibana

POST /customer/doc/1/_update?pretty

{

"script" : "ctx._source.age += 5"

}
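To see what the two update styles do to _source, here is a local Python model. It is a sketch under stated assumptions, not how Elasticsearch is implemented: merge_partial mimics the doc form (fields in the partial document are merged into the existing source, nested objects recursively), and add_to_field mimics the ctx._source.age += 5 script. Both function names and the age value are hypothetical.

```python
import copy


def merge_partial(source: dict, partial: dict) -> dict:
    """Model of an update with "doc": merge `partial` into a copy of `source`."""
    result = copy.deepcopy(source)
    for key, value in partial.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_partial(result[key], value)  # merge nested objects
        else:
            result[key] = copy.deepcopy(value)  # replace scalars and arrays
    return result


def add_to_field(source: dict, field: str, delta: int) -> dict:
    """Model of the script "ctx._source.<field> += delta"; in this model the field must exist."""
    if field not in source:
        raise KeyError(f"{field} is missing from the document")
    result = copy.deepcopy(source)
    result[field] += delta
    return result


if __name__ == "__main__":
    doc = {"name": "John Doe", "age": 20}  # hypothetical document
    print(merge_partial(doc, {"name": "Jane Doe"}))
    print(add_to_field(doc, "age", 5))
```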

9.Delete a document

To remove all documents, deleting the whole index is more efficient than deleting documents one by one.

curl -X DELETE "hadoop102:9200/customer/doc/2?pretty"

# Kibana

DELETE /customer/doc/2?pretty

10.Batch processing

1.Reduces the number of network round trips.

2.Note: the Bulk API does not fail as a whole when one of its actions fails (it is not transactional); it returns the status of each action.

3.Example: index two documents. Each action and each document must be on its own line, and the body must end with a newline:

curl -X POST "hadoop102:9200/customer/doc/_bulk?pretty" -H 'Content-Type: application/json' -d'
{"index":{"_id":"1"}}
{"name": "John Doe" }
{"index":{"_id":"2"}}
{"name": "Jane Doe" }
'

# Kibana

POST /customer/doc/_bulk?pretty
{"index":{"_id":"1"}}
{"name": "John Doe" }
{"index":{"_id":"2"}}
{"name": "Jane Doe" }

4.Example: update the first document and delete the second:

curl -X POST "hadoop102:9200/customer/doc/_bulk?pretty" -H 'Content-Type: application/json' -d'
{"update":{"_id":"1"}}
{"doc": { "name": "John Doe becomes Jane Doe" } }
{"delete":{"_id":"2"}}
'

# Kibana

POST /customer/doc/_bulk?pretty
{"update":{"_id":"1"}}
{"doc": { "name": "John Doe becomes Jane Doe" } }
{"delete":{"_id":"2"}}
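Because the bulk body is newline-delimited JSON, it is easy to get wrong by hand. Here is a minimal Python sketch (bulk_body is a hypothetical helper) that writes one JSON object per line and ends with the required trailing newline. Delete actions carry no source line.

```python
import json
from typing import Iterable, Optional, Tuple


def bulk_body(actions: Iterable[Tuple[dict, Optional[dict]]]) -> str:
    """Serialize (action_metadata, source) pairs as NDJSON for the _bulk API."""
    lines = []
    for meta, source in actions:
        lines.append(json.dumps(meta))
        if source is not None:  # delete actions have no source line
            lines.append(json.dumps(source))
    return "\n".join(lines) + "\n"  # the last line must also end with a newline


if __name__ == "__main__":
    body = bulk_body([
        ({"update": {"_id": "1"}}, {"doc": {"name": "John Doe becomes Jane Doe"}}),
        ({"delete": {"_id": "2"}}, None),
    ])
    print(body, end="")
```

If you save such a body to a file, send it with curl --data-binary "@file" rather than -d "@file": with -d, curl strips newlines from file contents, which breaks the NDJSON format.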

6.Testing with sample data

1.Prepare the customer bank account data (generated with www.json-generator.com/) and save it in the Elasticsearch data/ directory. A sample document:

{

"account_number":0,

"balance":16623,

"firstname":"Bradshaw",

"lastname":"Mckenzie",

"age":29,

"gender":"F",

"address":"244 Columbus Place",

"employer":"Euron",

"email":"bradshawmckenzie@euron.com",

"city":"Hobucken",

"state":"CO"

}

2.Import the data

# Import the data into the accounts index (type doc), which the rest of this tutorial queries

curl -H "Content-Type: application/json" -XPOST 'hadoop102:9200/accounts/doc/_bulk?pretty&refresh' --data-binary "@accounts.json"

# Check the data

curl 'localhost:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open accounts zTkxOW6dQB6RUJP_n-2hvg 5 1 1000 0 973kb 482.4kb

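7.Search API

There are two ways to search:

1.Send the parameters in the URL (REST request URI)

The q=* parameter tells Elasticsearch to match all documents in the index.

The sort=account_number:asc parameter sorts the results by each document's account_number field in ascending order.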

curl -X GET "hadoop102:9200/accounts/_search?q=*&sort=account_number:asc&pretty"

# Kibana

GET /accounts/_search?q=*&sort=account_number:asc&pretty

Response:

{

"took" : 19,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1000,

"max_score" : null,

"hits" : [

{

"_index" : "accounts",

"_type" : "doc",

"_id" : "0",

"_score" : null,

"_source" : {

"account_number" : 0,

"balance" : 16623,

"firstname" : "Bradshaw",

"lastname" : "Mckenzie",

"age" : 29,

"gender" : "F",

"address" : "244 Columbus Place",

"employer" : "Euron",

"email" : "bradshawmckenzie@euron.com",

"city" : "Hobucken",

"state" : "CO"

},

"sort" : [

0

]

}

.....

}

]

}

}

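- took: time in milliseconds for Elasticsearch to execute the search
- timed_out: whether the search timed out
- _shards: how many shards were searched, and how many of them succeeded or failed
- hits: the search results
- hits.total: total number of documents matching the search criteria
- hits.hits: the actual array of search results (defaults to the first 10 documents)
- hits.sort: the sort key of each result (missing when sorting by score)
- hits._score and max_score: ignore these fields for now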
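Pulling those fields out of a response is plain dictionary access. The Python sketch below uses a trimmed copy of the response above. Note that in 6.x hits.total is a number (7.x turns it into an object), and summarize_hits is a hypothetical helper.

```python
import json

# Trimmed copy of the sample response shown above.
RESPONSE = json.loads("""
{
  "took": 19,
  "timed_out": false,
  "_shards": {"total": 5, "successful": 5, "skipped": 0, "failed": 0},
  "hits": {
    "total": 1000,
    "max_score": null,
    "hits": [
      {"_index": "accounts", "_type": "doc", "_id": "0", "_score": null,
       "_source": {"account_number": 0, "balance": 16623, "firstname": "Bradshaw"},
       "sort": [0]}
    ]
  }
}
""")


def summarize_hits(response: dict) -> dict:
    hits = response["hits"]
    return {
        "took_ms": response["took"],
        "timed_out": response["timed_out"],
        "failed_shards": response["_shards"]["failed"],
        "total": hits["total"],
        "returned": len(hits["hits"]),
        "ids": [hit["_id"] for hit in hits["hits"]],
        # "sort" is absent when results are sorted by score
        "sort_keys": [hit.get("sort") for hit in hits["hits"]],
    }


if __name__ == "__main__":
    print(summarize_hits(RESPONSE))
```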

2.Send the query in the request body (recommended)

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "match_all": {} }, "sort": [ { "account_number": "asc" } ]}'

# Kibana

GET /accounts/_search

{ "query": { "match_all": {} }, "sort": [ { "account_number": "asc" } ]}

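Note: once the search results are returned, Elasticsearch is completely done with the request and does not maintain any kind of server-side resources or open cursors into the results. This is in stark contrast to many other platforms such as SQL, where you might initially get a partial subset of the query results up front and then have to keep going back to the server through some kind of stateful server-side cursor if you want to fetch (or page through) the rest.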

8.Introduction to the Query DSL

query: defines the query.

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_all": {} }

}

'

# Kibana

GET /accounts/_search

{

"query": { "match_all": {} }

}

size: the number of hits to return, which defaults to 10.

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_all": {} },

"size": 1

}

'

# Kibana

GET /accounts/_search

{

"query": { "match_all": {} },

"size": 1

}

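from: the offset of the first hit to return, which defaults to 0. This example returns hits 10 through 19 (counting from 0), 10 documents in total.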

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_all": {} },

"from": 10,

"size": 10

}

'

# Kibana

GET /accounts/_search

{

"query": { "match_all": {} },

"from": 10,

"size": 10

}
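The from and size parameters map directly onto page numbers. This sketch assumes 1-based pages (page_params is a hypothetical name). It also enforces the default index.max_result_window of 10000: Elasticsearch rejects requests whose from + size exceeds that limit, and deeper paging needs the scroll or search_after APIs instead.

```python
MAX_RESULT_WINDOW = 10_000  # default value of index.max_result_window


def page_params(page: int, per_page: int = 10) -> dict:
    """Return the from/size pair for a 1-based page number."""
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    start = (page - 1) * per_page
    if start + per_page > MAX_RESULT_WINDOW:
        raise ValueError("from + size exceeds index.max_result_window")
    return {"from": start, "size": per_page}


if __name__ == "__main__":
    body = {"query": {"match_all": {}}, **page_params(2)}
    print(body)  # same from/size as the example above
```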

sort: sorts by the balance field in descending order and returns the top 10 documents (the default size).

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "match_all": {} }, "sort": { "balance": { "order": "desc" } }}'

# Kibana

GET /accounts/_search

{ "query": { "match_all": {} }, "sort": { "balance": { "order": "desc" } }}

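9.Search DSL match

_source: returns only part of each document's source, here just the ["account_number", "balance"] fields. It limits which fields are returned, not which fields are searched.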

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "match_all": {} }, "_source": ["account_number", "balance"]}'

# Kibana

GET /accounts/_search

{"query": { "match_all": {} }, "_source": ["account_number", "balance"]}

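match query: searches specific fields; this example returns the account whose account_number is 20.

On analyzed text fields such as address, match is case-insensitive because the standard analyzer lowercases terms.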

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match": { "account_number": 20 } }

}

'

# Kibana

GET /accounts/_search

{

"query": { "match": { "account_number": 20 } }

}

match query: returns all accounts whose address contains the term mill.

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match": { "address": "mill" } }

}

'

# Kibana

GET /accounts/_search

{

"query": { "match": { "address": "mill" } }

}

This example is a variant of match (match_phrase) that returns all accounts whose address contains the phrase "mill lane":

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": { "match_phrase": { "address": "mill lane" } }

}

'

# Kibana

GET /accounts/_search

{

"query": { "match_phrase": { "address": "mill lane" } }

}

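bool query: combines several queries (here two match queries) with Boolean logic.

must: all clauses must be true.

This example combines two match queries and returns all accounts whose address contains both "mill" and "lane".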

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "bool": { "must": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}'

# Kibana

GET /accounts/_search

{ "query": { "bool": { "must": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}

bool should: returns accounts for which any of the match clauses is true, here accounts whose address contains "mill" or "lane".

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "bool": { "should": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}'

# Kibana

GET /accounts/_search

{ "query": { "bool": { "should": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}

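bool must_not: returns accounts for which all of the clauses are false, here accounts whose address contains neither "mill" nor "lane".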

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'{ "query": { "bool": { "must_not": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}'

# Kibana

GET /accounts/_search

{ "query": { "bool": { "must_not": [ { "match": { "address": "mill" } }, { "match": { "address": "lane" } } ] } }}

A more complex combination:

This example returns all accounts where age = 40 but state <> ID:

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"query": {

"bool": {

"must": [

{ "match": { "age": "40" } }

],

"must_not": [

{ "match": { "state": "ID" } }

]

}

}

}

'

# Kibana

GET /accounts/_search

{

"query": {

"bool": {

"must": [

{ "match": { "age": "40" } }

],

"must_not": [

{ "match": { "state": "ID" } }

]

}

}

}
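When queries are generated from code, a small builder keeps the bool structure consistent. Here is a hypothetical Python sketch that produces the same request body as the age/state example above. It omits empty clause lists, and it names the filter argument filter_ to avoid shadowing Python's built-in filter.

```python
from typing import Iterable


def match(field: str, value) -> dict:
    return {"match": {field: value}}


def bool_query(must: Iterable[dict] = (), should: Iterable[dict] = (),
               must_not: Iterable[dict] = (), filter_: Iterable[dict] = ()) -> dict:
    """Build a request body with a bool query, omitting empty clause lists."""
    clauses = {
        "must": list(must),
        "should": list(should),
        "must_not": list(must_not),
        "filter": list(filter_),
    }
    return {"query": {"bool": {name: items for name, items in clauses.items() if items}}}


if __name__ == "__main__":
    body = bool_query(must=[match("age", "40")], must_not=[match("state", "ID")])
    print(body)
```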

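10.Search DSL Filter

Covered together with the next section.

11.Search DSL Range

_score: the score is a numeric value that is a relative measure of how well a document matches the search query.

The higher the score, the more relevant the document; the lower the score, the less relevant.

Queries do not always need to produce scores, in particular when they are only used to "filter" the document set.

A filter does not change the score: each document either matches (true) or does not (false).

range query: filters documents by a range of values, typically numbers or dates. gte means greater than or equal to, and lte means less than or equal to.

This example filters for accounts whose balance is greater than or equal to 20000 and less than or equal to 30000:

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'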

{

"query": {

"bool": {

"must": { "match_all": {} },

"filter": {

"range": {

"balance": {

"gte": 20000,

"lte": 30000

}

}

}

}

}

}

'

# Kibana

GET /accounts/_search

{

"query": {

"bool": {

"must": { "match_all": {} },

"filter": {

"range": {

"balance": {

"gte": 20000,

"lte": 30000

}

}

}

}

}

}

Besides the match_all, match, bool, and range queries, many other query types are available.
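The gte/lte bounds are inclusive, while gt/lt are exclusive. This local Python model of the range check shows which values the filter above would keep. in_range is a hypothetical helper, and the balances are made-up values. In Elasticsearch itself the filter also leaves _score untouched.

```python
from typing import Optional


def in_range(value: float, gte: Optional[float] = None, gt: Optional[float] = None,
             lte: Optional[float] = None, lt: Optional[float] = None) -> bool:
    """Mimic the bound checks of a range query on one numeric value."""
    if gte is not None and value < gte:
        return False
    if gt is not None and value <= gt:
        return False
    if lte is not None and value > lte:
        return False
    if lt is not None and value >= lt:
        return False
    return True


if __name__ == "__main__":
    balances = [19999, 20000, 25000, 30000, 30001]  # hypothetical sample values
    print([b for b in balances if in_range(b, gte=20000, lte=30000)])
```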

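12.Aggregations

This example groups all accounts by state and returns the top 10 states (the default size), sorted by count in descending order (also the default).

size=0 hides the search hits, because we only want to see the aggregation results in the response. The aggregation uses state.keyword, the keyword sub-field that dynamic mapping creates for string fields, because text fields cannot be aggregated on by default.

Equivalent SQL: SELECT state, COUNT(*) FROM accounts GROUP BY state ORDER BY COUNT(*) DESC LIMIT 10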

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 0,

"aggs": {

"group_by_state": {

"terms": {

"field": "state.keyword"

}

}

}

}

'

# Kibana

GET /accounts/_search

{

"size": 0,

"aggs": {

"group_by_state": {

"terms": {

"field": "state.keyword"

}

}

}

}

Result:

{

"took": 29,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped" : 0,

"failed": 0

},

"hits" : {

"total" : 1000,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"group_by_state" : {

"doc_count_error_upper_bound": 20,

"sum_other_doc_count": 770,

"buckets" : [ {

"key" : "ID",

"doc_count" : 27

}, {

"key" : "TX",

"doc_count" : 27

}, {

"key" : "AL",

"doc_count" : 25

}, {

"key" : "MD",

"doc_count" : 25

}, {

"key" : "TN",

"doc_count" : 23

}, {

"key" : "MA",

"doc_count" : 21

}, {

"key" : "NC",

"doc_count" : 21

}, {

"key" : "ND",

"doc_count" : 21

}, {

"key" : "ME",

"doc_count" : 20

}, {

"key" : "MO",

"doc_count" : 20

} ]

}

}

}
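The terms aggregation above behaves like the SQL GROUP BY. This is a local Python model using toy documents (terms_agg is a hypothetical helper). It orders buckets by doc_count descending and breaks ties by key, which matches the order in the result above. On a real multi-shard index, counts are first gathered per shard, which is why the response reports doc_count_error_upper_bound. This single-list model is exact.

```python
from collections import Counter


def terms_agg(docs: list, field: str, size: int = 10) -> dict:
    """Count documents per distinct value of `field` and keep the top `size` buckets."""
    counts = Counter(doc[field] for doc in docs if field in doc)
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return {
        "sum_other_doc_count": sum(count for _, count in ordered[size:]),
        "buckets": [{"key": key, "doc_count": count} for key, count in ordered[:size]],
    }


if __name__ == "__main__":
    docs = [{"state": "TX"}, {"state": "ID"}, {"state": "TX"},
            {"state": "ID"}, {"state": "CO"}]  # toy data
    print(terms_agg(docs, "state", size=2))
```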

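Building on the previous aggregation, this example computes the average account balance by state (again only for the top 10 states sorted by count in descending order):

Equivalent SQL: SELECT state, COUNT(*), AVG(balance) AS average_balance FROM accounts GROUP BY state ORDER BY COUNT(*) DESC LIMIT 10;

The average_balance aggregation is nested inside the group_by_state aggregation: group_by_state plays the role of GROUP BY state, and average_balance computes AVG(balance) within each group. This is a common pattern for all aggregations.

You can nest aggregations inside aggregations arbitrarily.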

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 0,

"aggs": {

"group_by_state": {

"terms": {

"field": "state.keyword"

},

"aggs": {

"average_balance": {

"avg": {

"field": "balance"

}

}

}

}

}

}

'

# Kibana

GET /accounts/_search

{

"size": 0,

"aggs": {

"group_by_state": {

"terms": {

"field": "state.keyword"

},

"aggs": {

"average_balance": {

"avg": {

"field": "balance"

}

}

}

}

}

}

Aggregation result:

{

"took" : 32,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1000,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"group_by_state" : {

"doc_count_error_upper_bound" : 20,

"sum_other_doc_count" : 770,

"buckets" : [

{

"key" : "ID",

"doc_count" : 27,

"average_balance" : {

"value" : 24368.777777777777

}

},

{

"key" : "TX",

"doc_count" : 27,

"average_balance" : {

"value" : 27462.925925925927

}

},

{

"key" : "AL",

"doc_count" : 25,

"average_balance" : {

"value" : 25739.56

}

},

{

"key" : "MD",

"doc_count" : 25,

"average_balance" : {

"value" : 24963.52

}

},

{

"key" : "TN",

"doc_count" : 23,

"average_balance" : {

"value" : 29796.782608695652

}

},

{

"key" : "MA",

"doc_count" : 21,

"average_balance" : {

"value" : 29726.47619047619

}

},

{

"key" : "NC",

"doc_count" : 21,

"average_balance" : {

"value" : 26785.428571428572

}

},

{

"key" : "ND",

"doc_count" : 21,

"average_balance" : {

"value" : 26303.333333333332

}

},

{

"key" : "ME",

"doc_count" : 20,

"average_balance" : {

"value" : 19575.05

}

},

{

"key" : "MO",

"doc_count" : 20,

"average_balance" : {

"value" : 24151.8

}

}

]

}

}

}
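The nested avg aggregation can be modelled the same way: group first, then compute a metric inside each group. This is a hypothetical local sketch on toy documents. It uses the same ordering as the terms model (count descending, then key), and names the metric average_balance as in the request above.

```python
from collections import defaultdict


def terms_with_avg(docs: list, term_field: str, value_field: str,
                   size: int = 10, metric_name: str = "average_balance") -> list:
    """Group by `term_field`, then average `value_field` inside each bucket."""
    groups = defaultdict(list)
    for doc in docs:
        groups[doc[term_field]].append(doc[value_field])
    ordered = sorted(groups.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return [
        {"key": key, "doc_count": len(values), metric_name: {"value": sum(values) / len(values)}}
        for key, values in ordered[:size]
    ]


if __name__ == "__main__":
    docs = [{"state": "ID", "balance": 100}, {"state": "ID", "balance": 300},
            {"state": "TX", "balance": 50}]  # toy data
    print(terms_with_avg(docs, "state", "balance"))
```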

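A more complex nested aggregation

Building on the previous aggregations, this example shows how to group by age range (20-29, 30-39, and 40-49), then by gender, and finally get the average account balance for each age range and gender:

Equivalent SQL, where age_range stands for the derived age bucket: SELECT age_range, gender, AVG(balance) AS average_balance FROM accounts GROUP BY age_range, gender;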

curl -X GET "hadoop102:9200/accounts/_search?pretty" -H 'Content-Type: application/json' -d'

{

"size": 0,

"aggs": {

"group_by_age": {

"range": {

"field": "age",

"ranges": [

{

"from": 20,

"to": 30

},

{

"from": 30,

"to": 40

},

{

"from": 40,

"to": 50

}

]

},

"aggs": {

"group_by_gender": {

"terms": {

"field": "gender.keyword"

},

"aggs": {

"average_balance": {

"avg": {

"field": "balance"

}

}

}

}

}

}

}

}

'

# Kibana

GET /accounts/_search

{

"size": 0,

"aggs": {

"group_by_age": {

"range": {

"field": "age",

"ranges": [

{

"from": 20,

"to": 30

},

{

"from": 30,

"to": 40

},

{

"from": 40,

"to": 50

}

]

},

"aggs": {

"group_by_gender": {

"terms": {

"field": "gender.keyword"

},

"aggs": {

"average_balance": {

"avg": {

"field": "balance"

}

}

}

}

}

}

}

}

Result:

{

"took" : 34,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : 1000,

"max_score" : 0.0,

"hits" : [ ]

},

"aggregations" : {

"group_by_age" : {

"buckets" : [

{

"key" : "20.0-30.0",

"from" : 20.0,

"to" : 30.0,

"doc_count" : 451,

"group_by_gender" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "M",

"doc_count" : 232,

"average_balance" : {

"value" : 27374.05172413793

}

},

{

"key" : "F",

"doc_count" : 219,

"average_balance" : {

"value" : 25341.260273972603

}

}

]

}

},

{

"key" : "30.0-40.0",

"from" : 30.0,

"to" : 40.0,

"doc_count" : 504,

"group_by_gender" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "F",

"doc_count" : 253,

"average_balance" : {

"value" : 25670.869565217392

}

},

{

"key" : "M",

"doc_count" : 251,

"average_balance" : {

"value" : 24288.239043824702

}

}

]

}

},

{

"key" : "40.0-50.0",

"from" : 40.0,

"to" : 50.0,

"doc_count" : 45,

"group_by_gender" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "M",

"doc_count" : 24,

"average_balance" : {

"value" : 26474.958333333332

}

},

{

"key" : "F",

"doc_count" : 21,

"average_balance" : {

"value" : 27992.571428571428

}

}

]

}

}

]

}

}

}
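Range buckets include from and exclude to, so age 30 falls into 30.0-40.0, not 20.0-30.0. Here is a Python model of the bucket assignment (range_buckets is hypothetical, and the ages are toy data). The gender and average sub-aggregations would then run inside each bucket, on the documents it contains.

```python
def range_buckets(values: list, ranges: list) -> list:
    """Assign numeric values to [from, to) buckets like a range aggregation."""
    buckets = []
    for start, end in ranges:
        members = [v for v in values if start <= v < end]  # from inclusive, to exclusive
        buckets.append({
            "key": f"{float(start)}-{float(end)}",
            "from": float(start),
            "to": float(end),
            "doc_count": len(members),
        })
    return buckets


if __name__ == "__main__":
    ages = [20, 29, 30, 39, 40, 49, 50]  # toy data; 50 falls outside every range
    for bucket in range_buckets(ages, [(20, 30), (30, 40), (40, 50)]):
        print(bucket)
```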