RuntimeError: DataLoader worker (pid 32633) is killed by signal: Killed.

Hardware: Jetson Xavier NX developer kit

Software: BSP 32.7.1, JetPack 4.6.1

PyTorch 1.10, torchvision 0.11.1

_error_if_any_worker_fails()

RuntimeError: DataLoader worker (pid 32633) is killed by signal: Killed.

python3 train.py --img 640 --batch 8 --epochs 300 --data data/coco128.yaml --cfg models/yolov5s.yaml --weights weights/yolov5s.pt --device '0'

github: skipping check (offline)

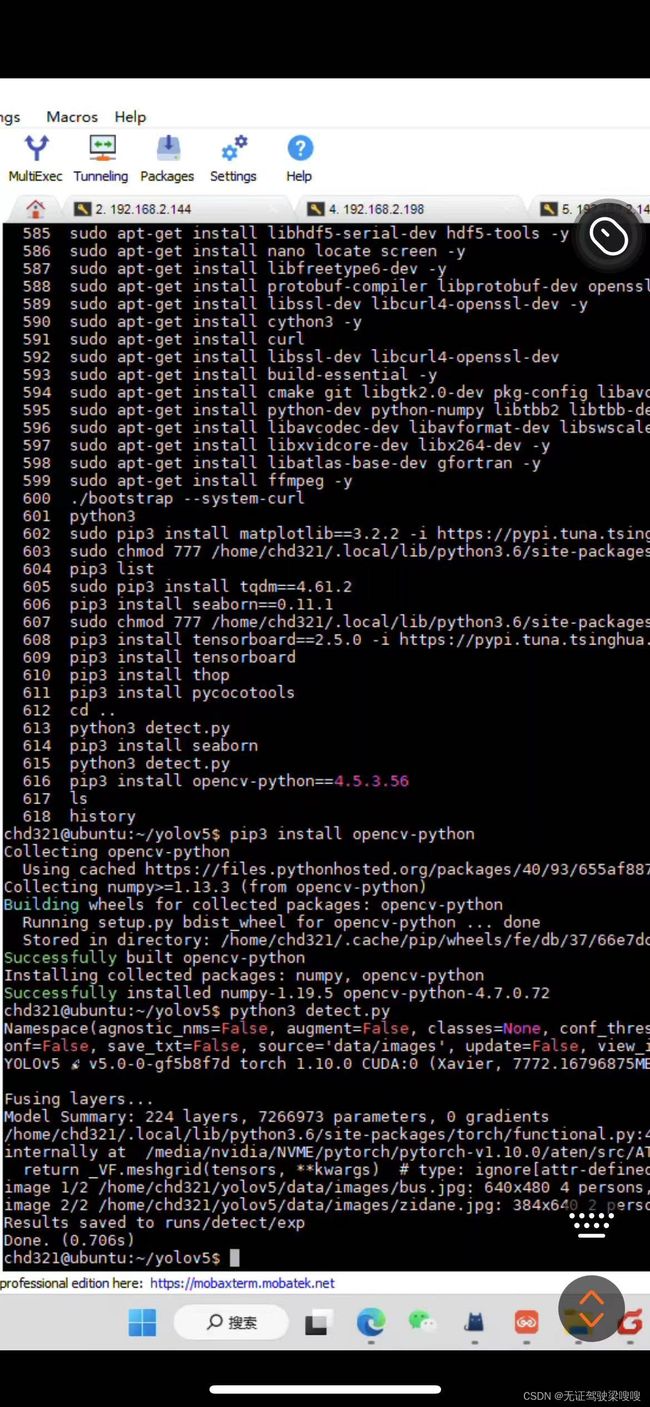

YOLOv5 v5.0-0-gf5b8f7d torch 1.10.0 CUDA:0 (Xavier, 7772.16796875MB)

Namespace(adam=False, artifact_alias='latest', batch_size=8, bbox_interval=-1, bucket='', cache_images=False, cfg='models/yolov5s.yaml', data='data/coco128.yaml', device='0', entity=None, epochs=300, evolve=False, exist_ok=False, global_rank=-1, hyp='data/hyp.scratch.yaml', image_weights=False, img_size=[640, 640], label_smoothing=0.0, linear_lr=False, local_rank=-1, multi_scale=False, name='exp', noautoanchor=False, nosave=False, notest=False, project='runs/train', quad=False, rect=False, resume=False, save_dir='runs/train/exp3', save_period=-1, single_cls=False, sync_bn=False, total_batch_size=8, upload_dataset=False, weights='weights/yolov5s.pt', workers=8, world_size=1)

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

hyperparameters: lr0=0.01, lrf=0.2, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0

wandb: Install Weights & Biases for YOLOv5 logging with 'pip install wandb' (recommended)

from n params module arguments

0 -1 1 3520 models.common.Focus [3, 32, 3]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2]

2 -1 1 18816 models.common.C3 [64, 64, 1]

3 -1 1 73984 models.common.Conv [64, 128, 3, 2]

4 -1 1 156928 models.common.C3 [128, 128, 3]

5 -1 1 295424 models.common.Conv [128, 256, 3, 2]

6 -1 1 625152 models.common.C3 [256, 256, 3]

7 -1 1 1180672 models.common.Conv [256, 512, 3, 2]

8 -1 1 656896 models.common.SPP [512, 512, [5, 9, 13]]

9 -1 1 1182720 models.common.C3 [512, 512, 1, False]

10 -1 1 131584 models.common.Conv [512, 256, 1, 1]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 6] 1 0 models.common.Concat [1]

13 -1 1 361984 models.common.C3 [512, 256, 1, False]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1]

15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

16 [-1, 4] 1 0 models.common.Concat [1]

17 -1 1 90880 models.common.C3 [256, 128, 1, False]

18 -1 1 147712 models.common.Conv [128, 128, 3, 2]

19 [-1, 14] 1 0 models.common.Concat [1]

20 -1 1 296448 models.common.C3 [256, 256, 1, False]

21 -1 1 590336 models.common.Conv [256, 256, 3, 2]

22 [-1, 10] 1 0 models.common.Concat [1]

23 -1 1 1182720 models.common.C3 [512, 512, 1, False]

24 [17, 20, 23] 1 229245 models.yolo.Detect [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

/home/chd321/.local/lib/python3.6/site-packages/torch/functional.py:445: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at /media/nvidia/NVME/pytorch/pytorch-v1.10.0/aten/src/ATen/native/TensorShape.cpp:2157.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

Model Summary: 283 layers, 7276605 parameters, 7276605 gradients, 17.2 GFLOPS

Transferred 360/362 items from weights/yolov5s.pt

WARNING: Dataset not found, nonexistent paths: ['/home/chd321/coco128/images/train2017']

Downloading https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip ...

100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 6.66M/6.66M [01:45<00:00, 65.9kB/s]

Dataset autodownload success

Scaled weight_decay = 0.0005

Optimizer groups: 62 .bias, 62 conv.weight, 59 other

train: Scanning '../coco128/labels/train2017' images and labels... 126 found, 2 missing, 0 empty, 0 corrupted: 100%|█████████████████████████████████████| 128/128 [00:00<00:00, 681.53it/s]

train: New cache created: ../coco128/labels/train2017.cache

val: Scanning '../coco128/labels/train2017.cache' images and labels... 126 found, 2 missing, 0 empty, 0 corrupted: 100%|██████████████████████████████████████████| 128/128 [00:00

train(hyp, opt, device, tb_writer)

File "train.py", line 303, in train

pred = model(imgs) # forward

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

File "/home/chd321/yolov5/models/yolo.py", line 123, in forward

return self.forward_once(x, profile) # single-scale inference, train

File "/home/chd321/yolov5/models/yolo.py", line 139, in forward_once

x = m(x) # run

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

File "/home/chd321/yolov5/models/common.py", line 138, in forward

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

File "/home/chd321/yolov5/models/common.py", line 42, in forward

return self.act(self.bn(self.conv(x)))

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 446, in forward

return self._conv_forward(input, self.weight, self.bias)

File "/home/chd321/.local/lib/python3.6/site-packages/torch/nn/modules/conv.py", line 443, in _conv_forward

self.padding, self.dilation, self.groups)

File "/home/chd321/.local/lib/python3.6/site-packages/torch/utils/data/_utils/signal_handling.py", line 66, in handler

_error_if_any_worker_fails()

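RuntimeError: DataLoader worker (pid 29639) is killed by signal: Killed.

Cause: the device ran out of memory. The fix is to add virtual memory (a swap file); the terminal session below shows the steps.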
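Before adding swap, it can help to confirm that memory really is the bottleneck. The following is a minimal sketch, not part of the original session: it reads `/proc/meminfo` (a standard Linux interface) and prints the available RAM and the current swap. The helper names `parse_meminfo` and `memory_report` are made up for illustration.

```python
from pathlib import Path


def parse_meminfo(text):
    """Parse the text of /proc/meminfo into {field name: integer value}.

    Most fields are reported in kB; a few counters (e.g. HugePages_Total)
    carry no unit and are stored as plain integers.
    """
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip anything that is not "Name: value"
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def memory_report(info):
    """Return a one-line summary of available RAM and swap, in MiB."""
    avail = info.get("MemAvailable", 0) // 1024
    swap_total = info.get("SwapTotal", 0) // 1024
    swap_free = info.get("SwapFree", 0) // 1024
    return "available RAM: {} MiB, swap: {} MiB free of {} MiB".format(
        avail, swap_free, swap_total
    )


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # only present on Linux
        print(memory_report(parse_meminfo(meminfo.read_text())))
```

Running this in a second terminal while training starts shows whether available RAM drops toward zero just before the worker is killed.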

chd321@ubuntu:~/yolov5$ sudo vi train.py

[sudo] password for chd321:

chd321@ubuntu:~/yolov5$ git clone https://github.com/JetsonHacksNano/installSwapfile

Cloning into 'installSwapfile'...

remote: Enumerating objects: 37, done.

remote: Counting objects: 100% (3/3), done.

remote: Compressing objects: 100% (3/3), done.

remote: Total 37 (delta 0), reused 0 (delta 0), pack-reused 34

Unpacking objects: 100% (37/37), done.

chd321@ubuntu:~/yolov5$ cd installSwapfile/

chd321@ubuntu:~/yolov5/installSwapfile$ ls

autoMount.sh installSwapfile.sh LICENSE README.md

chd321@ubuntu:~/yolov5/installSwapfile$ vi installSwapfile.sh

chd321@ubuntu:~/yolov5/installSwapfile$ sudo ./installSwapfile.sh
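After the script finishes, it is worth checking that the new swap area is active before restarting training. The sketch below is an illustration rather than part of the original session: it parses `/proc/swaps` (the same table that `swapon` reads) and totals the swap size. The function names are hypothetical, and the path used in any sample data is a placeholder, since the actual swap file location depends on how `installSwapfile.sh` was configured.

```python
from pathlib import Path


def parse_swaps(text):
    """Parse the text of /proc/swaps into a list of dicts, one per swap area."""
    entries = []
    for line in text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 5:
            continue  # ignore blank or malformed lines
        entries.append(
            {
                "path": fields[0],
                "type": fields[1],           # "file" or "partition"
                "size_kib": int(fields[2]),  # sizes are reported in KiB
                "used_kib": int(fields[3]),
                "priority": int(fields[4]),
            }
        )
    return entries


def total_swap_mib(entries):
    """Sum the size of all active swap areas, in MiB."""
    return sum(e["size_kib"] for e in entries) // 1024


if __name__ == "__main__":
    swaps = Path("/proc/swaps")
    if swaps.exists():  # only present on Linux
        active = parse_swaps(swaps.read_text())
        for entry in active:
            print(entry["path"], entry["type"], entry["size_kib"] // 1024, "MiB")
        print("total swap:", total_swap_mib(active), "MiB")
```

If the total is still zero or the new file is not listed, the swap file was not enabled and the training run is likely to be killed again.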