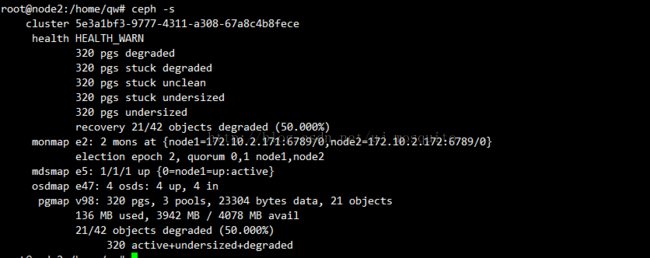

Ceph: Adding More Mons and More MDSs

1. Current state

ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

monmaptool --create --add node1 172.10.2.172 --fsid e3de7f45-c883-4e2c-a26b-210001a7f3c2 /tmp/monmap

mkdir -p /var/lib/ceph/mon/ceph-node2

ceph-mon --mkfs -i node2 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

touch /var/lib/ceph/mon/ceph-node2/done

/etc/init.d/ceph start
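The per-monitor part of the steps above (data directory, `--mkfs`, `done` marker, service start) can be sketched as one parameterized shell function. This is only an illustrative wrapper: the function names `run` and `bootstrap_mon` and the variables `DRY_RUN`, `MONMAP` and `KEYRING` are made up for this sketch and are not Ceph settings. It assumes the monmap and keyring have already been prepared at the paths used above. By default it only prints the commands, so it is safe to try on a machine without Ceph.

```shell
#!/bin/sh
# Hypothetical helper names; paths default to the ones used in the steps above.
MONMAP="${MONMAP:-/tmp/monmap}"
KEYRING="${KEYRING:-/tmp/ceph.mon.keyring}"

# run: print the command when DRY_RUN=1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# bootstrap_mon <mon-id>: create the data dir, initialize the mon store,
# mark it as done, and start the service, in the same order as the notes.
bootstrap_mon() {
    mon_id="$1"
    mon_dir="/var/lib/ceph/mon/ceph-${mon_id}"
    run mkdir -p "$mon_dir"
    run ceph-mon --mkfs -i "$mon_id" --monmap "$MONMAP" --keyring "$KEYRING"
    run touch "$mon_dir/done"
    run /etc/init.d/ceph start
}

# Dry run for the monitor added in these notes.
bootstrap_mon node2
```

Setting `DRY_RUN=0` in the environment would make the same function actually execute the commands on the new monitor host.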

Appendix: configuration file

root@node1:/var/lib/ceph/osd# cat /etc/ceph/ceph.conf

[global]

fsid = 5e3a1bf3-9777-4311-a308-67a8c4b8fece

mon initial members = node1

mon host = 172.10.2.171

public network = 172.10.2.0/24

auth cluster required = none

auth service required = none

auth client required = none

osd journal size = 1024

filestore xattr use omap = true

osd pool default size = 2

osd pool default min size = 1

osd pool default pg num = 128

osd pool default pgp num = 128

osd crush chooseleaf type = 1

[mon.node1]

host = node1

mon addr = 172.10.2.171:6789

[mon.node2]

host = node2

mon addr = 172.10.2.172:6789

[osd.0]

host = node1

addr = 172.10.2.171:6789

osd data = /var/lib/ceph/osd/ceph-0

[osd.1]

host = node1

addr = 172.10.2.171:6789

osd data = /var/lib/ceph/osd/ceph-1

[osd.2]

host = node2

addr = 172.10.2.172:6789

osd data = /var/lib/ceph/osd/ceph-2

[osd.3]

host = node2

addr = 172.10.2.172:6789

osd data = /var/lib/ceph/osd/ceph-3

[mds.node1]

host = node1

[mds.node2]

host = node2

[mds]

max mds = 2
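As a quick sanity check on a file laid out like the one above, the `[mon.*]` sections and their `mon addr` values can be listed with a small awk filter. This is a minimal sketch, not a Ceph tool: `list_mons` is a hypothetical helper name, and it assumes one `key = value` pair per line and section headers on their own line with no trailing whitespace.

```shell
#!/bin/sh
# list_mons <conf-file>: print "<section> <mon addr>" for every [mon.*] section.
list_mons() {
    awk '
        # Remember the current section name, e.g. "mon.node1".
        /^\[.*\]$/ { section = substr($0, 2, length($0) - 2); next }
        # Inside a mon.* section, strip everything up to "=" and print the value.
        section ~ /^mon\./ && /^[ \t]*mon addr[ \t]*=/ {
            sub(/^[^=]*=[ \t]*/, "")
            print section, $0
        }
    ' "$1"
}

# Only runs when the file exists, so the sketch is harmless elsewhere.
if [ -r /etc/ceph/ceph.conf ]; then
    list_mons /etc/ceph/ceph.conf
fi
```

For the configuration shown above, this would print `mon.node1 172.10.2.171:6789` and `mon.node2 172.10.2.172:6789`, which makes it easy to compare the configured monitor addresses against the ones passed to `monmaptool`.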