navmesh寻路(英文)

Fixing Pathfinding Once and For All

I normally do everything I can to avoid saying things that could be interpreted as a criticism of other games or developers in the industry.

But in this case, I had to make a bit of an exception.

I need to talk about some problems we face with pathfinding. In order to prove that these problems still exist, I felt the need to make this video ... which will hopefully be taken in the humorous and lighthearted spirit in which it was intended

All of these clips were recorded over the last week with the latest, most-recently-patched version of each game.

As you can see, we're still a long way from having robust pathfinding across the board ... and it's even a problem in some million-unit-selling, AAA-quality titles.

It's not necessarily a universal problem. Many modern games do have high-quality pathfinding, and pathfinding often works well in some of the games shown here.

But there are still too many games that do pathfinding the same way that games did in the 1990s.

(Note: The only reason you see lots of PC role-playing games here just comes down to convenience. These are the games I had installed at the time. The problem isn't specific to any genre or platform by any stretch of the imagination; there are plenty of console games with similar issues.)

To the best of my knowledge, most of these games use waypoint graphs for pathfinding. I think that's the reason for several of the pathfinding issues you see in this video, and many of the pathfinding problems we face in the industry as a whole.

I believe waypoint graphs are now obsolete. This article explains the limitations of the waypoint graph approach and lays out a five-point argument for a better approach.

There was a time when it made sense to use waypoints for pathfinding. Back in the 1980s and 1990s, we were working under serious technical constraints and we had to cut a lot of corners.

But we're a multi-billion-dollar industry now. Our target platforms have multiple cores and ever-increasing amounts of memory, and we can afford to do pathfinding correctly.

There's a saying among AI developers in the industry: "pathfinding is solved." We have good approaches to every pathfinding problem modern games face. We just don't always use them.

There's no reason not to have pathfinding that works in every game, every time.

Hit the jump for a detailed explanation.

Why Waypoints Aren't For Pathfinding

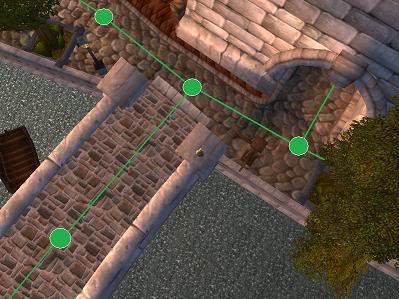

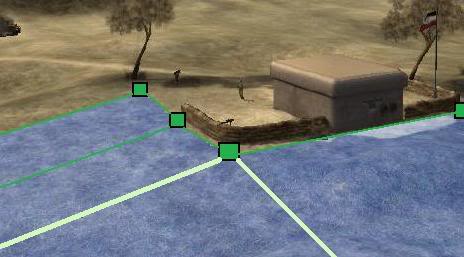

Let me show you what a typical waypoint graph looks like. Here's a small piece of Stormwind City in World of WarCraft:

Figure 1. Part of Stormwind City in World of WarCraft

Here's what a typical waypoint graph might look like in that area.

Figure 2. The same area annotated with a waypoint graph

There's another way to do it, and it involves using convex polygons to describe where AI characters can travel. This representation gives AIs a lot more information about the world around them and supports much more intelligent decision-making.

Here's what a navigation mesh looks like:

Figure 3. The same area annotated with a navigation mesh

Here, then are the five big reasons why waypoint networks fall short.

1. Some kinds of game worlds require a ridiculous number of waypoints.

Large, open areas usually require tons of waypoints sprinkled liberally throughout the game world to achieve adequate movement.

A navigation mesh, on the other hand, usually only requires a couple of big polygons for these kinds of areas ... which you can pathfind through that much more quickly.

Here's an example.

The image below shows a town in World of WarCraft called "Halaa." This is a relatively large, mostly open area that NPCs need to be able to navigate through. I've edited out a few details, such as the flag and the fountain in town, for the sake of this example.

Figure 4. The town of Halaa in World of WarCraft, seen from above (slightly modified)

With a waypoint-based system, we need to place a lot of waypoints to get full coverage. Even then, our NPCs will end up doing a lot of zigzags during movement unless we specify a lot more waypoints than I've shown here.

Figure 5. Halaa with a waypoint graph

With a navigation mesh, on the other hand, we can describe the same area with a handful of convex polygons:

Figure 6. Halaa with a navigation mesh

The simplicity of the navigation mesh means we don't have to search as many nodes when we call our pathfinding algorithm at runtime, and pathfinding will be faster as a result.

2. They make characters "zig-zag" as they move.

Waypoint graphs force your characters to stick to the graphs you create. This means that your characters are effectively on rails, and will almost never take the optimal path from A to B ... because the most direct path will almost never match the graph.

That causes unnatural pathfinding artifacts -- in particular, characters artificially "zig-zagging" left and right as they walk.

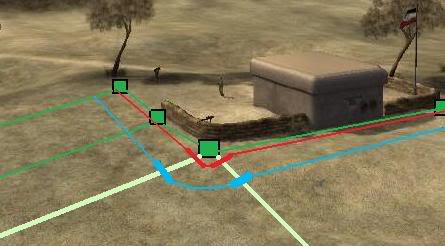

Here's an example. Let's say we want a character to walk from A to B:

Here's what the path would look like with the waypoint graph I showed you earlier.

Figure 8. Navigating from A to B using the waypoint graph

As you can see, our AIs will be turning a lot while they walk along the yellow path.

Ideally, after we created the path, we could somehow adjust it to make it smoother ... perhaps by creating a Catmull-Rom spline along the path points.

The problem is, waypoint networks don't have any information about anything outside the network, and that makes it impossible to do path-smoothing and adjustment safely and reliably.

How can you create a spline if the spline is outside of the waypoint graph, and actually following its curves might make you fall off a bridge?

You might think there has to be some simpler way to make a path. Unfortunately, with waypoint graphs, there isn't. Again, if we deviate outside of the waypoint graph even a little bit, we could easily end up falling off a cliff or bumping into a wall. We just don't have any way of knowing, since the graph doesn't have any of that data.

So in order to stay safe, we have to take the most pessimistic view possible, and stay on the graph at all times.

The navigation mesh path, on the other hand, would look like this:

Figure 9. Navigating from A to B on the navigation mesh

Since we know where a character can safely walk, we can smooth out the path in any way we like, so long as the smoothing keeps the path on the navigation mesh.

("Oh," I hear you say, "but I can just add more links to my waypoint graph to smooth everything out! I'll connect all the nodes in the whole town if I know an NPC can walk between them in a straight line."

"But that gives you exponential explosion," I reply ... "You're talking about 40 or 50 extra links in this example alone. The number of connections you'll need between all the nodes approaches O(N^2) as the size of the area increases."

"OK," I hear you say, "In that case, I'll just mark each area between any set of 3 mutually adjacent waypoints as 'open' so I know my AIs can walk anywhere in that area."

"That makes a polygon ... and you've just created a navigation mesh," say I).

The fact that navigation meshes tell us exactly where our characters are allowed to travel means we can easily use splines to smooth our paths. All we need to do is make sure our splines stay inside the navigation mesh.

Going back to our Stormwind City example, here's a waypoint path (red) and a smoothed path on a navigation mesh (blue).

Figure 10. A waypoint path (red) and a smoothed navigation mesh path (blue)

3. They don't allow path correction. That makes robust dynamic obstacle avoidance difficult, if not impossible.

Waypoint graphs don't have any data about anything outside the graph. They don't know which areas characters can legally walk on even one inch outside the graph.

That makes it impossible for pathfinding systems to modify waypoint paths to deal with dynamic obstacles robustly.

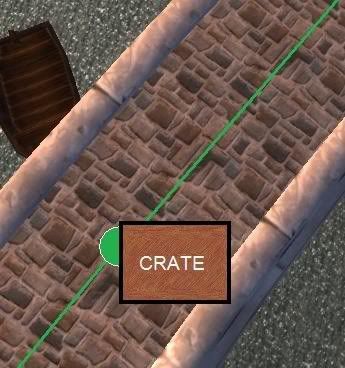

Let's imagine there's a large, heavy crate on the bridge in Stormwind City.

With a waypoint graph, we're screwed -- if the crate overlaps the waypoint graph, we have no idea whether to go around it to the left or the right, or if our path is blocked completely and we need to take a major detour. We could always guess, but if we make the wrong guess, we'll fall off the bridge and end up in the water.

Figure 11. A large, immovable crate on the bridge

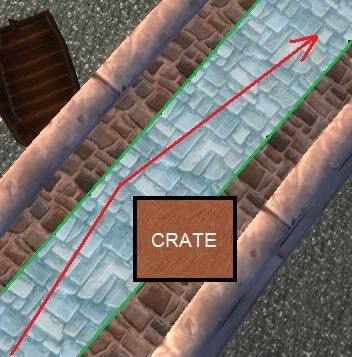

With a navigation mesh, though, it's a lot easier, since we know what areas we're allowed to walk in. We do a bit of raycasting against the barrel and adjust our path around it, keeping our path (and ourselves) safely on the navigation mesh.

Figure 12. Navigating around the crate

Sure, you can address this in a waypoint graph, too, by dropping down tons and tons of waypoints until your waypoint graph is as thick as grass (and your pathfinding as slow as molasses).

I don't know about you, but I'd rather run my A* search over one big polygon than several hundred waypoints.

4. They don't work robustly for characters that move differently.

Waypoint graphs tend to work poorly for characters with different heights, widths, turn rates, and other pathfinding parameters. This means it's usually impossible to use a single, unified waypoint graph for all the different types of units in your game.

When we think about AI pathfinding, we tend to think in terms of humans navigating around in a game world.

But AI systems need to control lots of different kinds of units -- tanks, planes, ships, hovercraft, motorcycles, orcs, goblins, dragons, lumbering giants, flying and hovering creatures, and so on. In many cases, units need to do pathfinding differently depending on their size, shape, and movement capabilities. A good pathfinding representation should handle all of them.

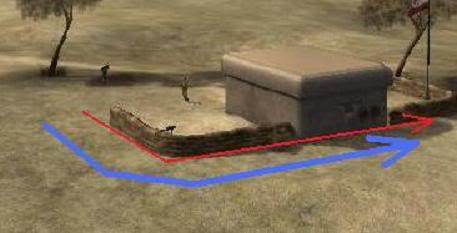

Here's an example. Let's say we have a concrete bunker out in the desert with a few sandbags around it.

Figure 13. A bunker in the desert

If we have a human soldier, he can run along right next to the sandbags (the red arrow in the image below).

A Panzer tank, on the other hand, has to drive much farther away from the sandbags to avoid a collision (blue arrow).

Figure 14. The closest possible paths that a human soldier (red) and a tank (blue) can take around the sandbags

With a waypoint approach, you can't handle this robustly with a single representation. You need to use totally separate waypoint networks for the tanks and the soldiers. Add a new "motorcycle" unit, and now you need a third network. And so on for every different type of unit that has different movement capabilities.

With a navigation mesh, on the other hand, it becomes much easier.

Figure 15. Part of a navigation mesh around the outside of the bunker

Notice the two light green edges emanating from the corner of the sandbags in the image above. Since we know that a soldier has a collision radius of 1 meter, and a Panzer tank has a radius of 5 meters, it becomes trivial for us to build two different paths using the same mesh. We just compute the path along the sandbags, and then push it either 1 meter or 5 meters away from the corner, respectively, depending on whether we're doing pathfinding for a soldier or a tank.

Figure 16. Part of a navigation mesh around the outside of the bunker

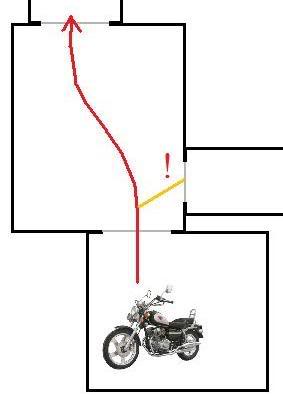

Here's another example. Let's say we have a soldier on a motorcycle. Unlike human soldiers, motorcycles can't turn on a dime while they're moving.

In the example below, it's obvious that the motorcycle rider can drive safely from his current position to the room at the top (red line), but cannot turn into the hallway on the right since the angle is too sharp (orange line). Whatever pathfinding representation we choose should be able to figure this out, too.

Figure 17. A motorcyclist can go into the room at the top (red) but not the room on the right (orange)

With a navigation mesh, this is relatively easy to do. We just test the turn angles and distances as we're doing the pathfinding and reject any paths that require steep turns over short distances.

With a waypoint approach, on the other hand, it's essentially impossible. At a minimum, we'd need a completely separate waypoint graph for soldiers on motorcycles, since we can't use the same graph that soldiers on foot are using. Adding an entirely new graph like that for every unit with different pathfinding capabilities gets very cumbersome.

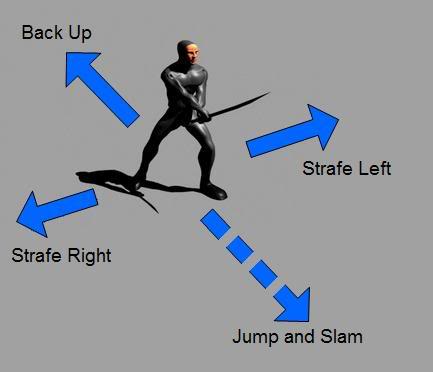

5. They don't have enough data to support what your AI needs beyond pathfinding.

AI search spaces aren't just for navigation. AIs need to be able to use the pathfinding data to tell them how to move in the world.

A game character needs to constantly query the pathfinding system and ask: is it OK if I move here? How about over here?

Games are increasingly moving to animation-driven movement systems that give animators control over the position of game characters. That means that in order to know what the results will be if we play animation X, we need to figure out where the character will be when X is finished, and whether that location is inside a wall, hanging over a cliff, or in some other location the level designers don't want him to be.

Here's an example. The swordsman below has four moves: a left strafe, a right strafe, a backstep, and a big flying leap into the air that sends him up 20 feet and then has him land 8 feet in front of where he started.

Figure 18. A swordsman with four animations that contain translation

In order to make sure my swordsman doesn't end up slamming into a brick wall when he leaps or strafes or backsteps, I need to test the predicted end position of each of these animations against the navigation mesh.

Yes, it's possible to use your collision system to do raycasting. That will often give you useful information, and for dynamic physics objects in the game world, it's usually the only way for AIs to find out about them.

But raycasting can be expensive, and when you only care about the static game world and not the dynamic physics objects, it's faster and easier to query a navigation mesh.

Although I can use raycasting to tell me if there's a wall between me and point (X,Y,Z), the collision system can't tell me if the swordsman will land in a position that the level designers actually want characters to walk in ... only a navigation mesh can tell me that.

If you're still not convinced after all of that, let me try to address any remaining concerns you might have by answering common questions about waypoint graphs and navigation meshes.

Q & A

But isn't it slower to do pathfinding on a navigation mesh?

Not at all. A navigation mesh is a graph, just like a waypoint graph is, and the core pathfinding routines are very similar. The difference is that a navigation mesh has a polygon associated with each graph node.

The image below shows the graph connecting the nav mesh polygons.

Figure 19. An illustration of the graph (red) underlying a navigation mesh

Whenever you run the A* algorithm for pathfinding, you're always running it on some kind of a graph, whether it's a square grid, a waypoint graph, a navigation mesh, or what have you.

In most cases, navigation meshes have performance similar to waypoint graphs. In cases where they cover the same area with fewer nodes (such as Figure 6), a navigation mesh can actually be significantly faster.

But don't navigation meshes take a lot more memory than waypoints?

If done correctly, no.

Navigation meshes are a very simple and compact representation. In general, a nav mesh will only require a tiny fraction of the memory required by the underlying rendering geometry for the static game world. A navigation mesh is much smaller than a collision mesh, since it cares about fewer surfaces (it ignores walls, ceilings, and other unwalkable surfaces) and uses a smaller number of much larger polygons to cover a given area.

A navigation mesh tends to use a few more points for each graph node than a waypoint graph in the worst case. And in some cases, such as the town in Figure 6, a navigation mesh can actually be smaller because it's a simpler representation.

Most of the examples you showed were role-playing games. Why don't I see the same kinds of problems in first-person shooters?

The problems are still there in many first-person shooters, but they're harder to spot due to the nature of the gameplay.

- Most AIs don't live long enough to let you spot the flaws in their pathfinding.

- AIs will usually stop and shoot the moment they have line-of-sight to you, so their paths are a lot shorter.

- In many single-player FPS games, AIs don't move very much, and will attempt to snipe you from a relatively fixed position.

- A lot of modern FPS games provide AI sidekicks who will kill the enemy AIs so quickly they don't have time to move very far.

Here's an example from Half-Life 2. With a little bit of experimentation, it's easy to see that the guard on the stairs is locked to a single, linear waypoint path that runs directly up and down the center of the stairs. The guard still ends up looking competent since he can shoot at you whenever he can see you.

When you take away ranged weapons and force characters to use melee weapons -- as with some of the Covenant soldiers in Halo, the Icarus stealth assassins in F.E.A.R., or the Metroids in a Metroid Prime game -- waypoint graphs are suddenly a much less attractive option (which is part of the reason all three of those games used navigation meshes instead ...)

Look, I understand everything you said, but designers need to be able to put down cover points, patrol paths, and so on. That's just essential for creating the AI in our games.

Of course! You can still do that, even if you're using a navigation mesh.

The key is to separate the two representations. You use one representation (a nav mesh) for the pathfinding and navigation, and another (designer-placed points) tell the AI how to use the world.

A navigation mesh doesn't prevent you from doing any scripting. It simply provides extra information for your AIs for when they need to navigate more autonomously.

Here's an example. We have a patrol path (purple) for some guards patrolling around town and a special marker for an old man who likes to fish in the moat a few times a day (red). The cover points (yellow) represent points where characters could take cover around corners in FPS-style magic duels between the mages and warlocks of Stormwind City.

Figure 20. Various terrain markers (AI hints) and a navigation mesh

OK, but isn't it a lot of work to place a navigation mesh?

In general, placing a navigation mesh by hand is not much more labor-intensive than placing a waypoint graph. In both techniques, the amount of time you spend creating the search space representation is a fraction of the time you spend testing it in-game.

From my experience, it takes 1-3 hours to create a navigation mesh for a medium-sized level in an FPS game.

Creating a navigation mesh editor is relatively simple. All you need is the ability to drop vertices into the game world, select them, and specify that they represent a polygon in the navigation mesh. You can determine the connectivity of the navigation mesh based on which vertices are shared between adjacent polygons.

Also, there are some middleware solutions, such as xaitment, NavPower, PathEngine and Kynapse, that can handle both the nav mesh construction and runtime pathfinding aspects for you. All of these are becoming increasingly robust and viable solutions.

What if I want to have AIs walk up and down stairs? My designers don't want to have to lay down a nav mesh polygon for every single step in the staircase.

A navigation mesh just represents an area that an AI can walk in. It doesn't replace a collision mesh -- your NPCs will still use the underlying collision system for all their in-game collision.

You should be able to safely cover the entire stairway in a single rectangular navigation mesh polygon.

Can't I just give each point in my waypoint graph a radius, so I have circles instead of points? That gives me a representation of what areas are walkable. That's how they do it in robotics, too ...

That approach can work in some cases, but most areas in videogames have a lot of human architecture, full of angular streets and buildings. Circles don't represent these kinds of spaces very well, and it's difficult to achieve the same level of coverage that you can get with polygons.

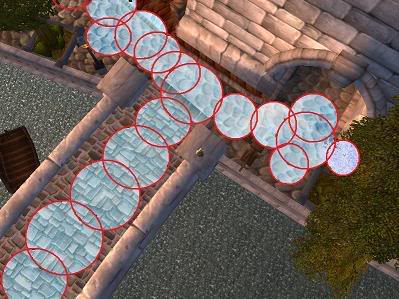

Here's an example of the circle-based approach in Stormwind City:

Figure 21. Extending the waypoint approach with circles

(Quick anecdote: When I worked on the MechWarrior 4: Vengeance team, we found out the hard way that the circle-based system that worked so well in outdoor areas really didn't work so well when we suddenly tried to put it to use in urban areas. The team eventually made it work, but a lesson was learned: circles and urban environments don't mix!)

Also, having corners is important. In the case of the desert bunker Figure 16, we can use the corner of the navigation mesh to create different paths for units with different shapes, sizes, and movement capabilities, such as a soldier and a tank. Circle graphs don't have "corners," so it becomes much more difficult to create correct paths for different kinds of units.

My game already has a collision mesh. Can't I just use that for pathfinding?

You can try, but it's not a good idea.

A collision mesh is optimized for collision, not for pathfinding. As a result, it's going to have a lot more polygons than you need for pathfinding, and your search function is going to be slower.

For example, your collision mesh might have 1000 polygons on a cobblestone street that a navigation mesh would represent with 1 rectangle, and 50 polygons on the roof of a building that a navigation mesh might not include at all (since our characters would presumably never walk on the roof).

Also, part of the advantage of a navigation mesh (as with any search space representation) is that it doesn't just tell you where your characters can travel; it also tells you where designers want them to travel.

If you let your designers create navigation meshes by hand, you give them the ability to tell the game "don't ever send AIs here. I just don't want them to go there because it's not appropriate for this game." That's not the kind of information you can ever get from a collision mesh.

All the examples as presented show the nav mesh as a 2D projection onto the space. How would you handle a case like a bridge over a road, wherein you could go over or under. Or a building with multiple floors?

Navigation meshes handle those cases very elegantly. Here's an example of what part of a nav mesh in Shattrath City in World of WarCraft might look like (for simplicity, I've omitted the parts of the nav mesh on the far side of the bridge).

Figure 22. Part of a navigation mesh over and under a bridge in Shattrath City

In a navigation mesh, each polygon also typically stores a "height" parameter indicating how much vertical clearance that is available for that polygon. So the polygon that you see passing under the bridge would know that it only has 7 feet of clearance, and units more than 7 feet tall would know that they cannot use those polygons.

In the case of a multi-storey building, each floor can have its own navigation mesh polygons, connected with additional polygons in the stairwells (elevators are a bit trickier, of course).

I don't believe in a one-size-fits-all approach. Different games require different representations for pathfinding.

If you're making a strategy game that's entirely 2D, then an approach based on a grid is usually better, since grids give you fast random access to any cell.

Otherwise, the navigation mesh really is the best approach we've found for robust pathfinding and terrain reasoning in truly 3D worlds.

Suppose I were interested in implementing navigation meshes in my game. Has anything been published to help me figure out how to build the meshes, do pathfinding on them, and optimize them for my game?

Here are the ones I'm aware of:

"Simplified 3D Movement and Pathfinding Using Navigation Meshes" by Greg Snook (Game Programming Gems)

"Polygon Soup for the Programmer's Soul: 3D Pathfinding" by Greg Hjelstrom and Patrick Smith (GamaSutra.com)

"Building a Near-Optimal Navigation Mesh" by Paul Tozour (AI Game Programming Wisdom)

"Efficient Navigation Mesh Implementation" by John C. O'Neill (Journal of Game Development, March 2004)

"Search Space Representations" by Paul Tozour (AI Game Programming Wisdom 2)

"A Fast Approach To Navigation Meshes" by Stephen White and Christopher Christensen (Game Programming Gems 3)

"Choosing a Relationship Between Path-Finding and Collision" by Thomas Young (Game Programming Gems 3)

"Improving on Near-Optimality: More Techniques for Building Navigation Meshes" by Fredrik Farnstrom (AI Game Programming Wisdom 3)

"Smoothing a Navigation Mesh Path" by Geraint Johnson (AI Game Programming Wisdom 3)

"Dynamically Updating a Navigation Mesh via Efficient Polygon Subdivision" by Paul Marden and Forrest Smith (AI Game Programming Wisdom 4)

"Intrinsic Detail in Navigation Mesh Generation" by Colt McAnlis and James Stewart (AI Game Programming Wisdom 4)

"Navigation Mesh Generation: An Empirical Approach" by David Hamm (AI Game Programming Wisdom 4)

"Navigation Mesh Generation in Highly Dynamic Worlds" by Ramon Axelrod (AI Game Programming Wisdom 4)

"Crowds in a Polygon Soup: Next-Gen Path Planning" by David Miles (http://www.navpower.com/gdc2006_miles_david_pathplanning.ppt)

Note: Please e-mail me if you're aware of any other articles that should be on this list.

How many games have actually used navigation meshes successfully?

Here's a short list:

Halo 2

Halo 3

First Encounter Assault Recon (F.E.A.R.)

Counter-Strike: Source

Metroid Prime

Metroid Prime 2: Echoes

Metroid Prime 3: Corruption

Jak and Daxter: The Precursor Legacy

Jak II

Jak 3

Uncharted: Drake's Fortune

Scarface: The World is Yours

... and many more.

So there you have it: all the cool kids are doing it.

OK, maybe it works for you, but we used waypoints in all of our previous games, and they worked fine.

Like they say, if it ain't broke, don't fix it ... and we haven't run into any of the problems you're talking about.

Look at the big picture. Think 10 or 20 years down the road.

In that kind of time frame, do you think your games might have lots of different types of AI-controlled characters with different shapes, sizes, and movement capabilities?

Will players have AI henchmen that they expect to be just as intelligent as themselves?

Will your game worlds be significantly larger, more complex, and more dynamic than they are today?

Will you have huge crowds of AI characters -- so many that just using simple steering and obstacle avoidance are no longer adequate to make them coordinate with each other effectively?

Will your games have realistic physics and huge amounts of physically-simulated objects, and will players be able to use the physics to mess with the AI characters in every way imaginable?

Will players be able to change the game world until it's virtually unrecognizable?

Will there be AIs in multiplayer that are expected to pass for human players?

There's no way to know what games will look like decades in the future, but it's clear that we're going to need much more advanced AI. Part of that will require us to give game characters the data they need to reason about their environment, find optimal paths, and deal robustly with complex, ever-changing game worlds.

July 13, 2008

Collaboration

Disclaimer: This post might come across a bit like a product endorsement. My goal here is to endorse a development technique, not a specific product.

This post isn't directly related to game AI, but it's too important not to talk about it.

---

The scale of game development has grown by orders of magnitude since I joined the industry in 1994. As a result, the amount of code we deal with on a daily basis has grown enormously. Engineering teams have grown ever larger, and it's become increasingly challenging to deal with the massive game engines of today and to find engineers with the skills required to work effectively in every part of the codebase.

(Yes, lots of developers have tried to get around this by using licensed engines, but that's a topic for another day).

As a result of this huge increase in complexity, studios have started to recognize the need to find ways to improve training and teamwork. New hires need to be brought up-to-speed on the company's coding standards and the workings of all of the different parts of the codebase. There's also been a growing realization that all the engineers need to continually improve one another's skills and to eliminate as many bugs as possible before the product goes into Quality Assurance (QA).

There's been no shortage of games that have suffered as a result of engineering teams that found themselves on the sharp end of the scale problem. Technical failures have resulted in botched product launches, product cancellations, and even studio closures.

Surprisingly, as an industry, we've been very slow to address the problem.

Everyone recognizes that training and teamwork are key, but there's no consensus on how to go about improving those.

A few game developers have experimented with "extreme programming," but usually without much success. The developers I've spoken with who tried "XP" quickly grew frustrated with the pair programming aspect, which requires two engineers to do the job that one engineer should be able to do. In most cases, this just wasted valuable engineering time. They also claimed that XP ultimately only acts as a tutoring program to bring the skill of the most junior engineers up to the level of the most senior engineers, and it really had no benefit for the most senior engineers.

Other developers use code reviews to various degrees. Many of the studios I've visited over the past month claim to be using a code review process to some extent -- for all checkins, for large checkins only, or only for new programmers for the first three months.

That process can have enormous benefits, but it's also a huge pain in the butt. It's hard to get multiple programmers together in the same room at a time -- particularly since an effective code review usually requires two or more additional engineers to perform the review. Code reviews are most important toward the end of the project, when people have the least amount of time to participate. And having to look over someone's shoulder to read their code -- potentially for several hours -- can be really tiresome.

But there's a better way, and it's online collaboration.

At a previous employer, we used SmartBear Software's Code Collaborator™ to review all checkins. Every Perforce changelist had to be reviewed by two additional engineers before it could be checked into the repository.

When you're ready to check something in, you go to Perforce, right-click on your checkin to create a code review, and invite two or more other engineers to review it. Those engineers then get an e-mail, and that e-mail takes them to a web page that shows them your summary of your checkin and allows them to review all the diffs of the files you changed. They can then add comments or open "defects" for any of your changes to tell you what to fix before you check it in -- "This looks like a memory leak," "Please check for NULL here," "This is a coding standards violation," and so on.

The ostensible reason for this process was to get more engineers familiar with different parts of the codebase that they wouldn't have had any exposure to otherwise.

What we found was far more surprising -- we uncovered an amazing number of bugs before they ever reached QA.

Furthermore, the bugs were coming from everywhere, not just the junior engineers. All the engineers, including all of the most senior developers, had plenty of room for improvement.

Over time, it made all of us better engineers by making us more paranoid about potential sources of engineering defects. We learned to look at our code with a critical eye -- "What would Steve say about this code? Yeah, I'd better fix that ..."

There are always times when it's important to discuss things in person. Sometimes you do need to walk over to somebody's desk to discuss code changes in-person. But 90% of the changes are smaller or more obvious changes that don't need to be done in-person. Collaborator allows you to fire off a review that can be performed at the reviewers' convenience -- they can take as much time as they need to inspect your code, and they can fit it into their own schedule when it's most appropriate for them to do so.

I can't possibly recommend online collaboration more highly.

• Collaboration removes defects before they reach QA, saving time in testing and improving the integrity of the codebase.

• It trains developers to spot errors they might not see otherwise, and makes them much more cautious about their checkins.

• It brings all engineers in line with the company's coding standards.

• It makes engineers more familiar with parts of the codebase they might never see otherwise, breaking down the "my code/your code" silos and giving your engineering team much broader exposure to their own codebase.

• Unlike in-person code reviews, it allows reviewers to review changes at their convenience, whenever their own schedules allow them to participate, and to do so at their own pace.