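Omniverse Replicator Environment Setup and Usage Guide

Omniverse Replicator Usage Guide

This tutorial covers how to set up the environment for Omniverse Replicator and how to use it. Sky Hackathon participants can follow it to synthesize training datasets.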

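Table of Contents

- Omniverse Replicator Usage Guide
  - 1. Omniverse Environment Setup
    - 1.a. Install Omniverse Launcher
      - 1.a.1. Download Omniverse Launcher from the address below
      - 1.a.2. Install Omniverse Launcher
      - 1.a.3. Log in
    - 1.b Install Omniverse Code
  - 2. Introduction to Omniverse Code
  - 3. Generating a Scene and Synthesizing an Image Dataset with Omniverse Code/Replicator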

1. Omniverse Environment Setup

NVIDIA Omniverse runs on any RTX-equipped device. For best performance, we recommend a GeForce RTX 3070 or NVIDIA RTX A4000 GPU with at least 8 GB of VRAM.

| Component | Minimum specification |
|---|---|
| Supported OS | Windows 11 / Windows 10 (64-bit, version 1909 or later) |
| CPU | Intel i7 / AMD Ryzen, 2.5 GHz or higher |
| CPU cores | 4 or more |
| RAM | 16 GB or more |
| Storage | 500 GB SSD or larger |
| GPU | Any RTX GPU |
| VRAM | 6 GB or more |
| Minimum video driver version | See the latest available driver |

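Omniverse applications require a GPU with its driver installed. If yours is already set up, you can skip this step.

If it is not installed yet, see:

- Windows: https://blog.csdn.net/kunhe0512/article/details/124331221

- Linux: https://blog.csdn.net/kunhe0512/article/details/125061911

You may need to adapt the steps in those articles to the latest driver versions.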
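
Before installing, it can help to confirm that the machine meets the GPU requirements in the table above. The sketch below is one way to do that from Python, assuming `nvidia-smi` (installed together with the NVIDIA driver) is on the PATH. The helper names `parse_gpu_query`, `meets_minimum`, and `query_gpus` are illustrative and not part of Omniverse, and matching "RTX" in the device name is only a rough heuristic.

```python
import subprocess

# Assumption: nvidia-smi is on the PATH (it ships with the NVIDIA driver).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader,nounits",
]


def parse_gpu_query(text):
    """Parse CSV rows of the form 'name, memory_in_MiB, driver_version'."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        name, memory_mib, driver = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "vram_mib": int(memory_mib), "driver": driver})
    return gpus


def meets_minimum(gpu, min_vram_mib=6 * 1024):
    """Heuristic check: an RTX device with at least 6 GB of VRAM."""
    return "RTX" in gpu["name"] and gpu["vram_mib"] >= min_vram_mib


def query_gpus():
    """Run nvidia-smi and return one dict per detected GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_query(result.stdout)
```

On a machine with the driver installed, `query_gpus()` returns one entry per GPU, and each entry can be passed to `meets_minimum` to compare it against the 6 GB minimum. If `nvidia-smi` is missing, the call raises `FileNotFoundError`, which usually means the driver still needs to be installed.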

The steps below use Windows 10 as the example.

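1.a. Install Omniverse Launcher

1.a.1. Download Omniverse Launcher from the address below

https://www.nvidia.com/en-us/omniverse/download/

1.a.2. Install Omniverse Launcher

Double-click the downloaded omniverse-launcher-win.exe and follow the installer prompts.

1.a.3. Log in

If you do not have an account, create one. If you already have an account, log in to Omniverse Launcher.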

1.b Install Omniverse Code

Find CODE on the EXCHANGE tab, click it, and install it.

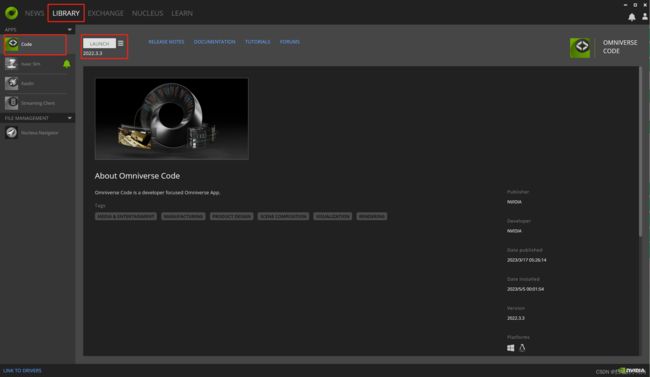

After installation, select CODE on the LIBRARY tab and launch it.

When the following window appears, the installation has succeeded.

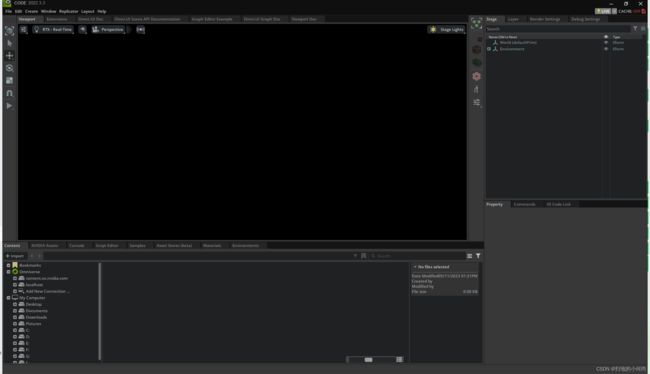

Note: wait until the "RTX Loading" message disappears before interacting with Code. Otherwise the application may stutter or close unexpectedly.

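2. Introduction to Omniverse Code

Omniverse Code is the main tool we use to generate the image dataset. Replicator is an extension that runs inside it.

You can enter prepared code in Omniverse Code to build the scene you want, then use Replicator to synthesize a training dataset from it.

The Omniverse Code interface is shown below:

Note: before generating each new scene, click File -> New -> Don't Save in the top-left corner. Editing the code repeatedly and clicking Run (Ctrl + Enter) without starting a new stage may cause the program to close unexpectedly.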

3. Generating a Scene and Synthesizing an Image Dataset with Omniverse Code/Replicator

- Open Omniverse Code.

- Enter the following code in the Script Editor:

# import the Replicator environment
import omni.replicator.core as rep

# set up the random view range for the camera: low point, high point
sequential_pos = [(-800, 220, -271), (800, 220, 500)]

# position of the look-at target
look_at_position = (-212, 78, 57)

# set up the working layer
with rep.new_layer():
    # define 3D models: USD file source links, class, initial position
    WORKSHOP = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/ArchVis/Industrial/Buildings/Warehouse/Warehouse01.usd'
    CONVEYOR = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/DigitalTwin/Assets/Warehouse/Equipment/Conveyors/ConveyorBelt_A/ConveyorBelt_A23_PR_NVD_01.usd'
    BOX1 = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/ArchVis/Industrial/Containers/Cardboard/Cardbox_A3.usd'
    BOX2 = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/ArchVis/Industrial/Containers/Cardboard/Cardbox_B3.usd'
    BOX3 = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/ArchVis/Industrial/Containers/Cardboard/Cardbox_C3.usd'
    BOX4 = 'http://omniverse-content-production.s3-us-west-2.amazonaws.com/Assets/ArchVis/Industrial/Containers/Cardboard/Cardbox_D3.usd'

    workshop = rep.create.from_usd(WORKSHOP)
    conveyor1 = rep.create.from_usd(CONVEYOR)
    conveyor2 = rep.create.from_usd(CONVEYOR)

    # the boxes carry the semantic label used for the annotations
    box1 = rep.create.from_usd(BOX1, semantics=[('class', 'box')])
    box2 = rep.create.from_usd(BOX2, semantics=[('class', 'box')])
    box3 = rep.create.from_usd(BOX3, semantics=[('class', 'box')])
    box4 = rep.create.from_usd(BOX4, semantics=[('class', 'box')])

    with workshop:
        rep.modify.pose(
            position=(0, 0, 0),
            rotation=(0, -90, -90)
        )

    with conveyor1:
        rep.modify.pose(
            position=(-40, 0, 0),
            rotation=(0, -90, -90)
        )

    with conveyor2:
        rep.modify.pose(
            position=(-40, 0, 100),
            rotation=(-90, 90, 0)
        )

    with box1:
        rep.modify.pose(
            position=(-350, 78, 57),
            rotation=(0, -90, -90),
            scale=rep.distribution.uniform(1, 1)
        )

    with box2:
        rep.modify.pose(
            position=(-100, 78, 57),
            rotation=(0, -90, -90),
            scale=rep.distribution.uniform(1, 1)
        )

    with box3:
        rep.modify.pose(
            position=(100, 78, 57),
            rotation=(0, -90, -90),
            scale=rep.distribution.uniform(1, 1)
        )

    with box4:
        rep.modify.pose(
            position=(200, 78, 57),
            rotation=(0, -90, -90),
            scale=rep.distribution.uniform(1, 1)
        )

    # define the lighting randomizer
    def sphere_lights(num):
        lights = rep.create.light(
            light_type="Sphere",
            temperature=rep.distribution.normal(3500, 500),
            intensity=rep.distribution.normal(15000, 5000),
            position=rep.distribution.uniform((-300, -300, -300), (300, 300, 300)),
            scale=rep.distribution.uniform(50, 100),
            count=num
        )
        return lights.node

    rep.randomizer.register(sphere_lights)

    # define the randomizer that sets a random position range for the targets
    def get_shapes():
        shapes = rep.get.prims(semantics=[('class', 'box')])
        with shapes:
            rep.modify.pose(
                position=rep.distribution.uniform((0, -50, 0), (0, 50, 0)))
        return shapes.node

    rep.randomizer.register(get_shapes)

    # set up the camera and attach it to a render product
    camera = rep.create.camera(position=sequential_pos[0], look_at=look_at_position)
    render_product = rep.create.render_product(camera, resolution=(512, 512))

    with rep.trigger.on_frame(num_frames=100):  # number of images to generate
        rep.randomizer.sphere_lights(4)  # number of light sources
        rep.randomizer.get_shapes()
        with camera:
            rep.modify.pose(
                position=rep.distribution.uniform(sequential_pos[0], sequential_pos[1]),
                look_at=look_at_position)

    # initialize and attach the writer for KITTI-format data
    writer = rep.WriterRegistry.get("KittiWriter")
    writer.initialize(
        output_dir="D:/sdg",
        bbox_height_threshold=25,
        fully_visible_threshold=0.95,
        omit_semantic_type=True
    )
    writer.attach([render_product])

    rep.orchestrator.preview()
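
To make the randomization ranges in the script easier to reason about, here is a small standalone sketch in plain Python. It is not part of the script to paste into the Script Editor and does not use Replicator. It assumes that `rep.distribution.uniform(low, high)` draws each coordinate independently between the matching components of `low` and `high`. The helper name `sample_uniform_point` is hypothetical.

```python
import random


def sample_uniform_point(low, high, rng=random):
    """Draw one point with each coordinate uniform between low and high."""
    return tuple(rng.uniform(lo, hi) for lo, hi in zip(low, high))


# Camera range copied from the script: low corner, high corner.
sequential_pos = [(-800, 220, -271), (800, 220, 500)]

rng = random.Random(0)  # fixed seed so repeated runs print the same points
for _ in range(3):
    # y stays at 220 because the low and high corners share that value
    print(sample_uniform_point(sequential_pos[0], sequential_pos[1], rng=rng))
```

Under that assumption, the camera moves only in x and z at a fixed height of 220. In the same way, the `get_shapes` range `(0, -50, 0)` to `(0, 50, 0)` varies only the y component, and `uniform(1, 1)` for `scale` always yields 1.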

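- Click the Run (Ctrl + Enter) button in the lower-left corner.

- In the menu at the top, select Replicator -> Start.

- Check the generated content in the D:/sdg folder. The images are in the Camera/rgb folder and the label files are in the Camera/object_detection folder, as shown in the figure below.

- Once you have completed these steps, you have successfully synthesized data with Omniverse Replicator.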
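
As a quick sanity check on the output, the label files can be read back in Python. The sketch below assumes the labels follow the standard KITTI object-detection layout (15 whitespace-separated fields per line: type, truncated, occluded, alpha, four bounding-box values, then 3D dimensions, location, and rotation) and that they are stored as `.txt` files. The helper names `parse_kitti_line` and `summarize_labels` are illustrative and not part of Replicator.

```python
from collections import Counter
from pathlib import Path

KITTI_FIELD_COUNT = 15  # standard KITTI object label layout


def parse_kitti_line(line):
    """Extract the class name and 2D bounding box from one KITTI label line."""
    parts = line.split()
    if len(parts) < KITTI_FIELD_COUNT:
        raise ValueError(f"expected {KITTI_FIELD_COUNT} fields, got {len(parts)}")
    left, top, right, bottom = (float(value) for value in parts[4:8])
    return {"label": parts[0], "bbox": (left, top, right, bottom)}


def summarize_labels(label_dir):
    """Count labelled objects per class across all .txt files in label_dir."""
    counts = Counter()
    for path in sorted(Path(label_dir).glob("*.txt")):
        for line in path.read_text().splitlines():
            if line.strip():
                counts[parse_kitti_line(line)["label"]] += 1
    return counts
```

For example, `summarize_labels("D:/sdg/Camera/object_detection")` should report how many `box` annotations were written. A count of zero would suggest that the boxes were filtered out or never in view.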