NVIDIA GTC 2022 Study Notes

I mainly watched the robotics-related sessions. My notes are summarized below:

1. Developing, Training, and Testing AI-based Robots in Simulation [S41896]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap/session/1638578342492001voYY

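I have long had questions in this area. The session introduces Isaac Sim, NVIDIA's robotics simulation platform, and its strong performance on both CPU and GPU. So far, though, I have only worked with RViz and Gazebo for simulation.

I had also come across MuJoCo and PyBullet before.

Each of these simulators has its own use cases, but I have not managed to find a thorough comparison of them.

What I did find is this forum thread: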

https://forums.developer.nvidia.com/t/migrating-from-gazebo-to-isaac-sim/166081

and some material on Isaac Sim's ROS support:

https://docs.omniverse.nvidia.com/index.html

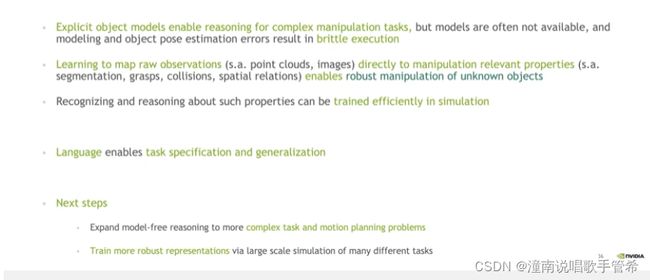

2. Object Manipulation without Explicit Models [S42024]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap/session/16390716586760012zkL

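Roughly, a robot arm needs to perceive an accurate model of an object before acting on it. If the perceived model is disturbed or noisy, the arm's behavior is strongly affected, which greatly limits what the arm can do.

The talk then covers training grasping from object models obtained through visual perception,

deriving grasp strategies directly from the observed object,

and retrieving a target object efficiently from a cluttered pile.

It also presents CLIPort.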

3. Deep Learning using NVIDIA Omniverse for Synthetic 3D Point Cloud Generation [S41484]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap/session/1637278252844001m0i6

Deep learning has achieved meaningful predictive accuracy in 3D point cloud segmentation. Point clouds are commonly output from 3D laser scanners, lidar systems, and some photogrammetry algorithms, and are the primary means of storing physically collected 3D data. Whereas popular image datasets have tens of millions of labeled scenes spanning thousands of classes, publicly available point cloud datasets have fewer than a couple dozen scenes and less than 10 classes. We believe this is largely because of the arduous nature of labeling point cloud regions in a 3D environment. NVIDIA Omniverse and other 3D software packages allow the generation of labeled point cloud data from simulation. We explore the generation of deep learning models to achieve state-of-the-art predictive accuracy by combining publicly available and synthetically generated and labeled point clouds.
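To make the idea of "labeled point clouds from simulation" concrete, here is a minimal sketch using only the Python standard library. It does not use Omniverse or any NVIDIA API; the scene (a ground plane plus a sphere), the class ids, and all function names are hypothetical and chosen for illustration. The point it shows is that a simulator knows which object each point came from, so labels come for free, and the synthetic points can then be merged with a small hand-labeled set.

```python
import math
import random

GROUND, OBJECT = 0, 1  # hypothetical class ids

def sample_ground(n, half_extent, rng):
    """Sample n labeled points on the plane z = 0."""
    return [(rng.uniform(-half_extent, half_extent),
             rng.uniform(-half_extent, half_extent),
             0.0,
             GROUND) for _ in range(n)]

def sample_sphere(n, center, radius, rng):
    """Sample n labeled points on a sphere surface."""
    points = []
    for _ in range(n):
        # Normalizing a Gaussian vector gives a uniformly random direction.
        x, y, z = (rng.gauss(0.0, 1.0) for _ in range(3))
        norm = math.sqrt(x * x + y * y + z * z) or 1.0
        points.append((center[0] + radius * x / norm,
                       center[1] + radius * y / norm,
                       center[2] + radius * z / norm,
                       OBJECT))
    return points

def make_synthetic_scene(seed):
    """Build one synthetic scene; every point is (x, y, z, label)."""
    rng = random.Random(seed)
    return (sample_ground(200, 5.0, rng)
            + sample_sphere(100, (0.0, 0.0, 1.0), 1.0, rng))

def combine(real_points, synthetic_points, seed=0):
    """Merge hand-labeled and synthetic points into one shuffled training set."""
    merged = list(real_points) + list(synthetic_points)
    random.Random(seed).shuffle(merged)
    return merged

# Stand-in for a small hand-labeled real scan (made-up values).
real_scan = [(0.1, 0.2, 0.0, GROUND), (0.0, 0.1, 1.9, OBJECT)]
training_set = combine(real_scan, make_synthetic_scene(seed=42))
```

A real pipeline would replace the analytic shapes with a simulated lidar or depth sensor in a rendered scene, but the data layout (coordinates plus a per-point class label) stays the same.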

4. GTC 2022 Keynote [S42295]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap/session/1641340307961001yn1K

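Hardware and software, with a comprehensive presence across many fields. Has technology really advanced this quickly?

Isaac Sim / Omniverse looks very powerful: digital twins and a simulation platform, with support for deep reinforcement learning.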

5. Scaling Data-driven Robot Learning [S42252]

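The talk is about having robots acquire skills from data, which is the idea behind LfD (learning from demonstration), with the goal of making robots more general-purpose.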
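As a reminder of what learning from demonstration means in its simplest form, here is a minimal behavior-cloning sketch: record (state, action) pairs from a demonstrator, then fit a policy to them by supervised regression. This is not the method from the talk. The 1D task, the `expert_policy` demonstrator, and the linear policy are all assumptions made up for illustration, and only the Python standard library is used.

```python
import random

def expert_policy(state):
    """Hypothetical demonstrator: push the state toward 0 with gain 0.5."""
    return -0.5 * state

def collect_demonstrations(n, rng):
    """Record (state, action) pairs from the demonstrator."""
    states = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(s, expert_policy(s)) for s in states]

def fit_linear_policy(demos):
    """Behavior cloning: least-squares fit of action = w * state + b."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    w = cov / var
    b = mean_a - w * mean_s
    return w, b

def rollout(w, b, state, steps):
    """Run the cloned policy on the toy dynamics: state += action."""
    for _ in range(steps):
        state += w * state + b
    return state

rng = random.Random(0)
demos = collect_demonstrations(50, rng)
w, b = fit_linear_policy(demos)
final_state = rollout(w, b, state=1.0, steps=20)
```

In real robot learning the states are images or joint readings and the policy is a neural network, but the structure is the same: the demonstrations are the training set, and no reward signal or trial and error is needed.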

Related papers were also presented at IROS 2021, on having robots learn from videos.

Reuse data across tasks, environments, and embodiments?

Generalize by leveraging broad datasets

Papers:

BC-Z: Zero-Shot Task Generalization with Robotic Imitation Learning

Learning Generalizable Robotic Reward Functions from "In-The-Wild" Human Videos, RSS '21

Dataset:

www.kaggle.com/google/bc-z-robot

Recent progress in robot learning has found that robots can acquire complex visuomotor manipulation skills through trial and error. Despite these successes, the generalization and versatility of robots across environment conditions, tasks, and objects remains a major challenge. And, unfortunately, our existing algorithms and training setups aren’t prepared to tackle such challenges, which demand large and diverse sets of tasks and experiences. This is because robots are typically trained using online data collection, using data collected entirely from scratch in a single lab environment. I’ll propose a paradigm for robot learning where we continuously accumulate, leverage, and reuse broad offline datasets across papers, much like the rest of machine learning, but notably under-explored for robotic manipulation.

6. Connect with the Experts: Robotics Development, Simulation, and Training [CWE41884]

This appears to be an officially organized session that gives attendees a chance to talk one-on-one with experts.

7. Designing a Flexible AI System for Adaptive Robot Arms [S42420]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap?search=S42420&tab.scheduledorondemand=1583520458947001NJiE

Adaptive robot arms need to be efficient and agile enough to handle a wide range of tasks and environmental conditions in real-world applications, bringing great challenges to an AI system, including diverse problems of vision-based localization, visual-servo, inspection, grasping, manipulation, dense environment reconstruction, and even a combination of these. We’ll give a high-level overview of basic AI system architecture design, and the essentials of each component in different scenarios. These two parts contribute a lot to building a flexible AI system with the adaptive learning capability using a variety of tools and infrastructures, such as simulation, easy-to-edit workflow, log & configuration manager, camera system, robust inference engine, and integrating different data sources, which is also the key to final success.

After skimming this one, my impression is that it is mainly a product introduction and offers little technical guidance.

8. Developing ROS-based Mobile Robots using NVIDIA Isaac ROS GEMs [S41833]

https://reg.rainfocus.com/flow/nvidia/gtcspring2022/aplive/page/ap/session/1638566988736001o5cj

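Get a technical overview of how NVIDIA’s hardware-accelerated robotics software packages, Isaac ROS GEMs, are used to help a robot’s perception, localization, and mapping.

This one mostly covers the platform's features and framework: ROS support, GPU acceleration, and so on.

Having watched this far, I found that the first Isaac video had the most technical guidance and hands-on content. I later went through the official site and found that the simulation platform is indeed powerful, with realistic simulated environments. But one look at the system requirements was enough to put me off.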