Table of Contents

- Preface

- Defining UDFs in SparkSQL

- SparkSQL UDF Code Examples

  - UDF Returning a Float

  - UDF Returning an Array

  - UDF Returning a Dict

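Preface

- Whether you analyze data with Hive or SparkSQL, you will often need functions. SparkSQL ships with many built-in functions for common tasks, available in pyspark.sql.functions. Hive has three common kinds of user-defined function: UDF (one-to-one), UDAF (many-to-one), and UDTF (one-to-many). Of these, SparkSQL currently supports only UDF and UDAF, and plain Python functions in PySpark cover only the one-to-one UDF case (pandas UDFs, which this article does not cover, can also perform grouped aggregation).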
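To make the three shapes concrete, here is a minimal plain-Python sketch with no Spark involved. The function names to_upper, average, and split_words are hypothetical and chosen only for illustration; they mimic the input/output shape of each kind of function, not any Hive or Spark API.

```python
from typing import Iterable, Iterator, List


def to_upper(value: str) -> str:
    """UDF shape (one-to-one): one input value yields one output value."""
    return value.upper()


def average(values: Iterable[float]) -> float:
    """UDAF shape (many-to-one): many input values collapse into one."""
    items: List[float] = list(values)
    return sum(items) / len(items)


def split_words(line: str) -> Iterator[str]:
    """UDTF shape (one-to-many): one input value expands into several."""
    for word in line.split(" "):
        yield word
```

For example, to_upper("spark") maps a single value, average([1.0, 2.0, 3.0]) reduces a group to one number, and list(split_words("hadoop spark flink")) turns one line into three items.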

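Defining UDFs in SparkSQL

- sparksession.udf.register(): the registered UDF works in both DSL and SQL style. The return value is the handle used in DSL style; the name passed as the first argument is the one used in SQL style. Here param1 is the SQL name, param2 is the Python function, and param3 is the return type.

udf = sparksession.udf.register(param1, param2, param3)

- pyspark.sql.functions.udf: works in DSL style only. Here param1 is the Python function and param2 is the return type.

udf = F.udf(param1, param2)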
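The two registration paths can be compared side by side in a short sketch. The names add_one, add_one_sql, and run_demo are hypothetical, and the PySpark imports sit inside run_demo only so that the plain function stays importable on a machine without Spark; treat this as an illustration under those assumptions, not a reference implementation.

```python
def add_one(x):
    """Plain Python function; Spark passes SQL NULL as None."""
    return None if x is None else x + 1


def run_demo():
    # Assumption: PySpark and a local Java runtime are installed.
    from pyspark.sql import SparkSession
    from pyspark.sql import functions as F
    from pyspark.sql.types import IntegerType

    spark = SparkSession.builder.appName("udf-demo").master("local[*]").getOrCreate()
    df = spark.createDataFrame([(1,), (2,), (3,)], ["n"])

    # register(): the name "add_one_sql" is for SQL, the return value is for DSL.
    add_one_dsl = spark.udf.register("add_one_sql", add_one, IntegerType())
    df.selectExpr("add_one_sql(n) AS n_plus_one").show()
    df.select(add_one_dsl(df["n"]).alias("n_plus_one")).show()

    # F.udf(): DSL only; no SQL name is registered.
    add_one_udf = F.udf(add_one, IntegerType())
    df.select(add_one_udf(df["n"]).alias("n_plus_one")).show()

    spark.stop()
```

Calling run_demo() on a machine with Spark should print the same single-column result three times, once per calling style.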

SparkSQL UDF Code Examples

UDF Returning a Float

from pyspark.sql import SparkSession

from pyspark.sql.types import StructType, StringType, IntegerType, FloatType

from pyspark.sql import functions as F

import numpy as np

if __name__ == '__main__':

ss = SparkSession.builder \

.appName("test") \

.master("local[*]") \

.getOrCreate()

sc = ss.sparkContext

rdd = sc.parallelize([1.52, 2.78, 3.62, 4.14, 5.26, 6.83, 7.91, 8.06, 9.31]).map(lambda x: [x])

df = rdd.toDF(["emb"])

def sigmoid(emb):

return (1 / (1 + np.exp(- emb))).__float__()

udf2 = ss.udf.register("udf1", sigmoid, FloatType())

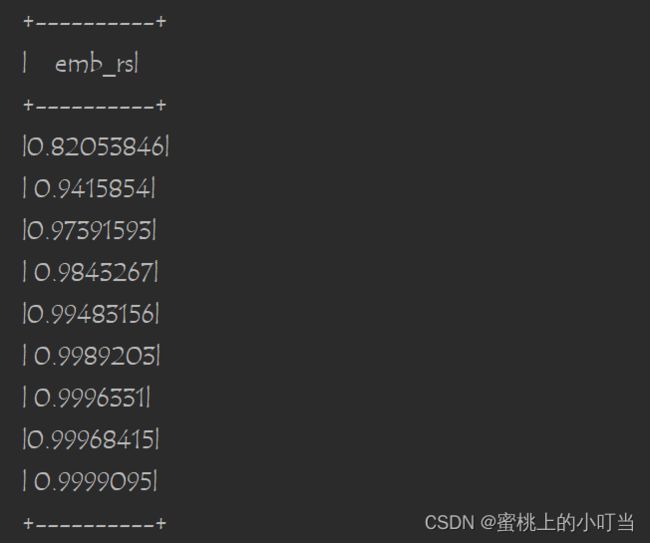

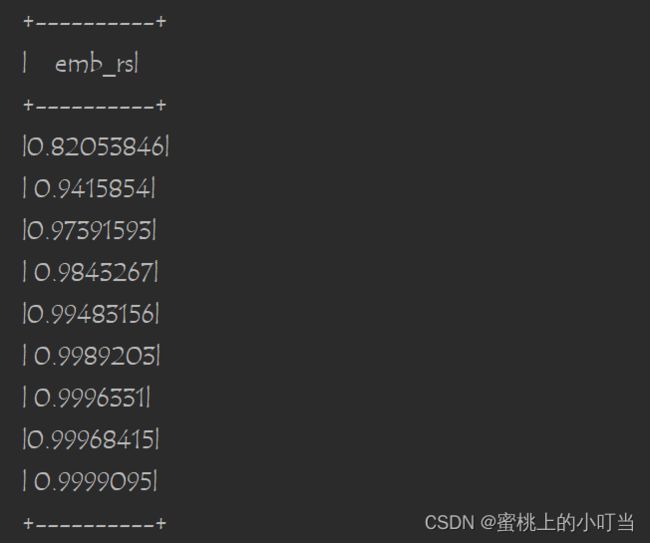

df.selectExpr("udf1(emb)").withColumnRenamed("udf1(emb)", "emb_rs").show()

df.select(udf2(df['emb'])).withColumnRenamed("udf1(emb)", "emb_rs").show()

udf3 = F.udf(sigmoid, FloatType())

df.select(udf3(df['emb'])).withColumnRenamed("sigmoid(emb)", "emb_rs").show()

- 演示结果
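The sigmoid above converts its result to a built-in float before returning it, because NumPy scalars typically cannot be serialized back to Spark as a FloatType value. One hedged alternative is to avoid NumPy altogether and use math.exp from the standard library, which already returns a Python float. The name sigmoid_py is hypothetical, and the None handling and the overflow-safe branch for negative inputs are additions not present in the original code.

```python
import math


def sigmoid_py(x):
    """Sigmoid that returns a built-in float; None passes through as None."""
    if x is None:
        return None
    if x >= 0:
        # exp(-x) <= 1 here, so this branch cannot overflow
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, exp(x) < 1, which also avoids overflow
    z = math.exp(x)
    return z / (1.0 + z)
```

This function could be passed to ss.udf.register or F.udf with FloatType() in the same way as sigmoid.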

UDF Returning an Array

from pyspark.sql import SparkSession
from pyspark.sql.types import StringType, ArrayType
from pyspark.sql import functions as F

if __name__ == '__main__':
    ss = SparkSession.builder \
        .appName("test") \
        .master("local[*]") \
        .getOrCreate()
    sc = ss.sparkContext

    # Build a one-column DataFrame of space-separated strings
    rdd = sc.parallelize([["hadoop spark flink python"], ["hadoop flink java hive"], ["hive spark java es"]])
    df = rdd.toDF(["big_data"])

    def split_line(data):
        return data.split(" ")

    # Option 1: register() with an ArrayType whose elements are strings
    udf2 = ss.udf.register("udf1", split_line, ArrayType(StringType()))
    # DSL style
    df.select(udf2(df['big_data'])).withColumnRenamed('udf1(big_data)', 'tech').show()
    # SQL style; createOrReplaceTempView avoids an error if the view already exists
    df.createOrReplaceTempView("big_datas")
    ss.sql("SELECT udf1(big_data) AS tech FROM big_datas").show(truncate=False)

    # Option 2: F.udf() is DSL only
    udf3 = F.udf(split_line, ArrayType(StringType()))
    df.select(udf3(df['big_data'])).show(truncate=False)

- Demo results
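Spark passes a SQL NULL to a Python UDF as None, so split_line above would raise an AttributeError on a null row. A null-safe variant might look like the sketch below. The name split_line_safe is hypothetical, and it deliberately uses split() without an argument, which splits on any run of whitespace and so behaves slightly differently from split(" ").

```python
from typing import List, Optional


def split_line_safe(data: Optional[str]) -> Optional[List[str]]:
    """Split on whitespace; return None for null input instead of raising."""
    if data is None:
        return None
    # split() with no argument collapses repeated spaces and drops empty tokens
    return data.split()
```

It can be registered with ArrayType(StringType()) exactly like split_line.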

UDF Returning a Dict

from pyspark.sql import SparkSession

from pyspark.sql.types import StructType, StringType, IntegerType, FloatType, ArrayType

from pyspark.sql import functions as F

if __name__ == '__main__':

ss = SparkSession.builder \

.appName("test") \

.master("local[*]") \

.getOrCreate()

sc = ss.sparkContext

rdd = sc.parallelize([1,2,3,4]).map(lambda x: [x])

df = rdd.toDF(["num"])

def odd_even(data):

if data %2 == 1:

return {"num": data, "type": "odd"}

else:

return {"num": data, "type": "even"}

"""

UDF的返回值是字典的话, 需要用StructType来接收

"""

udf1 = ss.udf.register("udf1", odd_even, StructType().add("num", IntegerType(), nullable=True).add("type", StringType(), nullable=True))

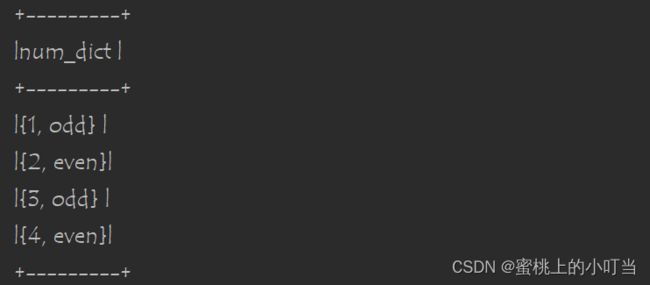

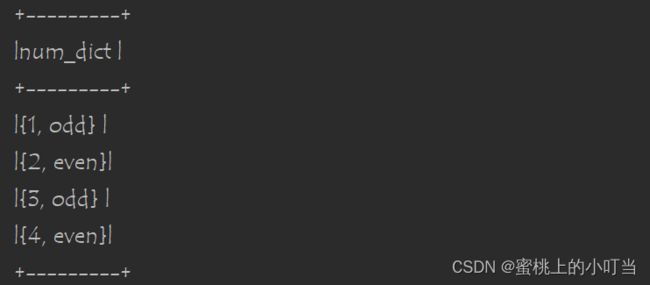

df.selectExpr("udf1(num)").withColumnRenamed("udf1(num)", "num_dict").show(truncate=False)

df.select(udf1(df['num'])).withColumnRenamed("udf1(num)", "num_dict").show(truncate=False)

- 演示结果
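The StructType return type can also be written as a DDL-formatted type string, which some readers may find easier to scan. The sketch below is an assumption-laden variant of the example above: STRUCT_DDL and run_demo are hypothetical names, the None check is an addition, and the PySpark imports sit inside run_demo only so the plain function can be used without Spark installed.

```python
# DDL form of a struct with an int field "num" and a string field "type"
STRUCT_DDL = "struct<num:int,type:string>"


def odd_even(n):
    """Return a dict whose keys match the struct field names."""
    if n is None:
        return None
    return {"num": n, "type": "odd" if n % 2 == 1 else "even"}


def run_demo():
    # Assumption: PySpark and a local Java runtime are installed.
    from pyspark.sql import SparkSession
    from pyspark.sql import functions as F

    spark = SparkSession.builder.appName("struct-udf").master("local[*]").getOrCreate()
    df = spark.createDataFrame([(1,), (2,), (3,), (4,)], ["num"])

    # The return type is given as a DDL string instead of a StructType object.
    odd_even_udf = F.udf(odd_even, STRUCT_DDL)
    result = df.select(odd_even_udf(df["num"]).alias("num_dict"))

    # Struct fields can be selected individually with dot notation.
    result.select("num_dict.num", "num_dict.type").show()

    spark.stop()
```

Calling run_demo() on a machine with Spark should show one row per input number, with the struct unpacked into separate num and type columns.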