CKA 06_Kubernetes Workloads and Scheduling: Pod Management, YAML Manifests, Labels, Pod Lifecycle, Container Probes

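Workloads and Scheduling

- 1. Pod management
  - 1.1 kubectl commands
- 2. YAML manifests
  - 2.1 YAML file format
  - 2.2 Writing a YAML manifest
- 3. Labels
  - 3.1 Node label selector
  - Exam question: running nginx and memcache containers in one pod
- 4. Pod lifecycle
  - 4.1 Init containers
- 5. Container probes
  - 5.1 Probe types
  - 5.2 Configuring liveness, readiness and startup probes
  - 5.3 Defining a liveness command
  - 5.4 Defining a readiness probe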

Official docs: Workloads | Pods

1. Pod management

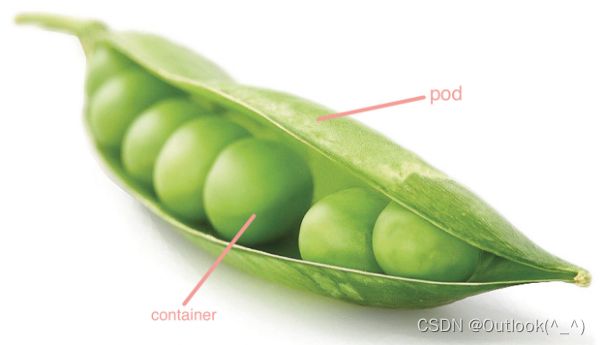

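- A container is, in essence, a process with an isolated view of the system and limited resources.

- A Pod is a logical unit that groups several containers together. It is Kubernetes' atomic unit of scheduling.

- A Pod is like a pea pod: it holds one or more containers, and those containers share the IPC, network and UTS namespaces.

- A Pod is the smallest deployable unit of computing that you can create and manage in Kubernetes.

- A Pod (as in a pod of whales or a pea pod) is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A Pod's contents are always co-located and co-scheduled, and run in a shared context. A Pod models an application-specific "logical host": it contains one or more application containers which are relatively tightly coupled. In non-cloud contexts, applications executed on the same physical or virtual machine are analogous to cloud applications executed on the same logical host.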

-

什么是 Pod ?

-

pod 要解决的核心问题:

容器之间是被 Linux namespace 和 cgroups 隔离的

-

pod 实现机制:

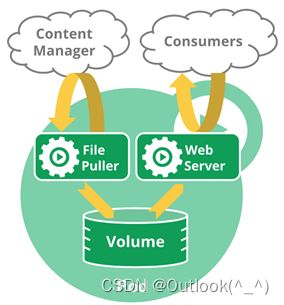

共享网络:- 容器通过 infra 容器(汇编语言编写,永远处于暂停状态)共享同一个网络命名空间。

- 容器之间使用 localhost 高速通信。

- 一个 pod 只有一份网络资源,被该 pod 内的容器共享。

- pod 的生命周期与 infra 容器一致,与其他容器无关。

共享存储

- 容器通过挂载共享的 volume 来实现共享存储
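Both sharing mechanisms can be seen in one small manifest. This sketch is not part of the original experiments. The Pod name `shared-demo`, the volume name `shared-data` and the page content are hypothetical.

A busybox sidecar writes a page into an `emptyDir` volume that nginx serves from its document root. Because both containers are in the same network namespace, the sidecar reaches nginx on `localhost`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo              # hypothetical name
spec:
  volumes:
  - name: shared-data            # emptyDir: created with the Pod, removed with the Pod
    emptyDir: {}
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: shared-data          # shared storage: nginx serves whatever the sidecar writes
      mountPath: /usr/share/nginx/html
  - name: sidecar
    image: busybox
    # write a page into the shared volume, then poll nginx over the shared network (localhost)
    command: ['sh', '-c', 'echo hello > /data/index.html; while true; do wget -qO- http://localhost; sleep 10; done']
    volumeMounts:
    - name: shared-data
      mountPath: /data
```

After `kubectl apply -f`, running `kubectl logs shared-demo -c sidecar` should print `hello` once nginx is up. The first attempts may fail while nginx is still starting.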

1.1 kubectl commands

Official docs: Kubectl Reference Docs

Official docs: Overview of kubectl

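- A flag that comes up in the exam: `--sort-by=`

Sorting by name (from the official docs)

Sorting by highest CPU usage (from the official docs)

```shell
# List all Services in the current namespace, sorted by name
kubectl get services --sort-by=.metadata.name

# List Pods, sorted by restart count
kubectl get pods --sort-by='.status.containerStatuses[0].restartCount'

# List all PersistentVolumes, sorted by capacity
kubectl get pv --sort-by=.spec.capacity.storage

# Show Pod resource usage, sorted by CPU (requires metrics-server)
kubectl top pod --sort-by=cpu
```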

- Create a Pod

- Any node inside the cluster can reach the Pod, but it cannot be reached directly from outside the cluster.

```shell
[root@k8s1 kubernetes]# kubectl run qian --image=nginx
pod/qian created
[root@k8s1 kubernetes]# kubectl get pod
NAME   READY   STATUS    RESTARTS   AGE
qian   1/1     Running   0          30s
```

- Delete a Pod

- In the exam, add `--force` to the command. kubectl then does not wait for the container to shut down gracefully, so the deletion returns much faster.

```shell
[root@k8s1 kubernetes]# kubectl delete --force pod test
warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "test" force deleted
```

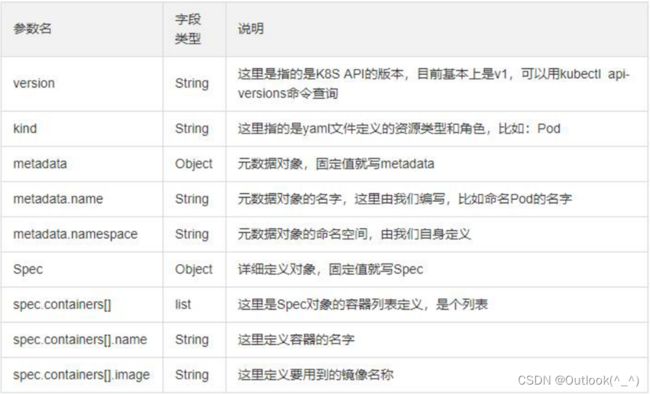

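2. YAML manifests

2.1 YAML file format

- The format is as follows:

```yaml
apiVersion: group/version  # API group and version the resource belongs to
kind:                      # type of resource to create; common workload kinds are
                           # Pod, ReplicaSet, Deployment, StatefulSet, DaemonSet, Job, CronJob
metadata:                  # metadata
  name:                    # object name
  namespace:               # namespace the object belongs to
  labels:                  # resource labels (key/value pairs)
spec:                      # desired state of the resource
```

- Look up the built-in documentation:

```shell
kubectl explain pod
```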

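2.2 Writing a YAML manifest

- In the exam, you can save time by having kubectl export an existing object as a YAML manifest, then editing that:

```shell
[root@k8s1 ~]# kubectl get pod test -o yaml > pod.yaml
[root@k8s1 ~]# vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: test
  name: test
  namespace: default
  resourceVersion: "8646"
  uid: a3836d42-9e54-4a8f-b219-a06a5556cc99
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: test
```

- Delete the fields the cluster filled in, such as `resourceVersion`, `uid` and the `status` section, before reusing the file to create a new Pod.

- To see which fields can go into the containers part of a Pod's spec (its desired state):

```shell
kubectl explain pod.spec.containers
```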

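- If one Pod defines two nginx containers, they conflict:

```shell
[root@k8s1 ~]# vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: qianqian
  name: qianqian
  namespace: default
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: qianqian
  - name: yao
    image: nginx
    imagePullPolicy: IfNotPresent   # pull only if the image is not present locally
```

```shell
[root@k8s1 ~]# kubectl apply -f pod.yaml
pod/qianqian created
```

- The events shown by `kubectl describe pod qianqian` reveal the outcome:
  - The first container, qianqian, runs successfully on k8s2.
  - The second container, yao, fails.

  Both containers share the Pod's network namespace. The second nginx therefore cannot bind port 80, which the first one already holds. `kubectl logs qianqian -c yao` should show the bind error.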

- If one Pod defines two different containers, nginx and busybox, there is no conflict:

```shell
[root@k8s1 ~]# vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: aaa
  name: aaa
  namespace: default
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: aaa
  - image: busybox
    name: bbb
    stdin: true    # keep stdin open so busybox's default shell does not exit immediately
    tty: true
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

```shell
[root@k8s1 ~]# kubectl apply -f pod.yaml
pod/aaa created
[root@k8s1 ~]# kubectl get pod
NAME   READY   STATUS    RESTARTS   AGE
aaa    2/2     Running   0          2m46s
```

- Look at how the Pod was created:

```shell
[root@k8s1 ~]# kubectl describe pod aaa
......
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  6m35s  default-scheduler  Successfully assigned default/aaa to k8s2
  Normal  Pulling    6m34s  kubelet            Pulling image "nginx"
  Normal  Pulled     6m25s  kubelet            Successfully pulled image "nginx" in 8.639223366s
  Normal  Created    6m24s  kubelet            Created container aaa
  Normal  Started    6m24s  kubelet            Started container aaa
  Normal  Pulling    6m24s  kubelet            Pulling image "busybox"
  Normal  Pulled     6m3s   kubelet            Successfully pulled image "busybox" in 21.40434676s
  Normal  Created    6m3s   kubelet            Created container bbb
  Normal  Started    6m2s   kubelet            Started container bbb
```

- Attach interactively to the busybox container's shell:

```shell
[root@k8s1 ~]# kubectl attach -it aaa -c bbb
If you don't see a command prompt, try pressing enter.
/ #
/ #
Session ended, resume using 'kubectl attach aaa -c bbb -i -t' command when the pod is running
```

- Open an interactive shell in the nginx container aaa. Exiting the shell only ends the session; the container keeps running.

```shell
[root@k8s1 ~]# kubectl exec -it aaa -c aaa -- bash
root@aaa:/# cd /etc/nginx/
root@aaa:/etc/nginx# ls
conf.d  fastcgi_params  mime.types  modules  nginx.conf  scgi_params  uwsgi_params
root@aaa:/etc/nginx# exit
```


3. Labels

- View the labels of the cluster nodes:

```shell
[root@k8s1 ~]# kubectl get nodes --show-labels
NAME   STATUS   ROLES                  AGE    VERSION   LABELS
k8s1   Ready    control-plane,master   2d2h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s1,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
k8s2   Ready    <none>                 2d2h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s2,kubernetes.io/os=linux
k8s3   Ready    <none>                 2d2h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s3,kubernetes.io/os=linux
```

3.1 Node label selector

- Add the label disktype=ssd to the worker node k8s3:

```shell
[root@k8s1 ~]# kubectl label nodes k8s3 disktype=ssd
node/k8s3 labeled
[root@k8s1 ~]# kubectl get nodes --show-labels
NAME   STATUS   ROLES                  AGE    VERSION   LABELS
k8s1   Ready    control-plane,master   2d3h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s1,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
k8s2   Ready    <none>                 2d3h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s2,kubernetes.io/os=linux
k8s3   Ready    <none>                 2d3h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s3,kubernetes.io/os=linux
```

- Edit the YAML manifest and add a node selector, so that the Pod aaa runs on k8s3, the worker node labeled disktype=ssd.

- The YAML manifest:

```shell
[root@k8s1 ~]# vim pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: aaa
  name: aaa
  namespace: default
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: aaa
  nodeSelector:
    disktype: ssd
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Apply it:

```shell
[root@k8s1 ~]# kubectl apply -f pod.yaml
pod/aaa created
```

- Check the Pod. As the desired state in the manifest requires, Pod aaa runs on k8s3:

```shell
[root@k8s1 ~]# kubectl get pod -o wide
NAME   READY   STATUS    RESTARTS   AGE   IP           NODE   NOMINATED NODE   READINESS GATES
aaa    1/1     Running   0          22s   10.244.2.9   k8s3   <none>           <none>
```

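- If the disktype=ssd label is removed from k8s3, a newly created Pod aaa cannot be placed, because the scheduler looks for a node that carries that label. A Pod that is already running is not evicted when the label is removed, because the node selector is only checked at scheduling time. That is why the old Pod is deleted first below.

- Remove the label:

```shell
[root@k8s1 ~]# kubectl label nodes k8s3 disktype-
node/k8s3 unlabeled
```

- Delete the previous Pod, then apply the manifest again:

```shell
[root@k8s1 ~]# kubectl apply -f pod.yaml
pod/aaa created
```

- The Pod is now stuck in the Pending state:

```shell
[root@k8s1 ~]# kubectl get pod
NAME   READY   STATUS    RESTARTS   AGE
aaa    0/1     Pending   0          4s
```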

- Use kubectl describe to see what happened while the Pod was being created:

```shell
[root@k8s1 ~]# kubectl describe pod aaa
......
Events:
  Type     Reason            Age  From               Message
  ----     ------            ---- ----               -------
  Warning  FailedScheduling  33s  default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't match Pod's node affinity/selector.
```

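- Put the disktype=ssd label back on k8s3, and the Pod starts successfully.

- Add the label:

```shell
[root@k8s1 ~]# kubectl label nodes k8s3 disktype=ssd
node/k8s3 labeled
```

- There is no need to apply the manifest again; just check the Pod status. The scheduler keeps retrying the Pending Pod, so once the label is back the Pod is scheduled and reaches Running:

```shell
[root@k8s1 ~]# kubectl get pod
NAME   READY   STATUS    RESTARTS   AGE
aaa    1/1     Running   0          2m13s
```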

- Look at how Pod aaa was created:

```shell
[root@k8s1 ~]# kubectl describe pod aaa
......
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  84s (x2 over 2m30s)  default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't match Pod's node affinity/selector.
  Normal   Scheduled         34s                  default-scheduler  Successfully assigned default/aaa to k8s3
  Normal   Pulling           32s                  kubelet            Pulling image "nginx"
  Normal   Pulled            26s                  kubelet            Successfully pulled image "nginx" in 6.39076152s
  Normal   Created           25s                  kubelet            Created container aaa
  Normal   Started           25s                  kubelet            Started container aaa
```
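The same constraint can also be expressed with node affinity, which supports operators such as `In` and `NotIn` in addition to exact matches. Below is a sketch of a spec fragment that should be equivalent to `nodeSelector: {disktype: ssd}`; only the scheduling part of the Pod is shown.

The "IgnoredDuringExecution" part of the field name matches the behaviour observed above: a Pod that is already running is not evicted when the node loses the label.

```yaml
spec:
  affinity:
    nodeAffinity:
      # hard requirement at scheduling time; not re-checked for running Pods
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
```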

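Exam question: run nginx and memcache containers in one pod

- Here I used nginx and redis instead.

- Requirements:

  Create a pod that contains an nginx container and a memcache container, and schedule it onto a specified node (time budget: 3 minutes).

- Approach:

  Only two things are needed:
  - List the two required containers in the spec (the desired state).
  - Use a node label to schedule the Pod onto the specified node.

- First, quickly get a template YAML manifest.

- Export the template with `-o yaml`. Combined with `--dry-run=client`, nothing is actually created:

```shell
[root@k8s1 ~]# kubectl run ccc --image=nginx --dry-run=client -o yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: ccc
  name: ccc
spec:
  containers:
  - image: nginx
    name: ccc
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
[root@k8s1 ~]# kubectl run ccc --image=nginx --dry-run=client -o yaml > pod-2.yaml
```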

- Modify the template according to the requirements:

```shell
[root@k8s1 ~]# vim pod-2.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: ccc
  name: ccc
spec:
  containers:
  - image: nginx
    name: nginx          # first container
  - name: redis          # second container
    image: redis
  nodeSelector:          # node label selector
    disktype: ssd
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Pull the redis image in advance and push it to the private registry:

```shell
[root@k8s4 ~]# docker pull redis
[root@k8s4 ~]# docker tag docker.io/library/redis:latest reg.westos.org/k8s/redis:latest
[root@k8s4 ~]# docker push reg.westos.org/k8s/redis:latest
```

- Once the image is ready, apply the YAML manifest:

```shell
[root@k8s1 ~]# kubectl apply -f pod-2.yaml
pod/ccc created
```

- Check the Pod status:

```shell
[root@k8s1 ~]# kubectl get pod ccc -o wide
NAME   READY   STATUS    RESTARTS   AGE   IP            NODE   NOMINATED NODE   READINESS GATES
ccc    2/2     Running   0          48s   10.244.2.12   k8s3   <none>           <none>
```

- Look at how the Pod was created:

```shell
[root@k8s1 ~]# kubectl describe pod ccc
......
Events:
  Type    Reason     Age  From               Message
  ----    ------     ---- ----               -------
  Normal  Scheduled  25s  default-scheduler  Successfully assigned default/ccc to k8s3
  Normal  Pulling    24s  kubelet            Pulling image "nginx"
  Normal  Pulled     19s  kubelet            Successfully pulled image "nginx" in 4.325708968s
  Normal  Created    19s  kubelet            Created container nginx
  Normal  Started    19s  kubelet            Started container nginx
  Normal  Pulling    19s  kubelet            Pulling image "redis"
  Normal  Pulled     11s  kubelet            Successfully pulled image "redis" in 7.279734329s
  Normal  Created    11s  kubelet            Created container redis
  Normal  Started    11s  kubelet            Started container redis
```
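The exam task itself asks for memcache rather than redis. Below is a sketch of the same manifest using the official `memcached` image from Docker Hub. The Pod name `nginx-memcache` is hypothetical, and the `disktype: ssd` label stands in for whatever label identifies the node the task requires.

memcached listens on port 11211 by default, so it does not clash with nginx on port 80 in the shared network namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-memcache        # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx              # listens on port 80
  - name: memcached
    image: memcached          # official image; listens on port 11211
  nodeSelector:
    disktype: ssd             # replace with the label of the node the task names
```

If the task names a node that carries no custom label, the built-in `kubernetes.io/hostname` label shown by `kubectl get nodes --show-labels` (for example `kubernetes.io/hostname: k8s3`) can serve as the selector instead.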

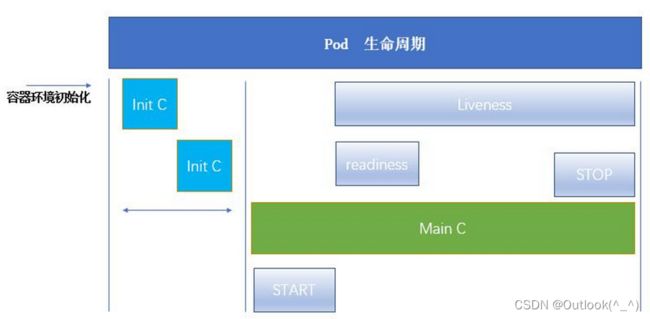

4. Pod lifecycle

Official docs: Workloads | Pods | Pod Lifecycle

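- Pods follow a defined lifecycle:
  - They start in the Pending phase.
  - They move to Running if at least one of their primary containers starts OK.
  - They then reach either the Succeeded or the Failed phase, depending on whether any container in the Pod terminated in failure.

  While a Pod is running, the kubelet can restart containers to handle some kinds of faults. Within a Pod, Kubernetes tracks the state of each container and determines what action to take to make the Pod healthy again.

  In the Kubernetes API, a Pod has both a specification and an actual status. The status of a Pod object includes a set of Pod conditions. You can also inject custom readiness information into those conditions if your application needs it.

  A Pod is scheduled only once in its lifetime. Once a Pod is scheduled (assigned) to a node, it runs on that node until it stops or is terminated.

- A Pod can contain multiple containers in which the application runs. It can also have one or more init containers, which run before the app containers are started.

- Init containers are just like regular containers, except for two points:

  - They always run to completion.

  - They do not support readiness probes, because they must run to completion before the Pod can become ready. Each init container must succeed before the next one can run.

- If an init container fails, the kubelet keeps restarting it until it succeeds. However, if the Pod's restartPolicy is Never, it is not restarted and the whole Pod is treated as failed.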
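The restartPolicy difference can be observed with a deliberately failing init container. In this sketch the Pod name `init-fail-demo` is hypothetical, and the init container always exits with status 1:

- With `restartPolicy: Never`, `kubectl get pod` should end up showing the Pod as `Init:Error` (phase Failed).
- After changing the policy to `Always`, the init container should instead be restarted with back-off (`Init:CrashLoopBackOff`).
- In both cases the nginx container never starts.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-fail-demo        # hypothetical name
spec:
  restartPolicy: Never        # change to Always to compare the behaviour
  initContainers:
  - name: always-fails
    image: busybox
    command: ['sh', '-c', 'echo init failing; exit 1']   # non-zero exit = init failure
  containers:
  - name: nginx               # never started, because the init container never succeeds
    image: nginx
```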

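4.1 Init containers

Official docs: Workloads | Pods | Init Containers

- What can init containers do?

  - They can contain utilities or custom setup code that are not present in the app image.

  - They can run these utilities securely, without making the app image less secure.

  - The people who build the application image and the people who deploy it can work independently, without jointly building a single app image.

  - They can run with a different view of the filesystem than the app containers in the same Pod. Consequently, they can be given access to Secrets that the app containers cannot access.

  - Because init containers run to completion before any app container starts, they offer a mechanism to block or delay app container startup until a set of preconditions are met. Once the preconditions are met, all app containers in the Pod can start in parallel.

- The Pod starts a pause container, which holds the shared network namespace the other containers join.

- Init containers are important: their main job is to prepare things for the main container (Main C), for example unpacking tar archives.

- Init containers run in strict order: if the first one fails, the second cannot run.

- The main container (Main C) can start only after the init containers (Init C) have exited.

- The liveness probe keeps checking throughout the container's life.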
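As an illustration of an init container preparing files for the main container, here is a sketch. The Pod name `init-prepare-demo`, the volume name and the page content are hypothetical.

The init container writes a page into an `emptyDir` volume and exits. Only then is nginx started, serving that page from the same volume.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-prepare-demo     # hypothetical name
spec:
  volumes:
  - name: web-content
    emptyDir: {}
  initContainers:
  - name: prepare-content
    image: busybox
    # runs to completion before nginx is started
    command: ['sh', '-c', 'echo "prepared by init container" > /work/index.html']
    volumeMounts:
    - name: web-content
      mountPath: /work
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: web-content       # same volume, so nginx serves the prepared page
      mountPath: /usr/share/nginx/html
```

Once the Pod is Running, `kubectl exec init-prepare-demo -- cat /usr/share/nginx/html/index.html` should print the line written by the init container.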

- Write a YAML manifest that defines two init containers:

```shell
[root@k8s1 ~]# vim pod-2.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: ccc
  name: ccc
spec:
  initContainers:
  - name: init-myservice
    image: busybox
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  - name: init-mydb
    image: busybox
    command: ['sh', '-c', "until nslookup mydb.default.svc.cluster.local; do echo waiting for mydb; sleep 2; done"]
  containers:
  - image: nginx
    name: nginx
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Apply the YAML manifest. The Pod does not start:

```shell
[root@k8s1 ~]# kubectl apply -f pod-2.yaml
pod/ccc created
```

- Check the Pod status. The init containers have not completed:

```shell
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS     RESTARTS   AGE
ccc    0/1     Init:0/2   0          17s
```

- Look at the events to find the cause.

- The init containers wait for Services named myservice and mydb, which do not exist yet, so their nslookup loops never succeed. Those Services have to be created separately.

```shell
[root@k8s1 ~]# kubectl describe pod ccc
......
Events:
  Type    Reason     Age  From               Message
  ----    ------     ---- ----               -------
  Normal  Scheduled  51s  default-scheduler  Successfully assigned default/ccc to k8s2
  Normal  Pulling    49s  kubelet            Pulling image "busybox"
  Normal  Pulled     39s  kubelet            Successfully pulled image "busybox" in 10.183866119s
  Normal  Created    38s  kubelet            Created container init-myservice
  Normal  Started    38s  kubelet            Started container init-myservice
```

- Check the init containers.

- The log of the first init container shows that it keeps failing to resolve the name:

```shell
[root@k8s1 ~]# kubectl logs ccc -c init-myservice
waiting for myservice
Server:         10.96.0.10
Address:        10.96.0.10:53

** server can't find myservice.default.svc.cluster.local: NXDOMAIN

*** Can't find myservice.default.svc.cluster.local: No answer

waiting for myservice
```

- The second init container has not even started:

```shell
[root@k8s1 ~]# kubectl logs ccc -c init-mydb
Error from server (BadRequest): container "init-mydb" in pod "ccc" is waiting to start: PodInitializing
```

- Write a manifest for the Service the first init container is waiting for:

```shell
[root@k8s1 ~]# vim service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
```

- Apply it:

```shell
[root@k8s1 ~]# kubectl apply -f service.yaml
service/myservice created
```

- Check the Services:

```shell
[root@k8s1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   2d3h
myservice    ClusterIP   10.103.125.44   <none>        80/TCP    7s
```

- Check the Pod again. It is still not ready, but the first init container has now succeeded and exited:

```shell
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS     RESTARTS   AGE
ccc    0/1     Init:1/2   0          7m16s
[root@k8s1 ~]# kubectl logs ccc -c init-myservice
waiting for myservice
Server:         10.96.0.10
Address:        10.96.0.10:53

*** Can't find myservice.default.svc.cluster.local: No answer
```

- Now look at the log of the second init container, which is waiting for mydb:

```shell
[root@k8s1 ~]# kubectl logs ccc -c init-mydb
waiting for mydb
Server:         10.96.0.10
Address:        10.96.0.10:53

** server can't find mydb.default.svc.cluster.local: NXDOMAIN

*** Can't find mydb.default.svc.cluster.local: No answer
```

- Add the Service for the second init container (mydb) to the manifest:

```shell
[root@k8s1 ~]# vim service.yaml
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
---
apiVersion: v1
kind: Service
metadata:
  name: mydb
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9377
```

- Apply it:

```shell
[root@k8s1 ~]# kubectl apply -f service.yaml
service/myservice unchanged
service/mydb created
```

- Check the Services:

```shell
[root@k8s1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   2d3h
mydb         ClusterIP   10.97.3.214     <none>        80/TCP    10s
myservice    ClusterIP   10.103.125.44   <none>        80/TCP    4m
```

- Verify the Pod status:

```shell
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS    RESTARTS   AGE
ccc    1/1     Running   0          10m
```

- Look at how the Pod was created:

```shell
[root@k8s1 ~]# kubectl describe pod ccc
......
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  11m    default-scheduler  Successfully assigned default/ccc to k8s2
  Normal  Pulling    11m    kubelet            Pulling image "busybox"
  Normal  Pulled     11m    kubelet            Successfully pulled image "busybox" in 10.183866119s
  Normal  Created    11m    kubelet            Created container init-myservice
  Normal  Started    11m    kubelet            Started container init-myservice
  Normal  Pulling    4m1s   kubelet            Pulling image "busybox"
  Normal  Created    3m54s  kubelet            Created container init-mydb
  Normal  Pulled     3m54s  kubelet            Successfully pulled image "busybox" in 7.059943166s
  Normal  Started    3m53s  kubelet            Started container init-mydb
  Normal  Pulling    39s    kubelet            Pulling image "nginx"
  Normal  Pulled     31s    kubelet            Successfully pulled image "nginx" in 7.459692664s
  Normal  Created    31s    kubelet            Created container nginx
  Normal  Started    30s    kubelet            Started container nginx
```

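5. Container probes

Official docs: Workloads | Pods | Pod Lifecycle

- A probe is a diagnostic performed periodically by the kubelet on a container. To perform a diagnostic, the kubelet either executes code within the container or makes a network request.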

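5.1 Probe types

For running containers, the kubelet can optionally perform, and react to, three kinds of probes:

- livenessProbe

  Indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subjected to its restart policy. If a container does not provide a liveness probe, the default state is Success.

- readinessProbe

  Indicates whether the container is ready to respond to requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The default readiness state before the initial delay is Failure. If a container does not provide a readiness probe, the default state is Success.

- startupProbe

  Indicates whether the application within the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is subjected to its restart policy. If a container does not provide a startup probe, the default state is Success.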

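5.2 Configuring liveness, readiness and startup probes

Official docs: Tasks | Configure Pods and Containers

- Configure liveness, readiness and startup probes for containers.

- The kubelet uses liveness probes to know when to restart a container. For example, a liveness probe can catch a deadlock, where the application is running but unable to make progress. Restarting a container in such a state can help make the application more available despite bugs.

- The kubelet uses readiness probes to know when a container is ready to start accepting traffic. A Pod is considered ready only when all of its containers are ready. One use of this signal is to control which Pods are used as backends for Services: a Pod that is not ready is removed from the Service load balancers.

- The kubelet uses startup probes to know when a container application has started. If such a probe is configured, liveness and readiness checks do not start until it succeeds, which makes sure those probes don't interfere with the application's startup. This can be used to apply liveness checks to slow-starting containers without the kubelet killing them before they are up and running.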
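The startup probe is not exercised in the experiments that follow. Below is a sketch adapted to the nginx image used throughout; the Pod name and the threshold values are illustrative assumptions.

- The startup probe allows up to failureThreshold × periodSeconds = 30 × 10 = 300 seconds for nginx to start accepting connections on port 80.
- The liveness probe only starts running after the startup probe has succeeded.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: startup-demo          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    startupProbe:
      tcpSocket:
        port: 80
      failureThreshold: 30    # up to 30 failed checks ...
      periodSeconds: 10       # ... 10 s apart: 300 s allowed for startup
    livenessProbe:            # disabled until the startupProbe succeeds
      tcpSocket:
        port: 80
      periodSeconds: 5
```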

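5.3 Defining a liveness command

- Many applications that run for long periods eventually transition to broken states from which they cannot recover except by being restarted. Kubernetes provides liveness probes to detect and remedy such situations.

- In this YAML manifest the Pod has a single container:
  - The periodSeconds field tells the kubelet to perform a liveness probe every 5 seconds.
  - The initialDelaySeconds field tells the kubelet to wait 5 seconds before the first probe.
  - This example uses a TCP check (`tcpSocket`) rather than a command. The probe succeeds if the kubelet can open a TCP connection to port 80 of the container.

```shell
[root@k8s1 ~]# vim pod-3.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: ccc
spec:
  containers:
  - image: nginx
    name: nginx
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 5
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Apply the manifest:

```shell
[root@k8s1 ~]# kubectl apply -f pod-3.yaml
pod/ccc created
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS    RESTARTS   AGE
ccc    1/1     Running   0          89s
```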

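- Change the port in the manifest from the correct value, 80, to a wrong one, 8080. The probe runs every 5 seconds. With the default failureThreshold of 3, three consecutive failures make the kubelet kill the container, which is then restarted according to the Pod's restart policy. This repeats for as long as the port stays wrong.

```shell
[root@k8s1 ~]# vim pod-3.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: ccc
spec:
  containers:
  - image: nginx
    name: nginx
    livenessProbe:
      tcpSocket:
        port: 8080         # wrong on purpose: nginx listens on 80
      initialDelaySeconds: 5
      periodSeconds: 5
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Delete the previous Pod ccc, then apply the manifest:

```shell
[root@k8s1 ~]# kubectl apply -f pod-3.yaml
pod/ccc created
```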

- Use watch to refresh the Pod status every second.

- The RESTARTS count keeps climbing; it reached 3 within two minutes:

```shell
[root@k8s1 ~]# watch -n 1 'kubectl get pod ccc'
Every 1.0s: kubectl get pod ccc        Sun Apr 24 01:05:13 2022
NAME   READY   STATUS    RESTARTS      AGE
ccc    1/1     Running   1 (21s ago)   50s

Every 1.0s: kubectl get pod ccc        Sun Apr 24 01:05:52 2022
NAME   READY   STATUS    RESTARTS      AGE
ccc    1/1     Running   2 (34s ago)   88s

Every 1.0s: kubectl get pod ccc        Sun Apr 24 01:06:25 2022
NAME   READY   STATUS    RESTARTS      AGE
ccc    1/1     Running   3 (31s ago)   2m
```

- Look at how the Pod was created again:

```shell
[root@k8s1 ~]# kubectl describe pod ccc
......
Events:
  Type     Reason     Age                  From               Message
  ----     ------     ----                 ----               -------
  Normal   Scheduled  2m28s                default-scheduler  Successfully assigned default/ccc to k8s2
  Normal   Pulled     2m17s                kubelet            Successfully pulled image "nginx" in 10.996320247s
  Normal   Pulled     112s                 kubelet            Successfully pulled image "nginx" in 5.251596219s
  Normal   Pulled     79s                  kubelet            Successfully pulled image "nginx" in 14.180982639s
  Normal   Created    78s (x3 over 2m16s)  kubelet            Created container nginx
  Normal   Started    78s (x3 over 2m16s)  kubelet            Started container nginx
  Warning  Unhealthy  59s (x9 over 2m9s)   kubelet            Liveness probe failed: dial tcp 10.244.1.14:8080: connect: connection refused
  Normal   Killing    59s (x3 over 119s)   kubelet            Container nginx failed liveness probe, will be restarted
  Normal   Pulling    58s (x4 over 2m28s)  kubelet            Pulling image "nginx"
```
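The official task page calls this step "Define a liveness command" because its example uses an `exec` probe rather than a TCP check. Below is a sketch along the lines of that example; the Pod name is hypothetical.

1. The container creates /tmp/healthy.
2. It deletes the file after 30 seconds.
3. From then on `cat /tmp/healthy` exits non-zero, so the liveness probe fails and the kubelet restarts the container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-demo    # hypothetical name
spec:
  containers:
  - name: liveness
    image: busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:              # exit code 0 = healthy, anything else = failure
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
```

About 30 seconds after the container starts, `kubectl describe pod liveness-exec-demo` should begin to list "Liveness probe failed" events, followed by a restart.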

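5.4 Defining a readiness probe

- Sometimes applications are temporarily unable to serve traffic. For example, an application might need to load large amounts of data or configuration files during startup, or depend on external services after startup. In such cases you don't want to kill the application, but you don't want to send it requests either. Kubernetes provides readiness probes to detect and mitigate these situations. A Pod whose containers report that they are not ready does not receive traffic through Kubernetes Services.

- Readiness probes run on the container during its whole lifecycle.

- Liveness probes do not wait for readiness probes to succeed. If you want to wait before the liveness probe runs, use initialDelaySeconds or a startupProbe.

- Readiness probes are configured in the same way as liveness probes. The only difference is that you use the readinessProbe field instead of the livenessProbe field.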
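To illustrate "only the field name changes", the official task page shows a readiness probe that reuses the exec check from its liveness example. The fragment below belongs under a container entry. It assumes that the container creates /tmp/healthy once it is ready to serve.

```yaml
readinessProbe:
  exec:
    command:                  # exit code 0 = ready; otherwise the Pod is removed from Service endpoints
    - cat
    - /tmp/healthy
  initialDelaySeconds: 5
  periodSeconds: 5
```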

- Write the YAML manifest.

- This one fails on purpose: the readiness probe requests /test.html, which does not exist in the nginx image. The Pod will be Running and pass its liveness probe, but it will never become Ready.

```shell
[root@k8s1 ~]# vim pod-3.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: nginx
  name: ccc
spec:
  containers:
  - image: nginx
    name: nginx
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 5
    readinessProbe:
      httpGet:
        path: /test.html
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3
      timeoutSeconds: 1
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
```

- Apply the manifest:

```shell
[root@k8s1 ~]# kubectl apply -f pod-3.yaml
pod/ccc created
```

- Monitor the Pod status with watch.

- The Pod is Running and is never restarted, but READY stays at 0/1:

```shell
[root@k8s1 ~]# watch -n 1 'kubectl get pod ccc'
Every 1.0s: kubectl get pod ccc        Sun Apr 24 01:12:24 2022
NAME   READY   STATUS    RESTARTS   AGE
ccc    0/1     Running   0          52s
```

- Look at the events.

- The readiness probe gets an HTTP 404 because test.html cannot be found:

```shell
[root@k8s1 ~]# kubectl describe pod ccc
......
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  101s               default-scheduler  Successfully assigned default/ccc to k8s2
  Normal   Pulling    100s               kubelet            Pulling image "nginx"
  Normal   Pulled     96s                kubelet            Successfully pulled image "nginx" in 4.905888547s
  Normal   Created    95s                kubelet            Created container nginx
  Normal   Started    95s                kubelet            Started container nginx
  Warning  Unhealthy  30s (x21 over 90s) kubelet            Readiness probe failed: HTTP probe failed with statuscode: 404
```

Delete the Services that are no longer needed:

```shell
[root@k8s1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   2d4h
mydb         ClusterIP   10.97.3.214     <none>        80/TCP    18m
myservice    ClusterIP   10.103.125.44   <none>        80/TCP    22m
[root@k8s1 ~]# kubectl delete svc mydb
service "mydb" deleted
[root@k8s1 ~]# kubectl delete svc myservice
service "myservice" deleted
[root@k8s1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   2d4h
```

- Expose the Pod's port 80 as a Service:

```shell
[root@k8s1 ~]# kubectl expose pod ccc --port=80 --target-port=80
[root@k8s1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
ccc          ClusterIP   10.110.34.32   <none>        80/TCP    22s
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP   2d4h
```
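`kubectl expose` generates the Service object for you. Below is a roughly equivalent declarative manifest for exposing Pod ccc on port 80. It is a sketch that assumes the Pod label `run: nginx` used above; the generated object may also carry defaults not shown here.

The key point for this experiment is the selector. Every Pod matching it is a candidate backend, but only Pods that are Ready are listed in the Service's Endpoints.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ccc
  labels:
    run: nginx
spec:
  selector:
    run: nginx          # Pods with this label become backends once they are Ready
  ports:
  - protocol: TCP
    port: 80            # port of the Service's ClusterIP
    targetPort: 80      # port the nginx container listens on
```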

```shell
[root@k8s1 ~]# kubectl describe svc ccc
Name:              ccc
Namespace:         default
Labels:            run=nginx
Annotations:       <none>
Selector:          run=nginx
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.110.34.32
IPs:               10.110.34.32
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:
Session Affinity:  None
Events:            <none>
```

The Endpoints field is empty: the Pod is not ready, so it is not added as a backend of the Service.

- To fix the problem, create test.html inside the Pod's container:

```shell
[root@k8s1 ~]# kubectl exec ccc -- touch /usr/share/nginx/html/test.html
[root@k8s1 ~]# kubectl exec ccc -- ls /usr/share/nginx/html/
50x.html
index.html
test.html
```

- Finally, check the Pod status again.

- Success! The Pod is now ready:

```shell
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS    RESTARTS   AGE
ccc    1/1     Running   0          6m59s
```

- Check the Service. The Endpoints field is no longer empty:

```shell
[root@k8s1 ~]# kubectl describe svc ccc
Name:              ccc
Namespace:         default
Labels:            run=nginx
Annotations:       <none>
Selector:          run=nginx
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.110.34.32
IPs:               10.110.34.32
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.15:80
Session Affinity:  None
Events:            <none>
```

- If test.html is deleted again, the Pod goes back to being Running (alive) but not Ready, and its address disappears from the Service endpoints:

```shell
[root@k8s1 ~]# kubectl exec ccc -- rm -f /usr/share/nginx/html/test.html
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS    RESTARTS   AGE
ccc    0/1     Running   0          8m23s
[root@k8s1 ~]# kubectl describe svc ccc
Name:              ccc
Namespace:         default
Labels:            run=nginx
Annotations:       <none>
Selector:          run=nginx
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.110.34.32
IPs:               10.110.34.32
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:
Session Affinity:  None
Events:            <none>
```

- Restoring the file fixes the problem again, and the service becomes reachable through its IP:

```shell
[root@k8s1 ~]# kubectl exec ccc -- touch /usr/share/nginx/html/test.html
[root@k8s1 ~]# kubectl exec ccc -- ls /usr/share/nginx/html/test.html
/usr/share/nginx/html/test.html
[root@k8s1 ~]# kubectl get pod ccc
NAME   READY   STATUS    RESTARTS   AGE
ccc    1/1     Running   0          9m26s
[root@k8s1 ~]# kubectl describe svc ccc
Name:              ccc
Namespace:         default
Labels:            run=nginx
Annotations:       <none>
Selector:          run=nginx
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.110.34.32
IPs:               10.110.34.32
Port:              <unset>  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.15:80
Session Affinity:  None
Events:            <none>
```