05 Spring Cloud: Distributed Transactions with Seata

Distributed transactions with Seata

1. Preface

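It is essential to understand that distributed transactions can never be solved 100% perfectly. All we can do is raise the probability of success as far as possible, ideally close to 99.999%. To get there, manual intervention is sometimes part of the process.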

2. Scenario

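Consider the following business scenario. When an order is created, the corresponding stock must be reduced and the user's loyalty points must increase. The payment service must also process the payment. The microservices involved are: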

order service, product service, points service, and payment service

2.1 Key code of the order service

1. Controller layer

```java
@GetMapping("/add/order")
public ResponseEntity<String> addOrder(Order order) {
    try {
        orderService.addOrder(order);
        return new ResponseEntity<String>("Order added successfully", HttpStatus.OK);
    } catch (Exception exception) {
        return new ResponseEntity<String>(exception.getMessage(), HttpStatus.BAD_REQUEST);
    }
}
```

2. Service layer

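```java
import java.util.Random;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Service
@Transactional
public class OrderServiceImpl implements OrderService {

    @Autowired
    private OrderMapper orderMapper;

    @Autowired
    private ProductClient productClient;

    private final Random random = new Random();

    @Override
    public void addOrder(Order order) {
        // Remote call through OpenFeign.
        // With Feign's default ErrorDecoder a 5xx response is normally thrown as a
        // FeignException before this check runs, so the check is only a safety net.
        ResponseEntity<Product> responseEntity = productClient.getProductById(order.getPid());
        if (responseEntity.getStatusCode().equals(HttpStatus.INTERNAL_SERVER_ERROR)) {
            throw new RuntimeException("product-service returned a server error");
        }
        Product product = responseEntity.getBody();
        order.setPname(product.getPname());
        order.setPrice(product.getPrice());
        order.setUid(1);
        order.setUsername("李小龙");
        orderMapper.insert(order);

        // Reduce stock, also through OpenFeign
        ResponseEntity<String> reduceStockEntity = productClient.reduceStock(product.getPid(), order.getNumber());
        // Check the stock-reduction response, not the earlier product lookup
        if (reduceStockEntity.getStatusCode().equals(HttpStatus.INTERNAL_SERVER_ERROR)) {
            throw new RuntimeException(reduceStockEntity.getBody());
        }

        randomThrowException();
    }

    private void randomThrowException() {
        int n = random.nextInt(3) + 1; // random number in the range [1, 3]
        if (n == 3) {
            System.out.println(8 / 0); // throws ArithmeticException one time in three
        }
    }
}
```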

3. OpenFeign interface

```java
@FeignClient(qualifier = "productClient", value = "product-service")
public interface ProductClient {

    @GetMapping("/get/product")
    public ResponseEntity<Product> getProductById(@RequestParam("id") Integer id);

    @GetMapping("/reduce/stock")
    public ResponseEntity<String> reduceStock(@RequestParam("pid") Integer pid,
                                              @RequestParam("num") Integer num);
}
```

2.2 Key code of the product service

1. Controller layer

```java
@GetMapping("/get/product")
public ResponseEntity<Product> getProductById(@RequestParam("id") Integer id) {
    try {
        Product product = productService.getProductById(id);
        return new ResponseEntity<>(product, HttpStatus.OK);
    } catch (Exception exception) {
        return new ResponseEntity<>(null, HttpStatus.INTERNAL_SERVER_ERROR);
    }
}

@GetMapping("/reduce/stock")
public ResponseEntity<String> reduceStock(@RequestParam("pid") Integer pid,
                                          @RequestParam("num") Integer num) {
    try {
        productService.reduceStock(pid, num);
        return new ResponseEntity<>("Stock reduced successfully", HttpStatus.OK);
    } catch (Exception ex) {
        ex.printStackTrace();
        return new ResponseEntity<>("Failed to reduce stock", HttpStatus.INTERNAL_SERVER_ERROR);
    }
}
```

2. Service layer

```java
@Service
@Transactional
public class ProductServiceImpl implements ProductService {

    @Autowired
    private ProductMapper productMapper;

    @Override
    public Product getProductById(Integer id) {
        return productMapper.selectByPrimaryKey(id);
    }

    @Override
    public void reduceStock(Integer pid, Integer num) {
        Product product = getProductById(pid);
        if (num <= 0) {
            throw new RuntimeException("Invalid quantity");
        }
        if (product.getStock() < num) {
            throw new RuntimeException("Insufficient stock");
        }
        product.setStock(product.getStock() - num);
        productMapper.updateByPrimaryKeySelective(product);
    }
}
```

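Problem: the order service calls the product service to reduce the stock, and the reduction succeeds. The order service then calls randomThrowException(). When that method divides by zero (one time in three), adding the order fails and the order service's local transaction is rolled back. The product service's local transaction that reduced the stock, however, has already been committed. The two services' data are now inconsistent, and that is the problem.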
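The effect can be reproduced without Spring, Feign or a database. This is a hypothetical simulation with the following simplifications:

- Each service's data is an in-memory field.
- The product service's local transaction counts as committed as soon as `reduceStock` returns.
- The order service's local transaction works on a copy that is only published on success.
- The failure is forced instead of random.

Only the order of steps mirrors `addOrder` above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation of two services, each with its own local transaction.
public class InconsistencyDemo {
    static int stock = 100;                                // product-service database
    static final List<Integer> orders = new ArrayList<>(); // order-service database

    // product-service: its local transaction is committed when the call returns
    static void reduceStock(int num) {
        if (stock < num) {
            throw new RuntimeException("Insufficient stock");
        }
        stock -= num;
    }

    // order-service: changes go to a copy and are published only on local commit
    static void addOrder(int num, boolean failAfterRemoteCall) {
        List<Integer> pending = new ArrayList<>(orders);
        pending.add(num);                 // orderMapper.insert(order)
        reduceStock(num);                 // remote call: already committed on the other side
        if (failAfterRemoteCall) {
            throw new ArithmeticException("/ by zero"); // randomThrowException()
        }
        orders.clear();
        orders.addAll(pending);           // local commit
    }

    public static void main(String[] args) {
        try {
            addOrder(5, true);
        } catch (ArithmeticException e) {
            // local rollback of order-service: the pending copy is simply discarded
        }
        System.out.println("orders=" + orders.size() + ", stock=" + stock); // orders=0, stock=95
    }
}
```

No order exists, yet five units of stock are gone. Fixing this requires undoing the already committed stock change too, which is what Seata sets out to do.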

3. Seata

Official website: http://seata.io/zh-cn/

3.1 What is Seata

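Seata is an open-source distributed transaction solution. It aims to provide high-performance, easy-to-use distributed transaction services in microservice architectures. Seata offers the AT, TCC, SAGA and XA transaction modes, giving users a one-stop distributed transaction solution. We will focus on the AT mode.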

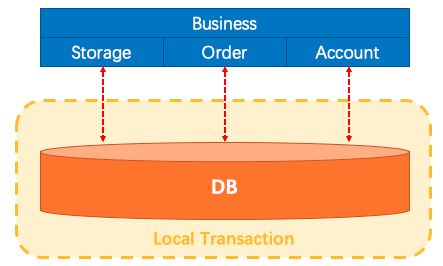

3.2 Traditional transactions

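In a traditional, monolithic project, a transaction may span three modules, but developers use a single data source. Data consistency is therefore naturally guaranteed by the local transaction.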

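In a microservice architecture things get more complicated. The three modules each use a different data source, and each data source can only guarantee the consistency of its own module's data.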

How does Seata handle this problem?

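Seata treats a global transaction as one large transaction made up of a set of branch transactions. A branch transaction can simply be thought of as a local transaction.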

3.3 The three components of Seata

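Transaction Coordinator (TC)

Transaction coordinator. Maintains the state of global and branch transactions and drives the commit or rollback of the global transaction.

Transaction Manager (TM)

Transaction manager. Defines the scope of a global transaction, that is, when the global transaction begins and when it is committed or rolled back. The TM asks the TC to begin the global transaction; the TC only opens it. Once all microservices have been called, the TM sends another message to the TC telling it to commit or roll back.

Resource Manager (RM)

Resource manager (it manages the database resource). It registers branch transactions with the TC, reports their status to the TC, and receives the coordinator's instructions to commit or roll back branch transactions. Each RM sends the result of its local transaction, together with its microservice's IP and port, to the TC.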

3.4 Typical flow of a distributed transaction managed by Seata

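- The TM asks the TC to begin a global transaction, and the TC generates a globally unique XID.

- The XID is propagated along the microservice call chain.

- Each RM registers a branch transaction with the TC, placing it under the global transaction identified by the XID.

- The TM asks the TC to commit or roll back the global transaction identified by the XID.

- The TC drives all branch transactions under that XID to complete the commit or rollback.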
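The five steps above can be modelled in a few lines of plain Java. This is a hypothetical, in-memory sketch. `TransactionCoordinator`, `Branch`, `callService` and the XID format are made up for illustration and are not Seata's API. In real Seata the XID travels between services with each request, and every branch is a local database transaction.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of the TM / TC / RM interaction; not Seata's API.
public class GlobalTxFlowDemo {

    /** A branch transaction as seen by the TC. */
    static class Branch {
        final String service;
        String state = "PHASE_ONE_DONE"; // local transaction already committed in phase one

        Branch(String service) { this.service = service; }
    }

    /** TC: tracks each global transaction's branches and drives phase two. */
    static class TransactionCoordinator {
        private final Map<String, List<Branch>> globals = new HashMap<>();
        private long nextId = 1;

        String begin() {                                  // step 1: TC generates the XID
            String xid = "127.0.0.1:8091:" + nextId++;    // illustrative format only
            globals.put(xid, new ArrayList<>());
            return xid;
        }

        void registerBranch(String xid, Branch branch) {  // step 3: RM registers a branch
            List<Branch> branches = globals.get(xid);
            if (branches == null) {
                throw new IllegalStateException("unknown XID " + xid);
            }
            branches.add(branch);
        }

        void finish(String xid, boolean commit) {         // step 5: TC drives every branch
            for (Branch b : globals.remove(xid)) {
                b.state = commit ? "COMMITTED" : "ROLLED_BACK";
            }
        }
    }

    /** Inside a called service: the RM only knows the XID that arrived with the request. */
    static Branch callService(TransactionCoordinator tc, String xid, String service) {
        Branch branch = new Branch(service);
        tc.registerBranch(xid, branch);
        return branch;
    }

    /** TM side: begin, call services passing the XID (step 2), then decide (step 4). */
    static List<Branch> runGlobalTx(TransactionCoordinator tc, boolean businessFailed) {
        String xid = tc.begin();
        List<Branch> branches = new ArrayList<>();
        branches.add(callService(tc, xid, "order-service"));
        branches.add(callService(tc, xid, "product-service"));
        tc.finish(xid, !businessFailed);
        return branches;
    }

    public static void main(String[] args) {
        TransactionCoordinator tc = new TransactionCoordinator();
        for (Branch b : runGlobalTx(tc, true)) {
            System.out.println(b.service + " -> " + b.state); // both print ROLLED_BACK
        }
    }
}
```

The key design point is that only the TC sees all branches. The TM makes one decision and the TC applies it to every registered branch.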

3.4.1 Design idea

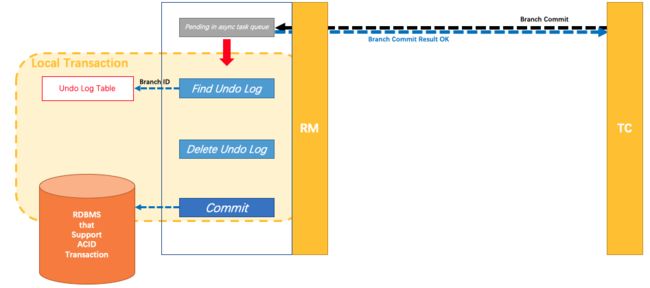

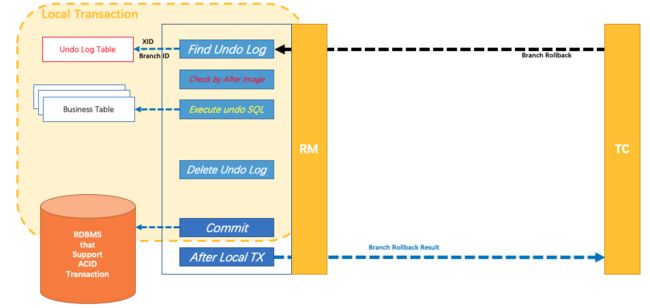

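The core of AT mode is that it does not intrude on business code. It is an improved two-phase commit; its design is shown in the figures above.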

Phase one

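The business data and the rollback log (undo log) are committed in the same local transaction, after which local locks and connection resources are released. The key step is parsing the business SQL, turning it into an undo log, and writing that log to the database together with the business change. How is this done? It relies on a proxy data source, DataSourceProxy. As the name suggests, it wraps the real data source; it is analyzed in detail later.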

Phase two

If the distributed transaction succeeds, the TC tells the RMs to delete the undo logs asynchronously.

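If the distributed transaction fails, the TM sends a rollback request to the TC. When an RM receives the rollback request from the TC, it uses the XID and branch ID to find the corresponding undo log records. It then generates reverse SQL from those records and executes it, which completes the branch rollback.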
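To make the two phases concrete, here is a hypothetical sketch of undo logging for a single UPDATE on one row. The maps stand in for the `seata_product` and `undo_log` tables; real Seata serializes whole before/after images into `undo_log.rollback_info`. The check that the current value still equals the after image is in the spirit of the `client.undo.dataValidation=true` option in the config.txt of section 3.7. The undo log is deliberately left in place when that check fails so that someone can resolve it manually.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of AT-mode undo logging; not Seata's implementation.
// stockTable stands in for seata_product (pid -> stock), undoLogTable for undo_log.
public class UndoLogDemo {

    /** One undo record: the row's value before and after the business SQL. */
    static final class UndoLog {
        final int pid;
        final int beforeStock;
        final int afterStock;

        UndoLog(int pid, int beforeStock, int afterStock) {
            this.pid = pid;
            this.beforeStock = beforeStock;
            this.afterStock = afterStock;
        }
    }

    static final Map<Integer, Integer> stockTable = new HashMap<>();
    static final Map<String, UndoLog> undoLogTable = new HashMap<>(); // key: xid + ":" + branchId

    private static String key(String xid, long branchId) {
        return xid + ":" + branchId;
    }

    /** Phase one: the business update and its undo log belong to the same local transaction. */
    static void reduceStock(String xid, long branchId, int pid, int num) {
        int before = stockTable.get(pid);   // before image
        stockTable.put(pid, before - num);  // business SQL: UPDATE ... SET stock = stock - num
        int after = stockTable.get(pid);    // after image
        undoLogTable.put(key(xid, branchId), new UndoLog(pid, before, after));
    }

    /** Phase two after success: the undo log is no longer needed and is deleted. */
    static void branchCommit(String xid, long branchId) {
        undoLogTable.remove(key(xid, branchId));
    }

    /** Phase two after failure: locate the undo log by XID + branch ID and apply the reverse update. */
    static void branchRollback(String xid, long branchId) {
        UndoLog log = undoLogTable.get(key(xid, branchId));
        if (log == null) {
            return; // phase one never wrote anything for this branch
        }
        int current = stockTable.get(log.pid);
        if (current != log.afterStock) {
            // The row changed outside the global transaction: keep the log for manual handling
            throw new IllegalStateException("dirty write detected on pid " + log.pid);
        }
        stockTable.put(log.pid, log.beforeStock); // reverse SQL: UPDATE ... SET stock = before
        undoLogTable.remove(key(xid, branchId));
    }

    public static void main(String[] args) {
        stockTable.put(1, 100);
        reduceStock("xid-1", 1L, 1, 5);
        System.out.println("after phase one: " + stockTable.get(1)); // 95
        branchRollback("xid-1", 1L);
        System.out.println("after rollback: " + stockTable.get(1));  // 100
    }
}
```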

Overall execution flow

3.4.2 Design highlights

Compared with other distributed transaction frameworks, Seata's architecture has several highlights:

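- At the application layer, automatic compensation is implemented by parsing SQL, which keeps intrusion into business code to a minimum;
- The TC (transaction coordinator) is deployed independently and is responsible for registering and rolling back transactions;
- Global locks provide write isolation and read isolation.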
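Write isolation through the global lock can be pictured as a lock table on the TC, keyed by row. The sketch below is hypothetical and much simpler than Seata's `lock_table`; its `table:pk` row key is a simplification. A branch must obtain a row's global lock before its local commit, and the locks are released when the global transaction finishes.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical TC-side global lock table: row key -> XID that owns the row.
public class GlobalLockDemo {
    private final Map<String, String> locks = new HashMap<>();

    /** A branch calls this before its local commit; false means another XID owns the row. */
    synchronized boolean tryLock(String xid, String table, Object pk) {
        String owner = locks.putIfAbsent(table + ":" + pk, xid); // simplified row key
        return owner == null || owner.equals(xid);
    }

    /** Called once the global transaction has finished phase two. */
    synchronized void releaseAll(String xid) {
        locks.values().removeIf(xid::equals);
    }

    public static void main(String[] args) {
        GlobalLockDemo tc = new GlobalLockDemo();
        System.out.println(tc.tryLock("xid-A", "seata_product", 1)); // true
        System.out.println(tc.tryLock("xid-B", "seata_product", 1)); // false: xid-A owns pid 1
        tc.releaseAll("xid-A");
        System.out.println(tc.tryLock("xid-B", "seata_product", 1)); // true
    }
}
```

Because xid-B cannot commit locally until xid-A has finished phase two, two global transactions cannot overwrite each other's unfinished changes. The same waiting causes the blocking on hot data discussed among the problems below.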

3.4.3 Known problems

Performance overhead

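A single UPDATE statement requires several extra steps:

- obtaining the global transaction XID (a round trip to the TC);
- taking a before image (parsing the SQL and querying the database once);
- taking an after image (another query);
- inserting the undo log (one more write);
- checking for lock conflicts before commit (another round trip to the TC).

Each of these is a synchronous round trip to either the TC or the database. In addition, inserting the undo log into a BLOB column is not particularly fast. Every write statement pays this overhead; a rough estimate is a fivefold increase in response time.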

Cost-effectiveness

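To compensate automatically, Seata must generate and persist before and after images for every transaction. In real business scenarios, though, how often do distributed transactions actually fail and need to be rolled back? Suppose, following the 80/20 rule, that 20% of transactions roll back. Then the 80% that succeed pay five times the response time for the sake of the 20% that fail. Is that cost really worth it, compared with having the application implement a compensating transaction?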

Global locks

Hot data

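Compared with XA, Seata releases database locks once phase one succeeds. However, checking the global lock before the phase-one commit also lengthens how long the data locks are held. How much cheaper this is than XA's prepare has to be measured in the actual business scenario. Global locks provide isolation, but the price is blocking, which lowers concurrency; the problem is much worse for hot data.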

Lock release time on rollback

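On rollback, Seata must first roll back each branch with its undo log and delete the undo log on every node. Only then can the global locks held by the TC be released. If phase two is a rollback, locks are therefore held even longer.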

Deadlocks

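Introducing global locks adds an extra risk of deadlock. If a deadlock does occur, Seata keeps retrying and ultimately relies on the global lock wait timing out. This is not elegant, and it also prolongs how long database locks are held.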
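The bounded retry below is a hypothetical sketch of how such a deadlock eventually resolves. Each waiting branch gives up after a fixed number of attempts; its local transaction then rolls back and its rows are freed. The parameters echo `client.rm.lock.retryInterval` and `client.rm.lock.retryTimes` from the config.txt in section 3.7. The loop itself is illustrative, not Seata's code, and treating the attempt limit as a total count is an assumption.

```java
import java.util.function.BooleanSupplier;

// Hypothetical bounded-retry loop for acquiring a global lock; not Seata's implementation.
public class LockRetryDemo {

    /** Number of calls to tryLock made by the most recent acquireWithRetry. */
    static int attempts;

    /**
     * Calls tryLock up to maxAttempts times, sleeping retryIntervalMs between attempts.
     * Returns false when all attempts fail; the caller would then roll back its local
     * transaction, releasing its row locks and breaking the deadlock.
     */
    static boolean acquireWithRetry(BooleanSupplier tryLock, long retryIntervalMs, int maxAttempts) {
        attempts = 0;
        while (attempts < maxAttempts) {
            attempts++;
            if (tryLock.getAsBoolean()) {
                return true;
            }
            if (attempts < maxAttempts) {
                try {
                    Thread.sleep(retryIntervalMs); // local DB row locks are still held while waiting
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // stop retrying if the thread is interrupted
                    return false;
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // The lock becomes free on the third attempt in this example
        boolean ok = acquireWithRetry(() -> ++calls[0] >= 3, 10, 30);
        System.out.println(ok + " after " + attempts + " attempts"); // true after 3 attempts
        // A lock that never frees up: the branch gives up after 5 attempts
        boolean never = acquireWithRetry(() -> false, 1, 5);
        System.out.println(never + " after " + attempts + " attempts"); // false after 5 attempts
    }
}
```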

3.5 Setting up the Seata TC

Download the Seata TC server, version seata-1.3.0, from https://github.com/seata/seata/releases/tag/v1.3.0.

1. Unzip seata-1.3.0, go to the conf directory, open registry.conf and change it to the following configuration:

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "DEFAULT_GROUP" # same group as the microservices
    namespace = ""
    cluster = "default"
    username = ""
    password = ""
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "file"

  file {
    name = "file.conf"
  }
}
```

2. Open file.conf:

```
store {
  ## store mode: file, db, redis
  mode = "db"

  ## database store property
  db {
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.cj.jdbc.Driver"
    url = "jdbc:mysql://127.0.0.1:3306/k15?serverTimezone=GMT%2B8"
    user = "root"
    password = "root"
    minConn = 5
    maxConn = 30
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }
}
```

3. Create the Seata database tables

See global_table, branch_table, lock_table and undo_log in the appendix.

4. Go to the bin directory and start Seata

Windows:

Just double-click seata-server.bat. The server registers itself with Nacos; the default service name is seata-server and the default port is 8091.

Linux:

seata-server.sh

Note:

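If the microservice jars and Seata are not on the same server, add the following configuration to the microservices. For example, this applies when the microservices run locally on Windows while Seata runs on a Linux server.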

```yaml
spring:
  cloud:
    alibaba:
      seata:
        tx-service-group: my_test_tx_group
seata:
  registry:
    type: nacos
    nacos:
      server-addr: 192.168.1.23:8848
      group: DEFAULT_GROUP
      username: nacos
      password: nacos
```

3.6 Setting up the Seata TM and RM

1. Add the following dependency to both product-service and order-service:

```xml
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
</dependency>
```

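2. Add the following configuration class to both product-service and order-service. It creates a proxy data source. The proxy data source exists to create proxy connections, and the proxy connections exist to create proxy Statements, which can produce before images and after images.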

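```java
import com.alibaba.druid.pool.DruidDataSource;
import io.seata.rm.datasource.DataSourceProxy;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;

@Configuration
public class DataSourceProxyConfig {

    // The real connection pool, bound to the spring.datasource.* properties
    @Bean
    @ConfigurationProperties(prefix = "spring.datasource")
    public DruidDataSource druidDataSource() {
        return new DruidDataSource();
    }

    // Seata's proxy around the pool. It is marked @Primary so that components that
    // inject a DataSource by type (e.g. MyBatis) receive the proxy rather than the raw
    // pool, which would bypass before/after images and undo logs.
    @Primary
    @Bean
    public DataSourceProxy dataSource(DruidDataSource druidDataSource) {
        return new DataSourceProxy(druidDataSource);
    }
}
```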

First, note that Spring Boot's DAO layer uses DruidDataSource as its data source by default, while Seata only works through the proxy data source DataSourceProxy.

Source code walkthrough:

The DataSourceProxy class

```java
public DataSourceProxy(DataSource targetDataSource, String resourceGroupId) {
    super(targetDataSource);
    this.tableMetaExcutor = new ScheduledThreadPoolExecutor(1, new NamedThreadFactory("tableMetaChecker", 1, true));
    this.init(targetDataSource, resourceGroupId);
}
```

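This creates the proxy data source. init() borrows a connection from the target data source to read the JDBC URL and database type, and registers the data source as a resource with the RM. Proxy connections (ConnectionProxy) are then created by DataSourceProxy.getConnection(), which wraps the target connection.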

In AbstractConnectionProxy, the connection creates a proxy statement (StatementProxy):

```java
public Statement createStatement() throws SQLException {
    Statement targetStatement = this.getTargetConnection().createStatement();
    return new StatementProxy(this, targetStatement);
}
```

Following the call into BaseTransactionalExecutor.execute(), which calls doExecute(), we reach AbstractDMLBaseExecutor.executeAutoCommitFalse(). Its source is:

```java
if (!"mysql".equalsIgnoreCase(this.getDbType()) && this.getTableMeta().getPrimaryKeyOnlyName().size() > 1) {
    throw new NotSupportYetException("multi pk only support mysql!");
} else {
    TableRecords beforeImage = this.beforeImage();
    T result = this.statementCallback.execute(this.statementProxy.getTargetStatement(), args);
    TableRecords afterImage = this.afterImage(beforeImage);
    this.prepareUndoLog(beforeImage, afterImage);
    return result;
}
```

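The code takes a before image before statementCallback.execute runs the business SQL, and an after image afterwards. prepareUndoLog() then builds the undo log from the two images. These are plain method calls inside the executor, not a separate AOP aspect.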
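The wrapping idea can be reproduced with a JDK dynamic proxy. This is a swapped-in technique for illustration only: Seata's StatementProxy is an ordinary wrapper class. `SqlExecutor` and `trace` are hypothetical stand-ins for `java.sql.Statement` and the executor. The sketch shows only the ordering: before image, business SQL, after image, undo log.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of a "proxy statement"; no real JDBC is involved.
public class StatementProxyDemo {

    /** Stand-in for java.sql.Statement. */
    public interface SqlExecutor {
        int executeUpdate(String sql);
    }

    /** Records the order in which things happen. */
    static final List<String> trace = new ArrayList<>();

    static SqlExecutor proxy(SqlExecutor target) {
        InvocationHandler handler = (proxyInstance, method, methodArgs) -> {
            trace.add("beforeImage");                          // would SELECT the rows about to change
            Object result;
            try {
                result = method.invoke(target, methodArgs);    // the business SQL on the real statement
            } catch (InvocationTargetException e) {
                throw e.getCause();                            // rethrow the real SQL error unchanged
            }
            trace.add("afterImage");                           // would SELECT the same rows again
            trace.add("prepareUndoLog");                       // would queue the undo_log insert
            return result;
        };
        return (SqlExecutor) Proxy.newProxyInstance(
                SqlExecutor.class.getClassLoader(), new Class<?>[] {SqlExecutor.class}, handler);
    }

    public static void main(String[] args) {
        SqlExecutor real = sql -> {
            trace.add("execute: " + sql);
            return 1;
        };
        proxy(real).executeUpdate("UPDATE seata_product SET stock = stock - 1 WHERE pid = 1");
        trace.forEach(System.out::println);
    }
}
```

Running `main` prints beforeImage, then the execute line, then afterImage and prepareUndoLog. The caller only ever sees a `SqlExecutor`, which is why business code needs no changes.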

3. Add the following configuration to both product-service and order-service. Its purpose is to let them find the TC.

```yaml
server:
  port: 8081   # give order-service its own port if both run on one machine

spring:
  application:
    name: product-service   # or order-service
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    type: com.alibaba.druid.pool.DruidDataSource
    password: root
    url: jdbc:mysql://localhost:3306/k15?serverTimezone=UTC
    username: root
  cloud:
    nacos:
      discovery:
        server-addr: 127.0.0.1:8848
    alibaba:
      seata:
        tx-service-group: my_test_tx_group # must be exactly this name; why not simply "default"?
```

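Explanation: in Seata 0.9, the file.conf file in the conf directory contains this parameter:

```
service {
  #vgroup->rgroup
  vgroup_mapping.my_test_tx_group = "default"
  …
```

In other words, my_test_tx_group is a virtual transaction group that the TC side maps to a real cluster named default. The client's tx-service-group must therefore match a vgroup mapping key configured for the TC. Any name works as long as such a mapping exists; my_test_tx_group is simply the key the default configuration already maps. In Seata 1.3.0 the same mapping appears as service.vgroupMapping.my_test_tx_group=default in config.txt.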
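The lookup can be illustrated with a hypothetical resolver over two keys from the config.txt in section 3.7: `service.vgroupMapping.my_test_tx_group=default` and `service.default.grouplist=127.0.0.1:8091`. This is a sketch, not Seata's code. The grouplist step corresponds to a `file` registry. With the Nacos registry used here, the cluster name `default` is matched against the `cluster = "default"` of the seata-server instance registered in Nacos.

```java
import java.util.Map;

// Hypothetical sketch: tx-service-group -> cluster name -> TC address.
public class VGroupResolveDemo {

    static String resolveTcAddress(Map<String, String> config, String txServiceGroup) {
        String cluster = config.get("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroupMapping for " + txServiceGroup);
        }
        String address = config.get("service." + cluster + ".grouplist");
        if (address == null) {
            throw new IllegalStateException("no grouplist for cluster " + cluster);
        }
        return address;
    }

    public static void main(String[] args) {
        Map<String, String> config = Map.of(
                "service.vgroupMapping.my_test_tx_group", "default",
                "service.default.grouplist", "127.0.0.1:8091");
        System.out.println(resolveTcAddress(config, "my_test_tx_group")); // 127.0.0.1:8091
    }
}
```

Passing "default" as the group fails here because there is no `service.vgroupMapping.default` key. That is the practical answer to the question in the YAML comment.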

Add the Druid dependency:

```xml
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>1.2.8</version>
</dependency>
```

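4. Add the @GlobalTransactional(timeoutMills = 6000000) annotation to the addOrder method in the order-service service layer, and that is all. The timeout is in milliseconds, so 6000000 is 100 minutes.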

5. Test with Postman (details omitted).

3.7 Storing the TC configuration in the Nacos config center

1. Open registry.conf in Seata's conf directory:

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "DEFAULT_GROUP" # same group as the microservices
    namespace = ""
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "nacos"

  nacos {
    serverAddr = "127.0.0.1:8848"
    namespace = ""
    group = "SEATA_GROUP"
    username = ""
    password = ""
  }
}
```

2. In the Seata root directory, create config.txt with the following content:

```properties
transport.type=TCP
transport.server=NIO
transport.heartbeat=true
transport.enableClientBatchSendRequest=false
transport.threadFactory.bossThreadPrefix=NettyBoss
transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker
transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler
transport.threadFactory.shareBossWorker=false
transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector
transport.threadFactory.clientSelectorThreadSize=1
transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread
transport.threadFactory.bossThreadSize=1
transport.threadFactory.workerThreadSize=default
transport.shutdown.wait=3
service.vgroupMapping.my_test_tx_group=default
service.default.grouplist=127.0.0.1:8091
service.enableDegrade=false
service.disableGlobalTransaction=false
client.rm.asyncCommitBufferLimit=10000
client.rm.lock.retryInterval=10
client.rm.lock.retryTimes=30
client.rm.lock.retryPolicyBranchRollbackOnConflict=true
client.rm.reportRetryCount=5
client.rm.tableMetaCheckEnable=false
client.rm.sqlParserType=druid
client.rm.reportSuccessEnable=false
client.rm.sagaBranchRegisterEnable=false
client.tm.commitRetryCount=5
client.tm.rollbackRetryCount=5
client.tm.degradeCheck=false
client.tm.degradeCheckAllowTimes=10
client.tm.degradeCheckPeriod=2000
store.mode=db
store.db.datasource=druid
store.db.dbType=mysql
store.db.driverClassName=com.mysql.cj.jdbc.Driver
store.db.url=jdbc:mysql://127.0.0.1:3306/k15?serverTimezone=Asia/Shanghai
store.db.user=root
store.db.password=root
store.db.minConn=5
store.db.maxConn=30
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.queryLimit=100
store.db.lockTable=lock_table
store.db.maxWait=5000
store.redis.host=127.0.0.1
store.redis.port=6379
store.redis.maxConn=10
store.redis.minConn=1
store.redis.database=0
store.redis.password=null
store.redis.queryLimit=100
server.recovery.committingRetryPeriod=1000
server.recovery.asynCommittingRetryPeriod=1000
server.recovery.rollbackingRetryPeriod=1000
server.recovery.timeoutRetryPeriod=1000
server.maxCommitRetryTimeout=-1
server.maxRollbackRetryTimeout=-1
server.rollbackRetryTimeoutUnlockEnable=false
client.undo.dataValidation=true
client.undo.logSerialization=jackson
client.undo.onlyCareUpdateColumns=true
server.undo.logSaveDays=7
server.undo.logDeletePeriod=86400000
client.undo.logTable=undo_log
client.log.exceptionRate=100
transport.serialization=seata
transport.compressor=none
metrics.enabled=false
metrics.registryType=compact
metrics.exporterList=prometheus
metrics.exporterPrometheusPort=9898
```

3. Copy script/config-center/nacos/nacos-config.sh from the seata-1.3.0 source package into the seata/conf directory.

4. Run nacos-config.sh. On Windows, right-click in the conf directory and choose "Git Bash Here", then run:

```shell
$ sh nacos-config.sh -h localhost -p 8848 -u nacos -w nacos
```
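For orientation, here is a hypothetical Java sketch of what the 1.3.0 script is understood to do with config.txt. Every key=value line becomes its own Nacos configuration item, with the key as the dataId, the text after the first `=` as the content, and SEATA_GROUP as the group. SEATA_GROUP matches `group = "SEATA_GROUP"` in registry.conf above. The sketch only splits lines and sends nothing; the script itself is the authority on its exact behaviour.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: split config.txt lines into (dataId, content) pairs.
public class ConfigTxtSplitDemo {

    static List<String[]> toNacosItems(List<String> lines) {
        List<String[]> items = new ArrayList<>();
        for (String line : lines) {
            String trimmed = line.trim();
            int eq = trimmed.indexOf('=');
            if (trimmed.isEmpty() || trimmed.startsWith("#") || eq <= 0) {
                continue; // skip blank lines, comments and anything that is not key=value
            }
            String dataId = trimmed.substring(0, eq);   // e.g. store.mode
            String content = trimmed.substring(eq + 1); // e.g. db (may itself contain '=')
            items.add(new String[] {dataId, content});
        }
        return items;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "service.vgroupMapping.my_test_tx_group=default",
                "",
                "store.db.url=jdbc:mysql://127.0.0.1:3306/k15?serverTimezone=Asia/Shanghai");
        for (String[] item : toNacosItems(lines)) {
            // each pair would become one config item in Nacos under group SEATA_GROUP
            System.out.println("dataId=" + item[0] + ", group=SEATA_GROUP, content=" + item[1]);
        }
    }
}
```

Splitting at the first `=` matters for values such as `store.db.url`, whose query string contains another `=`.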

4. Appendix

1. Related database tables (order table, product table)

```sql
DROP TABLE IF EXISTS `seata_order`;
CREATE TABLE `seata_order` (
  `id` int(0) NOT NULL AUTO_INCREMENT COMMENT 'order id',
  `number` int(0) DEFAULT NULL COMMENT 'order quantity',
  `pid` int(0) NOT NULL COMMENT 'product id',
  `pname` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL COMMENT 'product name',
  `price` decimal(10, 2) DEFAULT NULL COMMENT 'price',
  `uid` int(0) DEFAULT NULL COMMENT 'customer id',
  `username` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL COMMENT 'customer name',
  PRIMARY KEY (`id`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;
```

```sql
-- ----------------------------
-- Table structure for seata_product
-- ----------------------------
DROP TABLE IF EXISTS `seata_product`;
CREATE TABLE `seata_product` (
  `pid` int(0) NOT NULL AUTO_INCREMENT COMMENT 'product id',
  `pname` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL COMMENT 'product name',
  `price` decimal(10, 2) DEFAULT NULL COMMENT 'product price',
  `stock` int(0) DEFAULT NULL COMMENT 'stock',
  PRIMARY KEY (`pid`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of seata_product
-- ----------------------------
INSERT INTO `seata_product` VALUES (1, 'phone', 1000.00, 100);
INSERT INTO `seata_product` VALUES (2, 'charger', 2000.00, 100);
INSERT INTO `seata_product` VALUES (3, 'earphones', 200.00, 100);
```

2. Seata tables

```sql
DROP TABLE IF EXISTS `branch_table`;
CREATE TABLE `branch_table` (
  `branch_id` bigint(0) NOT NULL,
  `xid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,
  `transaction_id` bigint(0) DEFAULT NULL,
  `resource_group_id` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `resource_id` varchar(256) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `local_key` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `branch_type` varchar(8) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `status` tinyint(0) DEFAULT NULL,
  `client_id` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `application_data` varchar(2000) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `gmt_create` datetime(0) DEFAULT NULL,
  `gmt_modified` datetime(0) DEFAULT NULL,
  PRIMARY KEY (`branch_id`) USING BTREE,
  INDEX `idx_xid`(`xid`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;
```

```sql
-- ----------------------------
-- Table structure for global_table
-- ----------------------------
DROP TABLE IF EXISTS `global_table`;
CREATE TABLE `global_table` (
  `xid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,
  `transaction_id` bigint(0) DEFAULT NULL,
  `status` tinyint(0) NOT NULL,
  `application_id` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `transaction_service_group` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `transaction_name` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `timeout` int(0) DEFAULT NULL,
  `begin_time` bigint(0) DEFAULT NULL,
  `application_data` varchar(2000) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `gmt_create` datetime(0) DEFAULT NULL,
  `gmt_modified` datetime(0) DEFAULT NULL,
  PRIMARY KEY (`xid`) USING BTREE,
  INDEX `idx_gmt_modified_status`(`gmt_modified`, `status`) USING BTREE,
  INDEX `idx_transaction_id`(`transaction_id`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;
```

```sql
-- ----------------------------
-- Table structure for lock_table
-- ----------------------------
DROP TABLE IF EXISTS `lock_table`;
CREATE TABLE `lock_table` (
  `row_key` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,
  `xid` varchar(96) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `transaction_id` bigint(0) DEFAULT NULL,
  `branch_id` bigint(0) NOT NULL,
  `resource_id` varchar(256) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `table_name` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `pk` varchar(36) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci DEFAULT NULL,
  `gmt_create` datetime(0) DEFAULT NULL,
  `gmt_modified` datetime(0) DEFAULT NULL,
  PRIMARY KEY (`row_key`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;
```

```sql
-- ----------------------------
-- Table structure for undo_log
-- ----------------------------
DROP TABLE IF EXISTS `undo_log`;
CREATE TABLE `undo_log` (
  `id` bigint(0) NOT NULL AUTO_INCREMENT,
  `branch_id` bigint(0) NOT NULL,
  `xid` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `context` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL,
  `rollback_info` longblob NOT NULL,
  `log_status` int(0) NOT NULL,
  `log_created` datetime(0) NOT NULL,
  `log_modified` datetime(0) NOT NULL,
  PRIMARY KEY (`id`) USING BTREE,
  UNIQUE INDEX `ux_undo_log`(`xid`, `branch_id`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 1 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;
```

5. Seata with multiple data sources

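Suppose the order service has its own order database, the stock lives in a separate stock database, and the Seata TC uses yet another database. No existing configuration or code needs to change in this multi-data-source setup. Just add an undo_log table to the order database and to the stock database. The Seata (TC) database does not need an undo_log table.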