Climbing the Ladder: A Complete Guide to Docker (Part 2)

5. Container Data Volumes

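Data volumes are the technique for syncing data from inside a container to the host machine.

A directory inside the container is mounted onto a directory on the Linux host.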

5.1 Mounting a directory with -v

-v host_filesystem_path:container_filesystem_path

Test: create a centos container, mount a directory, and create a file hello.volume in it.

[root@ct7_1 ~]# docker run -it -v /home/centos_:/home centos bash

[root@5b8a92a9eb9b /]# cd home/

[root@5b8a92a9eb9b home]# ls

[root@5b8a92a9eb9b home]# touch hello.volume

[root@5b8a92a9eb9b home]# exit

exit

[root@ct7_1 ~]# cd /home/centos_/

[root@ct7_1 centos_]# ls

hello.volume

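The mount works: the file created inside the container shows up in the host directory.

Now look at the container's metadata: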

docker inspect 5b8a92a9eb9b

# other parts omitted

......

"Mounts": [ # mount information

{

"Type": "bind",

"Source": "/home/centos_", # host path

"Destination": "/home", # path inside the container

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

......

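5.2 Named and anonymous mounts

A volume can be specified in three formats:

-v /host_path:container_path # path (bind) mount

-v container_path # anonymous mount

-v volume_name:container_path # named mount

A leading slash / means a host path; without a leading slash, the first part is a volume name.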
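As a rough illustration of the rule above, here is a small bash function that classifies a -v argument. `volume_kind` is a hypothetical helper written for this post, not part of Docker, and it only checks the part before the first colon; Docker's real parser handles more cases.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify a `docker run -v` spec the way this section describes.
# Simplified on purpose: real Docker parsing handles more cases than this.
volume_kind() {
  local spec=$1
  case $spec in
    *:*) ;;                       # has a "source:" part, classified below
    *) echo anonymous; return ;;  # only a container path, e.g. -v /etc/nginx
  esac
  case ${spec%%:*} in
    /*) echo bind ;;              # source starts with "/": a host path (bind mount)
    *) echo named ;;              # otherwise: the name of a volume (named mount)
  esac
}

volume_kind /home/centos_:/home   # bind
volume_kind nginx-v:/etc/nginx    # named
volume_kind /etc/nginx            # anonymous
```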

Anonymous mount

Specify only the container path: /etc/nginx

docker run -d -P --name n1 -v /etc/nginx nginx

Hands-on:

[root@ct7_1 /]# docker run -d -e MYSQL_ROOT_PASSWORD=123 --name m2 -v /val/mysql/data mysql:5.7

c293316f65ea5a748a54239d4972a521092b9c5aa69204f7fa2cbd93cca52b47

[root@ct7_1 /]# docker inspect m2

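# other parts omitted

# With an anonymous mount, Docker created two volumes here: one for the /val/mysql/data path given with -v, and one for /var/lib/mysql, which the mysql image itself declares with VOLUME: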

......

"Mounts": [

{

"Type": "volume",

"Name": "873b58e3da0208f5a1cbf91cf6516fadc8c221a8c2b9751c50db9141052b9674",

"Source": "/var/lib/docker/volumes/873b58e3da0208f5a1cbf91cf6516fadc8c221a8c2b9751c50db9141052b9674/_data",

"Destination": "/val/mysql/data",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

},

{

"Type": "volume",

"Name": "09c72e4d7d2076a519d4252c0fc53198f2ecc1a4c4c015f23eabac7d4d81be7e",

"Source": "/var/lib/docker/volumes/09c72e4d7d2076a519d4252c0fc53198f2ecc1a4c4c015f23eabac7d4d81be7e/_data",

"Destination": "/var/lib/mysql",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

}

]

......

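Mounts # list of mounts

Type # mount type (volume here)

Source # path on the host

Destination # path inside the container

RW # read/write permission, from the container's point of view

Named mount

Use the volume name nginx-v:

docker run -d -P --name n2 -v nginx-v:/etc/nginx nginx

Hands-on: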

[root@ct7_1 data]# docker run -d -e MYSQL_ROOT_PASSWORD=123 --name m1 -v mysql-v:/val/mysql/data mysql:5.7

[root@ct7_1 data]# docker volume ls

DRIVER VOLUME NAME

local 0150a37e67573109ad401da2c203f9a7724414787bc7cdd8605eb4acb35c3401

local mysql-v

[root@ct7_1 data]# docker volume inspect mysql-v

[

{

"CreatedAt": "2020-10-19T05:14:10-07:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/mysql-v/_data",

"Name": "mysql-v",

"Options": null,

"Scope": "local"

}

]

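Mountpoint: the path on the host where the volume's data is stored.

List all volumes:

docker volume ls

When no host directory is specified, Docker stores volume data under:

/var/lib/docker/volumes/xxx/_data

Access modes: ro and rw

- ro: readonly

- rw: readwrite

These modes restrict the container only; the host can still modify the files.

# mount the volume read-only: the container can read its contents but cannot modify or delete them

docker run -d -P -v nginx-v:/etc/nginx:ro nginx

# mount the volume read-write (the default)

docker run -d -P -v nginx-v:/etc/nginx:rw nginx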
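To make the source:destination:mode layout concrete, the sketch below splits a -v spec into its fields and falls back to rw when no mode is given. `parse_volume_spec` is a hypothetical helper; it only accepts ro and rw, whereas Docker also accepts other options (for example the SELinux labels z and Z), and it rejects a typo such as `or`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split "source:destination[:mode]" into its fields.
# Only the ro/rw modes from this section are accepted; anything else is an error.
parse_volume_spec() {
  local spec=$1 src dst mode
  IFS=':' read -r src dst mode <<< "$spec"
  if [ -z "$dst" ]; then   # single field: anonymous volume, container path only
    dst=$src
    src=""
  fi
  mode=${mode:-rw}         # no mode given: read-write, Docker's default
  case $mode in
    ro|rw) ;;
    *) echo "unsupported mode: $mode" >&2; return 1 ;;
  esac
  printf 'src=%s dst=%s mode=%s\n' "$src" "$dst" "$mode"
}

parse_volume_spec nginx-v:/etc/nginx:ro   # src=nginx-v dst=/etc/nginx mode=ro
parse_volume_spec /home/centos_:/home     # src=/home/centos_ dst=/home mode=rw
```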

5.3 Mounting volumes when building an image from a Dockerfile

Covered in section 6.2.

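5.4 Syncing data between containers with --volumes-from

When I finished this part it felt rather tedious, because many cases needed hands-on testing. I also wondered how these mounts would stay in sync, and whether they would stay stable, when Docker is used to run an application cluster under high concurrency. I will come back to that question once I understand everything thoroughly.

Let's try both of these cases with --volumes-from: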

- docker01 <-- docker02 <-- docker03: docker02 mounts from docker01, and docker03 mounts from docker02;

- docker01 <-- docker02, docker01 <-- docker03: docker02 and docker03 both mount from docker01;

Test 1: docker01 <-- docker02 <-- docker03

# create the containers

[root@ct7_1 /]# docker run -itd --name docker01 ssx-centos:1.0

[root@ct7_1 /]# docker run -itd --name docker02 --volumes-from docker01 ssx-centos:1.0

[root@ct7_1 /]# docker run -itd --name docker03 --volumes-from docker02 ssx-centos:1.0

# docker inspect shows the same Name, Source and Destination in the mounts of all three containers

"Name": "8d2b953edaaca284f4a4130052c28b2f906a53673191edc83cd7aa5fff973525",

"Source": "/var/lib/docker/volumes/8d2b953edaaca284f4a4130052c28b2f906a53673191edc83cd7aa5fff973525/_data",

"Destination": "volume01",

"Name": "e9e7f5f6621894afe49d90281386f719dfa053c8c075da9f35126c01862302e2",

"Source": "/var/lib/docker/volumes/e9e7f5f6621894afe49d90281386f719dfa053c8c075da9f35126c01862302e2/_data",

"Destination": "volume02",

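- Create a file suzhu.java in the mount directory on the host: it appears in the volume01 directory of docker01, docker02 and docker03.

- Create a file docker03.class in docker03's volume02: it appears in the corresponding host mount directory and in docker02's volume02.

- Remove the docker01 container, then create suzhu.javascript in the mount directory on the host: it still appears in the volume01 directory of docker02 and docker03.

[root@ct7_1 _data]# docker rm -f docker01
docker01
[root@ct7_1 _data]# touch suzhu.javascript
[root@ct7_1 ~]# docker attach docker03
[root@4cbcc87247ca volume01]# ls
suzhu.java  suzhu.javascript

I had also planned to remove volume02's volume e9e7f5f6621894afe49d90281386f719dfa053c8c075da9f35126c01862302e2 and test again, but Docker refused because the volume was still in use by docker02 and docker03: a volume can only be removed after the containers using it have been removed.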

Test 2: docker01 <-- docker02, docker01 <-- docker03

# create the containers

[root@ct7_1 /]# docker run -itd --name docker01 ssx-centos:1.0

[root@ct7_1 /]# docker run -itd --name docker02 --volumes-from docker01 ssx-centos:1.0

[root@ct7_1 /]# docker run -itd --name docker03 --volumes-from docker01 ssx-centos:1.0

# docker inspect shows the same Name, Source and Destination in the mounts of all three containers

"Name": "4d6cc4b485b8a9d00276ca5afbc8f92c70b4852fa1ac5b5b6c7f150cc76304ce",

"Source": "/var/lib/docker/volumes/4d6cc4b485b8a9d00276ca5afbc8f92c70b4852fa1ac5b5b6c7f150cc76304ce/_data",

"Destination": "volume01",

"Name": "d2e84c161cda0b84d98e87b1150dd226e174ef583a1c05aa39980d29c3bbcef5",

"Source": "/var/lib/docker/volumes/d2e84c161cda0b84d98e87b1150dd226e174ef583a1c05aa39980d29c3bbcef5/_data",

"Destination": "volume02",

- Create a file docker01.css in docker01/volume01: it appears in the host mount path and in the volume01 directory of docker02 and docker03.

- Remove the docker01 container, then create docker02.jar in docker02/volume01: docker02.jar appears in docker03/volume01 as well.

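Volume summary:

- The two small tests show that although the mounts were created with --volumes-from docker01, once the containers exist they no longer depend on that particular container, only on the volume itself;

- A volume exists independently of containers and images;

- Even after every container using a volume has been removed, the files on the host remain;

- A volume's lifecycle is therefore not tied to any container: it can only be removed once no container uses it, and even then it stays until it is removed explicitly, for example with docker volume rm.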
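The conclusion above can be mimicked without Docker. In this analogy (an assumption for illustration only), a plain directory plays the volume and two symlinks play two containers that mount it; Docker itself bind-mounts the volume into each container rather than using symlinks.

```bash
#!/usr/bin/env bash
# Analogy only: directories and symlinks stand in for one volume and two containers.
demo_volume_outlives_container() {
  local root vol
  root=$(mktemp -d)
  vol="$root/volumes/demo/_data"               # like /var/lib/docker/volumes/<name>/_data
  mkdir -p "$vol" "$root/docker01" "$root/docker02"
  ln -s "$vol" "$root/docker01/volume01"       # both "containers" see the same volume
  ln -s "$vol" "$root/docker02/volume01"
  touch "$root/docker01/volume01/docker01.css" # write through docker01
  rm -rf "$root/docker01"                      # "remove" docker01: only its link goes away
  ls "$root/docker02/volume01/"                # the file is still visible through docker02
  rm -rf "$root"                               # clean up the demo
}

demo_volume_outlives_container   # prints: docker01.css
```

Removing the "docker01" directory deletes only its link, much as docker rm leaves the volume's data in place.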

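6. Dockerfile

6.1 Introduction to Dockerfile

A Dockerfile is a file (a script of instructions) used to build a Docker image: the image is generated from this file.

In other words, there are two ways to create an image:

- use docker commit to package an existing container as an image;

- write a Dockerfile and build an image from it.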

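6.1.1 Dockerfile basics:

- Instructions are written in uppercase (a convention; Docker itself does not treat instruction names as case-sensitive);

- Each instruction produces a new image layer;

- Instructions are executed from top to bottom, one after another;

- # starts a comment; comments must be on their own line, because Docker only treats lines that begin with # as comments.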

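6.1.2 Exploring the official centos Dockerfile

- Search for centos on Docker Hub and open the centos page.

- Click centos7 to jump to GitHub. The file shown there is the centos7 Dockerfile; each instruction corresponds to one image layer.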

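6.1.3 Dockerfile instructions

FROM # base image: the image this one builds on

MAINTAINER # author: name + email (deprecated; LABEL is preferred)

RUN # command to run while building the image

ADD # add files to the image; like COPY, but also extracts local tar archives and accepts URLs

WORKDIR # working directory of the image

VOLUME # directories to mount as volumes

EXPOSE # ports to expose

CMD # command to run when the container starts; only the last CMD takes effect, and it can be replaced

ENTRYPOINT # command to run when the container starts; arguments can be appended to it

ONBUILD # instruction triggered when this image is used as the base of another build

COPY # similar to ADD: copy files into the image

ENV # set environment variables (they also persist in running containers)

ARG # variables used only while building the image

LABEL # add metadata labels to the image

STOPSIGNAL # signal sent to stop the container

HEALTHCHECK # how to check the health of the started container

SHELL # default shell for shell-form commands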


6.2 Writing a Dockerfile and building an image with docker build

Write a simple Dockerfile that declares volumes. File name: dockerfile-mycentos

FROM centos

VOLUME ["volume01","volume02"]

CMD echo "------ build end -------"

CMD /bin/bash
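Because only the last CMD takes effect, the echo line in this file never runs. A quick way to see which CMD wins is a text check like the hypothetical `last_cmd` below. It is a rough sketch: it ignores line continuations and multi-stage builds, and when a Dockerfile has no CMD at all the base image's CMD applies (for centos that is ["/bin/bash"], as the docker history output in 6.3 shows).

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the CMD line that takes effect in a Dockerfile (the last one).
last_cmd() {
  grep -iE '^[[:space:]]*CMD[[:space:]]' "$1" | tail -n 1
}

# Example with the dockerfile-mycentos content from above
f=$(mktemp)
cat > "$f" << 'EOF'
FROM centos
VOLUME ["volume01","volume02"]
CMD echo "------ build end -------"
CMD /bin/bash
EOF
last_cmd "$f"   # CMD /bin/bash
rm -f "$f"
```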

Build the image with docker build:

[root@ct7_1 docker_files]# ls

dockerfile-mycentos

[root@ct7_1 docker_files]# docker build -f dockerfile-mycentos -t ssx-centos:1.0 .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM centos

---> 0d120b6ccaa8

Step 2/4 : VOLUME ["volume01","volume02"]

---> Running in ba6d58157080

Removing intermediate container ba6d58157080

---> 7a7150925a95

Step 3/4 : CMD echo "...... build end ......"

---> Running in 41768d82fb91

Removing intermediate container 41768d82fb91

---> 6ec63fad6c2f

Step 4/4 : CMD /bin/bash

---> Running in 9211a9b3a640

Removing intermediate container 9211a9b3a640

---> e06c9143e0ed

Successfully built e06c9143e0ed

Successfully tagged ssx-centos:1.0

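-f: path of the Dockerfile

-t: the tag to give the image (name:tag)

The trailing "." is the build context: the local directory whose contents are sent to the Docker daemon (hence "Sending build context to Docker daemon" in the output). The context can also be a URL, such as a Git repository.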

[root@ct7_1 docker_files]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ssx-centos 1.0 e06c9143e0ed 7 minutes ago 215MB

Start a container from the ssx-centos image:

[root@ct7_1 docker_files]# docker run -itd ssx-centos:1.0 bash

ef5fa7b89801a5a31f6473bd4b30186ab10b7abe9c3a5e45e2d777327bce4a48

[root@ct7_1 docker_files]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ef5fa7b89801 ssx-centos:1.0 "bash" 4 seconds ago Up 3 seconds modest_shamir

Inspect the container: docker inspect ef5fa7b89801

The two volumes ["volume01","volume02"] are mounted anonymously by default:

......

"Mounts": [

{

"Type": "volume",

"Name": "ee1b4560e998b49d1c00b86e7e90f00a7c295d9964edd0436aa6e71905ee6410",

"Source": "/var/lib/docker/volumes/ee1b4560e998b49d1c00b86e7e90f00a7c295d9964edd0436aa6e71905ee6410/_data",

"Destination": "volume01",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

},

{

"Type": "volume",

"Name": "d2fb1f67f79081fcc0110c249353b9825f8443d17af7cfbbc367eeed237cdcca",

"Source": "/var/lib/docker/volumes/d2fb1f67f79081fcc0110c249353b9825f8443d17af7cfbbc367eeed237cdcca/_data",

"Destination": "volume02",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

}

],

......

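So Docker offers two ways to mount volumes:

- pass -v to docker run;

- declare the volumes in the Dockerfile with VOLUME, so that they are created automatically whenever a container is started from the image.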

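6.3 Analyzing an image with docker history

The history command shows how an image was built, roughly "decompiling" the image back into its Dockerfile instructions.

Use history to analyze the ssx-centos:1.0 image built earlier: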

[root@ct7_1 docker_files]# docker history ssx-centos:1.0

IMAGE CREATED CREATED BY SIZE COMMENT

e06c9143e0ed 25 hours ago /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "/bin… 0B

6ec63fad6c2f 25 hours ago /bin/sh -c #(nop) CMD ["/bin/sh" "-c" "echo… 0B

7a7150925a95 25 hours ago /bin/sh -c #(nop) VOLUME [volume01 volume02] 0B

0d120b6ccaa8 2 months ago /bin/sh -c #(nop) CMD ["/bin/bash"] 0B

<missing> 2 months ago /bin/sh -c #(nop) LABEL org.label-schema.sc… 0B

<missing> 2 months ago /bin/sh -c #(nop) ADD file:538afc0c5c964ce0d… 215MB

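You can clearly see that each instruction became one image layer.

6.4 CMD and ENTRYPOINT

CMD # command to run when the container starts; only the last CMD takes effect

ENTRYPOINT # command to run when the container starts; arguments can be appended to it

Testing CMD:

# create the Dockerfile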

[root@ct7_1 docker_files]# vim dockerfile-test-CMD

FROM centos

CMD ["ls","-a"]

[root@ct7_1 docker_files]# docker build -f dockerfile-test-CMD -t testcmd:1 .

Sending build context to Docker daemon 3.072kB

Step 1/2 : FROM centos

---> 0d120b6ccaa8

Step 2/2 : CMD ["ls -a"]

---> Running in c36e81e377c1

Removing intermediate container c36e81e377c1

---> 3d582e3d64b2

Successfully built 3d582e3d64b2

Successfully tagged testcmd:1

[root@ct7_1 docker_files]# docker run testcmd:1

.

..

.dockerenv

bin

dev

etc

home

......

# the ls -a command was executed

# arguments at the end of docker run replace the CMD instruction entirely:

[root@ct7_1 docker_files]# docker run testcmd:1 ls -al

total 32

drwxr-xr-x. 17 root root 4096 Oct 20 15:52 .

drwxr-xr-x. 17 root root 4096 Oct 20 15:52 ..

-rwxr-xr-x. 1 root root 0 Oct 20 15:52 .dockerenv

......

Testing ENTRYPOINT:

# create the file

[root@ct7_1 docker_files]# vim dockerfile-test-entrypoint

FROM centos

ENTRYPOINT ["ls","-a"]

[root@ct7_1 docker_files]# docker build -f dockerfile-test-entrypoint -t testentrypoint .

Sending build context to Docker daemon 4.096kB

Step 1/2 : FROM centos

---> 0d120b6ccaa8

Step 2/2 : ENTRYPOINT ["ls","-a"]

---> Running in 64d8e9566b9f

Removing intermediate container 64d8e9566b9f

---> 626f916bf9ab

Successfully built 626f916bf9ab

Successfully tagged testentrypoint:latest

[root@ct7_1 docker_files]# docker run testentrypoint

.

..

.dockerenv

bin

dev

etc

home

......

# the ls -a command was executed

# with ENTRYPOINT, arguments at the end of docker run are appended to it:

[root@ct7_1 docker_files]# docker run testentrypoint -l

total 32

drwxr-xr-x. 17 root root 4096 Oct 20 15:58 .

drwxr-xr-x. 17 root root 4096 Oct 20 15:58 ..

......
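The two experiments can be summarized as a small model of exec-form ENTRYPOINT and CMD: arguments after the image name replace CMD, and whatever CMD ends up being is appended to ENTRYPOINT. `effective_command` is a hypothetical helper that only prints the resulting command line; it ignores docker run --entrypoint and the /bin/sh -c wrapping used by shell-form instructions.

```bash
#!/usr/bin/env bash
# Simplified model of exec-form ENTRYPOINT/CMD (hypothetical helper, not Docker code).
# $1 = ENTRYPOINT, $2 = CMD (either may be empty),
# remaining args = what follows the image name in `docker run IMAGE ...`.
effective_command() {
  local entrypoint=$1 cmd=$2
  shift 2
  if [ "$#" -gt 0 ]; then
    cmd="$*"                     # run arguments replace CMD
  fi
  local out=$entrypoint
  if [ -n "$cmd" ]; then
    out="${out:+$out }$cmd"      # CMD (or the run arguments) is appended to ENTRYPOINT
  fi
  echo "$out"
}

effective_command "" "ls -a" ls -al   # ls -al    (testcmd:1: CMD replaced)
effective_command "ls -a" "" -l       # ls -a -l  (testentrypoint: argument appended)
```

The same model explains why docker run testcmd:1 -l fails: -l replaces CMD entirely and is not an executable. For the Spring Boot image in 8.8 it gives java -jar /app.jar --hello docker project.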

6.5 Publishing an image to Docker Hub

- Register a Docker Hub account

https://hub.docker.com/repositories

Docker Hub is itself an image registry.

- Log in to Docker Hub from Linux

[root@ct7_1 tomcatlogs]# docker login -u username

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

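- Rename the image with the tag command

Prefix the mytomcat image with your Docker Hub account name; otherwise the push fails with a permission error.

mytomcat -> account/mytomcat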

[root@ct7_1 tomcatlogs]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mytomcat latest 246e6d994987 30 minutes ago 586MB

[root@ct7_1 tomcatlogs]# docker tag 246e6d994987 ssx/mytomcat:1.0

[root@ct7_1 tomcatlogs]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mytomcat latest 246e6d994987 31 minutes ago 586MB

ssx/mytomcat 1.0 246e6d994987 31 minutes ago 586MB

- Push the image to Docker Hub with the push command

[root@ct7_1 tomcatlogs]# docker push 845484/mytomcat:1.0

The push refers to repository [docker.io/845484/mytomcat]

......

# done once the upload finishes

Log out with docker logout.
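Since the repository name must start with your account, it can help to build the reference once and reuse it for both docker tag and docker push, so the two cannot drift apart. `hub_ref` is a hypothetical helper; it does not handle registry hosts (such as registry.cn-beijing.aliyuncs.com/...) or digests.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the Docker Hub reference "account/name:tag" for a local image.
hub_ref() {
  local account=$1 image=$2 name tag
  case $image in
    */*) echo "already has a namespace: $image" >&2; return 1 ;;
  esac
  if [[ $image == *:* ]]; then
    name=${image%%:*}
    tag=${image#*:}
  else
    name=$image
    tag=latest                 # Docker assumes :latest when no tag is given
  fi
  echo "$account/$name:$tag"
}

# Usage sketch (docker tag and docker push must use the same reference):
# ref=$(hub_ref <account> mytomcat:1.0)
# docker tag 246e6d994987 "$ref" && docker push "$ref"
```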

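6.6 Publishing an image to the Alibaba Cloud registry

- Register an Alibaba Cloud account

- Open the Container Registry service

- Create a namespace

- Create an image repository

- Log in to the Alibaba Cloud registry with docker

docker login --username=123 registry.cn-beijing.aliyuncs.com

The Alibaba Cloud registry console gives detailed step-by-step instructions for logging in.

7. Docker networking

The host's network environment after Docker starts: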

[root@ct7_1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:58:c6:4b brd ff:ff:ff:ff:ff:ff

inet 192.168.0.106/24 brd 192.168.0.255 scope global dynamic eno16777736

valid_lft 78971sec preferred_lft 78971sec

inet 192.168.0.102/24 brd 192.168.0.255 scope global secondary eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe58:c64b/64 scope link

valid_lft forever preferred_lft forever

5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 02:42:5c:81:f0:34 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:5cff:fe81:f034/64 scope link

valid_lft forever preferred_lft forever

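lo: the local loopback address;

eno16777736: the virtual machine's network adapter;

docker0: the bridge created by Docker (inet 172.17.0.1/16)

7.1 Understanding docker0

The question: how do Docker containers reach each other over the network?

# start a tomcat container

[root@ct7_2 ~]# docker run -d -P --name tomcat01 tomcat

# view the network addresses inside the container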

[root@ct7_1 ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

8: eth0@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# tomcat01's virtual NIC address: 172.17.0.2/16

# ip addr on the host now shows an extra interface:

9: veth9771ef3@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP

link/ether 5e:19:e3:0c:d3:c5 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::5c19:e3ff:fe0c:d3c5/64 scope link

valid_lft forever preferred_lft forever

# start tomcat02, then check ip addr on the host again

11: vethd0bf24d@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP

link/ether 4a:90:d7:9d:d7:72 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::4890:d7ff:fe9d:d772/64 scope link

valid_lft forever preferred_lft forever

# look up tomcat02's address, then ping tomcat01 (172.17.0.2) from tomcat02

[root@ct7_1 docker]# docker exec tomcat02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@ct7_1 docker]# docker exec tomcat02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.058 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.102 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.102 ms

......

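# the containers can ping each other

The host cannot ping the containers:

On my machine the host could not ping the containers, although the containers could ping each other. I tried changing docker0's address through the /etc/docker/daemon.json config file, but still could not ping tomcat01 from Linux, while 172.17.0.1 (docker0's own address) did answer.

After searching online, the only approach I had not tried was setting up a bridge in macvlan mode; for lack of time I will dig into it next time.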

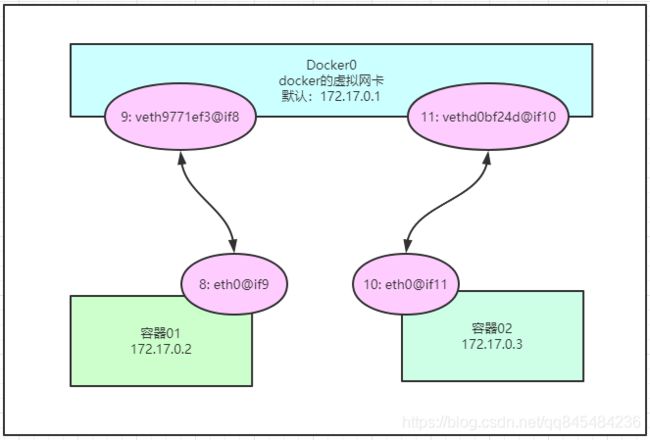

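veth-pair

The interfaces that appear in matched pairs (such as eth0@if9 inside tomcat01 and veth9771ef3@if8 on the host) are the two ends of a pair of virtual device interfaces: a veth-pair.

A veth-pair always comes in pairs: one end attaches to the network protocol stack, and the two ends are connected to each other.

Acting as a bridge, a veth-pair connects two virtual network devices.

Docker network model:

As the diagram shows, docker0 acts like a router (more precisely, a virtual bridge), and each container is like a host connected to it through a veth-pair.

For container 01 to reach container 02, traffic goes through container 01's veth to docker0, and then through container 02's veth to container 02.

docker run --link

The --link option of docker run

--help: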

-l, --label list Set meta data on a container

--label-file list Read in a line delimited file of labels

--link list Add link to another container

--link-local-ip list Container IPv4/IPv6 link-local addresses

--log-driver string Logging driver for the container

--log-opt list Log driver options

--mac-address string Container MAC address (e.g., 92:d0:c6:0a:29:33)

Hands-on:

[root@ct7_1 docker]# docker run -d -P --name tomcat04 --link tomcat01 tomcat

5ccc1d0deb01611d27096b4af1a20f65a3638f17066ff6099610ee2e8421921a

[root@ct7_1 docker]# docker exec tomcat04 ping tomcat01

PING tomcat01 (172.17.0.2) 56(84) bytes of data.

64 bytes from tomcat01 (172.17.0.2): icmp_seq=1 ttl=64 time=0.102 ms

64 bytes from tomcat01 (172.17.0.2): icmp_seq=2 ttl=64 time=0.104 ms

64 bytes from tomcat01 (172.17.0.2): icmp_seq=3 ttl=64 time=0.103 ms

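When a container is created and started, --link takes another container's name (or ID) and writes that container's IP mapping into the new container's /etc/hosts: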

[root@ct7_1 docker]# docker exec tomcat04 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 tomcat01 4e583b4f6132

172.17.0.4 5ccc1d0deb01

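The purpose is to let containers communicate by container ID or name, because unless a fixed IP is set, container IPs are assigned by Docker.

PS: tomcat01 still cannot reach tomcat04 by ID or name, because tomcat01's hosts file was not modified; the link only works in one direction. (--link is a legacy feature; user-defined networks, described next, are the recommended alternative.)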
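--link simply adds a static entry to /etc/hosts, so name resolution is a plain table lookup. The hypothetical `lookup_host` below imitates that lookup on a hosts-format file; run against tomcat01's unmodified hosts file, it would find no tomcat04 line, which is why the link is one-way.

```bash
#!/usr/bin/env bash
# Hypothetical helper: resolve a name the way a static hosts file does (first match wins).
lookup_host() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '
    /^[ \t]*#/ { next }   # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
  ' "$file"
}

# The two relevant lines of tomcat04's /etc/hosts from above
hosts=$(mktemp)
printf '172.17.0.2\ttomcat01 4e583b4f6132\n172.17.0.4\t5ccc1d0deb01\n' > "$hosts"
lookup_host tomcat01 "$hosts"       # 172.17.0.2
lookup_host 4e583b4f6132 "$hosts"   # 172.17.0.2 (the container ID works as an alias)
rm -f "$hosts"
```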

Custom networks: docker network create

docker network

Help:

[root@ct7_1 docker]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

List networks

# docker network ls lists Docker's networks

[root@ct7_1 docker]# docker network ls

NETWORK ID NAME DRIVER SCOPE

32d2205f0c9c bridge bridge local

83b0817cd97c host host local

e509661fb189 none null local

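The bridge entry is docker0.

Network modes:

bridge: bridged networking (the default)

none: no networking

host: host mode, sharing the host's network stack

container: share another container's network (not recommended)

Create a custom network: mynet

# --driver bridge           network mode

# --subnet 192.100.0.0/16   subnet

# --gateway 192.100.0.1     gateway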

[root@ct7_1 docker]# docker network create --driver bridge --subnet 192.100.0.0/16 --gateway 192.100.0.1 mynet

a6a28eec5b789b21b395e962e6ca1135262c93c365a453e501a1c29242bce1f7

[root@ct7_1 docker]# docker network ls

NETWORK ID NAME DRIVER SCOPE

32d2205f0c9c bridge bridge local

83b0817cd97c host host local

a6a28eec5b78 mynet bridge local

Start containers on mynet:

# start two containers on the mynet network

[root@ct7_1 docker]# docker run --network mynet -d --name t1 tomcat

8c3a5909bddef83e0d3a159160f12b895422513e1e635c4086454abd3c3ff177

[root@ct7_1 docker]# docker run --network mynet -d --name t2 tomcat

8853fa5277c88e3c81eed0283da43b4706dff7b9a2564dc60f1e26a1ffe39e3b

# inspect mynet's metadata

[root@ct7_1 docker]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "a6a28eec5b789b21b395e962e6ca1135262c93c365a453e501a1c29242bce1f7",

"Created": "2020-10-21T09:47:43.955802107-07:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.100.0.0/16",

"Gateway": "192.100.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"8853fa5277c88e3c81eed0283da43b4706dff7b9a2564dc60f1e26a1ffe39e3b": {

"Name": "t2",

"EndpointID": "7c791d91c6508cf965e8368cf8c88349896205c26b6ae38b40a30a1b1c30eaa9",

"MacAddress": "02:42:c0:64:00:03",

"IPv4Address": "192.100.0.3/16",

"IPv6Address": ""

},

"8c3a5909bddef83e0d3a159160f12b895422513e1e635c4086454abd3c3ff177": {

"Name": "t1",

"EndpointID": "0b1fa4d6f533c6faedac178600cff29bfa3ccbd50f8473eb80391f2b3d01dc59",

"MacAddress": "02:42:c0:64:00:02",

"IPv4Address": "192.100.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

The Containers property shows the two containers attached to this network.
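A container's address has to fall inside the network's subnet, which is easy to check with a little bitmask arithmetic. The bash helpers below (hypothetical, IPv4 only) confirm that t1 and t2 sit in 192.100.0.0/16 while tomcat01's 172.17.0.2 does not. One caution: 192.100.0.0/16 (and the 172.10.0.0/16 used for Redis in 8.7) lies outside the private ranges 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16, so on a host with Internet access these subnets can shadow real public addresses; a private range is the safer choice.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check whether an IPv4 address lies inside a CIDR subnet.
ip_to_int() {
  local IFS=.
  set -- $1   # split the dotted quad into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

in_subnet() {
  local ip=$1 cidr=$2 net bits mask
  net=${cidr%/*}
  bits=${cidr#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # same network bits after masking => same subnet
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

in_subnet 192.100.0.3 192.100.0.0/16 && echo "t2 is on mynet"
in_subnet 172.17.0.2 192.100.0.0/16 || echo "tomcat01's docker0 address is not"
```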

Communication between containers on the custom network mynet:

[root@ct7_1 docker]# docker exec t1 ping t2

PING t2 (192.100.0.3) 56(84) bytes of data.

64 bytes from t2.mynet (192.100.0.3): icmp_seq=1 ttl=64 time=0.041 ms

64 bytes from t2.mynet (192.100.0.3): icmp_seq=2 ttl=64 time=0.103 ms

^C

[root@ct7_1 docker]# docker exec t2 ping t1

PING t1 (192.100.0.2) 56(84) bytes of data.

64 bytes from t1.mynet (192.100.0.2): icmp_seq=1 ttl=64 time=0.024 ms

64 bytes from t1.mynet (192.100.0.2): icmp_seq=2 ttl=64 time=0.095 ms

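Containers can reach each other directly by name: on a user-defined network, Docker's embedded DNS resolves container names.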

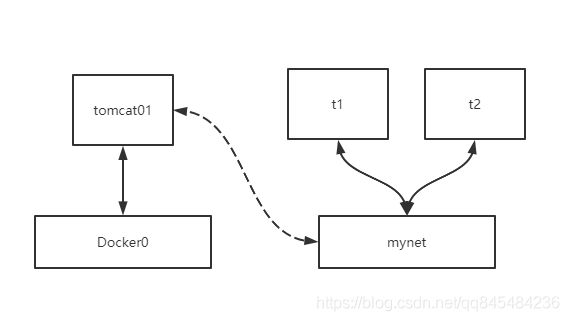

Connecting networks: docker network connect

It connects a container to a network: when a container is not on a network but needs to talk to the containers on it, connect the container to that network first.

Hands-on:

# connect

[root@ct7_1 docker]# docker network connect mynet tomcat01

# ping

[root@ct7_1 docker]# docker exec tomcat01 ping t1

PING t1 (192.100.0.2) 56(84) bytes of data.

64 bytes from t1.mynet (192.100.0.2): icmp_seq=1 ttl=64 time=0.041 ms

64 bytes from t1.mynet (192.100.0.2): icmp_seq=2 ttl=64 time=0.100 ms

64 bytes from t1.mynet (192.100.0.2): icmp_seq=3 ttl=64 time=0.101 ms

# check tomcat01's network interfaces

[root@ct7_1 docker]# docker exec tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

43: eth0@if44: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

45: eth1@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:64:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.100.0.4/16 brd 192.100.255.255 scope global eth1

valid_lft forever preferred_lft forever

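Under the hood, tomcat01 gets a second interface (eth1) with an address on mynet, so it now has two IP addresses on two subnets.

8. Docker hands-on exercises

8.1 Exercise: installing Nginx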

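- Search for nginx on Docker Hub to pick a version; it did not seem to make much difference, so I just installed the latest.

[root@ct7_1 ~]# docker pull nginx
Using default tag: latest
......

- Create the container and run it in the background

I hit a big pitfall here and spent more than three hours on it:

curl: (56) Recv failure: Connection reset by peer

# First of all, I was learning and testing on CentOS 7 installed in a VM on my own machine.
# Following the tutorial, I mapped nginx to port 8888. The nginx container started and docker-proxy was listening on host port 8888, but accessing it with:
curl localhost:8888
# returned
curl: (56) Recv failure: Connection reset by peer
# I chased all kinds of causes without solving it. My setup differed from the one in Kuang Shen's tutorial in just one respect: an Alibaba Cloud server versus my VM.
# Later I learned that Docker has four network modes. The default is bridge, and one mode lets the container use the host's network stack directly: --net="host"
# The command I finally used:
docker run -d --name n2 --net="host" nginx
# port 80 on the host:
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      18040/nginx: master
# nginx is listening directly
# access it
[root@mq2 ~]# curl localhost
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
......

Then access it from another machine:

- Summary

In the end I did not manage to map nginx's port 80 in the container to a different port on the host, and used host network mode instead.

My guess is that some network settings on the VM need to be changed; that is beyond me for now, so I will dig into it later and move on.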

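8.2 Exercise: installing Tomcat

- Search for tomcat on Docker Hub

Start tomcat 9.0 directly in host mode:

docker run --rm --net host -d --name t1 tomcat:9.0

Running this docker run command directly pulls the tomcat image automatically.

--rm removes the container automatically once it stops; it is mostly used for testing.

- It binds to port 8080 on the host, but accessing it returns 404 instead of the tomcat page.

- Inside the container, tomcat has a webapps.dist directory containing a ROOT directory; after copying ROOT into webapps, the tomcat home page can be accessed:

[root@ct7_1 ~]# docker exec -it t1 bash
root@ct7_1:/usr/local/tomcat# cp -r webapps.dist/ROOT webapps/
root@ct7_1:/usr/local/tomcat# cd webapps
root@ct7_1:/usr/local/tomcat/webapps# ls
ROOT

Refresh the page.

- Summary

This exercise mainly practiced entering a container and doing simple file operations inside it.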

8.3 Exercise: installing ES + Kibana

- The officially documented install command:

Pull and start ES directly on the host network, using -e to set environment variables (single-node discovery, and a JVM heap limit for ES), with version 7.6.2:

docker run -d --name elasticsearch01 --net host -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xmx1024m -Xms1024m" elasticsearch:7.6.2

- Access port 9200:

[root@ct7_1 ~]# curl localhost:9200
{
  "name" : "ct7_1",
  "cluster_name" : "docker-cluster",
  "cluster_uuid" : "EvCKNgxXRDWlfnnNVrzjWA",
  "version" : {
    "number" : "7.6.2",
......

- Summary

The main problem was memory: ES needs a lot of it, so I added parameters to limit its memory, but if the limit is too small ES will not start at all. Choose the value according to each server.

Check Docker's memory usage: docker stats

docker stats
CONTAINER ID   NAME   CPU %   MEM USAGE / LIMIT     MEM %    NET I/O   BLOCK I/O       PIDS
fea297813203   e1     0.38%   497.8MiB / 977.9MiB   50.91%   0B / 0B   1.25GB / 80MB   0

8.4 Exercise: installing MySQL

Goal: persist MySQL's data.

Use a volume to mount MySQL's data directory onto the host.

- Pull mysql:5.7

[root@ct7_1 /]# docker pull mysql:5.7

- Start MySQL, mounting its config files and data directory

Official instructions:

$ docker run --name some-mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:tag

-e MYSQL_ROOT_PASSWORD sets the MySQL root password.

In my experiment I mounted the MySQL config files and database files onto the host and set the password to 123:

[root@ct7_1 ~]# docker run -d -v /home/mysql/conf/:/etc/mysql/conf.d -v /home/mysql/data/:/var/lib/mysql --name mysql57 -e MYSQL_ROOT_PASSWORD=123 --net host mysql:5.7

Verification:

Check that the container started and that a process is listening on the port, then open port 3306 in the firewall:

[root@ct7_1 data]# firewall-cmd --zone=public --permanent --add-port=3306/tcp
success
[root@ct7_1 data]# firewall-cmd --reload
success

Then connect with Navicat and create a database: ssx

Then check whether the corresponding database files appear in the host's mount directory:

[root@ct7_1 /]# cd /home/mysql/data

[root@ct7_1 data]# ls

auto.cnf client-cert.pem ibdata1 ibtmp1 private_key.pem server-key.pem

ca-key.pem client-key.pem ib_logfile0 mysql public_key.pem ssx

ca.pem ib_buffer_pool ib_logfile1 performance_schema server-cert.pem sys

Deployment complete.

8.5 Exercise: building a custom centos image with a Dockerfile

Build a custom centos image:

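# use centos as the bottom image layer
FROM centos

# author
MAINTAINER ssx<[email protected]>

# declare a volume
VOLUME ["/home/volume001"]

# environment variable MYPATH, set to /home
ENV MYPATH /home

# use MYPATH as the working directory
WORKDIR $MYPATH

# install vim (disabled)
#RUN yum -y install vim

# install net-tools (disabled)
#RUN yum -y install net-tools

# expose port 80
EXPOSE 80

# print a message (only the last CMD takes effect, so this one is overridden)
CMD echo $MYPATH+"..build end .."

# start a shell
CMD /bin/bash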

Build it:

[root@ct7_1 docker_files]# docker build -f dockerfile-mycentos -t mycentos:2.0 .

Sending build context to Docker daemon 2.048kB

Step 1/8 : FROM centos

---> 0d120b6ccaa8

Step 2/8 : MAINTAINER ssx<[email protected]>

---> Using cache

---> 8bcfb54bb497

Step 3/8 : VOLUME ["/home/volume001"]

---> Using cache

---> 77b0c8b9ce50

Step 4/8 : ENV MYPATH /home

---> Using cache

---> 64a0efadc466

Step 5/8 : WORKDIR $MYPATH

---> Using cache

---> 1cf85348bee0

Step 6/8 : EXPOSE 80

---> Using cache

---> a9d784977af0

Step 7/8 : CMD echo $MYPATH+"...... build end ......"

---> Using cache

---> 489d71963199

Step 8/8 : CMD /bin/bash

---> Using cache

---> d7d29f2185d8

Successfully built d7d29f2185d8

Successfully tagged mycentos:2.0

[root@ct7_1 docker_files]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mycentos 2.0 d7d29f2185d8 7 minutes ago 215MB

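8.6 Exercise: building a tomcat image with a Dockerfile

- Prepare the tomcat 9 and JDK 1.8 archives

apache-tomcat-9.0.39.tar.gz

jdk-8u271-linux-x64.tar.gz

- Write the Dockerfile

The officially recommended file name is Dockerfile; docker build uses a file with that name by default, so it does not have to be passed with -f.

ADD automatically extracts the archives into the target directory.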

FROM centos

MAINTAINER ssx

COPY readme.txt /usr/local/readme.txt

ADD jdk-8u271-linux-x64.tar.gz /usr/local/

ADD apache-tomcat-9.0.39.tar.gz /usr/local/

# RUN yum -y install vim

ENV MYPATH /usr/local

WORKDIR $MYPATH

ENV JAVA_HOME /usr/local/jdk1.8.0_271

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

ENV CATALINA_HOME /usr/local/apache-tomcat-9.0.39

ENV CATALINA_BASE /usr/local/apache-tomcat-9.0.39

ENV PATH $PATH:$JAVA_HOME/bin:$CATALINA_HOME/lib:$CATALINA_BASE/bin

EXPOSE 8080

CMD /usr/local/apache-tomcat-9.0.39/bin/startup.sh && tail -F /usr/local/apache-tomcat-9.0.39/logs/catalina.out

- Build the image

Because the file is named Dockerfile, the build command does not need to specify it.

docker build -t mytomcat .

- Start the container

docker run -d --net host --name mytomcat -v /home/mytomcat/test:/usr/local/apache-tomcat-9.0.39/webapps/test -v /home/mytomcat/tomcatlogs:/usr/local/apache-tomcat-9.0.39/logs mytomcat

It started successfully:

[root@ct7_1 mytomcat]# docker run -d --net host --name mytomcat -v /home/mytomcat/test:/usr/local/apache-tomcat-9.0.39/webapps/test -v /home/mytomcat/tomcatlogs:/usr/local/apache-tomcat-9.0.39/logs mytomcat

3dcb9c51bfc4af0ab8c30d09851f1076ac4d17a2ac0b6283a5d7a2e41b84521e

[root@ct7_1 mytomcat]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3dcb9c51bfc4 mytomcat "/bin/sh -c '/usr/lo…" 5 seconds ago Up 4 seconds mytomcat

[root@ct7_1 mytomcat]# netstat -antp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp6 0 0 :::8080 :::* LISTEN 13207/java

- Deploy a sample project

/test/web.xml

<web-app xmlns="http://xmlns.jcp.org/xml/ns/javaee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://xmlns.jcp.org/xml/ns/javaee

http://xmlns.jcp.org/xml/ns/javaee/web-app_3_1.xsd"

version="3.1">

</web-app>

/test/index.jsp

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

石似心

Hello 石似心

<% System.out.println("----hello 石似心 ---"); %>

Done.

- Check the tomcat log

[root@ct7_1 tomcatlogs]# cat catalina.out

......

20-Oct-2020 17:00:09.841 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8080"]

20-Oct-2020 17:00:09.847 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in [951] milliseconds

----hello ??? ---

[root@ct7_1 tomcatlogs]#
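The Dockerfile in 8.6 sets PATH and CLASSPATH. On Linux both are colon-separated lists (';' is the Windows separator), so a ';' glues two directories into a single bogus entry. The hypothetical `count_path_entries` below makes the difference visible.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count the entries of a colon-separated search path
# such as PATH or a Linux CLASSPATH.
count_path_entries() {
  printf '%s\n' "$1" | awk -F':' '{ print NF }'
}

count_path_entries '/usr/local/jdk1.8.0_271/bin:/usr/local/apache-tomcat-9.0.39/bin'   # 2
count_path_entries '/usr/local/jdk1.8.0_271/bin;/usr/local/apache-tomcat-9.0.39/bin'   # 1 (one bogus entry)
```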

8.7 Exercise: building a Redis cluster

Cluster design:

- Create a dedicated network for the Redis cluster

[root@ct7_1 docker]# docker network create --subnet 172.10.0.0/16 redis

- Write six Redis config files

# create them with a shell script
for p in $(seq 1 6)
do
mkdir -p /mydata/redis/node-$p/conf
touch /mydata/redis/node-$p/conf/redis.conf
cat << EOF >>/mydata/redis/node-$p/conf/redis.conf
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.10.0.1$p
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
done

[root@ct7_1 /]# cd mydata/redis/
[root@ct7_1 redis]# ls
node-  node-1  node-2  node-3  node-4  node-5  node-6

- Start the six Redis containers

# $p is the node number used in the script above; this starts one node:
docker run -p 637$p:6379 -p 1637$p:16379 --name redis-$p -v /mydata/redis/node-$p/data:/data -v /mydata/redis/node-$p/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.10.0.1$p redis:5.0.9 redis-server /etc/redis/redis.conf
# start all six
for p in $(seq 1 6)
do
docker run -p 637$p:6379 -p 1637$p:16379 --name redis-$p -v /mydata/redis/node-$p/data:/data -v /mydata/redis/node-$p/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.10.0.1$p redis:5.0.9 redis-server /etc/redis/redis.conf
done

- Create the cluster

redis-cli --cluster create 172.10.0.11:6379 172.10.0.12:6379 172.10.0.13:6379 172.10.0.14:6379 172.10.0.15:6379 172.10.0.16:6379 --cluster-replicas 1

- Connect to Redis from inside a container

# -c: connect in cluster mode
redis-cli -c
# cluster information
cluster info
# node information
cluster nodes
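With six nodes and --cluster-replicas 1, redis-cli pairs each master with one replica, so there are 6 / (1 + 1) = 3 masters sharing the 16384 hash slots. The sketch below reproduces that arithmetic. `masters_for` and `slot_ranges` are hypothetical helpers, and the rounding is my approximation of the even split redis-cli performs; treat the exact slot boundaries as an assumption and compare them with the output of cluster nodes.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: how many masters a cluster gets, and how the slots split.
masters_for() {
  # $1 = number of nodes, $2 = --cluster-replicas
  echo $(( $1 / ($2 + 1) ))
}

slot_ranges() {
  # $1 = number of masters; prints "first-last" slot ranges, space-separated
  awk -v n="$1" 'BEGIN {
    total = 16384; per = total / n; first = 0; cursor = 0; out = ""
    for (j = 0; j < n; j++) {
      last = int(cursor + per - 1 + 0.5)   # round to the nearest slot
      if (last > total - 1 || j == n - 1) last = total - 1
      if (last < first) last = first
      out = out (j ? " " : "") first "-" last
      first = last + 1
      cursor += per
    }
    print out
  }'
}

m=$(masters_for 6 1)   # 3 masters, each with 1 replica
slot_ranges "$m"       # 0-5460 5461-10922 10923-16383
```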

8.8 Exercise: packaging a Spring Boot microservice as an image

- Create a Spring Boot project

Write a controller that returns "hello docker." and package it.

- Copy the jar to the Docker server and write a Dockerfile

FROM java:8
COPY *.jar /app.jar
CMD ["--hello docker project"]
EXPOSE 8080
ENTRYPOINT ["java","-jar","/app.jar"]

[root@ct7_1 idea]# ls
demo-0.0.1-SNAPSHOT.jar  Dockerfile

[root@ct7_1 idea]# docker build -t ssx666 .

Sending build context to Docker daemon 16.55MB

Step 1/5 : FROM java:8

8: Pulling from library/java

5040bd298390: Pull complete

fce5728aad85: Pull complete

76610ec20bf5: Pull complete

60170fec2151: Pull complete

e98f73de8f0d: Pull complete

11f7af24ed9c: Pull complete

49e2d6393f32: Pull complete

bb9cdec9c7f3: Pull complete

Digest: sha256:c1ff613e8ba25833d2e1940da0940c3824f03f802c449f3d1815a66b7f8c0e9d

Status: Downloaded newer image for java:8

---> d23bdf5b1b1b

Step 2/5 : COPY *.jar /app.jar

---> 1315f7ea89e1

Step 3/5 : CMD ["--hello docker project"]

---> Running in 96655336ea79

Removing intermediate container 96655336ea79

---> da4bdf3606b2

Step 4/5 : EXPOSE 8080

---> Running in 16e000c08014

Removing intermediate container 16e000c08014

---> 7a4d5b6a3510

Step 5/5 : ENTRYPOINT ["java","-jar","/app.jar"]

---> Running in e084a962dcda

Removing intermediate container e084a962dcda

---> b556e447b385

Successfully built b556e447b385

Successfully tagged ssx666:latest