ConvNeXt Implementation (PyTorch)

Overview

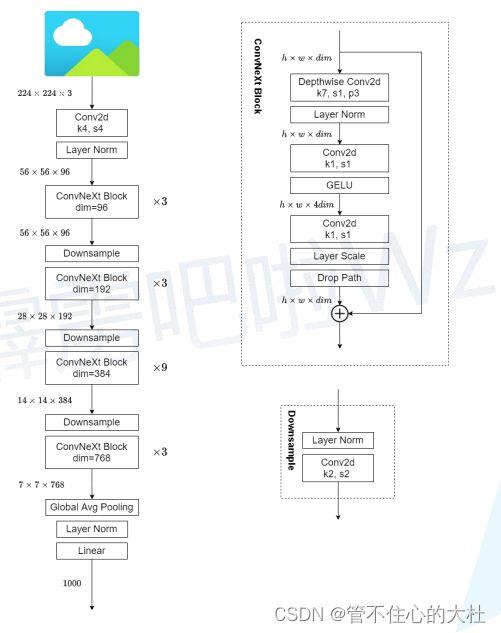

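Overall architecture:

A patchify-style stem (a convolution with a 4×4 kernel and stride 4) → LN → stage 1 → a downsample layer similar to patch merging (LN followed by a convolution with a 2×2 kernel and stride 2) → stage 2 → ... → global average pooling + LN + Linear.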
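To make the downsampling schedule concrete, the sketch below traces the feature-map shape after the stem and after each downsample layer. It assumes a 224×224 input and the ConvNeXt-T channel widths that appear later in this post; `trace_shapes` is a helper name made up for this illustration and is not part of the model code.

```python
def trace_shapes(image_size=224, dims=(96, 192, 384, 768)):
    """Return (stage name, channels, height, width) at the input of each stage."""
    shapes = []
    size = image_size
    for i, channels in enumerate(dims):
        # stem: 4x4 conv with stride 4; later stages: 2x2 conv with stride 2
        stride = 4 if i == 0 else 2
        # kernel size equals stride and there is no padding, so the size is floor-divided
        size = size // stride
        shapes.append((f"stage{i + 1}", channels, size, size))
    return shapes


for name, c, h, w in trace_shapes():
    print(f"{name}: {c} x {h} x {w}")
```

With these assumptions the spatial size goes 224 → 56 → 28 → 14 → 7 while the channel count doubles at every downsample layer.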

Block:

DW conv (7×7) → LN → PW conv (implemented with Linear) → GELU → PW conv → layer scale (each channel is multiplied by its own learnable scaling factor) → drop path + residual connection
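The claim that a pointwise (1×1) convolution can be replaced by a Linear layer can be checked numerically. The sketch below copies the weights of a 1×1 `nn.Conv2d` into an `nn.Linear` and compares the two outputs; the tensor sizes are arbitrary values chosen for the illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
dim = 8  # arbitrary channel count for the demo

conv = nn.Conv2d(dim, 4 * dim, kernel_size=1)  # pointwise conv on (N, C, H, W)
linear = nn.Linear(dim, 4 * dim)               # acts on the last dim of (N, H, W, C)

with torch.no_grad():
    # a 1x1 conv kernel of shape (out, in, 1, 1) is the same matrix as a Linear weight (out, in)
    linear.weight.copy_(conv.weight.view(4 * dim, dim))
    linear.bias.copy_(conv.bias)

    x = torch.randn(2, dim, 5, 5)
    out_conv = conv(x)
    # permute to channels_last, apply Linear, permute back to channels_first
    out_linear = linear(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)

print(torch.allclose(out_conv, out_linear, atol=1e-5))
```

This is why the block can permute to (N, H, W, C) once, run LN and both Linear layers in that layout, and permute back at the end.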

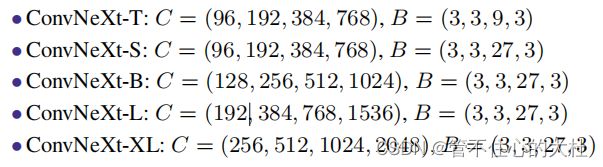

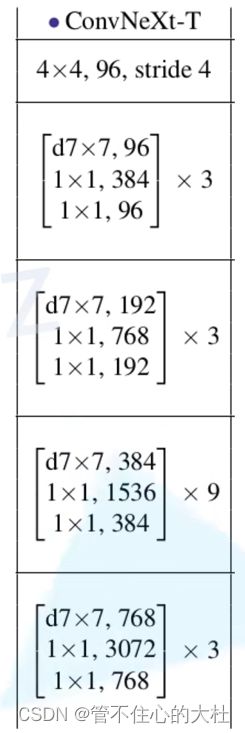

Variants:

The ConvNeXt variants differ in two settings: C is the number of channels in each stage, and B is the number of blocks in each stage.
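As a rough illustration of how C and B determine model size, the sketch below counts parameters analytically for the architecture described above. The (C, B) values are the ones listed in the ConvNeXt paper, not taken from the text of this post, and `block_params` / `count_params` are helper names made up for this example; the count assumes a 3-channel input and a 1000-class head.

```python
# (C, B) per variant as listed in the ConvNeXt paper (assumption: not stated in this post's text)
VARIANTS = {
    "tiny": ((96, 192, 384, 768), (3, 3, 9, 3)),
    "small": ((96, 192, 384, 768), (3, 3, 27, 3)),
    "base": ((128, 256, 512, 1024), (3, 3, 27, 3)),
    "large": ((192, 384, 768, 1536), (3, 3, 27, 3)),
    "xlarge": ((256, 512, 1024, 2048), (3, 3, 27, 3)),
}


def block_params(d):
    """Parameters of one block with d channels."""
    dwconv = 7 * 7 * d + d       # depthwise 7x7 conv: one 7x7 filter per channel, plus bias
    norm = 2 * d                 # LayerNorm weight and bias
    pwconv1 = d * 4 * d + 4 * d  # Linear d -> 4d
    pwconv2 = 4 * d * d + d      # Linear 4d -> d
    gamma = d                    # layer scale
    return dwconv + norm + pwconv1 + pwconv2 + gamma


def count_params(dims, depths, in_chans=3, num_classes=1000):
    total = in_chans * dims[0] * 4 * 4 + dims[0] + 2 * dims[0]  # stem: 4x4 conv + LN
    for i in range(3):
        # downsample layer: LN followed by a 2x2 conv
        total += 2 * dims[i] + dims[i] * dims[i + 1] * 2 * 2 + dims[i + 1]
    for d, b in zip(dims, depths):
        total += b * block_params(d)
    total += 2 * dims[-1]                           # final LN
    total += dims[-1] * num_classes + num_classes   # classification head
    return total


for name, (dims, depths) in VARIANTS.items():
    print(f"{name}: {count_params(dims, depths) / 1e6:.1f}M parameters")
```

Under these assumptions the tiny configuration comes out at 28,589,128 parameters (about 28.6M), most of them in the two Linear layers of the blocks.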

Code

The code is simple, so it is given here without much further explanation.

import torch
import torch.nn as nn
import torch.nn.functional as F

def drop_path(x, drop_prob: float = 0., training: bool = False):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)
    random_tensor.floor_()  # binarize
    output = x.div(keep_prob) * random_tensor
    return output

class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """
    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)
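The behaviour of `drop_path` is easier to see on a toy input. The sketch below repeats a compact copy of the function so that it runs on its own, then applies it to a tensor of ones; the batch size and the drop probability of 0.2 are arbitrary values chosen for the illustration.

```python
import torch


def drop_path(x, drop_prob=0.0, training=False):
    # compact copy of the function above, repeated so this snippet is self-contained
    if drop_prob == 0.0 or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # one random draw per sample
    mask = (keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)).floor_()
    return x.div(keep_prob) * mask


torch.manual_seed(0)
x = torch.ones(10000, 4)
out = drop_path(x, drop_prob=0.2, training=True)

# each sample is either zeroed entirely or scaled by 1 / keep_prob
kept_fraction = (out[:, 0] > 0).float().mean().item()
print(f"kept fraction: {kept_fraction:.3f}, output mean: {out.mean().item():.3f}")
```

Roughly 80% of the samples survive, and because the survivors are divided by `keep_prob` the mean of the output stays close to the mean of the input. In evaluation mode (`training=False`) the input is returned unchanged.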

class LayerNorm(nn.Module):
    r""" Supports two data formats: channels_last (default) or channels_first.
    channels_last: (batch_size, height, width, channels)
    channels_first: (batch_size, channels, height, width)
    """
    def __init__(self, normalized_shape, eps=1e-6, data_format="channels_last"):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(normalized_shape), requires_grad=True)
        self.bias = nn.Parameter(torch.zeros(normalized_shape), requires_grad=True)
        self.eps = eps
        self.data_format = data_format
        if self.data_format not in ["channels_last", "channels_first"]:
            raise ValueError(f"not support data format '{self.data_format}'")
        self.normalized_shape = (normalized_shape,)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # channels_last: the built-in layer_norm can be used directly
        if self.data_format == "channels_last":
            return F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)
        # channels_first: normalize over the channel dimension by hand
        elif self.data_format == "channels_first":
            # [batch_size, channels, height, width]
            mean = x.mean(1, keepdim=True)
            var = (x - mean).pow(2).mean(1, keepdim=True)
            x = (x - mean) / torch.sqrt(var + self.eps)
            x = self.weight[:, None, None] * x + self.bias[:, None, None]
            return x
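The hand-written channels_first branch should give the same result as permuting to channels_last and calling `F.layer_norm`. The sketch below checks this on random data; `layer_norm_channels_first` is a standalone copy of that branch written for this illustration, and the tensor sizes are arbitrary.

```python
import torch
import torch.nn.functional as F


def layer_norm_channels_first(x, weight, bias, eps=1e-6):
    # normalize over the channel dimension (dim 1) of an (N, C, H, W) tensor
    mean = x.mean(1, keepdim=True)
    var = (x - mean).pow(2).mean(1, keepdim=True)  # biased variance, as in LayerNorm
    x = (x - mean) / torch.sqrt(var + eps)
    return weight[:, None, None] * x + bias[:, None, None]


torch.manual_seed(0)
x = torch.randn(2, 8, 5, 5)
weight = torch.randn(8)
bias = torch.randn(8)

manual = layer_norm_channels_first(x, weight, bias)
# reference: move channels to the last dim, use the built-in op, move them back
reference = F.layer_norm(x.permute(0, 2, 3, 1), (8,), weight, bias, 1e-6).permute(0, 3, 1, 2)

max_abs_diff = (manual - reference).abs().max().item()
print(f"max abs difference: {max_abs_diff:.2e}")
```

The manual version avoids two permutes, which is convenient in the stem and downsample layers where the neighbouring convolutions expect (N, C, H, W).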

class Block(nn.Module):
    r""" ConvNeXt Block. There are two equivalent implementations:
    (1) DwConv -> LayerNorm (channels_first) -> 1x1 Conv -> GELU -> 1x1 Conv; all in (N, C, H, W)
    (2) DwConv -> Permute to (N, H, W, C); LayerNorm (channels_last) -> Linear -> GELU -> Linear; Permute back
    We use (2) as we find it slightly faster in PyTorch

    Args:
        dim (int): Number of input channels.
        drop_rate (float): Stochastic depth rate. Default: 0.0
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
    """
    def __init__(self, dim, drop_rate=0., layer_scale_init_value=1e-6):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)  # depthwise conv
        self.norm = LayerNorm(dim, eps=1e-6, data_format="channels_last")
        self.pwconv1 = nn.Linear(dim, 4 * dim)  # Linear is an equivalent replacement for the PW conv
        self.act = nn.GELU()
        self.pwconv2 = nn.Linear(4 * dim, dim)
        # gamma is the layer scale: a learnable per-channel scaling factor with one entry per channel
        self.gamma = nn.Parameter(layer_scale_init_value * torch.ones((dim,)),
                                  requires_grad=True) if layer_scale_init_value > 0 else None
        self.drop_path = DropPath(drop_rate) if drop_rate > 0. else nn.Identity()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        shortcut = x
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)  # [N, C, H, W] -> [N, H, W, C]
        x = self.norm(x)
        x = self.pwconv1(x)
        x = self.act(x)
        x = self.pwconv2(x)
        if self.gamma is not None:
            x = self.gamma * x
        x = x.permute(0, 3, 1, 2)  # [N, H, W, C] -> [N, C, H, W]

        x = shortcut + self.drop_path(x)
        return x

class ConvNeXt(nn.Module):
    r""" ConvNeXt
    A PyTorch impl of : `A ConvNet for the 2020s` -
    https://arxiv.org/pdf/2201.03545.pdf

    Args:
        in_chans (int): Number of input image channels. Default: 3
        num_classes (int): Number of classes for classification head. Default: 1000
        depths (tuple(int)): Number of blocks at each stage. Default: [3, 3, 9, 3]
        dims (tuple(int)): Feature dimension at each stage. Default: [96, 192, 384, 768]
        drop_path_rate (float): Stochastic depth rate. Default: 0.
        layer_scale_init_value (float): Init value for Layer Scale. Default: 1e-6.
        head_init_scale (float): Init scaling value for classifier weights and biases. Default: 1.
    """
    def __init__(self, in_chans: int = 3, num_classes: int = 1000, depths: list = None,
                 dims: list = None, drop_path_rate: float = 0., layer_scale_init_value: float = 1e-6,
                 head_init_scale: float = 1.):
        super().__init__()
        # fall back to the documented defaults so that ConvNeXt() works without arguments
        depths = depths if depths is not None else [3, 3, 9, 3]
        dims = dims if dims is not None else [96, 192, 384, 768]
        # Unlike Swin, the stem is also treated as a downsampling layer, so
        # downsample_layers holds the downsampling layer in front of each of the four stages
        self.downsample_layers = nn.ModuleList()
        stem = nn.Sequential(nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),
                             LayerNorm(dims[0], eps=1e-6, data_format="channels_first"))
        self.downsample_layers.append(stem)

        # the 3 downsample layers placed before stage2-stage4
        for i in range(3):
            downsample_layer = nn.Sequential(LayerNorm(dims[i], eps=1e-6, data_format="channels_first"),
                                             nn.Conv2d(dims[i], dims[i+1], kernel_size=2, stride=2))
            self.downsample_layers.append(downsample_layer)

        self.stages = nn.ModuleList()  # stages
        dp_rates = [x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]
        cur = 0
        # build the stacked blocks of each stage; * unpacks the list into positional arguments
        for i in range(4):
            stage = nn.Sequential(
                *[Block(dim=dims[i], drop_rate=dp_rates[cur + j], layer_scale_init_value=layer_scale_init_value)
                  for j in range(depths[i])]
            )
            self.stages.append(stage)
            # cur is the running block index into dp_rates
            cur += depths[i]

        self.norm = nn.LayerNorm(dims[-1], eps=1e-6)  # final norm layer
        self.head = nn.Linear(dims[-1], num_classes)
        self.apply(self._init_weights)
        self.head.weight.data.mul_(head_init_scale)
        self.head.bias.data.mul_(head_init_scale)

    def _init_weights(self, m):
        if isinstance(m, (nn.Conv2d, nn.Linear)):
            nn.init.trunc_normal_(m.weight, std=0.02)  # std=0.02 as in the official ConvNeXt code (was 0.2)
            nn.init.constant_(m.bias, 0)

    def forward_features(self, x: torch.Tensor) -> torch.Tensor:
        for i in range(4):
            x = self.downsample_layers[i](x)
            x = self.stages[i](x)

        return self.norm(x.mean([-2, -1]))  # global average pooling, (N, C, H, W) -> (N, C)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.forward_features(x)
        x = self.head(x)
        return x

def convnext_tiny(num_classes: int):
    # https://dl.fbaipublicfiles.com/convnext/convnext_tiny_1k_224_ema.pth
    model = ConvNeXt(depths=[3, 3, 9, 3],
                     dims=[96, 192, 384, 768],
                     num_classes=num_classes)
    return model

model = convnext_tiny(num_classes=1000)  # num_classes is a required argument
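The `dp_rates` list in `ConvNeXt.__init__` gives every block its own drop path probability, increasing linearly with depth. The sketch below reproduces that schedule in plain Python and splits it by stage the same way the `cur` index does; `stage_drop_rates` is a helper name made up for this illustration, and the `drop_path_rate` of 0.1 is an arbitrary example value.

```python
def stage_drop_rates(drop_path_rate, depths):
    """Linearly spaced drop path rates, grouped by stage."""
    total = sum(depths)
    # same values as torch.linspace(0, drop_path_rate, total), computed without torch
    if total > 1:
        rates = [drop_path_rate * i / (total - 1) for i in range(total)]
    else:
        rates = [0.0] * total
    per_stage, cur = [], 0
    for depth in depths:
        per_stage.append(rates[cur:cur + depth])  # blocks cur .. cur + depth - 1
        cur += depth
    return per_stage


schedule = stage_drop_rates(0.1, [3, 3, 9, 3])
for i, rates in enumerate(schedule, start=1):
    print(f"stage{i}: " + ", ".join(f"{r:.3f}" for r in rates))
```

The first block is never dropped (rate 0) and the last block uses the full `drop_path_rate`, so deeper blocks are regularized more strongly than shallow ones.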