Python3 - Installing and Using the k8s Architecture (Detailed)

文章目录

-

- 一、kunernetes简介

-

- 1. 为什么要用k8s ?

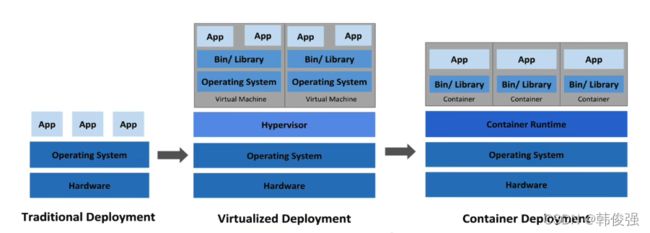

- 2. 部署方式的变迁

- 3. 传统部署时代

- 4. 虚拟化部署时代

- 5. 容器部署时代

- 6. 服务器费用对比

- 7. k8s是什么?

- 8. 纯容器模式的问题

- 9. 为什么要用k8s

- 10. k8s提供了什么功能

- 二、k8s架构安装

-

- 1. k8s流程

- 2. k8s工作原理

- 3. k8s组件交互原理(k8s使用流程?)

- 4.干活来了, 安装k8s (基础配置)

-

- 4.1 机器环境准备

- 4.2 组件版本

- 4.3 安装docker(all nodes)

- 4.4 安装k8s-master01 (master01机器执行)

- 4.5 初始化k8s-master01节点

- 4.6 添加k8s-node到集群中

- 4.7 安装flannel网络插件 (k8s-master01执行)

- 三、首次使用k8s部署应用程序

-

- 3.1 图解、小节

- 3.2 配置kubectl命令补全

- 3.3 k8s快速实践

- 3.4 详解最小调度单元pod

- 3.5 再来部署nginx应用 ---deployment

- 3.6 使用yaml文件

- 3.7 service 负载均衡

- 3.8 访问应用

- 3.9 deployment 其他功能

- 四、部署dashboard

-

- 4.1 下载k8s yaml文件

- 4.2 创建资源, 测试访问

- 4.3 创建账户访问dashboard

- 好啦~ 今天的实践到这里, 喜欢的点个赞吧~❤

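Earlier in this series we studied Docker in 《深入学习docker》 (Learning Docker in Depth).

As the number of Docker containers keeps growing, you need a single tool to manage them. For deploying and batch-managing containers efficiently, k8s is the tool of choice.

Today we focus on the k8s architecture: understanding how it works and its workflow, using load balancing, and deploying and using the dashboard.

Notice: reposting this article is strictly prohibited.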

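I. Introduction to Kubernetes

Welcome to the world of k8s (Kubernetes)

Why is it called k8s? Two little stories:

- There are 8 letters between the "k" and the "s"

- (A running joke) it takes 8 attempts before the setup succeeds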

1. Why use k8s?

- Why use k8s

- What k8s is

- Core features

- Installation and learning

2. How deployment approaches have evolved

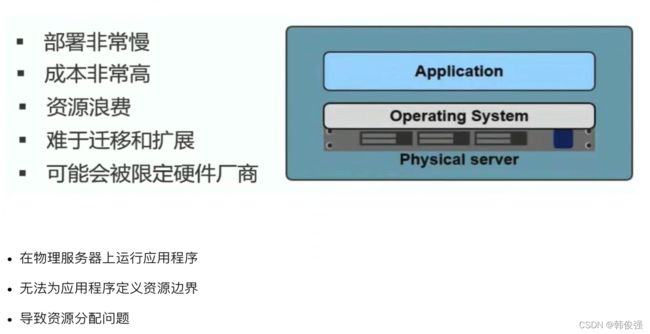

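3. The traditional deployment era

For example, if several applications run on one physical server, one of them may take most of the resources and degrade the performance of the others.

One fix is to run each application on its own physical server, but this does not scale: resources sit under-utilized, and maintaining many physical servers is expensive.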

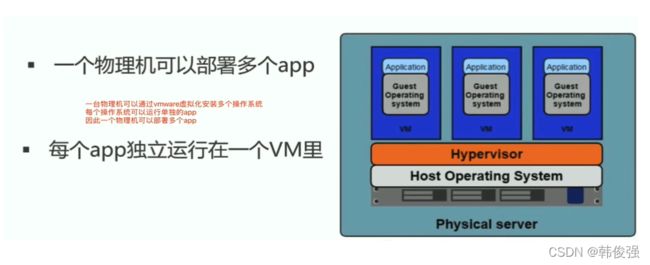

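4. The virtualized deployment era

Because of the many problems with physical machines, virtual machines appeared

- Virtualization was introduced as the solution

- Virtualization lets you run multiple virtual machines (VMs) on the CPU of a single physical server

- Virtualization isolates applications between VMs and provides a degree of security

- One application's data cannot be freely accessed by another application

- Virtualization makes better use of the resources of a physical server

- Because applications can be added or updated easily, it scales better and lowers hardware cost

- Each VM is a complete computer that runs all of its components, including its own operating system (kernel + distribution), on top of virtualized hardware

Drawback: the extra virtualization layer wastes resources and reduces performance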

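5. The container deployment era

- Containers are similar to VMs, but applications share the operating system (OS)

- Containers are considered lightweight: they do not include a kernel and share the kernel of the host

- Like a VM, a container has its own filesystem, CPU share, memory, process space, and so on, but no kernel

- Because containers are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions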

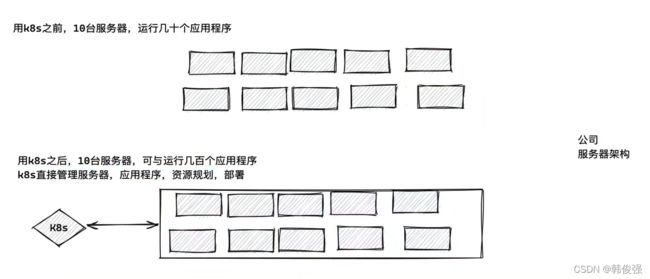

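6. Server cost comparison

7. What is k8s?

Official site: https://kubernetes.io/

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

Kubernetes groups multiple containers into a logical unit for easier management (the pod concept).

Kubernetes was designed from 15 years of Google's internal scheduling experience (the Borg system) and also draws on ideas from the wider community.

Concepts mentioned in the official description:

- A system built on 15 years of Google experience: the Borg system

- The logical unit: the pod (runs one or more Docker containers)

- Containerized applications: these can be Docker containers or other kinds of containers

- Advantages of Docker: easy application migration and execution, resource isolation, agile development, and so on

- Automated deployment: k8s can automatically deploy an application made of several containers into the cluster

- Scaling: adding or removing business containers easily, much like adding or removing servers

- k8s can monitor load; when pressure passes a threshold, it creates more containers, which adds backend nodes behind the load balancer

- Scaling in: removing unneeded instances to save machine resources

- Management: since the applications all run in containers, their runtime dependencies, networking, storage, and cluster relationships have to be managed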
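The threshold-based scaling described in the list above can be made concrete with a short sketch. The rule below is the one Kubernetes documents for its Horizontal Pod Autoscaler, a feature this article does not configure. The function name and the sample numbers are illustrative assumptions.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count proposed by the documented autoscaling rule:
    ceil(currentReplicas * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)

# Two pods averaging 90% CPU against a 50% target: scale out.
print(desired_replicas(2, 90.0, 50.0))
# Four pods averaging 20% CPU against a 50% target: scale in.
print(desired_replicas(4, 20.0, 50.0))
```

The same formula covers both directions: a metric above the target raises the replica count, and a metric below it lowers the count.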

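8. Problems with a containers-only setup

What are the drawbacks of deploying applications with Docker alone?

- With a large number of business containers, how do you record and manage which containers run on which nodes and which ports they use? Do you log in to every machine?

- Cross-host communication: how do containers on different machines call each other? Do you maintain iptables rules by hand?

- How do you configure calls between containers on different hosts? Hard-code IP and port? Use a service registry?

- How do you make the business highly available? How do you load-balance across several containers that serve the same traffic?

- When a container's workload stops, how do you notice, and how do you start a new container automatically? k8s reruns a container for you and the service recovers on its own

- How do you do rolling upgrades without interrupting the business?

- …

k8s solves all of these problems, and it is more capable than you might expect. Most companies now run k8s in production, driven by growing architectural complexity and the rise of microservices. If you want to go further in an operations career, k8s is a golden key.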

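9. Why use k8s

Containers are a good way to package and run applications.

In production you need to manage the containers that run your applications and make sure there is no downtime.

For example, if one container fails, another one has to be started.

Wouldn't it be easier if the system handled this automatically?

This is the kind of problem Kubernetes solves.

Kubernetes takes care of your scaling requirements, failover, deployment patterns, and more.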

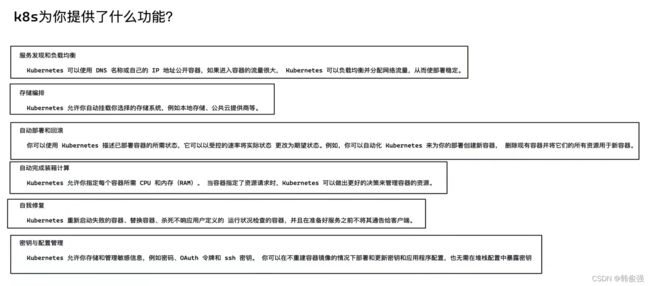

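10. What k8s provides

II. Installing the k8s architecture

A k8s deployment has two roles, master and node (a master/slave model)

That is, management nodes and worker nodes

You can think of it this way:

Node ---- server

Boss (gives the orders; there can be a first boss and a second boss): in a highly available k8s cluster, master01, master02 …

Employees (the many workers who do the actual job): the nodes; all containers run on the nodes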

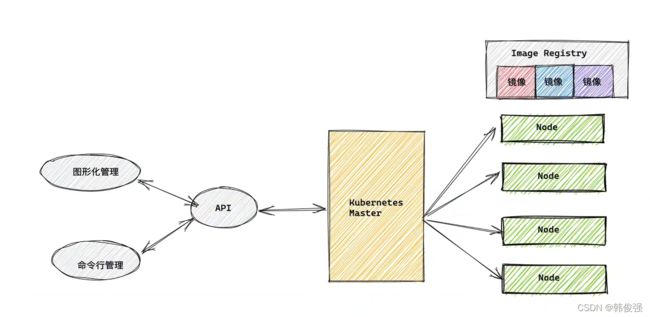

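1. k8s workflow

Detailed diagram:

From the diagram we should learn:

- How the master interacts with the nodes

- What components the master and the nodes each have

- How to manage the whole k8s cluster

- Through a graphical platform

- Through the command line against the k8s API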

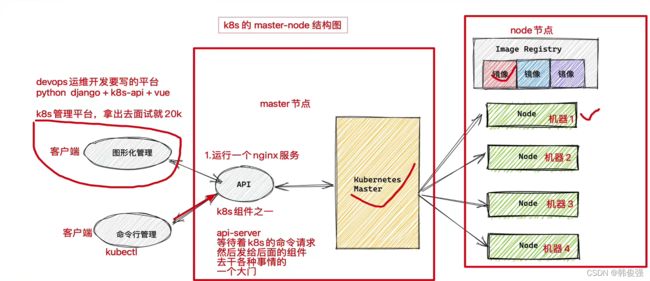

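2. How k8s works

k8s workflow diagram:

Master node (the control plane): the master controls the whole cluster

Core components on the master:

- Controller Manager: makes sure the cluster runs the way the administrator expects

- etcd: a key-value database (comparable to Redis) that acts as the ledger or notebook and stores all cluster data

- scheduler: schedules business containers onto suitable nodes (a node corresponds to a real server)

- api server: the API gateway (every control operation goes through the api-server); it is the entry point for cluster resources and provides the REST API and access control

Node (worker):

- kubelet (the foreman): must be installed on every node; it does all the heavy lifting

- For example, pod management

- Container health checks and monitoring

- kube-proxy: the network proxy; maintains the iptables rules on the node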

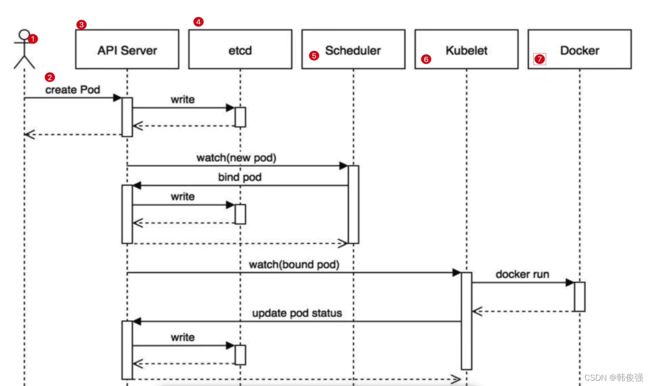

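3. How k8s components interact (the k8s usage flow)

- The user prepares a resource file (YAML) that records the application name, image address, and so on, and calls the APIServer to create a Pod

- The APIServer receives the Pod creation request and writes the Pod information to etcd

- The scheduler, using list-watch, notices new pod data that is not yet bound to any node; in other words, it is not yet decided which machine should run the container

- The scheduler runs its scheduling algorithm to find the most suitable node for the pod, then calls the APIServer to update the record in etcd

- kubelet, also using list-watch, notices that a new pod has been scheduled onto its own node. It calls the container runtime to pull the image and start the containers according to the pod description, and generates events (this is where kubelet does the real work: downloading images, running containers, applying configuration, and keeping records)

- Meanwhile, container information, events, and status are also written to etcd through the APIServer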
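The six steps above can be traced with a toy model. This is a minimal sketch, not how the real components are implemented: a dict stands in for etcd, plain functions stand in for the APIServer, scheduler, and kubelet, and "most free CPU" is an assumed stand-in for the real scheduling algorithm.

```python
# Toy stand-ins: a dict plays etcd, a list collects kubelet events.
etcd = {}
events = []

def api_server_create(name, image):
    """Steps 1-2: accept the request and persist an unbound, pending pod."""
    etcd[name] = {"image": image, "node": None, "phase": "Pending"}

def scheduler_pass(free_cpu_by_node):
    """Steps 3-4: bind each unscheduled pod to the node with the most free CPU."""
    for pod in etcd.values():
        if pod["node"] is None:
            node = max(free_cpu_by_node, key=free_cpu_by_node.get)
            pod["node"] = node
            free_cpu_by_node[node] -= 1

def kubelet_pass(node):
    """Steps 5-6: start pods bound to this node and record status and events."""
    for name, pod in etcd.items():
        if pod["node"] == node and pod["phase"] == "Pending":
            events.append(f"{node}: pulled {pod['image']}, started {name}")
            pod["phase"] = "Running"

api_server_create("my-nginx", "nginx:alpine")
scheduler_pass({"k8s-node01": 2, "k8s-node02": 1})
kubelet_pass("k8s-node01")
kubelet_pass("k8s-node02")
print(etcd["my-nginx"])
print(events)
```

Note that the components never call each other directly: each one only reads and writes the shared store, which mirrors the role the APIServer and etcd play in the flow.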

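4. Hands-on: installing k8s (base configuration)

- Binary installation: too tedious, it can take a whole day

- Minikube: for learning

- Kubeadm: officially recommended, installs by bootstrapping the cluster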

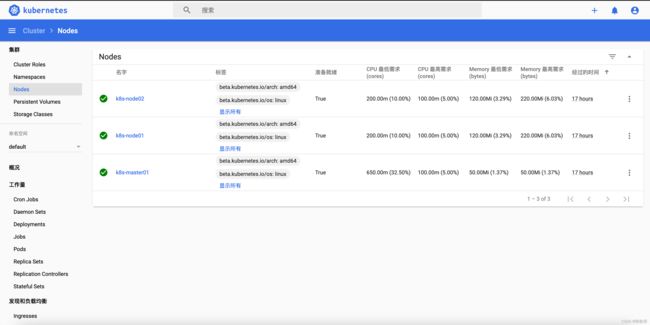

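4.1 Preparing the machines

Prepare N servers that can reach each other over the internal network. Three are enough to follow along; I rented three servers for a week for this walkthrough. The machine roles are master and node. If you find this useful, remember to give it a like

Recommended machine size: at least 2 CPU cores and 4 GB of memory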

| Hostname | Node IP | Role | Deployed components |
|---|---|---|---|
| k8s-master01 | 39.101.140.126 | Master01 | etcd, kube-apiserver, kube-controller-manager, kubectl, kubeadm, kubelet, kube-proxy, flannel |
| k8s-node01 | 8.142.174.144 | Node01 | kubectl, kubelet, kube-proxy, flannel |
| k8s-node02 | 8.142.175.44 | Node02 | kubectl, kubelet, kube-proxy, flannel |

4.2 Component versions

| Component | Version | Notes |
|---|---|---|
| CentOS | 7.9.2009 | |
| Kernel | 3.10.0-1160.53.1.el7.x86_64 | |
| etcd | 3.3.15 | Deployed as a container; data is mounted to a local path by default |
| coredns | 1.6.2 | |
| kubeadm | v1.16.2 | |
| kubectl | v1.16.2 | |
| kubelet | v1.16.2 | |
| kube-proxy | v1.16.2 | |
| flannel | v0.11.0 | |

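4.3 Pre-installation environment setup

- Note: some steps run on every machine, others only on specific nodes

Set the hostname (run each command on its matching machine)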

hostnamectl set-hostname k8s-master01

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

Add hosts entries

cat >> /etc/hosts <<EOF

39.101.140.126 k8s-master01

8.142.174.144 k8s-node01

8.142.175.44 k8s-node02

EOF

Ping each host to check name resolution

ping k8s-master01

ping k8s-node01

ping k8s-node02

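System tuning (run on all machines)

iptables -P FORWARD ACCEPT # accept forwarded traffic by default

swapoff -a

# Keep the swap partition from being mounted again at boot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Disable the firewall and SELinux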

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

setenforce 0

systemctl disable firewalld && systemctl stop firewalld

# Enable kernel traffic forwarding

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

# Apply the kernel settings above

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Configure the base yum repo and the Docker repo

curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Create the Kubernetes yum repo with cat

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum makecache
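To see what the `sed` line in the tuning step does to `/etc/fstab` before running it on a real machine, the same transformation can be sketched on a sample string. The function name and the sample fstab content are assumptions made for illustration; the rule itself (prefix `#` to any line containing ` swap `) is the one from the `sed` expression.

```python
def comment_out_swap(fstab_text: str) -> str:
    """Prefix '#' to every line containing ' swap ', mirroring
    sed '/ swap / s/^\\(.*\\)$/#\\1/g'."""
    return "\n".join(
        "#" + line if " swap " in line else line
        for line in fstab_text.split("\n")
    )

# Hypothetical fstab content with one swap entry.
sample = "/dev/vda1 / ext4 defaults 1 1\n/dev/vdb1 swap swap defaults 0 0\n"
print(comment_out_swap(sample))
```

Like the `sed` command, this adds another `#` to a swap line that is already commented out, which is harmless.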

4.3 Install Docker (all nodes)

k8s manages containers, so we need to choose and install a container runtime. Here that is Docker

yum list docker-ce --showduplicates | sort -r

yum install docker-ce -y

mkdir -p /etc/docker

# Configure a Docker registry mirror

vi /etc/docker/daemon.json

{

"registry-mirrors": ["http://f1361db2.m.daocloud.io"]

}

# Start Docker

systemctl enable docker && systemctl start docker

# Verify Docker

docker version

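4.4 Install k8s-master01 (run on master01)

What the k8s commands do

Install kubeadm, kubelet, and kubectl:

- kubeadm: the command that bootstraps (installs) the cluster

- kubelet: runs on every node in the cluster, starts pods and containers, and drives Docker

- kubectl: the command-line tool for talking to the cluster, comparable to running docker ps or docker images

Run on: all master and worker nodes (k8s-master, k8s-slave)

Note: the config files below use YAML syntax; other common config formats include INI, JSON, and XML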

yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2 --disableexcludes=kubernetes

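## Check the kubeadm version

kubeadm version

## Enable kubelet at boot; kubelet manages containers: it pulls images and creates, starts, and stops containers

# Once kubelet starts at boot, it manages pods (containers) for you automatically

systemctl enable kubelet

Create the init config file (only on k8s-master01)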

mkdir ~/k8s-install && cd ~/k8s-install

# Generate the config file

kubeadm config print init-defaults > kubeadm.yaml

# Edit the config file

vim kubeadm.yaml

Change only the parts marked 1, 2, 3, and 4; ignore everything else

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 10.211.55.22 # 4. Change to the internal IP of k8s-master01

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

imagePullPolicy: IfNotPresent

name: k8s-m1 # Change to the hostname of the first node you run this on

taints: null

---

controlPlaneEndpoint: 192.168.122.100:8443 # Added: control plane endpoint address

apiServer:

timeoutForControlPlane: 4m0s

extraArgs:

authorization-mode: "Node,RBAC"

# Needed for nfs/rbd PVCs

feature-gates: RemoveSelfLink=false

enable-admission-plugins: "NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeClaimResize,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,Priority"

etcd-servers: https://k8s-m1:2379,https://k8s-m2:2379,https://k8s-m3:2379 # list of etcd nodes

certSANs:

- 192.168.122.100 # VIP address

- 10.96.0.1 # first IP of the service CIDR

- 127.0.0.1 # with multiple masters, lets you debug via localhost if the load balancer fails

- k8s-m1

- k8s-m2

- k8s-m3

- kubernetes

- kubernetes.default

- kubernetes.default.svc

- kubernetes.default.svc.cluster.local

extraVolumes:

- hostPath: /etc/localtime

mountPath: /etc/localtime

name: timezone

readOnly: true

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager:

extraVolumes:

- hostPath: /etc/localtime

mountPath: /etc/localtime

name: timezone

readOnly: true

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

# etcd high availability requires multiple nodes

serverCertSANs:

- k8s-m1

- k8s-m2

- k8s-m3

peerCertSANs:

- k8s-m1

- k8s-m2

- k8s-m3

imageRepository: registry.aliyuncs.com/google_containers # 1. Change to the Aliyun image mirror

kind: ClusterConfiguration

kubernetesVersion: v1.16.2 # 2. Change to the k8s version you installed

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16 # 3. Add the pod subnet (the network used inside containers)

scheduler:

extraVolumes:

- hostPath: /etc/localtime

mountPath: /etc/localtime

name: timezone

readOnly: true

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

bindAddressHardFail: false

clientConnection:

acceptContentTypes: ""

burst: 0

contentType: ""

kubeconfig: /var/lib/kube-proxy/kubeconfig.conf

qps: 0

clusterCIDR: ""

configSyncPeriod: 0s

conntrack:

maxPerCore: null

min: null

tcpCloseWaitTimeout: null

tcpEstablishedTimeout: null

detectLocalMode: ""

enableProfiling: false

healthzBindAddress: ""

hostnameOverride: ""

iptables:

masqueradeAll: false

masqueradeBit: null

minSyncPeriod: 0s

syncPeriod: 0s

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

strictARP: false

syncPeriod: 0s

tcpFinTimeout: 0s

tcpTimeout: 0s

udpTimeout: 0s

kind: KubeProxyConfiguration

metricsBindAddress: ""

mode: "ipvs" # ipvs mode

nodePortAddresses: null

oomScoreAdj: null

portRange: ""

showHiddenMetricsForVersion: ""

udpIdleTimeout: 0s

winkernel:

enableDSR: false

networkName: ""

sourceVip: ""

---

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging: {}

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

After editing, the file looks like this:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.29.58.226 # 4. Change to the internal IP of k8s-master01 (use your own server's address)
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # 1. Change to the Aliyun image mirror
kind: ClusterConfiguration
kubernetesVersion: v1.16.2 # 2. Change to the k8s version you installed
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # 3. Add the pod subnet (the network used inside containers)
scheduler: {}

The manifest above is fairly complex. For the full list of fields on these objects, see the godoc:

https://pkg.go.dev/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta2
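Before running `kubeadm init`, it is worth checking that the two subnets in the config do not collide. The sketch below uses Python's standard `ipaddress` module on the values from the manifest; it also shows where two addresses seen elsewhere in this article come from (`10.96.0.1`, the first service IP, and `10.96.0.10`, the `clusterDNS` address). The variable names are illustrative.

```python
import ipaddress

# Values taken from the networking section of kubeadm.yaml.
service_net = ipaddress.ip_network("10.96.0.0/12")
pod_net = ipaddress.ip_network("10.244.0.0/16")

# The service and pod ranges must not overlap.
print(service_net.overlaps(pod_net))

# Address 1 of the service range is used by the built-in kubernetes service,
# address 10 is the clusterDNS entry in the kubelet configuration.
print(service_net[1], service_net[10])
```

If you change either subnet, rerun the same check against your own node network as well.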

Pre-pull the images (only on k8s-master01)

# List the required images

[root@k8s-master01 k8s-install]# kubeadm config images list --config kubeadm.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.3.15-0

registry.aliyuncs.com/google_containers/coredns:1.6.2

# Pre-pull the images

[root@k8s-master01 k8s-install]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.3.15-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.2

4.5 Initialize the k8s-master01 node

SSH tools

Mac: iTerm2

Windows: Xshell, SecureCRT

Pick whichever suits you

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.16.2

......

On success the output looks like this:

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Run the following commands to create the kubeconfig file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# You need a pod network before your pods can work

# Cluster networking in k8s relies on an additional plugin

# No rush: we do this in a later step

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

# Use the following command to join the worker nodes to the cluster

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.29.58.226:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:ebdc26b59529e03a62207699d60b8b64c2c19991db7a912f4aeae836615dd9ea

Note the last few lines: they are the command that joins the worker nodes k8s-node01/02 to the k8s cluster
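The join command carries three pieces of information: the API server endpoint, a bootstrap token, and a hash of the cluster CA. A small sketch can pull them apart and sanity-check their shape before you paste the command onto a node. The helper name `parse_join` is an assumption; the token pattern (6 characters, a dot, 16 characters) and the `sha256:` prefix followed by 64 hex digits follow the formats visible in the output above. Keep in mind that the `ttl: 24h0m0s` in the config means this token expires after a day.

```python
import re

TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")
HASH_RE = re.compile(r"^sha256:[0-9a-f]{64}$")

def parse_join(command: str) -> dict:
    """Split a 'kubeadm join' command into endpoint, token, and CA hash."""
    parts = command.replace("\\\n", " ").split()
    endpoint = parts[2]
    token = parts[parts.index("--token") + 1]
    ca_hash = parts[parts.index("--discovery-token-ca-cert-hash") + 1]
    if not TOKEN_RE.match(token) or not HASH_RE.match(ca_hash):
        raise ValueError("malformed token or CA hash")
    return {"endpoint": endpoint, "token": token, "ca_hash": ca_hash}

cmd = (
    "kubeadm join 172.29.58.226:6443 --token abcdef.0123456789abcdef "
    "--discovery-token-ca-cert-hash "
    "sha256:ebdc26b59529e03a62207699d60b8b64c2c19991db7a912f4aeae836615dd9ea"
)
print(parse_join(cmd))
```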

Following the prompts above, run on master01

[root@k8s-master01 k8s-install]# mkdir -p $HOME/.kube

[root@k8s-master01 k8s-install]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 k8s-install]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

At this point the node should show NotReady. This is expected, because the k8s network plugin is not configured yet

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 7m6s v1.16.2

Next, switch to the worker nodes.

4.6 Add the k8s nodes to the cluster

Run on every worker node: copy the join command printed by k8s-master01 above

kubeadm join 172.29.58.226:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:ebdc26b59529e03a62207699d60b8b64c2c19991db7a912f4aeae836615dd9ea

# Success

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the node status on k8s-master01 (try -o wide for more detail)

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 8m35s v1.16.2

k8s-node01 NotReady <none> 10s v1.16.2

k8s-node02 NotReady <none> 8s v1.16.2

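The 2 worker nodes we joined are now in the cluster, but they are still NotReady, again because the network plugin is missing

4.7 Install the flannel network plugin (run on k8s-master01)

The download may fail because of network issues; retry a few times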

wget https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

Edit the manifest to specify the machine's network interface name, at around line 190

Note: run ifconfig to find your interface name; it is not always eth0

[root@k8s-master01 k8s-install]# ifconfig

[root@k8s-master01 k8s-install]# vim kube-flannel.yml

189 args:

190 - --ip-masq

191 - --kube-subnet-mgr

192 - --iface=eth0 # add this line

Pull the flannel network plugin image

[root@k8s-master01 k8s-install]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

v0.11.0-amd64: Pulling from coreos/flannel

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:7806805c93b20a168d0bbbd25c6a213f00ac58a511c47e8fa6409543528a204e

Status: Downloaded newer image for quay.io/coreos/flannel:v0.11.0-amd64

quay.io/coreos/flannel:v0.11.0-amd64

# Install the flannel network plugin

[root@k8s-master01 k8s-install]# kubectl create -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

# Check the cluster again and make sure every node is Ready

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 13m v1.16.2

k8s-node01 Ready <none> 4m47s v1.16.2

k8s-node02 Ready <none> 4m45s v1.16.2

# Every node is now in the correct state

# Also check all pods in the cluster and make sure they are all Running

[root@k8s-master01 k8s-install]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-58cc8c89f4-fgjpn 1/1 Running 0 13m

kube-system coredns-58cc8c89f4-zz245 1/1 Running 0 13m

kube-system etcd-k8s-master01 1/1 Running 0 12m

kube-system kube-apiserver-k8s-master01 1/1 Running 0 12m

kube-system kube-controller-manager-k8s-master01 1/1 Running 0 12m

kube-system kube-flannel-ds-amd64-2sbrp 1/1 Running 0 80s

kube-system kube-flannel-ds-amd64-lpplk 1/1 Running 0 80s

kube-system kube-flannel-ds-amd64-zkn4k 1/1 Running 0 80s

kube-system kube-proxy-dvnfk 1/1 Running 0 5m5s

kube-system kube-proxy-sbwkc 1/1 Running 0 13m

kube-system kube-proxy-sj8bz 1/1 Running 0 5m3s

kube-system kube-scheduler-k8s-master01 1/1 Running 0 12m

At this point the k8s cluster is fully deployed.

III. Deploying your first application with k8s

First try: deploy an nginx web service with k8s

# k8s-master01

[root@k8s-master01 k8s-install]# kubectl run my-nginx --image=nginx:alpine

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/my-nginx created

# k8s creates the pod, assigns it to a worker node, and that node runs the container

# Here it runs on k8s-node01

[root@k8s-master01 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE ....

my-nginx-576bb7cb54-m9b2m 1/1 Running 0 92s 10.244.1.2 k8s-node01

[root@k8s-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

my-nginx-576bb7cb54-m9b2m 1/1 Running 0 71s

# This returns the default nginx welcome page

[root@k8s-master01 k8s-install]# curl 10.244.1.2

It runs on k8s-node01, so we can verify the Docker container process there

# k8s-node01

# Docker shows an nginx container running

[root@k8s-node01 ~]# docker ps | grep nginx

cb62569d3bcf nginx "/docker-entrypoint.…" 3 minutes ago Up 3 minutes k8s_my-nginx_my-nginx-576bb7cb54-m9b2m_default_5888f807-0b3a-474d-86cf-8e1c7d6bb895_0

9c9971e61f09 registry.aliyuncs.com/google_containers/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_my-nginx-576bb7cb54-m9b2m_default_5888f807-0b3a-474d-86cf-8e1c7d6bb895_0

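3.1 Diagram and summary

We now have a working k8s cluster with one master and 2 worker nodes

As you can see, we use kubectl to talk to the k8s api-server

3.2 Configure kubectl command completion

Like docker, kubectl is a command-line tool. It talks to the APIServer and ships with a rich set of subcommands

Official docs: https://kubernetes.io/docs/reference/kubectl/overview/

# Comparable docker commands

docker ps

docker images

# Completion works with Tab, as in a Linux shell

Since we use kubectl to talk to the k8s api-server, we configure kubectl completion here; it works the same way as bash completion and makes the tool easier to use

k8s command completion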

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

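3.3 k8s quick practice

The nginx service we just started was scheduled by k8s onto k8s-node01. The flannel plugin gave that node a pod subnet, visible as address 10.244.1.0 on its flannel.1 interface, and the pod received 10.244.1.2 from it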

[root@k8s-node01 ~]# ifconfig flannel.1

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.1.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::d855:cdff:fe52:514c prefixlen 64 scopeid 0x20<link>

ether da:55:cd:52:51:4c txqueuelen 0 (Ethernet)

RX packets 7 bytes 446 (446.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5 bytes 1121 (1.0 KiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
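The addresses seen so far fit a simple pattern: the cluster-wide pod network `10.244.0.0/16` from kubeadm.yaml is carved into one smaller subnet per node. The sketch below assumes a /24 per node, which matches the addresses in this article (`10.244.1.x` on k8s-node01, `10.244.2.x` on k8s-node02) but is an assumption about the defaults rather than something the manifest states.

```python
import ipaddress

pod_net = ipaddress.ip_network("10.244.0.0/16")

# Assumed: each node receives one /24 slice of the pod network.
node_subnets = list(pod_net.subnets(new_prefix=24))

node01_subnet = node_subnets[1]
pod_ip = ipaddress.ip_address("10.244.1.2")  # the my-nginx pod IP shown earlier

print(node01_subnet)            # the slice that flannel.1 on k8s-node01 belongs to
print(pod_ip in node01_subnet)  # the pod IP falls inside that slice
print(len(node_subnets))        # how many node-sized slices the /16 can hold
```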

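This application is reachable only from inside the cluster network. How do we reach an application managed by k8s from outside? Read on

Before that, we need to understand the pod: what is it, and what role does it play in k8s?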

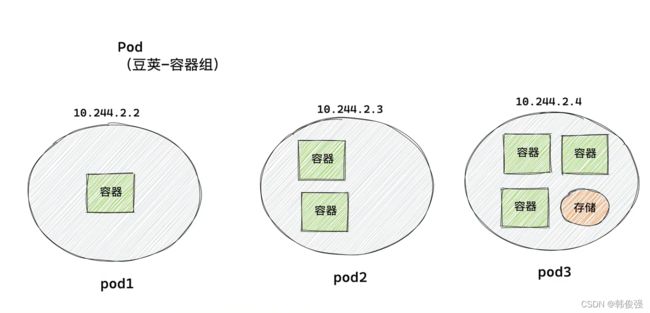

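3.4 The pod: the smallest scheduling unit

- Docker schedules containers

- In a k8s cluster, the smallest scheduling unit is the pod (as in a pea pod)

A pod is a k8s abstraction that runs a group of one or more containers

A pod manages the resources shared by its containers:

- Storage: volumes

- Network: every pod has a unique IP in the k8s cluster

- Containers: basic container info such as image version and port mappings

When you create a k8s application, the cluster first creates a pod that contains the containers; the pod is then bound to a node, where it is accessed or eventually deleted

Every k8s node runs at least:

- kubelet: handles communication between the master and the node, and manages pods and the containers in them

- kube-proxy: forwards traffic

- A container runtime: pulls images and creates and runs container instances

Diagram: how k8s works internally, and its advantages

- So far we had a quick taste: we used k8s to create a pod running nginx and reached the nginx page from inside the cluster

- pod

- k8s supports high availability for containers

- If the application on node01 crashes, it is restarted automatically

- It also supports scaling out and in

- It provides load balancing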

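3.5 Deploy nginx again with a deployment

- Here we create a deployment resource. It is central to deploying applications on k8s; we skip the details for now and look at what it does

- Once a deployment is created, it tells k8s how to create application instances; k8s-master schedules the application onto specific nodes, which produces the pods and their container instances

- After the application is created, the deployment keeps monitoring these pods. If a node fails, the deployment controller automatically picks a better node and recreates the instances there. This self-healing ability addresses server failures.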
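The self-healing behavior described above boils down to a loop that compares the desired replica count with what is actually running. The sketch below is a toy version of that idea, not the real controller: the function name, the `replacement-N` pod names, and the round-robin node choice are all illustrative assumptions.

```python
def reconcile(desired: int, pods: dict, healthy_nodes: list) -> dict:
    """One reconcile pass: drop pods on failed nodes, then recreate
    replacements on healthy nodes until the desired count is met."""
    alive = {pod: node for pod, node in pods.items() if node in healthy_nodes}
    if not healthy_nodes:
        return alive  # nowhere to place replacements
    i = 0
    while len(alive) < desired:
        alive[f"replacement-{i}"] = healthy_nodes[i % len(healthy_nodes)]
        i += 1
    return alive

pods = {"harry-nginx-c8c9q": "k8s-node01", "harry-nginx-k5rvt": "k8s-node02"}

# Simulate k8s-node02 going down: only k8s-node01 remains healthy.
after = reconcile(2, pods, ["k8s-node01"])
print(after)
```

Running the pass again with no further failures changes nothing, which is the point: the loop only acts when actual state drifts from desired state.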

3.6 Using YAML files

Be aware that commands and parameters differ between k8s versions. This walkthrough uses k8s 1.16.2

# Check the k8s version

[root@k8s-master01 k8s-install]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.2", GitCommit:"c97fe5036ef3df2967d086711e6c0c405941e14b", GitTreeState:"clean", BuildDate:"2019-10-15T19:15:39Z", GoVersion:"go1.12.10", Compiler:"gc", Platform:"linux/amd64"}

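There are two ways to create k8s resources:

- YAML manifest files, used in production

- The command line, used for debugging

Here we create k8s resources from a YAML file:

- Write the YAML first

- Then apply it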

[root@k8s-master01 k8s-install]# touch harry-nginx.yaml

[root@k8s-master01 k8s-install]# ls

harry-nginx.yaml kubeadm.yaml kube-flannel.yml

[root@k8s-master01 k8s-install]# vim harry-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: harry-nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80
          name: mynginx # a name that can be used in place of the port number

Copy the content below. Note that each colon is followed by one space, and values must have no trailing spaces

apiVersion: apps/v1
kind: Deployment
metadata:
  name: harry-nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80

# Create the deployment defined in harry-nginx.yaml

[root@k8s-master01 k8s-install]# kubectl apply -f harry-nginx.yaml

deployment.apps/harry-nginx created

# List the pods; there should be 3 nginx pods in total (add -o wide for more detail)

[root@k8s-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

harry-nginx-5c559d5697-c8c9q 1/1 Running 0 55s

harry-nginx-5c559d5697-k5rvt 1/1 Running 0 55s

my-nginx-576bb7cb54-m9b2m 1/1 Running 0 78m

- You can test all 3 nginx containers; they are reachable only through their cluster-internal IPs

[root@k8s-master01 k8s-install]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE

harry-nginx-5c559d5697-c8c9q 1/1 Running 0 4m38s 10.244.1.3 k8s-node01

harry-nginx-5c559d5697-k5rvt 1/1 Running 0 4m38s 10.244.2.2 k8s-node02

my-nginx-576bb7cb54-m9b2m 1/1 Running 0 81m 10.244.1.2 k8s-node01

curl -I 10.244.1.3

curl -I 10.244.2.2

curl -I 10.244.1.2

[root@k8s-master01 k8s-install]# curl -I 10.244.1.3

HTTP/1.1 200 OK

Server: nginx/1.21.6

Date: Sat, 02 Apr 2022 05:00:10 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Tue, 25 Jan 2022 15:26:06 GMT

Connection: keep-alive

ETag: "61f0168e-267"

Accept-Ranges: bytes

On each node, check the Docker container instances

docker ps |grep nginx

# k8s-node01

[root@k8s-node01 ~]# docker ps |grep nginx

fd3c06059a83 53722defe627 "/docker-entrypoint.…" 6 minutes ago Up 6 minutes k8s_nginx_harry-nginx-5c559d5697-c8c9q_default_2cfcc866-93a8-42ff-a805-fb991db7d171_0

6b3bd1a3a994 registry.aliyuncs.com/google_containers/pause:3.1 "/pause" 6 minutes ago Up 6 minutes k8s_POD_harry-nginx-5c559d5697-c8c9q_default_2cfcc866-93a8-42ff-a805-fb991db7d171_0

cb62569d3bcf nginx "/docker-entrypoint.…" About an hour ago Up About an hour k8s_my-nginx_my-nginx-576bb7cb54-m9b2m_default_5888f807-0b3a-474d-86cf-8e1c7d6bb895_0

9c9971e61f09 registry.aliyuncs.com/google_containers/pause:3.1 "/pause" About an hour ago Up About an hour k8s_POD_my-nginx-576bb7cb54-m9b2m_default_5888f807-0b3a-474d-86cf-8e1c7d6bb895_0

# k8s-node02

[root@k8s-node02 ~]# docker ps |grep nginx

9d6b6f4f802c nginx "/docker-entrypoint.…" 5 minutes ago Up 5 minutes k8s_nginx_harry-nginx-5c559d5697-k5rvt_default_0c1cb973-5c07-4a74-a084-51986dd1e935_0

1f68204e8273 registry.aliyuncs.com/google_containers/pause:3.1 "/pause" 6 minutes ago Up 6 minutes k8s_POD_harry-nginx-5c559d5697-k5rvt_default_0c1cb973-5c07-4a74-a084-51986dd1e935_0

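3.7 Service load balancing

- k8s has a lot of more advanced material

- The service resource

Here is the question: you are now running an application as a deployment, so how do you reach this nginx from a browser?

From the earlier steps we can already use a deployment to create a group of Pods that provide a highly available service. Each Pod gets its own Pod IP, but problems remain:

- A Pod IP is a virtual IP visible only inside the cluster; it is not reachable from outside

- A Pod IP disappears when its Pod is destroyed. When a ReplicaSet scales Pods up and down, Pod IPs can change at any time, which makes the service hard to reach

- So reaching a service through pod IPs is basically impractical. The solution is a new resource, the service, which provides load balancing

Create a test service

vim service-demo.yaml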

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  selector:
    app: nginx
  ports:
  - name: harry-nginx
    protocol: TCP
    port: 80
    targetPort: 80
    # targetPort can also be a port name (for example mynginx) when the container port in the Deployment is given that same name

# Create the service

[root@k8s-master01 k8s-install]# kubectl create -f service-demo.yaml

service/myservice created

# List the deployments

[root@k8s-master01 k8s-install]# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

harry-nginx 2/2 2 2 31m

my-nginx 1/1 1 1 35m

# List the services; myservice is now there

[root@k8s-master01 k8s-install]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 177m

myservice ClusterIP 10.105.44.135 <none> 80/TCP 14m

# Show the service details

[root@k8s-master01 k8s-install]# kubectl describe svc myservice

Name: myservice

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=nginx

Type: ClusterIP

IP: 10.105.44.135

Port: harry-nginx 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.4:80,10.244.2.3:80

Session Affinity: None

Events: <none>

# How to delete the service

[root@k8s-master01 k8s-install]# kubectl delete -f service-demo.yaml

service "myservice" deleted

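Explanation:

https://kubernetes.io/zh/docs/concepts/services-networking/service

Service is one of the k8s resource types (list them with kubectl get services). A service is an abstraction over a group of pods and acts as their load balancer (LB): it distributes requests to the matching pods. The service gives this LB an IP, usually called the cluster IP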
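How a service finds its pods can be sketched in a few lines: it keeps every pod whose labels match the selector and spreads requests across them. The pod names and IPs below come from the `kubectl get pods -o wide` listing earlier. The `run: my-nginx` label on the first pod is an assumption about what `kubectl run` attached, and the round-robin choice is a simplification of what kube-proxy actually does.

```python
import itertools

pods = [
    {"name": "my-nginx-576bb7cb54-m9b2m", "ip": "10.244.1.2",
     "labels": {"run": "my-nginx"}},  # assumed label, not app=nginx
    {"name": "harry-nginx-5c559d5697-c8c9q", "ip": "10.244.1.3",
     "labels": {"app": "nginx"}},
    {"name": "harry-nginx-5c559d5697-k5rvt", "ip": "10.244.2.2",
     "labels": {"app": "nginx"}},
]

def endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Return ip:port for every pod whose labels contain the whole selector."""
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]

eps = endpoints({"app": "nginx"}, pods, 80)
print(eps)

# Simplified load balancing: hand out backends in turn.
backend = itertools.cycle(eps)
print([next(backend) for _ in range(3)])
```

Only the two harry-nginx pods match, which is consistent with the two addresses listed under Endpoints in the `kubectl describe svc myservice` output.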

A Service object uses its selector to match labels and find the corresponding Pods:

[root@k8s-master01 k8s-install]# kubectl api-resources |grep "^services"

services svc true Service

[root@k8s-master01 k8s-install]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

harry-nginx 2/2 2 2 8m32s

my-nginx 1/1 1 1 85m

# About deletion: do not run these unless you need to

# (How to delete a deployment resource? kubectl delete deployments harry-nginx)

# First scale the pods down to zero

kubectl scale --replicas=0 deployment/harry-nginx

kubectl delete deployment harry-nginx

# Delete the deployment

kubectl delete deployment harry-nginx

# Delete a pod

kubectl delete pod han-nginx-5c559d5697-2nzsh

# Delete the svc

kubectl delete svc harry-nginx -n default

# Check the port mapping, similar to docker port <container id>

[root@k8s-master01 k8s-install]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

harry-nginx LoadBalancer 10.110.143.254 <pending> 80:30399/TCP 36s app=nginx

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 100m <none>

# Open port 30399 on the host to reach the application inside the pod

http://39.101.140.126:30399

3.8 Accessing the application

You can use any node in the cluster

Format: node-ip:service-port

# k8s-master01

http://39.101.140.126:30399/

# k8s-node01

http://8.142.174.144:30399/

# k8s-node02

http://8.142.175.44:30399/
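The three URLs above differ only in the node IP, because the same node port is opened on every node. A short sketch can build them and reject ports outside the node port range. The range 30000-32767 used here is the Kubernetes default, taken as an assumption since this article never sets it; the function name is illustrative.

```python
# Assumed default node port range (30000-32767 inclusive).
NODE_PORT_RANGE = range(30000, 32768)

def node_port_urls(node_ips: list, node_port: int, scheme: str = "http") -> list:
    """Build one URL per node for a service exposed on node_port."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the node port range")
    return [f"{scheme}://{ip}:{node_port}/" for ip in node_ips]

nodes = ["39.101.140.126", "8.142.174.144", "8.142.175.44"]
for url in node_port_urls(nodes, 30399):
    print(url)
```

On cloud servers such as the ones used here, the port also has to be allowed in the provider's security group before these URLs respond.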

You can watch the requests arrive in the pod logs

[root@k8s-master01 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

harry-nginx-5c559d5697-c8c9q 1/1 Running 0 25m 10.244.1.3 k8s-node01

harry-nginx-5c559d5697-k5rvt 1/1 Running 0 25m 10.244.2.2 k8s-node02

my-nginx-576bb7cb54-m9b2m 1/1 Running 0 102m 10.244.1.2 k8s-node01

[root@k8s-master01 k8s-install]# kubectl logs -f pod-name

[root@k8s-master01 k8s-install]# kubectl logs -f harry-nginx-5c559d5697-c8c9q

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2022/04/02 04:54:20 [notice] 1#1: using the "epoll" event method

2022/04/02 04:54:20 [notice] 1#1: nginx/1.21.6

2022/04/02 04:54:20 [notice] 1#1: built by gcc 10.3.1 20211027 (Alpine 10.3.1_git20211027)

2022/04/02 04:54:20 [notice] 1#1: OS: Linux 3.10.0-1160.53.1.el7.x86_64

2022/04/02 04:54:20 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2022/04/02 04:54:20 [notice] 1#1: start worker processes

2022/04/02 04:54:20 [notice] 1#1: start worker process 32

2022/04/02 04:54:20 [notice] 1#1: start worker process 33

10.244.0.0 - - [02/Apr/2022:05:00:10 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0" "-"

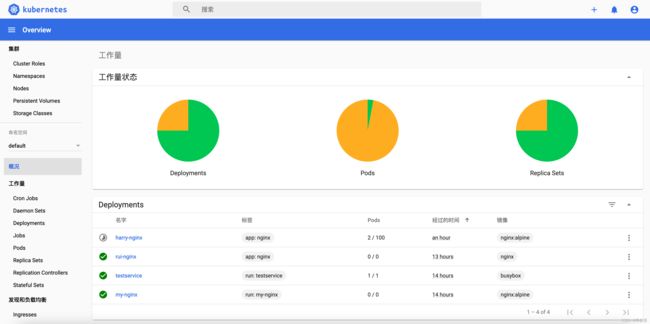

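3.9 Other deployment features

- Scaling out and in

- (Adding or removing replicas, like adding backend nodes to a cluster to increase load-balancing capacity)

- Rolling upgrades

- To upgrade the 2 nginx containers we deployed by hand, we would have to upgrade and restart nginx in each container one by one

- With a k8s deployment resource, a version upgrade is very simple

- So this is the killer feature of k8s: the initial installation takes some effort, but once it is running, maintaining large, complex applications becomes easy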
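The rolling upgrade mentioned above replaces pods gradually, so the service never drops below its replica count. The sketch below is a toy model under one assumed policy: start one new pod, then retire one old pod, and repeat. The labels "old" and "new" stand in for image versions and are placeholders, not real tags.

```python
def rolling_update(replicas: int, old: str = "old", new: str = "new") -> list:
    """Return the pod set after every step of a one-at-a-time rolling update."""
    pods = [old] * replicas
    steps = []
    for _ in range(replicas):
        pods.append(new)          # surge: bring up one new pod first
        steps.append(list(pods))
        pods.remove(old)          # then retire one old pod
        steps.append(list(pods))
    return steps

for step in rolling_update(2):
    print(step)
```

At every step at least 2 pods are serving and at most 3 exist, which is why users see no interruption during the upgrade.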

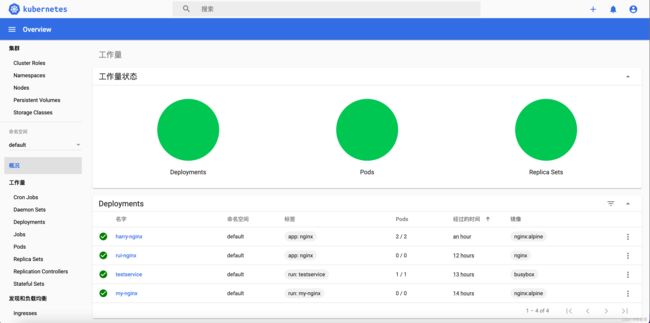

IV. Deploying the dashboard

https://github.com/kubernetes/dashboard

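4.1 Download the k8s YAML file

The Kubernetes dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.

# Download the k8s YAML file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc5/aio/deploy/recommended.yaml

# Edit recommended.yaml and change the network settings of the service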

[root@k8s-master01 k8s-install]# vi recommended.yaml

spec:
  ports:
  - port: 443
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort # add this so the dashboard inside the cluster is reachable through the host's IP

4.2 Create the resources and test access

[root@k8s-master01 k8s-install]# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

# Check the status; ContainerCreating means the containers are still being created, so wait a moment

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get po

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b8b58dc8b-xfrzt 0/1 ContainerCreating 0 15s

kubernetes-dashboard-866f987876-kpjfg 0/1 ContainerCreating 0 15s

# Watch in real time until the status changes to Running

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get po --watch

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b8b58dc8b-xfrzt 0/1 ContainerCreating 0 58s

kubernetes-dashboard-866f987876-kpjfg 0/1 ContainerCreating 0 58s

kubernetes-dashboard-866f987876-kpjfg 1/1 Running 0 83s

dashboard-metrics-scraper-7b8b58dc8b-xfrzt 1/1 Running 0 101s

# List the pods in all namespaces

[root@k8s-master01 k8s-install]# kubectl get po -o wide -A --watch

# Once the containers are running, look up the Service resources before testing access

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.98.91.243 <none> 8000/TCP 3m21s

kubernetes-dashboard NodePort 10.97.53.64 <none> 443:32055/TCP 3m21s

# The dashboard is exposed as 443:32055 (Service port 443, NodePort 32055)

# The host is now listening on that port

[root@k8s-master01 k8s-install]# netstat -tunle|grep 32055

tcp6 0 0 :::32055 :::* LISTEN 0 364647

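Now open the page. Note that it must be accessed over HTTPS, and remember to open the port first (for example in your firewall or cloud security group). Do not forget this step!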

https://39.101.140.126:32055/

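The dashboard serves a self-signed certificate that browsers do not trust. Firefox lets you add an exception and continue; other browsers may refuse to open the page.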

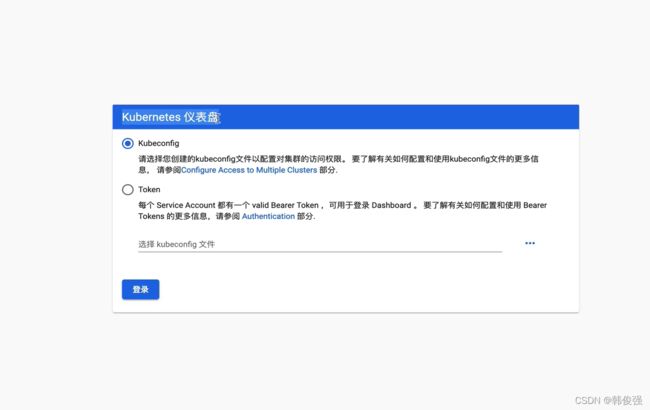
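If the page does not load, it helps to separate "the port is closed" from "the certificate is rejected". The sketch below is one way to check both from any machine with Python: `port_open` tests plain TCP reachability, and `fetch_status` sends an HTTPS request with certificate verification disabled, which is only acceptable here because the dashboard certificate is self-signed. Both function names are illustrative.

```python
import http.client
import socket
import ssl


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def fetch_status(host, port, timeout=5.0):
    """GET / over HTTPS without verifying the certificate; return the status code."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before verify_mode
    ctx.verify_mode = ssl.CERT_NONE  # accept the self-signed certificate
    conn = http.client.HTTPSConnection(host, port, timeout=timeout, context=ctx)
    try:
        conn.request("GET", "/")
        return conn.getresponse().status
    finally:
        conn.close()
```

For the address used in this article you would call `port_open("39.101.140.126", 32055)` first. If it returns False, the port is most likely still blocked; if it returns True, `fetch_status` shows whether the dashboard itself answers.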

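4.3 Create an account to access the dashboard

This step touches the k8s authentication and authorization (RBAC) system; for now, just follow along.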

[root@k8s-master01 k8s-install]# vim admin.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: admin

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: admin

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin

  namespace: kubernetes-dashboard

# Create the resources

[root@k8s-master01 k8s-install]# kubectl create -f admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin created

serviceaccount/admin created

# Verify that the admin ServiceAccount was created

[root@k8s-master01 k8s-install]# kubectl get serviceaccount -n kubernetes-dashboard

NAME SECRETS AGE

admin 1 107s

default 1 32m

kubernetes-dashboard 1 32m

# Show its details

[root@k8s-master01 k8s-install]# kubectl describe serviceaccount admin -n kubernetes-dashboard

Name: admin

Namespace: kubernetes-dashboard

Labels: k8s-app=kubernetes-dashboard

Annotations: <none>

Image pull secrets: <none>

Mountable secrets: admin-token-dwpb2

Tokens: admin-token-dwpb2

Events: <none>

# Look up the secret; admin-token-dwpb2 is used in the next step

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret |grep admin-token

admin-token-dwpb2 kubernetes.io/service-account-token 3 4m21s

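# Retrieve the token with the following command

# Remember to replace the secret name with your own

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret admin-token-dwpb2 -o jsonpath='{.data.token}' | base64 -d

# Copy the generated token and paste it into the token field on the login page from section 4.2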

eyJhbGciOiJSUzI1NiIsImtpZCI6InBkLW1nMV8wQXZ2MjhZZlV1MzNLMElBY0RkQzJrdXdQaDJWdC10UHUzYk0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi12cTZicyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2Vydm1bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImJjNmI2Y2NkLTU5MTMtNDg2MC1iZDY3LTMyYTEyYjcyMDk0NyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.u4n15Mi3Nr7CjXqx9PgEQGyZPgYnd9bdOOMjDXJ1Q81Fu8XfglUTJKM0dx2QWEMa0z0SuFZeejIFz7tR0HbVK-fRhgJL6UEsXK1iztZgTOmCk0utRvikWHMrQOO1F7NPcImGC4gDKwE7LaSfjG-JUjlY6qpnwDP0o3m0M_aGJd4KT8C9BMzQ7gRyoDY1MkMg8CMT4on4z8cMmO59GS4xdpat8HLh8ugV0eOAlygoGwvLbKe7xd9Otn3MQbfOh-rN3OI-2kc5-Dp4MpB_b-3f-oy0T7rjnnypWoCL3v0VFVypPZAdWFFfHR2Slxt_M31BpgAMoxLu0pnC0P
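The token is a JWT: three base64url segments separated by dots, where the middle segment is a JSON payload naming the ServiceAccount. Before pasting a token into the dashboard, it can be useful to confirm that it belongs to the account you expect. The sketch below decodes the payload without verifying the signature, so it is an inspection aid only. `secret_token` mirrors the `jsonpath` plus `base64 -d` step above, assuming the input is the output of `kubectl get secret NAME -o json`. Both helper names are illustrative.

```python
import base64
import json


def b64url_decode(segment):
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def jwt_claims(token):
    """Return the payload claims of a JWT (the signature is NOT verified)."""
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    return json.loads(b64url_decode(parts[1]))


def secret_token(secret_json):
    """Extract and decode .data.token from a Secret dumped as JSON."""
    secret = json.loads(secret_json)
    # Values under .data are standard base64, hence `base64 -d` in the shell.
    return base64.b64decode(secret["data"]["token"]).decode()
```

For the account created in this section, the `sub` claim should read `system:serviceaccount:kubernetes-dashboard:admin`. Treat the token like a password: with the `cluster-admin` binding above, it grants full control of the cluster.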