[CVPR2023] CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion

CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion, CVPR2023

Commentary: CVPR 2023 | A multi-task multi-modality image fusion method combining Transformer and CNN (qq.com)

Paper: https://arxiv.org/abs/2211.14461

Code: https://github.com/Zhaozixiang1228/MMIF-CDDFuse

Highlights

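- Combines CNNs with the currently popular Transformer;

- Brings feature disentanglement into image fusion: cross-modal information is decomposed into modality-shared and modality-specific parts, similar to fusion models such as DRF;

- Uses two-stage training whose first stage is self-supervised, with the source images serving as both input and target, an idea also used by SD-Net and SFA-Fuse;

- Validates fusion quality on high-level vision tasks.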

Introduction

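Multi-modality image fusion aims to combine the strengths of each modality, such as physically meaningful highlighted regions and texture details. To model cross-modal features effectively and decompose them into the desired modality-shared and modality-specific features, the paper proposes Correlation-Driven feature Decomposition Fusion (CDDFuse). The model works in two stages. In the first stage, CDDFuse uses Restormer blocks to extract cross-modal shallow features and then applies a dual-branch Transformer-CNN feature extractor: Lite Transformer (LT) blocks use long-range attention to handle low-frequency global features, while Invertible Neural Network (INN) blocks extract high-frequency local features. Because the modalities embed shared semantic information, the low-frequency features should be correlated across modalities and the high-frequency features uncorrelated; a correlation-driven loss is therefore proposed so that the network decomposes the features more effectively. In the second stage, fusion layers built from the same LT and INN blocks produce the fused image.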

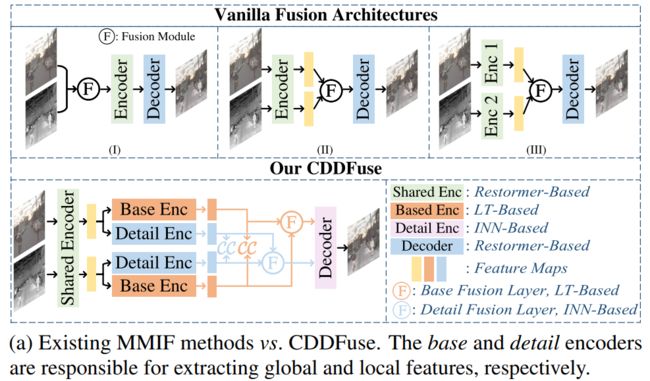

Many existing multi-modality image fusion models adopt an autoencoder structure.

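This approach has three drawbacks:

- CNNs are poorly interpretable and hard to control, and their cross-modal feature extraction is insufficient. In the figure above, the first two structures share one encoder across modalities, which makes modality-specific features hard to extract, while the third, dual-branch structure ignores the properties the modalities have in common;

- Context-independent CNNs only extract local information within a relatively small receptive field and struggle to capture global information, so it is unclear whether the inductive bias of CNNs is enough to extract features fully from inputs of every modality;

- Forward propagation through the network loses high-frequency information.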

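The paper explores a principled paradigm for both feature extraction and fusion. First, a correlation constraint is placed on the extracted features to make extraction more controllable and interpretable. The hypothesis is that, in multi-modality image fusion, the input features of the two modalities are correlated at low frequencies, representing information shared across modalities, and uncorrelated at high frequencies, representing information unique to each modality. In infrared-visible (IR-VIS) fusion, for example, both images show the same scene, so their low-frequency content carries statistically shared information such as the background and large-scale environmental features, while their high-frequency content is independent: the texture details of the visible image and the thermal information of the infrared image are each specific to their modality. Cross-modal feature extraction is therefore encouraged by increasing the correlation between the low-frequency features and decreasing the correlation between the high-frequency features.

Second, Transformers have been very successful in vision tasks thanks to self-attention and global feature extraction, but they often require substantial computational resources, so the paper combines the Transformer with the local-context extraction and computational efficiency of CNNs.

Finally, to avoid losing the desired high-frequency input information, Invertible Neural Network (INN) blocks are introduced. An INN is invertible by design, so its input and output features can each be recovered from the other; this prevents information loss and matches the goal of preserving high-frequency features in the fused image.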
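To make the low-/high-frequency hypothesis concrete, here is a toy numpy illustration on synthetic data (not from the paper or the repository). Two made-up "modalities" share a smooth background and each adds its own independent fine texture; a box blur, an assumed stand-in for the learned base/detail split, separates the low and high frequencies, and the Pearson correlation of each part is compared.

```python
import numpy as np

def box_blur(img, k=9):
    """Low-pass filter: mean over a k x k window with edge padding (same size output)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def cc(a, b):
    """Pearson correlation coefficient between two arrays."""
    return float(np.corrcoef(a.ravel(), b.ravel())[0, 1])

rng = np.random.default_rng(0)
h, w = 64, 64
yy, xx = np.mgrid[0:h, 0:w]
# Hypothetical shared scene content: a smooth, large-scale background.
scene = np.sin(xx / 10.0) + np.cos(yy / 13.0)
# Each toy modality = shared scene + its own independent fine-scale texture.
ir = scene + 0.5 * rng.standard_normal((h, w))
vis = 0.8 * scene + 0.5 * rng.standard_normal((h, w))

ir_low, vis_low = box_blur(ir), box_blur(vis)
ir_high, vis_high = ir - ir_low, vis - vis_low

cc_low = cc(ir_low, vis_low)     # close to 1: shared background
cc_high = cc(ir_high, vis_high)  # close to 0: independent details
print(f"CC(low) = {cc_low:.3f}, CC(high) = {cc_high:.3f}")
```

In CDDFuse the split is learned rather than fixed, and the correlation-driven loss is what pushes the learned base features toward the first behavior and the detail features toward the second.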

Method

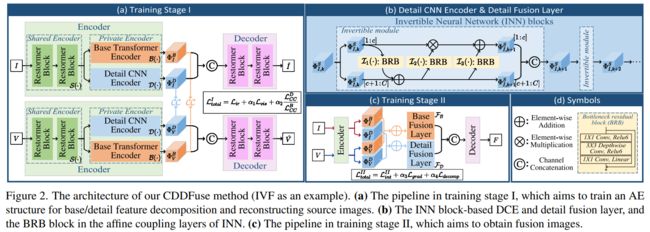

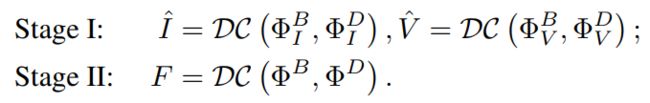

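The overall architecture (Fig. 2) consists of four modules: a dual-branch encoder for feature extraction and decomposition; a decoder that reconstructs the source images in training stage I or produces the fused image in training stage II; and the base and detail fusion layers, which fuse features of different frequencies.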

Encoder

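The encoder has three parts: a Restormer block-based shared feature encoder (SFE), a Lite Transformer (LT) block-based base Transformer encoder (BTE), and an Invertible Neural Network (INN) block-based detail CNN encoder (DCE); BTE and DCE together form the long-short range encoder. The inputs are a three-channel visible image $V$ and a single-channel infrared image $I$, and $S$, $B$, and $D$ denote SFE, BTE, and DCE, respectively. SFE, which extracts the shared features, produces shallow features from each input:

$$\Phi^S_I = S(I),\qquad \Phi^S_V = S(V)$$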

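SFE uses Restormer blocks because they apply self-attention across the feature (channel) dimension rather than across spatial positions. The attention map is therefore $C \times C$ instead of $HW \times HW$, so global features and cross-modal shallow features can be extracted at a cost that grows only linearly with the number of pixels.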
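The channel-wise ("transposed") attention can be sketched in numpy as below. This is a simplified single-head version under stated assumptions: the $(C, C)$ matrices `w_q`, `w_k`, `w_v` are hypothetical stand-ins for 1×1 convolutions, and the depthwise 3×3 convolution and multi-head split used by `Attention` in net.py are left out. The point it shows is that the attention map stays $C \times C$ whatever the image size.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def l2_normalize(x, axis=-1, eps=1e-12):
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def channel_attention(x, w_q, w_k, w_v, temperature=1.0):
    """Single-head attention across channels for a feature map x of shape (C, H, W)."""
    c, h, w = x.shape
    tokens = x.reshape(c, h * w)             # one row per channel
    q = l2_normalize(w_q @ tokens)           # (C, HW), rows L2-normalized as in net.py
    k = l2_normalize(w_k @ tokens)           # (C, HW)
    v = w_v @ tokens                         # (C, HW)
    attn = softmax((q @ k.T) * temperature)  # (C, C): channel-to-channel weights
    out = attn @ v                           # (C, HW)
    return out.reshape(c, h, w), attn

rng = np.random.default_rng(0)
C = 8
w_q, w_k, w_v = (rng.standard_normal((C, C)) for _ in range(3))

for size in (16, 64):
    x = rng.standard_normal((C, size, size))
    out, attn = channel_attention(x, w_q, w_k, w_v)
    print(size, out.shape, attn.shape)  # attn is (8, 8) for both sizes
```

Computing `q @ k.T` costs on the order of $C^2 \cdot HW$ operations, linear in the pixel count, whereas spatial self-attention would build an $HW \times HW$ map.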

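BTE extracts low-frequency base features from the shared shallow features $\Phi^S_I$ and $\Phi^S_V$:

$$\Phi^B_I = B(\Phi^S_I),\qquad \Phi^B_V = B(\Phi^S_V)$$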

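To capture long-range dependencies, BTE uses a Transformer with spatial self-attention. To balance performance and computational efficiency, the LT block is adopted as the basic unit of BTE, which reduces the parameter count while maintaining performance.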

DCE, in contrast to BTE, extracts high-frequency detail features:

$$\Phi^D_I = D(\Phi^S_I),\qquad \Phi^D_V = D(\Phi^S_V)$$

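Since edge and texture information also matters for fusion, DCE should retain as much detail as possible. Because an INN's input and output can each be recovered from the other, the input information is preserved, so INN blocks can serve as a lossless feature extractor in DCE. Concretely, the INN is built from affine coupling layers. Writing $\Phi_k$ for the input of the $k$-th invertible layer ($\Phi_0$ being the shallow SFE features) and splitting its $C$ channels at index $c$, each layer computes

$$\Phi_{k+1}[c+1:C] = \Phi_k[c+1:C] + \mathcal{I}_1\big(\Phi_k[1:c]\big)$$

$$\Phi_{k+1}[1:c] = \Phi_k[1:c] \odot \exp\Big(\mathcal{I}_2\big(\Phi_{k+1}[c+1:C]\big)\Big) + \mathcal{I}_3\big(\Phi_{k+1}[c+1:C]\big)$$

where $\odot$ is the elementwise product and $\mathcal{I}_1$, $\mathcal{I}_2$, $\mathcal{I}_3$ correspond to `theta_phi`, `theta_rho`, and `theta_eta` in `DetailNode` (net.py).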

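These transformations correspond to Fig. 2(b). Each of the mappings is a BRB (bottleneck residual block, borrowed from MobileNetV2; `InvertedResidualBlock` in net.py), whose structure is shown in Fig. 2(d). Because invertibility comes from the coupling structure rather than from the BRBs themselves, each invertible layer, BRBs included, acts as a lossless information mapping.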
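The coupling step in `DetailNode.forward` (net.py) is `z2 = z2 + phi(z1)` followed by `z1 = z1 * exp(rho(z2)) + eta(z2)`. The numpy sketch below runs one such layer forward and then inverts it exactly, which is why the detail branch can be called lossless. The sub-networks are hypothetical random stand-ins for the BRBs, and the 1×1 `shffleconv` that net.py applies before each coupling is left out.

```python
import numpy as np

rng = np.random.default_rng(0)
C_HALF = 4  # channels per half; DetailNode in net.py uses 32 (half of dim=64)

# Hypothetical stand-ins for theta_phi, theta_rho, theta_eta (the BRBs).
# eta uses ReLU, which is not invertible by itself: invertibility comes from the coupling.
W1, W2, W3 = (rng.standard_normal((C_HALF, C_HALF)) for _ in range(3))

def phi(z):
    return np.tanh(W1 @ z)

def rho(z):
    return np.tanh(W2 @ z)

def eta(z):
    return np.maximum(W3 @ z, 0.0)

def coupling_forward(z1, z2):
    z2 = z2 + phi(z1)                    # additive coupling
    z1 = z1 * np.exp(rho(z2)) + eta(z2)  # affine coupling, conditioned on the new z2
    return z1, z2

def coupling_inverse(y1, y2):
    z1 = (y1 - eta(y2)) * np.exp(-rho(y2))  # y2 is known, so rho/eta can be re-evaluated
    z2 = y2 - phi(z1)                        # then undo the additive step
    return z1, z2

# Features laid out as (channels, pixels).
x1 = rng.standard_normal((C_HALF, 100))
x2 = rng.standard_normal((C_HALF, 100))
y1, y2 = coupling_forward(x1, x2)
r1, r2 = coupling_inverse(y1, y2)
print(np.abs(r1 - x1).max(), np.abs(r2 - x2).max())  # round-off level
```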

Fusion layers

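The fusion layers fuse the features extracted by the encoder. Since the inductive bias for fusing base/detail features should match that of the encoder's base/detail extraction, the base and detail fusion layers $\mathcal{F}_B$ and $\mathcal{F}_D$ are built from LT and INN blocks, respectively:

$$\Phi^B = \mathcal{F}_B(\Phi^B_I + \Phi^B_V),\qquad \Phi^D = \mathcal{F}_D(\Phi^D_I + \Phi^D_V)$$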

Decoder

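The decoder $\mathcal{DC}$ concatenates the decomposed features along the channel dimension as its input. In training stage I it outputs the reconstructed source images, and in training stage II the fused image:

$$\text{Stage I:}\quad \hat{I} = \mathcal{DC}(\Phi^B_I, \Phi^D_I),\qquad \hat{V} = \mathcal{DC}(\Phi^B_V, \Phi^D_V)$$

$$\text{Stage II:}\quad F = \mathcal{DC}(\Phi^B, \Phi^D)$$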

Because its inputs are cross-modal, multi-frequency features, the decoder mirrors SFE and uses Restormer blocks as its basic unit.

Two-stage training

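Since there is no ground truth, the paper follows RFN-Nest and trains in two stages. In stage I, the infrared and visible images are fed to SFE to extract shallow features, and BTE and DCE then extract the low-frequency (base) and high-frequency (detail) features. The infrared base and detail features are concatenated, as are the visible ones, and each pair is sent to the decoder to reconstruct the original infrared and visible image, respectively. In stage II the encoder is the same. The difference, shown in Fig. 2(c), is that after the base and detail features are extracted, the infrared and visible base features are added together, and likewise the detail features; each sum passes through its fusion layer (base or detail), the two outputs are concatenated along the channel dimension, and the decoder turns the result into the fused image F.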
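The wiring of the two stages can be sketched as follows. Every function here is a hypothetical placeholder (not the repo's API): `encoder` stands in for `Restormer_Encoder` (SFE + BTE + DCE), `decoder` for `Restormer_Decoder`, and the fusion layers are identities. Only the data flow is meant to match the description, not the numerics.

```python
import numpy as np

DIM = 4  # feature channels (net.py uses dim=64)

def encoder(img):
    """Placeholder for SFE + BTE + DCE: returns (base, detail) feature maps."""
    feat = np.repeat(img[None], DIM, axis=0)  # (DIM, H, W) "shallow features"
    base = 0.5 * feat                         # stand-in for the BTE output
    detail = feat - base                      # stand-in for the DCE output
    return base, detail

def base_fuse(x):
    """Identity placeholder for the LT-based base fusion layer."""
    return x

def detail_fuse(x):
    """Identity placeholder for the INN-based detail fusion layer."""
    return x

def decoder(base, detail):
    """Placeholder decoder: concatenate along channels, then collapse to one image."""
    feats = np.concatenate([base, detail], axis=0)  # (2*DIM, H, W)
    return feats.sum(axis=0) / DIM                   # stands in for Restormer blocks + conv head

ir = np.random.default_rng(0).random((8, 8))
vis = np.random.default_rng(1).random((8, 8))

# Stage I: each modality is reconstructed from its own base + detail features.
b_i, d_i = encoder(ir)
b_v, d_v = encoder(vis)
ir_hat, vis_hat = decoder(b_i, d_i), decoder(b_v, d_v)

# Stage II: same encoder; base and detail features of the two modalities are summed,
# passed through their own fusion layer, then decoded together into one image F.
fused = decoder(base_fuse(b_i + b_v), detail_fuse(d_i + d_v))
print(ir_hat.shape, vis_hat.shape, fused.shape)
```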

Loss functions

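The stage I loss is

$$\mathcal{L}^{I}_{total} = \mathcal{L}_{ir} + \alpha_1 \mathcal{L}_{vis} + \alpha_2 \mathcal{L}_{decomp}$$

where $\alpha_1$ and $\alpha_2$ are weighting coefficients.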

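The first two terms are the infrared and visible reconstruction losses, and the third is the feature decomposition loss. The reconstruction terms ensure that no information is lost through encoding and decoding, while the decomposition term drives the base/detail split.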

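The infrared reconstruction loss is

$$\mathcal{L}_{ir} = \|I - \hat{I}\|_2^2 + \mathcal{L}_{SSIM}(I, \hat{I}),\qquad \mathcal{L}_{SSIM}(x, y) = 1 - \mathrm{SSIM}(x, y)$$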

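The visible reconstruction loss $\mathcal{L}_{vis}$ has the same form as the infrared one, with the visible image in place of the infrared image.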
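Below is a numpy sketch of a reconstruction loss of the form MSE + (1 − SSIM), taken here as an assumption about the loss's shape. For brevity it uses a simplified SSIM computed over the whole image as a single window, with the usual constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$; standard SSIM instead averages over local (typically Gaussian) windows, and any per-term weights are omitted.

```python
import numpy as np

def ssim_global(x, y, data_range=1.0):
    """SSIM over the whole image as one window (simplified, not windowed SSIM)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

def recon_loss(src, rec):
    """Pixel-wise MSE plus (1 - SSIM) between a source image and its reconstruction."""
    return float(np.mean((src - rec) ** 2) + (1.0 - ssim_global(src, rec)))

rng = np.random.default_rng(0)
ir = rng.random((32, 32))
noisy = np.clip(ir + 0.1 * rng.standard_normal(ir.shape), 0.0, 1.0)
print(recon_loss(ir, ir))     # 0 up to round-off: perfect reconstruction
print(recon_loss(ir, noisy))  # positive: degraded reconstruction
```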

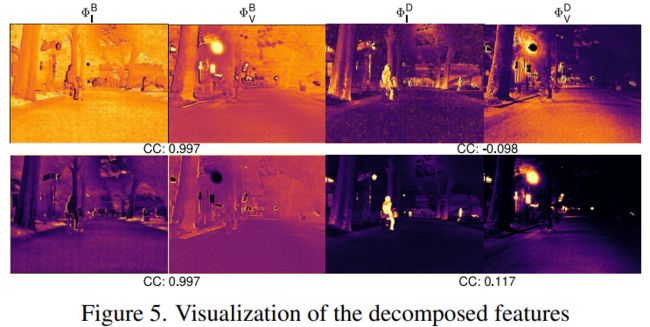

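The feature decomposition loss is

$$\mathcal{L}_{decomp} = \frac{\big(\mathrm{CC}(\Phi^D_I, \Phi^D_V)\big)^2}{\mathrm{CC}(\Phi^B_I, \Phi^B_V) + \epsilon}$$

where $\Phi^B_I, \Phi^B_V$ and $\Phi^D_I, \Phi^D_V$ are the base and detail features of the infrared and visible inputs.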

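Here CC is the correlation coefficient, a metric commonly used in image fusion. This term implements the goal described earlier: shared features should be close to each other and modality-specific features far apart, and the correlation coefficient measures that closeness. The low-frequency base features therefore appear in the denominator and the high-frequency detail features in the numerator, so minimizing the loss raises the base-feature correlation and drives the detail-feature correlation toward zero; $\epsilon$ keeps the denominator positive, since CC lies in $[-1, 1]$. The effect of this decomposition is shown in the figure below.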
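A numpy sketch of the decomposition loss, (CC of the detail features)² divided by (CC of the base features + ε), with ε = 1.01 taken as an assumed value that keeps the denominator positive. The feature tensors are random stand-ins chosen to contrast a well-decomposed case with a poorly decomposed one.

```python
import numpy as np

def cc(a, b, eps=1e-8):
    """Pearson correlation coefficient between two feature tensors (flattened)."""
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    return float((a @ b) / (np.sqrt((a @ a) * (b @ b)) + eps))

def decomp_loss(base_i, base_v, detail_i, detail_v, epsilon=1.01):
    """Small when base features correlate and detail features do not."""
    return cc(detail_i, detail_v) ** 2 / (cc(base_i, base_v) + epsilon)

rng = np.random.default_rng(0)
shape = (64, 16, 16)  # (channels, H, W), hypothetical feature size
shared = rng.standard_normal(shape)

# Well decomposed: bases nearly identical, details independent.
good = decomp_loss(shared, shared + 0.1 * rng.standard_normal(shape),
                   rng.standard_normal(shape), rng.standard_normal(shape))
# Poorly decomposed: bases independent, details strongly correlated.
bad = decomp_loss(rng.standard_normal(shape), rng.standard_normal(shape),
                  shared, shared + 0.1 * rng.standard_normal(shape))
print(good, bad)  # good is far smaller than bad
```

Squaring the numerator penalizes detail correlation in either direction, positive or negative.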

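The stage II loss is

$$\mathcal{L}^{II}_{total} = \mathcal{L}_{int} + \alpha_3 \mathcal{L}_{grad} + \alpha_4 \mathcal{L}_{decomp}$$

$$\mathcal{L}_{int} = \frac{1}{HW}\big\|F - \max(I, V)\big\|_1,\qquad \mathcal{L}_{grad} = \frac{1}{HW}\Big\||\nabla F| - \max\big(|\nabla I|, |\nabla V|\big)\Big\|_1$$

where $H \times W$ is the image size, $\nabla$ is the Sobel gradient operator, and $\max$ is taken elementwise.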
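A numpy sketch of an intensity + gradient fusion loss of this kind, under stated assumptions: an L1 intensity term against the elementwise maximum of the two sources, plus an L1 term comparing Sobel gradient magnitudes (computed here as |gx| + |gy|) against the larger source gradient. The weight `alpha` is hypothetical, and the decomposition term is omitted.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter3x3(img, kernel):
    """3x3 cross-correlation with edge padding (same output size)."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(kernel[i, j] * p[i:i + h, j:j + w] for i in range(3) for j in range(3))

def grad_mag(img):
    """Sobel gradient magnitude approximated as |gx| + |gy|."""
    return np.abs(filter3x3(img, SOBEL_X)) + np.abs(filter3x3(img, SOBEL_Y))

def fusion_loss(fused, ir, vis, alpha=1.0):
    """L1 to max(ir, vis) intensity + alpha * L1 to the max source gradient."""
    l_int = np.mean(np.abs(fused - np.maximum(ir, vis)))
    l_grad = np.mean(np.abs(grad_mag(fused) - np.maximum(grad_mag(ir), grad_mag(vis))))
    return float(l_int + alpha * l_grad)

rng = np.random.default_rng(0)
ir, vis = rng.random((32, 32)), rng.random((32, 32))
print(fusion_loss(np.maximum(ir, vis), ir, vis))  # max-intensity candidate
print(fusion_loss(0.5 * (ir + vis), ir, vis))     # averaging candidate
```

The max targets reward a fused image that keeps whichever source is brighter (e.g. thermal targets) and whichever has the stronger edges (e.g. visible texture) at each pixel.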

Experiments

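The experiments use IR-VIS fusion for demonstration, on three classic datasets: MSRS, RoadScene, and TNO. During training, images are cropped into 128×128 patches and the model is trained for 120 epochs, 40 in stage I and 80 in stage II, with a batch size of 16.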
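The training settings above can be sketched as a small config plus two helpers. The names `CONFIG`, `random_crop_pair`, and `stage_for_epoch` are hypothetical, not the repository's. One design point worth noting: because IR and VIS images are registered, the same random window must be cut from both images of a pair.

```python
import numpy as np

# Hypothetical config mirroring the settings described in the text.
CONFIG = {
    "patch_size": 128,
    "batch_size": 16,
    "epochs_stage1": 40,
    "epochs_stage2": 80,
}

def random_crop_pair(ir, vis, size, rng):
    """Crop the same random size x size window from a registered IR/VIS pair."""
    if ir.shape[:2] != vis.shape[:2]:
        raise ValueError("IR and VIS images must be registered (same H, W)")
    h, w = ir.shape[:2]
    if h < size or w < size:
        raise ValueError("image is smaller than the patch size")
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    window = (slice(top, top + size), slice(left, left + size))
    return ir[window], vis[window]

def stage_for_epoch(epoch, cfg=CONFIG):
    """Return the training stage (1 or 2) for a 0-based epoch index."""
    total = cfg["epochs_stage1"] + cfg["epochs_stage2"]
    if not 0 <= epoch < total:
        raise ValueError(f"epoch must be in [0, {total})")
    return 1 if epoch < cfg["epochs_stage1"] else 2

print([stage_for_epoch(e) for e in (0, 39, 40, 119)])  # [1, 1, 2, 2]
```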

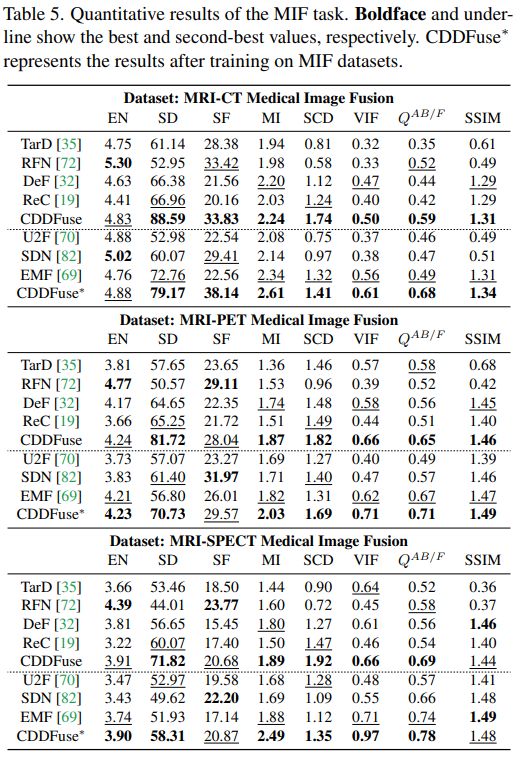

Comparison with other methods:

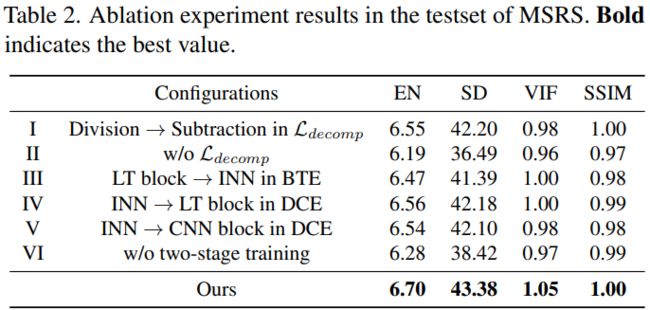

Ablation study:

Key code

net.py

```python
# https://github.com/Zhaozixiang1228/MMIF-CDDFuse/blob/main/net.py
import torch
import torch.nn as nn
import math
import torch.nn.functional as F
import torch.utils.checkpoint as checkpoint
# Newer timm releases also expose these under timm.layers; the imported
# DropPath is shadowed by the local class defined below.
from timm.models.layers import DropPath, to_2tuple, trunc_normal_
from einops import rearrange


def drop_path(x, drop_prob: float = 0., training: bool = False):
    """
    Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    # work with diff dim tensors, not just 2D ConvNets
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)
    random_tensor = keep_prob + \
        torch.rand(shape, dtype=x.dtype, device=x.device)
    random_tensor.floor_()  # binarize
    output = x.div(keep_prob) * random_tensor
    return output


class DropPath(nn.Module):
    """
    Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """

    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)
```

```python
class AttentionBase(nn.Module):
    def __init__(self,
                 dim,
                 num_heads=8,
                 qkv_bias=False,):
        super(AttentionBase, self).__init__()
        self.num_heads = num_heads
        head_dim = dim // num_heads
        # Learnable per-head temperature applied to the attention logits.
        self.scale = nn.Parameter(torch.ones(num_heads, 1, 1))
        self.qkv1 = nn.Conv2d(dim, dim*3, kernel_size=1, bias=qkv_bias)
        self.qkv2 = nn.Conv2d(dim*3, dim*3, kernel_size=3, padding=1, bias=qkv_bias)
        self.proj = nn.Conv2d(dim, dim, kernel_size=1, bias=qkv_bias)

    def forward(self, x):
        # x: [batch_size, channels, height, width]
        b, c, h, w = x.shape
        qkv = self.qkv2(self.qkv1(x))
        q, k, v = qkv.chunk(3, dim=1)
        q = rearrange(q, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)
        k = rearrange(k, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)
        v = rearrange(v, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)
        q = torch.nn.functional.normalize(q, dim=-1)
        k = torch.nn.functional.normalize(k, dim=-1)
        # q, k, v: [batch_size, num_heads, channels_per_head, h*w]
        # attn:    [batch_size, num_heads, channels_per_head, channels_per_head]
        # i.e. attention is computed between channels, as in Restormer's MDTA
        attn = (q @ k.transpose(-2, -1)) * self.scale
        attn = attn.softmax(dim=-1)
        out = (attn @ v)
        out = rearrange(out, 'b head c (h w) -> b (head c) h w',
                        head=self.num_heads, h=h, w=w)
        out = self.proj(out)
        return out
```

```python
class Mlp(nn.Module):
    """
    MLP as used in Vision Transformer, MLP-Mixer and related networks
    """

    def __init__(self,
                 in_features,
                 hidden_features=None,
                 ffn_expansion_factor=2,
                 bias=False):
        super().__init__()
        # hidden_features is ignored: the width comes from ffn_expansion_factor.
        hidden_features = int(in_features*ffn_expansion_factor)

        self.project_in = nn.Conv2d(
            in_features, hidden_features*2, kernel_size=1, bias=bias)

        # groups=hidden_features -> 2 channels per group
        self.dwconv = nn.Conv2d(hidden_features*2, hidden_features*2, kernel_size=3,
                                stride=1, padding=1, groups=hidden_features, bias=bias)

        self.project_out = nn.Conv2d(
            hidden_features, in_features, kernel_size=1, bias=bias)

    def forward(self, x):
        x = self.project_in(x)
        # Gated activation: one half of the channels gates the other half.
        x1, x2 = self.dwconv(x).chunk(2, dim=1)
        x = F.gelu(x1) * x2
        x = self.project_out(x)
        return x


class BaseFeatureExtraction(nn.Module):
    # Base (low-frequency) branch used as the BTE in Restormer_Encoder:
    # pre-norm attention and MLP, each wrapped in a residual connection.
    def __init__(self,
                 dim,
                 num_heads,
                 ffn_expansion_factor=1.,
                 qkv_bias=False,):
        super(BaseFeatureExtraction, self).__init__()
        self.norm1 = LayerNorm(dim, 'WithBias')
        self.attn = AttentionBase(dim, num_heads=num_heads, qkv_bias=qkv_bias,)
        self.norm2 = LayerNorm(dim, 'WithBias')
        self.mlp = Mlp(in_features=dim,
                       ffn_expansion_factor=ffn_expansion_factor,)

    def forward(self, x):
        x = x + self.attn(self.norm1(x))
        x = x + self.mlp(self.norm2(x))
        return x
```

```python
class InvertedResidualBlock(nn.Module):
    # MobileNetV2-style bottleneck (the BRB): 1x1 expand -> 3x3 depthwise -> 1x1 project.
    # No identity shortcut is added inside this block.
    def __init__(self, inp, oup, expand_ratio):
        super(InvertedResidualBlock, self).__init__()
        hidden_dim = int(inp * expand_ratio)
        self.bottleneckBlock = nn.Sequential(
            # pw
            nn.Conv2d(inp, hidden_dim, 1, bias=False),
            # nn.BatchNorm2d(hidden_dim),
            nn.ReLU6(inplace=True),
            # dw
            nn.ReflectionPad2d(1),
            nn.Conv2d(hidden_dim, hidden_dim, 3, groups=hidden_dim, bias=False),
            # nn.BatchNorm2d(hidden_dim),
            nn.ReLU6(inplace=True),
            # pw-linear
            nn.Conv2d(hidden_dim, oup, 1, bias=False),
            # nn.BatchNorm2d(oup),
        )

    def forward(self, x):
        return self.bottleneckBlock(x)


class DetailNode(nn.Module):
    # One invertible affine coupling layer of the detail branch (DCE).
    def __init__(self):
        super(DetailNode, self).__init__()
        # Scale is Ax + b, i.e. affine transformation
        # Each half has 32 channels, i.e. half of dim=64 in Restormer_Encoder.
        self.theta_phi = InvertedResidualBlock(inp=32, oup=32, expand_ratio=2)
        self.theta_rho = InvertedResidualBlock(inp=32, oup=32, expand_ratio=2)
        self.theta_eta = InvertedResidualBlock(inp=32, oup=32, expand_ratio=2)
        self.shffleconv = nn.Conv2d(64, 64, kernel_size=1,
                                    stride=1, padding=0, bias=True)

    def separateFeature(self, x):
        z1, z2 = x[:, :x.shape[1]//2], x[:, x.shape[1]//2:x.shape[1]]
        return z1, z2

    def forward(self, z1, z2):
        # 1x1 conv mixes channels across the two halves before coupling.
        z1, z2 = self.separateFeature(
            self.shffleconv(torch.cat((z1, z2), dim=1)))
        # Additive coupling, then affine coupling conditioned on the updated z2.
        z2 = z2 + self.theta_phi(z1)
        z1 = z1 * torch.exp(self.theta_rho(z2)) + self.theta_eta(z2)
        return z1, z2


class DetailFeatureExtraction(nn.Module):
    # Detail (high-frequency) branch used as the DCE: num_layers stacked coupling layers.
    def __init__(self, num_layers=3):
        super(DetailFeatureExtraction, self).__init__()
        INNmodules = [DetailNode() for _ in range(num_layers)]
        self.net = nn.Sequential(*INNmodules)

    def forward(self, x):
        z1, z2 = x[:, :x.shape[1]//2], x[:, x.shape[1]//2:x.shape[1]]
        for layer in self.net:
            z1, z2 = layer(z1, z2)
        return torch.cat((z1, z2), dim=1)
```

```python
# =============================================================================

# =============================================================================
import numbers

##########################################################################
## Layer Norm

def to_3d(x):
    return rearrange(x, 'b c h w -> b (h w) c')


def to_4d(x, h, w):
    return rearrange(x, 'b (h w) c -> b c h w', h=h, w=w)


class BiasFree_LayerNorm(nn.Module):
    def __init__(self, normalized_shape):
        super(BiasFree_LayerNorm, self).__init__()
        if isinstance(normalized_shape, numbers.Integral):
            normalized_shape = (normalized_shape,)
        normalized_shape = torch.Size(normalized_shape)

        assert len(normalized_shape) == 1

        self.weight = nn.Parameter(torch.ones(normalized_shape))
        self.normalized_shape = normalized_shape

    def forward(self, x):
        sigma = x.var(-1, keepdim=True, unbiased=False)
        return x / torch.sqrt(sigma+1e-5) * self.weight


class WithBias_LayerNorm(nn.Module):
    def __init__(self, normalized_shape):
        super(WithBias_LayerNorm, self).__init__()
        if isinstance(normalized_shape, numbers.Integral):
            normalized_shape = (normalized_shape,)
        normalized_shape = torch.Size(normalized_shape)

        assert len(normalized_shape) == 1

        self.weight = nn.Parameter(torch.ones(normalized_shape))
        self.bias = nn.Parameter(torch.zeros(normalized_shape))
        self.normalized_shape = normalized_shape

    def forward(self, x):
        mu = x.mean(-1, keepdim=True)
        sigma = x.var(-1, keepdim=True, unbiased=False)
        return (x - mu) / torch.sqrt(sigma+1e-5) * self.weight + self.bias


class LayerNorm(nn.Module):
    # Normalizes over channels at each pixel: (b, c, h, w) -> (b, h*w, c) -> norm -> back.
    def __init__(self, dim, LayerNorm_type):
        super(LayerNorm, self).__init__()
        if LayerNorm_type == 'BiasFree':
            self.body = BiasFree_LayerNorm(dim)
        else:
            self.body = WithBias_LayerNorm(dim)

    def forward(self, x):
        h, w = x.shape[-2:]
        return to_4d(self.body(to_3d(x)), h, w)
```

```python
##########################################################################
## Gated-Dconv Feed-Forward Network (GDFN)
class FeedForward(nn.Module):
    def __init__(self, dim, ffn_expansion_factor, bias):
        super(FeedForward, self).__init__()

        hidden_features = int(dim*ffn_expansion_factor)

        self.project_in = nn.Conv2d(
            dim, hidden_features*2, kernel_size=1, bias=bias)

        self.dwconv = nn.Conv2d(hidden_features*2, hidden_features*2, kernel_size=3,
                                stride=1, padding=1, groups=hidden_features*2, bias=bias)

        self.project_out = nn.Conv2d(
            hidden_features, dim, kernel_size=1, bias=bias)

    def forward(self, x):
        x = self.project_in(x)
        x1, x2 = self.dwconv(x).chunk(2, dim=1)
        x = F.gelu(x1) * x2
        x = self.project_out(x)
        return x


##########################################################################
## Multi-DConv Head Transposed Self-Attention (MDTA)
class Attention(nn.Module):
    def __init__(self, dim, num_heads, bias):
        super(Attention, self).__init__()
        self.num_heads = num_heads
        self.temperature = nn.Parameter(torch.ones(num_heads, 1, 1))

        self.qkv = nn.Conv2d(dim, dim*3, kernel_size=1, bias=bias)
        self.qkv_dwconv = nn.Conv2d(
            dim*3, dim*3, kernel_size=3, stride=1, padding=1, groups=dim*3, bias=bias)
        self.project_out = nn.Conv2d(dim, dim, kernel_size=1, bias=bias)

    def forward(self, x):
        b, c, h, w = x.shape

        qkv = self.qkv_dwconv(self.qkv(x))
        q, k, v = qkv.chunk(3, dim=1)

        q = rearrange(q, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)
        k = rearrange(k, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)
        v = rearrange(v, 'b (head c) h w -> b head c (h w)',
                      head=self.num_heads)

        q = torch.nn.functional.normalize(q, dim=-1)
        k = torch.nn.functional.normalize(k, dim=-1)

        # Channel-to-channel attention map: [b, head, c/head, c/head]
        attn = (q @ k.transpose(-2, -1)) * self.temperature
        attn = attn.softmax(dim=-1)

        out = (attn @ v)

        out = rearrange(out, 'b head c (h w) -> b (head c) h w',
                        head=self.num_heads, h=h, w=w)

        out = self.project_out(out)
        return out


##########################################################################
class TransformerBlock(nn.Module):
    def __init__(self, dim, num_heads, ffn_expansion_factor, bias, LayerNorm_type):
        super(TransformerBlock, self).__init__()

        self.norm1 = LayerNorm(dim, LayerNorm_type)
        self.attn = Attention(dim, num_heads, bias)
        self.norm2 = LayerNorm(dim, LayerNorm_type)
        self.ffn = FeedForward(dim, ffn_expansion_factor, bias)

    def forward(self, x):
        x = x + self.attn(self.norm1(x))
        x = x + self.ffn(self.norm2(x))
        return x
```

```python
##########################################################################
## Overlapped image patch embedding with 3x3 Conv
class OverlapPatchEmbed(nn.Module):
    def __init__(self, in_c=3, embed_dim=48, bias=False):
        super(OverlapPatchEmbed, self).__init__()

        self.proj = nn.Conv2d(in_c, embed_dim, kernel_size=3,
                              stride=1, padding=1, bias=bias)

    def forward(self, x):
        x = self.proj(x)
        return x


class Restormer_Encoder(nn.Module):
    # SFE (patch_embed + encoder_level1) followed by the BTE and DCE branches.
    def __init__(self,
                 inp_channels=1,
                 out_channels=1,
                 dim=64,
                 num_blocks=[4, 4],
                 heads=[8, 8, 8],
                 ffn_expansion_factor=2,
                 bias=False,
                 LayerNorm_type='WithBias',
                 ):

        super(Restormer_Encoder, self).__init__()

        self.patch_embed = OverlapPatchEmbed(inp_channels, dim)

        self.encoder_level1 = nn.Sequential(*[TransformerBlock(dim=dim, num_heads=heads[0], ffn_expansion_factor=ffn_expansion_factor,
                                                               bias=bias, LayerNorm_type=LayerNorm_type) for i in range(num_blocks[0])])
        self.baseFeature = BaseFeatureExtraction(dim=dim, num_heads=heads[2])

        self.detailFeature = DetailFeatureExtraction()

    def forward(self, inp_img):
        inp_enc_level1 = self.patch_embed(inp_img)
        out_enc_level1 = self.encoder_level1(inp_enc_level1)  # shallow (SFE) features
        base_feature = self.baseFeature(out_enc_level1)
        detail_feature = self.detailFeature(out_enc_level1)
        return base_feature, detail_feature, out_enc_level1


class Restormer_Decoder(nn.Module):
    # Concatenates base/detail features (2*dim channels), reduces them to dim,
    # applies Restormer blocks and a conv head, then a sigmoid.
    def __init__(self,
                 inp_channels=1,
                 out_channels=1,
                 dim=64,
                 num_blocks=[4, 4],
                 heads=[8, 8, 8],
                 ffn_expansion_factor=2,
                 bias=False,
                 LayerNorm_type='WithBias',
                 ):

        super(Restormer_Decoder, self).__init__()
        self.reduce_channel = nn.Conv2d(int(dim*2), int(dim), kernel_size=1, bias=bias)
        self.encoder_level2 = nn.Sequential(*[TransformerBlock(dim=dim, num_heads=heads[1], ffn_expansion_factor=ffn_expansion_factor,
                                                               bias=bias, LayerNorm_type=LayerNorm_type) for i in range(num_blocks[1])])
        self.output = nn.Sequential(
            nn.Conv2d(int(dim), int(dim)//2, kernel_size=3,
                      stride=1, padding=1, bias=bias),
            nn.LeakyReLU(),
            nn.Conv2d(int(dim)//2, out_channels, kernel_size=3,
                      stride=1, padding=1, bias=bias),)
        self.sigmoid = nn.Sigmoid()

    def forward(self, inp_img, base_feature, detail_feature):
        out_enc_level0 = torch.cat((base_feature, detail_feature), dim=1)
        out_enc_level0 = self.reduce_channel(out_enc_level0)
        out_enc_level1 = self.encoder_level2(out_enc_level0)
        if inp_img is not None:
            # The input image, when given, is added as a global residual.
            out_enc_level1 = self.output(out_enc_level1) + inp_img
        else:
            out_enc_level1 = self.output(out_enc_level1)
        return self.sigmoid(out_enc_level1), out_enc_level0


if __name__ == '__main__':
    height = 128
    width = 128
    window_size = 8
    # .cuda() requires a CUDA-capable GPU.
    modelE = Restormer_Encoder().cuda()
    modelD = Restormer_Decoder().cuda()
```