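High-Availability Cluster Project in Practice -- Building an Enterprise OA (Office Automation) System on an LVS + Keepalived HA Cluster (Hands-On Project)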


- 一、需求背景

-

- 1、需求背景

- 2、集群系统需求分析

- 二、集群系统设计

-

- 1、总体设计

- 2、网络设计

- 三、集群配置方案集群配置方案

-

- 1、负载调度器

- 2、应用服务器

- 3、存储服务器

- 4、数据库服务器

- 5、集群整体架构设计

- 6、网络部署方案

- 四、集群系统实现

-

- 1、系统初始及优化

- 2、数据库服务器安装配置

-

- 2.1 部署MySQL

- 2.2 MySQL主从同步

- 2.3 MySQL读写分离

- 3、存储服务器安装配置

-

- 3.1 配置 DRBD 存储

-

- a. 部署 DRBD

- b. 配置 DRBD

- 3.2 配置 NFS

-

- a. 部署 NFS

- b. 配置 NFS

- 3.3 配置 Keepalived

-

- a. 部署 Keepalived

- b. 配置 Keepalived

- 4、应用服务器安装配置

-

- 4.1 部署 LNMP

-

- a. 安装 LNMP

- b. 配置 LNMP

- 4.2 配置 LVS

- 4.3 部署 OA 系统

- 5、负载调度器安装配置

-

- 5.1 部署 LVS + Keepalived

- 5.2 配置 Keepalived

- 6、负载调度器安装配置

-

- 6.1 配置 keepalived

- 五、集群系统测试

-

- 1、数据库服务器测试

-

- 1.1 MySQL主从同步功能测试

- 1.2 MyCAT读写分离功能测试

- 2、存储服务器测试

-

- 2.1 DRBD 功能测试

- 2.2 NFS 功能测试

- 2.3 高可用功能测试

-

- a. 测试 Keepalived 针对 NFS 监控检查

- b. 测试 Keepalived 故障切换

- 3、应用服务器测试

-

- 3.1 应用服务器挂载共享存储

- 应用服务器访问 Web 服务(OA)

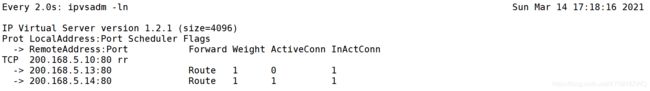

- 4、负载均衡器调度算法测试

- 5、负载均衡器健康检查测试

- 6、负载均衡器高可用测试

-

- 6.1 主调度器的keepalived检查故障转移

- 6.2 主调度器的keepalived检查故障恢复不抢占

- 7、用户访问OA 自动化办公系统

- 六、项目升级改进方案

I. Background

1. Background

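After analyzing the company's cluster requirements, we chose a three-tier LVS design with round-robin scheduling and made the load balancers highly available with Keepalived. The application servers run an LNMP stack. The back-end shared storage is built on DRBD + NFS, also made highly available with Keepalived. The database is MySQL, and MyCAT provides read/write splitting on top of master-slave replication. This design meets the requirements for high availability, load balancing, and scalability.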

2. Cluster Requirements Analysis

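The cluster has to work with the company's existing hardware and software. It must give staff at headquarters and the branch offices immediate access and provide load balancing, high availability, and scalability. To achieve this, it must meet the following requirements: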

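1. After receiving user requests, the load balancer distributes them as evenly as possible across the back-end real servers;

2. The load balancer performs health checks. A real server that fails is removed from the forwarding pool and added back once it has been repaired;

3. The load balancers run as a hot-standby pair. When the primary fails, the backup takes over its resources. To avoid disrupting users, the primary does not take the resources back (no preemption) after it is repaired;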

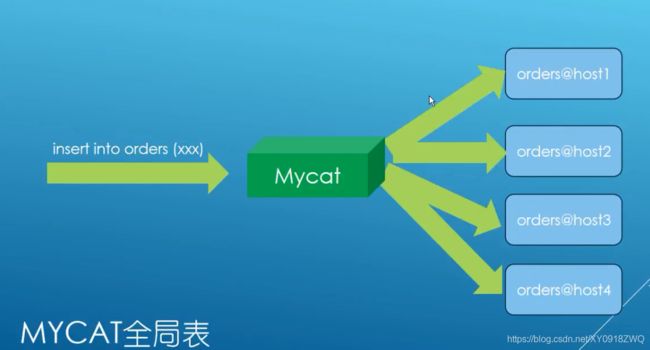

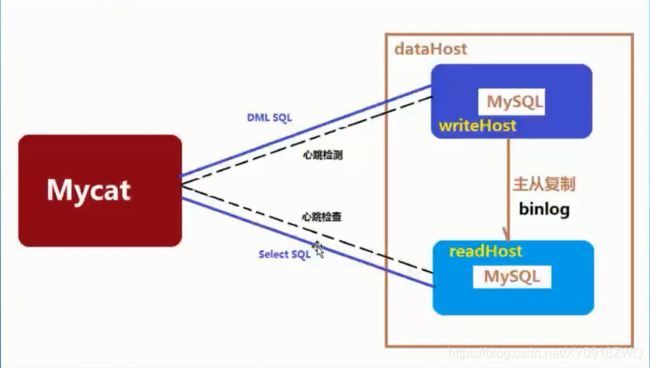

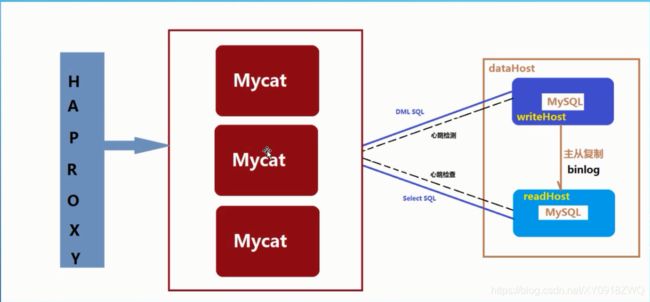

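4. Because the database workload is read-heavy and write-light, implement MySQL master-slave replication and use MyCAT for read/write splitting;

5. The shared storage is redundant and highly available;

6. The cluster is transparent to end users, who keep using their existing access method.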

II. Cluster System Design

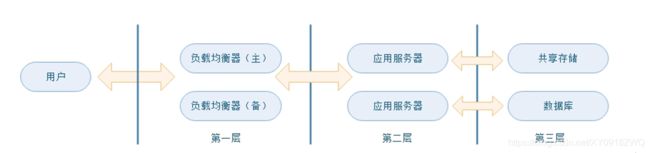

1. Overall Design

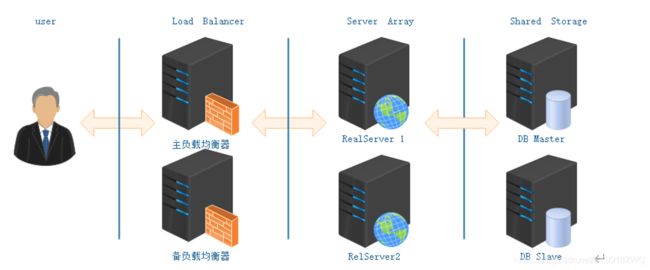

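As shown above, the cluster has three tiers: the first is load scheduling, the second is the application servers, and the third is the database plus shared storage.

Tier 1: Load scheduling

This tier receives user requests, forwards them, and provides failover. Two servers run in active/standby mode. The tier also health-checks the back-end real servers. It isolates any that fail and returns them to the forwarding queue once they are repaired. For performance, LVS runs in DR (direct routing) mode with round-robin scheduling.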
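The round-robin (rr) policy chosen for the directors can be pictured with the short sketch below. It is a conceptual model only and assumes equal weights (weight 1, as in the Keepalived configuration later): request n goes to backend n mod N. It does not reproduce the kernel's ip_vs_rr internals, and `rr_pick` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Conceptual round-robin: with equal weights, request n is sent to backend n mod N.
# rr_pick is a hypothetical helper; it does not reproduce ip_vs_rr internals.
rr_pick() {
  local n=$1
  shift
  local servers=("$@")
  echo "${servers[$(( n % ${#servers[@]} ))]}"
}

# The two real servers used in this article.
backends=(200.168.5.13 200.168.5.14)
for i in 0 1 2 3; do
  echo "request $i -> $(rr_pick "$i" "${backends[@]}")"
done
```

The four requests alternate between .13 and .14. This 1:1 split is what the scheduling test later checks.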

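2. Network Design

Based on the requirements, the cluster network is split into a public segment and a private segment. Public IPs are used by the load balancers and the application servers. The private network serves two purposes. It carries the heartbeat between the primary and backup load balancers, and it carries internal traffic between the application servers and the back-end database and shared storage.

Each application server needs two NICs: one on the public network and one on the internal network facing the database and shared storage.

Each load balancer needs two NICs: one for the heartbeat link and one for the external network.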

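Tier 2: Application servers

This tier consists of the real servers: two or more machines running LNMP. The application servers keep their data consistent through the back-end shared storage.

Tier 3: Database / shared storage

The shared storage keeps the application servers' data in sync. It is built with open-source DRBD + NFS and made highly available with Keepalived. The database is MySQL with one master and two slaves. MyCAT provides read/write splitting, which improves the database tier's availability and spreads its load.

This project uses LVS in DR mode plus Keepalived to build a highly available, scalable, load-balanced cluster. The overall logical layers are shown in the figure below.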

III. Cluster Configuration Plan

1. Load Balancers

Operating system: CentOS 7.8

#cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

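Load balancing: LVS

High availability: Keepalived

2. Application Servers

The online collaboration system is built on PHP + MySQL, so the application servers use the widely adopted LNMP stack.

Operating system: CentOS 7.8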

#cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

Application stack: LNMP

3. Storage Servers

Operating system: CentOS 7.8

#cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

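Shared storage is provided by NFS on top of DRBD. DRBD is a replicated block device intended for high-availability (HA) setups. It behaves like a network RAID-1, mirroring the disk between two nodes for redundancy, and Keepalived adds failover on top.

4. Database Servers

Operating system: CentOS 7.8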

#cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

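To reduce the load on the database, replication is configured with one master and two slaves. Open-source MyCAT provides read/write splitting, which both protects the data and balances the load.

5. Overall Cluster Architecture

6. Network Deployment Plan

Lab and test machines: 11 Linux hosts in total.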

| Role | Interface | Host | IP | VIP | Use |

|---|---|---|---|---|---|

| 主负载调度器 | ens33 | node01 | 200.168.5.11 | 200.168.5.10 | 用于Wan数据 转发 |

| 主负载调度器 | ens38 | node01 | 172.24.8.11 | 200.168.5.10 | 用于LB间心跳连接 |

| 备负载调度器 | ens33 | node02 | 200.168.5.12 | 200.168.5.10 | 用于Wan数据 转发 |

| 备负载调度器 | ens38 | node02 | 172.24.8.12 | 200.168.5.10 | 用于LB间心跳连接 |

| 应用服务器 1 | ens33 | real_server1 | 200.168.5.13 | ---- | 用于Wan数据 转发 |

| 应用服务器 1 | ens38 | real_server1 | 172.24.8.13 | ---- | 用于Lan数据 转发 |

| 应用服务器 2 | ens33 | real_server2 | 200.168.5.14 | ---- | 用于Wan数据 转发 |

| 应用服务器 2 | ens33 | real_server2 | 172.24.8.14 | ---- | 用于Lan数据 转发 |

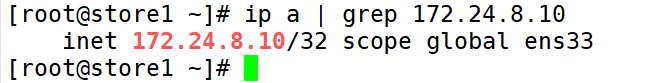

| 存储服务器 1 | ens33 | store1 | 172.24.5.15 | 172.24.8.10 | 用于Lan数据 转发 |

| 存储服务器 2 | ens33 | store2 | 172.24.5.16 | 172.24.8.10 | 用于Lan数据 转发 |

| 主数据库 | ens33 | db1 | 200.168.5.17 | ---- | 用于Lan数据 转发 |

| 从数据库 1 | ens33 | db2 | 172.24.8.18 | ---- | 用于Lan数据 转发 |

| 从数据库 2 | ens33 | db3 | 172.24.8.19 | ---- | 用于Lan数据 转发 |

| 数据库代理 | ens33 | proxy_db | 172.24.8.20 | ---- | 用于Lan数据 转发 |

| 客户端测试机 | eno16777736 | rhel7 | 200.168.5.9 | ---- | 用于客户端测试 |
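The private segment carries the heartbeat, storage, and database traffic. It can therefore help to sanity-check that every internal address in the table really falls inside 172.24.8.0/24, the same range allowed later in chrony and in the NFS export. The sketch below does this with bash arithmetic. `ip_to_int` and `in_cidr` are hypothetical helpers and are not part of any package.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer (hypothetical helper).
ip_to_int() {
  local IFS=.
  local -a o
  o=($1)   # split on dots
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if IP $1 lies inside CIDR block $2, e.g. 172.24.8.0/24 (hypothetical helper).
in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Internal addresses from the table should all be in the private segment.
for ip in 172.24.8.15 172.24.8.16 172.24.8.17 200.168.5.13; do
  if in_cidr "$ip" 172.24.8.0/24; then echo "$ip in 172.24.8.0/24"; else echo "$ip outside"; fi
done
```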

IV. Cluster Implementation

Run this step on every machine.

1. System Initialization and Tuning

System service tuning

# mkdir /scripts

# vim /scripts/init_CentOS7.sh

#!/bin/bash

##############################################################

# File Name: init_CentOS7.sh

# Version: V1.0

# Author: wan

# Email: [email protected]

# Organization: http://www.cnblogs.com/kongd/

# Created Time :2021-3-10

# Description: init_CentOS7

##############################################################

# 1、disable SELinux and stop firewall

systemctl stop firewalld

systemctl disable firewalld

sed -i '/^SELINUX=/ cSELINUX=disabled' /etc/selinux/config

setenforce 0

# 2、config epel

# wget is only installed in step 3, so fetch the repo file with curl

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo &>/dev/null

# 3、install software

yum install bash-completion net-tools wget lrzsz psmisc vim dos2unix unzip tree -y &>/dev/null

# 4、config sshd

cp /etc/ssh/sshd_config{,.`date +%F`.bak}

sed -i 's/^GSSAPIAuthentication yes$/GSSAPIAuthentication no/' /etc/ssh/sshd_config

sed -i 's/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config

# 5、config history

echo 'export TMOUT=300' >>/etc/profile

echo 'export HISTFILESIZE=100' >>/etc/profile

echo 'export HISTSIZE=100' >>/etc/profile

echo 'export HISTTIMEFORMAT="%F %T [`whoami`] "' >>/etc/profile

# 6、adjust descriptor

cat >> /etc/security/limits.conf << EOF

* soft nproc unlimited

* hard nproc unlimited

* soft nofile 655350

* hard nofile 655350

EOF

# 7、kernel parameter

cat >> /etc/sysctl.conf << EOF

net.ipv4.ip_local_port_range = 1024 65535

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.ipv4.tcp_fin_timeout = 10

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_window_scaling = 0

net.ipv4.tcp_sack = 0

net.core.netdev_max_backlog = 30000

net.ipv4.tcp_no_metrics_save = 1

net.core.somaxconn = 22144

net.ipv4.tcp_syncookies = 0

net.ipv4.tcp_max_orphans = 262144

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_syn_retries = 2

vm.overcommit_memory = 1

fs.file-max = 2000000

fs.nr_open = 2000000

EOF

/sbin/sysctl -p

# chmod +x /scripts/init_CentOS7.sh

# /scripts/init_CentOS7.sh

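Server time synchronization

Configure the host that can reach the Internet to sync with Alibaba Cloud's NTP server. All other hosts then sync from this host, which keeps the cluster's clocks consistent.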

# vim /etc/chrony.conf

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server ntp.aliyun.com iburst

allow 172.24.8.0/24

local stratum 10

# systemctl restart chronyd
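After chronyd restarts, `chronyc sources` shows whether a time source has been selected: the line for the source in use starts with `^*`. A minimal sketch of that check follows. `chrony_synced` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Succeed if `chronyc sources` output (on stdin) contains a selected source,
# i.e. a line starting with "^*". chrony_synced is a hypothetical helper.
chrony_synced() {
  grep -q '^\^\*'
}

# Usage on any node after restarting chronyd:
#   chronyc sources | chrony_synced && echo "time synced" || echo "not synced yet"
```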

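2. Database Server Installation and Configuration

2.1 Deploy MySQL

Deploy MySQL 5.7.14 (generic binary tarball) on all three database hosts (db1, db2, db3).

# Create the mysql group and user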

[root@db1]# groupadd -r -g 308 mysql

[root@db1]# useradd -r -g 308 -u 308 -c "MySQL Server" -s /bin/false mysql

# Upload the software package

[root@db1 tools]# ll

total 656952

-rw-r--r-- 1 root root 672716800 Mar 10 20:06 mysql-5.7.14-linux-glibc2.5-x86_64.tar

# Install MySQL

[root@db1 tools]# tar xf mysql-5.7.14-linux-glibc2.5-x86_64.tar -C /usr/local/

[root@db1 local]# tar xf mysql-5.7.14-linux-glibc2.5-x86_64.tar.gz

[root@db1 local]# ln -sv mysql-5.7.14-linux-glibc2.5-x86_64 mysql

# Initialize the data directory

[root@db1 local]# mysql/bin/mysqld --initialize --user=mysql --basedir=/usr/local/mysql --datadir=/usr/local/mysql/data

# Provide the server configuration file

[root@db1 local]# mkdir /usr/local/mysql/logs

[root@db1 local]# chown -R mysql.mysql /usr/local/mysql/logs

[root@db1 local]# mv /etc/my.cnf{,.bak}

[root@db1 local]# vim /etc/my.cnf

# Install the service (init) script

[root@db1 mysql]# cp support-files/mysql.server /etc/rc.d/init.d/mysqld

[root@db1 mysql]# ll /etc/rc.d/init.d/mysqld

-rwxr-xr-x 1 root root 10975 Mar 10 19:16 /etc/rc.d/init.d/mysqld

# Start the MySQL service

[root@db1 mysql]# chkconfig --add mysqld

[root@db1 mysql]# chkconfig mysqld on

[root@db1 mysql]# systemctl start mysqld

# Add MySQL to the environment (PATH)

[root@db1 ~]# vim /etc/profile.d/mysql.sh

[root@db1 ~]# source /etc/profile.d/mysql.sh

# Set the root password (log in first with the temporary password printed by mysqld --initialize)

[(none)] >alter user root@localhost identified by '123456';

# Initial security hardening

[root@db1 ~]# mysql_secure_installation

2.2 MySQL Master-Slave Replication

1. Master configuration

Edit the MySQL configuration file

[root@db1 ~]# vim /etc/my.cnf

[mysqld]

server-id = 1

log_bin = mysql-bin

binlog_ignore_db=mysql

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

[mysql]

prompt=db1 [\\d] >

[root@db1 ~]# systemctl restart mysqld

Create the replication user

[root@db1 ~]# mysql -uroot -p123456 -e "grant replication slave on *.* to 'rep'@'172.24.8.%' identified by '123456';"

2. Slave configuration

---db2

[root@db2 ~]# vim /etc/my.cnf

[mysqld]

server-id = 2

log_bin = mysql-bin

binlog_ignore_db=mysql

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

[mysql]

prompt=db2 [\\d] >

[root@db2 ~]# systemctl restart mysqld

---db3

[root@db3 ~]# vim /etc/my.cnf

[mysqld]

server-id = 3

log_bin = mysql-bin

binlog_ignore_db=mysql

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

[mysql]

prompt=db3 [\\d] >

[root@db3 ~]# systemctl restart mysqld

3. Configure replication

---db2

db2 [(none)] >change master to

-> master_host='172.24.8.17',

-> master_user='rep',

-> master_password='123456' ,

-> MASTER_AUTO_POSITION=1;

Query OK, 0 rows affected, 2 warnings (0.00 sec)

---db3

db3 [(none)] >change master to

-> master_host='172.24.8.17',

-> master_user='rep',

-> master_password='123456' ,

-> MASTER_AUTO_POSITION=1;

Query OK, 0 rows affected, 2 warnings (0.08 sec)

4. Start replication

---db2

db2 [(none)] >start slave;

Query OK, 0 rows affected (0.00 sec)

---db3

db3 [(none)] >start slave;

Query OK, 0 rows affected (0.00 sec)

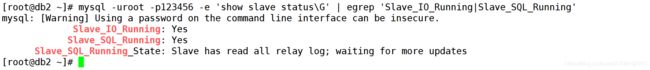

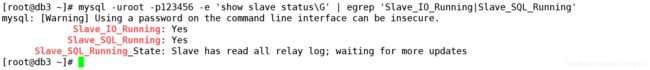

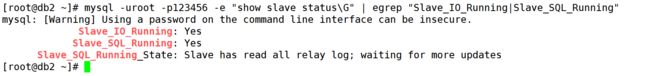

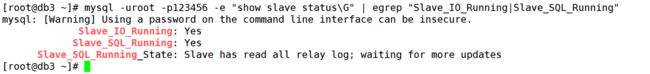

Check replication status
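On each slave, the replication check usually comes down to two fields of `SHOW SLAVE STATUS\G`: `Slave_IO_Running` and `Slave_SQL_Running` should both be `Yes`. The sketch below turns that into a pass/fail test. `slave_healthy` is a hypothetical helper, and the credentials in the usage comment are the lab values from this article.

```shell
#!/usr/bin/env bash
# Succeed only if both replication threads report "Yes" in `SHOW SLAVE STATUS\G`
# output read from stdin. slave_healthy is a hypothetical helper.
slave_healthy() {
  awk -F': *' '
    /Slave_IO_Running:/  { io  = $2 }
    /Slave_SQL_Running:/ { sql = $2 }   # does not match Slave_SQL_Running_State
    END { exit !(io == "Yes" && sql == "Yes") }'
}

# Usage on db2 / db3:
#   mysql -uroot -p123456 -e 'SHOW SLAVE STATUS\G' | slave_healthy && echo "replication OK"
```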

2.3 MySQL Read/Write Splitting

MySQL read/write splitting is implemented with MyCat.

1. Deploy MyCat

# Upload the packages

[root@proxy_db tools]# ll

total 139744

-rw-r--r-- 1 root root 127431820 Aug 12 2020 jdk-8u261-linux-x64.rpm

-rw-r--r-- 1 root root 15662280 Jan 21 21:46 Mycat-server-1.6-RELEASE-20161028204710-linux.tar.gz

# Install the JDK

[root@proxy_db tools]# yum install jdk-8u261-linux-x64.rpm -y

# Install MyCat

[root@proxy_db tools]# tar xf Mycat-server-1.6-RELEASE-20161028204710-linux.tar.gz -C /usr/local/

[root@proxy_db tools]# vim /etc/profile.d/mycat.sh

[root@proxy_db tools]# source /etc/profile.d/mycat.sh

2. Configure MyCat

[root@proxy_db tools]# cd /usr/local/mycat/conf/

[root@proxy_db conf]# vim schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="dn1">

</schema>

<dataNode name="dn1" dataHost="localhost1" database="oa" />

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<writeHost host="Master" url="172.24.8.17:3306" user="mycat" password="123456">

<readHost host="Slave1" url="172.24.8.18:3306" user="mycat" password="123456" />

<readHost host="Slave2" url="172.24.8.19:3306" user="mycat" password="123456" />

</writeHost>

</dataHost>

</mycat:schema>

[root@proxy_db ~]# vim /usr/local/mycat/conf/server.xml

<property name="serverPort">8066</property>

<property name="managerPort">9066</property>

3. Create the read/write-splitting users

---db1

db1 [(none)] >grant all on oa.* to mycat@'172.24.8.%' identified by '123456';

Query OK, 0 rows affected, 1 warning (0.22 sec)

---db2

db2 [(none)] >GRANT SELECT ON oa.* TO 'mycat'@'172.24.8.%' identified by '123456';

Query OK, 0 rows affected, 1 warning (0.00 sec)

---db3

db3 [(none)] >GRANT SELECT ON oa.* TO 'mycat'@'172.24.8.%' identified by '123456';

Query OK, 0 rows affected, 1 warning (0.00 sec)

Create the database

db1 [(none)] >create database oa;

Start MyCat

[root@proxy_db ~]# mycat start

[root@proxy_db ~]# netstat -lnutp | egrep '[89]066'

tcp 0 0 0.0.0.0:8066 0.0.0.0:* LISTEN 7121/java

tcp 0 0 0.0.0.0:9066 0.0.0.0:* LISTEN 7121/java

[root@proxy_db ~]# mycat status

Mycat-server is running (7119).
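The netstat output above can also be checked from a script: both the service port 8066 and the management port 9066 should be in the LISTEN state. Below is a minimal sketch that assumes `netstat -lnt`-style input. `ports_listening` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `netstat -lnt`-style lines on stdin and succeed only if every port given
# as an argument is the local port of a LISTEN socket. Hypothetical helper.
ports_listening() {
  local listening p
  # Local address is field 4 (e.g. 0.0.0.0:8066); keep the part after the last colon.
  listening=$(awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }')
  for p in "$@"; do
    grep -qx -- "$p" <<< "$listening" || return 1
  done
  return 0
}

# Usage on proxy_db:
#   netstat -lnt | ports_listening 8066 9066 && echo "MyCat ports are up"
```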

Enable start at boot

[root@proxy_db ~]# vim /etc/rc.local

/usr/local/mycat/bin/mycat start

[root@proxy_db ~]# chmod +x /etc/rc.d/rc.local

[root@proxy_db ~]# ll /etc/rc.d/rc.local

-rwxr-xr-x. 1 root root 542 Mar 11 20:27 /etc/rc.d/rc.local

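3. Storage Server Installation and Configuration

3.1 Configure DRBD Storage

Add a 10 GB disk to each of the two hosts and create a 2 GB partition on it.

a. Deploy DRBD

Run the following on both hosts (store1 and store2).

# Configure name resolution in /etc/hosts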

[root@store1 ~]# vim /etc/hosts +

172.24.8.15 store1

172.24.8.16 store2

# Set up passwordless SSH trust

[root@store1 ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q

[root@store1 ~]# ssh-copy-id store1

[root@store1 ~]# ssh-copy-id store2

# Configure the DRBD yum repository

[root@store1 ~]# vim /etc/yum.repos.d/drbd.repo

[drbd]

name=drbd

baseurl=https://mirrors.tuna.tsinghua.edu.cn/elrepo/elrepo/el7/x86_64/

gpgcheck=0

# Install DRBD

[root@store1 ~]# yum install drbd84-utils kmod-drbd84 -y

# Load the kernel module

[root@store1 ~]# yum -y install kernel-devel kernel kernel-headers

[root@store1 ~]# lsmod | grep drbd

[root@store1 ~]# modprobe drbd

[root@store1 ~]# lsmod | grep drbd

drbd 397041 0

libcrc32c 12644 2 xfs,drbd

# Partition the disk (sdb1 is the 2 GB partition)

[root@store1 ~]# lsblk | grep sdb

sdb 8:16 0 10G 0 disk

└─sdb1 8:17 0 2G 0 part

b. Configure DRBD

Edit the global configuration file

[root@store1 ~]# vim /etc/drbd.d/global_common.conf

global {

usage-count yes;

udev-always-use-vnr;

}

common {

protocol C;

handlers {

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

}

startup {

}

options {

}

disk {

on-io-error detach;

}

net {

cram-hmac-alg "sha1";

shared-secret "nfs-HA";

}

syncer { rate 1000M; }

}

Configure the resource file

[root@store1 ~]# vim /etc/drbd.d/nfs.res

resource nfs {

meta-disk internal;

device /dev/drbd1;

disk /dev/sdb1;

on store1 {

address 172.24.8.15:7789;

}

on store2 {

address 172.24.8.16:7789;

}

}

Copy the configuration files to store2

[root@store1 ~]# scp /etc/drbd.d/* store2:/etc/drbd.d/

Create the haclient user and set group ownership and permissions on the DRBD tools

[root@store1 ~]# useradd -M -s /sbin/nologin haclient


[root@store1 ~]# chgrp haclient /lib/drbd/drbdsetup-84

[root@store1 ~]# chmod o-x /lib/drbd/drbdsetup-84

[root@store1 ~]# chmod u+s /lib/drbd/drbdsetup-84

[root@store1 ~]# chgrp haclient /usr/sbin/drbdmeta

[root@store1 ~]# chmod o-x /usr/sbin/drbdmeta

[root@store1 ~]# chmod u+s /usr/sbin/drbdmeta

Create the metadata and start the DRBD service (on both store1 and store2)

[root@store1 ~]# drbdadm create-md nfs

[root@store1 ~]# systemctl enable --now drbd

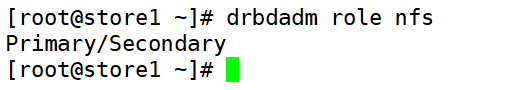

Force store1 to become Primary (the first time, this also starts the initial full sync to store2)

[root@store1 ~]# drbdadm --force primary nfs

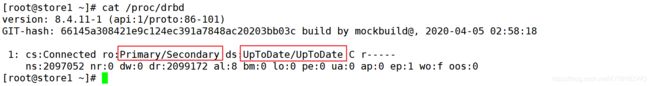

Check the DRBD status
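With DRBD 8.4, the state of each resource is also visible in `/proc/drbd`. Once the initial sync has finished, the line for minor 1 (`/dev/drbd1`) should read `cs:Connected` and `ds:UpToDate/UpToDate`. During the sync it shows `cs:SyncSource` or `cs:SyncTarget` instead. The sketch below checks this and assumes the 8.4 `/proc/drbd` layout. `drbd_healthy` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Succeed only if DRBD minor $1 is Connected and both disks are UpToDate.
# Reads /proc/drbd (DRBD 8.4 layout) on stdin; drbd_healthy is a hypothetical helper.
drbd_healthy() {
  awk -v m="$1:" '
    $1 == m {
      found = 1
      ok = ($2 == "cs:Connected" && $4 == "ds:UpToDate/UpToDate")
    }
    END { exit !(found && ok) }'
}

# Usage on store1 or store2 (resource nfs is /dev/drbd1, i.e. minor 1):
#   drbd_healthy 1 < /proc/drbd && echo "nfs resource in sync"
```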

3.2 Configure NFS

a. Deploy NFS (on both storage servers)

[root@store1 ~]# yum install rpcbind nfs-utils -y

b. Configure NFS

# Edit the NFS exports file

[root@store1 ~]# vim /etc/exports

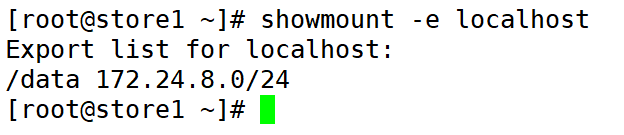

/data 172.24.8.0/24(rw,sync,no_root_squash)

# Create the mount-point directory

[root@store1 ~]# mkdir /data

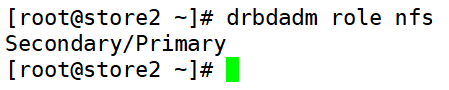

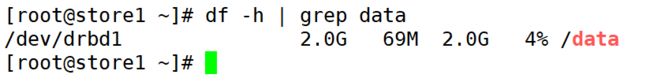

# Format the DRBD device and mount it (on the primary only)

[root@store1 ~]# mkfs.xfs /dev/drbd1

[root@store1 ~]# mount /dev/drbd1 /data

[root@store1 ~]# systemctl enable --now rpcbind

[root@store1 ~]# systemctl enable --now nfs

3.3 Configure Keepalived

a. Deploy Keepalived (on both storage servers)

[root@store1 ~]# yum install keepalived -y

b. Configure Keepalived

Edit the Keepalived configuration file

[root@store1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_1

}

vrrp_script chk_nfs {

script "/etc/keepalived/chk_nfs.sh"

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_stop /etc/keepalived/notify_stop.sh

notify_master /etc/keepalived/notify_master.sh

notify_backup /etc/keepalived/notify_backup.sh

virtual_ipaddress {

172.24.8.10

}

}

Provide the NFS health-check script

[root@store1 ~]# vim /etc/keepalived/chk_nfs.sh

#!/bin/sh

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

/sbin/service nfs restart

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

umount /dev/drbd1

drbdadm secondary nfs

/sbin/service keepalived stop

fi

fi

Provide the Keepalived MASTER-transition script

[root@store1 ~]# vim /etc/keepalived/notify_master.sh

#!/bin/bash

log_dir=/etc/keepalived/logs

time=`date "+%F %T"`

[ -d ${log_dir} ] || mkdir -p ${log_dir}

echo -e "$time ------notify_master------\n" >> ${log_dir}/notify_master.log

/sbin/drbdadm primary nfs &>> ${log_dir}/notify_master.log

/bin/mount /dev/drbd1 /data &>> ${log_dir}/notify_master.log

/sbin/service nfs restart &>> ${log_dir}/notify_master.log

echo -e "\n" >> ${log_dir}/notify_master.log

Provide the Keepalived BACKUP-transition script

[root@store1 ~]# vim /etc/keepalived/notify_backup.sh

#!/bin/bash

log_dir=/etc/keepalived/logs

[ -d ${log_dir} ] || mkdir -p ${log_dir}

time=`date "+%F %T"`

echo -e "$time ------notify_backup------\n" >> ${log_dir}/notify_backup.log

/sbin/service nfs stop &>> ${log_dir}/notify_backup.log

/bin/umount /dev/drbd1 &>> ${log_dir}/notify_backup.log

/sbin/drbdadm secondary nfs &>> ${log_dir}/notify_backup.log

echo -e "\n" >> ${log_dir}/notify_backup.log

Provide the Keepalived stop script

[root@store1 ~]# vim /etc/keepalived/notify_stop.sh

#!/bin/bash

time=`date "+%F %T"`

log_dir=/etc/keepalived/logs

[ -d ${log_dir} ] || mkdir -p ${log_dir}

echo -e "$time ------notify_stop------\n" >> ${log_dir}/notify_stop.log

/sbin/service nfs stop &>> ${log_dir}/notify_stop.log

/bin/umount /data &>> ${log_dir}/notify_stop.log

/sbin/drbdadm secondary nfs &>> ${log_dir}/notify_stop.log

echo -e "\n" >> ${log_dir}/notify_stop.log

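Copy store1's Keepalived configuration file and scripts to store2 (the scripts must be executable, e.g. chmod +x /etc/keepalived/*.sh), then change router_id and priority on store2: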

[root@store1 ~]# scp /etc/keepalived/* store2:/etc/keepalived/

[root@store2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_2

}

vrrp_script chk_nfs {

script "/etc/keepalived/chk_nfs.sh"

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens33

virtual_router_id 51

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_stop /etc/keepalived/notify_stop.sh

notify_master /etc/keepalived/notify_master.sh

notify_backup /etc/keepalived/notify_backup.sh

virtual_ipaddress {

172.24.8.10

}

}

Start the Keepalived service (on both store1 and store2)

[root@store1 ~]# systemctl enable --now keepalived.service

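4. Application Server Installation and Configuration

The application servers use the popular LNMP stack. The site code lives on the shared storage, which is mounted over the default web root, and the application uses the back-end database.

4.1 Deploy LNMP

a. Install LNMP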

[root@real_server1 ~]# yum install nginx php php-mysql php-gd php-fpm -y

b. Configure LNMP

Edit the Nginx configuration file

Add the following inside the server block:

[root@real_server1 ~]# vim /etc/nginx/nginx.conf

... ...

location / {

index index.php index.html index.htm;

}

location ~ \.php$ {

root /usr/share/nginx/html;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

#fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

... ...

[root@real_server1 ~]# scp /etc/nginx/nginx.conf 172.24.8.14:/etc/nginx/

Edit php.ini

[root@real_server1 ~]# vim /etc/php.ini

cgi.fix_pathinfo=0

date.timezone = "Asia/Shanghai"

[root@real_server1 ~]# scp /etc/php.ini 172.24.8.14:/etc/

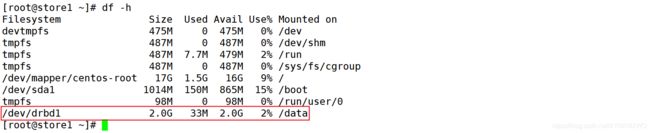

Configure shared storage

The web root is served from the shared storage

# Install nfs-utils

[root@real_server1 ~]# yum install -y nfs-utils

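# Mount the shared NFS storage (mounting the storage VIP 172.24.8.10 instead of store1's own address lets the mount follow a storage failover)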

[root@real_server1 ~]# vim /etc/fstab +

172.24.8.15:/data /usr/share/nginx/html nfs defaults,_netdev 0 0

[root@real_server1 ~]# mount -a

[root@real_server1 ~]# df -h | grep data

172.24.8.15:/data 2.0G 33M 2.0G 2% /usr/share/nginx/html

[root@real_server1 ~]# scp /etc/fstab 172.24.8.14:/etc/

4.2 Configure LVS (real-server side)

[root@real_server1 ~]# vim /etc/init.d/lvs_dr

#!/bin/sh

#

# Startup script handle the initialisation of LVS

# chkconfig: - 28 72

# description: Initialise the Linux Virtual Server for DR

#

### BEGIN INIT INFO

# Provides: ipvsadm

# Required-Start: $local_fs $network $named

# Required-Stop: $local_fs $remote_fs $network

# Short-Description: Initialise the Linux Virtual Server

# Description: The Linux Virtual Server is a highly scalable and highly

# available server built on a cluster of real servers, with the load

# balancer running on Linux.

# description: start LVS of DR-RIP

### END INIT INFO

LOCK=/var/lock/ipvsadm.lock

VIP=200.168.5.10

. /etc/rc.d/init.d/functions

start() {

PID=`ifconfig | grep lo:100 | wc -l`

if [ $PID -ne 0 ];

then

echo "The LVS-DR-RIP Server is already running !"

else

/sbin/ifconfig lo:100 $VIP netmask 255.255.255.255 broadcast $VIP up

/sbin/route add -host $VIP dev lo:100

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/ens33/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/ens33/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/bin/touch $LOCK

echo "starting LVS-DR-RIP server is ok !"

fi

}

stop() {

/sbin/route del -host $VIP dev lo:100

/sbin/ifconfig lo:100 down >/dev/null

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/ens33/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/ens33/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

rm -rf $LOCK

echo "stopping LVS-DR-RIP server is ok !"

}

status() {

if [ -e $LOCK ];

then

echo "The LVS-DR-RIP Server is already running !"

else

echo "The LVS-DR-RIP Server is not running !"

fi

}

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

stop

start

;;

status)

status

;;

*)

echo "Usage: $0 {start|stop|restart|status}"

exit 1

esac

exit 0

[root@real_server1 ~]# chmod +x /etc/init.d/lvs_dr

[root@real_server1 ~]# chkconfig --add /etc/init.d/lvs_dr

[root@real_server1 ~]# chkconfig lvs_dr on

[root@real_server1 ~]# systemctl start lvs_dr

[root@real_server1 ~]# scp /etc/init.d/lvs_dr 172.24.8.14:/etc/init.d/

[root@real_server1 ~]# systemctl enable nginx.service php-fpm.service
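The arp_ignore/arp_announce writes in lvs_dr are what make DR mode work. Every real server holds the VIP on lo:100, so it must not answer ARP requests for it (arp_ignore=1) and must not use it as the source of its own ARP traffic (arp_announce=2). Otherwise the upstream router could learn the VIP's MAC address from a real server and bypass the director. For both settings the kernel applies the larger of the `all` and per-interface values, so the sketch below checks both. `check_dr_arp` is a hypothetical helper. Its optional second argument points it at a copy of /proc/sys and exists only to make the function testable.

```shell
#!/usr/bin/env bash
# Verify LVS-DR ARP suppression on a real server.
# check_dr_arp is a hypothetical helper. $1 = interface (ens33 in this article);
# $2 = optional sysctl root, defaulting to /proc/sys (override only for testing).
check_dr_arp() {
  local iface=$1 root=${2:-/proc/sys} dev
  for dev in all "$iface"; do
    # arp_ignore=1: reply only for addresses configured on the receiving interface.
    [ "$(cat "$root/net/ipv4/conf/$dev/arp_ignore" 2>/dev/null)" = 1 ] || return 1
    # arp_announce=2: always use the best local address (never the VIP) as ARP source.
    [ "$(cat "$root/net/ipv4/conf/$dev/arp_announce" 2>/dev/null)" = 2 ] || return 1
  done
  return 0
}

# Usage on real_server1 / real_server2 after starting lvs_dr:
#   check_dr_arp ens33 && echo "DR ARP settings OK" || echo "ARP settings missing"
```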

4.3 Deploy the OA System

# Upload and unpack the package

[root@real_server1 script]# ll

total 37644

-rw-r--r-- 1 root root 38545425 Aug 12 2020 PHPOA_v4.0.zip

[root@real_server1 script]# unzip PHPOA_v4.0.zip

[root@real_server1 script]# mv PHPOA_v4.0/www/* /usr/share/nginx/html/

# Edit the PHP configuration

[root@real_server1 ~]# vim /etc/php.ini

short_open_tag = On

[root@real_server1 ~]# systemctl restart php-fpm

[root@real_server1 ~]# scp /etc/php.ini 172.24.8.14:/etc/

# Adjust permissions

[root@real_server1 ~]# cd /usr/share/nginx/html/

[root@real_server1 html]# chmod -R 777 ./config.php ./cache/ ./data/

Finish the installation in a browser: http://172.24.8.13/install/install.php

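5. Load Balancer Installation and Configuration

1. Keepalived performs TCP health checks on the real servers. When a real server fails, it is isolated (removed from the forwarding pool) and added back once it recovers.

2. Keepalived failover is based on VRRP. To keep failover from disrupting end users more than necessary, the directors run in non-preemptive mode.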
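Conceptually, a TCP_CHECK only asks whether a TCP connection to the real server's port completes within connect_timeout. Keepalived retries a failed check before removing the server, with the retry behavior set by nb_get_retry and delay_before_retry in the configurations that follow. The sketch reproduces just one connect attempt, using bash's /dev/tcp redirection and coreutils `timeout`. `tcp_check` is a hypothetical helper and does not reflect how Keepalived is implemented internally.

```shell
#!/usr/bin/env bash
# One TCP_CHECK-style probe: succeed if a TCP connect to host:port completes
# within the timeout. tcp_check is a hypothetical helper, not keepalived code.
tcp_check() {
  local host=$1 port=$2 secs=${3:-8}   # 8 s mirrors connect_timeout 8
  # Inside the inner bash, $0 and $1 are the host and port passed after the script text.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Usage from a director, mirroring the two real_server blocks:
#   for rs in 200.168.5.13 200.168.5.14; do
#     tcp_check "$rs" 80 8 && echo "$rs:80 up" || echo "$rs:80 down"
#   done
```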

5.1 Deploy LVS + Keepalived

[root@directory1 ~]# yum install keepalived ipvsadm -y

5.2 Configure Keepalived

MASTER

[root@directory1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 200.168.5.122

router_id LVS_1

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens38

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

200.168.5.10 dev ens33 label ens33:10

}

}

virtual_server 200.168.5.10 80 {

delay_loop 6

lb_algo rr

lb_kind DR

#persistence_timeout 50

protocol TCP

real_server 200.168.5.13 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 200.168.5.14 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

BACKUP

[root@directory2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 200.168.5.122

router_id LVS_2

}

vrrp_instance VI_1 {

state BACKUP

interface ens38

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

200.168.5.10 dev ens33 label ens33:10

}

}

virtual_server 200.168.5.10 80 {

delay_loop 6

lb_algo rr

lb_kind DR

#persistence_timeout 50

protocol TCP

real_server 200.168.5.13 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 200.168.5.14 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

6. Load Balancer Installation and Configuration

Install the software

[root@directory1 ~]# yum install keepalived ipvsadm -y

[root@directory2 ~]# yum install keepalived ipvsadm -y

6.1 Configure Keepalived

---directory1

[root@directory1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 200.168.5.5

router_id LVS_1

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens38

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

200.168.5.10 dev ens33 label ens33:10

}

}

virtual_server 200.168.5.10 80 {

delay_loop 6

lb_algo rr

lb_kind DR

#persistence_timeout 50

protocol TCP

real_server 200.168.5.13 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 200.168.5.14 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

---directory2

[root@directory2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 200.168.5.5

router_id LVS_2

}

vrrp_instance VI_1 {

state BACKUP

interface ens38

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

200.168.5.10 dev ens33 label ens33:10

}

}

virtual_server 200.168.5.10 80 {

delay_loop 6

lb_algo rr

lb_kind DR

#persistence_timeout 50

protocol TCP

real_server 200.168.5.13 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 200.168.5.14 80 {

weight 1

TCP_CHECK {

connect_timeout 8

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@directory1 ~]# systemctl enable --now keepalived.service

[root@directory2 ~]# systemctl enable --now keepalived.service

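V. Cluster Testing

1. Database Server Tests

Problems encountered during configuration, and their solutions:

1. MySQL error 1364 after logging in to the OA system

Cause: sql_mode includes STRICT_TRANS_TABLES, so MySQL's strict mode is on. Most developers do not think about column defaults when they create the database and the code, and they rely on the database to handle missing values. With strict mode enabled, an insert that omits a NOT NULL column with no default fails with error 1364 ("Field '...' doesn't have a default value"). Likewise, passing an empty value to a numeric column raises an error.

Solution: disable strict mode by removing STRICT_TRANS_TABLES from sql_mode.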
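On MySQL 5.7 the default sql_mode contains several flags. In practice, "disabling strict mode" therefore means removing STRICT_TRANS_TABLES and keeping the rest. A sketch of that string edit follows. `strip_sql_mode_flag` is a hypothetical helper. The usage comment assumes you then put the result under [mysqld] in /etc/my.cnf on all three database servers.

```shell
#!/usr/bin/env bash
# Remove one flag from a comma-separated sql_mode value, keeping the others in order.
# strip_sql_mode_flag is a hypothetical helper.
strip_sql_mode_flag() {
  local IFS=, m
  local -a kept=()
  for m in $1; do            # unquoted on purpose: split on commas
    [ "$m" = "$2" ] || kept+=("$m")
  done
  echo "${kept[*]}"          # joins with the first IFS character (a comma)
}

# Usage (lab credentials from this article):
#   current=$(mysql -uroot -p123456 -Nse 'SELECT @@GLOBAL.sql_mode')
#   echo "sql_mode = $(strip_sql_mode_flag "$current" STRICT_TRANS_TABLES)"
#   # put the printed line under [mysqld] in /etc/my.cnf, then restart mysqld
```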

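2. MyCat did not start at boot.

Cause: on CentOS 7, /etc/rc.d/rc.local must be given execute permission.

Many of us are used to putting boot-time services in /etc/rc.local, which works on CentOS 6. On CentOS 7, however, the entries in /etc/rc.local are not run unless the file is executable, which is why MyCat never started automatically. The comments in CentOS 7's /etc/rc.d/rc.local explain this: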

#It is highly advisable to create own systemd services or udev rules

#to run scripts during boot instead of using this file.

…

#Please note that you must run ‘chmod +x /etc/rc.d/rc.local’ to ensure

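In short: the file exists only for compatibility. Creating your own systemd services or udev rules is strongly recommended for running scripts at boot. You must run chmod +x /etc/rc.d/rc.local to make sure the script is executed at boot.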
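As the rc.local comments recommend, a systemd unit is the more robust way to start MyCat at boot. The sketch below only prints a unit file. It assumes MyCat lives in /usr/local/mycat, as installed above, and that `mycat start` puts the service in the background and returns, which is why Type=forking is used. `mycat_unit` is a hypothetical helper, and the unit is a starting point rather than a tested production unit.

```shell
#!/usr/bin/env bash
# Print a systemd unit for MyCat (hypothetical helper; paths as installed above).
mycat_unit() {
  cat <<'EOF'
[Unit]
Description=MyCat database middleware
After=network-online.target
Wants=network-online.target

[Service]
Type=forking
ExecStart=/usr/local/mycat/bin/mycat start
ExecStop=/usr/local/mycat/bin/mycat stop

[Install]
WantedBy=multi-user.target
EOF
}

# Usage on proxy_db (as root), replacing the rc.local entry:
#   mycat_unit > /etc/systemd/system/mycat.service
#   systemctl daemon-reload && systemctl enable --now mycat
```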

1.1 MySQL Master-Slave Replication Test

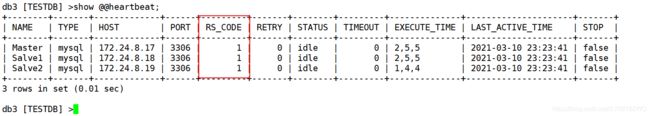

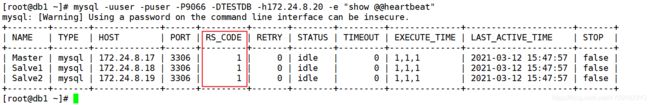

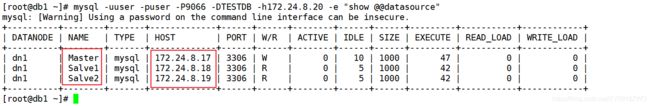

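1.2 MyCAT Read/Write Splitting Test

Check the MyCAT heartbeat

Check the MyCAT data sources

Check the MyCAT data nodes

2. Storage Server Tests

2.1 DRBD Test

Check the DRBD disk sync status

2.2 NFS Test

2.3 High-Availability Test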

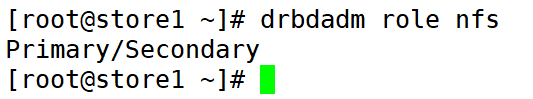

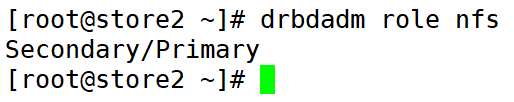

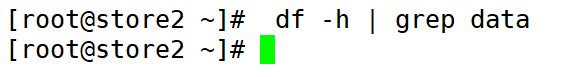

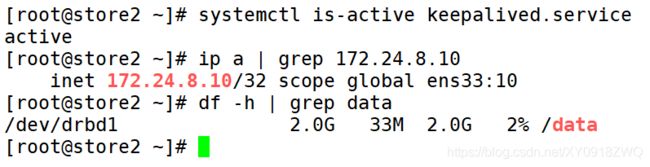

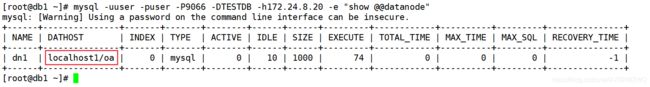

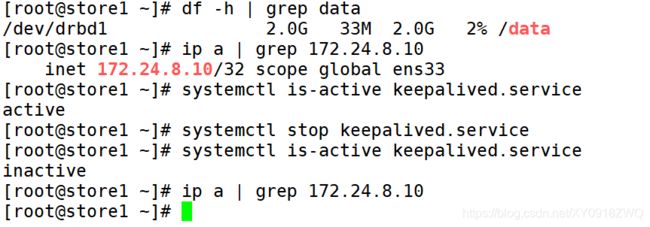

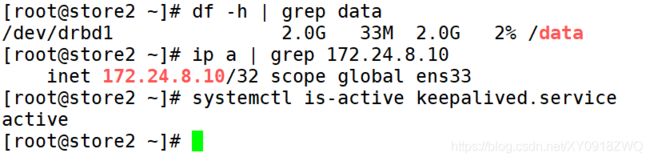

Check the VIP and the DRBD mount

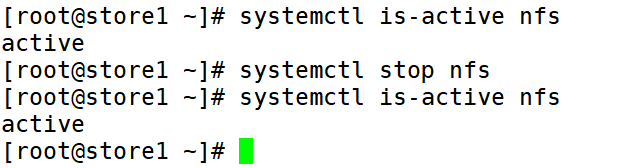

a. Test Keepalived's NFS Health Check

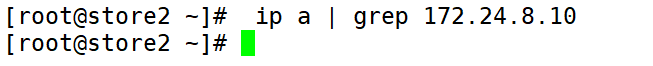

b. Test Keepalived Failover

Keepalived failure

store1

store2

Keepalived recovery

3. Application Server Tests

3.1 Application Servers Mount the Shared Storage

Application Servers Serve the Web Service (OA)

real_server1

Open in a browser: http://172.24.8.13

real_server2

Open in a browser: http://172.24.8.14

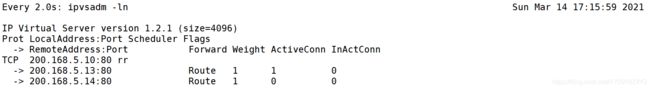

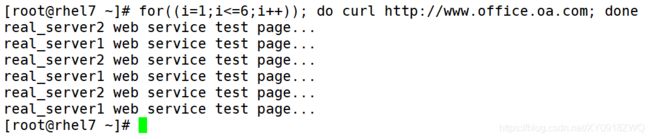

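4. Load Balancer Scheduling Algorithm Test

The scheduling algorithm used here is 1:1 round robin.

For this test, the shared storage is temporarily unmounted and each node serves a different page, so the scheduling order can be observed.

Add a hosts entry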

[root@rhel7 ~]# vim /etc/hosts +

200.168.5.10 www.office.oa.com

# Unmount the shared storage and create a custom test page

---real_server1

[root@real_server1 ~]# umount /usr/share/nginx/html

[root@real_server1 ~]# echo 'real_server1 web service test page...' > /usr/share/nginx/html/index.html

---real_server2

[root@real_server2 ~]# umount /usr/share/nginx/html

[root@real_server2 ~]# echo 'real_server2 web service test page...' > /usr/share/nginx/html/index.html

Access test

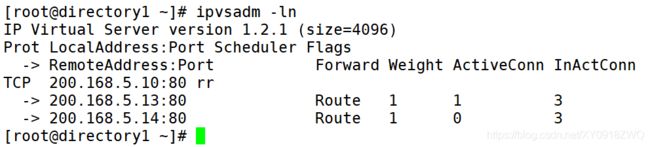

Access records on the load balancer
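To confirm the 1:1 split from the client side without counting by eye, you can tally which real server answered each request. The sketch assumes each response body starts with the node name, as the test pages created above do. `tally_backends` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Count responses per real server. Input: one response body per line, whose
# first word names the node (e.g. "real_server1 web service test page...").
# tally_backends is a hypothetical helper.
tally_backends() {
  awk '{ print $1 }' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage on the client (rhel7); with rr the two counts should be roughly equal:
#   for i in $(seq 1 10); do curl -s http://www.office.oa.com/; done | tally_backends
```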

When the test is finished, delete the index.html files and remount the shared storage

---real_server1

[root@real_server1 ~]# rm -f /usr/share/nginx/html/index.html

[root@real_server1 ~]# mount -a

[root@real_server1 ~]# df -h | grep data

172.24.8.15:/data 17G 1.7G 16G 10% /usr/share/nginx/html

---real_server2

[root@real_server2 ~]# rm -f /usr/share/nginx/html/index.html

[root@real_server2 ~]# mount -a

[root@real_server2 ~]# df -h | grep data

172.24.8.15:/data 17G 1.7G 16G 10% /usr/share/nginx/html

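5. Load Balancer Health Check Test

On the primary director, monitor ipvsadm -Ln continuously with watch (for example, watch -n 1 ipvsadm -Ln)

Simulate a failure of real_server1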

[root@real_server1 ~]# systemctl stop nginx

[root@real_server1 ~]# systemctl is-active nginx

inactive

[root@real_server1 ~]# systemctl start nginx

[root@real_server1 ~]# systemctl is-active nginx

active
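While real_server1 is down, its 200.168.5.13:80 line should disappear from `ipvsadm -Ln` and come back after nginx restarts. To check this from a script rather than by watching the screen, you can extract the real servers listed under a virtual service as shown below. The sketch assumes the usual `ipvsadm -Ln` layout. `ipvs_real_servers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the real servers listed under virtual service $1 (e.g. 200.168.5.10:80)
# in `ipvsadm -Ln` output read from stdin. Hypothetical helper.
ipvs_real_servers() {
  awk -v vs="$1" '
    $1 == "TCP" || $1 == "UDP" { cur = $2; next }   # a virtual-service line starts a block
    $1 == "->" && cur == vs    { print $2 }         # real-server lines in that block
  '
}

# Usage on the primary director:
#   ipvsadm -Ln | ipvs_real_servers 200.168.5.10:80
```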

6. Load Balancer High-Availability Test

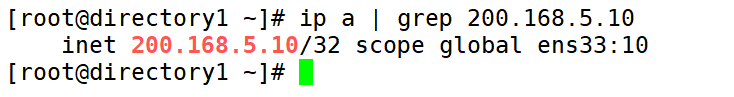

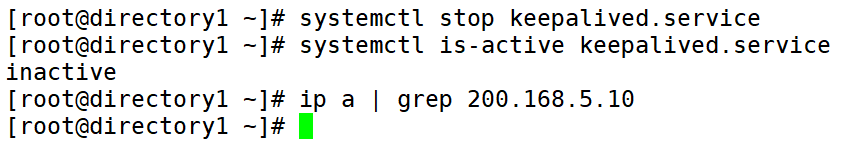

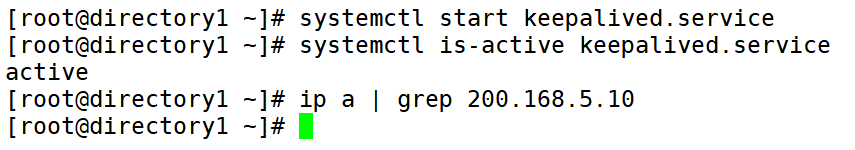

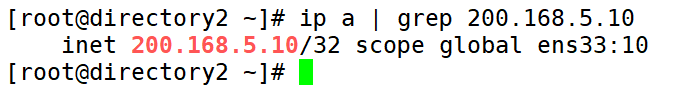

6.1 Keepalived on the Primary Director: Failover

Check the VIP

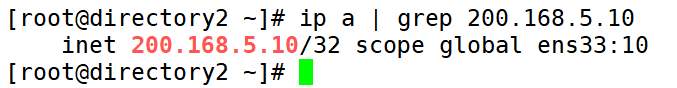
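During the failover test, the same VIP check can be run on each director from a script. Keepalived adds the VIP without a prefix length, so it appears as a /32 address on ens33. A minimal sketch, assuming `ip -4 -o addr show` output as input. `has_vip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Succeed if VIP $1 is configured on this host.
# Reads `ip -4 -o addr show` output on stdin; has_vip is a hypothetical helper.
has_vip() {
  awk -v vip="$1" '
    $3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }'
}

# Usage on directory1 / directory2:
#   ip -4 -o addr show | has_vip 200.168.5.10 && echo "this director holds the VIP"
```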

6.2 Keepalived on the Primary Director: Recovery Without Preemption

Simulate recovery of the primary node

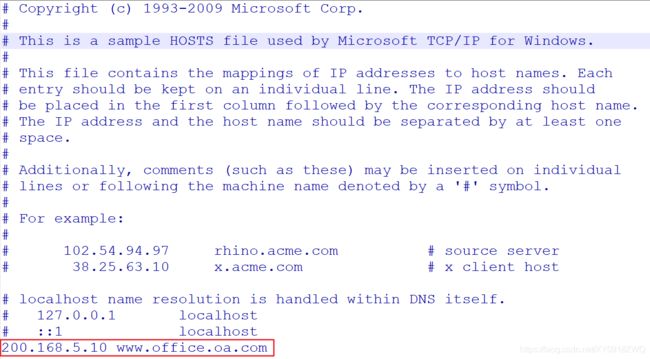

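7. Users Access the OA System

Add a hosts entry

On Windows clients, add the same entry (200.168.5.10 www.office.oa.com) to C:\Windows\System32\drivers\etc\hosts

User access

VI. Project Upgrade and Improvement Plan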

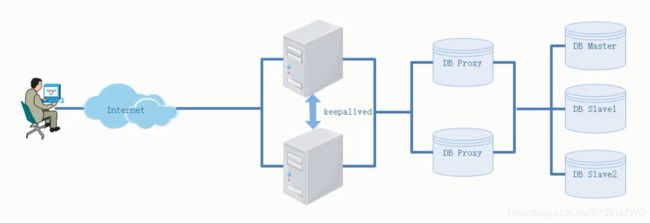

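The OA system's web and storage tiers are now load-balanced and highly available, but the back-end database proxy, MyCAT, is still a single point of failure. To remove it, MyCAT can be made highly available with the following architecture:

Front end: Nginx + Keepalived

Proxy: MyCAT

Database: master-slave replication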