Problems Encountered When Running YOLOv5 Object Detection and How to Fix Them

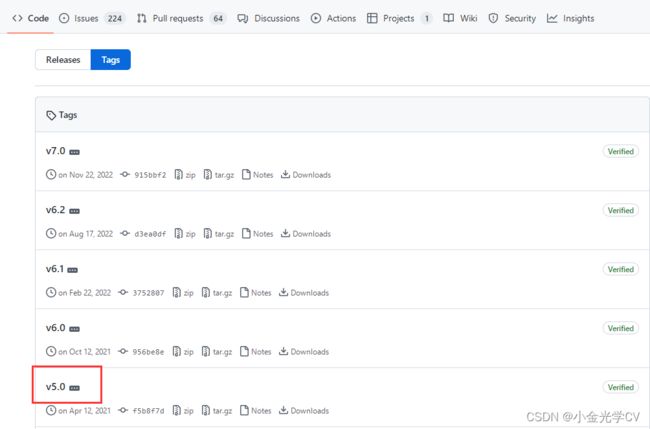

Here I downloaded YOLOv5 release v5.0.

Running detect.py raised an error:

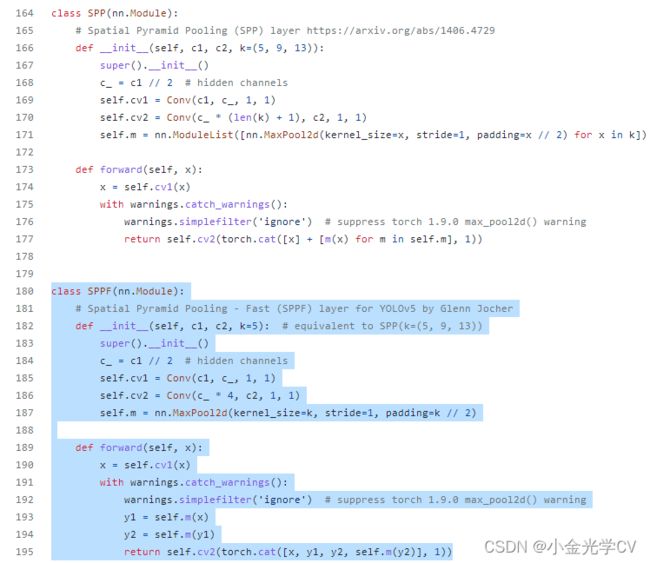

(1) AttributeError: Can't get attribute 'SPPF' on
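Why this error appears: a YOLOv5 `.pt` checkpoint stores the whole model object. `torch.load` restores it with Python's `pickle`, which looks up every layer class by module and name (here `models.common.SPPF`). If the local `models/common.py` has no class of that name, unpickling fails with this `AttributeError`. The stdlib-only sketch below reproduces the mechanism with a throwaway module. The module name `fake_common` is made up for illustration.

```python
import pickle
import sys
import types

# Throwaway module standing in for models/common.py ("fake_common" is a made-up name).
fake_common = types.ModuleType("fake_common")
sys.modules["fake_common"] = fake_common


class SPPF:
    """Stand-in for the real SPPF layer; only its name matters for pickling."""


# Pretend the class lives in fake_common, the way SPPF lives in models.common.
SPPF.__module__ = "fake_common"
SPPF.__qualname__ = "SPPF"
fake_common.SPPF = SPPF

# pickle records a reference "fake_common.SPPF", not the class definition itself.
blob = pickle.dumps(SPPF())

# Simulate loading with an older common.py that has no SPPF class.
del fake_common.SPPF

err = None
try:
    pickle.loads(blob)
except AttributeError as e:
    err = e
print(err)  # prints the "Can't get attribute 'SPPF' ..." message
```

This is why copying the class into `models/common.py` makes the lookup succeed again.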

Solution:

Find the SPPF class in models/common.py of the v6 release and copy it into models/common.py of your v5 code, so that your code also defines this class. Then add `import warnings` to common.py.

Just copy and paste the code below:

```python
import warnings  # add this near the other imports at the top of models/common.py

# nn, torch and Conv are already defined/imported in models/common.py


class SPPF(nn.Module):
    # Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocher
    def __init__(self, c1, c2, k=5):  # equivalent to SPP(k=(5, 9, 13))
        super().__init__()
        c_ = c1 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c_ * 4, c2, 1, 1)
        self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

    def forward(self, x):
        x = self.cv1(x)
        with warnings.catch_warnings():
            warnings.simplefilter('ignore')  # suppress torch 1.9.0 max_pool2d() warning
            y1 = self.m(x)
            y2 = self.m(y1)
            return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))
```
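The comment `equivalent to SPP(k=(5, 9, 13))` can be checked in isolation. The sketch below uses plain PyTorch, independent of the YOLOv5 code, and the tensor size is arbitrary. It compares three parallel max pools of size 5, 9 and 13 (SPP style) with one 5x5 pool applied once, twice and three times, as `SPPF.forward` does.

```python
import torch
import torch.nn as nn


def pool(k):
    # Stride-1 max pool with "same" padding, as used by SPP/SPPF.
    return nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)


x = torch.randn(1, 8, 20, 20)

# SPP style: three independent pools with kernel sizes 5, 9 and 13.
spp = [pool(k)(x) for k in (5, 9, 13)]

# SPPF style: a single 5x5 pool applied repeatedly (y1, y2, y3).
m = pool(5)
y1 = m(x)
y2 = m(y1)
y3 = m(y2)

# Chaining 5x5 windows grows the window to 9x9 and then 13x13. MaxPool2d pads
# implicitly with -inf, so the padding never wins the max and the results match.
matches = [torch.equal(a, b) for a, b in zip(spp, (y1, y2, y3))]
print(matches)  # [True, True, True]
```

Reusing one small pool is why SPPF is cheaper than SPP while producing the same features.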

(2) RuntimeError: The size of tensor a (80) must match the size of tensor b (56) at non-singleton dimension 3

Solution:

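This happens because the model differs between releases. For example, if you run v5.0 but use the latest yolov5s.pt, which is what gets downloaded by default (v6.1 at the time of writing), you get this error. Download the weights from the assets of the release you are actually running instead.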
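GitHub serves release assets from `https://github.com/<owner>/<repo>/releases/download/<tag>/<file>`. Below is a minimal sketch that builds the URL for the weights matching your code. It assumes the weights are attached to the `ultralytics/yolov5` release whose tag matches your checkout; check the release page for the exact file names. The helper name `release_weights_url` is hypothetical.

```python
def release_weights_url(tag: str, weights: str = "yolov5s.pt") -> str:
    """Build the download URL of a weights file attached to a YOLOv5 GitHub release."""
    return f"https://github.com/ultralytics/yolov5/releases/download/{tag}/{weights}"


# Weights matching the v5.0 code used in this post.
print(release_weights_url("v5.0"))
# https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt
```

After downloading the file, pass it to detect.py with its `--weights` option so the matching checkpoint is used.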

(3) OSError: [WinError 1455] The paging file is too small for this operation to complete. Error loading "xxx\caffe2_detectron_ops_gpu.dll" or one of its dependencies.

Solution:

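Hold Ctrl and click `data_loader` to jump to its source, a file named data_processor.py. There you will find the call to `torch.utils.data.DataLoader()`; change its `num_workers` argument to 0. If the GPU runs out of memory, reduce the batch size.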
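For reference, `num_workers` and `batch_size` are ordinary arguments of `torch.utils.data.DataLoader`. With `num_workers=0`, data is loaded in the main process and no worker processes are started. On Windows each worker is a separately spawned process that imports PyTorch again, which is a plausible reason the paging file runs out. The random dataset below is a made-up stand-in for the real one.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy dataset standing in for the real image dataset.
dataset = TensorDataset(torch.randn(64, 3, 32, 32), torch.randint(0, 2, (64,)))

loader = DataLoader(
    dataset,
    batch_size=8,   # lower this if the GPU runs out of memory
    shuffle=True,
    num_workers=0,  # load batches in the main process; no worker processes are spawned
)

images, labels = next(iter(loader))
print(images.shape)  # torch.Size([8, 3, 32, 32])
```

In YOLOv5's own train.py, the same two settings are exposed as the `--workers` and `--batch-size` command-line options, which avoids editing library source.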

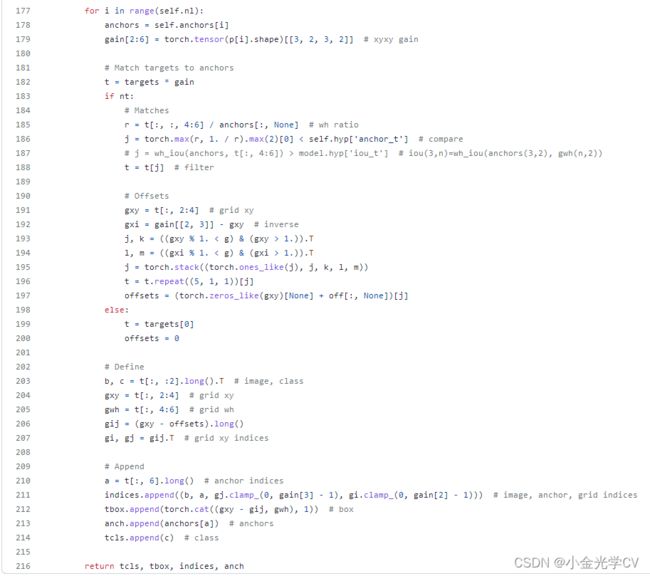

(4) RuntimeError: result type Float can't be cast to the desired output type __int64

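Solution:

In v5.0, find the final `for` loop in utils/loss.py, where the error is raised, and replace it entirely with the final `for` loop of loss.py from yolov5-master. The code then runs normally.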

Copy the `for` loop below over the final `for` loop in utils/loss.py.


```python
# Replaces the final `for` loop (and the `return`) of ComputeLoss.build_targets()
# in utils/loss.py; indent it to match the surrounding method body.
for i in range(self.nl):
    anchors, shape = self.anchors[i], p[i].shape
    gain[2:6] = torch.tensor(shape)[[3, 2, 3, 2]]  # xyxy gain

    # Match targets to anchors
    t = targets * gain  # shape(3,n,7)
    if nt:
        # Matches
        r = t[..., 4:6] / anchors[:, None]  # wh ratio
        j = torch.max(r, 1 / r).max(2)[0] < self.hyp['anchor_t']  # compare
        # j = wh_iou(anchors, t[:, 4:6]) > model.hyp['iou_t']  # iou(3,n)=wh_iou(anchors(3,2), gwh(n,2))
        t = t[j]  # filter

        # Offsets
        gxy = t[:, 2:4]  # grid xy
        gxi = gain[[2, 3]] - gxy  # inverse
        j, k = ((gxy % 1 < g) & (gxy > 1)).T
        l, m = ((gxi % 1 < g) & (gxi > 1)).T
        j = torch.stack((torch.ones_like(j), j, k, l, m))
        t = t.repeat((5, 1, 1))[j]
        offsets = (torch.zeros_like(gxy)[None] + off[:, None])[j]
    else:
        t = targets[0]
        offsets = 0

    # Define
    bc, gxy, gwh, a = t.chunk(4, 1)  # (image, class), grid xy, grid wh, anchors
    a, (b, c) = a.long().view(-1), bc.long().T  # anchors, image, class
    gij = (gxy - offsets).long()
    gi, gj = gij.T  # grid indices

    # Append (shape[2] - 1 and shape[3] - 1 are Python ints)
    indices.append((b, a, gj.clamp_(0, shape[2] - 1), gi.clamp_(0, shape[3] - 1)))  # image, anchor, grid
    tbox.append(torch.cat((gxy - gij, gwh), 1))  # box
    anch.append(anchors[a])  # anchors
    tcls.append(c)  # class

return tcls, tbox, indices, anch
```
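Why swapping the loop helps, as far as I can tell from the v5.0 source: its `# Append` step clamps the int64 grid indices with `gain[3] - 1` and `gain[2] - 1`, where `gain` is a float tensor. The master loop instead uses `shape[2] - 1` and `shape[3] - 1`, which are plain Python ints. Newer PyTorch versions apply type promotion to `clamp_`, and a float result cannot be written back into an int64 tensor in place, which fits the error message. The sketch below isolates that difference. All tensors and the `shape` value are made up for illustration, and whether the first call fails depends on your PyTorch version.

```python
import torch

# Made-up int64 grid indices, like gj/gi in build_targets.
gj = torch.tensor([-3, 5, 90])

# v5.0 style: the upper bound is a float tensor, because gain is float.
gain = torch.ones(7)
gain[2:6] = torch.tensor([80.0, 80.0, 80.0, 80.0])  # e.g. an 80x80 feature map
try:
    gj.clone().clamp_(0, gain[3] - 1)
    print("this PyTorch version accepts a float bound here")
except (RuntimeError, TypeError) as e:
    print("error:", e)

# master style: the bound comes from p[i].shape, i.e. a plain Python int.
shape = torch.Size([1, 3, 80, 80, 85])  # hypothetical (batch, anchors, ny, nx, outputs)
fixed = gj.clone().clamp_(0, shape[2] - 1)
print(fixed.tolist(), fixed.dtype)  # [0, 5, 79] torch.int64
```

An integer bound keeps the clamped indices as int64, which is what the later indexing in the loss expects.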