K8s NetworkPolicy, LimitRange and ResourceQuota in detail; running ZooKeeper, MySQL and Jenkins on K8s; monitoring K8s clusters and applications with Prometheus

1. Use a NetworkPolicy so that no pod in the magedu namespace can access other namespaces (pods may only access the pods in their own namespace).

#Create two Deployments in the default namespace: centos7-default and nginx1-default

root@k8s-master1:~/20230328# vim centos7-default.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: centos7-default
  name: centos7-default
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: centos7-default
  template:
    metadata:
      labels:
        app: centos7-default
    spec:
      containers:
      - image: centos:centos7.9.2009
        name: centos
        command:
        - sleep
        - "50000000"

root@k8s-master1:~/20230328# kubectl apply -f centos7-default.yaml

deployment.apps/centos7-default created

root@k8s-master1:~/20230328# kubectl create deploy nginx1-default --image=nginx

deployment.apps/nginx1-default created

#List the pods created in the default namespace

root@k8s-master1:~/20230328# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

centos7-default-7cff9984c9-t7sdp 1/1 Running 0 9m50s app=centos7-default,pod-template-hash=7cff9984c9

nginx1-default-76d65dfb67-gsdm5 1/1 Running 0 15s app=nginx1-default,pod-template-hash=76d65dfb67

root@k8s-master1:~/20230328# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos7-default-7cff9984c9-t7sdp 1/1 Running 0 10m 10.200.218.71 192.168.7.113 <none> <none>

nginx1-default-76d65dfb67-gsdm5 1/1 Running 0 75s 10.200.151.200 192.168.7.112 <none> <none>

#Create the magedu namespace and create two Deployments in it

root@k8s-master1:~/20230328# cat centos7-magedu.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: centos7-magedu
  name: centos7-magedu
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: centos7-magedu
  template:
    metadata:
      labels:
        app: centos7-magedu
    spec:
      containers:
      - image: centos:centos7.9.2009
        name: centos
        command:
        - sleep
        - "50000000"

root@k8s-master1:~/20230328# kubectl apply -f centos7-magedu.yaml

deployment.apps/centos7-magedu created

root@k8s-master1:~/20230328# kubectl create deploy nginx2-magedu --image=nginx --namespace magedu

deployment.apps/nginx2-magedu created

#List the resources created in the magedu namespace

root@k8s-master1:~/20230328# kubectl get pods -n magedu --show-labels

NAME READY STATUS RESTARTS AGE LABELS

centos7-magedu-bc6b4665f-9g6zh 1/1 Running 0 25m app=centos7-magedu,pod-template-hash=bc6b4665f

nginx2-magedu-5ddc8898d6-v98v4 1/1 Running 0 7m54s app=nginx2-magedu,pod-template-hash=5ddc8898d6

root@k8s-master1:~/20230328# kubectl get pods -n magedu -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos7-magedu-bc6b4665f-9g6zh 1/1 Running 0 24m 10.200.218.1 192.168.7.111 <none> <none>

nginx2-magedu-5ddc8898d6-v98v4 1/1 Running 0 7m45s 10.200.151.199 192.168.7.112 <none> <none>

#From the centos7-default pod in the default namespace, access the nginx2-magedu pod in the magedu namespace: access succeeds

root@k8s-master1:~/20230328# kubectl exec -it centos7-default-7cff9984c9-t7sdp bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@centos7-default-7cff9984c9-t7sdp /]# curl 10.200.151.199

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

#From the centos7-magedu pod in the magedu namespace, access the nginx1-default pod in the default namespace: access succeeds

root@k8s-master1:~/20230328# kubectl exec -it centos7-magedu-bc6b4665f-9g6zh bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@centos7-magedu-bc6b4665f-9g6zh /]# curl 10.200.151.200

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

#Create the NetworkPolicy

root@k8s-master1:~# vi Egress-magedu.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-access-networkpolicy
  namespace: magedu
spec:
  policyTypes:
  - Egress
  podSelector:
    matchLabels: {}
  egress:
  - to:
    - podSelector:
        matchLabels: {}

root@k8s-master1:~/20230328# kubectl apply -f Egress-magedu.yaml

networkpolicy.networking.k8s.io/egress-access-networkpolicy created

#Check the NetworkPolicy that was just created

root@k8s-master1:~/20230328# kubectl get networkpolicy -n magedu

NAME POD-SELECTOR AGE

egress-access-networkpolicy <none> 17s

#List the pods in the default and magedu namespaces

root@k8s-master1:~/20230328# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos7-default-7cff9984c9-t7sdp 1/1 Running 0 3h46m 10.200.218.71 192.168.7.113

nginx1-default-76d65dfb67-gsdm5 1/1 Running 0 3h36m 10.200.151.200 192.168.7.112

root@k8s-master1:~/20230328# kubectl get pods -n magedu -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

centos7-magedu-bc6b4665f-9g6zh 1/1 Running 0 4h7m 10.200.218.1 192.168.7.111

nginx2-magedu-5ddc8898d6-v98v4 1/1 Running 0 3h50m 10.200.151.199 192.168.7.112

#A pod in default accesses a pod in magedu: access still succeeds

root@k8s-master1:~/20230328# kubectl exec -it centos7-default-7cff9984c9-t7sdp bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@centos7-default-7cff9984c9-t7sdp /]# curl 10.200.151.199

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

#A pod in magedu accesses a pod in default: access fails (curl hangs until interrupted)

root@k8s-master1:~/20230328# kubectl exec -it centos7-magedu-bc6b4665f-9g6zh bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@centos7-magedu-bc6b4665f-9g6zh /]# curl 10.200.151.200

^C

##A pod in magedu accesses a pod in the same namespace: access succeeds

root@k8s-master1:~/20230328# kubectl exec -it centos7-magedu-bc6b4665f-9g6zh bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@centos7-magedu-bc6b4665f-9g6zh /]# curl 10.200.151.199

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

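Summary: once the NetworkPolicy takes effect, pods in other namespaces can still access services in the magedu namespace, but pods in magedu can no longer access services outside their own namespace.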
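One side effect of an egress rule that only allows same-namespace pods: DNS queries from magedu pods to the cluster DNS service are normally dropped as well, so lookups by service name stop working (the tests above use pod IPs, which hides this). The following is a minimal sketch of a companion policy that additionally allows DNS traffic. It assumes the cluster DNS pods run in kube-system and that namespaces carry the automatic kubernetes.io/metadata.name label; the policy name and the file path are hypothetical. NetworkPolicies are additive, so it can be applied alongside egress-access-networkpolicy.

```shell
#!/usr/bin/env bash
# Sketch: write a NetworkPolicy that keeps magedu pods inside their namespace
# but still lets them reach cluster DNS. Names and paths are assumptions.
manifest="$(mktemp -d)/egress-magedu-dns.yaml"

cat > "$manifest" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-same-ns-and-dns   # hypothetical name
  namespace: magedu
spec:
  policyTypes:
  - Egress
  podSelector: {}                # applies to every pod in magedu
  egress:
  - to:
    - podSelector: {}            # any pod in the same namespace
  - to:
    - namespaceSelector:         # assumed location of the cluster DNS pods
        matchLabels:
          kubernetes.io/metadata.name: kube-system
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53
EOF

echo "wrote $manifest"
# Apply it on a cluster with:
#   kubectl apply -f "$manifest"
```

If the cluster uses a node-local DNS cache or a DNS service outside kube-system, the second rule would need to be adjusted accordingly.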

2. Deploy a ZooKeeper cluster on Kubernetes and persist its data using NFS, a StorageClass, or a similar mechanism.

#Pull the JDK 8 image

root@k8s-master1:/opt/k8s-data# docker pull elevy/slim_java:8

8: Pulling from elevy/slim_java

88286f41530e: Pull complete

7141511c4dad: Pull complete

fd529fe251b3: Pull complete

Digest: sha256:044e42fb89cda51e83701349a9b79e8117300f4841511ed853f73caf7fc98a51

Status: Downloaded newer image for elevy/slim_java:8

docker.io/elevy/slim_java:8

#Retag the image for the local registry

root@k8s-master1:/opt/k8s-data# docker tag docker.io/elevy/slim_java:8 harbor.magedu.net/baseimages/slim_java:8

root@k8s-master1:/opt/k8s-data# docker login harbor.magedu.net

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

#Push it to the local image registry

root@k8s-master1:/opt/k8s-data# docker push harbor.magedu.net/baseimages/slim_java:8

The push refers to repository [harbor.magedu.net/baseimages/slim_java]

e053edd72ca6: Pushed

aba783efb1a4: Pushed

5bef08742407: Pushed

8: digest: sha256:817d0af5d4f16c29509b8397784f5d4ec3accb1bfde4e474244ed3be7f41a604 size: 952

##Update the base image address in the Dockerfile

root@k8s-master1:/opt/k8s-data# cd dockerfile/web/magedu/zookeeper/

FROM harbor.magedu.net/baseimages/slim_java:8

##Update the image address in the build script

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/zookeeper# vi build-command.sh

docker build -t harbor.magedu.net/magedu/zookeeper:${TAG} .

docker push harbor.magedu.net/magedu/zookeeper:${TAG}

##Build and push the image

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/zookeeper# bash build-command.sh v3.4.14

5bef08742407: Mounted from baseimages/slim_java

v3.4.14: digest: sha256:f10eb1634d0d2d5eae520c0b9b170c00ef9a209c3b614139bc3207073509987c size: 2621

#Test the image

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/zookeeper# docker run -it --rm harbor.magedu.net/magedu/zookeeper:v3.4.14

2023-03-28 14:28:46,666 [myid:] - INFO [main:ServerCnxnFactory@117] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory

2023-03-28 14:28:46,679 [myid:] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181

##On the NFS server, create the data directories for the ZooKeeper PVs

root@haproxy1:~# mkdir -p /data/k8sdata/magedu/zookeeper-datadir-1

root@haproxy1:~# mkdir -p /data/k8sdata/magedu/zookeeper-datadir-2

root@haproxy1:~# mkdir -p /data/k8sdata/magedu/zookeeper-datadir-3

##Configure the NFS export

root@haproxy1:~# vi /etc/exports

/data/k8sdata *(rw,no_root_squash,no_subtree_check)

##Reload the NFS export configuration

root@haproxy1:~# exportfs -r

##Test the NFS service from master1

root@k8s-master1:~# showmount -e 172.31.7.109

Export list for 172.31.7.109:

/data/k8sdata *

#Create the PVs and PVCs

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper/pv# kubectl apply -f .

persistentvolume/zookeeper-datadir-pv-1 created

persistentvolume/zookeeper-datadir-pv-2 created

persistentvolume/zookeeper-datadir-pv-3 created

persistentvolumeclaim/zookeeper-datadir-pvc-1 created

persistentvolumeclaim/zookeeper-datadir-pvc-2 created

persistentvolumeclaim/zookeeper-datadir-pvc-3 created

#Check the PVCs that were created

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper/pv# kubectl get pvc -n magedu

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

zookeeper-datadir-pvc-1 Bound zookeeper-datadir-pv-1 20Gi RWO 47s

zookeeper-datadir-pvc-2 Bound zookeeper-datadir-pv-2 20Gi RWO 47s

zookeeper-datadir-pvc-3 Bound zookeeper-datadir-pv-3 20Gi RWO 47s
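The manifests under pv/ are not shown here. As a rough sketch of what such statically provisioned, NFS-backed PV/PVC pairs might look like, the script below generates one file per ZooKeeper node. The object names, 20Gi capacity and RWO access mode are taken from the output above, and the NFS server address and paths from the showmount and mkdir steps; the reclaim policy, the empty storageClassName, the volumeName binding and the output file names are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: generate static NFS PV/PVC manifests for three ZooKeeper nodes.
outdir="$(mktemp -d)"
nfs_server="172.31.7.109"   # NFS server address as used with showmount above

for i in 1 2 3; do
cat > "${outdir}/zookeeper-pv-pvc-${i}.yaml" <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-${i}
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # assumption
  nfs:
    server: ${nfs_server}
    path: /data/k8sdata/magedu/zookeeper-datadir-${i}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-${i}
  namespace: magedu
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: ""                    # assumption: skip any default StorageClass
  volumeName: zookeeper-datadir-pv-${i}   # assumption: bind to the matching PV
  resources:
    requests:
      storage: 20Gi
EOF
done

ls "$outdir"
# Apply on a cluster with:
#   kubectl apply -f "$outdir"
```

Pinning each claim to its volume with volumeName keeps the pairing between claim N and directory N predictable, which matters when each ZooKeeper node stores its own myid in its data directory.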

#Update the ZooKeeper image address

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# vim zookeeper.yaml

image: harbor.magedu.net/magedu/zookeeper:v3.4.14

#Deploy ZooKeeper

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl apply -f zookeeper.yaml

service/zookeeper created

service/zookeeper1 created

service/zookeeper2 created

service/zookeeper3 created

deployment.apps/zookeeper1 created

deployment.apps/zookeeper2 created

deployment.apps/zookeeper3 created

#Check the ZooKeeper pods and services that were created

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl get pod,svc -n magedu

NAME READY STATUS RESTARTS AGE

pod/zookeeper1-6c75b979c6-r6txt 1/1 Running 0 113s

pod/zookeeper2-7b899bbf59-zhd25 1/1 Running 0 113s

pod/zookeeper3-7ddb69695d-gf8gp 1/1 Running 0 113s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/zookeeper ClusterIP 10.100.70.62 <none> 2181/TCP 114s

service/zookeeper1 NodePort 10.100.81.96 <none> 2181:32181/TCP,2888:43358/TCP,3888:31343/TCP 114s

service/zookeeper2 NodePort 10.100.52.199 <none> 2181:32182/TCP,2888:32121/TCP,3888:34179/TCP 114s

service/zookeeper3 NodePort 10.100.168.98 <none> 2181:32183/TCP,2888:32821/TCP,3888:50631/TCP 113s

#Verify the ZooKeeper cluster state; the roles below (two followers, one leader) show that leader election has completed

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl -n magedu exec -it zookeeper1-6c75b979c6-r6txt -- /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl -n magedu exec -it zookeeper2-7b899bbf59-zhd25 -- /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl -n magedu exec -it zookeeper3-7ddb69695d-gf8gp -- /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader
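The three status checks above can be condensed into a single pass. The helper below is only a sketch: count_zk_modes is a hypothetical function name, and it simply counts the "Mode:" lines that zkServer.sh status prints. A healthy three-node ensemble should report one leader and two followers.

```shell
#!/usr/bin/env bash
# Sketch: summarise "Mode:" lines from concatenated zkServer.sh status output.
count_zk_modes() {
  awk '/^Mode:/ { count[$2]++ }
       END { printf "leader=%d follower=%d\n", count["leader"], count["follower"] }'
}

# Demo using the Mode lines captured in the transcript above.
printf 'Mode: follower\nMode: follower\nMode: leader\n' | count_zk_modes

# On a live cluster the input could come from the pods directly, for example:
#   for pod in $(kubectl -n magedu get pods -o name | grep zookeeper); do
#     kubectl -n magedu exec "$pod" -- /zookeeper/bin/zkServer.sh status 2>/dev/null
#   done | count_zk_modes
```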

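3. Deploy MySQL on Kubernetes as a StatefulSet with one master and multiple slaves, and persist its data using NFS, a StorageClass, or a similar mechanism.

3.1 Prepare the base images

#Pull the MySQL image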

root@k8s-master1:~# docker pull mysql:5.7.36

#Retag the image

root@k8s-master1:~# docker tag mysql:5.7.36 harbor.magedu.net/magedu/mysql:5.7.36

#Push it to the local image registry

root@k8s-master1:~# docker push harbor.magedu.net/magedu/mysql:5.7.36

#Pull the xtrabackup image

root@k8s-master1:~# docker pull zhangshijie/xtrabackup:1.0

#Retag the image

root@k8s-master1:~# docker tag zhangshijie/xtrabackup:1.0 harbor.magedu.net/magedu/xtrabackup:1.0

#Push it to the local image registry

root@k8s-master1:~# docker push harbor.magedu.net/magedu/xtrabackup:1.0

3.2 Create the MySQL storage

#On the NFS server, create the MySQL data directories

root@haproxy1:~# mkdir -p /data/k8sdata/magedu/mysql-datadir-{1..6}

#Change to the MySQL deployment directory

root@k8s-master1:~# cd /opt/k8s-data/yaml/magedu/mysql/

#Create the PVs

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl apply -f pv/

persistentvolume/mysql-datadir-1 created

persistentvolume/mysql-datadir-2 created

persistentvolume/mysql-datadir-3 created

persistentvolume/mysql-datadir-4 created

persistentvolume/mysql-datadir-5 created

persistentvolume/mysql-datadir-6 created

#Check the PVs

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

mysql-datadir-1 50Gi RWO Retain Available 18s

mysql-datadir-2 50Gi RWO Retain Available 18s

mysql-datadir-3 50Gi RWO Retain Available 18s

mysql-datadir-4 50Gi RWO Retain Available 18s

mysql-datadir-5 50Gi RWO Retain Available 18s

mysql-datadir-6 50Gi RWO Retain Available 18s

3.3 Deploy MySQL

#Update the image addresses

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# grep -n "image" mysql-statefulset.yaml

19: image: harbor.magedu.net/magedu/mysql:5.7.36

43: image: harbor.magedu.net/magedu/xtrabackup:1.0

67: image: harbor.magedu.net/magedu/mysql:5.7.36

98: image: harbor.magedu.net/magedu/xtrabackup:1.0

#Create the MySQL StatefulSet, ConfigMap and Services

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl apply -f .

configmap/mysql created

service/mysql created

service/mysql-read created

statefulset.apps/mysql created

#Check the resulting pods and services

root@k8s-master1:/opt/k8s-data/yaml/magedu/zookeeper# kubectl get pod,svc -n magedu

NAME READY STATUS RESTARTS AGE

pod/mysql-0 2/2 Running 0 9m11s

pod/mysql-1 2/2 Running 1 (7m8s ago) 7m55s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql ClusterIP None <none> 3306/TCP 9m12s

service/mysql-read ClusterIP 10.100.163.220 <none> 3306/TCP 9m12s

3.4 Verification

#Change the replica count to 3

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# vi mysql-statefulset.yaml

replicas: 3

#Apply the updated StatefulSet

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl apply -f mysql-statefulset.yaml

statefulset.apps/mysql configured

#Check the pods after applying

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl get pod -n magedu

NAME READY STATUS RESTARTS AGE

mysql-0 2/2 Running 0 19m

mysql-1 2/2 Running 1 (17m ago) 17m

mysql-2 2/2 Running 1 (65s ago) 2m15s

#Check the status of the master node

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-0 bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

root@mysql-0:/# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 817

Server version: 5.7.36-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show master status \G;

*************************** 1. row ***************************

File: mysql-0-bin.000003

Position: 154

Binlog_Do_DB:

Binlog_Ignore_DB:

Executed_Gtid_Set:

1 row in set (0.00 sec)

ERROR:

No query specified

#Check the status of the slave nodes mysql-1 and mysql-2

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-1 bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

root@mysql-1:/# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 828

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-0.mysql

Master_User: root

Master_Port: 3306

Connect_Retry: 10

Master_Log_File: mysql-0-bin.000003

Read_Master_Log_Pos: 154

Relay_Log_File: mysql-1-relay-bin.000002

Relay_Log_Pos: 322

Relay_Master_Log_File: mysql-0-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 154

Relay_Log_Space: 531

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 100

Master_UUID: 59d3b5ec-cefe-11ed-8c8b-3ee650365657

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.00 sec)

ERROR:

No query specified

mysql> exit

Bye

root@mysql-1:/# exit

exit

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-2 bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

root@mysql-2:/# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 287

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show slave status \G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-0.mysql

Master_User: root

Master_Port: 3306

Connect_Retry: 10

Master_Log_File: mysql-0-bin.000003

Read_Master_Log_Pos: 154

Relay_Log_File: mysql-2-relay-bin.000002

Relay_Log_Pos: 322

Relay_Master_Log_File: mysql-0-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 154

Relay_Log_Space: 531

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 100

Master_UUID: 59d3b5ec-cefe-11ed-8c8b-3ee650365657

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.01 sec)

ERROR:

No query specified
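In the long status listings above, the two fields that matter most are Slave_IO_Running and Slave_SQL_Running: replication is only working when both report Yes. As a small sketch (replica_healthy is a hypothetical helper name, not part of MySQL or kubectl), this check can be scripted by filtering the output of SHOW SLAVE STATUS\G:

```shell
#!/usr/bin/env bash
# Sketch: succeed only if both replication threads report "Yes".
# Reads `SHOW SLAVE STATUS\G` output on stdin.
replica_healthy() {
  awk -F': *' '
    $1 ~ /Slave_IO_Running$/  { io = $2 }
    $1 ~ /Slave_SQL_Running$/ { sql = $2 }
    END { exit (io == "Yes" && sql == "Yes") ? 0 : 1 }
  '
}

# Demo using lines taken from the transcript above.
sample='Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates'

if printf '%s\n' "$sample" | replica_healthy; then
  echo "replication healthy"
else
  echo "replication broken"
fi

# On a live cluster the input could come from a slave pod, for example:
#   kubectl -n magedu exec mysql-1 -c mysql -- mysql -e 'SHOW SLAVE STATUS\G' | replica_healthy
```

Running the statement with -e and \G but without a trailing semicolon also avoids the harmless "ERROR: No query specified" message seen in the interactive sessions, which is caused by the extra ";" after \G.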

#Create a new database on the master node and check whether it is replicated to the slaves

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-0 bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

root@mysql-0:/# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 927

Server version: 5.7.36-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database magedu;

Query OK, 1 row affected (0.03 sec)

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| magedu |

| mysql |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.02 sec)

#On a slave, confirm that the database has been replicated

root@k8s-master1:/opt/k8s-data/yaml/magedu/mysql# kubectl exec -it mysql-1 bash -n magedu

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

root@mysql-1:/# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 922

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| magedu |

| mysql |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.03 sec)

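4. Run Jenkins, a monolithic Java service, on Kubernetes (build your own image or use the official one), and run an LNMP-based WordPress as multiple containers in a single Pod (build your own images or use the official ones), using the MySQL deployed on K8s in the previous step as the database.

4.1 Run the monolithic Java service Jenkins

4.1.1 Build the base images

#Change to the centos directory to build the image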

root@k8s-master1:~# cd /opt/k8s-data/dockerfile/system/centos/

#Update the image registry address in the build script

root@k8s-master1:/opt/k8s-data/dockerfile/system/centos# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh
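A note on the sed expression used throughout this section: an unescaped "." in the pattern matches any character, so the command works here but is looser than intended. The sketch below shows the same substitution with the dots escaped, run against a throwaway file; the image name in the sample line is a hypothetical stand-in for whatever the real build-command.sh contains.

```shell
#!/usr/bin/env bash
# Sketch: registry rewrite with literal dots, demonstrated on a temporary file.
workdir="$(mktemp -d)"
script="$workdir/build-command.sh"

# Hypothetical stand-in for a line in the real build-command.sh.
printf '%s\n' 'docker build -t harbor.linuxarchitect.io/baseimages/example:v1 .' > "$script"

# \. matches a literal dot; -i.bak edits in place and keeps a backup copy.
sed -i.bak -e 's/harbor\.linuxarchitect\.io/harbor.magedu.net/g' "$script"

cat "$script"

# Confirm that no reference to the old registry is left behind.
if grep -q 'harbor\.linuxarchitect\.io' "$script"; then
  echo "old registry still referenced"
else
  echo "no old registry references left"
fi
```

A final grep like this is a cheap safeguard before running the build, since a missed reference only surfaces later as a failed pull or push.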

#Build the centos7 image

root@k8s-master1:/opt/k8s-data/dockerfile/system/centos# bash build-command.sh

#Change to the JDK image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/system/centos# cd ../../web/pub-images/jdk-1.8.212/

#Update the image registry address

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh Dockerfile

#Build the JDK image

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# bash build-command.sh

#Change to the Tomcat image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/jdk-1.8.212# cd ../tomcat-base-8.5.43/

#Update the image registry address

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh

#Build the Tomcat image

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# bash build-command.sh

#Change to the Jenkins image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/tomcat-base-8.5.43# cd ../../magedu/jenkins/

#Update the image registry address

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/jenkins# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh

#Build the Jenkins image

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/jenkins# bash build-command.sh

#Test the image

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/jenkins# docker run -it --rm -p 8080:8080 harbor.magedu.net/magedu/jenkins:v2.319.2

Running from: /apps/jenkins/jenkins.war

2023-03-31 14:32:11.493+0000 [id=1] INFO org.eclipse.jetty.util.log.Log#initialized: Logging initialized @780ms to org.eclipse.jetty.util.log.JavaUtilLog

2023-03-31 14:32:11.700+0000 [id=1] INFO winstone.Logger#logInternal: Beginning extraction from war file

2023-03-31 14:32:14.669+0000 [id=1] WARNING o.e.j.s.handler.ContextHandler#setContextPath: Empty contextPath

2023-03-31 14:32:14.883+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: jetty-9.4.43.v20210629; built: 2021-06-30T11:07:22.254Z; git: 526006ecfa3af7f1a27ef3a288e2bef7ea9dd7e8; jvm 1.8.0_212-b10

2023-03-31 14:32:16.089+0000 [id=1] INFO o.e.j.w.StandardDescriptorProcessor#visitServlet: NO JSP Support for /, did not find org.eclipse.jetty.jsp.JettyJspServlet

2023-03-31 14:32:16.359+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: DefaultSessionIdManager workerName=node0

2023-03-31 14:32:16.363+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: No SessionScavenger set, using defaults

2023-03-31 14:32:16.375+0000 [id=1] INFO o.e.j.server.session.HouseKeeper#startScavenging: node0 Scavenging every 600000ms

2023-03-31 14:32:17.671+0000 [id=1] INFO hudson.WebAppMain#contextInitialized: Jenkins home directory: /root/.jenkins found at: $user.home/.jenkins

2023-03-31 14:32:17.862+0000 [id=1] INFO o.e.j.s.handler.ContextHandler#doStart: Started w.@6c451c9c{Jenkins v2.319.2,/,file:///apps/jenkins/jenkins-data/,AVAILABLE}{/apps/jenkins/jenkins-data}

2023-03-31 14:32:17.890+0000 [id=1] INFO o.e.j.server.AbstractConnector#doStart: Started ServerConnector@78452606{HTTP/1.1, (http/1.1)}{0.0.0.0:8080}

2023-03-31 14:32:17.891+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: Started @7178ms

2023-03-31 14:32:17.899+0000 [id=22] INFO winstone.Logger#logInternal: Winstone Servlet Engine running: controlPort=disabled

2023-03-31 14:32:19.730+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: Started initialization

2023-03-31 14:32:19.801+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: Listed all plugins

2023-03-31 14:32:21.865+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: Prepared all plugins

2023-03-31 14:32:21.875+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: Started all plugins

2023-03-31 14:32:21.884+0000 [id=30] INFO jenkins.InitReactorRunner$1#onAttained: Augmented all extensions

2023-03-31 14:32:23.001+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: System config loaded

2023-03-31 14:32:23.002+0000 [id=31] INFO jenkins.InitReactorRunner$1#onAttained: System config adapted

2023-03-31 14:32:23.003+0000 [id=33] INFO jenkins.InitReactorRunner$1#onAttained: Loaded all jobs

2023-03-31 14:32:23.005+0000 [id=32] INFO jenkins.InitReactorRunner$1#onAttained: Configuration for all jobs updated

2023-03-31 14:32:23.026+0000 [id=47] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Started Download metadata

2023-03-31 14:32:23.053+0000 [id=47] INFO hudson.util.Retrier#start: Attempt #1 to do the action check updates server

2023-03-31 14:32:23.443+0000 [id=31] INFO jenkins.install.SetupWizard#init:

*************************************************************

*************************************************************

*************************************************************

Jenkins initial setup is required. An admin user has been created and a password generated.

Please use the following password to proceed to installation:

4cfbb30a3b574766afa51faa1b4e093a

This may also be found at: /root/.jenkins/secrets/initialAdminPassword

*************************************************************

*************************************************************

*************************************************************

2023-03-31 14:34:20.756+0000 [id=47] INFO h.m.DownloadService$Downloadable#load: Obtained the updated data file for hudson.tasks.Maven.MavenInstaller

2023-03-31 14:34:20.757+0000 [id=47] INFO hudson.util.Retrier#start: Performed the action check updates server successfully at the attempt #1

2023-03-31 14:34:20.771+0000 [id=47] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Finished Download metadata. 117,742 ms

2023-03-31 14:34:36.156+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Completed initialization

2023-03-31 14:34:36.201+0000 [id=21] INFO hudson.WebAppMain$3#run: Jenkins is fully up and running

4.2 Run an LNMP-based WordPress as multiple containers in a single Pod

4.2.1 Build the images

#Change to the nginx base image build directory

root@k8s-master1:~# cd /opt/k8s-data/dockerfile/web/pub-images/nginx-base

##Update the image registry address

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh Dockerfile

##Build the image

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base# bash build-command.sh

##Change to the nginx-base-wordpress image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base# cd ../nginx-base-wordpress/

##Update the image registry address

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base-wordpress# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh Dockerfile

##Build the image

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base-wordpress# bash build-command.sh

## Switch to the WordPress nginx image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/web/pub-images/nginx-base-wordpress# cd ../../magedu/wordpress/nginx

## Point the image references at the local Harbor registry

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/wordpress/nginx# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh Dockerfile

## Build the image (tag v1)

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/wordpress/nginx# bash build-command.sh v1

## Switch to the PHP image build directory

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/wordpress/nginx# cd ../php/

## Point the image references at the local Harbor registry

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/wordpress/php# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i build-command.sh Dockerfile

## Build the image (tag v1)

root@k8s-master1:/opt/k8s-data/dockerfile/web/magedu/wordpress/php# bash build-command.sh v1

4.2.2 Deploy WordPress

## Create the WordPress data directory on the NFS server

root@haproxy1:~# mkdir -p /data/k8sdata/magedu/wordpress

## Switch to the WordPress manifest directory

root@k8s-master1:~# cd /opt/k8s-data/yaml/magedu/wordpress/

## Point the image references at the local Harbor registry

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# sed -e 's/harbor.linuxarchitect.io/harbor.magedu.net/g' -i wordpress.yaml

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# grep -n image: wordpress.yaml

21: image: harbor.magedu.net/magedu/wordpress-nginx:v1

36: image: harbor.magedu.net/magedu/wordpress-php-5.6:v1

## Deploy WordPress

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# kubectl apply -f wordpress.yaml

deployment.apps/wordpress-app-deployment created

service/wordpress-app-spec created

## Check the deployed resources

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# kubectl get pod,svc -n magedu

NAME READY STATUS RESTARTS AGE

pod/wordpress-app-deployment-589d8cf86d-h55jr 2/2 Running 0 100s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/wordpress-app-spec NodePort 10.100.123.220 <none> 80:30031/TCP,443:30033/TCP 100s

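# Add a hosts entry for the site domain on the client machine, then open WordPress in a browser

4.2.3 Deploy the WordPress site files

## Upload the WordPress archive to the NFS server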

# scp wordpress-5.0.16-zh_CN.tar.gz root@<nfs-server>:/data/k8sdata/magedu/wordpress/

## Extract the archive

root@haproxy1:/data/k8sdata/magedu/wordpress# tar xvf wordpress-5.0.16-zh_CN.tar.gz

## Move the site files into the WordPress document root

root@haproxy1:/data/k8sdata/magedu/wordpress# mv wordpress/* .

root@haproxy1:/data/k8sdata/magedu/wordpress# rm -rf wordpress wordpress-5.0.16-zh_CN.tar.gz

## Check the UID of the nginx user inside the PHP container

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# kubectl -n magedu exec -it wordpress-app-deployment-589d8cf86d-h55jr -c wordpress-app-php -- id nginx

uid=2088(nginx) gid=2088(nginx) groups=2088(nginx)

## Change the ownership of the WordPress data directory on the NFS server to that UID

root@haproxy1:/data/k8sdata/magedu# chown -R 2088:2088 wordpress/

4.2.4 Create the WordPress site database

root@k8s-master1:/opt/k8s-data/yaml/magedu/wordpress# kubectl exec -it mysql-0 -n magedu -- bash

root@mysql-0:/# mysql

mysql> CREATE DATABASE wordpress;

Query OK, 1 row affected (0.01 sec)

mysql> GRANT ALL PRIVILEGES ON wordpress.* TO "wordpress"@"%" IDENTIFIED BY "wordpress";

Query OK, 0 rows affected, 1 warning (0.03 sec)

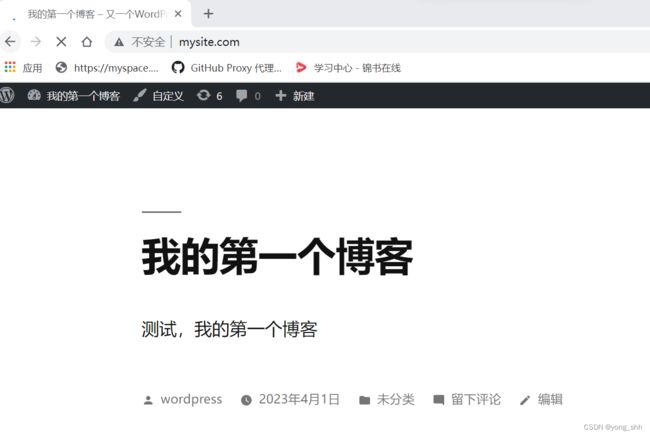

4.2.5 Configure the WordPress site

Open the WordPress home page

Log in as the admin user

# Open the home page and check the newly created blog

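5. Use a LimitRange to cap each container in the magedu namespace at 1 CPU and 1 GiB of memory (1C1G) and each Pod at 2 CPUs and 2 GiB (2C2G), with a default CPU limit of 0.5 core and a default memory limit of 512 MiB.

## Write the LimitRange manifest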

root@k8s-master1:~# vi LimitRange-magedu.yaml

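apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-magedu
  namespace: magedu
spec:
  limits:
  - type: Container        # object type the rule applies to
    max:
      cpu: "1"             # maximum CPU per container
      memory: "1Gi"        # maximum memory per container
    default:
      cpu: "500m"          # default CPU limit per container
      memory: "512Mi"      # default memory limit per container
    defaultRequest:
      cpu: "500m"          # default CPU request per container
      memory: "512Mi"      # default memory request per container
  - type: Pod
    max:
      cpu: "2"             # maximum CPU per Pod (sum over its containers)
      memory: "2Gi"        # maximum memory per Pod (sum over its containers)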

## Apply the LimitRange

root@k8s-master1:~# kubectl apply -f LimitRange-magedu.yaml

## Check the LimitRange

root@k8s-master1:~# kubectl get limitranges -n magedu

NAME CREATED AT

limitrange-magedu 2023-03-11T04:34:43Z
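The defaulting half of this LimitRange can be pictured with a small Python sketch. It is only an illustration of the idea (fill in whatever a container leaves unset), not the code of the real LimitRanger admission plugin, and the function name `apply_defaults` is made up for this example.

```python
# Values copied from the Container rule of limitrange-magedu above.
CONTAINER_RULE = {
    "default": {"cpu": "500m", "memory": "512Mi"},         # used as limits
    "defaultRequest": {"cpu": "500m", "memory": "512Mi"},  # used as requests
}


def apply_defaults(resources, rule=CONTAINER_RULE):
    """Return a copy of a container's resources with unset fields filled in.

    Simplified on purpose: values the container already sets are kept as-is,
    and no consistency check between requests and limits is attempted.
    """
    limits = dict(resources.get("limits", {}))
    requests = dict(resources.get("requests", {}))
    for name, value in rule["default"].items():
        limits.setdefault(name, value)
    for name, value in rule["defaultRequest"].items():
        requests.setdefault(name, value)
    return {"limits": limits, "requests": requests}


if __name__ == "__main__":
    # A container that declares nothing, like the nginx Deployment created next.
    print(apply_defaults({}))
```

A container that declares no resources ends up with identical requests and limits, which matches what `kubectl describe pod` reports for the nginx Pod in the next step.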

## Generate a Deployment manifest for nginx

root@k8s-master1:~# kubectl create deploy nginx --image=nginx -n magedu --dry-run=client -o yaml >nginx.yaml

## Edit the manifest (no resources section, so the LimitRange defaults will apply)

root@k8s-master1:~# vi nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx

## Deploy the edited nginx manifest

root@k8s-master1:~# kubectl apply -f nginx.yaml

deployment.apps/nginx created

## List the Pods

root@k8s-master1:~/20230401# kubectl get pod -n magedu

NAME READY STATUS RESTARTS AGE

mysql-0 2/2 Running 0 110m

mysql-1 2/2 Running 0 110m

mysql-2 0/2 Pending 0 110m

nginx-85b98978db-mcq7t 1/1 Running 0 8m28s

wordpress-app-deployment-589d8cf86d-h55jr 2/2 Running 0 9h

# Inspect the resource requests and limits that were injected into the Pod

root@k8s-master1:~/20230401# kubectl describe pod nginx-85b98978db-mcq7t -n magedu

Name: nginx-85b98978db-mcq7t

Namespace: magedu

Priority: 0

Node: 192.168.7.112/192.168.7.112

Start Time: Sat, 01 Apr 2023 20:03:54 +0800

Labels: app=nginx

pod-template-hash=85b98978db

Annotations: kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container nginx; cpu, memory limit for container nginx

Status: Running

IP: 10.200.151.239

IPs:

IP: 10.200.151.239

Controlled By: ReplicaSet/nginx-85b98978db

Containers:

nginx:

Container ID: docker://87ba871baf95ae06ad4ebaa68f0c7a860fe41f0c516dc10df4171f227e723e2d

Image: nginx

Image ID: docker-pullable://nginx@sha256:2ab30d6ac53580a6db8b657abf0f68d75360ff5cc1670a85acb5bd85ba1b19c0

Port: <none>

Host Port: <none>

State: Running

Started: Sat, 01 Apr 2023 20:04:08 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 500m

memory: 512Mi

Requests:

cpu: 500m

memory: 512Mi

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qjfcq (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-qjfcq:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Guaranteed

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 71s default-scheduler Successfully assigned magedu/nginx-85b98978db-mcq7t to 192.168.7.112

Normal Pulling 68s kubelet Pulling image "nginx"

Normal Pulled 57s kubelet Successfully pulled image "nginx" in 10.954477732s

Normal Created 57s kubelet Created container nginx

Normal Started 57s kubelet Started container nginx
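The `QoS Class: Guaranteed` line in the output above follows from the injected defaults: requests and limits are equal for both CPU and memory. A rough Python sketch of the classification rule is shown below. It compares the quantity strings literally (so "500m" and "0.5" would count as different), which is a simplification made for this example, and `qos_class` is a hypothetical helper name.

```python
def qos_class(containers):
    """Classify a Pod from its containers' requests and limits.

    containers: list of dicts such as
        {"requests": {"cpu": "500m"}, "limits": {"cpu": "500m"}}
    """
    any_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        if requests or limits:
            any_set = True
        for resource in ("cpu", "memory"):
            # Guaranteed needs a limit for both resources, and a request that
            # is either absent (it then defaults to the limit) or equal to it.
            if resource not in limits:
                guaranteed = False
            elif requests.get(resource, limits[resource]) != limits[resource]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    defaults = {"cpu": "500m", "memory": "512Mi"}
    print(qos_class([{"requests": defaults, "limits": defaults}]))
```

With the explicit 250m/200Mi requests and larger limits used in the following steps, the same rule would yield Burstable instead.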

# Edit the resources so that they violate the LimitRange: the 1200Mi memory limit exceeds the per-container maximum of 1Gi

root@k8s-master1:~# vi nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
          limits:
            memory: "1200Mi"
            cpu: "500m"

## Apply the configuration

root@k8s-master1:~# kubectl apply -f nginx.yaml

deployment.apps/nginx configured

## Check the Deployment status (the old Pod keeps running; the new ReplicaSet cannot create its Pod)

root@k8s-master1:~/20230401# kubectl get deploy -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 0 1 9m10s

wordpress-app-deployment 1/1 1 1 9h

root@k8s-master1:~/20230401# kubectl get deploy nginx -o yaml -n magedu

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "2"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx","namespace":"magedu"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"nginx"}},"strategy":{},"template":{"metadata":{"labels":{"app":"nginx"}},"spec":{"containers":[{"image":"nginx","name":"nginx","resources":{"limits":{"cpu":"500m","memory":"1200Mi"},"requests":{"cpu":"250m","memory":"200Mi"}}}]}}},"status":{}}

creationTimestamp: "2023-04-01T12:03:54Z"

generation: 2

labels:

app: nginx

name: nginx

namespace: magedu

resourceVersion: "227690"

uid: 567d478a-bb94-4e3a-9243-79cd7542f70f

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: nginx

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: nginx

imagePullPolicy: Always

name: nginx

resources:

limits:

cpu: 500m

memory: 1200Mi

requests:

cpu: 250m

memory: 200Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

availableReplicas: 1

conditions:

- lastTransitionTime: "2023-04-01T12:04:08Z"

lastUpdateTime: "2023-04-01T12:04:08Z"

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

- lastTransitionTime: "2023-04-01T12:03:54Z"

lastUpdateTime: "2023-04-01T12:12:11Z"

message: Created new replica set "nginx-7f8f4f9dcb"

reason: NewReplicaSetCreated

status: "True"

type: Progressing

- lastTransitionTime: "2023-04-01T12:12:11Z"

lastUpdateTime: "2023-04-01T12:12:11Z"

message: 'pods "nginx-7f8f4f9dcb-g5n99" is forbidden: maximum memory usage per

Container is 1Gi, but limit is 1200Mi'

reason: FailedCreate

status: "True"

type: ReplicaFailure

observedGeneration: 2

readyReplicas: 1

replicas: 1

unavailableReplicas: 1

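## Edit the nginx manifest so that the Pod as a whole violates the LimitRange: three containers with an 800m CPU limit each add up to 2400m, above the per-Pod maximum of 2 CPUs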

root@k8s-master1:~# vi nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
          limits:
            memory: "500Mi"
            cpu: "800m"
      - image: redis
        name: redis
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
          limits:
            memory: "500Mi"
            cpu: "800m"
      - image: tomcat
        name: tomcat
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
          limits:
            memory: "500Mi"
            cpu: "800m"

## Create the application

root@k8s-master1:~# kubectl apply -f nginx.yaml

deployment.apps/nginx created

## Check the Deployment

root@k8s-master1:~/20230401# kubectl get deploy -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 0/1 0 0 46s

wordpress-app-deployment 1/1 1 1 11h

root@k8s-master1:~/20230401# kubectl get deploy nginx -n magedu -o yaml

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx","namespace":"magedu"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"nginx"}},"template":{"metadata":{"labels":{"app":"nginx"}},"spec":{"containers":[{"image":"nginx","name":"nginx","resources":{"limits":{"cpu":"800m","memory":"500Mi"},"requests":{"cpu":"250m","memory":"200Mi"}}},{"image":"redis","name":"redis","resources":{"limits":{"cpu":"800m","memory":"500Mi"},"requests":{"cpu":"250m","memory":"200Mi"}}},{"image":"tomcat","name":"tomcat","resources":{"limits":{"cpu":"800m","memory":"500Mi"},"requests":{"cpu":"250m","memory":"200Mi"}}}]}}}}

creationTimestamp: "2023-04-01T13:48:16Z"

generation: 1

labels:

app: nginx

name: nginx

namespace: magedu

resourceVersion: "235273"

uid: 808b35f1-6ce7-4426-bda6-428a80232bbf

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: nginx

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: nginx

imagePullPolicy: Always

name: nginx

resources:

limits:

cpu: 800m

memory: 500Mi

requests:

cpu: 250m

memory: 200Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

- image: redis

imagePullPolicy: Always

name: redis

resources:

limits:

cpu: 800m

memory: 500Mi

requests:

cpu: 250m

memory: 200Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

- image: tomcat

imagePullPolicy: Always

name: tomcat

resources:

limits:

cpu: 800m

memory: 500Mi

requests:

cpu: 250m

memory: 200Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

conditions:

- lastTransitionTime: "2023-04-01T13:48:16Z"

lastUpdateTime: "2023-04-01T13:48:16Z"

message: Created new replica set "nginx-778f79884d"

reason: NewReplicaSetCreated

status: "True"

type: Progressing

- lastTransitionTime: "2023-04-01T13:48:16Z"

lastUpdateTime: "2023-04-01T13:48:16Z"

message: Deployment does not have minimum availability.

reason: MinimumReplicasUnavailable

status: "False"

type: Available

- lastTransitionTime: "2023-04-01T13:48:16Z"

lastUpdateTime: "2023-04-01T13:48:16Z"

message: 'pods "nginx-778f79884d-nsfd5" is forbidden: maximum cpu usage per Pod

is 2, but limit is 2400m'

reason: FailedCreate

status: "True"

type: ReplicaFailure

observedGeneration: 1

unavailableReplicas: 1
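The two rejections above (1200Mi per container, then 2400m per Pod) can be reproduced offline with a short Python sketch. It assumes only the quantity suffixes that appear in this section (`m` for CPU, `Ki`/`Mi`/`Gi` for memory); the helper names and the wording of the messages are invented for the example and are not the API server's.

```python
MEM_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millis(quantity):
    """Convert "800m" or "2" to millicores."""
    quantity = str(quantity)
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def mem_bytes(quantity):
    """Convert "500Mi" or "1Gi" to bytes (binary suffixes only)."""
    for suffix, factor in MEM_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[:-2]) * factor
    return int(quantity)


# Maxima from limitrange-magedu.
CONTAINER_MAX = {"cpu": cpu_millis("1"), "memory": mem_bytes("1Gi")}
POD_MAX = {"cpu": cpu_millis("2"), "memory": mem_bytes("2Gi")}


def violations(containers):
    """containers: list of {"name": ..., "cpu": limit, "memory": limit}."""
    found = []
    total = {"cpu": 0, "memory": 0}
    for c in containers:
        used = {"cpu": cpu_millis(c["cpu"]), "memory": mem_bytes(c["memory"])}
        for resource, value in used.items():
            total[resource] += value
            if value > CONTAINER_MAX[resource]:
                found.append(f"container {c['name']}: {resource} limit exceeds the per-container max")
    for resource, value in total.items():
        if value > POD_MAX[resource]:
            found.append(f"pod: summed {resource} limits exceed the per-pod max")
    return found


if __name__ == "__main__":
    three = [{"name": n, "cpu": "800m", "memory": "500Mi"} for n in ("nginx", "redis", "tomcat")]
    print(violations(three))
```

Each container in the three-container Pod is individually within bounds; only the sum of the CPU limits crosses the Pod-level maximum.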

6. Use a ResourceQuota so that the magedu namespace can be allocated at most 192 CPUs and 512 GiB of memory in total.

## Write the ResourceQuota manifest

root@k8s-master1:~# vim ResourceQuota-magedu.yaml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: quota-magedu
  namespace: magedu
spec:
  hard:
    limits.cpu: "192"
    limits.memory: 512Gi

## Apply the ResourceQuota manifest

root@k8s-master1:~# kubectl apply -f ResourceQuota-magedu.yaml

resourcequota/quota-magedu created

## Check the ResourceQuota

root@k8s-master1:~# kubectl get resourcequotas -n magedu

NAME AGE REQUEST LIMIT

quota-magedu 11s limits.cpu: 0/192, limits.memory: 0/512Gi

## Edit an nginx manifest whose CPU limit (193) is larger than the quota (192)

root@k8s-master1:~/20230401# vim nginx2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            memory: "200Mi"
            cpu: "250m"
          limits:
            memory: "500Mi"
            cpu: "193"

## Deploy the application

root@k8s-master1:~/20230401# kubectl apply -f nginx2.yaml

deployment.apps/nginx created

## Check the Deployment

root@k8s-master1:~/20230401# kubectl get deploy -n magedu

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 0/1 0 0 28s

wordpress-app-deployment 1/1 1 1 11h

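## Check the Deployment status. Note that the FailedCreate message below is raised by the LimitRange from section 5 (a 193-CPU limit already exceeds the per-container maximum of 1 CPU and the per-Pod maximum of 2 CPUs), so the rejection shown here is not a ResourceQuota violation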

root@k8s-master1:~/20230401# kubectl get deploy nginx -n magedu -o yaml

apiVersion: apps/v1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"labels":{"app":"nginx"},"name":"nginx","namespace":"magedu"},"spec":{"replicas":1,"selector":{"matchLabels":{"app":"nginx"}},"template":{"metadata":{"labels":{"app":"nginx"}},"spec":{"containers":[{"image":"nginx","name":"nginx","resources":{"limits":{"cpu":"193","memory":"500Mi"},"requests":{"cpu":"250m","memory":"200Mi"}}}]}}}}

creationTimestamp: "2023-04-01T14:01:36Z"

generation: 1

labels:

app: nginx

name: nginx

namespace: magedu

resourceVersion: "237950"

uid: abf16af5-d89d-43bb-9599-c3d0248ef21a

spec:

progressDeadlineSeconds: 600

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

app: nginx

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: nginx

spec:

containers:

- image: nginx

imagePullPolicy: Always

name: nginx

resources:

limits:

cpu: "193"

memory: 500Mi

requests:

cpu: 250m

memory: 200Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

conditions:

- lastTransitionTime: "2023-04-01T14:01:36Z"

lastUpdateTime: "2023-04-01T14:01:36Z"

message: Deployment does not have minimum availability.

reason: MinimumReplicasUnavailable

status: "False"

type: Available

- lastTransitionTime: "2023-04-01T14:01:36Z"

lastUpdateTime: "2023-04-01T14:01:36Z"

message: 'pods "nginx-7fb6f9b749-qjcbx" is forbidden: [maximum cpu usage per Container

is 1, but limit is 193, maximum cpu usage per Pod is 2, but limit is 193]'

reason: FailedCreate

status: "True"

type: ReplicaFailure

- lastTransitionTime: "2023-04-01T14:11:37Z"

lastUpdateTime: "2023-04-01T14:11:37Z"

message: ReplicaSet "nginx-7fb6f9b749" has timed out progressing.

reason: ProgressDeadlineExceeded

status: "False"

type: Progressing

observedGeneration: 1

unavailableReplicas: 1
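Because the LimitRange rejects the 193-CPU Pod first, the quota arithmetic itself never shows up in the output above. The following Python sketch shows the accounting a ResourceQuota on `limits.cpu` and `limits.memory` performs: the limits of a new Pod are added to what the namespace already uses and compared with the hard caps. The units (millicores and bytes) and the function name `exceeded` are choices made for this example.

```python
# Hard caps from quota-magedu, expressed in millicores and bytes.
HARD = {"limits.cpu": 192 * 1000, "limits.memory": 512 * 2**30}


def exceeded(used, new_pod, hard=HARD):
    """Return the quota entries that admitting new_pod would push over the cap.

    used / new_pod: dicts with the same keys as `hard`; missing keys count as 0.
    """
    return [
        name
        for name, cap in hard.items()
        if used.get(name, 0) + new_pod.get(name, 0) > cap
    ]


if __name__ == "__main__":
    # The Pod from nginx2.yaml: 193 CPUs and 500Mi of memory as limits.
    print(exceeded({}, {"limits.cpu": 193 * 1000, "limits.memory": 500 * 2**20}))
```

With the LimitRange from section 5 still in place, a single Pod can never hold more than 2 CPUs of limits, so in this namespace the quota would only be reached through the combined limits of many Pods.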

7. Deploy a Prometheus monitoring environment on Kubernetes with the Operator (prometheus-server, cAdvisor, grafana, node-exporter, etc.).

## Download the kube-prometheus (Prometheus Operator) manifests

root@k8s-master1:~# git clone -b release-0.11 https://github.com/prometheus-operator/kube-prometheus.git

## List the images hosted on k8s.gcr.io, which cannot be pulled from this environment

root@k8s-master1:~/kube-prometheus# grep image: manifests/ -R | grep "k8s.gcr.io"

manifests/kubeStateMetrics-deployment.yaml: image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0

manifests/prometheusAdapter-deployment.yaml: image: k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1

## Pull equivalent images from Docker Hub and push them to the local Harbor

root@k8s-master1:~/kube-prometheus# docker pull bitnami/kube-state-metrics:2.5.0

2.5.0: Pulling from bitnami/kube-state-metrics

3b5e91f25ce6: Pull complete

f4edd0de0a59: Pull complete

29c2ee9f0667: Pull complete

1c11a2150f85: Pull complete

1af4156b1895: Pull complete

b1846fa756d9: Pull complete

Digest: sha256:5241a94a2680e40b10c1168d4980f66536cb8451a516dc29d351cd7b04e61203

Status: Downloaded newer image for bitnami/kube-state-metrics:2.5.0

docker.io/bitnami/kube-state-metrics:2.5.0

root@k8s-master1:~/kube-prometheus# docker tag bitnami/kube-state-metrics:2.5.0 harbor.magedu.net/baseimages/kube-state-metrics:2.5.0

root@k8s-master1:~/kube-prometheus# docker push harbor.magedu.net/baseimages/kube-state-metrics:2.5.0

The push refers to repository [harbor.magedu.net/baseimages/kube-state-metrics]

8c945a3cca19: Pushed

ed0e9008e164: Pushed

529acdee5da6: Pushed

22bd32907ca2: Pushed

cb455b8ae029: Pushed

d745f418fc70: Pushed

2.5.0: digest: sha256:5241a94a2680e40b10c1168d4980f66536cb8451a516dc29d351cd7b04e61203 size: 1577

root@k8s-master1:~/kube-prometheus# docker pull willdockerhub/prometheus-adapter:v0.9.1

v0.9.1: Pulling from willdockerhub/prometheus-adapter

ec52731e9273: Pull complete

328505386212: Pull complete

Digest: sha256:d025d1a109234c28b4a97f5d35d759943124be8885a5bce22a91363025304e9d

Status: Downloaded newer image for willdockerhub/prometheus-adapter:v0.9.1

docker.io/willdockerhub/prometheus-adapter:v0.9.1

root@k8s-master1:~/kube-prometheus# docker tag willdockerhub/prometheus-adapter:v0.9.1 harbor.magedu.net/baseimages/prometheus-adapter:v0.9.1

root@k8s-master1:~/kube-prometheus# docker push harbor.magedu.net/baseimages/prometheus-adapter:v0.9.1

The push refers to repository [harbor.magedu.net/baseimages/prometheus-adapter]

53fec7bf54e1: Pushed

c0d270ab7e0d: Pushed

v0.9.1: digest: sha256:2e5612ba2ed7f3cc1447bb8f00f3e0a8e35eb32cc6a0fc111abb13bf4a35144e size: 739

## Replace the image references in the manifests

root@k8s-master1:~/kube-prometheus# sed -i 's;k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0;harbor.magedu.net/baseimages/kube-state-metrics:2.5.0;g' manifests/kubeStateMetrics-deployment.yaml

root@k8s-master1:~/kube-prometheus# sed -i 's;k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1;harbor.magedu.net/baseimages/prometheus-adapter:v0.9.1;g' manifests/prometheusAdapter-deployment.yaml

root@k8s-master1:~/kube-prometheus# grep -n "harbor.magedu.net" -R manifests

manifests/kubeStateMetrics-deployment.yaml:35: image: harbor.magedu.net/baseimages/kube-state-metrics:2.5.0

manifests/prometheusAdapter-deployment.yaml:40: image: harbor.magedu.net/baseimages/prometheus-adapter:v0.9.1
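The two `sed` substitutions can also be expressed as one mapping, which makes it easy to check that no `k8s.gcr.io` reference is left behind. The Python sketch below works on manifest text passed in as a string; the helper names are made up for the example, and the mapping simply mirrors the image names used in the commands above (note that the Docker Hub copy of kube-state-metrics is tagged `2.5.0` without the leading `v`).

```python
# Upstream image -> copy pushed to the local Harbor in the steps above.
IMAGE_MAP = {
    "k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0":
        "harbor.magedu.net/baseimages/kube-state-metrics:2.5.0",
    "k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1":
        "harbor.magedu.net/baseimages/prometheus-adapter:v0.9.1",
}


def rewrite_images(manifest_text, image_map=IMAGE_MAP):
    """Replace every known upstream image reference with its local copy."""
    for old, new in image_map.items():
        manifest_text = manifest_text.replace(old, new)
    return manifest_text


def unresolved(manifest_text, blocked_registry="k8s.gcr.io"):
    """Return the image lines that still point at the blocked registry."""
    return [
        line.strip()
        for line in manifest_text.splitlines()
        if "image:" in line and blocked_registry in line
    ]


if __name__ == "__main__":
    sample = "        image: k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1\n"
    print(rewrite_images(sample), unresolved(rewrite_images(sample)))
```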

## Create the namespace and the CRDs

root@k8s-master1:~/kube-prometheus# kubectl apply --server-side -f manifests/setup

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com serverside-applied

namespace/monitoring serverside-applied

## Wait until the CRDs are established

root@k8s-master1:~/kube-prometheus# kubectl wait \

--for condition=Established \

--all CustomResourceDefinition \

--namespace=monitoring

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com condition met

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com condition met

## Deploy the Prometheus Operator stack

root@k8s-master1:~/kube-prometheus# kubectl apply -f manifests/

## List all Pods in the monitoring namespace

root@k8s-master1:~/20230401/kube-prometheus# kubectl get pod -n monitoring -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-main-0 2/2 Running 0 8m52s 10.200.218.30 192.168.7.111 <none> <none>

alertmanager-main-1 2/2 Running 0 8m52s 10.200.151.246 192.168.7.112 <none> <none>

alertmanager-main-2 2/2 Running 0 8m52s 10.200.218.95 192.168.7.113 <none> <none>

blackbox-exporter-746c64fd88-8h8fn 3/3 Running 0 10m 10.200.218.94 192.168.7.113 <none> <none>

grafana-5fc7f9f55d-c54rr 1/1 Running 0 10m 10.200.218.91 192.168.7.113 <none> <none>

kube-state-metrics-5694c7bc99-mhms8 3/3 Running 0 10m 10.200.218.92 192.168.7.113 <none> <none>

node-exporter-9pc6d 2/2 Running 0 10m 192.168.7.111 192.168.7.111 <none> <none>

node-exporter-f5zv9 2/2 Running 0 10m 192.168.7.103 192.168.7.103 <none> <none>

node-exporter-fb9kt 2/2 Running 0 10m 192.168.7.112 192.168.7.112 <none> <none>

node-exporter-nqrlw 2/2 Running 0 10m 192.168.7.113 192.168.7.113 <none> <none>

node-exporter-rsgw9 2/2 Running 0 10m 192.168.7.101 192.168.7.101 <none> <none>

node-exporter-x6bsf 2/2 Running 0 10m 192.168.7.102 192.168.7.102 <none> <none>

prometheus-adapter-644fcf6bdd-dcxrr 1/1 Running 0 10m 10.200.218.93 192.168.7.113 <none> <none>

prometheus-adapter-644fcf6bdd-v7nzb 1/1 Running 0 10m 10.200.151.244 192.168.7.112 <none> <none>

prometheus-k8s-0 2/2 Running 0 8m49s 10.200.218.29 192.168.7.111 <none> <none>

prometheus-k8s-1 2/2 Running 0 8m49s 10.200.151.245 192.168.7.112 <none> <none>

prometheus-operator-f59c8b954-5n9td 2/2 Running 0 10m 10.200.151.243 192.168.7.112 <none> <none>

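## Remove the bundled NetworkPolicies and switch the Service to NodePort so that the Prometheus server is reachable from outside the cluster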

root@k8s-master1:~/kube-prometheus# mkdir networkPolicy

root@k8s-master1:~/kube-prometheus# mv manifests/*networkPolicy* networkPolicy/

root@k8s-master1:~/kube-prometheus# kubectl delete -f networkPolicy/

networkpolicy.networking.k8s.io "alertmanager-main" deleted

networkpolicy.networking.k8s.io "blackbox-exporter" deleted

networkpolicy.networking.k8s.io "grafana" deleted

networkpolicy.networking.k8s.io "kube-state-metrics" deleted

networkpolicy.networking.k8s.io "node-exporter" deleted

networkpolicy.networking.k8s.io "prometheus-k8s" deleted

networkpolicy.networking.k8s.io "prometheus-adapter" deleted

networkpolicy.networking.k8s.io "prometheus-operator" deleted

root@k8s-master1:~/kube-prometheus# vim manifests/prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.36.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 39090
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
    nodePort: 38080
  selector:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

root@k8s-master1:~/kube-prometheus# kubectl apply -f manifests/prometheus-service.yaml

service/prometheus-k8s configured

## Switch the Grafana Service to NodePort so that Grafana is reachable from outside the cluster

root@k8s-master1:~/kube-prometheus# vim manifests/grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.5.5
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 33000
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus

root@k8s-master1:~/kube-prometheus# kubectl apply -f manifests/grafana-service.yaml

service/grafana configured
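The nodePort values chosen here (39090, 38080, 33000) lie outside Kubernetes' default NodePort range of 30000-32767, so they are only accepted when the kube-apiserver runs with a wider `--service-node-port-range`, as this cluster evidently does. A small Python sketch for checking a set of ports against a range before applying the manifests follows; the wider range `30000-65000` used in the demo is an assumed example value, not something read from this cluster.

```python
DEFAULT_RANGE = (30000, 32767)  # Kubernetes default for --service-node-port-range


def parse_range(text):
    """Parse a "low-high" string as passed to --service-node-port-range."""
    low, high = text.split("-")
    return int(low), int(high)


def out_of_range(ports, port_range=DEFAULT_RANGE):
    """Return the ports that fall outside the inclusive range."""
    low, high = port_range
    return [port for port in ports if not low <= port <= high]


if __name__ == "__main__":
    wanted = [39090, 38080, 33000]
    print(out_of_range(wanted))                              # against the default range
    print(out_of_range(wanted, parse_range("30000-65000")))  # assumed wider range
```

On a cluster that keeps the default range, the same Services would need ports such as 30090 instead.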

8. Manually deploy a Prometheus monitoring environment in Kubernetes (prometheus-server, cAdvisor, grafana, node-exporter, etc.).

8.1 Deploy cAdvisor

## Switch to the local source directory

root@k8s-master1:~# cd /usr/local/src/

## Unpack the manifests for the manual Prometheus deployment

root@k8s-master1:/usr/local/src# unzip prometheus-case-files.zip

## Enter the manifest directory

root@k8s-master1:/usr/local/src# cd prometheus-case-files/

## Create the namespace

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl create ns monitoring

namespace/monitoring created

## Deploy cAdvisor

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case1-daemonset-deploy-cadvisor.yaml

daemonset.apps/cadvisor created

## List the Pods

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

cadvisor-4m25c 1/1 Running 0 93s

cadvisor-72ljt 1/1 Running 0 93s

cadvisor-9lkxc 1/1 Running 0 93s

cadvisor-h5skt 1/1 Running 0 93s

cadvisor-hxxlb 1/1 Running 0 93s

cadvisor-w47nr 1/1 Running 0 93s

# Open the cAdvisor web UI; cAdvisor collects resource-usage metrics for the containers on each node

8.2 Deploy node_exporter

## Apply the node_exporter manifest

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case2-daemonset-deploy-node-exporter.yaml

daemonset.apps/node-exporter created

service/node-exporter created

## List the Pods

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

cadvisor-4m25c 1/1 Running 0 16m

cadvisor-72ljt 1/1 Running 0 16m

cadvisor-9lkxc 1/1 Running 0 16m

cadvisor-h5skt 1/1 Running 0 16m

cadvisor-hxxlb 1/1 Running 0 16m

cadvisor-w47nr 1/1 Running 0 16m

node-exporter-4nl9h 1/1 Running 0 17s

node-exporter-8s86h 1/1 Running 0 17s

node-exporter-hrt95 1/1 Running 0 17s

node-exporter-mpp7s 1/1 Running 0 17s

node-exporter-w4644 1/1 Running 0 17s

node-exporter-z8jjd 1/1 Running 0 17s

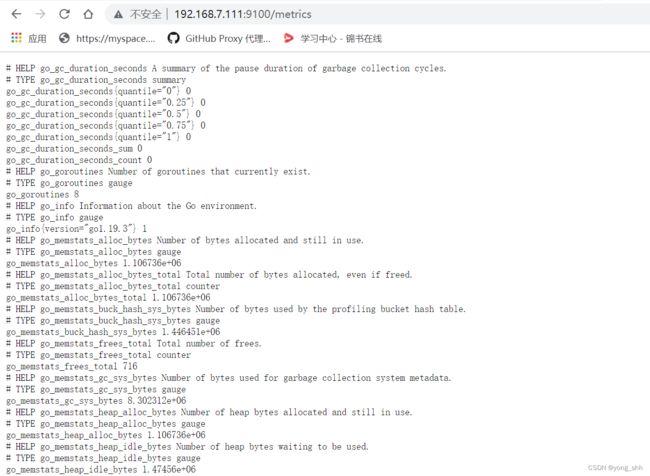

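# Open the node_exporter metrics endpoint; node_exporter exposes host-level (node) metrics

8.3 Deploy prometheus server

## Create the Prometheus server data directory on the NFS server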

root@haproxy1:~# mkdir -p /data/k8sdata/prometheusdata

## Change the directory ownership

root@haproxy1:~# chown -R 65534:65534 /data/k8sdata/prometheusdata

## Create the monitor ServiceAccount

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl create serviceaccount monitor -n monitoring

serviceaccount/monitor created

## Bind the monitor ServiceAccount to the cluster-admin ClusterRole

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl create clusterrolebinding monitor-clusterrolebinding -n monitoring --clusterrole=cluster-admin --serviceaccount=monitoring:monitor

clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created

## Apply the Prometheus server manifests (ConfigMap, Deployment, Service)

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case3-1-prometheus-cfg.yaml

configmap/prometheus-config created

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case3-2-prometheus-deployment.yaml

deployment.apps/prometheus-server created

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case3-3-prometheus-svc.yaml

service/prometheus created

## Check the deployed resources

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl get pod,svc -n monitoring

NAME READY STATUS RESTARTS AGE

pod/cadvisor-4m25c 1/1 Running 0 25m

pod/cadvisor-72ljt 1/1 Running 0 25m

pod/cadvisor-9lkxc 1/1 Running 0 25m

pod/cadvisor-h5skt 1/1 Running 0 25m

pod/cadvisor-hxxlb 1/1 Running 0 25m

pod/cadvisor-w47nr 1/1 Running 0 25m

pod/node-exporter-4nl9h 1/1 Running 0 9m9s

pod/node-exporter-8s86h 1/1 Running 0 9m9s

pod/node-exporter-hrt95 1/1 Running 0 9m9s

pod/node-exporter-mpp7s 1/1 Running 0 9m9s

pod/node-exporter-w4644 1/1 Running 0 9m9s

pod/node-exporter-z8jjd 1/1 Running 0 9m9s

pod/prometheus-server-855dd68677-tt4cr 1/1 Running 0 32s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/node-exporter NodePort 10.100.185.2 <none> 9100:39100/TCP 9m9s

service/prometheus NodePort 10.100.151.191 <none> 9090:30090/TCP 24s

## Access Prometheus (NodePort 30090 on any node)
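Besides the web UI, the server can be checked through Prometheus' HTTP API at `/api/v1/query`. The Python sketch below builds the query URL for the NodePort shown above and extracts the targets whose `up` value is not 1 from a response body. The sample payload is hand-written for illustration (the `job` label and the timestamps are invented), and no request is actually sent.

```python
import json
from urllib.parse import urlencode


def query_url(base, expr):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base}/api/v1/query?{urlencode({'query': expr})}"


def down_targets(payload):
    """Return the instance labels of all series whose sample value is not "1"."""
    data = json.loads(payload)
    if data.get("status") != "success":
        raise ValueError("query failed")
    return [
        series["metric"].get("instance", "?")
        for series in data["data"]["result"]
        if series["value"][1] != "1"
    ]


# Hypothetical response to the query `up`, in the API's vector result format.
SAMPLE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "instance": "192.168.7.111:9100", "job": "node"},
             "value": [1680359000.0, "1"]},
            {"metric": {"__name__": "up", "instance": "192.168.7.112:9100", "job": "node"},
             "value": [1680359000.0, "0"]},
        ],
    },
})

if __name__ == "__main__":
    print(query_url("http://192.168.7.111:30090", "up"))
    print(down_targets(SAMPLE))
```

Fetching the URL with any HTTP client and passing the body to `down_targets` would give a quick view of which scrape targets are failing.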

8.4 Deploy grafana server

## Create the Grafana server data directory on the NFS server

root@haproxy1:~# mkdir -p /data/k8sdata/grafana

## Change the directory ownership

root@haproxy1:~# chown -R 472:0 /data/k8sdata/grafana/

## Apply the Grafana server manifest

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case4-grafana.yaml

deployment.apps/grafana created

service/grafana created

## Check the created resources

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl get pod,svc -n monitoring

NAME READY STATUS RESTARTS AGE

pod/cadvisor-4m25c 1/1 Running 0 44m

pod/cadvisor-72ljt 1/1 Running 0 44m

pod/cadvisor-9lkxc 1/1 Running 0 44m

pod/cadvisor-h5skt 1/1 Running 0 44m

pod/cadvisor-hxxlb 1/1 Running 0 44m

pod/cadvisor-w47nr 1/1 Running 0 44m

pod/grafana-68568b858b-rp5hr 0/1 ContainerCreating 0 24s

pod/node-exporter-4nl9h 1/1 Running 0 28m

pod/node-exporter-8s86h 1/1 Running 0 28m

pod/node-exporter-hrt95 1/1 Running 0 28m

pod/node-exporter-mpp7s 1/1 Running 0 28m

pod/node-exporter-w4644 1/1 Running 0 28m

pod/node-exporter-z8jjd 1/1 Running 0 28m

pod/prometheus-server-855dd68677-tt4cr 1/1 Running 0 19m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.100.11.83 <none> 3000:33000/TCP 24s

service/node-exporter NodePort 10.100.185.2 <none> 9100:39100/TCP 28m

service/prometheus NodePort 10.100.151.191 <none> 9090:30090/TCP 19m

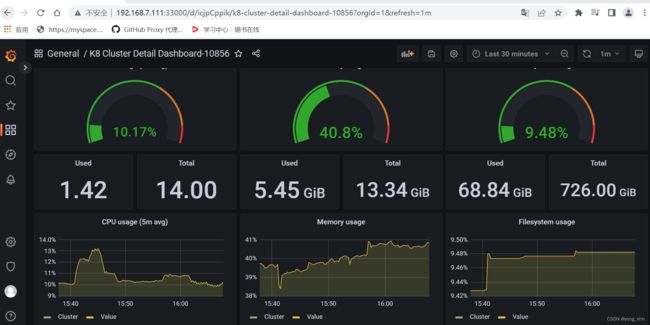

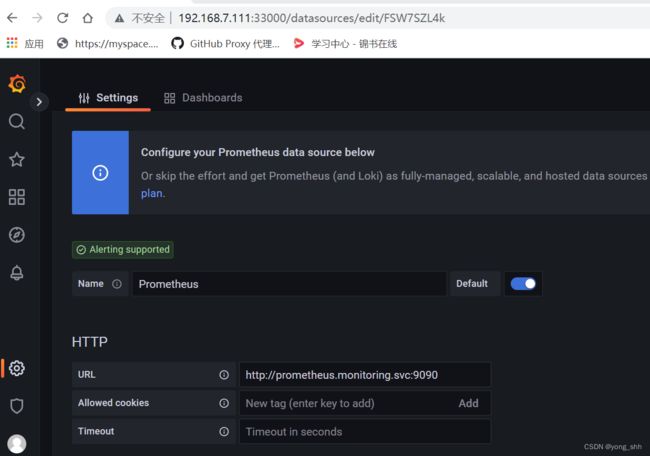

## Add the Prometheus data source in Grafana

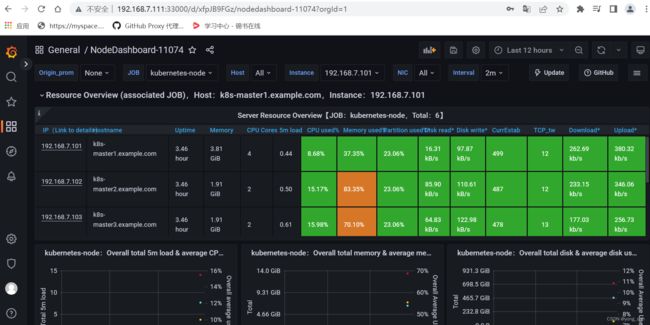

## Import dashboard template 11074 in Grafana and verify the data

# Import dashboard template 893 in Grafana and verify the data

8.5 Deploy kube-state-metrics

## Apply the kube-state-metrics manifest

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl apply -f case5-kube-state-metrics-deploy.yaml

deployment.apps/kube-state-metrics created

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

service/kube-state-metrics created

## Check the Pod and Service

root@k8s-master1:/usr/local/src/prometheus-case-files# kubectl get pod,svc -n kube-system | grep kube-state-metrics

pod/kube-state-metrics-7d6bc6767b-h9pgg 1/1 Running 0 13m

service/kube-state-metrics NodePort 10.100.69.111 <none> 8080:31666/TCP 13m

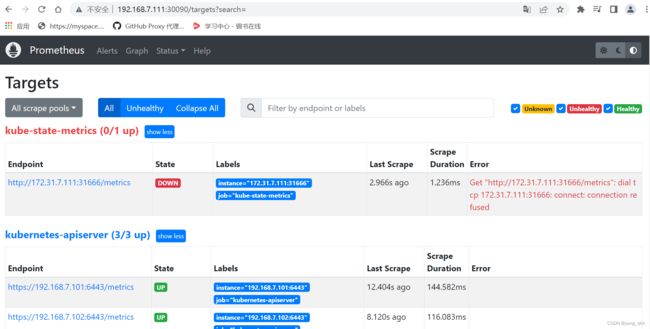

## Check the kube-state-metrics scrape status in Prometheus

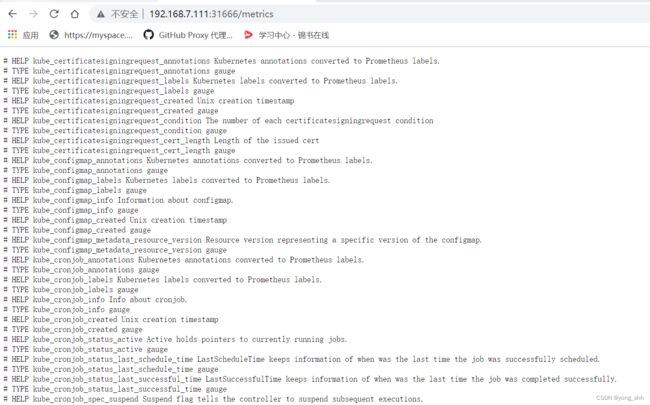

## Access kube-state-metrics

## Import dashboard template 10856 for kube-state-metrics in Grafana and verify the data