Spark 面试题

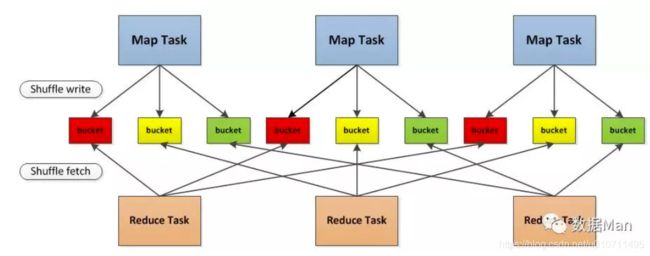

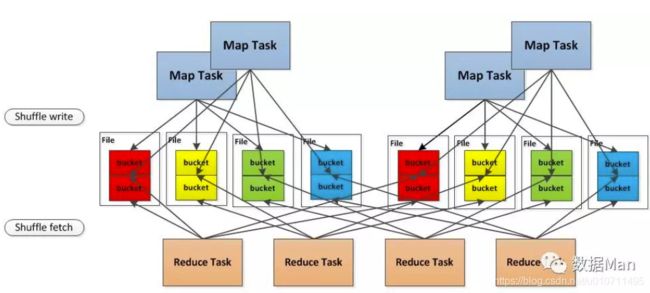

1. 了解shuffle代码

SortShuffle

改进的主要原因

Linux最大一次能打开的文件数量是1024个,所以优化的方向就是减少文件数量

hash shuffle 文件数=executor数量* core数* map task数* 分区数

改进后的hashshuffle文件数=executor数量* core数* 1*分区数

sorshuffle文件数=executor数量* core数

2. Spark的优化?

- 合理设置并行度,比如某个stage有150个task要运行,那么可以比如说50个executor,然后每个executo**r 3个core . 如果你只有100个task需要运行,那么这个配置就属于资源浪费了

3. Task与Job之间的关系

job -> 一个或多个stage -> 一个或多个task

4. 任务提交流程

1 提交任务的节点启动一个driver(client)进程;

2 dirver进程启动以后,首先是构建sparkcontext,sparkcontext主要包含两部分:DAGScheduler和TaskScheduler

DAGScheduler:

根据job划分N个stage,每一个stage都会有一个taskSet,

然后将taskSet发送给taskScheduler

TaskScheduler

会寻找Master节点,Master节点接收到Application的注册请求后

,通过资源调度算法,在自己的集群的worker上启动Executor进程;

启动的executor也会反向注册到 TaskScheduler上

3 Executor每接收到一个task,都会用TaskRunner封装task,然后从线程池中取出一个线程去执行taskTaskRunner

主要包含两种task:ShuffleMapTask和ResultTask

5. RDD的弹性表现在哪里?

1.自动进行内存和磁盘切换

2.基于lineage的高效容错

3.task如果失败会特定次数的重试

4.stage如果失败会自动进行特定次数的重试,而且只会只计算失败的分片

5.checkpoint【每次对RDD操作都会产生新的RDD,如果链条比较长,计算比较笨重,就把数据放在硬盘中】和persist 【内存或磁盘中对数据进行复用】(检查点、持久化)

6.数据调度弹性:DAG TASK 和资源管理无关

7.数据分片的高度弹性repartion

6. Transform 类型的RDD与action类型的RDD各有哪些?

Transform 类型:map,filter,mapPartitions,xxbyKey,join,repartition,distinct等等

action类型:collect,reduce,take,takeOrdered,

7. 发生Shuffle的算子有哪些?

1.去重操作:

2.聚合,byKey类操作

reduceByKey、groupByKey、sortByKey等。

byKey类的操作要对一个key,进行聚合操作,那么肯定要保证集群中,所有节点上的相同的key,移动到同一个节点上进行处理。

3.排序操作:

sortByKey等。

4.重分区操作:

repartition、repartitionAndSortWithinPartitions、coalesce(shuffle=true)等。

重分区一般会shuffle,因为需要在整个集群中,对之前所有的分区的数据进行随机,均匀的打乱,然后把数据放入下游新的指定数量的分区内。

5.集合或者表操作:

join、cogroup等。

两个rdd进行join,就必须将相同join key的数据,shuffle到同一个节点上,然后进行相同key的两个rdd数据的笛卡尔乘积。

8. Spark Streaming对应kafka中的三种语义,分别是什么?

9. Spark任务提交参数有哪些?(结合项目)

spark-submit --class org.apache.spark.examples.SparkPi \

--master yarn \

--deploy-mode client \

--driver-memory 2g \

--executor-memory 2g \

--executor-cores 2 \

/usr/local/spark/examples/jars/spark-examples_2.11-2.2.3.jar \

100

10. RDD的特性?

1 不可变的:RDD一旦被创建,数据是只读的,如果更改数据,会产生新的RDD

2 分区:一个RDD有多个Partition,分区也可以根据业务需求来指定

3 并行的: 因为有多个分区,所以可以并行计算.

11. 写Spark SQL

DSL风格

def test8(): Unit ={

val spark:SparkSession = SparkSession.builder()

.master("local[1]")

.appName("SparkByExamples.com")

.getOrCreate()

import spark.implicits._

spark.conf.set("spark.sql.shuffle.partitions",100)

val simpleData = Seq(("James","Sales","NY",90000,34,10000),

("Michael","Sales","NY",86000,56,20000),

("Robert","Sales","CA",81000,30,23000),

("Maria","Finance","CA",90000,24,23000),

("Raman","Finance","CA",99000,40,24000),

("Scott","Finance","NY",83000,36,19000),

("Jen","Finance","NY",79000,53,15000),

("Jeff","Marketing","CA",80000,25,18000),

("Kumar","Marketing","NY",91000,50,21000)

)

val df: DataFrame = simpleData.toDF("employee_name","department","state","salary","age","bonus")

df.select("*").where("state='NY'").show()

}

运行结果

+-------------+----------+-----+------+---+-----+

|employee_name|department|state|salary|age|bonus|

+-------------+----------+-----+------+---+-----+

| James| Sales| NY| 90000| 34|10000|

| Michael| Sales| NY| 86000| 56|20000|

| Scott| Finance| NY| 83000| 36|19000|

| Jen| Finance| NY| 79000| 53|15000|

| Kumar| Marketing| NY| 91000| 50|21000|

+-------------+----------+-----+------+---+-----+

SQL风格

def test7(): Unit ={

val spark:SparkSession = SparkSession.builder()

.master("local[1]")

.appName("SparkByExamples.com")

.getOrCreate()

import spark.implicits._

spark.conf.set("spark.sql.shuffle.partitions",100)

val simpleData = Seq(("James","Sales","NY",90000,34,10000),

("Michael","Sales","NY",86000,56,20000),

("Robert","Sales","CA",81000,30,23000),

("Maria","Finance","CA",90000,24,23000),

("Raman","Finance","CA",99000,40,24000),

("Scott","Finance","NY",83000,36,19000),

("Jen","Finance","NY",79000,53,15000),

("Jeff","Marketing","CA",80000,25,18000),

("Kumar","Marketing","NY",91000,50,21000)

)

val df: DataFrame = simpleData.toDF("employee_name","department","state","salary","age","bonus")

df.createTempView("tmp")

val sql: String =

"""

|select * from tmp

|where state="NY"

|""".stripMargin

spark.sql(sql).show()

}