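[Object Detection] Hand-written HOG & HOI feature extractors + using the cv::ml::SVM module + hand-written PSO (particle swarm optimization) + C++ example + the LSIFIR infrared pedestrian dataset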

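Contents

1. Hand-written HOG & HOI feature extractors

1.1. Histogram of oriented gradients

1.2. Complete implementation of the HOG feature extraction algorithm

2. Using the cv::ml::SVM module

3. Hand-written PSO (particle swarm optimization)

4. Dataset and source code download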

0. Reference blogs

[1] Complete train and detect code (Python and C++):

https://blog.csdn.net/hongbin_xu/article/details/79845290

[2] Pedestrian detection with the HOG and SVM tools that ship with OpenCV (no training part):

https://blog.csdn.net/lindamtd/article/details/80693720

[3] Explains how HOG is built from first principles; the main point is understanding how the dimension of the HOG feature vector is computed:

https://blog.csdn.net/chaipp0607/article/details/70888899

[4] An analysis of multi-scale pedestrian detection with the detectMultiScale function:

http://blog.sina.com.cn/s/blog_844b767a0102wq9q.html

[5] Ideas for tuning the parameters of OpenCV's cv::ml::SVM module:

https://blog.csdn.net/justin_kang/article/details/79015601

[6] For a deeper understanding of HOG and what it really is:

https://www.cnblogs.com/zhazhiqiang/p/3595266.html

https://www.cnblogs.com/wjgaas/p/3597248.html

https://www.leiphone.com/news/201708/ZKsGd2JRKr766wEd.html

[7] HOG in 80 lines of Python, well worth reading:

https://blog.csdn.net/ppp8300885/article/details/71078555

[8] Understanding PSO, with a MATLAB implementation; the key is how the position and the velocity are updated:

https://blog.csdn.net/weixin_40679412/article/details/80571854

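1. Hand-written HOG & HOI feature extractors

Histogram of Oriented Gradient (HOG) is, as the name says, a histogram of gradient orientations.

Histogram of Intensity (HOI) is what is usually called a gray-level histogram; here you can also call it a brightness histogram.

In essence both do the same thing: they collect statistics over a local image region (gradient orientations for HOG, intensities for HOI) and use those statistics to describe that region.

HOG: a locally normalized histogram of gradient orientations. It is a dense descriptor computed over overlapping local regions of the image, and the feature is built from the gradient orientation histograms of those regions.

HOI: after reading the relevant HOI papers, my understanding is that HOI keeps the HOG framework and simply swaps the per-region feature for an intensity histogram. Nothing new!

So we first implement HOG extraction, then replace the per-region feature with an intensity histogram, and we get both HOG and HOI.

For extracting the HOG feature vector we follow the Python implementation in blog [7], and reimplement and wrap it in C++.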
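The HOI variant is not listed in this post, so here is a minimal sketch of what "replace the per-cell feature with an intensity histogram" could look like. The function name `cellIntensityHistogram` and the equal-width binning of the 0..255 range are assumptions made for illustration, not the code from the downloadable source.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper (not from the article's source): intensity histogram
// of one cell. `cell` holds the 8-bit gray values of the cell's pixels and
// `bins` plays the role of bin_size.
std::vector<double> cellIntensityHistogram(const std::vector<std::uint8_t>& cell, int bins)
{
    assert(bins > 0 && bins <= 256);
    std::vector<double> hist(bins, 0.0);
    for (std::uint8_t value : cell)
    {
        // Map 0..255 onto 0..bins-1; integer arithmetic keeps 255 in the last bin.
        int idx = static_cast<int>(value) * bins / 256;
        hist[idx] += 1.0;
    }
    return hist;
}
```

Such a per-cell vector has the same length as the orientation histogram (bin_size entries), so the block assembly and normalization steps of HOG could be reused unchanged.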

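1.1. Histogram of oriented gradients

Middle: one cell with its gradients drawn as arrows. Right: the same cell with its gradients given as numbers.

A histogram is built for each of these 8*8 cells. It has 9 bins, corresponding to the angles 0, 20, 40, ..., 160.

The voting works as follows, using the gradient magnitudes and directions of the cell from the previous figure. The direction selects the bin, and the magnitude determines how much is added to it. Take the pixel circled in blue first: its angle is 80 and its magnitude is 2, so 2 is added to the fifth bin. Now take the pixel circled in red: its angle is 10 and its magnitude is 4. Because 10 lies exactly halfway between 0 and 20, the magnitude is split in two and 2 is added to each of the bins for 0 and 20.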
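The voting rule described above can be sketched as a small function. It follows the 9-bin, 0 to 180 degree layout of the figure; the name `voteGradient` and the `binWidth` parameter are illustrative assumptions. The article's own code further down uses 360 / bin_size degrees per bin instead.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Adds one pixel's gradient to an orientation histogram whose bin centres
// sit at 0, binWidth, 2*binWidth, ... The magnitude is split between the two
// nearest bins in proportion to how close the angle is to each centre.
void voteGradient(std::vector<double>& hist, double angleDeg, double magnitude, double binWidth = 20.0)
{
    const int bins = static_cast<int>(hist.size());
    assert(bins > 0 && binWidth > 0.0);
    const double span = bins * binWidth;           // 180 degrees for 9 bins of 20
    double a = std::fmod(angleDeg, span);
    if (a < 0.0) a += span;
    const int lower = static_cast<int>(a / binWidth) % bins;
    const int upper = (lower + 1) % bins;           // 160..180 wraps back to bin 0
    const double frac = (a - lower * binWidth) / binWidth;
    hist[lower] += magnitude * (1.0 - frac);
    hist[upper] += magnitude * frac;
}
```

With a 9-element histogram, `voteGradient(hist, 80.0, 2.0)` adds 2 to the fifth bin (index 4), and `voteGradient(hist, 10.0, 4.0)` adds 2 to each of the first two bins, matching the two pixels discussed in the text.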

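1.2. Complete implementation of the HOG feature extraction algorithm

First, define the header file HOG.h

#pragma once
#ifndef _HOG_H
#define _HOG_H

#include <opencv2/opencv.hpp>
#include <algorithm>
#include <cmath>
#include <iostream>
#include <vector>

#define PI 3.14159

// Constructor parameters:
// bin_size:   number of orientation bins, i.e. the descriptor length of one cell
// cell_size:  side length of a cell in pixels
// scaleBlock: side length of a block in cells
// stride:     block sliding step in cells (a step of 1 moves the block by one cell_size)
// Note: the vector element type is assumed to be float; the template arguments were lost in the original listing.

class MYHOGDescriptor
{
public:
	MYHOGDescriptor(int bin_size, int cell_size, int scaleBlock, int stride); // constructor
	~MYHOGDescriptor(); // destructor
	std::vector<std::vector<float> > compute(cv::Mat &img); // computes the feature vectors, one per block
	cv::Mat getHOGpic(); // renders the cell histograms back into a visualization image

private:
	std::vector<float> cal_cell_gradient(cv::Mat &cell_magnitude, cv::Mat &cell_angle); // orientation histogram of a single cell
	double findMax3D(std::vector<std::vector<std::vector<float> > > vec); // largest value in a 3-D vector
	void normalizeVector(std::vector<float> &vec); // normalizes a block vector in place

private:
	int _height; // number of image rows
	int _width; // number of image columns
	int _angle_unit; // angle_unit = 360 / bin_size
	int _he; // he = int(height / cell_size)
	int _wi; // wi = int(width / cell_size)
	int _block_size; // block side length in pixels, block_size = scaleBlock * cell_size (unused)
	std::vector<std::vector<std::vector<float> > > _cell_gradient_vector; // un-normalized orientation histogram of every cell
	int _bin_size; // number of orientation bins
	int _cell_size; // cell side length in pixels
	int _scaleBlock; // block side length in cells
	int _stride; // block sliding step in cells
};

#endif // !_HOG_H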

Next comes HOG.cpp

#include"HOG.h"

using namespace cv;
using namespace std;

MYHOGDescriptor::MYHOGDescriptor(int bin_size, int cell_size, int scaleBlock, int stride)
{
	_bin_size = bin_size;
	_cell_size = cell_size;
	_scaleBlock = scaleBlock;
	_stride = stride;
	_angle_unit = 360 / _bin_size; // e.g. 360 / 8 = 45
	_block_size = _scaleBlock*_cell_size;
}

MYHOGDescriptor::~MYHOGDescriptor()
{
}

std::vector<std::vector<float> > MYHOGDescriptor::compute(cv::Mat &img)
{
	_height = img.rows; // image height
	_width = img.cols; // image width
	_he = _height / _cell_size; // number of cells vertically
	_wi = _width / _cell_size; // number of cells horizontally
	std::vector<std::vector<std::vector<float> > > tmp_cell_gradient_vector(_he, std::vector<std::vector<float> >(_wi, std::vector<float>(_bin_size)));
	_cell_gradient_vector.swap(tmp_cell_gradient_vector); // (re)initialize _cell_gradient_vector via vector::swap
	Mat MatTemp;
	cvtColor(img, MatTemp, COLOR_BGR2GRAY); // convert to grayscale (CV_BGR2GRAY in older OpenCV versions)
	// Sobel gradients
	int scale = 1;
	int delta = 0;
	Mat gradient_values_x = Mat::zeros(MatTemp.size(), CV_64FC1);
	Mat gradient_values_y = Mat::zeros(MatTemp.size(), CV_64FC1);
	Sobel(MatTemp, gradient_values_x, CV_64FC1, 1, 0, 5, scale, delta, BORDER_DEFAULT); // partial derivative along x
	Sobel(MatTemp, gradient_values_y, CV_64FC1, 0, 1, 5, scale, delta, BORDER_DEFAULT); // partial derivative along y
	//cout << "gradient_values_x --> "<< gradient_values_x << endl;
	//cout << "gradient_values_y --> " << gradient_values_y << endl;
	// Gradient magnitude and angle
	Mat gradient_magnitude = Mat::zeros(MatTemp.size(), CV_64FC1);
	Mat gradient_angle = Mat::zeros(MatTemp.size(), CV_64FC1);
	// Blends the two derivative images into an approximate magnitude map, as in the Python reference [7];
	// cv::magnitude would give the exact sqrt(gx^2 + gy^2) instead.
	addWeighted(gradient_values_x, 0.5, gradient_values_y, 0.5, 0, gradient_magnitude);
	gradient_magnitude = abs(gradient_magnitude);
	phase(gradient_values_x, gradient_values_y, gradient_angle, true); // gradient direction in degrees, 0..360
	//cout << "gradient_magnitude.shape --> " << gradient_magnitude.size() << "gradient_angle.shape --> " << gradient_angle.size() << endl;
	//cout << "gradient_magnitude --> "<< gradient_magnitude << endl;
	//cout << "gradient_angle --> " << gradient_angle << endl;
	// Print the 3-D array of cell histograms (debug):

//for (int x = 0; x < he; x++)

//{

// for (int y = 0; y < he; y++)

// {

// for (int k = 0; k < bin_size; k++)

// {

// cout << setw(5) << cell_gradient_vector[x][y][k] << " ";

// }

// cout << endl;

// }

// cout << endl;

//}

//cout << "cell_gradient_vector.size() --> " << cell_gradient_vector.size() << endl;

//cout << "he --> " << he << endl;

//cout << "wi --> " << wi << endl;

//cout << "cell_gradient_vector.shape[0] --> " << cell_gradient_vector.size() << endl;//16

//cout << "cell_gradient_vector.shape[1] --> " << cell_gradient_vector[0].size() << endl;//21

//cout << "cell_gradient_vector.shape[2] --> " << cell_gradient_vector[0][0].size() << endl;//8

	for (int i = 0; i < _cell_gradient_vector.size(); i++) // 16
	{
		for (int j = 0; j < _cell_gradient_vector[0].size(); j++) // 21
		{
			// Take the magnitude and angle patches that belong to this cell
			Mat cell_magnitude = gradient_magnitude(Range(i * _cell_size, (i + 1) * _cell_size), Range(j * _cell_size, (j + 1) * _cell_size));
			Mat cell_angle = gradient_angle(Range(i * _cell_size, (i + 1) * _cell_size), Range(j * _cell_size, (j + 1) * _cell_size));
			//cout << "cell_magnitude --> " << format(cell_magnitude, Formatter::FMT_PYTHON) << endl;
			//cout << "cell_angle --> " << format(cell_angle, Formatter::FMT_PYTHON) << endl;
			//double minv = 0.0, maxv = 0.0;
			//double* minp = &minv;
			//double* maxp = &maxv;
			//minMaxIdx(cell_angle, minp, maxp);
			//cout << maxv << endl;
			_cell_gradient_vector[i][j] = cal_cell_gradient(cell_magnitude, cell_angle); // orientation histogram of this cell
		}
	}
	vector<vector<float> > hog_vector;
	// Slide a block of _scaleBlock x _scaleBlock cells over the cell grid, _stride cells at a time
	int xconfig = _cell_gradient_vector.size() - (_scaleBlock - 2) - _stride;
	int yconfig = _cell_gradient_vector[0].size() - (_scaleBlock - 2) - _stride;
	for (int i = 0; i < xconfig; i = i + _stride)
	{
		for (int j = 0; j < yconfig; j = j + _stride)
		{
			vector<float> block_vector;
			for (int z = 0; z < _scaleBlock; z++)
			{
				for (int k = 0; k < _scaleBlock; k++)
				{
					// Append the orientation histogram of every cell in this block to block_vector
					block_vector.insert(block_vector.end(), _cell_gradient_vector[i + z][j + k].begin(), _cell_gradient_vector[i + z][j + k].end());
				}
			}
			normalizeVector(block_vector); // normalize all histograms of the block together
			hog_vector.push_back(block_vector); // this block is done
		}
	}
	//cout << "[ " << hog_vector.size() << "," << hog_vector[0].size() << " ]" << endl;
	return hog_vector;
}

// Computes the orientation histogram of one cell from its magnitude and angle patches
vector<float> MYHOGDescriptor::cal_cell_gradient(Mat &cell_magnitude, Mat &cell_angle)
{
	vector<float> orientation_centers = vector<float>(_bin_size);
	for (int i = 0; i < cell_magnitude.rows; i++)
	{
		for (int j = 0; j < cell_magnitude.cols; j++)
		{
			// Angles in gradient_angle lie between 0 and 360 degrees
			double gradient_strength = cell_magnitude.at<double>(i, j);
			double gradient_angle = cell_angle.at<double>(i, j); // e.g. 80
			int min_angle = int(gradient_angle / _angle_unit) % _bin_size; // (80/45)%8 = 1
			// max_angle is the upper neighbouring bin (normally min_angle + 45 degrees);
			// splitting the vote between the two bins helps emphasize edge features
			int max_angle = (min_angle + 1) % _bin_size; // (1+1)%8 = 2
			int mod = int(gradient_angle) % _angle_unit; // 80 % 45 = 35
			orientation_centers[min_angle] += (gradient_strength * (1 - (double(mod) / double(_angle_unit))));
			orientation_centers[max_angle] += (gradient_strength * (double(mod) / double(_angle_unit)));
		}
	}

//cout << "orientation_centers --> " << endl;

//for (int i = 0; i < orientation_centers.size(); i++)

//{

// cout << orientation_centers[i] << ",";

//}

//cout << endl;

//cout << "orientation_centers.size() --> " << orientation_centers.size() << endl;

return orientation_centers;

}

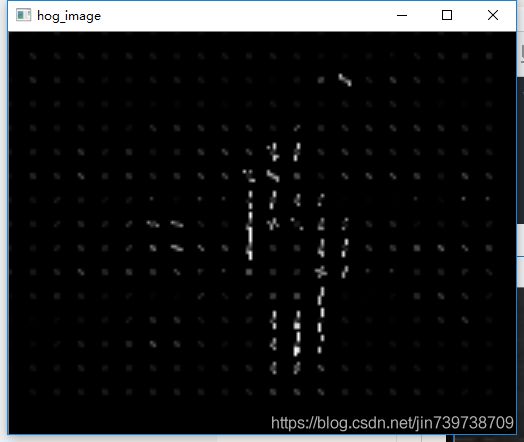

Mat MYHOGDescriptor::getHOGpic()

{

Mat hog_image = Mat::zeros(Size(_width,_height), CV_8U);

vector<vector<vector<float> > > cell_gradient(_cell_gradient_vector);

int cell_width = _cell_size / 2;

double max_mag = findMax3D(cell_gradient);

for (int x = 0; x < cell_gradient.size(); x++)

{

for (int y = 0; y < cell_gradient[0].size(); y++)

{

vector<float> cell_grad = vector<float>(_bin_size);

double angle = 0.0;

int angle_gap = _angle_unit;

for (int k = 0; k < cell_grad.size(); k++)

{

cell_grad[k] = cell_gradient[x][y][k] / max_mag;

double magnitude = cell_grad[k];

double angle_radian = angle*PI / 180;

int x1 = int(x * _cell_size + magnitude * cell_width * cos(angle_radian));//cosine component of the line end point

int y1 = int(y * _cell_size + magnitude * cell_width * sin(angle_radian));

int x2 = int(x * _cell_size - magnitude * cell_width * cos(angle_radian));

int y2 = int(y * _cell_size - magnitude * cell_width * sin(angle_radian));

line(hog_image, Point(y1, x1), Point(y2, x2), Scalar(int(255 * sqrt(magnitude))));

angle += angle_gap;

}

}

}

return hog_image;

}

double MYHOGDescriptor::findMax3D(vector<vector<vector<float> > > vec)

{

double max = -999;

for (int i = 0; i < vec.size(); i++)

{

for (int j = 0; j < vec[0].size(); j++)

{

for (int k = 0; k < vec[0][0].size(); k++)

{

if (max < vec[i][j][k])

{

max = vec[i][j][k];

}

}

}

}

return max;

}

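void MYHOGDescriptor::normalizeVector(vector<float> &vec)
{
	// Min-Max normalization: simple rescaling to [ymin, ymax]
	double ymax = 1;
	float ymin = 0;
	float dMaxValue = *max_element(vec.begin(), vec.end()); // maximum
	float dMinValue = *min_element(vec.begin(), vec.end()); // minimum
	for (int f = 0; f < vec.size(); ++f)
	{
		vec[f] = (ymax - ymin)*(vec[f] - dMinValue) / (dMaxValue - dMinValue + 1e-8) + ymin;
	}
}

When normalizing the orientation histograms of all cells in a single block, other normalization methods are worth trying too; the results will differ somewhat.

Here I only used the simplest one, Min-Max normalization, which rescales the values to [0,1]. Z-score normalization (standard-deviation / zero-mean normalization) is another option, but then the feature vector contains both positive and negative values!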

void MYHOGDescriptor::normalizeVector(vector<float> &vec)
{
	// Min-Max normalization: simple rescaling (disabled here)
	//double ymax = 1;
	//float ymin = 0;
	//float dMaxValue = *max_element(vec.begin(), vec.end()); // maximum
	//float dMinValue = *min_element(vec.begin(), vec.end()); // minimum
	//for (int f = 0; f < vec.size(); ++f)
	//{
	// vec[f] = (ymax - ymin)*(vec[f] - dMinValue) / (dMaxValue - dMinValue + 1e-8) + ymin;
	//}
	// Z-score normalization (standard-deviation / zero-mean normalization)
	double sum = 0;
	for (int i = 0; i < vec.size(); i++)
	{
		sum += vec[i];
	}
	double mean = sum / vec.size();
	double var = 0;
	for (int i = 0; i < vec.size(); i++)
	{
		var += (vec[i] - mean)*(vec[i] - mean);
	}
	var = var / (vec.size() - 1);
	double stddev = sqrt(var);
	for (int i = 0; i < vec.size(); i++)
	{
		vec[i] = (vec[i] - mean) / (stddev + 1e-8); // the small epsilon avoids a division by zero for constant blocks
	}
}
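Besides Min-Max and Z-score, an L2-type block normalization is what OpenCV's cv::HOGDescriptor uses by default (L2-Hys). A minimal sketch of plain L2 normalization, without the clipping step of L2-Hys, is given below; the function name `normalizeL2` and the value of `eps` are assumptions chosen for illustration, and this is not part of the article's source.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// L2 normalization of one block vector: v <- v / sqrt(||v||^2 + eps^2).
// eps is an assumed small constant that guards against all-zero blocks.
void normalizeL2(std::vector<float>& vec, float eps = 1e-6f)
{
    assert(eps > 0.0f);
    double sumSq = 0.0;
    for (float v : vec) sumSq += static_cast<double>(v) * v;
    const double norm = std::sqrt(sumSq + static_cast<double>(eps) * eps);
    for (float& v : vec) v = static_cast<float>(v / norm);
}
```

Like Min-Max, this keeps non-negative histograms non-negative, and an all-zero block stays all zero. Whether it improves the classification accuracy on this dataset would have to be checked experimentally.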

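Next, an example that uses the MYHOGDescriptor class from HOG.h

#include"HOG.h"

using namespace cv;
using namespace std;

int main()
{
	Mat img = imread("203.png");
	if (img.empty()) // stop early if the image could not be read
	{
		return -1;
	}
	// Create the HOG feature extractor and set its parameters
	int bin_size=8; // number of orientation bins (descriptor length of one cell)
	int cell_size=8; // cell side length in pixels
	int scaleBlock=2; // block side length in cells
	int stride=1; // block sliding step in cells (a step of 1 moves the block by one cell_size)
	MYHOGDescriptor hogi(bin_size, cell_size, scaleBlock, stride);
	// Compute the HOG features of the image
	vector<vector<float> > hog_vector = hogi.compute(img);
	// Get the visualization image of the HOG features
	Mat hog_image = hogi.getHOGpic();
	resize(hog_image, hog_image, Size(0, 0), 3, 3);
	imshow("hog_image", hog_image);
	resize(img, img, Size(0, 0), 3, 3);
	imshow("img", img);
	waitKey(0);
	return 0;
}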
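To sanity-check the size of the descriptor, the number of blocks and the total feature length can be computed by hand. The sketch below uses the usual sliding-window count, floor((cells - blockCells) / stride) + 1 per axis; the function names are illustrative assumptions and do not appear in the article's source.

```cpp
#include <cassert>

// Number of block positions along one axis when a block of `blockCells`
// cells slides over `cells` cells in steps of `strideCells` cells.
int blocksAlongAxis(int cells, int blockCells, int strideCells)
{
    assert(blockCells > 0 && strideCells > 0);
    if (cells < blockCells) return 0;
    return (cells - blockCells) / strideCells + 1;
}

// Total descriptor length for an image of height x width pixels.
int hogFeatureLength(int height, int width, int cellSize, int blockCells, int strideCells, int binSize)
{
    assert(cellSize > 0);
    const int cellsY = height / cellSize;
    const int cellsX = width / cellSize;
    const int perBlock = blockCells * blockCells * binSize;
    return blocksAlongAxis(cellsY, blockCells, strideCells)
         * blocksAlongAxis(cellsX, blockCells, strideCells)
         * perBlock;
}
```

For a 64 x 32 window with cell_size 8, scaleBlock 2, stride 1 and 8 bins this gives 7 * 3 * 32 = 672 values, and for the classic 128 x 64 window with 9 bins it gives 3780. With stride 1 the count agrees with the xconfig and yconfig expressions used in this post; for larger strides the two differ, so it is worth comparing against the size that compute actually returns.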

2. Using the cv::ml::SVM module

The Support Vector Machine (SVM) is one of the classic classifiers in machine learning.

OpenCV ships a ready-made cv::ml::SVM module. With the HOG descriptor from the previous section in place, we can call it directly.

For how to use cv::ml::SVM, see blogs [1] and [2], which are very well written. Here I go straight to the code.

First, SVM.h

#pragma once
#ifndef _SVM_H
#define _SVM_H

#include"HOG.h"
#include <fstream>
#include <string>
#include <vector>

typedef enum { PNG, MP4, TXT } InputFileType;

class HOGSVM
{
public:
	HOGSVM(std::string cpatch,std::string depatch,FeatureType ft);
	~HOGSVM();

	// Reads the image paths listed in the txt files. Training images go into TrainImagePath and TrainImageClass,
	// test images go into TestImagePath and TestImageClass.
	// pos is class 1, neg is class -1
	void dataPrepare();

	void detectPIC(std::string filename); // runs detection on the listed images

	// Generic test entry point, so that other input types
	// (for example mp4 files) can be added later
	void demo(std::string filename, InputFileType type);

	// Loads the images in TrainImagePath/TrainImageClass and extracts their feature vectors
	// according to FeatureType, which builds the training set
	void DataOfTrain(int bin_size, int cell_size, int scaleBlock, int stride);

	// Loads the images in TestImagePath/TestImageClass and extracts their feature vectors
	// according to FeatureType, which builds the test set
	void DataOfTest(int bin_size, int cell_size, int scaleBlock, int stride);

	// Trains the classifier
	void TrainClassifier(int bin_size, int cell_size, int scaleBlock, int stride, int kerneltype, int maxCount);

	// Tests the classifier and returns the accuracy in percent
	float TestClassifier(int bin_size, int cell_size, int scaleBlock, int stride, int kerneltype, int maxCount);

private:
	std::ifstream trainingData;
	std::ifstream testingData;
	std::vector<std::string> TrainImagePath;
	std::vector<int> TrainImageClass;
	std::vector<std::string> TestImagePath;
	std::vector<int> TestImageClass;
	cv::Mat featureVectorOfTrain;
	cv::Mat classOfTrain;
	cv::Mat featureVectorOfTest;
	cv::Mat classOfTest;
	int lenOfHogFeature; // length of the feature vector
	int numOfTrainData = 0; // number of training images
	int numOfTestData = 0; // number of test images
	std::string CLASSFILEPATH; // folder that holds the classification data
	std::string DETECTFILEPATH; // folder that holds the detection data
	FeatureType MYHOG; // feature extraction mode, defined in HOG.h: HOG, HOI, HOGI, OPENCV
};

#endif // !_SVM_H

SVM.cpp

#include"SVM.h"

using namespace cv;

using namespace std;

HOGSVM::HOGSVM(std::string cpatch, std::string depatch, FeatureType ft)

{

CLASSFILEPATH= cpatch;

DETECTFILEPATH= depatch;

MYHOG= ft;

}

HOGSVM::~HOGSVM()

{

}

void HOGSVM::dataPrepare()
{
	double dur;
	clock_t start, end;
	start = clock();
	// Reads one list file (one image path per line) and appends its entries with the given class label
	auto readList = [this](ifstream &in, const string &listFile, int label,
		vector<string> &paths, vector<int> &classes, int &count)
	{
		in.open(string(CLASSFILEPATH) + listFile, ios::in); // ios::in opens the file read-only
		if (in.fail()) // the file could not be opened
		{
			cout << "open fail!" << endl;
		}
		string buffer;
		while (getline(in, buffer, '\n'))
		{
			//cout << buffer << endl;
			count++;
			classes.push_back(label);
			paths.push_back(string(CLASSFILEPATH) + buffer);
		}
		in.close();
	};
	readList(trainingData, "Train/pos.txt", 1, TrainImagePath, TrainImageClass, numOfTrainData);
	readList(trainingData, "Train/neg.txt", -1, TrainImagePath, TrainImageClass, numOfTrainData);
	cout << "Training data loaded!" << endl;
	readList(testingData, "Test/pos.txt", 1, TestImagePath, TestImageClass, numOfTestData);
	readList(testingData, "Test/neg.txt", -1, TestImagePath, TestImageClass, numOfTestData);
	cout << "Test data loaded!" << endl;
	end = clock();
	dur = (double)(end - start);
	printf("dataPrepare Use Time: %f\n", (dur / CLOCKS_PER_SEC));
}

//int bin_size = 8; // number of orientation bins (descriptor length of one cell)
//int cell_size = 8; // cell side length in pixels
//int scaleBlock = 2; // block side length in cells
//int stride = 1; // block sliding step in cells (a step of 1 moves the block by one cell_size)
void HOGSVM::DataOfTrain(int bin_size, int cell_size, int scaleBlock, int stride)
{
	if (MYHOG != OPENCV)
	{
		cout << "Using the hand-written HOGI feature extractor!" << endl;
	}
	else
	{
		cout << "Using OpenCV's HOG feature extractor!" << endl;
	}
	double dur;
	clock_t start, end;
	start = clock();
	// Extract the HOG features of the samples
	cout << "Building the training set..........." << endl;
	int height = 64;//134//64//128
	int width = 32;//169//32//64
	int he = height / cell_size;//8
	int wi = width / cell_size;//4
	int blocksize = scaleBlock*cell_size;
	int xconfig = he - (scaleBlock - 2) - stride;
	int yconfig = wi - (scaleBlock - 2) - stride;
	int zconfig = pow(scaleBlock, 2)*bin_size;
	cout << "HOGI feature size --> " << "[ " << xconfig << " x " << yconfig << " x " << zconfig << " ]" << endl;
	lenOfHogFeature = xconfig*yconfig*zconfig;
	// Feature matrix, one row per sample
	int numOfSample = numOfTrainData;
	featureVectorOfTrain = Mat::zeros(numOfSample, lenOfHogFeature, CV_32FC1);
	// Class label of every sample
	classOfTrain = Mat::zeros(numOfSample, 1, CV_32SC1);
	cv::Mat convertedImg;
	cv::Mat trainImg;
	for (vector<string>::size_type i = 0; i < TrainImagePath.size(); i++)
	{
		//cout << "Processing: " << TrainImagePath[i] << endl;
		cv::Mat trainImg = cv::imread(TrainImagePath[i]);
		if (trainImg.empty())
		{
			cout << "can not load the image:" << TrainImagePath[i] << endl;
			continue;
		}
		//cv::resize(src, trainImg, cv::Size(64, 128));
		// Extract the HOG features
		vector<float> descriptors;
		if (MYHOG != OPENCV)
		{
			MYHOGIDescriptor hogi(bin_size, cell_size, scaleBlock, stride);
			vector<vector<float> > hogi_vector = hogi.compute(trainImg, MYHOG);
			//cout << "[ " << hogi_vector.size() << "," << hogi_vector[0].size() << " ]" << endl;//[ 105,32 ]
			// Flatten the per-block vectors into one descriptor
			for (int k = 0; k < hogi_vector.size(); k++)
			{
				descriptors.insert(descriptors.end(), hogi_vector[k].begin(), hogi_vector[k].end());
			}
			//cout << "hog feature vector: --> " << descriptors.size() << endl;
		}
		else
		{
			cv::HOGDescriptor hog(cv::Size(width, height), cv::Size(blocksize, blocksize), cv::Size(stride*cell_size, stride*cell_size), cv::Size(cell_size, cell_size), bin_size);
			//cv::HOGDescriptor hog(cv::Size(64, 128), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 8);
			hog.compute(trainImg, descriptors);
		}

//ofstream outfile;

//outfile.open("HOGDATA.txt");

//outfile << setiosflags(ios::fixed) << setprecision(6) << setiosflags(ios::left) << endl;

//for (int j = 0; j <21 ; j++)

//{

// for (i = 0; i < 32; i++)

// {

// outfile << descriptors[j*32+i] << " ";

// }

// outfile <<"\n" ;

//}

//outfile.close();

		for (vector<float>::size_type j = 0; j < descriptors.size(); j++)
		{
			featureVectorOfTrain.at<float>(i, j) = descriptors[j];
		}
		classOfTrain.at<int>(i, 0) = TrainImageClass[i];
	}
	cout << "size of featureVectorOfTrain: " << featureVectorOfTrain.size() << endl;
	cout << "size of classOfTrain: " << classOfTrain.size() << endl;
	cout << "Training set built!" << endl;
	//vector<string>().swap(TrainImagePath);
	//vector<int>().swap(TrainImageClass);
	end = clock();
	dur = (double)(end - start);
	printf("DataOfTrain Use Time: %f\n", (dur / CLOCKS_PER_SEC));
}

//int bin_size = 8; // number of orientation bins (descriptor length of one cell)
//int cell_size = 8; // cell side length in pixels
//int scaleBlock = 2; // block side length in cells
//int stride = 1; // block sliding step in cells (a step of 1 moves the block by one cell_size)
void HOGSVM::DataOfTest(int bin_size, int cell_size, int scaleBlock, int stride)
{
	double dur;
	clock_t start, end;
	start = clock();
	// Extract the HOG features of the samples
	cout << "Building the test set..........." << endl;
	// Class label of every sample
	int numOfSample = numOfTestData;
	classOfTest = Mat::zeros(numOfSample, 1, CV_32SC1);
	cv::Mat convertedImg;
	cv::Mat testImg;
	int height = 64;//134//64//128
	int width = 32;//169//32//64
	int he = height / cell_size;
	int wi = width / cell_size;
	int blocksize = scaleBlock*cell_size;
	int xconfig = he - (scaleBlock - 2) - stride;
	int yconfig = wi - (scaleBlock - 2) - stride;
	int zconfig = pow(scaleBlock, 2)*bin_size;
	//cout << xconfig << " " << yconfig << " " << zconfig << endl;
	// Feature matrix, one row per sample
	lenOfHogFeature = xconfig*yconfig*zconfig;
	featureVectorOfTest = Mat::zeros(numOfSample, lenOfHogFeature, CV_32FC1);
	for (vector<string>::size_type i = 0; i < TestImagePath.size(); i++)
	{
		//cout << "Processing: " << TestImagePath[i] << endl;
		cv::Mat testImg = cv::imread(TestImagePath[i]);
		if (testImg.empty())
		{
			cout << "can not load the image:" << TestImagePath[i] << endl;
			continue;
		}
		//cv::resize(src, testImg, cv::Size(64, 128));
		vector<float> descriptors;
		if (MYHOG != OPENCV)
		{
			// Hand-written extractor
			MYHOGIDescriptor hogi(bin_size, cell_size, scaleBlock, stride);
			vector<vector<float> > hogi_vector = hogi.compute(testImg, MYHOG);
			//cout << "[ " << hogi_vector.size() << "," << hogi_vector[0].size() << " ]" << endl;//[ 105,32 ]
			// Flatten the per-block vectors into one descriptor
			for (int k = 0; k < hogi_vector.size(); k++)
			{
				descriptors.insert(descriptors.end(), hogi_vector[k].begin(), hogi_vector[k].end());
			}
			//cout << "hog feature vector: --> " << descriptors.size() << endl;
		}
		else
		{
			cv::HOGDescriptor hog(cv::Size(width, height), cv::Size(blocksize, blocksize), cv::Size(stride*cell_size, stride*cell_size), cv::Size(cell_size, cell_size), bin_size);
			//cv::HOGDescriptor hog(cv::Size(64, 128), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 8);
			hog.compute(testImg, descriptors);
		}
		for (vector<float>::size_type j = 0; j < descriptors.size(); j++)
		{
			featureVectorOfTest.at<float>(i, j) = descriptors[j];
		}
		classOfTest.at<int>(i, 0) = TestImageClass[i];
	}
	cout << "size of featureVectorOfTest: " << featureVectorOfTest.size() << endl;
	cout << "size of classOfTest: " << classOfTest.size() << endl;
	cout << "Test set built!" << endl;
	//vector<string>().swap(TestImagePath);
	//vector<int>().swap(TestImageClass);
	end = clock();
	dur = (double)(end - start);
	printf("DataOfTest Use Time: %f\n", (dur / CLOCKS_PER_SEC));
}

void HOGSVM::TrainClassifier(int bin_size, int cell_size, int scaleBlock, int stride, int kerneltype, int maxCount)
{
	double dur;
	clock_t start, end;
	start = clock();
	cout << "Training the classifier!" << endl;
	// Train an SVM classifier
	// Set the parameters; note the use of cv::Ptr
	cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::create();
	svm->setType(cv::ml::SVM::C_SVC);
	//svm->setKernel(cv::ml::SVM::LINEAR);//RBF//LINEAR
	if (kerneltype == 0)
	{
		svm->setKernel(cv::ml::SVM::LINEAR);//RBF//LINEAR
	}
	else
	{
		svm->setKernel(cv::ml::SVM::RBF);//RBF//LINEAR
	}
	svm->setTermCriteria(cv::TermCriteria(cv::TermCriteria::MAX_ITER, maxCount, FLT_EPSILON));
	// Train the SVM
	svm->train(featureVectorOfTrain, cv::ml::ROW_SAMPLE, classOfTrain);
	// Save the trained classifier (it contains the SVM parameters, the support vectors, alpha and rho)
	svm->save(string(CLASSFILEPATH) +to_string(bin_size)+"_"+to_string(cell_size)+"_"+to_string(scaleBlock)+"_"+to_string(stride)+"_"+to_string(kerneltype)+"_"+ to_string(maxCount)+"_classifier.xml");
	/*
	The XML file written after SVM training contains an array called support vector, an array called alpha, and a floating-point number called rho.
	Multiply the alpha matrix by the support vectors (alpha*supportVector) to get a row vector, and multiply that vector by -1. Then append rho as the last element of the row vector.
	This yields a classifier that can directly replace OpenCV's default pedestrian detector (cv::HOGDescriptor::setSVMDetector()).
	*/
	// Get the support vectors
	cv::Mat supportVector = svm->getSupportVectors();
	// Get alpha and rho
	cv::Mat alpha;
	cv::Mat svIndex;
	float rho = svm->getDecisionFunction(0, alpha, svIndex);
	// Type conversion: alpha must be converted to 32-bit float before the multiplication
	cv::Mat alpha2;
	alpha.convertTo(alpha2, CV_32FC1);
	// Result matrix: product of the two matrices
	cv::Mat result(1, lenOfHogFeature, CV_32FC1);
	result = alpha2 * supportVector;
	// Multiply by -1. Why?
	// svm.predict evaluates alpha*sv*another-rho and treats a negative value as the positive class, whereas the HOG detection function uses rho+alpha*sv*another (with another being -1)
	//for (int i = 0;i < 3780;i++)
	//result.at<float>(0, i) *= -1;
	// Save the classifier to a file so that HOG detection can load it.
	// These are the actual parameters (w) of the decision function; HOG can use them directly for detection
	FILE *fp = fopen((string(CLASSFILEPATH) + to_string(bin_size) + "_" + to_string(cell_size) + "_" + to_string(scaleBlock) + "_" + to_string(stride) + "_" + to_string(kerneltype) + "_" + to_string(maxCount) + "_HOG_SVM.txt").c_str(), "wb");
	if (fp != NULL) // skip writing if the file could not be created
	{
		for (int i = 0; i<lenOfHogFeature; i++)
		{
			fprintf(fp, "%f \n", result.at<float>(0, i));
		}
		fprintf(fp, "%f", rho);
		fclose(fp);
	}
	cout << "Training finished!" << endl;
	featureVectorOfTrain.release();
	classOfTrain.release();
	end = clock();
	dur = (double)(end - start);
	printf("TrainClassifier Use Time: %f\n", (dur / CLOCKS_PER_SEC));
}

float HOGSVM::TestClassifier(int bin_size, int cell_size, int scaleBlock, int stride, int kerneltype, int maxCount)
{
	double dur;
	clock_t start, end;
	start = clock();
	cout << "Testing the classification accuracy!" << endl;
	string modelpath = string(CLASSFILEPATH) + to_string(bin_size) + "_" + to_string(cell_size) + "_" + to_string(scaleBlock) + "_" + to_string(stride) + "_" + to_string(kerneltype) + "_" + to_string(maxCount) + "_classifier.xml";
	Ptr<ml::SVM> svm = Algorithm::load<ml::SVM>(modelpath);
	int featureVectorrows = featureVectorOfTest.rows;
	int featureVectorcols = featureVectorOfTest.cols;
	float TrueNum = 0;
	for (int i = 0; i < featureVectorrows; i++)
	{
		// Copy row i of the test matrix into a 1 x N sample
		Mat testFeatureMat = Mat::zeros(1, featureVectorcols, CV_32FC1);
		for (int j = 0; j<featureVectorcols; j++)
		{
			testFeatureMat.at<float>(0, j) = featureVectorOfTest.at<float>(i, j);
		}
		testFeatureMat.convertTo(testFeatureMat, CV_32F);
		int response = (int)svm->predict(testFeatureMat);
		//cout << "response: " << response << " vs " << "theTrue: " << classOfTest.at<int>(i, 0) << endl;
		if (response == classOfTest.at<int>(i, 0))
		{
			TrueNum++;
		}
	}
	std::cout << "TrueNum: " << TrueNum << endl;
	std::cout << "TotalNum: " << featureVectorrows << endl;
	std::cout << "Result: " << TrueNum / float(featureVectorrows) * 100 << "%" << endl;
	featureVectorOfTest.release();
	classOfTest.release();
	end = clock();
	dur = (double)(end - start);
	printf("TestClassifier Use Time: %f\n", (dur / CLOCKS_PER_SEC));
	return TrueNum / float(featureVectorrows) * 100;
}

void HOGSVM::detectPIC(string filename)
{
	ifstream f;
	// Open the list of test image paths
	f.open(string(DETECTFILEPATH) + filename, ios::in); // ios::in opens the file read-only
	if (f.fail())
	{
		fprintf(stderr, "ERROR: the specified file could not be loaded\n");
		return;
	}
	// Load the trained decision-function parameters (note: this is not the classifier written by svm->save)
	vector<float> detector;
	ifstream fileIn(string(CLASSFILEPATH) + "HOG_SVM.txt", ios::in);
	float val = 0.0f;
	while (fileIn >> val) // stops cleanly at end of file, so the last value is not pushed twice
	{
		detector.push_back(val);
	}
	fileIn.close();
	// Set up HOG
	//cv::HOGDescriptor hog;
	//blocksize=scaleblock*cell_size
	cv::HOGDescriptor hog(cv::Size(32, 64), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 8);
	hog.setSVMDetector(detector);
	//hog.setSVMDetector(cv::HOGDescriptor::getDefaultPeopleDetector());
	cv::namedWindow("people detector", 1);
	// Run detection on every listed image
	string buffer;
	while (getline(f, buffer, '\n'))
	{
		cv::Mat img = cv::imread(string(DETECTFILEPATH) + buffer);
		//resize(img, img, Size(0, 0), 2, 2);
		cout << buffer << endl;
		if (!img.data)
			continue;
		vector<cv::Rect> found, found_filtered;
		// Multi-scale detection
		hog.detectMultiScale(img, found, -8, cv::Size(16, 16), cv::Size(0, 0), 1.05, 5);
		//hog.detectMultiScale(img, found, -6, cv::Size(2, 2), cv::Size(0, 0), 1.05, 20);
		cout << "found.size() :" << found.size() << endl;
		//if (!found.size())
		//{
		// continue;
		//}
		size_t i, j;
		// Drop rectangles that lie completely inside another one; keep the larger, outer rectangle
		for (i = 0; i < found.size(); i++)
		{
			cv::Rect r = found[i];
			for (j = 0; j < found.size(); j++)
				if (j != i && (r & found[j]) == r)
					break;
			if (j == found.size())
				found_filtered.push_back(r);
		}
		// Adjust the rectangles slightly
		for (i = 0; i < found_filtered.size(); i++)
		{
			cv::Rect r = found_filtered[i];
			// the HOG detector returns slightly larger rectangles than the real objects.
			// so we slightly shrink the rectangles to get a nicer output.
			r.x += cvRound(r.width*0.5);
			//r.width = cvRound(r.width*1.5);
			//r.y -= cvRound(r.height*0.65);
			//r.height = cvRound(r.height*1.7);
			rectangle(img, r.tl(), r.br(), cv::Scalar(0, 0, 255), 1);
		}
		resize(img, img, Size(0, 0), 3, 3);
		imshow("people detector", img);
		int c = cv::waitKey(30);
		if (c == 'q' || c == 'Q' || !f)
			break;
	}
}

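void HOGSVM::demo(string filename, InputFileType type)
{
	if (type == TXT)
	{
		detectPIC(filename);
	}
}

Finally, write a test. The image paths used for classification training and testing are listed in pos.txt and neg.txt under LSIFIR_equalHist\Classification\Train and LSIFIR_equalHist\Classification\Test. These files are copies of pos.lst and neg.lst from the same folders; edit them to change how many images are used for training and testing.

Part of the pos.txt file is shown in the screenshot below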

#include"SVM.h"

using namespace cv;
using namespace std;

string CLASSFILEPATH = "E:/LSIFIR_equalHist/Classification/";
string DETECTFILEPATH = "E:/LSIFIR_equalHist/Detection/";
FeatureType MYHOG = HOGI;//HOGI

void OneTrainNTest();
void MultiTest();

int main()
{
	OneTrainNTest();
	//MultiTest();
	return 0;
}

void MultiTest()
{
	HOGSVM *hogsvmer = new HOGSVM(CLASSFILEPATH, DETECTFILEPATH, MYHOG);
	string filename = "Train/pos.txt";
	hogsvmer->demo(filename, TXT);
	delete hogsvmer; // release the object created with new
}

void OneTrainNTest()
{
	int bin_size = 8; // number of orientation bins (descriptor length of one cell)
	int cell_size = 8; // cell side length in pixels
	int scaleBlock = 2; // block side length in cells
	int stride = 1; // block sliding step in cells (a step of 1 moves the block by one cell_size)
	int kerneltype = 0; // 0 = linear kernel, anything else = RBF
	int maxCount = 1000000; // maximum number of SVM training iterations
	HOGSVM *hogsvmer = new HOGSVM(CLASSFILEPATH, DETECTFILEPATH, MYHOG);
	hogsvmer->dataPrepare();
	hogsvmer->DataOfTrain(bin_size, cell_size, scaleBlock, stride);
	hogsvmer->DataOfTest(bin_size, cell_size, scaleBlock, stride);
	hogsvmer->TrainClassifier(bin_size, cell_size, scaleBlock, stride, kerneltype, maxCount);
	float target = hogsvmer->TestClassifier(bin_size, cell_size, scaleBlock, stride, kerneltype, maxCount);
	delete hogsvmer; // release the object created with new
}

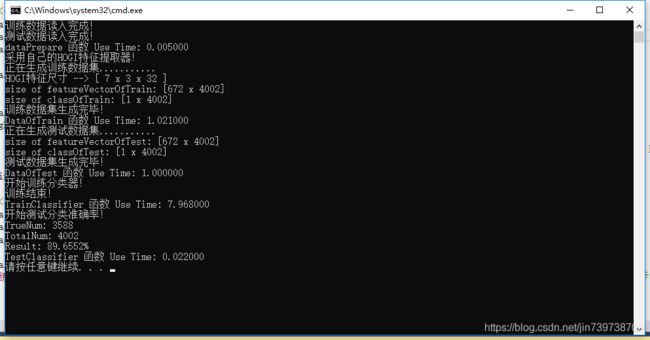

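Classification results after training

Training the classifier on 2001 pos and 2001 neg images gives a classification accuracy of 89.6%, which is mediocre.

The detection results are also mediocre: both the miss rate and the false-positive rate are high!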
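The step in TrainClassifier that collapses a linear SVM into one detector vector can be sketched without OpenCV as follows. The function name `buildLinearDetector` is hypothetical. The sign of the last element is an assumption taken from OpenCV's own train_HOG sample, which stores -rho, whereas the code in this post writes rho unchanged; which convention is right depends on the OpenCV version and on the label order, so treat this as something to verify rather than as a fix.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Collapses a linear SVM into a single weight vector plus bias term:
// w = sum_i alpha[i] * supportVectors[i], followed by -rho as the last entry.
std::vector<float> buildLinearDetector(const std::vector<std::vector<float>>& supportVectors,
                                       const std::vector<float>& alpha, float rho)
{
    assert(!supportVectors.empty() && supportVectors.size() == alpha.size());
    const std::size_t dim = supportVectors[0].size();
    std::vector<float> detector(dim + 1, 0.0f);
    for (std::size_t i = 0; i < supportVectors.size(); ++i)
    {
        assert(supportVectors[i].size() == dim);
        for (std::size_t j = 0; j < dim; ++j)
            detector[j] += alpha[i] * supportVectors[i][j];
    }
    detector[dim] = -rho;  // sign convention assumed from OpenCV's train_HOG sample
    return detector;
}
```

The resulting vector has one more element than the descriptor length, which is the size cv::HOGDescriptor::setSVMDetector expects. If detections look inverted or almost empty, the bias sign is one of the first things worth checking, before compensating with a strongly negative hit threshold.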

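3. Hand-written PSO (particle swarm optimization)

Particle Swarm Optimization, PSO for short.

According to several papers, an optimization method can be used to search for good SVM and HOG parameters, which should in turn improve the final detection results.

Of course, this is mostly something to play with in papers; in practice it costs a lot of time and effort for little gain.

The most important thing in PSO is how the particle positions and velocities are updated.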
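As a compact restatement of the update rule implemented in PSO.cpp, the equations below use notation introduced here for illustration: $x_{ij}$ and $v_{ij}$ are the position and velocity of particle $i$ in dimension $j$, $p_{ij}$ is that particle's personal best, $g_j$ is the global best, $r_1, r_2$ are random numbers in $[0, 1)$ drawn anew for every dimension, and $[l_j, u_j]$ is the search range of dimension $j$. The constants $w$, $c_1$, $c_2$ and $v_{\max}$ correspond to the members w, c1, c2 and vmax of the PSO class.

```latex
\begin{aligned}
v_{ij} &\leftarrow w\,v_{ij} + c_1 r_1\,(p_{ij} - x_{ij}) + c_2 r_2\,(g_j - x_{ij}) \\
v_{ij} &\leftarrow \min\bigl(\max(v_{ij},\,-v_{\max}),\; v_{\max}\bigr) \\
x_{ij} &\leftarrow x_{ij} + v_{ij} \\
x_{ij} &\leftarrow \min\bigl(\max(x_{ij},\, l_j),\; u_j\bigr)
\end{aligned}
```

The first line mixes inertia, attraction to the particle's own best position and attraction to the swarm's best position; the remaining lines clamp the velocity and keep the particle inside the search range.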

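Here we write a simple multi-dimensional PSO. The downloadable source wraps PSO into the rest of the code; this section only shows the code needed to build a basic PSO.

First, PSO.h

#pragma once
#ifndef _PSO_H
#define _PSO_H

#include <cmath>
#include <cstdlib>
#include <iostream>
#include <string>
#include <utility>
#include <vector>

/*
Usage example (element type double is assumed; the template arguments were lost in the original listing):

int particlesize = 50;
std::pair<double, double> temprange[6] =
{
	make_pair(-10,10),
	make_pair(-10,10),
	make_pair(-10,10),
	make_pair(-10,10),
	make_pair(-10,10),
	make_pair(-10,10),
};
vector<pair<double, double> > xrange;
xrange.insert(xrange.begin(), temprange, temprange + 6);
PSO *pso = new PSO(xrange, particlesize);
pso->search();
delete pso;
*/

typedef struct partical
{
	double fitness; // value of the target function at x
	std::vector<double> x; // position
	std::vector<double> v; // velocity
	int narvs; // number of dimensions
}partical;

class PSO
{
public:
	PSO(std::vector<std::pair<double, double> > &input_range, int psize)
	{
		particlesize = psize;
		narvs = input_range.size();
		input_range.swap(xrange); // takes over the ranges; the caller's vector is left empty
		init();
	}
	~PSO()
	{
	}
	void update();
	void search();
	double targetfunction(std::vector<double> &x);
	void BubbleSort(std::vector<partical> &arr);

private:
	void init();

public:
	std::vector<partical> p; // current particles
	std::vector<partical> personalbest; // best state seen by each particle
	partical globalbest; // best state seen by the whole swarm
	std::vector<double> ff; // best fitness after every iteration
	partical finalbest; // result of search()

private:
	double E = 0.000001; // stop once the best fitness drops below this value
	int maxnum = 800; // maximum number of iterations
	int c1 = 2; // cognitive (individual) learning factor, acceleration constant
	int c2 = 2; // social learning factor, acceleration constant
	double w = 0.6; // inertia weight
	int vmax = 5; // maximum particle velocity
	int particlesize; // number of particles
	int narvs; // number of dimensions of a particle
	std::vector<std::pair<double, double> > xrange; // allowed range of every dimension
};

#endif // !_PSO_H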

Then comes PSO.cpp

#include"PSO.h"

using namespace std;

void PSO::update()

{

for (int i = 0; i < p.size(); i++)

{

p[i].fitness = targetfunction(p[i].x);

if (p[i].fitness < personalbest[i].fitness)

{

personalbest[i] = p[i];

}

}

vector<partical> temp(personalbest);

BubbleSort(temp);

globalbest = temp[0];

for (int i = 0; i < p.size(); i++)

{

for (int j = 0; j < p[i].narvs; j++)

{

p[i].v[j] = w*p[i].v[j]

+ c1*((rand() % 100) / (double)(100))*(personalbest[i].x[j] - p[i].x[j])

+ c2*((rand() % 100) / (double)(100))*(globalbest.x[j] - p[i].x[j]);//update the velocity

if (p[i].v[j] > vmax)

{

p[i].v[j] = vmax;

}

else if(p[i].v[j] < -vmax)

{

p[i].v[j] = -vmax;

}

p[i].x[j] = p[i].x[j] + p[i].v[j];//update the position

if (p[i].x[j] > xrange[j].second)//keep the position inside the search range

{

p[i].x[j] = xrange[j].second;

}

else if (p[i].x[j] < xrange[j].first)

{

p[i].x[j] = xrange[j].first;

}

}

}

}

void PSO::search()

{

int k = 1;

while (k <= maxnum)

{

update();

ff.push_back(globalbest.fitness);

cout.precision(4);

cout << "PSO --> " << "fitness: " << globalbest.fitness <<"\t";

for (int i = 0; i < globalbest.narvs; i++)

{

cout << to_string(i) << " : " << globalbest.x[i] <<"\t";

}

cout << endl;

if (globalbest.fitness < E)

{

break;

}

k++;

}

finalbest = globalbest;

cout << "FIN --> " << "fitness: " << globalbest.fitness << "\t";

for (int i = 0; i < globalbest.narvs; i++)

{

cout << to_string(i) << " : " << globalbest.x[i] << "\t";

}

cout << endl;

}

double PSO::targetfunction(vector<double>& x)

{

double fitness = 0;

for (int i = 0; i < x.size(); i++)

{

fitness += pow(x[i], 2);

}

fitness /= x.size();

return fitness;

}

void PSO::BubbleSort(std::vector<partical>& arr)

{

for (int i = 0; i + 1 < arr.size(); i++)//written as i + 1 < size to avoid unsigned underflow when arr is empty

{

for (int j = 0; j + i + 1 < arr.size(); j++)

{

if (arr[j].fitness > arr[j + 1].fitness)

{

partical temp = arr[j];

arr[j] = arr[j + 1];

arr[j + 1] = temp;

}

}

}

}

void PSO::init()

{

for (int i = 0; i < particlesize; i++)

{

partical temp;

temp.narvs = narvs;

for (int j = 0; j < temp.narvs; j++)

{

temp.v.push_back((rand() % 100) / (double)(100));

temp.x.push_back(xrange[j].first + (xrange[j].second - xrange[j].first) * (rand() % 100) / (double)(100));//sample across the whole range [first, second)

temp.fitness = targetfunction(temp.x);

}

p.push_back(temp);

}

vector<partical> temp1(p);

BubbleSort(temp1);

globalbest = temp1[0];

vector<partical> temp2(p);

temp2.swap(personalbest);

}

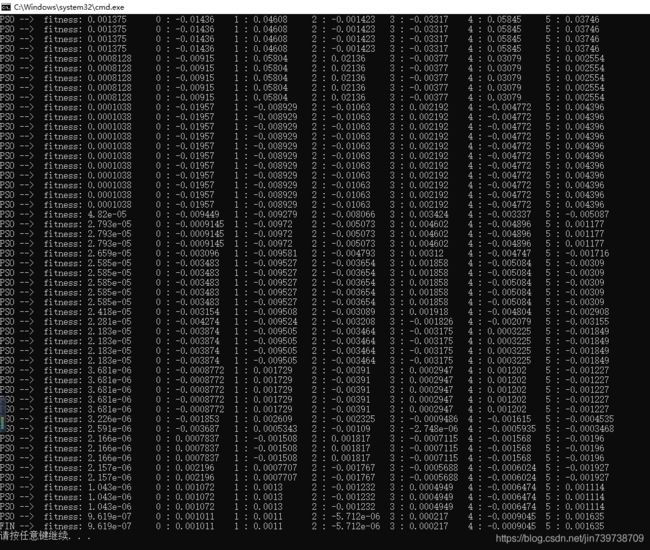

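The code above optimizes a simple target function: the sum of squares over all dimensions divided by the number of dimensions.

Test example: 50 particles, 6 dimensions, and the range [-10,10] for every dimension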

#include"PSO.h"

using namespace std;

int main()

{

int particlesize = 50;

std::pair<double, double> temprange[6] =

{

make_pair(-10,10),

make_pair(-10,10),

make_pair(-10,10),

make_pair(-10,10),

make_pair(-10,10),

make_pair(-10,10),

};

vector<pair<double, double> > xrange;

xrange.insert(xrange.begin(), temprange, temprange + 6);

PSO *pso = new PSO(xrange, particlesize);

pso->search();

delete pso;

//vector<double> ff(pso->ff);

//for (int i = 0; i < ff.size(); i++)

//{

// cout << i << " : " << ff[i] << endl;

//}

//partical finalbest = pso->finalbest;

//for (int i = 0; i < finalbest.narvs; i++)

//{

// cout << finalbest.x[i] << endl;

//}

return 0;

}
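The initialization step draws every starting coordinate from the allowed range of its dimension. As a sketch of the same idea with the C++11 `<random>` facilities instead of `rand() % 100`, which only yields 100 distinct values, one could write the following; the function name `randomPosition` is an assumption and is not part of the article's source.

```cpp
#include <cassert>
#include <random>
#include <utility>
#include <vector>

// Draws one starting position with every coordinate uniform in [lo, hi).
std::vector<double> randomPosition(const std::vector<std::pair<double, double>>& range, std::mt19937& rng)
{
    std::vector<double> x;
    x.reserve(range.size());
    for (const auto& r : range)
    {
        assert(r.first < r.second);
        std::uniform_real_distribution<double> dist(r.first, r.second);
        x.push_back(dist(rng));
    }
    return x;
}
```

A single `std::mt19937` seeded once (for example from `std::random_device`) can be shared by all particles, so repeated runs with the same seed start from the same swarm, which makes experiments easier to compare.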

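Results

4. Dataset and source code download

Download the dataset and source code yourself; if you have questions, send the author a private message.

Complete source code package (CSDN download)

https://download.csdn.net/download/jin739738709/11467578

Dataset download (Baidu Cloud)

Link: https://pan.baidu.com/s/16kXSOYeo4SHRW7JNV0PErA

Extraction code: fq1i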