1、Getting started with k8s

1、Why k8s when Docker Swarm already exists?

Swarm3k,Swarm可扩展性的极限是在4700个节点,生产环境节点数建议在1000以下

Swarm不足 :

- 节点,容器数量上升,管理成本上升

- 无法大规模容器调度

- 没有自愈机制

- 没有统一配置中心

- 没有容器生命周期管理

k8s能解决的问题

- 一个服务经常运行一段时间后出现"假死",好像也找不出什么问题,重启又好了?(自修复)

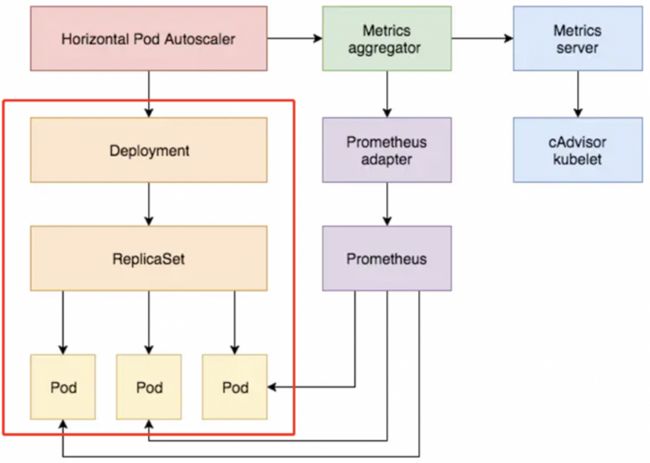

- 一个站点突然流量增大,服务随着流量大小自动扩容(自动扩缩容)

- 节点的存储不够,如何自动增加存储 ? (自动挂载存储)

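2、What is k8s?

k8s is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation.

Kubernetes is an open-source version of Borg, the secret weapon Google kept strictly under wraps for more than a decade. Borg is Google's well-known internal large-scale cluster management system. It is built on container technology and aims to automate resource management and maximize resource utilization across multiple data centers.

The public learned more about Borg's internals only in April 2015. That was when Google published the long-rumored Borg paper alongside its high-profile promotion of Kubernetes.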

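3、k8s advantages and features

Trend: many companies have adopted Docker container technology, and moving from single hosts to clusters has become inevitable. The rapid growth of cloud computing is accelerating this process.

Ecosystem: in 2015, Google and more than 20 other companies founded the CNCF (Cloud Native Computing Foundation) to promote Kubernetes. This ushered in a new era of cloud-native applications (Cloud Native Application).

Benefits:

- Complex systems can be developed while "traveling light"

- Microservice architecture can be fully embraced

- The whole system can be "moved" to a public cloud at any time

- Kubernetes' built-in elastic scaling lets us handle sudden traffic spikes easily

- The strong horizontal scalability of the Kubernetes architecture can greatly improve our competitiveness

Advantages:

Adopting the solutions Kubernetes provides saves at least 30% of development cost and lets us focus more on the business itself. Because Kubernetes also provides powerful automation, the difficulty and cost of operating the system later drop significantly.

Kubernetes is an open development platform. Unlike J2EE, it is not tied to any language and imposes no programming interface. Services written in Java, Go, C++, or Python can all be mapped to Kubernetes Services and interact over standard TCP. Kubernetes is also non-intrusive to existing programming languages, frameworks, and middleware, so existing systems can easily be upgraded and migrated onto it.

Kubernetes is a complete platform for supporting distributed systems, with full cluster management capabilities:

- multi-level security and admission control
- multi-tenant application support
- transparent service registration and discovery
- a built-in intelligent load balancer
- powerful failure detection and self-healing
- rolling updates and online scaling
- an extensible automatic resource scheduling mechanism
- multi-granularity resource quota management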

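Features:

Kubernetes has comprehensive cluster management capabilities, mainly including: multi-level authorization, multi-tenant applications, transparent service registration and discovery, built-in load balancing, failure detection and self-healing, service upgrades and rollbacks, and automatic scaling.

- Service discovery and load balancing: load-balances and distributes network traffic

- Self-healing: restarts containers that fail, replaces containers, and kills containers that don't pass their health checks

- Storage orchestration: lets you automatically mount storage systems

- Automated rollouts and rollbacks: automatically creates new containers, removes existing ones, and moves all of their resources to the new containers

- Automatic bin packing: you specify how much CPU and memory each container needs, and Kubernetes fits containers onto nodes accordingly

- Secret and configuration management: deploys and updates secrets (passwords, tokens, and SSH keys) and application configuration without rebuilding container images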

2、Installing k8s

1、Install Docker

```shell
# Remove any previously installed Docker packages
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine

# Add the Docker CE repository
sudo yum install -y yum-utils
sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

# Install and start Docker, then verify that it works
sudo yum install docker-ce docker-ce-cli containerd.io
sudo systemctl start docker
sudo docker run hello-world
```

2、Set the hostnames

```shell
hostnamectl set-hostname master   # on the master
hostnamectl set-hostname node1    # on node1
hostnamectl set-hostname node2    # on node2
```

3、Configure hosts

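```shell
# Replace these IPs with the actual addresses of your machines
echo "
172.20.174.136 master
172.20.174.137 node1
172.20.174.138 node2
" >> /etc/hosts
```

4、Add the Aliyun YUM repository for k8s (on every machine)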

```shell
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

5、Set SELinux to permissive mode (on every machine)

```shell
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
```

6、Install kubelet, kubeadm, and kubectl (on every machine)

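Install a pinned version: the images pulled from Aliyun later must match the version installed here.

```shell
sudo yum install -y kubelet-1.23.3 kubeadm-1.23.3 kubectl-1.23.3 --disableexcludes=kubernetes
```

7、Enable kubelet at boot (on every machine)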

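```shell
systemctl enable kubelet.service
```

8、Modify the Docker daemon configuration (on every machine)

Add the following to `/etc/docker/daemon.json` so that Docker uses the systemd cgroup driver, the same driver the kubelet uses. Create the file if it does not exist, and keep any keys already in it:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

Then reload and restart the services:

```shell
sudo systemctl daemon-reload
sudo systemctl restart docker
sudo systemctl restart kubelet
```

9、Pull the images (on every machine)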

Save the following script as `pull_images.sh`, make it executable, and run it with `./pull_images.sh`:

```shell
#!/bin/bash
# Pull the v1.23.3 control-plane images from the Aliyun mirror
image_list='
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.3
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.3
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.3
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.3
registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6
'

for img in $image_list
do
    docker pull $img
done
```

10、Tag the images (on every machine)

kubeadm v1.23 looks for images under `k8s.gcr.io` by default, so re-tag the mirrored images with those names:

```shell
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.23.3 k8s.gcr.io/kube-apiserver:v1.23.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.3 k8s.gcr.io/kube-controller-manager:v1.23.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.23.3 k8s.gcr.io/kube-scheduler:v1.23.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.23.3 k8s.gcr.io/kube-proxy:v1.23.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 k8s.gcr.io/etcd:3.5.1-0
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.6 k8s.gcr.io/coredns/coredns:v1.8.6
```

11、Initialize the master (master only)

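Run on the master, setting `--apiserver-advertise-address` to the master's IP address:

```shell
kubeadm init --apiserver-advertise-address=172.24.251.133 --service-cidr=10.1.0.0/16 --kubernetes-version v1.23.3 --pod-network-cidr=10.244.0.0/16
```

Add `export KUBECONFIG=/etc/kubernetes/admin.conf` to `.bashrc` and reload it:

```shell
echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' >> ~/.bashrc
source ~/.bashrc
```

12、Join the worker nodes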

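On node1 and node2, run the `kubeadm join` command printed by `kubeadm init`. It must point at the master's API Server address. Note: if the printed command is wrapped across lines, join it into a single line first; otherwise it fails.

```shell
kubeadm join 172.24.251.133:6443 --token rrfuvj.9etcsup3crh0c7j4 --discovery-token-ca-cert-hash sha256:fb74ff7fb76b3b1257b557fdd5006db736522619b415bcc489458dd19e67e9db
```

13、Install flannel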

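```shell
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
kubectl apply -f kube-flannel.yml
```

Sync the CNI configuration by copying `/etc/cni/net.d/` from the master to node1 and node2, for example:

```shell
scp -r /etc/cni/net.d/ node1:/etc/cni/
scp -r /etc/cni/net.d/ node2:/etc/cni/
```

14、Testing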

```
[root@master ~]# kubectl get nodes -o wide
NAME     STATUS   ROLES                  AGE    VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
master   Ready    control-plane,master   123m   v1.23.3   172.24.251.133   <none>        CentOS Linux 7 (Core)   3.10.0-1127.19.1.el7.x86_64   docker://24.0.2
node1    Ready    <none>                 114m   v1.23.3   172.24.251.132   <none>        CentOS Linux 7 (Core)   3.10.0-1127.19.1.el7.x86_64   docker://24.0.2
node2    Ready    <none>                 114m   v1.23.3   172.24.251.134   <none>        CentOS Linux 7 (Core)   3.10.0-1127.19.1.el7.x86_64   docker://24.0.2
[root@master ~]# kubectl get pods -A
NAMESPACE      NAME                             READY   STATUS    RESTARTS   AGE
kube-flannel   kube-flannel-ds-9j4gp            1/1     Running   0          112m
kube-flannel   kube-flannel-ds-bzlh6            1/1     Running   0          112m
kube-flannel   kube-flannel-ds-cdp8x            1/1     Running   0          112m
kube-system    coredns-64897985d-6bwbq          1/1     Running   0          123m
kube-system    coredns-64897985d-9s84l          1/1     Running   0          123m
kube-system    etcd-master                      1/1     Running   0          123m
kube-system    kube-apiserver-master            1/1     Running   0          123m
kube-system    kube-controller-manager-master   1/1     Running   0          123m
kube-system    kube-proxy-7pfxp                 1/1     Running   0          114m
kube-system    kube-proxy-m7xnn                 1/1     Running   0          114m
kube-system    kube-proxy-r2gs8                 1/1     Running   0          123m
kube-system    kube-scheduler-master            1/1     Running   0          123m
```

3、k8s architecture

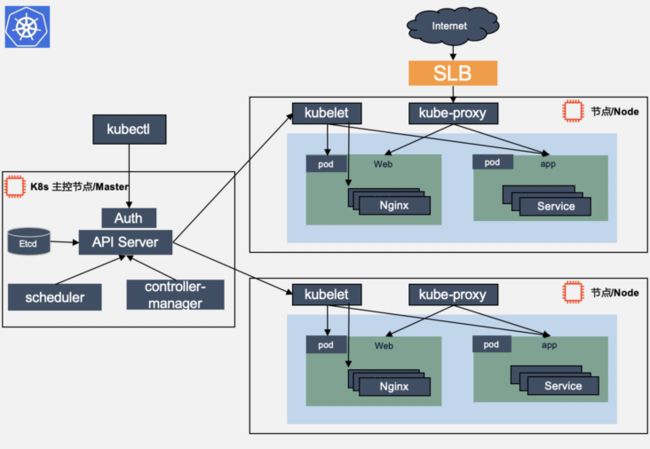

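- Master

  Manages the whole cluster. The Master coordinates all activities in the cluster, such as scheduling applications, maintaining their desired state, scaling them, and rolling out new updates.

- Node

  A virtual or physical machine that acts as a worker machine in the Kubernetes cluster. Every Node runs a kubelet, which manages the Node and is the agent through which the Node communicates with the Master.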

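k8s components:

| Role | Component | Description |
|---|---|---|
| Master | kube-apiserver | Exposes the cluster's unified external API |
| | etcd | The k8s database; stores cluster state data |
| | Controller Manager | k8s controllers; mainly control and manage Pods |
| | Scheduler | k8s scheduler; decides which Node each Pod runs on |
| Node | Pod | The smallest deployable unit of computing that can be created and managed in k8s |
| | kubelet | The agent on each Node; handles tasks between the Node and the Master |
| | kube-proxy | Network proxy on each Node; implements Service load balancing |
| | container | Container |
| Other | kubectl | The k8s command-line client |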

4、k8s design philosophy

1、Preparation
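The commands in this step locate each base64 field with `cut -d" " -f 6`. That works only because admin.conf indents these keys with exactly four spaces. Below is a minimal sketch of a position-independent alternative. It assumes the usual one-line `key: value` layout of kubeconfig files, and `kubeconfig_field` is a hypothetical helper, not part of kubectl. Another option that parses the YAML properly is `kubectl config view --raw -o jsonpath='{.users[0].user.client-certificate-data}'`.

```shell
#!/usr/bin/env bash
# Print the value of the first "key: value" line whose key matches exactly.
# Hypothetical helper: awk splits fields on any run of whitespace, so the
# result does not depend on how deeply the key is indented.
kubeconfig_field() {
  local key="$1" file="$2"
  awk -v k="${key}:" '$1 == k { print $2; exit }' "$file"
}

# Example (on the master):
#   kubeconfig_field client-certificate-data /etc/kubernetes/admin.conf | base64 -d > ./client.pem
```

Matching on the key name also avoids the loose `grep client-cert` pattern, which would match any line containing that substring.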

Extract the base64-encoded client certificate, client key, and CA certificate from admin.conf. Decode them into PEM files and use them to call the API Server directly with curl:

```
[root@master opt]# export clientcert=$(grep client-cert /etc/kubernetes/admin.conf |cut -d" " -f 6)
[root@master opt]# export clientkey=$(grep client-key-data /etc/kubernetes/admin.conf |cut -d" " -f 6)
[root@master opt]# export certauth=$(grep certificate-authority-data /etc/kubernetes/admin.conf |cut -d" " -f 6)
[root@master opt]# echo $clientcert | base64 -d > ./client.pem
[root@master opt]# echo $clientkey | base64 -d > ./client-key.pem
[root@master opt]# echo $certauth | base64 -d > ./ca.pem
[root@master opt]# curl --cert ./client.pem --key ./client-key.pem --cacert ./ca.pem https://172.24.251.133:6443/api/v1/pods
```

2、Calling the API

Create the journey namespace:

```
[root@master opt]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   19h
kube-flannel      Active   19h
kube-node-lease   Active   19h
kube-public       Active   19h
kube-system       Active   19h
[root@master opt]# kubectl create namespace journey
namespace/journey created
[root@master opt]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   19h
journey           Active   7s
kube-flannel      Active   19h
kube-node-lease   Active   19h
kube-public       Active   19h
kube-system       Active   19h
```

The YAML file is as follows:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells the Deployment to run 2 Pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
```

Create the Deployment through the API. The Deployment then creates the Pods:

```shell
curl --cert ./client.pem --key ./client-key.pem --cacert ./ca.pem -X POST -H 'Content-Type: application/yaml' --data '
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: journey
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells the Deployment to run 2 Pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
' https://172.24.251.133:6443/apis/apps/v1/namespaces/journey/deployments
```

The API Server responds with the created object:

{

"kind": "Deployment",

"apiVersion": "apps/v1",

"metadata": {

"name": "nginx-deployment",

"namespace": "journey",

"uid": "84be8a78-eb20-4374-a10a-606f58d8f38a",

"resourceVersion": "36743",

"generation": 1,

"creationTimestamp": "2023-06-14T03:45:26Z",

"managedFields": [

{

"manager": "curl",

"operation": "Update",

"apiVersion": "apps/v1",

"time": "2023-06-14T03:45:26Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:spec": {

"f:progressDeadlineSeconds": {},

"f:replicas": {},

"f:revisionHistoryLimit": {},

"f:selector": {},

"f:strategy": {

"f:rollingUpdate": {

".": {},

"f:maxSurge": {},

"f:maxUnavailable": {}

},

"f:type": {}

},

"f:template": {

"f:metadata": {

"f:labels": {

".": {},

"f:app": {}

}

},

"f:spec": {

"f:containers": {

"k:{\"name\":\"nginx\"}": {

".": {},

"f:image": {},

"f:imagePullPolicy": {},

"f:name": {},

"f:ports": {

".": {},

"k:{\"containerPort\":80,\"protocol\":\"TCP\"}": {

".": {},

"f:containerPort": {},

"f:protocol": {}

}

},

"f:resources": {},

"f:terminationMessagePath": {},

"f:terminationMessagePolicy": {}

}

},

"f:dnsPolicy": {},

"f:restartPolicy": {},

"f:schedulerName": {},

"f:securityContext": {},

"f:terminationGracePeriodSeconds": {}

}

}

}

}

}

]

},

"spec": {

"replicas": 2,

"selector": {

"matchLabels": {

"app": "nginx"

}

},

"template": {

"metadata": {

"creationTimestamp": null,

"labels": {

"app": "nginx"

}

},

"spec": {

"containers": [

{

"name": "nginx",

"image": "nginx:1.14.2",

"ports": [

{

"containerPort": 80,

"protocol": "TCP"

}

],

"resources": {},

"terminationMessagePath": "/dev/termination-log",

"terminationMessagePolicy": "File",

"imagePullPolicy": "IfNotPresent"

}

],

"restartPolicy": "Always",

"terminationGracePeriodSeconds": 30,

"dnsPolicy": "ClusterFirst",

"securityContext": {},

"schedulerName": "default-scheduler"

}

},

"strategy": {

"type": "RollingUpdate",

"rollingUpdate": {

"maxUnavailable": "25%",

"maxSurge": "25%"

}

},

"revisionHistoryLimit": 10,

"progressDeadlineSeconds": 600

},

"status": {}

}

3、API design principles

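1、All APIs should be declarative

- Unlike imperative operations, repeating a declarative operation has a stable effect (it is idempotent)

- Declarative operations are easier for users: the system can hide implementation details from them, which also leaves room for the system to keep improving in the future

- A declarative API also implies that all API objects are nouns, such as Service and Volume; these nouns describe the distributed object the user wants to end up with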
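Here is a toy illustration of why repeated declarative operations are stable. An imperative "add one replica" changes the result each time it runs. A declarative "there should be 2 replicas" gives the same result no matter how often it is applied. The variable and function names are hypothetical and do not model a real Kubernetes API. In kubectl terms, the declarative form resembles `kubectl apply -f` with `replicas: 2`, or `kubectl scale --replicas=2`.

```shell
#!/usr/bin/env bash
# Toy model of a Deployment's replica count (not a real Kubernetes API).
replicas=1

# Imperative: "add one replica". Each repetition changes the result.
scale_up() {
  replicas=$((replicas + 1))
}

# Declarative: "there should be N replicas". Repetition changes nothing.
apply_replicas() {
  replicas=$1
}
```

Running `scale_up` twice from 1 leaves 3 replicas. Running `apply_replicas 2` twice leaves 2, which is the property that makes retries after network errors safe.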

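2、API objects are complementary and composable

- API objects should meet the goals of object-oriented design, "high cohesion, low coupling": business concepts are decomposed appropriately, which makes the resulting objects more reusable

- In fact, a distributed system management platform such as k8s is itself a business system; its business just happens to be scheduling and managing container services

3、High-level APIs are designed around operational intent

Designing good APIs has much in common with designing good application systems using object-oriented methods: high-level design must start from the business, not prematurely from the technical implementation. So k8s's high-level APIs must be designed from k8s's business, that is, from the operational intent of scheduling and managing containers.

4、Low-level APIs are designed according to the control needs of high-level APIs

Low-level APIs exist to be used by high-level APIs. To reduce redundancy and improve reuse, low-level API design should also be driven by requirements and resist the temptation to be shaped by the technical implementation.

5、Avoid simple wrappers, and avoid hidden internal mechanisms that cannot be seen explicitly through the external API

A simple wrapper adds no new functionality and only increases dependence on the wrapping API. Hidden internal mechanisms also make a system hard to maintain. For example, PetSet (later renamed StatefulSet) and ReplicaSet are two different kinds of Pod sets. k8s therefore defines them with separate API objects instead of using a single ReplicaSet and telling stateful and stateless sets apart with special internal logic.

6、API operation complexity is proportional to the number of objects

This is mainly a performance consideration. To keep the system from slowing down to the point of being unusable as it grows, the complexity of an API operation must not exceed O(N), where N is the number of objects. Otherwise the system cannot scale horizontally.

7、API object state must not depend on network connection state

Broken network connections are common in distributed environments. To make API object state robust against an unreliable network, it must not depend on the state of network connections.

8、Avoid making operational mechanisms depend on global state

Keeping global state synchronized in a distributed system is very difficult.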

4、Controllers

The Deployment controller keeps the two nginx Pods running, one on node1 and one on node2:

```
[root@master ~]# kubectl get pods -n journey
NAME                               READY   STATUS    RESTARTS        AGE
nginx-deployment-9456bbbf9-ck8n5   1/1     Running   1 (3h10m ago)   3h55m
nginx-deployment-9456bbbf9-rn797   1/1     Running   1 (3h10m ago)   3h55m
[root@master ~]# kubectl get pods -n journey -o wide
NAME                               READY   STATUS    RESTARTS        AGE     IP           NODE    NOMINATED NODE   READINESS GATES
nginx-deployment-9456bbbf9-ck8n5   1/1     Running   1 (3h10m ago)   3h55m   10.244.1.8   node1   <none>           <none>
nginx-deployment-9456bbbf9-rn797   1/1     Running   1 (3h10m ago)   3h55m   10.244.2.4   node2   <none>           <none>
```

5、Controller design principles

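1、Control logic should depend only on the current state

This keeps a distributed system stable and reliable, because partial failures are common in such systems. If control logic depends only on the current state, a temporarily failed system is easy to bring back to normal. You only need to reset it to some stable state, and you can be confident that all of its control logic will resume running normally.

2、Assume any error is possible and handle it fault-tolerantly

Partial and temporary errors are highly likely in a distributed system. They may come from physical failures, from failures in external systems, or from bugs in the system's own code. Keeping the system stable by relying on your own code never failing is hard to achieve, so the design must tolerate any possible error.

3、Avoid complex state machines; control logic must not depend on internal state that cannot be monitored

The subsystems of a distributed system cannot be kept strictly in sync from inside a program. If the control logic of two subsystems affects each other, each must be able to access the state that influences the other's control logic. Otherwise the system effectively contains nondeterministic control logic.

4、Assume any operation may be rejected, or even misinterpreted, by the object it targets

Distributed systems are complex, and their subsystems are relatively independent and often built by different development teams. You therefore cannot expect another subsystem to handle every operation correctly. When errors occur, operation-level errors must not affect the stability of the system.

5、Every module can recover automatically after an error

The modules of a distributed system cannot be guaranteed to stay connected. Each module therefore needs to heal itself, so that it does not crash just because it cannot reach other modules.

6、Every module can gracefully degrade its service when necessary

Graceful degradation is a robustness requirement. When designing and implementing a module, clearly separate basic functions from advanced ones, and make sure the basic functions do not depend on the advanced ones. A failure in an advanced function then cannot bring down the whole module. Systems built on this principle can also add new advanced functions quickly without worrying that they will affect existing basic functions.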

5、k8s架构深入

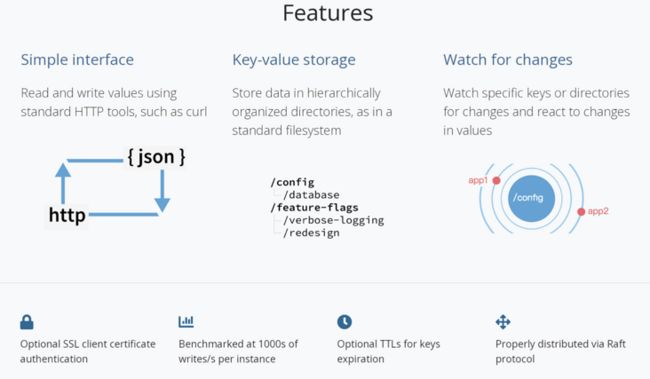

6、etcd

1、etcd是什么

etcd是一种高度一致的分布式键值存储,它提供了一种可靠的方式来存储需要由分布式系统或机器集群访问的数据

2、etcd features

3、etcdctl和API使用

```
[root@master opt]# wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz
[root@master opt]# tar -zxvf etcd-v3.5.1-linux-amd64.tar.gz
[root@master ~]# alias ectl='ETCDCTL_API=3 /opt/etcd-v3.5.1-linux-amd64/etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key'
```

Put (add):

```
[root@master ~]# ectl put journey hello-world
OK
```

Get (prints the key, then the value):

```
[root@master opt]# ectl get journey
journey
hello-world
```

Delete (`del` prints the number of keys deleted; a `get` on the deleted key prints nothing):

```
[root@master opt]# ectl del journey
1
[root@master opt]# ectl get journey
```

Put again:

```
[root@master ~]# ectl put journey hello-world
OK
```

Update (a `put` on an existing key overwrites its value):

```
[root@master opt]# ectl put journey hello-world1
OK
[root@master opt]# ectl put journey hello-world2
OK
[root@master opt]# ectl get journey
journey
hello-world2
```

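etcd watch (listens for changes to a key). While the watch is running, run `ectl put journey hello-world3` in another terminal; the watch then prints:

```
[root@master opt]# ectl watch journey
PUT
journey
hello-world3
```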
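etcd's v3 HTTP gateway takes keys and values as base64 strings inside JSON. These are the `/v3/kv/put` and `/v3/kv/range` endpoints used in the curl test that follows. Here is a small sketch for building such a request body from plain text; `b64` and `etcd_put_body` are hypothetical helper names. Base64 output contains no quotes or backslashes, so no further JSON escaping is needed.

```shell
#!/usr/bin/env bash
# Base64-encode a string with no trailing newline and no line wrapping.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Build the JSON body for POST /v3/kv/put from a plain key and value.
etcd_put_body() {
  printf '{"key": "%s", "value": "%s"}' "$(b64 "$1")" "$(b64 "$2")"
}

# Example (same certificates as the curl test):
#   curl --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key \
#        --cacert /etc/kubernetes/pki/etcd/ca.crt -X POST \
#        -d "$(etcd_put_body foo bar)" https://172.24.251.133:2379/v3/kv/put
```

To read a value back, decode the `value` field of the `/v3/kv/range` response with `base64 -d`.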

Testing the etcd API with curl. The key `Zm9v` and value `YmFy` are the base64 encodings of `foo` and `bar`:

```
[root@master opt]# curl --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key --cacert /etc/kubernetes/pki/etcd/ca.crt https://172.24.251.133:2379/v3/kv/put -X POST -d '{"key": "Zm9v", "value": "YmFy"}'
{"header":{"cluster_id":"15060521882999422335","member_id":"12630540088856447431","revision":"86922","raft_term":"5"}}
[root@master opt]# curl --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key --cacert /etc/kubernetes/pki/etcd/ca.crt https://172.24.251.133:2379/v3/kv/range -X POST -d '{"key": "Zm9v"}'
{"header":{"cluster_id":"15060521882999422335","member_id":"12630540088856447431","revision":"87096","raft_term":"5"},"kvs":[{"key":"Zm9v","create_revision":"86922","mod_revision":"86922","version":"1","value":"YmFy"}],"count":"1"}
```

4、etcd in k8s

1、View all keys

Here `ee5c38a5d554` is the ID of the etcd container on the master; the key list is saved to etcd.txt:

```
[root@master ~]# docker exec -it ee5c38a5d554 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get / --prefix --keys-only >etcd.txt
```

2、View namespace information

Kubernetes stores objects in etcd in protobuf encoding, prefixed with the magic bytes `k8s`, so most of the value is binary. Non-printable bytes are removed below:

```
[root@master ~]# docker exec -it ee5c38a5d554 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get /registry/namespaces/journey
/registry/namespaces/journey
k8s
v1 Namespace
journey"*$81fa3d53-0d77-4e0c-bd13-08d860d2a5d22Z&
kubectl-createUpdatevFieldsV1:I
G{"f:metadata":{"f:labels":{".":{},"f:kubernetes.io/metadata.name":{}}}}B
kubernetes
Active"
```

3、Watch namespace information

```
[root@master ~]# docker exec -it ee5c38a5d554 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key watch /registry/namespaces/journey
```

7、API Server

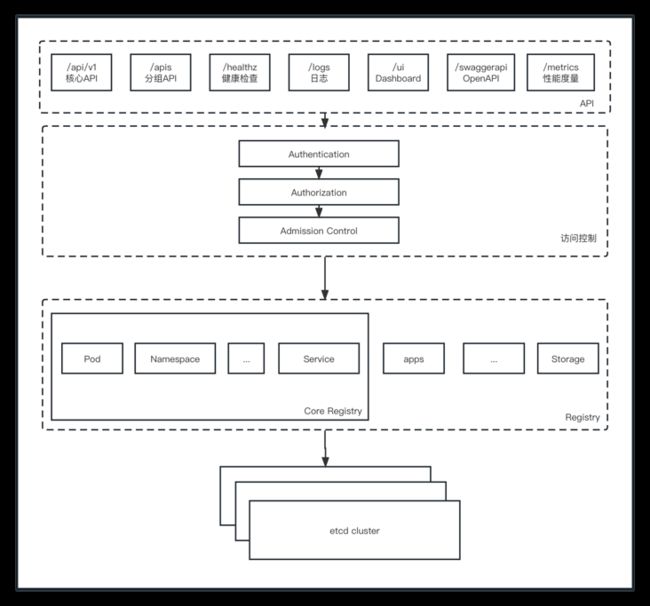

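1、What is the API Server

The core function of the Kubernetes API Server is to provide HTTP REST interfaces for creating, deleting, updating, querying, and watching all kinds of Kubernetes resource objects, such as Pod, RC (ReplicationController), and Service. It is the hub for data exchange and communication among the cluster's components, acting as the data bus and data center of the whole system. It also has the following features:

- It is the API entry point for cluster management

- It is the entry point for resource quota control

- It provides a complete cluster security mechanism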

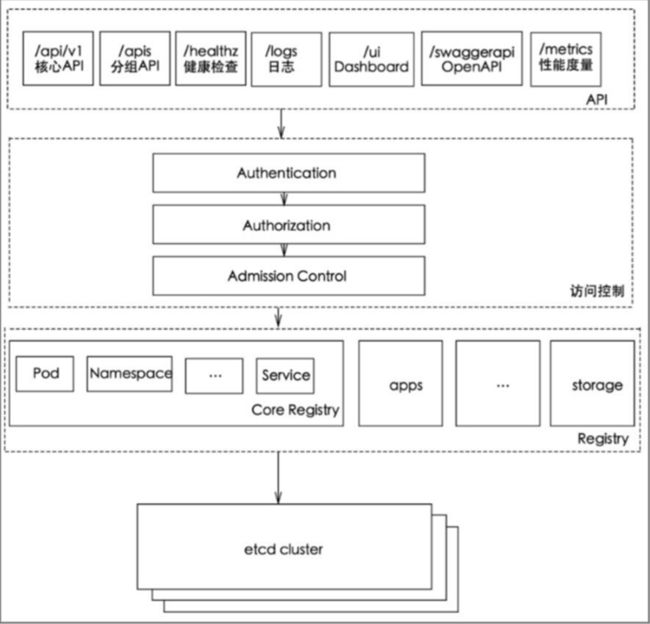

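2、API Server layered architecture

- API layer: provides various APIs, mainly in REST style. Besides the main CRUD and Watch APIs for Kubernetes resource objects, there are operations and monitoring APIs for health checks, UI, logs, performance metrics, and so on. Starting with version 1.11, Kubernetes deprecated the Heapster monitoring component in favor of Metrics Server, which provides the Metrics API and further improves Kubernetes' own monitoring capabilities.

- Access control layer: when a client calls an API, this layer authenticates the user, verifies their identity, and authorizes their access to Kubernetes resource objects. It then decides whether to allow the access according to the configured admission control logic (Admission Control).

- Registry layer: Kubernetes keeps all resource objects in the Registry. For each kind of resource object in the registry, it defines:
  - the object type
  - how to create the object
  - how to convert between different versions of the resource
  - how to encode the resource to, and decode it from, JSON or Protobuf for storage

- etcd database: the KV database that persistently stores Kubernetes resource objects. etcd's watch API is crucial to the API Server. On top of it, the API Server introduced List-Watch, a high-performance mechanism for synchronizing resource objects in real time. List-Watch lets Kubernetes manage very large clusters and respond to cluster events quickly.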

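3、API Server authentication

Three levels of client authentication:

- 1、HTTPS certificate authentication, the strictest: mutual (two-way) digital certificate authentication based on certificates signed by a CA root certificate

- 2、HTTP Token authentication: a token identifies a legitimate user

- 3、HTTP Basic authentication: authenticates with a username and password (support for this method has been removed in newer Kubernetes versions)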

4、Pod details via the API Server

[root@master opt]# kubectl describe pods nginx-deployment-8d545c96d-24nk9 -n journey

Name: nginx-deployment-8d545c96d-24nk9

Namespace: journey

Priority: 0

Node: node2/172.24.251.134

Start Time: Fri, 16 Jun 2023 22:46:30 +0800

Labels: app=nginx

pod-template-hash=8d545c96d

Annotations: <none>

Status: Running

IP: 10.244.2.10

IPs:

IP: 10.244.2.10

Controlled By: ReplicaSet/nginx-deployment-8d545c96d

Containers:

nginx:

Container ID: docker://25591ca18c7fa94acba075436cc59d4b79c1cd21977e41750b91536e807a3b91

Image: nginx:latest

Image ID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Sun, 18 Jun 2023 08:49:26 +0800

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Sat, 17 Jun 2023 17:20:07 +0800

Finished: Sat, 17 Jun 2023 22:35:32 +0800

Ready: True

Restart Count: 2

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-58cb5 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-58cb5:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedCreatePodSandBox 9m2s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "fe8d6e994b4ae922205a579a63a8e7f9dd57a0478e9b1437fc937958810f37a4" network for pod "nginx-deployment-8d545c96d-24nk9": networkPlugin cni failed to set up pod "nginx-deployment-8d545c96d-24nk9_journey" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Warning FailedCreatePodSandBox 9m2s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "8a39757d788d1173909662754b4b4b32e385b99f61f347d1139c5773cf65c8bf" network for pod "nginx-deployment-8d545c96d-24nk9": networkPlugin cni failed to set up pod "nginx-deployment-8d545c96d-24nk9_journey" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Warning FailedCreatePodSandBox 9m1s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "c9d9d4622c7ff94beff4f0f3b1a827bf7e8131f7a1b03a1b227b3adbe430d750" network for pod "nginx-deployment-8d545c96d-24nk9": networkPlugin cni failed to set up pod "nginx-deployment-8d545c96d-24nk9_journey" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Warning FailedCreatePodSandBox 9m kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "291e8c7fd4558970709bfd5d5359e32534ebadc2ef9522677fa8fbe65e2af8f5" network for pod "nginx-deployment-8d545c96d-24nk9": networkPlugin cni failed to set up pod "nginx-deployment-8d545c96d-24nk9_journey" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Warning FailedCreatePodSandBox 8m59s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "5b769bbb5815a230a530fdaa04eb8a8484dc3c28f0a173d85cd7629dfb54584a" network for pod "nginx-deployment-8d545c96d-24nk9": networkPlugin cni failed to set up pod "nginx-deployment-8d545c96d-24nk9_journey" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Normal SandboxChanged 8m58s (x6 over 9m3s) kubelet Pod sandbox changed, it will be killed and re-created.

Normal Pulling 8m58s kubelet Pulling image "nginx:latest"

Normal Pulled 8m43s kubelet Successfully pulled image "nginx:latest" in 15.265138889s

Normal Created 8m43s kubelet Created container nginx

Normal Started 8m42s kubelet Started container nginx

5、API Server Services

```
[root@master opt]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.1.0.1     <none>        443/TCP   4d17h
```

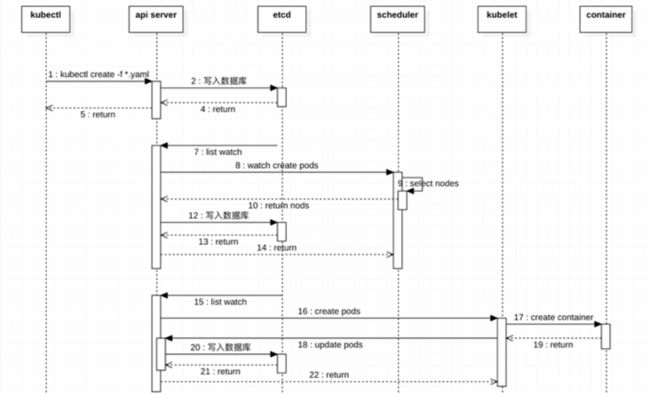

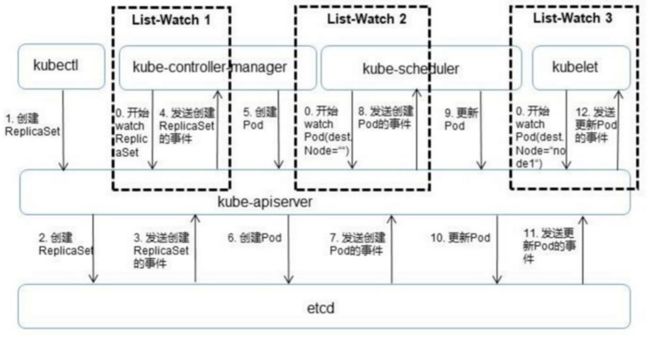

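The `kubernetes` Service in the default namespace fronts the API Server, so the API can also be reached through its cluster IP:

```
[root@master opt]# curl --cert ./client.pem --key ./client-key.pem --cacert ./ca.pem https://10.1.0.1:443/api/v1/pods | more
```

6、How the API Server interacts with other components

- API Server and kubelet: the kubelet on each Node periodically calls the API Server's REST interface to report its status, and the API Server writes the node status into etcd. The kubelet also watches Pod information through the API Server's Watch interface and manages the Pods on its Node accordingly.

- API Server and kube-controller-manager: the Node Controller in kube-controller-manager monitors Node information in real time through the API Server's Watch interface and acts on changes.

- API Server and kube-scheduler: the Scheduler learns about newly created Pods through the API Server's Watch interface. It then retrieves the list of Nodes that meet each Pod's requirements and runs its scheduling logic. On success, it binds the Pod to the target Node.

- Load on the API Server: to relieve access pressure on the API Server, every component caches data. Each component fetches the resource objects it needs from the API Server (LIST/WATCH) and keeps them in a local cache. In many cases it then reads from this cache instead of calling the API Server directly.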
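The caching pattern described above can be sketched as a toy List-Watch cache. In this sketch, an initial LIST fills a local map, and WATCH events (ADDED, MODIFIED, DELETED) then keep it in sync. The "TYPE name value" event format and the function names are made-up simplifications, not the real client-go informer API. Associative arrays require bash 4 or later.

```shell
#!/usr/bin/env bash
# Toy List-Watch cache: LIST loads a full snapshot, WATCH events apply
# incremental changes, and readers use the local copy instead of the API Server.
declare -A cache

# LIST: replace the whole cache with "name=value" pairs.
cache_list() {
  cache=()
  local pair
  for pair in "$@"; do
    cache["${pair%%=*}"]="${pair#*=}"
  done
}

# WATCH: apply one incremental event (ADDED, MODIFIED or DELETED).
cache_event() {
  local type=$1 name=$2 value=${3:-}
  case $type in
    ADDED|MODIFIED) cache["$name"]=$value ;;
    DELETED) unset 'cache[$name]' ;;
  esac
}
```

The full LIST is expensive but rare, while each WATCH event is small. This is why components can stay current without repeatedly polling the API Server.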

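8、Controller Manager

When a Node goes down, the controller-managed Pods on it are replaced by new Pods on other Nodes. The Controller Manager is mainly responsible for this.

1、Concept

The Controller Manager manages the various controllers in Kubernetes. It is the management and control center inside the cluster and the core of Kubernetes automation.

2、Core logic

- Get the desired state

- Observe the current state

- Determine the difference between the two

- Change the current state to eliminate the difference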
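The four steps above form a reconcile loop. The sketch below is a toy version in the spirit of a ReplicaSet controller. "Pods" are just names in a bash array, nothing talks to a real cluster, and all names are hypothetical.

```shell
#!/usr/bin/env bash
# Toy reconcile loop in the spirit of a ReplicaSet controller.
desired=3        # desired state, like spec.replicas
pods=(pod-1)     # observed current state
next_id=2        # used to name newly created Pods

reconcile() {
  local current=${#pods[@]}          # observe the current state
  local diff=$((desired - current))  # compare it with the desired state
  while (( diff > 0 )); do           # too few Pods: create more
    pods+=("pod-$next_id")
    next_id=$((next_id + 1))
    diff=$((diff - 1))
  done
  if (( diff < 0 )); then            # too many Pods: keep only $desired
    pods=("${pods[@]:0:desired}")
  fi
}
```

Once the loop has converged, running `reconcile` again does nothing. This is what lets a controller be re-triggered safely by watch events or periodic resyncs. Real controllers choose which Pods to delete by policy (for example, preferring Pods that are not yet ready) rather than simply keeping the first ones.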

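3、Deployment Controller

Generally, users do not create Pods directly. Instead they create a controller and let it manage the Pods. The controller defines how the Pods are deployed, such as how many replicas to run and which kind of Node to run them on.

Contents of the `nginx-deployment` manifest (`cat nginx-deployment` on the master):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: journey
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells the Deployment to run 2 Pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
```

4、Layered control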

- ReplicaSet: self-healing and replica count

- Deployment: scaling, updates, and rollbacks

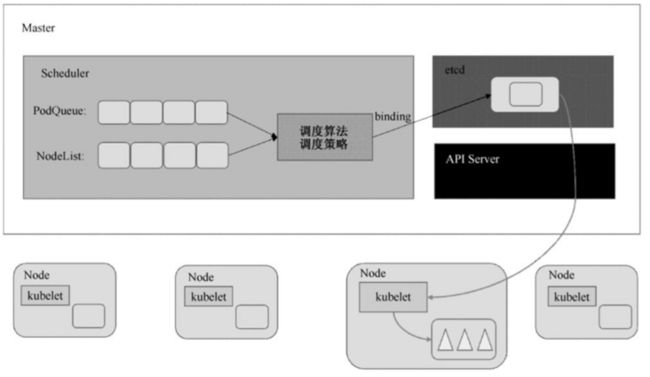
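The Deployment returned by the API earlier carried the defaults `"maxSurge": "25%"` and `"maxUnavailable": "25%"`. During a rolling update, the Deployment controller turns these percentages into Pod counts, rounding maxSurge up and maxUnavailable down. A small sketch of that arithmetic for integer percentages follows; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert percentage-based rolling-update limits into Pod counts.
# maxSurge rounds up, maxUnavailable rounds down; integer percentages only.
percent_ceil()  { echo $(( ($1 * $2 + 99) / 100 )); }  # args: replicas percent
percent_floor() { echo $(( $1 * $2 / 100 )); }         # args: replicas percent

# Prints "<max Pods during the update> <min available Pods>".
rolling_bounds() {
  local replicas=$1 surge_pct=$2 unavail_pct=$3 surge unavail
  surge=$(percent_ceil "$replicas" "$surge_pct")
  unavail=$(percent_floor "$replicas" "$unavail_pct")
  echo "$(( replicas + surge )) $(( replicas - unavail ))"
}
```

With `replicas: 2` and the 25% defaults, `rolling_bounds 2 25 25` prints `3 2`. The update may briefly run 3 Pods but never drops below 2 available ones.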

9、Scheduler

新建一个Pod,Pod为什么会运行在node1节点上?谁控制的,那就是Scheduler了

1、Scheduler概念

Kubernates Scheduler在整个系统中承担了承上启下的功能,承上是指他负责接收Controller Manager创建的新Pod,为其安排一个落地的地方,目标当然就是Node了。启下是指安置工作完成后,目标Node上的kubelet服务进程会接管后续的工作,负责Pod生命周期的下半场

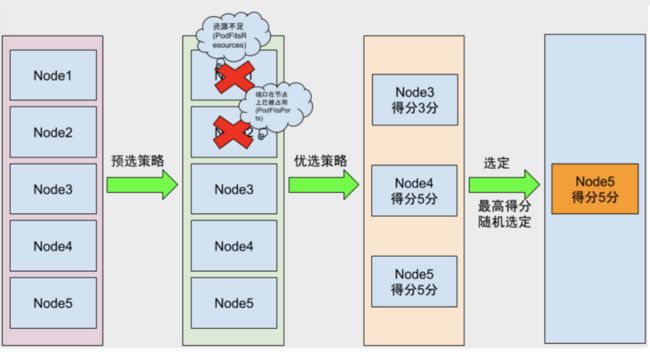

2、Scheduler调度流程

3、Scheduler选择过程

Kubernates Scheduler当前提供的默认调度流程分为两步 :

- 预选调度过程 : 即遍历所有目标Node,筛选副本要求的候选节点。为此,Kubernates内置了多种预选策略(xxx Predicates)供用户选择

- 确定最优节点 : 基于上面的步骤,才有优选策略(xxx Priority) 计算每个候选节点的得分,积分最高的胜出

Scheduler调度因素 :

在做调度决定时要考虑的因素包括 : 单独和整体的资源请求、硬件/软件/策略限制、亲和以及反亲和要求、数据局域性、负载的干扰等等
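The filter-then-score flow can be sketched as below. This is a toy model built on made-up assumptions. Each node is described only by free CPU (millicores) and memory (MiB), the filter plays the role of a resource-fit predicate, and the score (CPU left after placement) stands in for a priority function. Newer Kubernetes versions implement the same two phases as the Filter and Score plugins of the scheduling framework.

```shell
#!/usr/bin/env bash
# Toy two-phase scheduler. Each node: "name free_cpu_millicores free_mem_mib".
nodes=(
  "node1 500 1024"
  "node2 2000 4096"
  "node3 1500 256"
)

schedule() {
  local req_cpu=$1 req_mem=$2 best="" best_score=-1
  local entry name cpu mem score
  for entry in "${nodes[@]}"; do
    read -r name cpu mem <<< "$entry"
    # Filter: skip nodes that cannot satisfy the resource request
    (( cpu >= req_cpu && mem >= req_mem )) || continue
    # Score: CPU left over after placing the Pod; highest score wins
    score=$(( cpu - req_cpu ))
    if (( score > best_score )); then best=$name; best_score=$score; fi
  done
  echo "$best"   # empty output: no feasible node, so the Pod stays Pending
}
```

For example, `schedule 1000 512` prints `node2`. node1 fails the CPU filter and node3 fails the memory filter, so node2 is the only candidate left.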

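10、kubelet

1、What is the kubelet?

- In a Kubernetes cluster, a kubelet process runs on every Node. It handles the tasks the Master assigns to that node and manages Pods and the containers in them.

- Each kubelet registers its node's information with the API Server and periodically reports the node's resource usage to the Master. It monitors container and node resources through cAdvisor.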

2、The kubelet process

```
[root@master k8s]# ps -ef | grep /usr/bin/kubelet
root      2368     1  1 08:48 ?        00:01:46 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.6
root     10530  2094  0 10:22 pts/0    00:00:00 grep --color=auto /usr/bin/kubelet
```

3、Pod management

The kubelet uses the API Server client to List and Watch `/registry/nodes/<current node name>` and the `/registry/pods` directory, and it syncs the results into a local cache.

The kubelet watches all Pod operations through the API Server rather than reading etcd directly. When it finds a new Pod bound to its node, it creates the Pod as described in the Pod's manifest.

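4、Container health checks

A Pod checks the health of its containers with two kinds of probes:

- LivenessProbe: determines whether a container is healthy and reports the result to the kubelet. If the LivenessProbe finds a container unhealthy, the kubelet kills the container and handles it according to the container's restart policy. If a container has no LivenessProbe, the kubelet treats its LivenessProbe result as always Success.

- ReadinessProbe: determines whether a container has finished starting and is ready to accept requests. If the ReadinessProbe fails, the Pod's Ready condition is set to False. The Endpoints controller then removes the Endpoint entry with that Pod's IP address from the Service's Endpoints.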
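The sketch below shows how consecutive probe results turn into a verdict. It assumes the default `failureThreshold` of 3 and `successThreshold` of 1; these are fields of `livenessProbe`/`readinessProbe`, which are probed every `periodSeconds`. `probe_verdict` is a hypothetical helper, and the kubelet's real prober also handles initial delays and timeouts.

```shell
#!/usr/bin/env bash
# Toy model of probe evaluation: a container only counts as failed after
# <threshold> consecutive failures, and any success resets the counter.
# Liveness "failed": the kubelet kills the container and applies the restart
# policy. Readiness "failed": the Pod is marked NotReady and leaves Endpoints.
# Usage: probe_verdict <failureThreshold> <result>...   (result: ok | fail)
probe_verdict() {
  local threshold=$1 failures=0 r
  shift
  for r in "$@"; do
    if [[ $r == ok ]]; then
      failures=0
    else
      failures=$((failures + 1))
    fi
    if (( failures >= threshold )); then
      echo failed
      return
    fi
  done
  echo healthy
}
```

Two failures followed by a success (`probe_verdict 3 fail fail ok`) stay healthy. Requiring several consecutive failures keeps a single slow response from restarting a container.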

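5、cAdvisor resource monitoring

- cAdvisor is an open-source agent for analyzing container resource usage and performance characteristics. It was built for containers and naturally supports Docker. In Kubernetes, cAdvisor is integrated into the Kubernetes code, and the kubelet uses it to obtain data about its node and containers.

- cAdvisor automatically discovers all containers on its Node and collects CPU, memory, filesystem, and network usage statistics. In earlier Kubernetes versions, cAdvisor exposed a simple UI on port 4194 of its Node. Newer versions removed this port, and the kubelet now serves cAdvisor metrics at `/metrics/cadvisor`.

6、Summary

- The kubelet is the bridge between the Kubernetes Master and each Node, managing the Pods and containers running on the Node.

- The kubelet breaks each Pod down into its member containers and obtains per-container usage statistics from cAdvisor. It then exposes the aggregated Pod resource usage statistics through a REST API.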

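11、Pod basics

1、What is a Pod

- In the VMware world, the atomic unit of scheduling is the virtual machine (VM)

- In the Docker world, the atomic unit of scheduling is the container

- In the Kubernetes world, the atomic unit of scheduling is the Pod

A Pod's shared context is a set of Linux namespaces, cgroups, and potentially other facets of isolation, the same things that isolate a Docker container. Pods are ephemeral, not permanent, resources.

- A Pod is the smallest deployable unit of computing that can be created and managed in Kubernetes

- A Pod is a group of one or more containers

- These containers share storage and network resources, along with a specification of how to run them

2、How containers run in a Pod

Containers can run in a Pod in two ways:

- The common case: each Pod runs a single container

- A more advanced usage: a Pod runs a group of containers

Note:

Multi-container Pods are only appropriate when the containers are genuinely distinct but need to share resources.

One "infrastructure-centric" use case for multi-container Pods is the service mesh. In this model, a proxy container is injected into every Pod to handle all network traffic in and out of it. This makes it easy to implement features such as traffic encryption, network monitoring, and intelligent routing.

3、Shared resources

If a Pod runs multiple containers, they share the same Pod environment, including the IPC namespace, shared memory, shared volumes, the network, and other resources.

If two containers in the same Pod need to communicate with each other, they can do so over localhost, because they share the Pod's network namespace.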

4、Pod测试

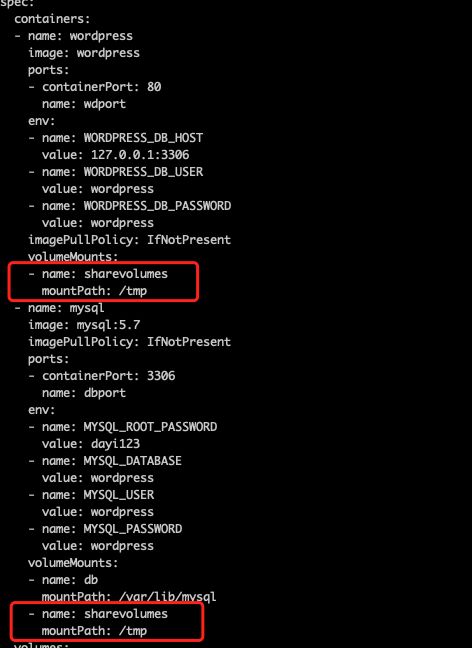

[root@master k8s]# cat wordpress-pod.yml

apiVersion: v1

kind: Namespace

metadata:

name: wordpress

---

# 创建pod

apiVersion: v1

kind: Pod

metadata:

name: wordpress

namespace: wordpress

labels:

app: wordpress

spec:

containers:

- name: wordpress

image: wordpress

ports:

- containerPort: 80

name: wdport

env:

- name: WORDPRESS_DB_HOST

value: 127.0.0.1:3306

- name: WORDPRESS_DB_USER

value: wordpress

- name: WORDPRESS_DB_PASSWORD

value: wordpress

imagePullPolicy: IfNotPresent

volumeMounts:

- name: sharevolumes

mountPath: /tmp

- name: mysql

image: mysql:5.7

imagePullPolicy: IfNotPresent

ports:

- containerPort: 3306

name: dbport

env:

- name: MYSQL_ROOT_PASSWORD

value: dayi123

- name: MYSQL_DATABASE

value: wordpress

- name: MYSQL_USER

value: wordpress

- name: MYSQL_PASSWORD

value: wordpress

volumeMounts:

- name: db

mountPath: /var/lib/mysql

- name: sharevolumes

mountPath: /tmp

volumes:

- name: db

hostPath:

path: /var/lib/mysql

- name: sharevolumes

emptyDir: {}

---

apiVersion: v1

kind: Service

metadata:

labels:

app: wordpress

name: wp-svc

namespace: wordpress

spec:

ports:

- port: 8081

protocol: TCP

targetPort: 80

selector:

app: wordpress

type: NodePort

[root@node2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

acfda9c84648 mysql "docker-entrypoint.s…" About a minute ago Up About a minute k8s_mysql_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_0

812d108eb099 wordpress "docker-entrypoint.s…" About a minute ago Up About a minute k8s_wordpress_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_0

2e381af9fe7f k8s.gcr.io/pause:3.6 "/pause" 2 minutes ago Up 2 minutes k8s_POD_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_0

d26a17d867aa 38c11b8f4aa1 "/opt/bin/flanneld -…" 2 hours ago Up 2 hours k8s_kube-flannel_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_2

a07c1c8ff4c7 k8s.gcr.io/pause:3.6 "/pause" 2 hours ago Up 2 hours k8s_POD_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_2

a66ef24f5577 9b7cc9982109 "/usr/local/bin/kube…" 2 hours ago Up 2 hours k8s_kube-proxy_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_5

3bcb807a8284 k8s.gcr.io/pause:3.6 "/pause" 2 hours ago Up 2 hours k8s_POD_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_5

[root@node2 ~]# docker exec top acfda9c84648

Error response from daemon: No such container: top

[root@node2 ~]# docker top acfda9c84648

UID PID PPID C STIME TTY TIME CMD

polkitd 29542 29521 0 10:53 ? 00:00:00 mysqld

[root@node2 ~]# ls /proc/29542/ns

ipc mnt net pid user uts

[root@node2 ~]# ll /proc/29542/ns

total 0

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:55 ipc -> ipc:[4026532192]

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:55 mnt -> mnt:[4026532269]

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:53 net -> net:[4026532195]

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:55 pid -> pid:[4026532271]

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:55 user -> user:[4026531837]

lrwxrwxrwx 1 polkitd input 0 Jun 18 10:55 uts -> uts:[4026532270]

[root@node2 ~]# docker top 812d108eb099

UID PID PPID C STIME TTY TIME CMD

root 29168 29151 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

33 29245 29168 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

33 29246 29168 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

33 29247 29168 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

33 29248 29168 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

33 29249 29168 0 10:52 ? 00:00:00 apache2 -DFOREGROUND

[root@node2 ~]# ll /proc/29245/ns

total 0

lrwxrwxrwx 1 root root 0 Jun 18 10:55 ipc -> ipc:[4026532192]

lrwxrwxrwx 1 root root 0 Jun 18 10:55 mnt -> mnt:[4026532266]

lrwxrwxrwx 1 root root 0 Jun 18 10:55 net -> net:[4026532195]

lrwxrwxrwx 1 root root 0 Jun 18 10:53 pid -> pid:[4026532268]

lrwxrwxrwx 1 root root 0 Jun 18 10:55 user -> user:[4026531837]

lrwxrwxrwx 1 root root 0 Jun 18 10:55 uts -> uts:[4026532267]

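Note: the wordpress and mysql processes have identical `ipc` and `net` namespace inodes, so they share IPC and the network. This is why WordPress can reach MySQL at 127.0.0.1. Both also use the host's default `user` namespace, while their `mnt`, `pid`, and `uts` namespaces differ.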
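The manual `ll /proc/<pid>/ns` comparison above can be scripted. The sketch below compares the `/proc/<pid>/ns/*` links of two processes: the same `type:[inode]` target means the same namespace. `shared_namespaces` is a hypothetical helper. It is Linux-only, and inspecting other users' processes requires root.

```shell
#!/usr/bin/env bash
# Report which Linux namespaces two processes share by comparing the
# targets of their /proc/<pid>/ns/* symlinks. Namespace types that cannot
# be read for either process are skipped.
shared_namespaces() {
  local pid1=$1 pid2=$2 ns a b
  for ns in ipc mnt net pid user uts; do
    a=$(readlink "/proc/$pid1/ns/$ns") || continue
    b=$(readlink "/proc/$pid2/ns/$ns") || continue
    if [[ $a == "$b" ]]; then
      echo "$ns shared"
    else
      echo "$ns separate"
    fi
  done
}
```

On node2, `shared_namespaces 29542 29245` compares the mysqld and apache2 processes. Given the inode numbers listed above, it would report `shared` for ipc, net, and user, and `separate` for mnt, pid, and uts.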

[root@node2 29542]# cat cgroup

11:cpuset:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

10:pids:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

9:memory:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

8:perf_event:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

7:hugetlb:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

6:devices:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

5:cpuacct,cpu:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

4:net_prio,net_cls:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

3:blkio:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

2:freezer:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

1:name=systemd:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-acfda9c846489c167d2d6550473d6ebe326860c4069ceef7f46a217bbc3556f9.scope

[root@node2 29542]#

[root@node2 29542]#

[root@node2 29542]# cd /proc/29247

[root@node2 29247]# cat cgroup

11:cpuset:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

10:pids:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

9:memory:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

8:perf_event:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

7:hugetlb:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

6:devices:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

5:cpuacct,cpu:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

4:net_prio,net_cls:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

3:blkio:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

2:freezer:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

1:name=systemd:/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice/docker-812d108eb099740c191e204c2e00ef5c6cac8256ae2560289aef26eac23f6cb9.scope

[root@node2 29247]#

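Note: the wordpress and mysql processes sit under the same Pod-level cgroup (kubepods-besteffort-podb69c4abb_185e_4169_89b5_d970aca6a1e7.slice); only the per-container docker-<id>.scope leaf differs.

Multi-container communication within the same Pod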

[root@node2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

41797672eb03 c20987f18b13 "docker-entrypoint.s…" 18 hours ago Up 18 hours k8s_mysql_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_2

d1b182fa1c5c c3c92cc3dcb1 "docker-entrypoint.s…" 18 hours ago Up 18 hours k8s_wordpress_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_2

c0636bf2f97c k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_10

c0f187158cb3 38c11b8f4aa1 "/opt/bin/flanneld -…" 18 hours ago Up 18 hours k8s_kube-flannel_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_4

ec5dac7565bd k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_4

ce54cb186069 9b7cc9982109 "/usr/local/bin/kube…" 18 hours ago Up 18 hours k8s_kube-proxy_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_7

fd85b6f4ee21 k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_7

[root@node2 ~]# docker top 41797672eb03

UID PID PPID C STIME TTY TIME CMD

polkitd 2964 2944 0 Jun18 ? 00:00:28 mysqld

[root@node2 ~]# docker exec -it d1b182fa1c5c /bin/bash

root@wordpress:/var/www/html# telnet 127.0.0.1 3306

Trying 127.0.0.1...

Connected to 127.0.0.1.

Escape character is '^]'.

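J

5.7.36(7`{VW0zhD/ !,s0amysql_native_password

Connection closed by foreign host.

The MySQL server greeting (version 5.7.36) comes back on 127.0.0.1:3306, which shows that the wordpress container reaches mysql over localhost because both containers share the Pod's network namespace.

Shared-storage check: a file written under /tmp in one container is visible in the other. (Containers in a Pod do not share a filesystem by default, so this relies on both containers mounting the same volume at /tmp.)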

[root@node2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

41797672eb03 c20987f18b13 "docker-entrypoint.s…" 18 hours ago Up 18 hours k8s_mysql_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_2

d1b182fa1c5c c3c92cc3dcb1 "docker-entrypoint.s…" 18 hours ago Up 18 hours k8s_wordpress_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_2

c0636bf2f97c k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_wordpress_wordpress_b69c4abb-185e-4169-89b5-d970aca6a1e7_10

c0f187158cb3 38c11b8f4aa1 "/opt/bin/flanneld -…" 18 hours ago Up 18 hours k8s_kube-flannel_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_4

ec5dac7565bd k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_kube-flannel-ds-45srz_kube-flannel_c398b88d-1c07-40ce-90f3-ed4659233bcd_4

ce54cb186069 9b7cc9982109 "/usr/local/bin/kube…" 18 hours ago Up 18 hours k8s_kube-proxy_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_7

fd85b6f4ee21 k8s.gcr.io/pause:3.6 "/pause" 18 hours ago Up 18 hours k8s_POD_kube-proxy-7pfxp_kube-system_cc3d56f5-e199-48fb-8ff8-3ab5f755c69b_7

[root@node2 ~]# docker exec -it 41797672eb03 /bin/bash

root@wordpress:/# cd /tmp/

root@wordpress:/tmp# ls

test.txt

root@wordpress:/tmp# cat test.txt

test123

root@wordpress:/tmp# exit

[root@node2 ~]# docker exec -it d1b182fa1c5c /bin/bash

root@wordpress:/var/www/html# cat /tmp/test.txt

test123

root@wordpress:/var/www/html#

12、pause container

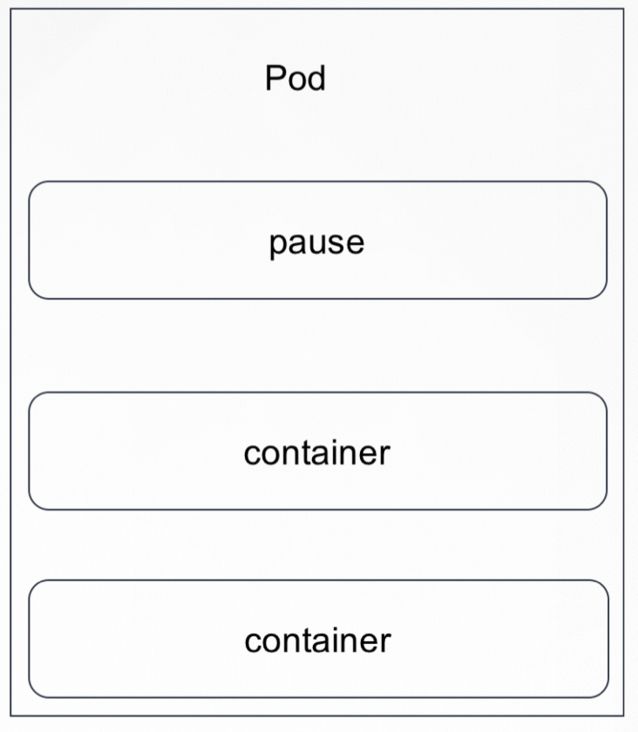

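1、Containers that make up a Pod

2、pause

1、The pause concept

The Pod is the core of Kubernetes' design, and the pause container is the core of the Pod network model. Understanding the pause container makes it much easier to see why Kubernetes Pods are designed the way they are.

2、Pod Sandbox and pause

- When a Pod is created, the kubelet first creates a sandbox and sets up the Pod's basic runtime environment, such as networking (for example, allocating an IP). Once the Pod Sandbox is up, the kubelet creates the user containers inside it. When the Pod is deleted, the kubelet stops all the containers in it and then removes the Pod Sandbox

- Kubernetes uses the Pod Sandbox to isolate the resources of a Pod's containers as one unit

In the Linux CRI model, the Pod Sandbox is in practice the pause container

A single isolated application runtime environment is called a container; a group of containers bound together by a Pod is called a Pod Sandbox

3、What pause does

In Kubernetes, the pause container acts as the "parent container" of all containers in a Pod and provides every application container with the following:

- It is the basis for sharing Linux namespaces (Network, IPC, etc.) within the Pod

- When PID namespace sharing is enabled, it acts as PID 1 for the Pod and reaps zombie processes in the Pod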
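In a Pod spec, PID namespace sharing is off by default and is switched on per Pod with the `shareProcessNamespace` field. The manifest below is a minimal sketch; the Pod name `share-pid-demo` and the busybox helper container are hypothetical, chosen only for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: share-pid-demo          # hypothetical name
spec:
  shareProcessNamespace: true   # all containers in this Pod share one PID namespace
  containers:
  - name: nginx
    image: nginx
  - name: shell
    image: busybox
    # keep the helper container alive so we can exec into it
    command: ["/bin/sh", "-c", "sleep 3600"]
```

After `kubectl apply -f`, running `kubectl exec share-pid-demo -c shell -- ps` from the helper container should list the nginx processes of the other container, with the sandbox's `/pause` process as PID 1.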

3、Testing pause

1、Shared namespaces

What is a shared namespace? In Linux, a newly started process inherits the namespaces of its parent process

2、Running pause

[root@master k8s]# docker run -d --ipc=shareable --name pause -p 5555:80 registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

Unable to find image 'registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0' locally

3.0: Pulling from google-containers/pause-amd64

a3ed95caeb02: Pull complete

f11233434377: Pull complete

Digest: sha256:3b3a29e3c90ae7762bdf587d19302e62485b6bef46e114b741f7d75dba023bd3

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

405c10b73086141d45e97a5478a8f99b62db1163246de11d510888108fceced3

[root@master k8s]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

405c10b73086 registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 "/pause" 13 seconds ago Up 12 seconds 0.0.0.0:5555->80/tcp, :::5555->80/tcp pause

3、Simulating a Pod

Once this process is running, you can add other processes to its namespaces to form a pod

Add nginx to the namespaces:

[root@master ~]# cat nginx.conf
error_log stderr;
events {
    worker_connections 1024;
}
http {
    access_log /dev/stdout combined;
    server {
        listen 80 default_server;
        server_name example.com www.example.com;
        location / {
            proxy_pass http://127.0.0.1:2368;
        }
    }
}

docker run -d --name nginx -v /root/nginx.conf:/etc/nginx/nginx.conf --net=container:pause --ipc=container:pause --pid=container:pause nginx

Add ghost to the namespaces:

docker run -d --name ghost --net=container:pause --ipc=container:pause --pid=container:pause ghost

Visit http://ip:5555 and you will see the Ghost page (a blogging platform): the request reaches port 80 in the pause container's network namespace, where nginx is listening, and nginx proxies it to Ghost on 127.0.0.1:2368

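4、Summary

- The pause container publishes its internal port 80 on port 5555 of the host (Docker implements this with iptables rules)

- The nginx container joins the pause container's net/ipc/pid namespaces by specifying --net/ipc/pid=container:pause

- The ghost container joins the pause container's net/ipc/pid namespaces in the same way, with --net/ipc/pid=container:pause

The three containers share one net namespace, so they can talk to each other directly over localhost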

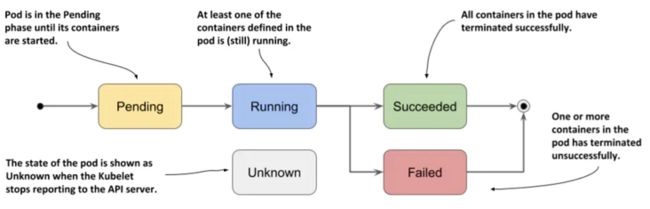
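In Kubernetes you do not start the pause container yourself: the kubelet creates the sandbox, and every container listed in one Pod joins its namespaces. A rough Pod equivalent of the docker experiment above might look like the following sketch; the names `nginx-ghost` and `nginx-ghost-conf` are hypothetical, and it assumes the ghost image starts with its default settings as it did under docker run.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-ghost-conf        # hypothetical name
data:
  nginx.conf: |
    error_log stderr;
    events { worker_connections 1024; }
    http {
      access_log /dev/stdout combined;
      server {
        listen 80 default_server;
        location / {
          # ghost listens on 2368 in the same (shared) network namespace
          proxy_pass http://127.0.0.1:2368;
        }
      }
    }
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-ghost             # hypothetical name
spec:
  # no pause container is declared: the kubelet creates the sandbox itself
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - name: conf
      mountPath: /etc/nginx/nginx.conf
      subPath: nginx.conf       # mount just this file, like -v /root/nginx.conf:...
  - name: ghost
    image: ghost
  volumes:
  - name: conf
    configMap:
      name: nginx-ghost-conf
```

`kubectl port-forward pod/nginx-ghost 5555:80` then plays the role of `-p 5555:80`, so http://127.0.0.1:5555 on the machine running kubectl should show the Ghost page.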

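13、Pod lifecycle

1、Pod phases

- Pending: the API Server has created the Pod object and stored it in etcd, but the Pod has not finished scheduling, or its images are still being pulled from the registry

- Running: the Pod has been scheduled onto a node, all of its containers have been created by the kubelet, and at least one container is running (or is starting or restarting)

- Succeeded: all containers in the Pod have terminated successfully and will not be restarted

- Failed: all containers in the Pod have terminated, and at least one of them terminated in failure, that is, it exited with a non-zero status or was terminated by the system

- Unknown: the Pod's state cannot be obtained for some reason, usually because communication with the Pod's node failed

Check the Pod phase

[root@master ~]# kubectl get pod wordpress -o yaml -n wordpress

The output contains phase: Running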

[root@master ~]# kubectl get pods -n wordpress -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

wordpress 2/2 Running 4 (22h ago) 28h 10.244.2.13 node2

You can also use kubectl describe to view the Pod's details

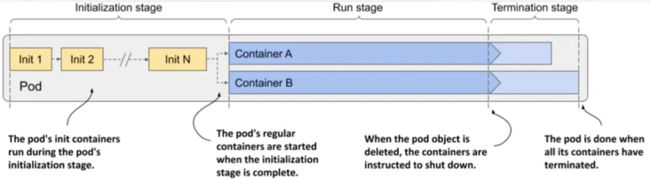

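[root@master ~]# kubectl describe pods wordpress -n wordpress

2、How a Pod runs

As the figure shows, a Pod goes through three stages:

- Initialization: the Pod's init containers run

- Running: the Pod's regular containers run

- Termination: the Pod's containers are terminated

3、Pod conditions

1、What a condition is

A Pod's list of conditions tells you more about its state. Each condition indicates whether the Pod has reached a certain state, and why. Unlike the phase, a Pod can have several conditions at the same time:

- PodScheduled: the Pod has been scheduled to a node

- Initialized: all of the Pod's init containers have completed successfully

- ContainersReady: all containers in the Pod are ready

- Ready: the Pod can serve requests and should be added to the load-balancing pools of all matching Services

2、Checking the conditions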

[root@master ~]# kubectl get pod wordpress -o yaml -n wordpress

conditions:
- lastProbeTime: null
  lastTransitionTime: "2023-06-18T02:51:49Z"
  status: "True"
  type: Initialized       # (2)
- lastProbeTime: null
  lastTransitionTime: "2023-06-18T08:38:13Z"
  status: "True"
  type: Ready             # (4)
- lastProbeTime: null
  lastTransitionTime: "2023-06-18T08:38:13Z"
  status: "True"
  type: ContainersReady   # (3)
- lastProbeTime: null
  lastTransitionTime: "2023-06-18T02:51:49Z"
  status: "True"
  type: PodScheduled      # (1)

The numbers mark the order in which the conditions are reached: PodScheduled, Initialized, ContainersReady, Ready

4、Pod restart policy

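When a container exits abnormally or fails a health check, the kubelet acts according to the Pod's restartPolicy

A Pod's restartPolicy can be Always, OnFailure or Never; the default is Always. The policy applies to all containers in the Pod

- Always: the kubelet restarts the container whenever it terminates, whatever the exit code

- OnFailure: the kubelet restarts the container only when it terminates with a non-zero exit code

- Never: the kubelet never restarts the container, whatever its state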
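A minimal sketch of setting the policy; the Pod name `restart-demo` is hypothetical, and the container simply exits with code 1 after 10 seconds so that OnFailure has something to react to.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restart-demo            # hypothetical name
spec:
  restartPolicy: OnFailure      # Pod-level: applies to every container in the Pod
  containers:
  - name: fail-once
    image: busybox
    # non-zero exit code, so OnFailure makes the kubelet restart the container
    command: ["/bin/sh", "-c", "sleep 10; exit 1"]
```

Watching it with `kubectl get pod restart-demo -w` should show the RESTARTS count climbing, with the kubelet backing off between restarts (status CrashLoopBackOff). Changing `exit 1` to `exit 0` would leave the Pod in the Succeeded phase (shown as Completed), and with `restartPolicy: Never` the failing version would end in the Failed phase.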

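5、Container probes

Kubernetes checks the health of a Pod's containers mainly with two kinds of probes, LivenessProbe and ReadinessProbe, which the kubelet runs periodically to diagnose each container's health. A third kind, startupProbe, is meant for slow-starting applications

1、Probe types

- LivenessProbe

LivenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and what happens next is decided by its restart policy. If a container does not provide a liveness probe, the default state is Success

- ReadinessProbe

ReadinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. Before the initial delay, the readiness state defaults to Failure. If a container does not provide a readiness probe, the default state is Success

- startupProbe

If an application starts slowly, you can use a startupProbe, which indicates whether the application in the container has started. If a startupProbe is provided, all other probes are disabled until it succeeds. If it fails, the kubelet kills the container, and the container is restarted according to its restart policy. If a container does not provide a startup probe, the default state is Success

2、How probes are implemented

There are three types of handlers:

- ExecAction: runs a specified command inside the container. The diagnostic is considered successful if the command exits with status code 0

- TCPSocketAction: performs a TCP check against the specified port on the container's IP address. The diagnostic is considered successful if the port is open

- HTTPGetAction: performs an HTTP GET request against the specified port and path on the container's IP address. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400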
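The probe test that follows uses TCPSocketAction only. As a sketch of the other two handlers plus a startupProbe, a Pod could be declared like this; the Pod name `probe-demo` and all thresholds are illustrative assumptions, not values from the original test.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo              # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
    # liveness/readiness are held back until this succeeds:
    # up to failureThreshold * periodSeconds = 30 * 2 = 60s to start
    startupProbe:
      httpGet:
        path: /
        port: 80
      failureThreshold: 30
      periodSeconds: 2
    # HTTPGetAction: a status code in [200, 400) counts as success
    livenessProbe:
      httpGet:
        path: /
        port: 80
      periodSeconds: 10
    # ExecAction: exit code 0 counts as success
    readinessProbe:
      exec:
        command: ["/bin/sh", "-c", "test -f /usr/share/nginx/html/index.html"]
      periodSeconds: 5
```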

3、Probe test

[root@master k8s]# cat httpd-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: httpd
  labels:
    app: httpd
spec:
  containers:
  - name: httpd
    image: nginx
    ports:
    - containerPort: 80
    readinessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 20
      periodSeconds: 10
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 20
      periodSeconds: 10

[root@master k8s]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

httpd 1/1 Running 0 41s 10.244.2.14 node2

[root@master k8s]# kubectl describe pods httpd

Name: httpd

Namespace: default

Priority: 0

Node: node2/172.24.251.134

Start Time: Mon, 19 Jun 2023 16:49:31 +0800

Labels: app=httpd

Annotations:

Status: Running

IP: 10.244.2.14

IPs:

IP: 10.244.2.14

Containers:

httpd:

Container ID: docker://f06b5d25d2d6d6061e885324264a9af11505db789bc426c8baacae633a902ec0

Image: nginx

Image ID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 19 Jun 2023 16:49:32 +0800

Ready: True

Restart Count: 0

Liveness: tcp-socket :80 delay=20s timeout=1s period=10s #success=1 #failure=3

Readiness: tcp-socket :80 delay=20s timeout=1s period=10s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-thwx7 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-thwx7:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional:

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 57s default-scheduler Successfully assigned default/httpd to node2

Normal Pulling 57s kubelet Pulling image "nginx"

Normal Pulled 57s kubelet Successfully pulled image "nginx" in 242.923746ms

Normal Created 57s kubelet Created container httpd

Normal Started 56s kubelet Started container httpd

Note: Events shows no errors, so the container is healthy

Simulating httpd exiting after running for 60s:

[root@master k8s]# cat httpd-pod-quit.yaml

apiVersion: v1
kind: Pod
metadata:
  name: httpd
  labels:
    app: httpd
spec:
  containers:
  - name: httpd
    image: nginx
    args:
    - /bin/sh
    - -c
    - sleep 60;nginx -s quit
    ports:
    - containerPort: 80
    readinessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 20
      periodSeconds: 10
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 20
      periodSeconds: 10

[root@master k8s]# kubectl apply -f httpd-pod-quit.yaml

pod/httpd created

[root@master k8s]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

httpd 0/1 Running 1 (32s ago) 108s 10.244.2.15 node2

[root@master k8s]# kubectl describe pods httpd

Name: httpd

Namespace: default

Priority: 0

Node: node2/172.24.251.134

Start Time: Mon, 19 Jun 2023 16:53:14 +0800

Labels: app=httpd

Annotations:

Status: Running

IP: 10.244.2.15

IPs:

IP: 10.244.2.15

Containers:

httpd:

Container ID: docker://ecbc80148d2299c2d9779dc4a61c0fd2d0536f4210addcf78d35339459fdecc0

Image: nginx

Image ID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Port: 80/TCP

Host Port: 0/TCP

Args:

/bin/sh

-c

sleep 60;nginx -s quit

State: Running

Started: Mon, 19 Jun 2023 16:54:45 +0800

Last State: Terminated

Reason: Error

Exit Code: 1

Started: Mon, 19 Jun 2023 16:53:30 +0800

Finished: Mon, 19 Jun 2023 16:54:30 +0800

Ready: False

Restart Count: 1

Liveness: tcp-socket :80 delay=20s timeout=1s period=10s #success=1 #failure=3

Readiness: tcp-socket :80 delay=20s timeout=1s period=10s #success=1 #failure=3

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-cdsjs (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-cdsjs:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional:

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m8s default-scheduler Successfully assigned default/httpd to node2

Normal Pulled 112s kubelet Successfully pulled image "nginx" in 15.294980797s

Normal Killing 68s kubelet Container httpd failed liveness probe, will be restarted

Normal Pulling 52s (x2 over 2m8s) kubelet Pulling image "nginx"

Normal Created 37s (x2 over 112s) kubelet Created container httpd

Normal Started 37s (x2 over 112s) kubelet Started container httpd

Normal Pulled 37s kubelet Successfully pulled image "nginx" in 15.207521471s

Warning Unhealthy 8s (x4 over 88s) kubelet Liveness probe failed: dial tcp 10.244.2.15:80: connect: connection refused

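Warning Unhealthy 8s (x8 over 88s) kubelet Readiness probe failed: dial tcp 10.244.2.15:80: connect: connection refused

Note: because args replaces the image's default command, nginx itself never starts in this container, so port 80 is never opened. Both probes therefore fail with connection refused, the liveness failures make the kubelet restart the container, and the container's own command exits with code 1 (the Exit Code under Last State) when nginx -s quit finds no running nginx. Ready stays False, so the Pod is not added to any Service endpoints.

14、init container

1、What an init container is

- An init container is a special container that runs before the application containers in a Pod start. Init containers can contain utilities and setup scripts that are not present in the application image

- A Pod can have one or more init containers, which start before the application containers

2、Characteristics

Init containers are very similar to regular containers, except that:

- They always run to completion

- Each one must complete successfully before the next one starts

- Init containers do not support lifecycle, livenessProbe, readinessProbe or startupProbe, because they must run to completion before the Pod can become ready

- If a Pod specifies several init containers, they run one at a time, in order. Each init container must succeed before the next one can run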
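To see the ordering rule in action, here is a minimal sketch with two init containers that append to a file in a shared emptyDir before the app container prints it; all names (`init-order-demo`, `step-1`, `step-2`, `/work/order.log`) are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-order-demo         # hypothetical name
spec:
  initContainers:
  - name: step-1                # runs first and must exit 0
    image: busybox
    command: ["/bin/sh", "-c", "echo step-1 >> /work/order.log"]
    volumeMounts:
    - name: work
      mountPath: /work
  - name: step-2                # starts only after step-1 has succeeded
    image: busybox
    command: ["/bin/sh", "-c", "echo step-2 >> /work/order.log"]
    volumeMounts:
    - name: work
      mountPath: /work
  containers:
  - name: app                   # starts only after all init containers finish
    image: busybox
    command: ["/bin/sh", "-c", "cat /work/order.log && sleep 3600"]
    volumeMounts:
    - name: work
      mountPath: /work
  volumes:
  - name: work
    emptyDir: {}
```

`kubectl logs init-order-demo` should print step-1 followed by step-2; while the init containers are still running, `kubectl get pod init-order-demo` may briefly show a status such as Init:0/2 or Init:1/2.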

3、Test application

apiVersion: apps/v1
kind: Deployment
metadata:
  name: init-demo
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: init
  template:
    metadata:
      labels:
        app: init
    spec:
      initContainers:
      - name: download
        image: busybox
        command:
        - wget
        - "-O"
        - "/opt/index.html"
        - http://www.baidu.com
        volumeMounts:
        - name: wwwroot
          mountPath: "/opt"
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: wwwroot
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: wwwroot
        emptyDir: {}

The download init container saves the Baidu home page into the shared emptyDir volume, so each nginx replica serves that page:

[root@master k8s]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

init-demo-564f5c9f54-bnsgc 1/1 Running 0 38s 10.244.2.16 node2

init-demo-564f5c9f54-mv6h8 1/1 Running 0 38s 10.244.1.29 node1

[root@master k8s]# curl 10.244.2.16

百度一下,你就知道

[root@master k8s]# curl 10.244.1.29

百度一下,你就知道

[root@master k8s]# 15、Service入门

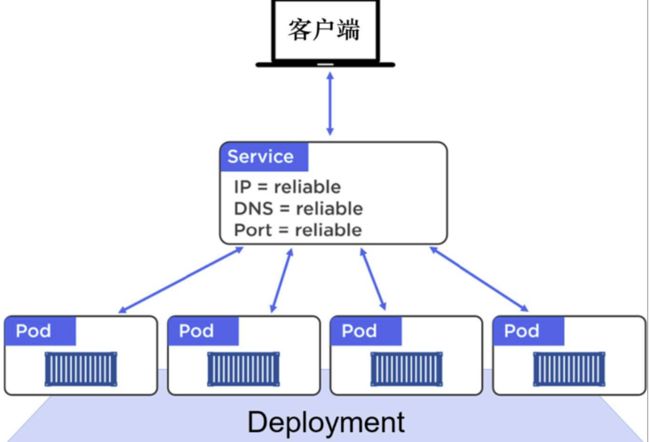

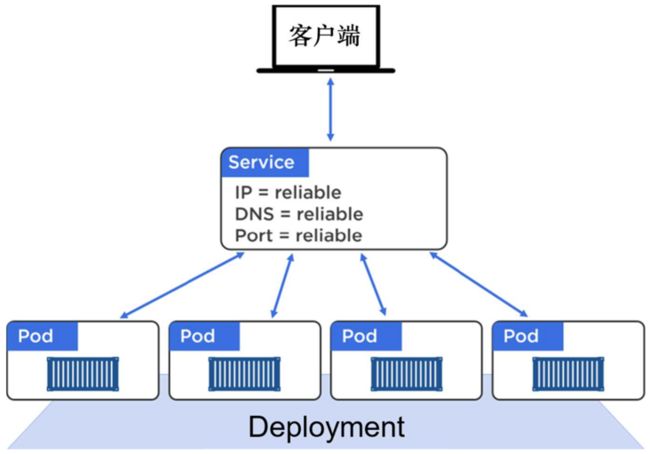

1、Pod存在的问题

- Pod的IP地址是不可靠的。在某个Pod失效之后,他会被一个拥有新的IP的Pod代替

- Deployment扩容也会引入有用新的IP的Pod,而缩容则会删除Pod。这会导致大量IP流失,因而Pod的IP地址是不可靠的

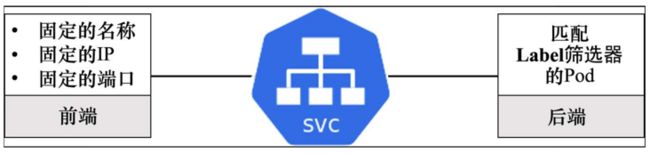

2、Service概念

- Service的核心作用就是为Pod提供稳定的网络连接

- 提供负载均衡和从集群外部访问Pod的途径

- Service对外提供固定的IP、DNS名称和端口,确保这些信息在Service的整个生命周期是不变的。Service对内则使用Label来讲流量均衡发至应用的各个(通常是动态变化的)Pod中

3、Service体验

1、创建dev namespace

[root@master k8s]# kubectl create namespace dev

namespace/dev created2、创建nginx service

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-dev
  template:
    metadata:
      labels:
        app: nginx-dev
    spec:
      initContainers:
      - name: init-nginx
        image: busybox
        command: ["/bin/sh"]
        args: ["-c","hostname > /opt/index.html"]
        volumeMounts:
        - name: wwwroot
          mountPath: "/opt"
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: wwwroot
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: wwwroot
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  namespace: dev
  name: nginx-service-dev
spec:
  selector:
    app: nginx-dev
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master k8s]# kubectl apply -f nginx-service.yaml

deployment.apps/nginx created

service/nginx-service-dev created

[root@master k8s]# kubectl get services -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.1.0.1 443/TCP 6d23h

dev nginx-service-dev ClusterIP 10.1.101.26 80/TCP 4m52s

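kube-system kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 6d23h

Requests to the Service's ClusterIP are spread across the Pods. Each Pod serves its own hostname, so the response shows which Pod answered (in kube-proxy's iptables mode the backend is picked at random, not in strict rotation):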

[root@master k8s]# kubectl get pods -n dev -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-85756ff869-jlrrc 1/1 Running 0 7m5s 10.244.2.18 node2

nginx-85756ff869-vl7lx 1/1 Running 0 7m5s 10.244.1.33 node1

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-vl7lx

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-vl7lx

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-vl7lx

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-jlrrc

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-jlrrc

[root@master k8s]# curl 10.1.101.26

nginx-85756ff869-jlrrc

[root@master k8s]# curl 10.1.101.26

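nginx-85756ff869-vl7lx

16、How Service works

1、Service types

Kubernetes has three commonly used Service types:

- ClusterIP (the default): the Service has a fixed IP address for use inside the cluster, but it cannot be reached from outside the cluster

- NodePort: builds on ClusterIP by adding a cluster-wide TCP or UDP port that is opened on every node, so the Service can be reached from outside the cluster

- LoadBalancer: builds on NodePort and integrates a cloud provider's load balancer

There is also a Service type called ExternalName, which directs traffic to a service outside the Kubernetes cluster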
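An ExternalName Service has no selector and no endpoints; the cluster DNS simply answers with a CNAME record pointing at the external name. A minimal sketch, where both `external-db` and `db.example.com` are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: external-db             # hypothetical Service name
  namespace: dev
spec:
  type: ExternalName
  externalName: db.example.com  # hypothetical host outside the cluster
```

Inside a Pod in the dev namespace, external-db (or external-db.dev.svc.cluster.local) would then resolve to db.example.com.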

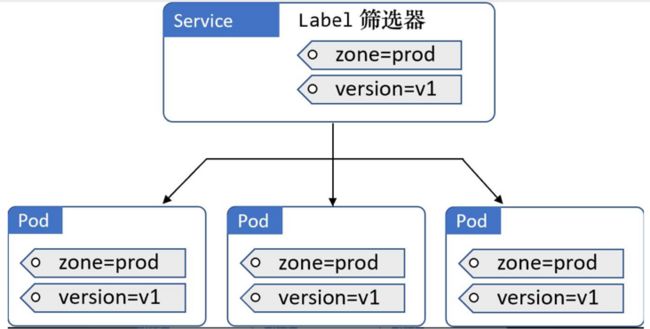

2、Service Label

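Services and Pods are loosely coupled through labels and label selectors. Deployments are associated with their Pods in the same way. This loose coupling is key to Kubernetes' flexibility

3、Service and Endpoints

- As Pods come and go (scaling up and down, failures, rolling updates, etc.), the Service dynamically updates the list of healthy matching Pods it maintains. Concretely, the matching is done by the label selector together with an object called Endpoints

- Every Service gets an associated Endpoints object when it is created. The Endpoints object is simply a dynamic list of all healthy Pods in the cluster that match the Service's label selector

Summary: a Kubernetes Service defines a logical set of Pods and a policy for accessing them. The set of Pods a Service targets is usually determined by a label selector

Endpoints is the set of endpoints that actually serve the Service. An endpoint is an address at which the service can be reached, i.e. the reachable address of a running Pod. A Pod rarely exists on its own, so the endpoints of a group of Pods together are called Endpoints. Only Pods that are selected by the Service's selector, are Running and are ready (passing their readiness probe) are added to the Endpoints object that has the same name as the Service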
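For nginx-service-dev, the Endpoints object maintained by the endpoints controller looks roughly like this, using the two Pod IPs seen above (10.244.1.33 and 10.244.2.18). It is a sketch: the real object also carries fields such as nodeName and targetRef, which `kubectl get endpoints nginx-service-dev -n dev -o yaml` will show. You normally never write this object yourself; a hand-written one only makes sense for a Service without a selector.

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: nginx-service-dev       # always the same name as the Service
  namespace: dev
subsets:
- addresses:                    # ready Pods matching app=nginx-dev
  - ip: 10.244.1.33
  - ip: 10.244.2.18
  ports:
  - port: 80                    # the Service's targetPort
    protocol: TCP
```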

4、Viewing a Service's Endpoints

[root@master k8s]# kubectl get service -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.1.0.1 443/TCP 7d

dev nginx-service-dev ClusterIP 10.1.101.26 80/TCP 62m

kube-system kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d

[root@master k8s]# kubectl describe svc nginx-service-dev -n dev

Name: nginx-service-dev

Namespace: dev

Labels:

Annotations:

Selector: app=nginx-dev

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.1.101.26

IPs: 10.1.101.26

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.33:80,10.244.2.18:80

Session Affinity: None

Events: 5、访问Service

1、从集群内部访问Service(ClusterIP)

ClusterIP Service拥有固定IP地址和端口号,并且仅能够从集群内部访问到。这一点被内部往来所实现,并且能够确保在Service的整个生命周期中是固定不变的

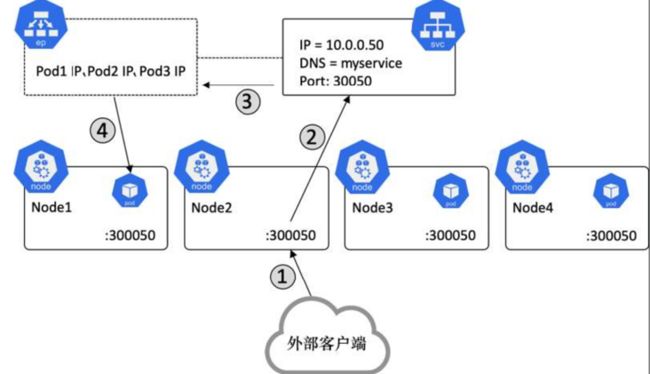

2、从集群外部访问Service(NodePort Service)

3个Pod经由NodePort Service通过每个节点上的端口30050堆外提供服务

- 来自一个外部客户端的请求到达Node2的30050对外提供服务

- 请求被转发至Service对象(即使Node2上压根没事有运行该Service管理的Pod)

- 与该Service对应的Endpoint对象维护了实时更新的与Label筛选器匹配的Pod列表

- 请求被转发至Node1的Pod1
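A NodePort version of the dev nginx Service could look like the sketch below. The name `nginx-service-dev-nodeport` is hypothetical and 30050 is taken from the example above; if nodePort is omitted, Kubernetes picks a free port from the node port range (30000-32767 by default).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-dev-nodeport   # hypothetical name
  namespace: dev
spec:
  type: NodePort
  selector:
    app: nginx-dev              # same Pods as nginx-service-dev
  ports:
  - protocol: TCP
    port: 80                    # port on the ClusterIP
    targetPort: 80              # containerPort on the Pods
    nodePort: 30050             # opened on every node
```

From outside the cluster, `curl http://<node-ip>:30050` should then reach the Pods through any node, including a node that runs none of them.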

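3、Integrating with public clouds (LoadBalancer Service)

A LoadBalancer Service integrates with the load balancers offered by cloud providers such as AWS, Azure, DigitalOcean, IBM Cloud and GCP. It is built on a NodePort Service (which in turn is built on a ClusterIP Service) and additionally lets clients on the internet reach the Pods through the cloud platform's load balancer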
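Declaring one mostly means changing the type. A sketch with a hypothetical name, `nginx-service-dev-lb`, reusing the dev nginx Pods:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-dev-lb    # hypothetical name
  namespace: dev
spec:
  type: LoadBalancer            # the cloud provider provisions the external load balancer
  selector:
    app: nginx-dev
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

On a self-built cluster like the one in these notes, with no cloud controller and no bare-metal load-balancer implementation such as MetalLB, EXTERNAL-IP in `kubectl get svc` stays `<pending>`; the Service still gets a ClusterIP and a node port, so it stays reachable the NodePort way.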

6、Using Services

Release version v1

[root@master v1]# cat nginx-deployment-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-dev
      version: v1
  template:
    metadata:
      labels:
        app: nginx-dev
        version: v1
    spec:
      initContainers:
      - name: init-nginx
        image: busybox
        command: ["/bin/sh"]
        args: ["-c","hostname > /opt/index.html"]
        volumeMounts:
        - name: wwwroot
          mountPath: "/opt"
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: wwwroot
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: wwwroot
        emptyDir: {}

[root@master v1]# cat nginx-service-v1.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: default
  name: nginx-service-dev-v1
spec:
  selector:
    app: nginx-dev
    version: v1
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master v1]# kubectl apply -f nginx-deployment-v1.yaml

deployment.apps/nginx created

[root@master v1]# kubectl apply -f nginx-service-v1.yaml

service/nginx-service-dev-v1 created

[root@master v1]# kubectl get services -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.1.0.1 443/TCP 7d

default nginx-service-dev-v1 ClusterIP 10.1.22.69 80/TCP 110s

kube-system kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d

[root@master v1]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-75c7b4c8bb-rcbl9 1/1 Running 0 2m15s 10.244.2.19 node2

nginx-75c7b4c8bb-xfmx6 1/1 Running 0 2m15s 10.244.1.34 node1

Release version v2

[root@master v2]# cat nginx-deployment-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-v2
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-dev
      version: v2
  template:
    metadata:
      labels:
        app: nginx-dev
        version: v2
    spec:
      initContainers:
      - name: init-nginx
        image: busybox
        command: ["/bin/sh"]
        args: ["-c","hostname > /opt/index.html"]
        volumeMounts:
        - name: wwwroot
          mountPath: "/opt"
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: wwwroot
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: wwwroot
        emptyDir: {}

[root@master v2]# cat nginx-service-v2.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: default
  name: nginx-service-dev-v2
spec:
  selector:
    app: nginx-dev
    version: v2
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master v2]# kubectl apply -f nginx-deployment-v2.yaml

deployment.apps/nginx-v2 created

[root@master v2]# kubectl apply -f nginx-service-v2.yaml

service/nginx-service-dev-v2 created

Now create a Service that reaches both v1 and v2. Put simply, its selector sets app but not version:

[root@master service_all]# cat nginx-service-all.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: default
  name: nginx-service-dev-all
spec:
  selector:
    app: nginx-dev
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

[root@master service_all]# kubectl apply -f nginx-service-all.yaml

service/nginx-service-dev-all created

Access it:

[root@master service_all]# kubectl get services -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.1.0.1 443/TCP 7d1h

default nginx-service-dev-all ClusterIP 10.1.68.193 80/TCP 119s

default nginx-service-dev-v1 ClusterIP 10.1.22.69 80/TCP 17m

default nginx-service-dev-v2 ClusterIP 10.1.134.127 80/TCP 5m34s

kube-system kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d1h

[root@master service_all]# curl 10.1.68.193

nginx-v2-5bd57c5867-jzbkr

[root@master service_all]# curl 10.1.68.193

nginx-v2-5bd57c5867-jzbkr

[root@master service_all]# curl 10.1.68.193

nginx-v2-5bd57c5867-jzbkr

[root@master service_all]# curl 10.1.68.193

nginx-75c7b4c8bb-xfmx6

[root@master service_all]# curl 10.1.68.193

nginx-v2-5bd57c5867-v2prh

[root@master service_all]# curl 10.1.68.193

nginx-75c7b4c8bb-rcbl9

[root@master service_all]# curl 10.1.68.193

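nginx-75c7b4c8bb-rcbl9

Note: responses come from both the v1 and the v2 Pods, which confirms that several versions can be served side by side behind one Service

17、Service discovery

1、Accessing a Service by name from inside a container (a container can reach a Service by its name)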

[root@master service_all]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-75c7b4c8bb-rcbl9 1/1 Running 0 26m 10.244.2.19 node2

nginx-75c7b4c8bb-xfmx6 1/1 Running 0 26m 10.244.1.34 node1

nginx-v2-5bd57c5867-jzbkr 1/1 Running 0 14m 10.244.2.20 node2

nginx-v2-5bd57c5867-v2prh 1/1 Running 0 14m 10.244.1.35 node1

[root@master service_all]# kubectl get service -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.1.0.1 443/TCP 7d1h

default nginx-service-dev-all ClusterIP 10.1.68.193 80/TCP 10m

default nginx-service-dev-v1 ClusterIP 10.1.22.69 80/TCP 26m

default nginx-service-dev-v2 ClusterIP 10.1.134.127 80/TCP 14m

kube-system kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d1h

[root@master service_all]# kubectl exec -it nginx-75c7b4c8bb-rcbl9 /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "nginx" out of: nginx, init-nginx (init)

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

nginx-v2-5bd57c5867-jzbkr

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

nginx-v2-5bd57c5867-v2prh

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

nginx-v2-5bd57c5867-jzbkr

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

nginx-75c7b4c8bb-xfmx6

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

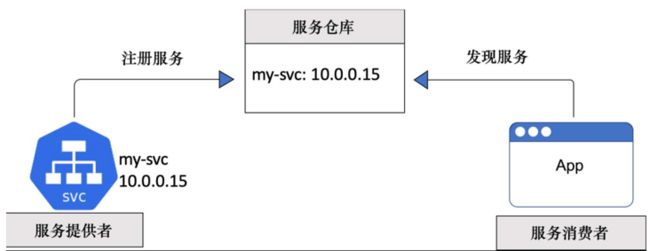

nginx-75c7b4c8bb-rcbl9 2、微服务注册和发现

现在的云原生应用由多个独立的微服务协同合作而成。为了便于通力合作,这些微服务需要能够互相发现和连接。这时候就需要服务发现(Service Discovery)

3、k8s服务发现

Kubernates通过以下方式来实现服务发现(Service Discovery)

- DNS(推荐)

- 环境变量(绝对不推荐)

4、服务注册

所谓服务注册,即把微服务的连接信息注册到服务仓库,以便其他微服务能够发现它并进行连接

1、CoreDNS

- Kubrenates使用一个内部DNS服务作为服务注册中心

- 服务是基于DNS注册的(而非具体的Pod)

- 每个服务的名称、IP地址和网络端口都会被注册

[root@master ~]# kubectl get services -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d2h

[root@master ~]# kubectl get deploy -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

coredns 2/2 2 2 7d2h

[root@master ~]# kubectl get pods -n kube-system -l k8s-app=kube-dns -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-64897985d-6bwbq 1/1 Running 9 (91m ago) 7d2h 10.244.1.36 node1

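coredns-64897985d-9s84l 1/1 Running 9 (91m ago) 7d2h 10.244.1.38 node1

Every Kubernetes Service is registered in the cluster DNS automatically when it is created. (The CoreDNS Pods are still exposed through a Service named kube-dns, selected by the label k8s-app=kube-dns.)

2、Service forwarding

Kubernetes automatically creates an Endpoints object (or EndpointSlice) for every Service. It maintains the list of Pods matching the label selector that can receive the traffic forwarded by the Service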

[root@master ~]# kubectl describe service nginx-service-dev-v1

Name: nginx-service-dev-v1

Namespace: default

Labels:

Annotations:

Selector: app=nginx-dev,version=v1

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.1.22.69

IPs: 10.1.22.69

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.37:80,10.244.2.21:80

Session Affinity: None

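Events:

The endpoints differ from the Pod IPs seen in section 6 because the Pods have been restarted in the meantime (see the RESTARTS column below) and received new IPs; the Endpoints object followed them automatically.

3、Service discovery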

1、DNS

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-75c7b4c8bb-rcbl9 1/1 Running 1 (99m ago) 130m

nginx-75c7b4c8bb-xfmx6 1/1 Running 1 (10m ago) 130m

nginx-v2-5bd57c5867-jzbkr 1/1 Running 1 (99m ago) 118m

nginx-v2-5bd57c5867-v2prh 1/1 Running 1 (10m ago) 118m

[root@master ~]# kubectl exec -it nginx-75c7b4c8bb-rcbl9 /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "nginx" out of: nginx, init-nginx (init)

root@nginx-75c7b4c8bb-rcbl9:/# cat /etc/resolv.conf

nameserver 10.1.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

[root@master ~]# kubectl get service -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

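kube-dns ClusterIP 10.1.0.10 53/UDP,53/TCP,9153/TCP 7d2h

The nameserver in the Pod's /etc/resolv.conf (10.1.0.10) is the ClusterIP of the kube-dns Service, and the search list lets a short name such as nginx-service-dev-all expand to nginx-service-dev-all.default.svc.cluster.local

2、Same namespace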

[root@master ~]# kubectl exec -it nginx-75c7b4c8bb-rcbl9 /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "nginx" out of: nginx, init-nginx (init)

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all

nginx-75c7b4c8bb-xfmx6

root@nginx-75c7b4c8bb-rcbl9:/# curl nginx-service-dev-all.default.svc.cluster.local

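nginx-75c7b4c8bb-xfmx6

3、Accessing a Service in another namespace

Qualify the name with the namespace, as <service>.<namespace>, or use the full <service>.<namespace>.svc.cluster.local: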

root@nginx-75c7b4c8bb-rcbl9:/# curl kube-dns.kube-system.svc.cluster.local:9153

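404 page not found

The 404 is returned by the CoreDNS metrics endpoint on port 9153, which only serves /metrics; what matters here is that the name in the kube-system namespace resolved and the connection succeeded

4、Service discovery and iptables

1、Switch apt to a faster mirror

root@nginx-75c7b4c8bb-rcbl9:/# cp /etc/apt/sources.list /etc/apt/sources.list.bak && sed -i "s@http://deb.debian.org@http://mirrors.aliyun.com@g" /etc/apt/sources.list && rm -rf /var/lib/apt/lists/* && apt-get update

2、Install dnsutils

root@nginx-75c7b4c8bb-rcbl9:/# apt-get install dnsutils

3、Check with nslookup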

root@nginx-75c7b4c8bb-rcbl9:/# nslookup nginx-service-dev-all

Server: 10.1.0.10

Address: 10.1.0.10#53

Name: nginx-service-dev-all.default.svc.cluster.local

Address: 10.1.68.193

4、iptables

[root@master ~]# iptables -t nat -L KUBE-SERVICES

Chain KUBE-SERVICES (2 references)

target prot opt source destination

KUBE-SVC-2DMZZ5IIIMJAIRAQ tcp -- anywhere 10.1.68.193 /* default/nginx-service-dev-all cluster IP */ tcp dpt:http

KUBE-SVC-KYLLT46RYBS5DRNN tcp -- anywhere 10.1.22.69 /* default/nginx-service-dev-v1 cluster IP */ tcp dpt:http

KUBE-SVC-PMBGFTSR3NCYO6NA tcp -- anywhere 10.1.134.127 /* default/nginx-service-dev-v2 cluster IP */ tcp dpt:http

KUBE-SVC-TCOU7JCQXEZGVUNU udp -- anywhere 10.1.0.10 /* kube-system/kube-dns:dns cluster IP */ udp dpt:domain

KUBE-SVC-ERIFXISQEP7F7OF4 tcp -- anywhere 10.1.0.10 /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:domain

KUBE-SVC-JD5MR3NA4I4DYORP tcp -- anywhere 10.1.0.10 /* kube-system/kube-dns:metrics cluster IP */ tcp dpt:9153

KUBE-SVC-NPX46M4PTMTKRN6Y tcp -- anywhere 10.1.0.1 /* default/kubernetes:https cluster IP */ tcp dpt:https

KUBE-NODEPORTS all -- anywhere anywhere /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

[root@master ~]# iptables -t nat -L KUBE-SVC-PMBGFTSR3NCYO6NA

Chain KUBE-SVC-PMBGFTSR3NCYO6NA (1 references)

target prot opt source destination

KUBE-MARK-MASQ tcp -- !master/16 10.1.134.127 /* default/nginx-service-dev-v2 cluster IP */ tcp dpt:http

KUBE-SEP-MISD75AB66PCUDEJ all -- anywhere anywhere /* default/nginx-service-dev-v2 */ statistic mode random probability 0.50000000000

KUBE-SEP-WL3D2RDOPXCOIXNY all -- anywhere anywhere /* default/nginx-service-dev-v2 */

[root@master ~]# iptables -t nat -L KUBE-SEP-MISD75AB66PCUDEJ

Chain KUBE-SEP-MISD75AB66PCUDEJ (1 references)

target prot opt source destination

KUBE-MARK-MASQ all -- 10.244.1.39 anywhere /* default/nginx-service-dev-v2 */

DNAT tcp -- anywhere anywhere /* default/nginx-service-dev-v2 */ tcp to:10.244.1.39:80

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-75c7b4c8bb-rcbl9 1/1 Running 1 (118m ago) 149m 10.244.2.21 node2

nginx-75c7b4c8bb-xfmx6 1/1 Running 1 (29m ago) 149m 10.244.1.37 node1

nginx-v2-5bd57c5867-jzbkr 1/1 Running 1 (118m ago) 137m 10.244.2.22 node2

nginx-v2-5bd57c5867-v2prh 1/1 Running 1 (29m ago) 137m 10.244.1.39 node1
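These chains show how kube-proxy in iptables mode balances a Service: KUBE-SERVICES matches the ClusterIP and jumps to the Service's KUBE-SVC-... chain, which holds one KUBE-SEP-... rule per endpoint, and each KUBE-SEP-... chain DNATs to one Pod (here 10.244.1.39:80, which is nginx-v2-5bd57c5867-v2prh). With n endpoints, rule i (counting from 1) matches with probability 1/(n-i+1), and the last rule matches unconditionally, which gives every endpoint the same overall share:

$$
P(\text{endpoint } i)=\frac{1}{n-i+1}\prod_{j=1}^{i-1}\left(1-\frac{1}{n-j+1}\right)=\frac{1}{n-i+1}\prod_{j=1}^{i-1}\frac{n-j}{n-j+1}=\frac{1}{n-i+1}\cdot\frac{n-i+1}{n}=\frac{1}{n}
$$

For nginx-service-dev-v2, n = 2: the first rule carries probability 1/2, exactly the `statistic mode random probability 0.50000000000` shown above, and the second rule takes every remaining connection. Because the choice is made randomly per new connection, the earlier curl results do not alternate strictly between Pods.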