Kubernetes Day 2

<h1>Chapter 4 Hands-On Introduction</h1>

<p>This chapter shows how to deploy an nginx service in a Kubernetes cluster and how to access it.</p>

<h2>Namespace</h2>

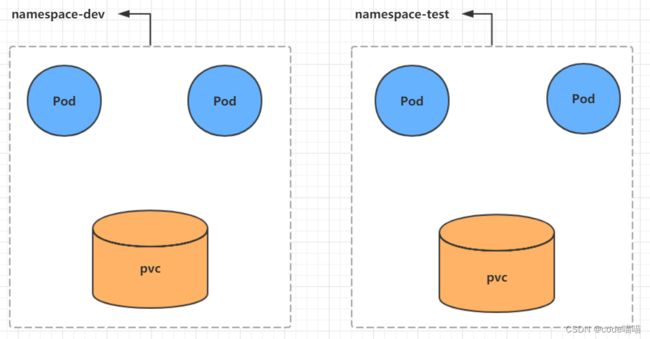

<p>A Namespace is a very important resource in Kubernetes. Its main purpose is to isolate resources between multiple environments or between multiple tenants.</p>

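<p>By default, every Pod in a Kubernetes cluster can reach every other Pod. In practice you may not want two Pods to interact, and placing them in different namespaces is the first step toward separating them. By assigning cluster resources to different Namespaces, Kubernetes forms logical "groups", so each group's resources can be isolated, used, and managed separately. A Namespace does not block network traffic by itself, however. Pods in different namespaces can still reach each other unless NetworkPolicy objects are defined and the cluster's network plugin enforces them.</p>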
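<p>A Namespace alone does not filter traffic. One common way to stop Pods in other namespaces from reaching the Pods in dev is a NetworkPolicy. The manifest below is a minimal sketch under stated assumptions. The policy name allow-same-namespace is hypothetical. The rule only takes effect if the cluster's network plugin enforces NetworkPolicy, and Flannel on its own does not.</p>

<pre><code class="prism language-yaml">apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace   # hypothetical name
  namespace: dev
spec:
  podSelector: {}              # empty selector: the policy applies to every Pod in dev
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}          # allow traffic only from Pods in the same namespace (dev)
</code></pre>

<p>Once a policy selects a Pod for Ingress, any incoming traffic that no rule allows is dropped. Here that means all traffic from outside dev.</p>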

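<p>Kubernetes' authorization mechanism lets you hand different namespaces to different tenants, which isolates tenants' resources from one another. You can combine this with Kubernetes' resource quota mechanism to cap what each tenant may consume, such as CPU and memory usage, and so manage the resources available to each tenant.</p>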
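<p>The following ResourceQuota illustrates the quota mechanism described above by capping the total that the dev namespace may consume. It is only a sketch: the name dev-quota and all the numbers are assumptions chosen for the example.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev-quota          # hypothetical name
  namespace: dev
spec:
  hard:                    # upper bounds summed over all Pods in the namespace
    requests.cpu: "2"
    requests.memory: 2Gi
    limits.cpu: "4"
    limits.memory: 4Gi
    pods: "10"             # at most 10 Pods in dev
</code></pre>

<p>Once a quota like this exists, the namespace rejects Pods that do not declare CPU and memory requests and limits, unless a LimitRange supplies defaults for them.</p>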

<p>When the cluster starts, Kubernetes creates several namespaces by default:</p>

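<pre><code class="prism language-powershell">[root@master ~]# kubectl get namespace
NAME              STATUS   AGE
default           Active   45h   # Objects that do not specify a Namespace are placed in the default namespace
kube-node-lease   Active   45h   # Holds the Lease objects used for node heartbeats; introduced in v1.13
kube-public       Active   45h   # Resources here are readable by everyone (including unauthenticated users)
kube-system       Active   45h   # All resources created by the Kubernetes system live in this namespace
</code></pre>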

<p>The specific operations on namespace resources are as follows:</p>

<p><strong>View</strong></p>

<pre><code class="prism language-powershell"># 1 List all namespaces. Command: kubectl get ns
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   45h
kube-node-lease   Active   45h
kube-public       Active   45h
kube-system       Active   45h

# 2 View a specific namespace. Command: kubectl get ns NAME
[root@master ~]# kubectl get ns default
NAME      STATUS   AGE
default   Active   45h

# 3 Specify the output format. Command: kubectl get ns NAME -o FORMAT
# Kubernetes supports many formats; the most common are wide, json, and yaml
[root@master ~]# kubectl get ns default -o yaml
apiVersion: v1
kind: Namespace
metadata:
  creationTimestamp: "2020-04-05T04:44:16Z"
  name: default
  resourceVersion: "151"
  selfLink: /api/v1/namespaces/default
  uid: 7405f73a-e486-43d4-9db6-145f1409f090
spec:
  finalizers:
  - kubernetes
status:
  phase: Active

# 4 Show namespace details. Command: kubectl describe ns NAME
[root@master ~]# kubectl describe ns default
Name:         default
Labels:       <none>
Annotations:  <none>
Status:       Active   # Active: the namespace is in use; Terminating: the namespace is being deleted

# ResourceQuota: resource limits applied to the namespace as a whole
# LimitRange: resource limits applied to each component (e.g. each container) in the namespace
No resource quota.
No LimitRange resource.
</code></pre>
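<p>The describe output above mentions two namespace-level mechanisms. A ResourceQuota limits the namespace as a whole, and a LimitRange sets defaults and bounds for each container. Below is a minimal LimitRange sketch for the dev namespace. The name dev-limits and the values are assumptions.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: LimitRange
metadata:
  name: dev-limits          # hypothetical name
  namespace: dev
spec:
  limits:
  - type: Container
    default:                # limits given to containers that declare none
      cpu: 500m
      memory: 256Mi
    defaultRequest:         # requests given to containers that declare none
      cpu: 100m
      memory: 128Mi
    max:                    # no single container may set limits above these
      cpu: "1"
      memory: 512Mi
</code></pre>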

<p><strong>Create</strong></p>

<pre><code class="prism language-powershell"># Create a namespace
[root@master ~]# kubectl create ns dev
namespace/dev created
</code></pre>

<p><strong>Delete</strong></p>

<pre><code class="prism language-powershell"># Delete a namespace
[root@master ~]# kubectl delete ns dev
namespace "dev" deleted
</code></pre>

<p><strong>Configuration method</strong></p>

<p>First, prepare a YAML file named ns-dev.yaml:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Namespace
metadata:
  name: dev
</code></pre>

<p>Then run the corresponding create and delete commands:</p>

<p> Create: kubectl create -f ns-dev.yaml</p>

<p> Delete: kubectl delete -f ns-dev.yaml</p>

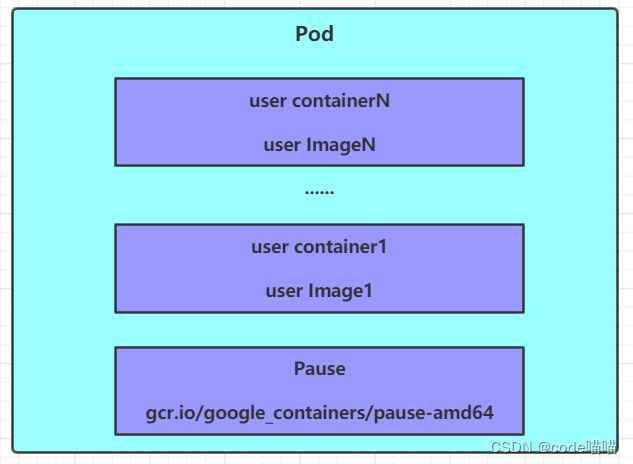

<h2>Pod</h2>

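<p>A Pod is the smallest unit that a Kubernetes cluster manages. To run, a program must be deployed in a container, and every container must live inside a Pod.</p>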

<p>A Pod can be thought of as a wrapper around containers. One Pod can hold one or more containers.</p>
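<p>Here is a sketch of a Pod with two containers, which shows what "one or more containers" means in practice. The Pod name, the busybox image tag, and the polling loop are assumptions for illustration. The containers in a Pod share one network namespace, so the second container can reach nginx at localhost:80.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: nginx-busybox        # hypothetical name
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports:
    - containerPort: 80
  - name: busybox
    image: busybox:1.30      # assumed image tag
    # fetch the nginx page every 10 seconds through the shared Pod network
    command: ["/bin/sh", "-c", "while true; do wget -q -O- http://localhost:80 > /dev/null; sleep 10; done"]
</code></pre>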

<p>Once the cluster has started, its own components also run as Pods. You can list them with the following command:</p>

<pre><code class="prism language-powershell">[root@master ~]# kubectl get pod -n kube-system
NAMESPACE     NAME                             READY   STATUS    RESTARTS   AGE
kube-system   coredns-6955765f44-68g6v         1/1     Running   0          2d1h
kube-system   coredns-6955765f44-cs5r8         1/1     Running   0          2d1h
kube-system   etcd-master                      1/1     Running   0          2d1h
kube-system   kube-apiserver-master            1/1     Running   0          2d1h
kube-system   kube-controller-manager-master   1/1     Running   0          2d1h
kube-system   kube-flannel-ds-amd64-47r25      1/1     Running   0          2d1h
kube-system   kube-flannel-ds-amd64-ls5lh      1/1     Running   0          2d1h
kube-system   kube-proxy-685tk                 1/1     Running   0          2d1h
kube-system   kube-proxy-87spt                 1/1     Running   0          2d1h
kube-system   kube-scheduler-master            1/1     Running   0          2d1h
</code></pre>

<p><strong>Create and run</strong></p>

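<p>The kubectl version used in this tutorial has no separate command for running a bare Pod. Pods are always created through a Pod controller. Starting with kubectl v1.18, kubectl run instead creates a standalone Pod, and Deployments are created with kubectl create deployment.</p>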

<pre><code class="prism language-powershell"># Command format: kubectl run CONTROLLER_NAME [options]
# --image      the Pod's image
# --port       the port to expose
# --namespace  the namespace
[root@master ~]# kubectl run nginx --image=nginx:1.17.1 --port=80 --namespace dev
deployment.apps/nginx created
</code></pre>

<p><strong>View Pod information</strong></p>

<pre><code class="prism language-powershell"># View basic Pod information
[root@master ~]# kubectl get pods -n dev
NAME                     READY   STATUS    RESTARTS   AGE
nginx-5ff7956ff6-fg2db   1/1     Running   0          43s

# View detailed Pod information
[root@master ~]# kubectl describe pod nginx-5ff7956ff6-fg2db -n dev
Name:         nginx-5ff7956ff6-fg2db
Namespace:    dev
Priority:     0
Node:         node1/192.168.109.101
Start Time:   Wed, 08 Apr 2020 09:29:24 +0800
Labels:       pod-template-hash=5ff7956ff6
              run=nginx
Annotations:  <none>
Status:       Running
IP:           10.244.1.23
IPs:
  IP:           10.244.1.23
Controlled By:  ReplicaSet/nginx-5ff7956ff6
Containers:
  nginx:
    Container ID:   docker://4c62b8c0648d2512380f4ffa5da2c99d16e05634979973449c98e9b829f6253c
    Image:          nginx:1.17.1
    Image ID:       docker-pullable://nginx@sha256:485b610fefec7ff6c463ced9623314a04ed67e3945b9c08d7e53a47f6d108dc7
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Wed, 08 Apr 2020 09:30:01 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-hwvvw (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-hwvvw:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-hwvvw
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled         default-scheduler  Successfully assigned dev/nginx-5ff7956ff6-fg2db to node1
  Normal  Pulling    4m11s  kubelet, node1     Pulling image "nginx:1.17.1"
  Normal  Pulled     3m36s  kubelet, node1     Successfully pulled image "nginx:1.17.1"
  Normal  Created    3m36s  kubelet, node1     Created container nginx
  Normal  Started    3m36s  kubelet, node1     Started container nginx
</code></pre>

<p><strong>Access the Pod</strong></p>

<pre><code class="prism language-powershell"># Get the Pod IP
[root@master ~]# kubectl get pods -n dev -o wide
NAME                     READY   STATUS    RESTARTS   AGE    IP            NODE    ...
nginx-5ff7956ff6-fg2db   1/1     Running   0          190s   10.244.1.23   node1   ...

# Access the Pod
[root@master ~]# curl http://10.244.1.23:80
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
</head>
<body>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

</code></pre>

<p><strong>Delete a specific Pod</strong></p>

<pre><code class="prism language-powershell"><span class="token comment"># Delete the specified Pod</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl delete pod nginx-5ff7956ff6-fg2db -n dev</span>

pod <span class="token string">"nginx-5ff7956ff6-fg2db"</span> deleted

<span class="token comment"># The Pod is reported as deleted, but querying again shows that a new one has been created</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pods -n dev</span>

NAME READY STATUS RESTARTS AGE

nginx<span class="token operator">-</span>5ff7956ff6<span class="token operator">-</span>jj4ng 1<span class="token operator">/</span>1 Running 0 21s

<span class="token comment"># This happens because the Pod was created by a Pod controller, which watches its Pods and recreates any Pod that dies</span>

<span class="token comment"># To really delete the Pod, you must delete its Pod controller</span>

<span class="token comment"># First, list the Pod controllers in the current namespace</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get deploy -n dev</span>

NAME READY UP<span class="token operator">-</span>TO<span class="token operator">-</span>DATE AVAILABLE AGE

nginx 1<span class="token operator">/</span>1 1 1 9m7s

<span class="token comment"># Next, delete this Pod's controller</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl delete deploy nginx -n dev</span>

deployment<span class="token punctuation">.</span>apps <span class="token string">"nginx"</span> deleted

<span class="token comment"># Wait a moment and query the Pods again: this time the Pod is gone</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pods -n dev</span>

No resources found in dev namespace<span class="token punctuation">.</span>

</code></pre>

<p><strong>Configuration operations</strong></p>

<p>Create a file named pod-nginx.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: dev
spec:
  containers:
  - image: nginx:1.17.1
    name: pod
    ports:
    - name: nginx-port
      containerPort: 80
      protocol: TCP

</code></pre>

<p>Then run the corresponding create and delete commands:</p>

<p> Create: kubectl create -f pod-nginx.yaml</p>

<p> Delete: kubectl delete -f pod-nginx.yaml</p>

<h2>Label</h2>

<p>A Label is an important concept in Kubernetes. It attaches identifiers to resources so they can be told apart and selected.</p>

<p>Characteristics of Labels:</p>

<ul>

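<li>A Label is attached to objects such as Nodes, Pods, and Services as a key/value pair</li>

<li>An object can carry any number of Labels, and the same Label can be attached to any number of objects</li>

<li>Labels are usually set when an object is defined, but they can also be added or removed dynamically after the object is created</li>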

</ul>

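<p>Labels let you group resources along several dimensions at once, which makes allocating, scheduling, configuring, and deploying resources flexible and convenient.</p>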

<blockquote>

<p>Some commonly used Labels:</p>

<ul>

<li>Version labels: "version":"release", "version":"stable", ...</li>

<li>Environment labels: "environment":"dev", "environment":"test", "environment":"pro"</li>

<li>Tier labels: "tier":"frontend", "tier":"backend"</li>

</ul>

</blockquote>

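<p>After labels are defined, you also need a way to select by them. A Label Selector does this:</p>

<p> Label: defines an identifier on a resource object</p>

<p> Label Selector: queries and filters the resource objects that carry certain labels</p>

<p>There are currently two kinds of Label Selector:</p>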

<ul>

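<li> <p>Equality-based Label Selector</p> <p>name = slave: selects all objects that have a label with key="name" and value="slave"</p> <p>env != production: selects all objects that have a label with key="env" and a value other than "production", plus all objects that have no "env" label at all</p> </li>

<li> <p>Set-based Label Selector</p> <p>name in (master, slave): selects all objects that have a label with key="name" and value "master" or "slave"</p> <p>name notin (frontend): selects all objects that have a label with key="name" and a value other than "frontend", plus all objects that have no "name" label at all</p> </li>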

</ul>

<p>You can combine several selector conditions by separating them with commas (","). An object matches only if it satisfies all of them. For example:</p>

<p> name=slave,env!=production</p>

<p> name notin (frontend),env!=production</p>
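<p>Controllers such as Deployment write the same two kinds of selector in YAML. They use matchLabels for equality-based rules and matchExpressions for set-based ones. The fragment below sketches only the spec.selector field, and its label keys and values are assumptions. As with comma-separated conditions on the command line, every listed condition must hold at the same time.</p>

<pre><code class="prism language-yaml">selector:
  matchLabels:              # equality-based: env = test
    env: test
  matchExpressions:         # set-based conditions
  - key: version            # version in (1.0, 2.0)
    operator: In
    values: ["1.0", "2.0"]
  - key: tier               # tier notin (frontend)
    operator: NotIn
    values: ["frontend"]
  - key: release            # hypothetical key; the object must carry a "release" label
    operator: Exists
</code></pre>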

<p><strong>Command method</strong></p>

<pre><code class="prism language-powershell"><span class="token comment"># Add a label to a Pod</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl label pod nginx-pod version=1.0 -n dev</span>

pod<span class="token operator">/</span>nginx<span class="token operator">-</span>pod labeled

<span class="token comment"># Update a Pod's label (--overwrite is required to change an existing value)</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl label pod nginx-pod version=2.0 -n dev --overwrite</span>

pod<span class="token operator">/</span>nginx<span class="token operator">-</span>pod labeled

<span class="token comment"># Show labels</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pod nginx-pod -n dev --show-labels</span>

NAME READY STATUS RESTARTS AGE LABELS

nginx<span class="token operator">-</span>pod 1<span class="token operator">/</span>1 Running 0 10m version=2<span class="token punctuation">.</span>0

<span class="token comment"># Filter by label</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pod -n dev -l version=2.0 --show-labels</span>

NAME READY STATUS RESTARTS AGE LABELS

nginx<span class="token operator">-</span>pod 1<span class="token operator">/</span>1 Running 0 17m version=2<span class="token punctuation">.</span>0

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pod -n dev -l version!=2.0 --show-labels</span>

No resources found in dev namespace<span class="token punctuation">.</span>

<span class="token comment"># Remove a label (append "-" to the key)</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl label pod nginx-pod version- -n dev</span>

pod<span class="token operator">/</span>nginx<span class="token operator">-</span>pod labeled

</code></pre>

<p><strong>Configuration method</strong></p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: dev
  labels:
    version: "3.0"
    env: "test"
spec:
  containers:
  - image: nginx:1.17.1
    name: pod
    ports:
    - name: nginx-port
      containerPort: 80
      protocol: TCP

</code></pre>

<p>Then apply the update with: kubectl apply -f pod-nginx.yaml</p>

<h2>Deployment</h2>

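<p> In Kubernetes the Pod is the smallest unit of control, yet Kubernetes rarely manages Pods directly. This is usually done through Pod controllers. A Pod controller manages Pods and keeps them in the desired state. When a Pod fails, the controller tries to restart or recreate it.</p>

<p> Kubernetes offers many kinds of Pod controllers. This chapter covers only one of them: Deployment.</p>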

<p><a href="http://img.e-com-net.com/image/info8/96577169df40459d9b404375e35cd1ab.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/96577169df40459d9b404375e35cd1ab.jpg" alt="Kubernetes Day 2, figure 3" width="650" height="355" style="border:1px solid black;"></a></p>

<p><strong>Command operations</strong></p>

<pre><code class="prism language-powershell"><span class="token comment"># Command format: kubectl run DEPLOYMENT_NAME [options]</span>

<span class="token comment"># --image      the Pod's image</span>

<span class="token comment"># --port       the port to expose</span>

<span class="token comment"># --replicas   the number of Pods to create (accepted by kubectl run only in older kubectl versions such as the one used here)</span>

<span class="token comment"># --namespace  the namespace</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl run nginx --image=nginx:1.17.1 --port=80 --replicas=3 -n dev</span>

deployment<span class="token punctuation">.</span>apps<span class="token operator">/</span>nginx created

<span class="token comment"># View the Pods that were created</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pods -n dev</span>

NAME READY STATUS RESTARTS AGE

nginx<span class="token operator">-</span>5ff7956ff6<span class="token operator">-</span>6k8cb 1<span class="token operator">/</span>1 Running 0 19s

nginx<span class="token operator">-</span>5ff7956ff6<span class="token operator">-</span>jxfjt 1<span class="token operator">/</span>1 Running 0 19s

nginx<span class="token operator">-</span>5ff7956ff6<span class="token operator">-</span>v6jqw 1<span class="token operator">/</span>1 Running 0 19s

<span class="token comment"># View Deployment information</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get deploy -n dev</span>

NAME READY UP<span class="token operator">-</span>TO<span class="token operator">-</span>DATE AVAILABLE AGE

nginx 3<span class="token operator">/</span>3 3 3 2m42s

<span class="token comment"># UP-TO-DATE: number of replicas that have been updated to match the desired state</span>

<span class="token comment"># AVAILABLE: number of replicas that are available</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get deploy -n dev -o wide</span>

NAME READY UP<span class="token operator">-</span>TO<span class="token operator">-</span>DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx 3<span class="token operator">/</span>3 3 3 2m51s nginx nginx:1<span class="token punctuation">.</span>17<span class="token punctuation">.</span>1 run=nginx

<span class="token comment"># View Deployment details</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl describe deploy nginx -n dev</span>

Name: nginx

Namespace: dev

CreationTimestamp: Wed<span class="token punctuation">,</span> 08 Apr 2020 11:14:14 <span class="token operator">+</span>0800

Labels: run=nginx

Annotations: deployment<span class="token punctuation">.</span>kubernetes<span class="token punctuation">.</span>io<span class="token operator">/</span>revision: 1

Selector: run=nginx

Replicas: 3 desired <span class="token punctuation">|</span> 3 updated <span class="token punctuation">|</span> 3 total <span class="token punctuation">|</span> 3 available <span class="token punctuation">|</span> 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25<span class="token operator">%</span> max unavailable<span class="token punctuation">,</span> 25<span class="token operator">%</span> max surge

Pod Template:

Labels: run=nginx

Containers:

nginx:

Image: nginx:1<span class="token punctuation">.</span>17<span class="token punctuation">.</span>1

Port: 80<span class="token operator">/</span>TCP

Host Port: 0<span class="token operator">/</span>TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

<span class="token function">Type</span> Status Reason

<span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span><span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span><span class="token operator">-</span>

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx<span class="token operator">-</span>5ff7956ff6 <span class="token punctuation">(</span>3<span class="token operator">/</span>3 replicas created<span class="token punctuation">)</span>

Events:

<span class="token function">Type</span> Reason Age <span class="token keyword">From</span> Message

<span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span><span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span>-<span class="token operator">-</span>

Normal ScalingReplicaSet 5m43s deployment<span class="token operator">-</span>controller Scaled up replicaset nginx<span class="token operator">-</span>5ff7956ff6 to 3

<span class="token comment"># Delete the Deployment</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl delete deploy nginx -n dev</span>

deployment<span class="token punctuation">.</span>apps <span class="token string">"nginx"</span> deleted

</code></pre>

<p><strong>Configuration operations</strong></p>

<p>Create a file named deploy-nginx.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - image: nginx:1.17.1
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP

</code></pre>

<p>Then run the corresponding create and delete commands:</p>

<p> Create: kubectl create -f deploy-nginx.yaml</p>

<p> Delete: kubectl delete -f deploy-nginx.yaml</p>
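<p>The describe output earlier showed StrategyType: RollingUpdate with "25% max unavailable, 25% max surge". These are the defaults. If you wrote them out explicitly in deploy-nginx.yaml, the spec section would look roughly like the fragment below. It is a sketch of the spec section only, with the values taken from that output.</p>

<pre><code class="prism language-yaml">spec:
  replicas: 3
  strategy:
    type: RollingUpdate       # replace Pods gradually (the alternative, Recreate, stops all old Pods first)
    rollingUpdate:
      maxUnavailable: 25%     # at most 25% of the desired Pods may be unavailable during an update
      maxSurge: 25%           # at most 25% extra Pods may be created above the desired count
</code></pre>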

<h2>Service</h2>

<p>In the previous section, a Deployment created a group of Pods that together provide a highly available service.</p>

<p>Each Pod is assigned its own Pod IP, but two problems remain:</p>

<ul>

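<li>The Pod IP changes whenever the Pod is recreated</li>

<li>The Pod IP is a virtual IP that is visible only inside the cluster and cannot be reached from outside</li>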

</ul>

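<p>Both problems make the service hard to access, so Kubernetes provides the Service resource to solve them.</p>

<p>A Service can be seen as the <strong>external access interface</strong> for a group of Pods of the same kind. With a Service, applications get service discovery and load balancing with little effort.</p>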

<p><a href="http://img.e-com-net.com/image/info8/360d9329054f426caf4b0ddd6d0cecca.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/360d9329054f426caf4b0ddd6d0cecca.jpg" alt="Kubernetes Day 2, figure 4" width="650" height="301" style="border:1px solid black;"></a></p>

<p><strong>Operation 1: Create a Service that is reachable inside the cluster</strong></p>

<pre><code class="prism language-powershell"><span class="token comment"># Expose the Deployment as a Service</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl expose deploy nginx --name=svc-nginx1 --type=ClusterIP --port=80 --target-port=80 -n dev</span>

service<span class="token operator">/</span>svc<span class="token operator">-</span>nginx1 exposed

<span class="token comment"># View the Service</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get svc svc-nginx1 -n dev -o wide</span>

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE SELECTOR

svc<span class="token operator">-</span>nginx1 ClusterIP 10<span class="token punctuation">.</span>109<span class="token punctuation">.</span>179<span class="token punctuation">.</span>231 <none> 80<span class="token operator">/</span>TCP 3m51s run=nginx

<span class="token comment"># A CLUSTER-IP has been assigned. This is the Service's IP, and it does not change for the lifetime of the Service</span>

<span class="token comment"># You can use this IP to reach the Pods behind the Service</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># curl 10.109.179.231:80</span>

<<span class="token operator">!</span>DOCTYPE html>

<html>

<head>

<title>Welcome to nginx<span class="token operator">!</span><<span class="token operator">/</span>title>

<<span class="token operator">/</span>head>

<body>

<h1>Welcome to nginx<span class="token operator">!</span><<span class="token operator">/</span>h1>

<span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span>

<<span class="token operator">/</span>body>

<<span class="token operator">/</span>html>

</code></pre>

<p><strong>Operation 2: Create a Service that is also reachable from outside the cluster</strong></p>

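<pre><code class="prism language-powershell"><span class="token comment"># The Service created above has type ClusterIP, and its IP address is reachable only from inside the cluster</span>

<span class="token comment"># To create a Service that is also reachable from outside, set the type to NodePort</span>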

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl expose deploy nginx --name=svc-nginx2 --type=NodePort --port=80 --target-port=80 -n dev</span>

service<span class="token operator">/</span>svc<span class="token operator">-</span>nginx2 exposed

<span class="token comment"># Listing it now shows a Service of type NodePort with a port pair (80:31928/TCP)</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get svc svc-nginx2 -n dev -o wide</span>

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE SELECTOR

svc<span class="token operator">-</span>nginx2 NodePort 10<span class="token punctuation">.</span>100<span class="token punctuation">.</span>94<span class="token punctuation">.</span>0 <none> 80:31928<span class="token operator">/</span>TCP 9s run=nginx

<span class="token comment"># Hosts outside the cluster can now reach the service at NODE_IP:31928</span>

<span class="token comment"># For example, open the following address in a browser on your own computer</span>

http:<span class="token operator">/</span><span class="token operator">/</span>192<span class="token punctuation">.</span>168<span class="token punctuation">.</span>109<span class="token punctuation">.</span>100:31928<span class="token operator">/</span>

</code></pre>
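<p>The NodePort Service created with kubectl expose above can also be written declaratively. The sketch below reuses the same run=nginx selector. The name svc-nginx-np and the fixed nodePort 30080 are hypothetical. The nodePort field is optional. If you omit it, Kubernetes picks a free port from the node-port range (30000-32767 by default), which is how 31928 was assigned above.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Service
metadata:
  name: svc-nginx-np        # hypothetical name
  namespace: dev
spec:
  type: NodePort
  selector:
    run: nginx              # route traffic to the Pods created by the nginx Deployment
  ports:
  - port: 80                # Service port inside the cluster
    targetPort: 80          # container port on the Pods
    nodePort: 30080         # hypothetical; must lie inside the node-port range
    protocol: TCP
</code></pre>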

<p><strong>Delete a Service</strong></p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl delete svc svc-nginx1 -n dev</span>

service <span class="token string">"svc-nginx1"</span> deleted

</code></pre>

<p><strong>Configuration method</strong></p>

<p>Create a file named svc-nginx.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Service
metadata:
  name: svc-nginx
  namespace: dev
spec:
  clusterIP: 10.109.179.231   # optional; omit to let Kubernetes assign one. A fixed IP must lie in the service CIDR and must not already be in use
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: nginx
  type: ClusterIP

</code></pre>

<p>Then run the corresponding create and delete commands:</p>

<p> Create: kubectl create -f svc-nginx.yaml</p>

<p> Delete: kubectl delete -f svc-nginx.yaml</p>

<blockquote>

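<p><strong>Summary</strong></p>

<p> You now know the basic operations on Namespace, Pod, Deployment, and Service resources. They are enough to deploy and access a simple service in a Kubernetes cluster. To use Kubernetes well, however, you need to study the details and inner workings of each of these resources in depth.</p>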

</blockquote>

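<h1>Chapter 5 Pods in Detail</h1>

<p>This chapter covers the various configuration options (YAML) of the Pod resource and how Pods work.</p>

<h2>Pod Overview</h2>

<h3>Pod Structure</h3>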

<p><a href="http://img.e-com-net.com/image/info8/aa866086f66e48739fc208fc09f773e9.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/aa866086f66e48739fc208fc09f773e9.jpg" alt="Kubernetes Day 2, figure 5" width="633" height="464" style="border:1px solid black;"></a></p>

<p>Each Pod contains one or more containers, which fall into two categories:</p>

<ul>

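<li> <p>Containers that run user programs; a Pod can have any number of them</p> </li>

<li> <p>The Pause container, a <strong>root container</strong> that every Pod has. It serves two purposes:</p>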

<ul>

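<li> <p>It serves as the basis for evaluating the health of the whole Pod</p> </li>

<li> <p>The IP address is set on the root container, and all other containers share this IP (the Pod IP), which enables network communication inside the Pod</p> <pre><code class="prism language-md">This covers communication within a Pod. Communication between Pods uses virtual layer-2 network technology; the current environment uses Flannel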

</code></pre> </li>

</ul> </li>

</ul>

<h3>Pod Definition</h3>

<p>The Pod resource manifest looks like this:</p>

<pre><code class="prism language-yaml">apiVersion: v1          # required, API version, e.g. v1
kind: Pod               # required, resource type, e.g. Pod
metadata:               # required, metadata
  name: string          # required, Pod name
  namespace: string     # namespace the Pod belongs to; defaults to "default"
  labels:               # custom labels, a map of key: value pairs
    key: value
spec:                   # required, detailed definition of the containers in the Pod
  containers:           # required, list of containers in the Pod
  - name: string        # required, container name
    image: string       # required, container image name
    imagePullPolicy: [ Always|Never|IfNotPresent ]  # image pull policy
    command: [string]   # container start command list; if omitted, the command packaged in the image is used
    args: [string]      # argument list for the start command
    workingDir: string  # container working directory
    volumeMounts:       # volumes mounted inside the container
    - name: string      # name of a shared volume defined in the Pod; must match a name under volumes[]
      mountPath: string # absolute mount path inside the container; should be shorter than 512 characters
      readOnly: boolean # whether the mount is read-only
    ports:              # list of ports to expose
    - name: string      # port name
      containerPort: int  # port the container listens on
      hostPort: int     # port opened on the host; optional, no host port is opened if omitted
      protocol: string  # port protocol, TCP or UDP; defaults to TCP
    env:                # environment variables set before the container starts
    - name: string      # variable name
      value: string     # variable value
    resources:          # resource limits and requests
      limits:           # resource limits
        cpu: string     # CPU limit in cores; enforced through the CFS quota (docker run --cpu-quota)

<span class="token key atrule">memory</span><span class="token punctuation">:</span> string <span class="token comment">#内存限制,单位可以为Mib/Gib,将用于docker run --memory参数</span>

<span class="token key atrule">requests</span><span class="token punctuation">:</span> <span class="token comment">#资源请求的设置</span>

<span class="token key atrule">cpu</span><span class="token punctuation">:</span> string <span class="token comment">#Cpu请求,容器启动的初始可用数量</span>

<span class="token key atrule">memory</span><span class="token punctuation">:</span> string <span class="token comment">#内存请求,容器启动的初始可用数量</span>

<span class="token key atrule">lifecycle</span><span class="token punctuation">:</span> <span class="token comment">#生命周期钩子</span>

<span class="token key atrule">postStart</span><span class="token punctuation">:</span> <span class="token comment">#容器启动后立即执行此钩子,如果执行失败,会根据重启策略进行重启</span>

<span class="token key atrule">preStop</span><span class="token punctuation">:</span> <span class="token comment">#容器终止前执行此钩子,无论结果如何,容器都会终止</span>

<span class="token key atrule">livenessProbe</span><span class="token punctuation">:</span> <span class="token comment">#对Pod内各容器健康检查的设置,当探测无响应几次后将自动重启该容器</span>

<span class="token key atrule">exec</span><span class="token punctuation">:</span> <span class="token comment">#对Pod容器内检查方式设置为exec方式</span>

<span class="token key atrule">command</span><span class="token punctuation">:</span> <span class="token punctuation">[</span>string<span class="token punctuation">]</span> <span class="token comment">#exec方式需要制定的命令或脚本</span>

<span class="token key atrule">httpGet</span><span class="token punctuation">:</span> <span class="token comment">#对Pod内个容器健康检查方法设置为HttpGet,需要制定Path、port</span>

<span class="token key atrule">path</span><span class="token punctuation">:</span> string

<span class="token key atrule">port</span><span class="token punctuation">:</span> number

<span class="token key atrule">host</span><span class="token punctuation">:</span> string

<span class="token key atrule">scheme</span><span class="token punctuation">:</span> string

<span class="token key atrule">HttpHeaders</span><span class="token punctuation">:</span>

<span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> string

<span class="token key atrule">value</span><span class="token punctuation">:</span> string

<span class="token key atrule">tcpSocket</span><span class="token punctuation">:</span> <span class="token comment">#对Pod内个容器健康检查方式设置为tcpSocket方式</span>

<span class="token key atrule">port</span><span class="token punctuation">:</span> number

<span class="token key atrule">initialDelaySeconds</span><span class="token punctuation">:</span> <span class="token number">0</span> <span class="token comment">#容器启动完成后首次探测的时间,单位为秒</span>

<span class="token key atrule">timeoutSeconds</span><span class="token punctuation">:</span> 0 <span class="token comment">#对容器健康检查探测等待响应的超时时间,单位秒,默认1秒</span>

<span class="token key atrule">periodSeconds</span><span class="token punctuation">:</span> 0 <span class="token comment">#对容器监控检查的定期探测时间设置,单位秒,默认10秒一次</span>

<span class="token key atrule">successThreshold</span><span class="token punctuation">:</span> <span class="token number">0</span>

<span class="token key atrule">failureThreshold</span><span class="token punctuation">:</span> <span class="token number">0</span>

<span class="token key atrule">securityContext</span><span class="token punctuation">:</span>

<span class="token key atrule">privileged</span><span class="token punctuation">:</span> <span class="token boolean important">false</span>

<span class="token key atrule">restartPolicy</span><span class="token punctuation">:</span> <span class="token punctuation">[</span>Always <span class="token punctuation">|</span> Never <span class="token punctuation">|</span> OnFailure<span class="token punctuation">]</span> <span class="token comment">#Pod的重启策略</span>

<span class="token key atrule">nodeName</span><span class="token punctuation">:</span> <string<span class="token punctuation">></span> <span class="token comment">#设置NodeName表示将该Pod调度到指定到名称的node节点上</span>

<span class="token key atrule">nodeSelector</span><span class="token punctuation">:</span> obeject <span class="token comment">#设置NodeSelector表示将该Pod调度到包含这个label的node上</span>

<span class="token key atrule">imagePullSecrets</span><span class="token punctuation">:</span> <span class="token comment">#Pull镜像时使用的secret名称,以key:secretkey格式指定</span>

<span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> string

<span class="token key atrule">hostNetwork</span><span class="token punctuation">:</span> <span class="token boolean important">false</span> <span class="token comment">#是否使用主机网络模式,默认为false,如果设置为true,表示使用宿主机网络</span>

<span class="token key atrule">volumes</span><span class="token punctuation">:</span> <span class="token comment">#在该pod上定义共享存储卷列表</span>

<span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> string <span class="token comment">#共享存储卷名称 (volumes类型有很多种)</span>

<span class="token key atrule">emptyDir</span><span class="token punctuation">:</span> <span class="token punctuation">{</span><span class="token punctuation">}</span> <span class="token comment">#类型为emtyDir的存储卷,与Pod同生命周期的一个临时目录。为空值</span>

<span class="token key atrule">hostPath</span><span class="token punctuation">:</span> string <span class="token comment">#类型为hostPath的存储卷,表示挂载Pod所在宿主机的目录</span>

<span class="token key atrule">path</span><span class="token punctuation">:</span> string <span class="token comment">#Pod所在宿主机的目录,将被用于同期中mount的目录</span>

<span class="token key atrule">secret</span><span class="token punctuation">:</span> <span class="token comment">#类型为secret的存储卷,挂载集群与定义的secret对象到容器内部</span>

<span class="token key atrule">scretname</span><span class="token punctuation">:</span> string

<span class="token key atrule">items</span><span class="token punctuation">:</span>

<span class="token punctuation">-</span> <span class="token key atrule">key</span><span class="token punctuation">:</span> string

<span class="token key atrule">path</span><span class="token punctuation">:</span> string

<span class="token key atrule">configMap</span><span class="token punctuation">:</span> <span class="token comment">#类型为configMap的存储卷,挂载预定义的configMap对象到容器内部</span>

<span class="token key atrule">name</span><span class="token punctuation">:</span> string

<span class="token key atrule">items</span><span class="token punctuation">:</span>

<span class="token punctuation">-</span> <span class="token key atrule">key</span><span class="token punctuation">:</span> string

<span class="token key atrule">path</span><span class="token punctuation">:</span> string

</code></pre>

<pre><code class="prism language-powershell"><span class="token comment"># Tip:</span>

<span class="token comment"># kubectl can list the configurable fields of every resource type</span>

<span class="token comment"># kubectl explain RESOURCE          shows the top-level fields of a resource type</span>

<span class="token comment"># kubectl explain RESOURCE.FIELD    shows the sub-fields of a field</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl explain pod</span>

KIND: Pod

VERSION: v1

FIELDS:

apiVersion <string>

kind <string>

metadata <Object>

spec <Object>

status <Object>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl explain pod.metadata</span>

KIND: Pod

VERSION: v1

RESOURCE: metadata <Object>

FIELDS:

annotations <map<span class="token namespace">[string]</span>string>

clusterName <string>

creationTimestamp <string>

deletionGracePeriodSeconds <integer>

deletionTimestamp <string>

finalizers <<span class="token punctuation">[</span><span class="token punctuation">]</span>string>

generateName <string>

generation <integer>

labels <map<span class="token namespace">[string]</span>string>

managedFields <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object>

name <string>

namespace <string>

ownerReferences <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object>

resourceVersion <string>

selfLink <string>

uid <string>

</code></pre>

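<p>In Kubernetes, nearly all resources share the same top-level fields, which fall into five parts:</p>

<ul>

<li> <p>apiVersion <string> Version, defined internally by Kubernetes; the value must be listed by kubectl api-versions</p> </li>

<li> <p>kind <string> Resource type, defined internally by Kubernetes; the type must be listed by kubectl api-resources</p> </li>

<li> <p>metadata <Object> Metadata, mainly used to identify and describe the resource; common fields are name, namespace and labels</p> </li>

<li> <p>spec <Object> Specification, the most important part of the configuration: a detailed description of how the resource is configured</p> </li>

<li> <p>status <Object> Status information; it is not written by hand but generated automatically by Kubernetes</p> </li>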

</ul>
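<p>As a minimal sketch of how the five parts fit together (the Pod name <code>skeleton-demo</code> and the label <code>app: demo</code> are illustrative, not taken from the text above), a manifest only writes the first four parts; <code>status</code> is left out because Kubernetes fills it in.</p>

<pre><code class="prism language-yaml">apiVersion: v1          # must be listed by kubectl api-versions
kind: Pod               # must be listed by kubectl api-resources
metadata:               # identification: name, namespace, labels
  name: skeleton-demo   # hypothetical name
  namespace: dev
  labels:
    app: demo
spec:                   # the desired configuration
  containers:
  - name: nginx
    image: nginx:1.17.1
# no status section: Kubernetes adds it (visible with kubectl get pod skeleton-demo -n dev -o yaml)
</code></pre>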

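<p>Of these fields, spec is the focus of what follows. Its commonly used sub-fields are:</p>

<ul>

<li>containers <[]Object> List of containers, defining each container in detail</li>

<li>nodeName <string> Schedules the Pod onto the node with this name</li>

<li>nodeSelector <map[string]string> Schedules the Pod onto nodes that carry all of these labels</li>

<li>hostNetwork <boolean> Whether to use the host network; defaults to false, and true means the Pod uses the host's network</li>

<li>volumes <[]Object> Volumes, defining the storage mounted into the Pod</li>

<li>restartPolicy <string> Restart policy: how the Pod handles container failures</li>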

</ul>
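<p>A sketch that combines several of these spec fields in one Pod. The Pod name <code>pod-spec-demo</code> and the volume name <code>cache</code> are hypothetical, and the selector relies on the well-known <code>kubernetes.io/os</code> node label, which the kubelet normally sets. <code>nodeName</code> is left out on purpose: setting it pins the Pod to one named node and bypasses the scheduler.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-spec-demo            # hypothetical name
  namespace: dev
spec:
  restartPolicy: Always          # the default; alternatives are OnFailure and Never
  hostNetwork: false             # the default; true would use the node's network
  nodeSelector:                  # only nodes carrying all of these labels qualify
    kubernetes.io/os: linux
  containers:
  - name: nginx
    image: nginx:1.17.1
    volumeMounts:
    - name: cache                # must match a volume name under spec.volumes
      mountPath: /cache
  volumes:
  - name: cache
    emptyDir: {}                 # temporary directory that lives as long as the Pod
</code></pre>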

<h2>Pod Configuration</h2>

<p>This section examines the <code>pod.spec.containers</code> field, the most important part of a Pod's configuration.</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl explain pod.spec.containers</span>

KIND: Pod

VERSION: v1

RESOURCE: containers <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object> <span class="token comment"># an array: a Pod can have several containers</span>

FIELDS:

name <string> <span class="token comment"># container name</span>

image <string> <span class="token comment"># address of the image the container uses</span>

imagePullPolicy <string> <span class="token comment"># image pull policy</span>

command <<span class="token punctuation">[</span><span class="token punctuation">]</span>string> <span class="token comment"># startup command list; if omitted, the image's own startup command is used</span>

args <<span class="token punctuation">[</span><span class="token punctuation">]</span>string> <span class="token comment"># arguments for the startup command</span>

env <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object> <span class="token comment"># environment variables of the container</span>

ports <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object> <span class="token comment"># list of ports the container exposes</span>

resources <Object> <span class="token comment"># resource limits and requests</span>

</code></pre>

<h3>Basic Configuration</h3>

<p>Create a file named pod-base.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-base
  namespace: dev
  labels:
    user: heima
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
  - name: busybox
    image: busybox:1.30

</code></pre>

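<p>The manifest above defines a simple Pod with two containers:</p>

<ul>

<li>nginx: created from the nginx image, version 1.17.1 (nginx is a lightweight web server)</li>

<li>busybox: created from the busybox image, version 1.30 (busybox is a small collection of Linux command-line tools)</li>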

</ul>

<pre><code class="prism language-powershell"><span class="token comment"># Create the Pod</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl apply -f pod-base.yaml</span>

pod<span class="token operator">/</span>pod<span class="token operator">-</span>base created

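<span class="token comment"># Check the Pod status</span>

<span class="token comment"># READY 1/2 : the Pod has 2 containers; 1 is ready and 1 is not</span>

<span class="token comment"># RESTARTS : restart count; one container keeps failing, so Kubernetes keeps restarting it to try to recover</span>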

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl get pod -n dev</span>

NAME READY STATUS RESTARTS AGE

pod<span class="token operator">-</span>base 1<span class="token operator">/</span>2 Running 4 95s

<span class="token comment"># describe shows the details of the Pod</span>

<span class="token comment"># A basic Pod is now running, although it still has a problem</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl describe pod pod-base -n dev</span>

</code></pre>

<h3>Image Pull Policy</h3>

<p>Create a file named pod-imagepullpolicy.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-imagepullpolicy
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    imagePullPolicy: Always # sets the image pull policy
  - name: busybox
    image: busybox:1.30

</code></pre>

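<p>imagePullPolicy sets the image pull policy. Kubernetes supports three policies:</p>

<ul>

<li>Always: always pull the image from the remote registry</li>

<li>IfNotPresent: use the local image if it exists, otherwise pull it from the remote registry</li>

<li>Never: only use the local image and never pull; if the image is not present locally, the container fails to start with an error</li>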

</ul>

<blockquote>

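<p>Defaults:</p>

<p> If the image tag is a specific version, the default policy is IfNotPresent</p>

<p> If the image tag is latest (or no tag is given, which implies latest), the default policy is Always</p>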

</blockquote>
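<p>To illustrate the defaults above, here is a sketch of a Pod (hypothetical name <code>pod-pullpolicy-defaults</code>) that leaves <code>imagePullPolicy</code> unset on every container; each comment gives the policy you would expect Kubernetes to fill in. After creating it, <code>kubectl get pod pod-pullpolicy-defaults -n dev -o yaml</code> should show the defaulted values.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-pullpolicy-defaults   # hypothetical name
  namespace: dev
spec:
  containers:
  - name: pinned-tag
    image: nginx:1.17.1           # specific version tag: IfNotPresent
  - name: latest-tag
    image: busybox:latest         # latest tag: Always
    command: ["/bin/sh", "-c", "sleep 3600"]
  - name: no-tag
    image: busybox                # no tag implies latest: Always
    command: ["/bin/sh", "-c", "sleep 3600"]
</code></pre>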

<pre><code class="prism language-powershell"><span class="token comment"># Create the Pod</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl create -f pod-imagepullpolicy.yaml</span>

pod<span class="token operator">/</span>pod<span class="token operator">-</span>imagepullpolicy created

<span class="token comment"># View the Pod details</span>

<span class="token comment"># The nginx container clearly goes through a Pulling image "nginx:1.17.1" step</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl describe pod pod-imagepullpolicy -n dev</span>

<span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span>

Events:

<span class="token function">Type</span> Reason Age <span class="token keyword">From</span> Message

<span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span><span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">-</span> <span class="token operator">--</span>-<span class="token operator">--</span>-<span class="token operator">-</span>

Normal Scheduled <unknown> default<span class="token operator">-</span>scheduler Successfully assigned dev<span class="token operator">/</span>pod<span class="token operator">-</span>imagePullPolicy to node1

Normal Pulling 32s kubelet<span class="token punctuation">,</span> node1 Pulling image <span class="token string">"nginx:1.17.1"</span>

Normal Pulled 26s kubelet<span class="token punctuation">,</span> node1 Successfully pulled image <span class="token string">"nginx:1.17.1"</span>

Normal Created 26s kubelet<span class="token punctuation">,</span> node1 Created container nginx

Normal Started 25s kubelet<span class="token punctuation">,</span> node1 Started container nginx

Normal Pulled 7s <span class="token punctuation">(</span>x3 over 25s<span class="token punctuation">)</span> kubelet<span class="token punctuation">,</span> node1 Container image <span class="token string">"busybox:1.30"</span> already present on machine

Normal Created 7s <span class="token punctuation">(</span>x3 over 25s<span class="token punctuation">)</span> kubelet<span class="token punctuation">,</span> node1 Created container busybox

Normal Started 7s <span class="token punctuation">(</span>x3 over 25s<span class="token punctuation">)</span> kubelet<span class="token punctuation">,</span> node1 Started container busybox

</code></pre>

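<h3>Startup Command</h3>

<p> One problem from the earlier examples is still unsolved: the busybox container never runs successfully. What causes this failure?</p>

<p> busybox is not a long-running program but a collection of tools. Its default command is a shell, which exits immediately because no input is attached, so the container stops right after it starts and Kubernetes keeps restarting it. The fix is to give it a command that keeps running, which is what the command field is for.</p>

<p>Create a file named pod-command.yaml with the following content:</p>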

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-command
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","touch /tmp/hello.txt;while true;do /bin/echo $(date +%T) >> /tmp/hello.txt; sleep 3; done;"]

</code></pre>

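<p>command sets the command a container runs when it starts; it replaces the image's default startup command.</p>

<blockquote>

<p>What the command above does:</p>

<p> "/bin/sh","-c": run the following string with sh</p>

<p> touch /tmp/hello.txt; create the file /tmp/hello.txt</p>

<p> while true;do /bin/echo $(date +%T) >> /tmp/hello.txt; sleep 3; done; append the current time to the file every 3 seconds</p>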

</blockquote>
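<p>The same busybox command can also be written as a YAML block list, which stays readable as the script grows. This is an equivalent sketch of the busybox entry from pod-command.yaml only; it belongs under <code>spec.containers</code>.</p>

<pre><code class="prism language-yaml">- name: busybox
  image: busybox:1.30
  command:
  - /bin/sh
  - -c
  - |                       # literal block: newlines separate the shell commands
    touch /tmp/hello.txt
    while true; do
      /bin/echo $(date +%T) >> /tmp/hello.txt
      sleep 3
    done
</code></pre>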

<pre><code class="prism language-powershell"><span class="token comment"># Create the Pod</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl create -f pod-command.yaml</span>

pod<span class="token operator">/</span>pod<span class="token operator">-</span>command created

<span class="token comment"># Check the Pod status</span>

<span class="token comment"># Both containers are now running normally</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl get pods pod-command -n dev</span>

NAME READY STATUS RESTARTS AGE

pod<span class="token operator">-</span>command 2<span class="token operator">/</span>2 Running 0 2s

<span class="token comment"># Enter the busybox container in the Pod and view the file</span>

<span class="token comment"># Another useful command: kubectl exec POD_NAME -n NAMESPACE -it -c CONTAINER_NAME -- /bin/sh runs a shell inside the container</span>

<span class="token comment"># With it you can get inside a container and work there</span>

<span class="token comment"># For example, to view the contents of the txt file</span>

<span class="token namespace">[root@master pod]</span><span class="token comment"># kubectl exec pod-command -n dev -it -c busybox -- /bin/sh</span>

<span class="token operator">/</span> <span class="token comment"># tail -f /tmp/hello.txt</span>

13:35:35

13:35:38

13:35:41

</code></pre>

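<pre><code class="prism language-md">Note:

The example above shows that command alone can both start a program and pass it arguments, so why is there also an args field for arguments? This comes from Docker: in Kubernetes, command overrides the ENTRYPOINT of the image's Dockerfile, and args overrides its CMD.

1 If neither command nor args is set, the Dockerfile configuration is used.

2 If command is set but args is not, the Dockerfile defaults are ignored and the given command is run.

3 If command is not set but args is, the ENTRYPOINT from the Dockerfile is run with the given args as its arguments.

4 If both command and args are set, the Dockerfile configuration is ignored and command is run with args appended.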

</code></pre>
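<p>As a sketch of rule 4, the busybox container from pod-command.yaml can split its startup line between the two fields: <code>command</code> takes the place of the image's ENTRYPOINT and <code>args</code> the place of its CMD, so the container runs <code>/bin/sh -c</code> followed by the script. Only the container entry is shown.</p>

<pre><code class="prism language-yaml">- name: busybox
  image: busybox:1.30
  command: ["/bin/sh", "-c"]   # replaces the image's ENTRYPOINT
  args: ["touch /tmp/hello.txt; while true; do /bin/echo $(date +%T) >> /tmp/hello.txt; sleep 3; done"]   # replaces the image's CMD
</code></pre>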

<h3>Environment Variables</h3>

<p>Create a file named pod-env.yaml with the following content:</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-env
  namespace: dev
spec:
  containers:
  - name: busybox
    image: busybox:1.30
    command: ["/bin/sh","-c","while true;do /bin/echo $(date +%T);sleep 60; done;"]
    env: # list of environment variables
    - name: "username"
      value: "admin"
    - name: "password"
      value: "123456"

</code></pre>

<p>env sets environment variables for the containers in a Pod.</p>
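<p>One detail worth knowing: every <code>value</code> must be a string. In the sketch below (the variable names <code>port</code> and <code>debug</code> are illustrative), numbers and booleans are quoted; left unquoted, YAML parses them as a number or a boolean and the manifest is rejected.</p>

<pre><code class="prism language-yaml">- name: busybox
  image: busybox:1.30
  command: ["/bin/sh", "-c", "while true; do /bin/echo port=$port debug=$debug; sleep 60; done"]
  env:
  - name: port
    value: "8080"    # quoted; a bare 8080 would be parsed as a number
  - name: debug
    value: "true"    # quoted; a bare true would be parsed as a boolean
</code></pre>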

<pre><code class="prism language-powershell"><span class="token comment"># Create the Pod</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl create -f pod-env.yaml</span>

pod<span class="token operator">/</span>pod<span class="token operator">-</span>env created

<span class="token comment"># Enter the container and print the environment variables</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl exec pod-env -n dev -c busybox -it -- /bin/sh</span>

<span class="token operator">/</span> <span class="token comment"># echo $username</span>

admin

<span class="token operator">/</span> <span class="token comment"># echo $password</span>

123456

</code></pre>

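<p>This approach is not recommended, especially for values such as passwords; it is better to store this configuration separately, an approach introduced later.</p>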

<h3>Port Settings</h3>

<p>This section covers container port settings, i.e. the ports field of containers.</p>

<p>First, look at the sub-fields that ports supports:</p>

<pre><code class="prism language-powershell"><span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl explain pod.spec.containers.ports</span>

KIND: Pod

VERSION: v1

RESOURCE: ports <<span class="token punctuation">[</span><span class="token punctuation">]</span>Object>

FIELDS:

name <string> <span class="token comment"># port name; if set, it must be unique within the Pod</span>

containerPort <integer> <span class="token comment"># port the container listens on (1-65535)</span>

hostPort <integer> <span class="token comment"># port to expose on the host; if set, only one replica of the container can run per host (usually omitted)</span>

hostIP <string> <span class="token comment"># host IP to bind the external port to (usually omitted)</span>

protocol <string> <span class="token comment"># port protocol: UDP, TCP or SCTP; defaults to "TCP"</span>

</code></pre>
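<p>A sketch of a container declaring more than one port (the port names <code>http</code> and <code>https</code> are illustrative). Each name must be unique within the Pod. The port list is mainly informational: declaring 443 here does not make nginx listen on it (the stock nginx image only listens on 80), and leaving a port out does not block it.</p>

<pre><code class="prism language-yaml">- name: nginx
  image: nginx:1.17.1
  ports:
  - name: http          # port names must be unique within the Pod
    containerPort: 80
    protocol: TCP
  - name: https         # declared only; nginx must also be configured to listen on 443
    containerPort: 443
    protocol: TCP
</code></pre>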

<p>Next, write a test case: create pod-ports.yaml</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-ports
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports: # list of ports the container exposes
    - name: nginx-port
      containerPort: 80
      protocol: TCP

</code></pre>

<pre><code class="prism language-powershell"><span class="token comment"># Create the Pod</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl create -f pod-ports.yaml</span>

pod<span class="token operator">/</span>pod<span class="token operator">-</span>ports created

<span class="token comment"># View the Pod</span>

<span class="token comment"># The port configuration is clearly visible below</span>

<span class="token namespace">[root@master ~]</span><span class="token comment"># kubectl get pod pod-ports -n dev -o yaml</span>

<span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span>

spec:

containers:

<span class="token operator">-</span> image: nginx:1<span class="token punctuation">.</span>17<span class="token punctuation">.</span>1

imagePullPolicy: IfNotPresent

name: nginx

ports:

<span class="token operator">-</span> containerPort: 80

name: nginx<span class="token operator">-</span>port

protocol: TCP

<span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span><span class="token punctuation">.</span>

</code></pre>

<p>A program in the container is reached at <code>podIp:containerPort</code>.</p>

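<h3>Resource Quotas</h3>

<p> A program running in a container inevitably consumes resources such as CPU and memory. If a container's resources are not limited, it may consume a large share of them and keep other containers from running. For this, Kubernetes provides a quota mechanism for CPU and memory, configured through the resources field, which has two sub-fields:</p>

<ul>

<li> <p>limits: the maximum resources the container may use at runtime. A container that exceeds its memory limit is killed and restarted; CPU usage above the limit is throttled instead</p> </li>

<li> <p>requests: the minimum resources the container needs. If no node has enough free resources, the Pod cannot be scheduled and the container does not start</p> </li>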

</ul>

<p>Together, these two fields set the upper and lower bounds of a container's resources.</p>
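<p>A sketch with more typical values (the numbers are illustrative, not recommendations). Requests must not exceed limits; the comments note what happens when each bound comes into play.</p>

<pre><code class="prism language-yaml">- name: nginx
  image: nginx:1.17.1
  resources:
    requests:          # checked at scheduling time; the Pod stays Pending if no node has this much free
      cpu: "250m"
      memory: "64Mi"
    limits:            # enforced while the container runs
      cpu: "500m"      # usage above this is throttled
      memory: "128Mi"  # usage above this gets the container OOM-killed, then restarted per restartPolicy
</code></pre>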

<p>Next, write a test case: create pod-resources.yaml</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-resources
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    resources: # resource quota
      limits: # resource limits (upper bound)
        cpu: "2" # CPU limit, in cores
        memory: "10Gi" # memory limit
      requests: # resource requests (lower bound)
        cpu: "1" # CPU request, in cores
        memory: "10Mi" # memory request

</code></pre>

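<p>A note on the units used for cpu and memory:</p>

<ul>

<li> <p>cpu: number of cores; can be an integer or a decimal</p> </li>

<li> <p>memory: amount of memory; can be written with suffixes such as Gi, Mi, G, and M</p> </li>

</ul>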
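<p>CPU can also be written in millicores with the suffix <code>m</code>, and memory suffixes come in a decimal family (<code>k</code>, <code>M</code>, <code>G</code>, powers of 1000) and a binary family (<code>Ki</code>, <code>Mi</code>, <code>Gi</code>, powers of 1024). The hypothetical fragment below shows only the <code>resources</code> section of one container; the values are chosen for illustration. Note that <code>64M</code> and <code>64Mi</code> are different amounts, and that each request must not exceed its corresponding limit, otherwise the API server rejects the Pod.</p>

<pre><code class="prism language-yaml"># Hypothetical fragment: only the resources section of one container
resources:
  requests:
    cpu: "500m"      # 500 millicores = 0.5 core, same as cpu: "0.5"
    memory: "64M"    # decimal suffix: 64 * 1000 * 1000 = 64000000 bytes
  limits:
    cpu: "1"         # 1 core = 1000m
    memory: "64Mi"   # binary suffix: 64 * 1024 * 1024 = 67108864 bytes
</code></pre>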

<pre><code class="prism language-powershell"># Run the Pod
[root@master ~]# kubectl create -f pod-resources.yaml
pod/pod-resources created
# The Pod is running normally
[root@master ~]# kubectl get pod pod-resources -n dev
NAME            READY   STATUS    RESTARTS   AGE
pod-resources   1/1     Running   0          39s
# Next, delete the Pod
[root@master ~]# kubectl delete -f pod-resources.yaml
pod "pod-resources" deleted
# Edit the manifest and change resources.requests.memory to 10Gi
[root@master ~]# vim pod-resources.yaml
# Create the Pod again
[root@master ~]# kubectl create -f pod-resources.yaml
pod/pod-resources created
# Check the Pod status: the Pod does not start and stays Pending
[root@master ~]# kubectl get pod pod-resources -n dev -o wide
NAME            READY   STATUS    RESTARTS   AGE
pod-resources   0/1     Pending   0          20s
# The Pod details show why it cannot be scheduled (not enough memory)
[root@master ~]# kubectl describe pod pod-resources -n dev
......
  Warning  FailedScheduling  &lt;unknown&gt;  default-scheduler  0/2 nodes are available: 2 Insufficient memory.
</code></pre>

<h2>Pod Lifecycle</h2>

<p>The period from a Pod object's creation to its termination is generally called the Pod's lifecycle. It mainly consists of the following stages:</p>

<ul>

<li> <p>Pod creation</p> </li>

<li> <p>Running the init containers</p> </li>

<li> <p>Running the main containers</p>

<ul>

<li> <p>Post-start hook (post start) and pre-stop hook (pre stop)</p> </li>

<li> <p>Liveness probe and readiness probe</p> </li>

</ul> </li>

<li> <p>Pod termination</p> </li>

</ul>

<p><a href="http://img.e-com-net.com/image/info8/7ee2284d48c74414b290569399e396a5.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/7ee2284d48c74414b290569399e396a5.jpg" alt="Kubernetes Day 2: Figure 6" width="650" height="338" style="border:1px solid black;"></a></p>

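<p>Throughout its lifecycle, a Pod can be in one of five <strong>states</strong> (<strong>phases</strong>):</p>

<ul>

<li>Pending: the apiserver has created the Pod object, but the Pod has not yet been fully scheduled or is still downloading its images</li>

<li>Running: the Pod has been bound to a node, and all of its containers have been created by the kubelet</li>

<li>Succeeded: all containers in the Pod have terminated successfully and will not be restarted</li>

<li>Failed: all containers have terminated, and at least one of them terminated in failure, i.e., exited with a non-zero status</li>

<li>Unknown: the apiserver cannot obtain the Pod's status, usually because of a network communication failure with the node the Pod runs on</li>

</ul>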
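<p>The phase is stored in the <code>status.phase</code> field of the Pod object. As a rough illustration only (abridged, with values assumed rather than captured from a real cluster), a Pod that the scheduler cannot place, such as the 10Gi <code>pod-resources</code> Pod from the previous section, would carry a status along these lines:</p>

<pre><code class="prism language-yaml"># Illustrative, abridged status section of an unschedulable Pod
status:
  phase: Pending                  # one of Pending, Running, Succeeded, Failed, Unknown
  conditions:
  - type: PodScheduled
    status: "False"               # the scheduler has not found a suitable node
    reason: Unschedulable
</code></pre>

<p>To read just the phase, <code>kubectl get pod pod-resources -n dev -o jsonpath='{.status.phase}'</code> prints the value of that field.</p>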

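<h3>Creation and Termination</h3>

<p><strong>Pod creation process</strong></p>

<ol>

<li> <p>The user submits the information of the Pod to be created to the apiServer through kubectl or another API client.</p> </li>

<li> <p>The apiServer builds the Pod object, stores it in etcd, and returns a confirmation to the client.</p> </li>

<li> <p>The apiServer reflects changes to Pod objects in etcd, and the other components track changes on the apiServer through the watch mechanism.</p> </li>

<li> <p>The scheduler notices a new Pod object to be created, assigns a node to the Pod, and updates the apiServer with the result.</p> </li>

<li> <p>The kubelet on that node notices that a Pod has been scheduled to it, calls Docker to start the containers, and reports the result back to the apiServer.</p> </li>

<li> <p>The apiServer stores the received Pod status in etcd.</p> <p><a href="http://img.e-com-net.com/image/info8/fc9422e725cf45e9bfce1aa6578c1341.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/fc9422e725cf45e9bfce1aa6578c1341.jpg" alt="Kubernetes Day 2: Figure 7" width="650" height="337" style="border:1px solid black;"></a></p> </li>

</ol>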

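<p><strong>Pod termination process</strong></p>

<ol>

<li>The user sends a command to the apiServer to delete the Pod object.</li>

<li>The Pod object in the apiServer is updated with a deadline: once the grace period (30s by default) has elapsed, the Pod is considered dead.</li>

<li>The Pod is marked as Terminating.</li>

<li>As soon as the kubelet sees that the Pod has become Terminating, it starts the Pod shutdown process.</li>

<li>When the endpoints controller sees that the Pod is shutting down, it removes the Pod from the endpoint lists of all Service resources that match it.</li>

<li>If the Pod defines a preStop hook handler, the handler is started synchronously as soon as the Pod is marked Terminating.</li>

<li>The container processes in the Pod receive a stop signal (normally SIGTERM).</li>

<li>When the grace period ends, any processes still running in the Pod receive a signal to terminate immediately (SIGKILL).</li>

<li>The kubelet asks the apiServer to set the Pod's grace period to 0, completing the deletion; the Pod is no longer visible to users.</li>

</ol>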
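<p>Two Pod fields control the termination steps above: <code>terminationGracePeriodSeconds</code> sets the grace period (30 seconds when omitted), and <code>lifecycle.preStop</code> defines the hook from step 6, which the kubelet runs before sending the stop signal. The manifest below is a minimal sketch, assuming a hypothetical Pod named <code>pod-graceful</code>; its preStop hook asks nginx to finish in-flight requests with <code>nginx -s quit</code>.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-graceful                  # hypothetical name used for illustration
  namespace: dev
spec:
  terminationGracePeriodSeconds: 30   # grace period in seconds; 30 is also the default
  containers:
  - name: nginx
    image: nginx:1.17.1
    lifecycle:
      preStop:                        # runs after the Pod is marked Terminating, before the stop signal
        exec:
          command: ["/usr/sbin/nginx", "-s", "quit"]   # graceful nginx shutdown
</code></pre>

<p>The time spent in the preStop hook counts against the same grace period. The grace period can also be overridden for a single deletion, for example with <code>kubectl delete pod pod-graceful -n dev --grace-period=60</code>.</p>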

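<h3>Init Containers</h3>

<p>Init containers run before a Pod's main containers start and mainly do preparatory work for them. They have two key characteristics:</p>

<ol>

<li>An init container must run to completion. If an init container fails, Kubernetes restarts it until it succeeds (unless the Pod's restartPolicy is Never, in which case the whole Pod is treated as failed).</li>

<li>Init containers run in the order in which they are defined; each one starts only after the previous one has completed successfully.</li>

</ol>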

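<p>Init containers have many use cases; the most common ones are:</p>

<ul>

<li>Providing utilities or custom code that the main container image does not include</li>

<li>Because init containers start serially and run to completion before the application containers, they can delay the start of the application containers until the conditions they depend on are met</li>

</ul>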
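<p>As a sketch of the first use case, the hypothetical Pod below uses a busybox init container to generate a page that the nginx image does not contain, writing it into a shared <code>emptyDir</code> volume that nginx then serves. The names <code>pod-init-content</code>, <code>prepare-page</code>, and <code>html</code> are illustrative assumptions, not part of the original example.</p>

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-init-content            # hypothetical name
  namespace: dev
spec:
  volumes:
  - name: html                      # scratch volume shared by both containers
    emptyDir: {}
  initContainers:
  - name: prepare-page              # runs to completion before nginx starts
    image: busybox:1.30
    command: ['sh', '-c', 'echo "hello from init container" &gt; /work/index.html']
    volumeMounts:
    - name: html
      mountPath: /work
  containers:
  - name: nginx
    image: nginx:1.17.1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html   # nginx serves the generated file
</code></pre>

<p>Because the volume is mounted over nginx's default document root, the default welcome page is hidden and a request to <code>podIp:80</code> returns the generated file instead.</p>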

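<p>Next, let's build an example that simulates the following requirement:</p>

<p> Suppose nginx runs as the main container, but before nginx starts, the Pod must be able to reach the servers that host MySQL and Redis.</p>

<p> To keep the test simple, the server addresses are fixed in advance: MySQL at <code>(192.168.109.201)</code> and Redis at <code>(192.168.109.202)</code>.</p>

<p>Create pod-initcontainer.yaml with the following content:</p>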

<pre><code class="prism language-yaml">apiVersion: v1
kind: Pod
metadata:
  name: pod-initcontainer
  namespace: dev
spec:
  containers:
  - name: main-container
    image: nginx:1.17.1
    ports:
    - name: nginx-port
      containerPort: 80
  initContainers:
  - name: test-mysql     # keeps pinging the MySQL host until it answers
    image: busybox:1.30
    command: ['sh', '-c', 'until ping 192.168.109.201 -c 1 ; do echo waiting for mysql...; sleep 2; done;']
  - name: test-redis     # starts only after test-mysql has completed
    image: busybox:1.30
    command: ['sh', '-c', 'until ping 192.168.109.202 -c 1 ; do echo waiting for redis...; sleep 2; done;']
</code></pre>

<pre><code class="prism language-powershell"># Create the Pod
[root@master ~]# kubectl create -f pod-initcontainer.yaml
pod/pod-initcontainer created
# Check the Pod status
# The Pod is stuck on the first init container; the containers after it do not run
[root@master ~]# kubectl describe pod pod-initcontainer -n dev
........
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Scheduled 49s default-scheduler Successfully assigned dev/pod-initcontainer to node1