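Android Binder Communication Mechanism Study (Part 4)

Newcomer 阿彡's Android Multimedia Learning Journey

Chapter 1: Android Binder Communication Mechanism Study: Basic Principles of Binder

Chapter 2: Android Binder Communication Mechanism Study: Basic Architecture of Binder

Chapter 3: Android Binder Communication Mechanism Study: ServiceManager Flow Analysis

Chapter 4: Android Binder Communication Mechanism Study: The addService Service Registration Flow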

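Contents of This Chapter

- Newcomer 阿彡's Android Multimedia Learning Journey
  - 0 Preface
  - 1 addService Service Registration Flow
    - 1.1 Client-Side Service Registration Flow
    - 1.2 Binder Driver Handling of addService Registration
    - 1.3 Server-Side (ServiceManager) Service Registration Flow
    - 1.4 Summary of the addService Registration Flow
  - 2 References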

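0 Preface

Main reference: http://gityuan.com/2015/11/14/binder-add-service/

I am a workplace veteran who has only recently moved into the Android field. With the job market tough lately and competition growing fiercer across the board, I have started out in Android development, where my work will focus mainly on the Android Framework layer, with some Android Applications-layer development on the side. I am recording my learning journey here, partly to help myself study and summarize, and partly to make it easier to fill in gaps later. Everything here is based on the Android 12 source code.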

1 addService Service Registration Flow

1.1 Client-Side Service Registration Flow

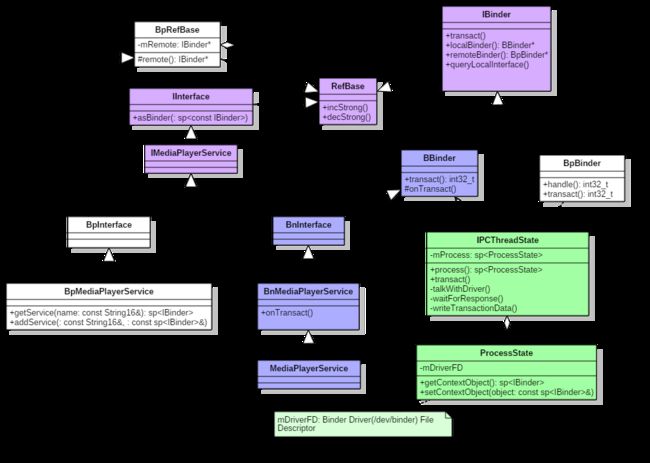

这里以mediaplayer服务为例子来梳理一下客户端的addService流程,先看一下mediaplayer涉及到的各个类之间的关系:

mediaplayer服务的入口函数为main_mediaserver.cpp中的main()方法,代码如下:

```cpp
// av/media/mediaserver/main_mediaserver.cpp
int main(int argc __unused, char **argv __unused)
{
    signal(SIGPIPE, SIG_IGN);

    // Get the ProcessState instance
    sp<ProcessState> proc(ProcessState::self());
    // Get the BpServiceManager proxy
    sp<IServiceManager> sm(defaultServiceManager());
    ALOGI("ServiceManager: %p", sm.get());
    // Register the multimedia mediaplayer service
    MediaPlayerService::instantiate();
    ResourceManagerService::instantiate();
    registerExtensions();
    ::android::hardware::configureRpcThreadpool(16, false);
    // Start the Binder thread pool
    ProcessState::self()->startThreadPool();
    // Add the current thread to the thread pool
    IPCThreadState::self()->joinThreadPool();
    ::android::hardware::joinRpcThreadpool();
}
```

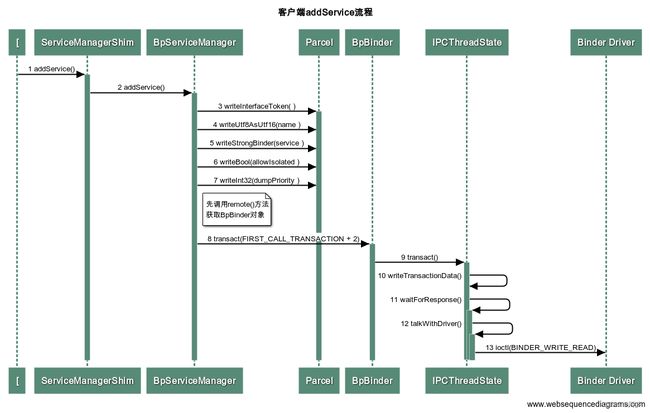

再来整体看一下客户端服务注册addService的完整流程图:

下面根据客户端addService流程,分下一下重点函数里面的代码:

```cpp
// 2 BpServiceManager::addService
::android::binder::Status BpServiceManager::addService(const ::std::string& name, const ::android::sp<::android::IBinder>& service, bool allowIsolated, int32_t dumpPriority) {
  ::android::Parcel _aidl_data;  // Parcel that carries the request data
  _aidl_data.markForBinder(remoteStrong());
  ::android::Parcel _aidl_reply; // Parcel that receives the response data
  ::android::status_t _aidl_ret_status = ::android::OK;
  ::android::binder::Status _aidl_status;
  // Write the interface token and the call arguments into _aidl_data
  _aidl_ret_status = _aidl_data.writeInterfaceToken(getInterfaceDescriptor());
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  _aidl_ret_status = _aidl_data.writeUtf8AsUtf16(name);
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  _aidl_ret_status = _aidl_data.writeStrongBinder(service);
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  _aidl_ret_status = _aidl_data.writeBool(allowIsolated);
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  _aidl_ret_status = _aidl_data.writeInt32(dumpPriority);
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  // Call remote()->transact(); the response is stored in _aidl_reply
  _aidl_ret_status = remote()->transact(BnServiceManager::TRANSACTION_addService, _aidl_data, &_aidl_reply, 0);
  if (UNLIKELY(_aidl_ret_status == ::android::UNKNOWN_TRANSACTION && IServiceManager::getDefaultImpl())) {
     return IServiceManager::getDefaultImpl()->addService(name, service, allowIsolated, dumpPriority);
  }
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  // Read the returned status from _aidl_reply
  _aidl_ret_status = _aidl_status.readFromParcel(_aidl_reply);
  if (((_aidl_ret_status) != (::android::OK))) {
    goto _aidl_error;
  }
  if (!_aidl_status.isOk()) {
    return _aidl_status;
  }
_aidl_error:
  _aidl_status.setFromStatusT(_aidl_ret_status);
  return _aidl_status;
}
```

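::android::binder::Status BpServiceManager::addService() is generated automatically from the AIDL file. AIDL itself is not covered here; read up on it if you are interested. addService() packs the request into _aidl_data (the interface token followed by each argument), calls remote()->transact() with TRANSACTION_addService to reach the server's addService implementation, and then reads the response from _aidl_reply.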
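The essential property of the generated proxy is that arguments are written in a fixed order, and the server must read them back in exactly that order. Below is a minimal sketch of that idea. It assumes a hypothetical `ToyParcel` class whose wire format is much simpler than the real `android::Parcel`: there are no interface-token header fields and no string terminators, and the `IBinder` argument is replaced by a plain integer id.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical, heavily simplified stand-in for android::Parcel: values are
// appended to a flat byte buffer and must be read back in the same order.
class ToyParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof(v)); }
    void writeBool(bool b) { writeInt32(b ? 1 : 0); }  // assumption: bool stored as int32

    // Length-prefixed UTF-16 string padded to 4 bytes (ASCII input only).
    void writeString16(const std::string& s) {
        writeInt32(static_cast<int32_t>(s.size()));
        for (char c : s) {
            char16_t u = static_cast<char16_t>(static_cast<unsigned char>(c));
            append(&u, sizeof(u));
        }
        while (mData.size() % 4 != 0) mData.push_back(0);
    }

    int32_t readInt32() { int32_t v; read(&v, sizeof(v)); return v; }
    bool readBool() { return readInt32() != 0; }
    std::string readString16() {
        int32_t len = readInt32();
        std::string out;
        for (int32_t i = 0; i < len; ++i) {
            char16_t u;
            read(&u, sizeof(u));
            out.push_back(static_cast<char>(u));
        }
        mPos = (mPos + 3) & ~static_cast<std::size_t>(3);  // skip padding
        return out;
    }
    std::size_t dataSize() const { return mData.size(); }

private:
    void append(const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        mData.insert(mData.end(), b, b + n);
    }
    void read(void* p, std::size_t n) {
        if (mPos + n > mData.size()) throw std::out_of_range("read past end");
        std::memcpy(p, mData.data() + mPos, n);
        mPos += n;
    }
    std::vector<uint8_t> mData;
    std::size_t mPos = 0;
};

// Mirrors the write order of BpServiceManager::addService above.
// fakeBinderId is a hypothetical stand-in for writeStrongBinder(service).
void writeAddServiceRequest(ToyParcel& p, const std::string& descriptor,
                            const std::string& name, int32_t fakeBinderId,
                            bool allowIsolated, int32_t dumpPriority) {
    p.writeString16(descriptor);  // stands in for writeInterfaceToken
    p.writeString16(name);        // writeUtf8AsUtf16(name)
    p.writeInt32(fakeBinderId);   // stands in for writeStrongBinder(service)
    p.writeBool(allowIsolated);
    p.writeInt32(dumpPriority);
}
```

Swapping any two reads on the receiving side would decode garbage. That is why the proxy (Bp) and the stub (Bn) are both generated from the same AIDL file instead of being written by hand.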

```cpp
// 8 BpBinder::transact()
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    ......
    // Delegates to IPCThreadState::transact(); binderHandle() returns this proxy's handle
    status = IPCThreadState::self()->transact(binderHandle(), code, data, reply, flags);
    ......
}
```

The client proxy's transact() does no I/O of its own. It delegates to IPCThreadState::transact() and passes along its own handle.
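A minimal sketch of this delegation, using hypothetical `ToyBpBinder` and `ToyIPCThreadState` classes (not libbinder). The proxy only contributes its handle. The per-thread `self()` singleton is modelled here with `thread_local`, which is an illustration of the shape rather than how libbinder implements it. Handle 0 stands for servicemanager, as described later in this chapter.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical record of what was "sent" to the driver.
struct SentTransaction {
    int32_t handle;
    uint32_t code;
};

class ToyIPCThreadState {
public:
    // One instance per thread, like IPCThreadState::self().
    static ToyIPCThreadState* self() {
        thread_local ToyIPCThreadState state;
        return &state;
    }
    int transact(int32_t handle, uint32_t code) {
        sent.push_back({handle, code});  // the real code talks to the driver here
        return 0;                        // pretend NO_ERROR
    }
    std::vector<SentTransaction> sent;
};

class ToyBpBinder {
public:
    explicit ToyBpBinder(int32_t handle) : mHandle(handle) {}
    int32_t binderHandle() const { return mHandle; }
    // The proxy does no I/O itself: it just supplies its handle.
    int transact(uint32_t code) {
        return ToyIPCThreadState::self()->transact(binderHandle(), code);
    }
private:
    int32_t mHandle;
};
```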

```cpp
// 9 IPCThreadState::transact()
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    LOG_ALWAYS_FATAL_IF(data.isForRpc(), "Parcel constructed for RPC, but being used with binder.");

    status_t err;

    flags |= TF_ACCEPT_FDS;

    IF_LOG_TRANSACTIONS() {
        TextOutput::Bundle _b(alog);
        alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "
            << handle << " / code " << TypeCode(code) << ": "
            << indent << data << dedent << endl;
    }

    LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),
        (flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");
    // 1. Pack the data with writeTransactionData(); the cmd written is BC_TRANSACTION
    err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

    if (err != NO_ERROR) {
        if (reply) reply->setError(err);
        return (mLastError = err);
    }

    // 2. Synchronous call (TF_ONE_WAY not set): the thread blocks until the reply arrives.
    //    A TF_ONE_WAY (asynchronous, one-way) call takes the else branch at the end
    //    and does not wait for a reply.
    if ((flags & TF_ONE_WAY) == 0) {
        if (UNLIKELY(mCallRestriction != ProcessState::CallRestriction::NONE)) {
            if (mCallRestriction == ProcessState::CallRestriction::ERROR_IF_NOT_ONEWAY) {
                ALOGE("Process making non-oneway call (code: %u) but is restricted.", code);
                CallStack::logStack("non-oneway call", CallStack::getCurrent(10).get(),
                    ANDROID_LOG_ERROR);
            } else /* FATAL_IF_NOT_ONEWAY */ {
                LOG_ALWAYS_FATAL("Process may not make non-oneway calls (code: %u).", code);
            }
        }

        #if 0
        if (code == 4) { // relayout
            ALOGI(">>>>>> CALLING transaction 4");
        } else {
            ALOGI(">>>>>> CALLING transaction %d", code);
        }
        #endif
        // 3. waitForResponse() sends the data to the driver and waits for the result
        if (reply) {
            err = waitForResponse(reply);
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
        #if 0
        if (code == 4) { // relayout
            ALOGI("<<<<<< RETURNING transaction 4");
        } else {
            ALOGI("<<<<<< RETURNING transaction %d", code);
        }
        #endif

        IF_LOG_TRANSACTIONS() {
            TextOutput::Bundle _b(alog);
            alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "
                << handle << ": ";
            if (reply) alog << indent << *reply << dedent << endl;
            else alog << "(none requested)" << endl;
        }
    } else {
        err = waitForResponse(nullptr, nullptr);
    }

    return err;
}
```

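IPCThreadState::transact() first calls writeTransactionData() to pack the request with cmd=BC_TRANSACTION. It then calls waitForResponse() to send the data to the Binder driver: a synchronous call waits for the reply data, while a TF_ONE_WAY call does not.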
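The two branches differ in what waitForResponse() waits for. The sketch below assumes the real waitForResponse() stops at BR_TRANSACTION_COMPLETE (the driver's acknowledgment) when no reply is requested, and otherwise keeps reading until BR_REPLY. The `BR` enum and `commandsUntilDone` are hypothetical, and the enum values are symbolic rather than the real ioctl-style codes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical symbolic return commands.
enum class BR { TRANSACTION_COMPLETE, NOOP, REPLY };

// Returns how many incoming commands are consumed before the wait ends,
// or -1 if the stream runs out first.
int commandsUntilDone(const std::vector<BR>& incoming, bool expectReply) {
    for (std::size_t i = 0; i < incoming.size(); ++i) {
        switch (incoming[i]) {
        case BR::TRANSACTION_COMPLETE:
            // oneway: done as soon as the driver has accepted the transaction
            if (!expectReply) return static_cast<int>(i + 1);
            break;  // synchronous: keep waiting for the reply
        case BR::REPLY:
            if (expectReply) return static_cast<int>(i + 1);
            break;
        case BR::NOOP:
            break;
        }
    }
    return -1;
}
```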

```cpp
// 10 IPCThreadState::writeTransactionData()
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;

    tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */
    tr.target.handle = handle;
    tr.code = code;
    tr.flags = binderFlags;
    tr.cookie = 0;
    tr.sender_pid = 0;
    tr.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr.data_size = data.ipcDataSize();
        tr.data.ptr.buffer = data.ipcData();
        tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr.data.ptr.offsets = data.ipcObjects();
    } else if (statusBuffer) {
        tr.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr.data_size = sizeof(status_t);
        tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);
        tr.offsets_size = 0;
        tr.data.ptr.offsets = 0;
    } else {
        return (mLastError = err);
    }

    mOut.writeInt32(cmd);
    mOut.write(&tr, sizeof(tr));

    return NO_ERROR;
}
```

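IPCThreadState::writeTransactionData() fills a binder_transaction_data with the target handle, the transaction code, the flags, and the size of the Parcel built earlier, together with pointers to its data and its object offsets. The payload itself is not copied at this point. It then writes cmd=BC_TRANSACTION into mOut, followed by the binder_transaction_data.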
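The key detail here is that mOut receives a command word followed by a header that points into the Parcel rather than containing the Parcel. A sketch of that layout follows. `ToyTransactionData` is a hypothetical, simplified mirror of binder_transaction_data (the real struct in the kernel UAPI header has unions and more fields), and `kToyBcTransaction` is a made-up command value.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified header; the real binder_transaction_data differs.
struct ToyTransactionData {
    int32_t handle;
    uint32_t code;
    uint32_t flags;
    uint64_t data_size;
    uint64_t buffer;  // address of the Parcel's data, not a copy of it
};

constexpr uint32_t kToyBcTransaction = 1;  // hypothetical value

// Appends [cmd][ToyTransactionData] to an output byte stream, like mOut.
void writeTransactionDataSketch(std::vector<uint8_t>& out, uint32_t cmd,
                                int32_t handle, uint32_t code, uint32_t flags,
                                const std::vector<uint8_t>& parcelData) {
    ToyTransactionData tr{};
    tr.handle = handle;
    tr.code = code;
    tr.flags = flags;
    tr.data_size = parcelData.size();
    tr.buffer = reinterpret_cast<uintptr_t>(parcelData.data());
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}
```

Because only a pointer travels in mOut, the Parcel must stay alive until the driver has copied the data, which in the real flow happens inside the ioctl.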

```cpp
// 12 IPCThreadState::talkWithDriver
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    ......
    // Talk to the Binder driver through the ioctl command BINDER_WRITE_READ
    if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
    ......
}
```

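In talkWithDriver(), the ioctl system call with command code BINDER_WRITE_READ writes the request into the Binder driver. Once the Binder driver and the server have both finished processing, control returns to step 2 (BpServiceManager::addService), which reads the server's response data from _aidl_reply. Next, let's look at how the Binder driver handles the addService request.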
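Before the ioctl, talkWithDriver() fills a struct binder_write_read (bwr) that says how many bytes of mOut to write and how much room mIn offers for reading. The sketch below reflects my reading of the AOSP logic and should be checked against the real source. The idea is that the thread only asks to read when mIn has been fully consumed, and it holds back its writes while unread input is still pending. `BwrSketch` mirrors the UAPI field names with fixed-width types. `ThreadBuffers` and `prepareBwr` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Local mirror of struct binder_write_read's fields (fixed-width types so the
// sketch compiles without kernel headers).
struct BwrSketch {
    uint64_t write_size, write_consumed, write_buffer;
    uint64_t read_size, read_consumed, read_buffer;
};

// Hypothetical description of the per-thread buffers.
struct ThreadBuffers {
    std::size_t outSize;     // bytes pending in mOut
    std::size_t inPos;       // read position in mIn
    std::size_t inSize;      // bytes of data in mIn
    std::size_t inCapacity;  // room available in mIn
};

BwrSketch prepareBwr(const ThreadBuffers& b, bool doReceive) {
    const bool needRead = b.inPos >= b.inSize;  // has mIn been fully consumed?
    // Write now unless we want to receive but still have unread input.
    const std::size_t outAvail = (!doReceive || needRead) ? b.outSize : 0;
    BwrSketch bwr{};
    bwr.write_size = outAvail;
    bwr.read_size = (doReceive && needRead) ? b.inCapacity : 0;
    return bwr;
}
```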

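1.2 Binder Driver Handling of addService Registration

After the client sends the BINDER_WRITE_READ command to the Binder driver through the ioctl system call, how does the driver handle it? Remember that when the client assembled the data in writeTransactionData(), it set cmd to BC_TRANSACTION. In Android 12 all of the Binder driver code lives in linux/drivers/android/binder.c. The ioctl entry point is binder_ioctl(), which hands the BINDER_WRITE_READ command to binder_ioctl_write_read(). This is the most important function here and the starting point for addService and every other transaction.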

```c
static int binder_ioctl_write_read(struct file *filp,
				unsigned int cmd, unsigned long arg,
				struct binder_thread *thread)
{
	int ret = 0;
	struct binder_proc *proc = filp->private_data;
	unsigned int size = _IOC_SIZE(cmd);
	void __user *ubuf = (void __user *)arg;
	struct binder_write_read bwr;

	if (size != sizeof(struct binder_write_read)) {
		ret = -EINVAL;
		goto out;
	}
	// Copy the bwr struct from user space into kernel space
	if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
		ret = -EFAULT;
		goto out;
	}
	binder_debug(BINDER_DEBUG_READ_WRITE,
		     "%d:%d write %lld at %016llx, read %lld at %016llx\n",
		     proc->pid, thread->pid,
		     (u64)bwr.write_size, (u64)bwr.write_buffer,
		     (u64)bwr.read_size, (u64)bwr.read_buffer);

	if (bwr.write_size > 0) {
		// Process the commands in the write buffer (BC_TRANSACTION here),
		// which delivers the data to the target process
		ret = binder_thread_write(proc, thread,
					  bwr.write_buffer,
					  bwr.write_size,
					  &bwr.write_consumed);
		trace_binder_write_done(ret);
		if (ret < 0) {
			bwr.read_consumed = 0;
			if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
				ret = -EFAULT;
			goto out;
		}
	}
	if (bwr.read_size > 0) {
		// Read pending work from this thread's (or its process's) own queue
		ret = binder_thread_read(proc, thread, bwr.read_buffer,
					 bwr.read_size,
					 &bwr.read_consumed,
					 filp->f_flags & O_NONBLOCK);
		trace_binder_read_done(ret);
		binder_inner_proc_lock(proc);
		if (!binder_worklist_empty_ilocked(&proc->todo))
			binder_wakeup_proc_ilocked(proc);
		binder_inner_proc_unlock(proc);
		if (ret < 0) {
			if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
				ret = -EFAULT;
			goto out;
		}
	}
	binder_debug(BINDER_DEBUG_READ_WRITE,
		     "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
		     proc->pid, thread->pid,
		     (u64)bwr.write_consumed, (u64)bwr.write_size,
		     (u64)bwr.read_consumed, (u64)bwr.read_size);
	// Copy the bwr struct from kernel space back to user space
	if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
		ret = -EFAULT;
		goto out;
	}
out:
	return ret;
}
```
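The ordering in binder_ioctl_write_read() matters: the whole write half is processed first, and only then does the read half drain the caller's queue. During a call, the transaction goes to the target's queue, while the caller gets a BR_TRANSACTION_COMPLETE acknowledgment on its own queue. The toy model below (plain user-space C++, not kernel code) shows that ordering. All names and command values are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical symbolic values, not the real BC_*/BR_* encodings.
constexpr uint32_t kToyBcTransaction = 10;
constexpr uint32_t kToyWorkTransaction = 20;
constexpr uint32_t kToyBrTransactionComplete = 30;

struct ToyBwr {
    std::vector<uint32_t> writeBuf;  // commands written by user space
    std::size_t writeConsumed = 0;   // how many the "driver" processed
    std::size_t readSize = 0;        // max number of commands to return
    std::vector<uint32_t> readBuf;   // commands returned to user space
};

// Write half first, then read half, as in binder_ioctl_write_read().
// handleWrite(cmd, callerTodo) models binder_thread_write(); the read loop
// models binder_thread_read() draining the caller's own todo queue.
template <typename HandleWrite>
int toyWriteRead(ToyBwr& bwr, std::deque<uint32_t>& callerTodo, HandleWrite handleWrite) {
    while (bwr.writeConsumed < bwr.writeBuf.size()) {
        if (handleWrite(bwr.writeBuf[bwr.writeConsumed], callerTodo) < 0)
            return -1;  // the kernel copies bwr back to user space and fails
        ++bwr.writeConsumed;
    }
    while (bwr.readBuf.size() < bwr.readSize && !callerTodo.empty()) {
        bwr.readBuf.push_back(callerTodo.front());
        callerTodo.pop_front();
    }
    return 0;
}
```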

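binder_thread_write() and binder_thread_read() are the two most important functions here. binder_thread_write() goes through a series of steps that end up waking the corresponding handling in binder_thread_read() on the target side. We only take a quick look at them here and will analyze them in a separate article, because this chapter focuses on the overall flow.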

```c
static int binder_thread_write(struct binder_proc *proc,
			struct binder_thread *thread,
			binder_uintptr_t binder_buffer, size_t size,
			binder_size_t *consumed)
{
	......
		case BC_TRANSACTION:
		case BC_REPLY: {
			// addService arrives in this case
			struct binder_transaction_data tr;

			// Copy the user-space binder_transaction_data into tr
			if (copy_from_user(&tr, ptr, sizeof(tr)))
				return -EFAULT;
			ptr += sizeof(tr);
			// Process the binder transaction
			binder_transaction(proc, thread, &tr,
					   cmd == BC_REPLY, 0);
			break;
		}
	......
}
```

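As mentioned earlier, the client set cmd to BC_TRANSACTION, so execution enters this case. It first copies the binder_transaction_data from user space into tr, and then calls binder_transaction() to process the transaction.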
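The write buffer is a packed stream of `[cmd][payload]` records, and BC_TRANSACTION and BC_REPLY share one path that differs only in the `reply` flag. A user-space sketch of that parsing loop follows. The command values, `ToyTr`, `appendCmd`, and `toyThreadWrite` are hypothetical. BC_ENTER_LOOPER is modelled as a command without payload.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command values and payload; not the real ioctl encodings.
enum : uint32_t { kBcTransaction = 1, kBcReply = 2, kBcEnterLooper = 3 };
struct ToyTr { uint32_t code; };
struct Dispatched { uint32_t code; bool isReply; };

// Helper to build a write buffer: [cmd] optionally followed by a payload.
void appendCmd(std::vector<uint8_t>& buf, uint32_t cmd, const ToyTr* tr) {
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    buf.insert(buf.end(), c, c + sizeof(cmd));
    if (tr) {
        const auto* t = reinterpret_cast<const uint8_t*>(tr);
        buf.insert(buf.end(), t, t + sizeof(*tr));
    }
}

// Walks the buffer the way binder_thread_write() advances 'ptr'.
std::vector<Dispatched> toyThreadWrite(const std::vector<uint8_t>& buf) {
    std::vector<Dispatched> out;
    std::size_t pos = 0;
    while (pos + sizeof(uint32_t) <= buf.size()) {
        uint32_t cmd;
        std::memcpy(&cmd, buf.data() + pos, sizeof(cmd));
        pos += sizeof(cmd);
        switch (cmd) {
        case kBcTransaction:
        case kBcReply: {
            ToyTr tr;
            if (pos + sizeof(tr) > buf.size()) return out;  // truncated (kernel: -EFAULT)
            std::memcpy(&tr, buf.data() + pos, sizeof(tr));
            pos += sizeof(tr);
            out.push_back({tr.code, cmd == kBcReply});  // same path, reply flag differs
            break;
        }
        default:
            break;  // commands without payload in this sketch
        }
    }
    return out;
}
```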

```c
static void binder_transaction(struct binder_proc *proc,
			       struct binder_thread *thread,
			       struct binder_transaction_data *tr, int reply,
			       binder_size_t extra_buffers_size)
{
	......
	t->work.type = BINDER_WORK_TRANSACTION;
	......
}
```

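binder_transaction() is far too complex to cover here, so we skip it for now. Once it has processed the transaction, it queues a work item of type BINDER_WORK_TRANSACTION for the target and wakes up the target process on the server side. Note that in the addService flow, the server is ServiceManager.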
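The hand-off between the two sides is a classic producer/consumer queue. binder_transaction() enqueues the work item and wakes a waiter, and the target's binder_thread_read() sleeps in binder_wait_for_work() until something is queued. A user-space analogy with a mutex and a condition variable is shown below. `ToyProc`, `ToyWork`, and `WorkType` are hypothetical, and the kernel's own locking and wait queues are not reproduced.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>

enum class WorkType { Transaction, TransactionComplete };
struct ToyWork { WorkType type; int code; };

// Toy model of one target "process": a todo list plus a wait queue.
class ToyProc {
public:
    // Roughly how binder_transaction() ends: queue t->work and wake a thread.
    void enqueue(ToyWork w) {
        {
            std::lock_guard<std::mutex> lk(mMutex);
            mTodo.push_back(w);
        }
        mCv.notify_one();
    }
    // Roughly binder_wait_for_work() plus dequeue in binder_thread_read().
    ToyWork waitForWork() {
        std::unique_lock<std::mutex> lk(mMutex);
        mCv.wait(lk, [this] { return !mTodo.empty(); });
        ToyWork w = mTodo.front();
        mTodo.pop_front();
        return w;
    }
private:
    std::mutex mMutex;
    std::condition_variable mCv;
    std::deque<ToyWork> mTodo;
};
```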

```c
static int binder_thread_read(struct binder_proc *proc,
			      struct binder_thread *thread,
			      binder_uintptr_t binder_buffer, size_t size,
			      binder_size_t *consumed, int non_block)
{
	......
	if (non_block) {
		if (!binder_has_work(thread, wait_for_proc_work))
			ret = -EAGAIN;
	} else {
		// Sleep here until work arrives; wait_for_proc_work is false
		ret = binder_wait_for_work(thread, wait_for_proc_work);
	}
	......
	while (1) {
		uint32_t cmd;
		......
		switch (w->type) {
		case BINDER_WORK_TRANSACTION: {
			binder_inner_proc_unlock(proc);
			t = container_of(w, struct binder_transaction, work);
		} break;
		......
		}
		if (!t)
			continue;

		BUG_ON(t->buffer == NULL);
		if (t->buffer->target_node) {
			struct binder_node *target_node = t->buffer->target_node;

			trd->target.ptr = target_node->ptr;
			trd->cookie = target_node->cookie;
			t->saved_priority = task_nice(current);
			if (t->priority < target_node->min_priority &&
			    !(t->flags & TF_ONE_WAY))
				binder_set_nice(t->priority);
			else if (!(t->flags & TF_ONE_WAY) ||
				 t->saved_priority > target_node->min_priority)
				binder_set_nice(target_node->min_priority);
			// cmd is set to BR_TRANSACTION here
			cmd = BR_TRANSACTION;
		} else {
			trd->target.ptr = 0;
			trd->cookie = 0;
			// cmd is set to BR_REPLY here
			cmd = BR_REPLY;
		}
		......
		// Write cmd (followed by the data) back to user space
		if (put_user(cmd, (uint32_t __user *)ptr)) {
			if (t_from)
				binder_thread_dec_tmpref(t_from);

			binder_cleanup_transaction(t, "put_user failed",
						   BR_FAILED_REPLY);

			return -EFAULT;
		}
		......
	}
	......
}
```

After binder_thread_read() finishes, the Binder driver has delivered the data to the target process's user space, with cmd now set to BR_TRANSACTION.
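The branch that picks the return command depends only on whether the buffer has a target node. A transaction addressed to a node becomes BR_TRANSACTION and carries that node's ptr/cookie. A reply has no target node and becomes BR_REPLY with zeroed target fields. For servicemanager, the context-manager node is, as far as I can tell, created with ptr 0. That would explain why executeCommand() later sees tr.target.ptr == 0 and falls back to the_context_object. All types below are hypothetical stand-ins.

```cpp
#include <cassert>
#include <cstdint>

enum class ToyBR { Transaction, Reply };                 // symbolic BR_TRANSACTION / BR_REPLY
struct ToyNode { uint64_t ptr; uint64_t cookie; };       // stands in for binder_node
struct ToyBuffer { const ToyNode* target_node; };        // stands in for binder_buffer
struct ToyTrd { uint64_t target_ptr; uint64_t cookie; }; // user-visible header fields

// Mirrors the target_node branch in binder_thread_read() above.
ToyBR toTransactionCommand(const ToyBuffer& buf, ToyTrd& trd) {
    if (buf.target_node) {
        trd.target_ptr = buf.target_node->ptr;
        trd.cookie = buf.target_node->cookie;
        return ToyBR::Transaction;
    }
    trd.target_ptr = 0;
    trd.cookie = 0;
    return ToyBR::Reply;
}
```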

1.3、服务端ServiceManager服务注册流程

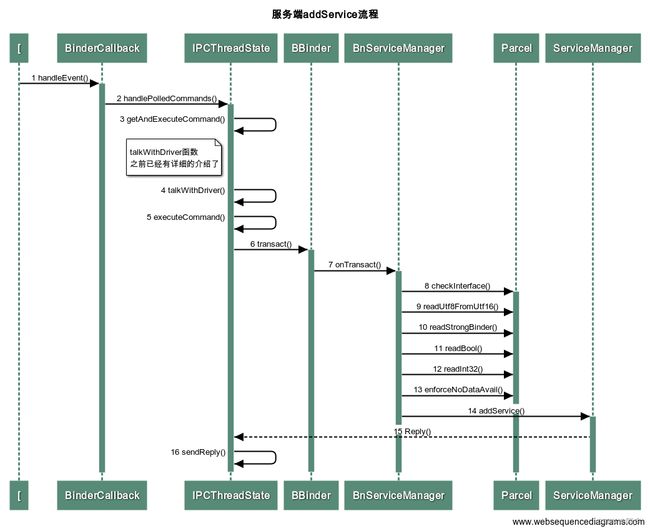

客户端的请求数据经过Binder驱动处理之后,已经写入到了服务端ServiceManager的用户空间了,并且cmd已经从BC_TRANSACTION修改为BR_TRANSACTION了。同样,我们也先整体来看一下服务端ServiceManager处理服务注册addService的完整流程图:

接下来,我们再来看一下重点流程中代码细节:

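ServiceManager's entry point for addService and every other request is handleEvent(), the callback of the message loop started in main.cpp (native/cmds/servicemanager/main.cpp). The Looper mechanism used with Binder will be analyzed in detail later and is not covered here. For now, let's look at the entry function.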

```cpp
class BinderCallback : public LooperCallback {
public:
    static sp<BinderCallback> setupTo(const sp<Looper>& looper) {
        // Instantiate BinderCallback
        sp<BinderCallback> cb = sp<BinderCallback>::make();

        // Get binder_fd
        int binder_fd = -1;
        IPCThreadState::self()->setupPolling(&binder_fd);
        LOG_ALWAYS_FATAL_IF(binder_fd < 0, "Failed to setupPolling: %d", binder_fd);

        // Add binder_fd to the set of fds the Looper watches
        int ret = looper->addFd(binder_fd,
                                Looper::POLL_CALLBACK,
                                Looper::EVENT_INPUT,
                                cb,
                                nullptr /*data*/);
        LOG_ALWAYS_FATAL_IF(ret != 1, "Failed to add binder FD to Looper");

        return cb;
    }

    // 1 handleEvent()
    int handleEvent(int /* fd */, int /* events */, void* /* data */) override {
        // Call handlePolledCommands() to process the incoming commands
        IPCThreadState::self()->handlePolledCommands();
        return 1;  // Continue receiving callbacks.
    }
};

int main(int argc, char** argv) {
#ifdef __ANDROID_RECOVERY__
    android::base::InitLogging(argv, android::base::KernelLogger);
#endif

    if (argc > 2) {
        LOG(FATAL) << "usage: " << argv[0] << " [binder driver]";
    }

    const char* driver = argc == 2 ? argv[1] : "/dev/binder";

    // Open the binder driver (open, mmap)
    sp<ProcessState> ps = ProcessState::initWithDriver(driver);
    // Max binder thread count 0: the driver will not ask servicemanager to spawn
    // extra binder threads, so all calls are handled on the main thread via the Looper
    ps->setThreadPoolMaxThreadCount(0);
    // Oneway restriction: binder calls made by servicemanager must be oneway.
    // With FATAL_IF_NOT_ONEWAY a non-oneway call aborts the process
    // (see IPCThreadState::transact above)
    ps->setCallRestriction(ProcessState::CallRestriction::FATAL_IF_NOT_ONEWAY);

    // Instantiate ServiceManager, passing an Access object used for permission checks
    sp<ServiceManager> manager = sp<ServiceManager>::make(std::make_unique<Access>());
    // Register servicemanager itself with ServiceManager
    if (!manager->addService("manager", manager, false /*allowIsolated*/, IServiceManager::DUMP_FLAG_PRIORITY_DEFAULT).isOk()) {
        LOG(ERROR) << "Could not self register servicemanager";
    }

    // Set ServiceManager as IPCThreadState's global context object
    IPCThreadState::self()->setTheContextObject(manager);
    // Register with the driver as the context manager (handle 0)
    ps->becomeContextManager();

    // Prepare the Looper
    sp<Looper> looper = Looper::prepare(false /*allowNonCallbacks*/);

    // Send BC_ENTER_LOOPER to the driver and watch the driver fd;
    // incoming binder calls are dispatched to handleEvent
    BinderCallback::setupTo(looper);
    ClientCallbackCallback::setupTo(looper, manager);

    // Loop forever waiting for messages
    while(true) {
        looper->pollAll(-1);
    }

    // should not be reached
    return EXIT_FAILURE;
}
```
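Unlike mediaserver, servicemanager does not block inside joinThreadPool(). Instead, BinderCallback registers the driver fd with a Looper, which calls handleEvent() whenever the fd becomes readable. The sketch below shows the same "watch an fd, run a callback when it is readable" pattern with plain POSIX poll(), and in the usage a pipe stands in for the binder fd. `pollOnce` is a hypothetical helper. The real Looper is epoll-based and uses the callback's return value (1 = keep watching) to decide whether the fd stays registered, which this sketch ignores.

```cpp
#include <cassert>
#include <functional>
#include <poll.h>
#include <unistd.h>

// Waits up to timeoutMs for fd to become readable and, if it does, invokes
// handleEvent(fd) like BinderCallback::handleEvent(). Returns the number of
// callbacks dispatched (0 or 1).
int pollOnce(int fd, int timeoutMs, const std::function<int(int)>& handleEvent) {
    pollfd pfd{};
    pfd.fd = fd;
    pfd.events = POLLIN;
    int n = poll(&pfd, 1, timeoutMs);
    if (n <= 0) return 0;  // timeout or error: nothing to dispatch
    if (pfd.revents & POLLIN) {
        handleEvent(fd);   // return value ignored in this sketch
        return 1;
    }
    return 0;
}
```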

```cpp
// 3 IPCThreadState::getAndExecuteCommand
status_t IPCThreadState::getAndExecuteCommand()
{
    status_t result;
    int32_t cmd;

    // 1. The data fetched from the binder driver here is what the client wrote
    //    into its mOut; it is stored in mIn
    result = talkWithDriver();
    if (result >= NO_ERROR) {
        size_t IN = mIn.dataAvail();
        if (IN < sizeof(int32_t)) return result;
        cmd = mIn.readInt32();
        IF_LOG_COMMANDS() {
            alog << "Processing top-level Command: "
                 << getReturnString(cmd) << endl;
        }

        pthread_mutex_lock(&mProcess->mThreadCountLock);
        mProcess->mExecutingThreadsCount++;
        if (mProcess->mExecutingThreadsCount >= mProcess->mMaxThreads &&
                mProcess->mStarvationStartTimeMs == 0) {
            mProcess->mStarvationStartTimeMs = uptimeMillis();
        }
        pthread_mutex_unlock(&mProcess->mThreadCountLock);

        // 2. Execute the command cmd parsed from mIn
        result = executeCommand(cmd);

        pthread_mutex_lock(&mProcess->mThreadCountLock);
        mProcess->mExecutingThreadsCount--;
        if (mProcess->mExecutingThreadsCount < mProcess->mMaxThreads &&
                mProcess->mStarvationStartTimeMs != 0) {
            int64_t starvationTimeMs = uptimeMillis() - mProcess->mStarvationStartTimeMs;
            if (starvationTimeMs > 100) {
                ALOGE("binder thread pool (%zu threads) starved for %" PRId64 " ms",
                      mProcess->mMaxThreads, starvationTimeMs);
            }
            mProcess->mStarvationStartTimeMs = 0;
        }

        // Cond broadcast can be expensive, so don't send it every time a binder
        // call is processed. b/168806193
        if (mProcess->mWaitingForThreads > 0) {
            pthread_cond_broadcast(&mProcess->mThreadCountDecrement);
        }
        pthread_mutex_unlock(&mProcess->mThreadCountLock);
    }

    return result;
}
```

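IPCThreadState::getAndExecuteCommand() first calls talkWithDriver(), which reads from the Binder driver into mIn the command and the request data that the client originally wrote into its own mOut. It then reads cmd=BR_TRANSACTION from mIn and calls executeCommand() to handle it.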
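Once mIn is filled, getAndExecuteCommand() needs at least one 32-bit command word. Without one, it returns without executing anything. The sketch below covers only that parsing step and leaves out the executing-thread and starvation bookkeeping. `ToyIn` is a hypothetical stand-in for the mIn Parcel, and executeCommand is passed in as a callback.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <functional>
#include <vector>

// Hypothetical stand-in for Parcel mIn: bytes plus a read position.
struct ToyIn {
    std::vector<uint8_t> data;
    std::size_t pos = 0;
    std::size_t dataAvail() const { return data.size() - pos; }
    int32_t readInt32() {
        int32_t v;
        std::memcpy(&v, data.data() + pos, sizeof(v));
        pos += sizeof(v);
        return v;
    }
};

// Shape of getAndExecuteCommand() after talkWithDriver() has filled mIn.
int getAndExecuteSketch(ToyIn& in, const std::function<int(int32_t)>& executeCommand) {
    if (in.dataAvail() < sizeof(int32_t)) return 0;  // no complete command word
    int32_t cmd = in.readInt32();
    return executeCommand(cmd);
}
```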

```cpp
// 5 IPCThreadState::executeCommand
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    ......
    switch ((uint32_t)cmd) {
    case BR_TRANSACTION_SEC_CTX:
    case BR_TRANSACTION:
        {
            // 1. Read the data in mIn into a binder_transaction_data
            binder_transaction_data_secctx tr_secctx;
            binder_transaction_data& tr = tr_secctx.transaction_data;

            if (cmd == (int) BR_TRANSACTION_SEC_CTX) {
                result = mIn.read(&tr_secctx, sizeof(tr_secctx));
            } else {
                result = mIn.read(&tr, sizeof(tr));
                tr_secctx.secctx = 0;
            }

            ALOG_ASSERT(result == NO_ERROR,
                "Not enough command data for brTRANSACTION");
            if (result != NO_ERROR) break;

            Parcel buffer;
            buffer.ipcSetDataReference(
                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                tr.data_size,
                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                tr.offsets_size/sizeof(binder_size_t), freeBuffer);

            const void* origServingStackPointer = mServingStackPointer;
            mServingStackPointer = __builtin_frame_address(0);

            const pid_t origPid = mCallingPid;
            const char* origSid = mCallingSid;
            const uid_t origUid = mCallingUid;
            const int32_t origStrictModePolicy = mStrictModePolicy;
            const int32_t origTransactionBinderFlags = mLastTransactionBinderFlags;
            const int32_t origWorkSource = mWorkSource;
            const bool origPropagateWorkSet = mPropagateWorkSource;
            // Calling work source will be set by Parcel#enforceInterface. Parcel#enforceInterface
            // is only guaranteed to be called for AIDL-generated stubs so we reset the work source
            // here to never propagate it.
            clearCallingWorkSource();
            clearPropagateWorkSource();

            mCallingPid = tr.sender_pid;
            mCallingSid = reinterpret_cast<const char*>(tr_secctx.secctx);
            mCallingUid = tr.sender_euid;
            mLastTransactionBinderFlags = tr.flags;

            // ALOGI(">>>> TRANSACT from pid %d sid %s uid %d\n", mCallingPid,
            //    (mCallingSid ? mCallingSid : ""), mCallingUid);

            Parcel reply;
            status_t error;
            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BR_TRANSACTION thr " << (void*)pthread_self()
                    << " / obj " << tr.target.ptr << " / code "
                    << TypeCode(tr.code) << ": " << indent << buffer
                    << dedent << endl
                    << "Data addr = "
                    << reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer)
                    << ", offsets addr="
                    << reinterpret_cast<const size_t*>(tr.data.ptr.offsets) << endl;
            }
            if (tr.target.ptr) {
                // We only have a weak reference on the target object, so we must first try to
                // safely acquire a strong reference before doing anything else with it.
                if (reinterpret_cast<RefBase::weakref_type*>(
                        tr.target.ptr)->attemptIncStrong(this)) {
                    error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,
                            &reply, tr.flags);
                    reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);
                } else {
                    error = UNKNOWN_TRANSACTION;
                }

            } else {
                // 2. Call the BBinder's transact() method
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }

            //ALOGI("<<<< TRANSACT from pid %d restore pid %d sid %s uid %d\n",
            //     mCallingPid, origPid, (origSid ? origSid : ""), origUid);

            if ((tr.flags & TF_ONE_WAY) == 0) {
                LOG_ONEWAY("Sending reply to %d!", mCallingPid);
                if (error < NO_ERROR) reply.setError(error);

                constexpr uint32_t kForwardReplyFlags = TF_CLEAR_BUF;
                // 3. Send the result back to the binder driver
                sendReply(reply, (tr.flags & kForwardReplyFlags));
            } else {
                if (error != OK) {
                    alog << "oneway function results for code " << tr.code
                         << " on binder at "
                         << reinterpret_cast<void*>(tr.target.ptr)
                         << " will be dropped but finished with status "
                         << statusToString(error);

                    // ideally we could log this even when error == OK, but it
                    // causes too much logspam because some manually-written
                    // interfaces have clients that call methods which always
                    // write results, sometimes as oneway methods.
                    if (reply.dataSize() != 0) {
                         alog << " and reply parcel size " << reply.dataSize();
                    }

                    alog << endl;
                }
                LOG_ONEWAY("NOT sending reply to %d!", mCallingPid);
            }

            mServingStackPointer = origServingStackPointer;
            mCallingPid = origPid;
            mCallingSid = origSid;
            mCallingUid = origUid;
            mStrictModePolicy = origStrictModePolicy;
            mLastTransactionBinderFlags = origTransactionBinderFlags;
            mWorkSource = origWorkSource;
            mPropagateWorkSource = origPropagateWorkSet;

            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BC_REPLY thr " << (void*)pthread_self() << " / obj "
                    << tr.target.ptr << ": " << indent << reply << dedent << endl;
            }

        }
        break;
    ......
    }
    ......
}
```

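For cmd=BR_TRANSACTION, IPCThreadState::executeCommand() wraps the received data in a Parcel and calls transact(). This is where ServiceManager::addService() is actually invoked on the server. Note that this transact() is the server-side BBinder::transact(), not the client-side BpBinder::transact() seen earlier. BBinder::transact() calls onTransact(), and the AIDL-generated BnServiceManager::onTransact() reads the arguments from the Parcel in the same order that BpServiceManager::addService() wrote them before calling ServiceManager::addService().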
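A miniature of this server-side dispatch is shown below. A base class's transact() forwards to a virtual onTransact(), and an AIDL-style "Bn" subclass switches on the transaction code, unpacks the arguments in order, and runs the real method body. All names (`ToyBBinder`, `ToyBnServiceManager`, `ToyData`, the code constants) are hypothetical and much simpler than libbinder's classes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Stands in for the request Parcel: arguments read back in order.
struct ToyData {
    std::vector<std::string> args;
    std::size_t pos = 0;
    std::string next() { return args.at(pos++); }
};

class ToyBBinder {
public:
    virtual ~ToyBBinder() = default;
    // Like BBinder::transact(): delegate to the subclass's onTransact().
    int transact(uint32_t code, ToyData& data, std::string* reply) {
        return onTransact(code, data, reply);
    }
protected:
    virtual int onTransact(uint32_t code, ToyData& data, std::string* reply) = 0;
};

constexpr uint32_t kToyAddService = 1;  // hypothetical transaction code
constexpr int kToyUnknownTransaction = -1;

class ToyBnServiceManager : public ToyBBinder {
public:
    std::map<std::string, std::string> services;
protected:
    int onTransact(uint32_t code, ToyData& data, std::string* reply) override {
        switch (code) {
        case kToyAddService: {
            std::string name = data.next();    // same order the proxy wrote
            std::string binder = data.next();
            services[name] = binder;           // the "real" addService body
            if (reply) *reply = "ok";
            return 0;
        }
        default:
            return kToyUnknownTransaction;
        }
    }
};
```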

```cpp
// 14 ServiceManager::addService
Status ServiceManager::addService(const std::string& name, const sp<IBinder>& binder, bool allowIsolated, int32_t dumpPriority) {
    auto ctx = mAccess->getCallingContext();

    // uid check
    if (multiuser_get_app_id(ctx.uid) >= AID_APP) {
        return Status::fromExceptionCode(Status::EX_SECURITY, "App UIDs cannot add services");
    }

    // SELinux check
    if (!mAccess->canAdd(ctx, name)) {
        return Status::fromExceptionCode(Status::EX_SECURITY, "SELinux denial");
    }

    if (binder == nullptr) {
        return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT, "Null binder");
    }

    // Check that the service name is valid
    if (!isValidServiceName(name)) {
        LOG(ERROR) << "Invalid service name: " << name;
        return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT, "Invalid service name");
    }

#ifndef VENDORSERVICEMANAGER
    if (!meetsDeclarationRequirements(binder, name)) {
        // already logged
        return Status::fromExceptionCode(Status::EX_ILLEGAL_ARGUMENT, "VINTF declaration error");
    }
#endif  // !VENDORSERVICEMANAGER

    // implicitly unlinked when the binder is removed
    // A non-null remoteBinder() means this is a proxy object, so register for its
    // death notification and check that the registration succeeded
    if (binder->remoteBinder() != nullptr &&
        binder->linkToDeath(sp<ServiceManager>::fromExisting(this)) != OK) {
        LOG(ERROR) << "Could not linkToDeath when adding " << name;
        return Status::fromExceptionCode(Status::EX_ILLEGAL_STATE, "linkToDeath failure");
    }

    // Overwrite the old service if it exists
    // Store the binder in the map
    mNameToService[name] = Service {
        .binder = binder,
        .allowIsolated = allowIsolated,
        .dumpPriority = dumpPriority,
        .debugPid = ctx.debugPid,
    };

    auto it = mNameToRegistrationCallback.find(name);
    if (it != mNameToRegistrationCallback.end()) {
        for (const sp<IServiceCallback>& cb : it->second) {
            mNameToService[name].guaranteeClient = true;
            // permission checked in registerForNotifications
            cb->onRegistration(name, binder);
        }
    }

    return Status::ok();
}
```

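ServiceManager::addService() holds the server-side logic that finally handles the registration. It performs uid and SELinux permission checks and validates the arguments, then stores the binder object in the mNameToService map (overwriting any existing entry with the same name) and notifies any callbacks registered for that name.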
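Stripped of IPC, the checks in ServiceManager::addService() reduce to a small registry. The sketch below is a hypothetical `ToyServiceRegistry` in which binders are plain ints and the SELinux check, the VINTF check, and linkToDeath are omitted. The two constants and the naming rule are assumptions based on my reading of AOSP (AID_USER_OFFSET = 100000, AID_APP = 10000, names of 1 to 127 characters from `[A-Za-z0-9_-./]`) and should be checked against the source.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

constexpr uint32_t kAidUserOffset = 100000;  // assumed: uids per Android user
constexpr uint32_t kAidApp = 10000;          // assumed: first app id

uint32_t appIdOf(uint32_t uid) { return uid % kAidUserOffset; }  // like multiuser_get_app_id

// Assumed naming rule, modelled loosely on isValidServiceName().
bool isValidName(const std::string& name) {
    if (name.empty() || name.size() > 127) return false;
    for (char c : name) {
        bool ok = (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') ||
                  (c >= '0' && c <= '9') || c == '_' || c == '-' || c == '.' || c == '/';
        if (!ok) return false;
    }
    return true;
}

enum class AddResult { Ok, Security, IllegalArgument };
using Callback = std::function<void(const std::string&, int)>;

class ToyServiceRegistry {
public:
    AddResult addService(uint32_t callingUid, const std::string& name, int binder) {
        if (appIdOf(callingUid) >= kAidApp) return AddResult::Security;  // apps may not register
        if (binder == 0) return AddResult::IllegalArgument;              // "Null binder"
        if (!isValidName(name)) return AddResult::IllegalArgument;
        mNameToService[name] = binder;                                   // overwrite if present
        for (auto& cb : mCallbacks[name]) cb(name, binder);              // like onRegistration
        return AddResult::Ok;
    }
    void registerForNotifications(const std::string& name, Callback cb) {
        mCallbacks[name].push_back(std::move(cb));
    }
    int lookup(const std::string& name) const {
        auto it = mNameToService.find(name);
        return it == mNameToService.end() ? 0 : it->second;
    }
private:
    std::map<std::string, int> mNameToService;
    std::map<std::string, std::vector<Callback>> mCallbacks;
};
```

In the usage below, 1013 is simply a system uid below 10000, and 1110057 is an app uid belonging to a secondary user.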

```cpp
// 16 IPCThreadState::sendReply
status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)
{
    status_t err;
    status_t statusBuffer;
    err = writeTransactionData(BC_REPLY, flags, -1, 0, reply, &statusBuffer);
    if (err < NO_ERROR) return err;

    return waitForResponse(nullptr, nullptr);
}
```

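Once the server has finished, it sends the result back to the Binder driver as reply data, this time with cmd set to BC_REPLY. The driver processes BC_REPLY along the same path as BC_TRANSACTION, converts the command to BR_REPLY, and delivers the reply to the client's user space, where the client picks it up. This corresponds to step 2 of the client-side flow: after the addService call returns, the client reads the response data from _aidl_reply. The details are not repeated here; see section 1.2 on how the Binder driver handles addService.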
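sendReply() passes handle -1 because a reply is not addressed by handle. The driver remembers, per server thread, which transaction that thread is currently serving (thread->transaction_stack, with the sender recorded in t->from) and routes BC_REPLY back to that sender. A toy model of this bookkeeping is below. `ToyServerThread`, `deliverTransaction`, and `routeReply` are hypothetical, and client threads are plain int ids.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Each server thread remembers which client threads are waiting on it.
struct ToyServerThread {
    std::vector<int> transactionStack;  // ids of client threads awaiting a reply
};

// BC_TRANSACTION side: the server thread picks up work sent by clientTid.
void deliverTransaction(ToyServerThread& server, int clientTid) {
    server.transactionStack.push_back(clientTid);
}

// BC_REPLY side: the handle (-1 from sendReply) is not used for routing;
// the reply goes to the sender of the transaction being answered.
std::optional<int> routeReply(ToyServerThread& server, int /*handleIgnored*/) {
    if (server.transactionStack.empty()) return std::nullopt;  // nothing to reply to
    int client = server.transactionStack.back();
    server.transactionStack.pop_back();
    return client;
}
```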

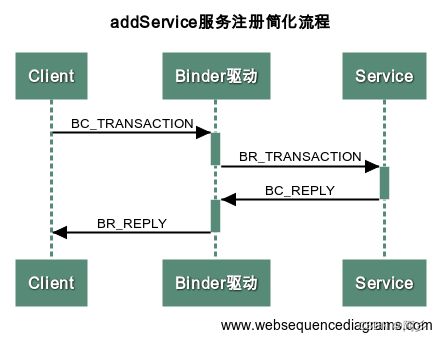

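1.4 Summary of the addService Registration Flow

Here is a short summary of the complete addService flow. The client sends the addService request with cmd=BC_TRANSACTION, and the request goes to the Binder driver first. After processing it, the driver forwards the request to the server with cmd=BR_TRANSACTION. The server handles the request itself and sends the addService result back to the driver with cmd=BC_REPLY. After processing the server's response, the driver forwards it to the client with cmd=BR_REPLY. Finally, the client receives the response to its addService registration, and the flow ends.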

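Notice that every message sent to the Binder driver uses a BC_XXX cmd, and every message sent out by the Binder driver uses a BR_XXX cmd. In this chapter we analyzed the addService registration flow in detail, covering the client, the server, and the Binder driver. The summary of the driver's part is admittedly not detailed enough. That's fine: later chapters will analyze in detail how the Binder driver processes BC_XXX commands.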
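The BC/BR naming rule can be captured as a symbolic trace of the four hops described above. In the minimal sketch below, the `Hop` struct, `driverRewrites`, and `addServiceTrace` are hypothetical, and the strings are only labels. For brevity it also omits the BR_TRANSACTION_COMPLETE acknowledgment that the driver returns to each sender of BC_TRANSACTION and BC_REPLY.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One hop of the addService round trip.
struct Hop { std::string from, to, cmd; };

// The driver's renaming of each command on delivery.
std::string driverRewrites(const std::string& bc) {
    if (bc == "BC_TRANSACTION") return "BR_TRANSACTION";
    if (bc == "BC_REPLY") return "BR_REPLY";
    return "";  // other commands are outside this sketch
}

std::vector<Hop> addServiceTrace() {
    std::vector<Hop> t;
    t.push_back({"client", "driver", "BC_TRANSACTION"});
    t.push_back({"driver", "servicemanager", driverRewrites("BC_TRANSACTION")});
    t.push_back({"servicemanager", "driver", "BC_REPLY"});
    t.push_back({"driver", "client", driverRewrites("BC_REPLY")});
    return t;
}
```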

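2 References

1. Binder Series 5: Registering a Service (addService)
2. In-Depth Analysis of the Android Binder Driver
3. Android 12 (S) Binder (Part 1)
4. Android 10.0 Binder Communication Principles (6): How Binder Data Reaches Its Target
5. Must-Read | Thoroughly Understanding the Android Binder Communication Architecture (Part 2)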