A First Look at the Dinky 0.7.0 Source Code


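1. Introduction to Dinky

Dinky 0.7.0 was released on November 25, 2022.

Dinky is an acronym for "Data Integrate No Knotty", meaning "easy to build unified batch-and-stream platforms and applications".

Flink is a widely used big data computing framework that unifies batch and stream processing on top of a streaming engine. Its focus is the low-level implementation of stream computation, optimal cluster operation, and designs for efficient execution.

Dinky is an application platform built on Flink:

- Its core feature today is a one-stop real-time computing platform that extends Flink, primarily through Flink SQL, to connect OLTP, OLAP, data lake, and many other frameworks.

- It is dedicated to building and practicing unified batch-stream and lakehouse architectures, so that development and integration testing can be done directly on the platform.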

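2. The Dinky Management Console

The development side of the Dinky 0.7.0 real-time computing platform consists of six modules: Data Development, Operations Center, Metadata Center, Registration Center, Authentication Center, and Configuration Center.

In practice, the core workflow is: configure the Flink runtime environment in the "Registration Center", write the Flink SQL in "Data Development", submit it to the chosen environment for execution, and watch the job status in the "Operations Center".

For descriptive material, see the introduction at: http://www.dlink.top/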

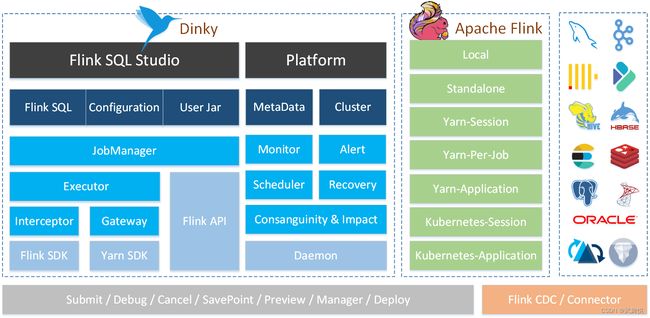

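3. Dinky Architecture

Functionally, Dinky consists of two major parts: Flink SQL Studio and Platform.

3.1 Flink SQL Studio

This part is responsible for developing and debugging FlinkSQL. Once the final SQL logic and job configuration are settled, the job publishing feature automatically registers the final job for the test or production environment in the Operations Center. It also provides version management, separating development from operations to keep production stable.

It is built on Flink: the user submits Flink SQL or a Jar package (Jar submission currently supports only Yarn-Application and K8S-Application), Dinky converts it into a Flink job and submits it to the Flink execution environment, and the UI reports the results returned by that environment.

For a Flink SQL development platform, this is the core functionality.

3.2 Platform

This is a supporting module within Dinky that manages metadata, scheduled tasks, alerts, and similar concerns.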

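4. Reading the Core FlinkSQL Source Code

4.1 Tracing SQL Execution

Enter a valid FlinkSQL script, choose "Local" as the execution mode in the right-hand panel, and click "Execute current SQL", as shown in the figure below:

The full SQL is shown here: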

    CREATE TABLE source
    (
        emp_no INT,
        birth_date DATE,
        first_name STRING,
        last_name STRING,
        gender STRING,
        hire_date DATE,
        aa STRING,
        PRIMARY KEY (emp_no) NOT ENFORCED
    ) WITH (
        'connector' = 'binlog-x'
        ,'username' = 'dlink'
        ,'password' = 'Dlink20221125'
        ,'cat' = 'insert,delete,update'
        ,'url' = 'jdbc:mysql://mysql-dlink:3306/dlink?useUnicode=true&characterEncoding=UTF-8&useSSL=false'
        ,'host' = 'mysql-dlink'
        ,'port' = '3306'
        ,'table' = 'ztt_employees'
        ,'timestamp-format.standard' = 'SQL'
    );

    CREATE TABLE sink
    (
        emp_no INT,
        birth_date DATE,
        first_name STRING,
        last_name STRING,
        gender STRING,
        hire_date DATE,
        PRIMARY KEY (emp_no) NOT ENFORCED
    ) WITH (
        'connector' = 'mysql-x'
        ,'url' = 'jdbc:mysql://mysql-dlink:3306/dlink?useUnicode=true&characterEncoding=UTF-8&useSSL=false'
        ,'table-name' = 'ztt_employees_sink'
        ,'username' = 'dlink'
        ,'password' = 'Dlink20221125'
        ,'sink.buffer-flush.max-rows' = '1024' -- number of rows per batch write, default: 1024
        ,'sink.buffer-flush.interval' = '10000' -- batch write interval, default: 10000 ms
        ,'sink.all-replace' = 'true' -- explained below (other relational databases behave similarly); default: false. Only takes effect when a PRIMARY KEY is defined; otherwise plain append statements are generated
        -- sink.all-replace = 'true' generates e.g.: INSERT INTO `result3`(`mid`, `mbb`, `sid`, `sbb`) VALUES (?, ?, ?, ?) ON DUPLICATE KEY UPDATE `mid`=VALUES(`mid`), `mbb`=VALUES(`mbb`), `sid`=VALUES(`sid`), `sbb`=VALUES(`sbb`). All values are replaced.
        -- sink.all-replace = 'false' generates e.g.: INSERT INTO `result3`(`mid`, `mbb`, `sid`, `sbb`) VALUES (?, ?, ?, ?) ON DUPLICATE KEY UPDATE `mid`=IFNULL(VALUES(`mid`),`mid`), `mbb`=IFNULL(VALUES(`mbb`),`mbb`), `sid`=IFNULL(VALUES(`sid`),`sid`), `sbb`=IFNULL(VALUES(`sbb`),`sbb`). If the new value is null and the old value in the database is not null, the old value is not overwritten.
        ,'sink.parallelism' = '1' -- parallelism for writing results, default: null
    );

    insert into sink
    select emp_no, birth_date, first_name, last_name, gender, hire_date
    from source u;

The entry point is com.dlink.job.JobManager in the dlink-core subproject:

    public class JobManager {

        public JobResult executeSql(String statement) {
            // ...... (body walked through step by step below)
        }

    }

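executeSql begins by initializing a Job object.

It then keeps this Job object in a ThreadLocal and calls the ready method, which in effect calls the init method of Job2MysqlHandler to convert the Job object into a History object and persist it to the corresponding table (dlink_history).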
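
The lifecycle just described (create a Job, park it in a ThreadLocal, persist a history row when ready is called) can be pictured with a minimal sketch. Everything below is a hypothetical stand-in: the class name, the fields, and the in-memory list that plays the role of the dlink_history table are assumptions for illustration, not Dinky's actual Job, History, or Job2MysqlHandler code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the Job lifecycle; not Dinky's real classes.
class JobLifecycleSketch {

    // Minimal job record; the fields are assumptions for illustration.
    static final class Job {
        final String statement;
        Integer historyId; // set once the job has been persisted

        Job(String statement) {
            this.statement = statement;
        }
    }

    // In-memory substitute for the dlink_history table.
    static final List<String> HISTORY_TABLE = new ArrayList<>();

    // One Job per request thread, so later steps can reach it without passing it around.
    private static final ThreadLocal<Job> CURRENT = new ThreadLocal<>();

    // Counterpart of the initialization step: create the Job and bind it to this thread.
    static Job init(String statement) {
        Job job = new Job(statement);
        CURRENT.set(job);
        return job;
    }

    // Counterpart of "ready": persist the current job as a history row.
    static void ready() {
        Job job = CURRENT.get();
        HISTORY_TABLE.add(job.statement);
        job.historyId = HISTORY_TABLE.size();
    }

    static Job current() {
        return CURRENT.get();
    }

    // Clear the thread binding once the request is finished.
    static void close() {
        CURRENT.remove();
    }
}
```

Binding the Job to the thread means the handler can look it up on its own, at the cost of having to clear the binding when the request ends.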

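Next, the FlinkSQL is pre-parsed.

Pre-parsing produces a JobParam object. Its structure is simple, with four members:

    public class JobParam {
        // all SQL statements
        private List<String> statements;
        // DDL statements
        private List<StatementParam> ddl;
        // statements that can be executed immediately
        private List<StatementParam> trans;
        // statements executed in the background
        private List<StatementParam> execute;
        //......
    }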

As you might guess, the rest of the SQL execution method processes the different members of the pre-parsed JobParam object in turn.
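
To make the idea of pre-parsing concrete, here is a minimal sketch that splits a script into statements and sorts them into the three buckets. The classification rule (leading keyword INSERT or SELECT goes to trans, EXECUTE goes to execute, everything else to ddl) and the naive split on semicolons are assumptions made for this example; Dinky's real pre-parser has its own SQL type detection and may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of pre-parsing; the bucket names follow JobParam, the rules are assumed.
class PreParseSketch {
    final List<String> statements = new ArrayList<>();
    final List<String> ddl = new ArrayList<>();
    final List<String> trans = new ArrayList<>();
    final List<String> execute = new ArrayList<>();

    static PreParseSketch parse(String script) {
        PreParseSketch param = new PreParseSketch();
        // Naive split: assumes no semicolons inside string literals or comments.
        for (String raw : script.split(";")) {
            String sql = raw.trim();
            if (sql.isEmpty()) {
                continue;
            }
            param.statements.add(sql);
            String head = sql.toUpperCase(Locale.ROOT);
            if (head.startsWith("INSERT") || head.startsWith("SELECT")) {
                param.trans.add(sql);      // immediately executable
            } else if (head.startsWith("EXECUTE")) {
                param.execute.add(sql);    // background execution
            } else {
                param.ddl.add(sql);        // treated as DDL by default
            }
        }
        return param;
    }
}
```

For the example script in this article, such a rule would put the two CREATE TABLE statements into ddl and the insert into trans.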

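4.1.1 SQL Execution: DDL

Below is the DDL execution fragment. It is easy to follow, although the internals of executor deserve a closer look. That is skipped for now; try to imagine for yourself how it executes:

    for (StatementParam item : jobParam.getDdl()) {
        currentSql = item.getValue();
        executor.executeSql(item.getValue());
    }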

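4.1.2 SQL Execution: Immediately Executable SQL

This step executes the following SQL:

    insert into sink
    select emp_no, birth_date, first_name, last_name, gender, hire_date
    from source u;

This part is somewhat more complex: processing differs depending on the values of the two boolean variables "useStatementSet" and "useGateway". I have not dug into the details, so they are skipped for now. For the SQL example above, the values are:

    useStatementSet = false;
    useGateway = false;

So the following path is taken: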

    // Excerpt from JobManager (the method shown earlier), not JobParam
    public class JobManager {

        public JobResult executeSql(String statement) {
            // ......
            for (StatementParam item : jobParam.getTrans()) {
                currentSql = item.getValue();
                FlinkInterceptorResult flinkInterceptorResult =
                        FlinkInterceptor.build(executor, item.getValue());
                if (Asserts.isNotNull(flinkInterceptorResult.getTableResult())) {
                    if (config.isUseResult()) {
                        IResult result = ResultBuilder
                                .build(item.getType(), config.getMaxRowNum(), config.isUseChangeLog(),
                                        config.isUseAutoCancel(), executor.getTimeZone())
                                .getResult(flinkInterceptorResult.getTableResult());
                        job.setResult(result);
                    }
                } else {
                    if (!flinkInterceptorResult.isNoExecute()) {
                        TableResult tableResult = executor.executeSql(item.getValue());
                        if (tableResult.getJobClient().isPresent()) {
                            job.setJobId(tableResult.getJobClient().get().getJobID().toHexString());
                            job.setJids(new ArrayList<String>() {
                                {
                                    add(job.getJobId());
                                }
                            });
                        }
                        if (config.isUseResult()) {
                            IResult result =
                                    ResultBuilder.build(item.getType(), config.getMaxRowNum(),
                                            config.isUseChangeLog(), config.isUseAutoCancel(),
                                            executor.getTimeZone()).getResult(tableResult);
                            job.setResult(result);
                        }
                    }
                }
                // Only can submit the first of insert sql, when not use statement set.
                break;
            }
            // ......
        }

    }
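
One detail in the loop above is easy to miss: the unconditional break means that only the first statement in trans is submitted on this path. The sketch below isolates that behavior. The class and method names are hypothetical, and the statement-set branch (all inserts submitted together as one batch) is an assumption about the path this article does not trace.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch; not Dinky's actual classes.
class FirstInsertOnlySketch {

    // Returns the planned submissions, one inner list per submission.
    static List<List<String>> plan(List<String> trans, boolean useStatementSet) {
        List<List<String>> submissions = new ArrayList<>();
        if (trans.isEmpty()) {
            return submissions;
        }
        if (useStatementSet) {
            // Assumption: with a statement set, all inserts go out together as one job.
            submissions.add(new ArrayList<>(trans));
            return submissions;
        }
        for (String sql : trans) {
            List<String> single = new ArrayList<>();
            single.add(sql);
            submissions.add(single);
            // Mirrors the unconditional break in the excerpt: later statements are never reached.
            break;
        }
        return submissions;
    }
}
```

In other words, a script with several insert statements and useStatementSet = false would only run the first one.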

Here FlinkInterceptor first builds a FlinkInterceptorResult. The figure here traces the call chain:

It looks like a complicated process, but in fact it merely constructs a simple Java object.
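
How the caller reacts to that simple object can be reduced to three outcomes, which the following sketch models. The names are hypothetical and a String stands in for Flink's TableResult; only the two accessors visible in the excerpt (a table result and a no-execute flag) are taken from the source.

```java
import java.util.function.Function;

// Hypothetical stand-ins: String replaces TableResult, and the class names are illustrative.
class InterceptorBranchSketch {

    // A plain value object, analogous in spirit to FlinkInterceptorResult.
    static final class InterceptorResult {
        final boolean noExecute;
        final String tableResult; // null when the interceptor produced no result itself

        InterceptorResult(boolean noExecute, String tableResult) {
            this.noExecute = noExecute;
            this.tableResult = tableResult;
        }
    }

    // Mirrors the three outcomes in the excerpt.
    static String handle(InterceptorResult r, String sql, Function<String, String> executor) {
        if (r.tableResult != null) {
            return r.tableResult;       // the interceptor already produced a result
        }
        if (!r.noExecute) {
            return executor.apply(sql); // fall through to the normal executor
        }
        return null;                    // statement fully handled, nothing left to run
    }
}
```

The example insert statement falls into the second case, which is why the walkthrough continues with executor.executeSql.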

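In the end the statement is executed much like the DDL above, except that the return value is used here:

    TableResult tableResult = executor.executeSql(item.getValue());

Stepping in, as the figure below shows, this calls into the Flink Table API implementation, which varies with the Flink version. Its logic is to perform the appropriate action according to the kind of SQL operation:

The branch taken here is:

    if (operation instanceof ModifyOperation) {
        return executeInternal(Collections.singletonList((ModifyOperation) operation));
    }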
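
The dispatch pattern behind this branch can be illustrated in isolation. The sketch below uses tiny stand-in types and returns strings instead of real results; Flink's actual Operation hierarchy is much larger, and the CreateTableOperation branch is included only as one illustrative alternative.

```java
import java.util.Collections;
import java.util.List;

// Stand-in types for illustration; not Flink's real classes.
class OperationDispatchSketch {
    interface Operation {}
    static final class ModifyOperation implements Operation {}
    static final class CreateTableOperation implements Operation {}

    // Chooses an action according to the concrete operation type.
    static String executeInternal(Operation operation) {
        if (operation instanceof ModifyOperation) {
            // INSERT-like statements are wrapped in a single-element list and run as a job.
            return executeInternal(Collections.singletonList((ModifyOperation) operation));
        } else if (operation instanceof CreateTableOperation) {
            return "catalog updated";
        }
        throw new IllegalArgumentException(
                "Unsupported operation: " + operation.getClass().getSimpleName());
    }

    // Batch entry point shared by single inserts and multi-insert submissions.
    static String executeInternal(List<ModifyOperation> operations) {
        return "submitted job with " + operations.size() + " sink(s)";
    }
}
```

Routing a single insert through the list-based overload means one code path handles both one sink and many.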

The program then carried out the operation and produced a large amount of log output:

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

[dlink] 2022-11-28 15:58:18.780 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.cpu.cores required for local execution is not set, setting it to the maximal possible value.

[dlink] 2022-11-28 15:58:18.780 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.memory.task.heap.size required for local execution is not set, setting it to the maximal possible value.

[dlink] 2022-11-28 15:58:18.780 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.memory.task.off-heap.size required for local execution is not set, setting it to the maximal possible value.

[dlink] 2022-11-28 15:58:18.781 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.memory.network.min required for local execution is not set, setting it to its default value 64 mb.

[dlink] 2022-11-28 15:58:18.781 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.memory.network.max required for local execution is not set, setting it to its default value 64 mb.

[dlink] 2022-11-28 15:58:18.781 INFO 13780 --- [io-18888-exec-8] e.taskexecutor.TaskExecutorResourceUtils: The configuration option taskmanager.memory.managed.size required for local execution is not set, setting it to its default value 128 mb.

[dlink] 2022-11-28 15:58:18.789 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Starting Flink Mini Cluster

[dlink] 2022-11-28 15:58:18.791 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Starting Metrics Registry

[dlink] 2022-11-28 15:58:18.844 INFO 13780 --- [io-18888-exec-8] flink.runtime.metrics.MetricRegistryImpl: No metrics reporter configured, no metrics will be exposed/reported.

[dlink] 2022-11-28 15:58:18.844 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Starting RPC Service(s)

[dlink] 2022-11-28 15:58:18.857 INFO 13780 --- [io-18888-exec-8] ink.runtime.rpc.akka.AkkaRpcServiceUtils: Trying to start local actor system

[dlink] 2022-11-28 15:58:19.146 INFO 13780 --- [lt-dispatcher-3] akka.event.slf4j.Slf4jLogger : Slf4jLogger started

[dlink] 2022-11-28 15:58:19.435 INFO 13780 --- [io-18888-exec-8] ink.runtime.rpc.akka.AkkaRpcServiceUtils: Actor system started at akka://flink

[dlink] 2022-11-28 15:58:19.445 INFO 13780 --- [io-18888-exec-8] ink.runtime.rpc.akka.AkkaRpcServiceUtils: Trying to start local actor system

[dlink] 2022-11-28 15:58:19.452 INFO 13780 --- [flink-metrics-2] akka.event.slf4j.Slf4jLogger : Slf4jLogger started

[dlink] 2022-11-28 15:58:19.523 INFO 13780 --- [io-18888-exec-8] ink.runtime.rpc.akka.AkkaRpcServiceUtils: Actor system started at akka://flink-metrics

[dlink] 2022-11-28 15:58:19.540 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.rpc.akka.AkkaRpcService: Starting RPC endpoint for org.apache.flink.runtime.metrics.dump.MetricQueryService at akka://flink-metrics/user/rpc/MetricQueryService .

[dlink] 2022-11-28 15:58:19.557 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Starting high-availability services

[dlink] 2022-11-28 15:58:19.571 INFO 13780 --- [io-18888-exec-8] org.apache.flink.runtime.blob.BlobServer: Created BLOB server storage directory C:\Users\DELL\AppData\Local\Temp\blobStore-8ce9bd8a-94da-4a5a-ab28-3bb8a2e5411f

[dlink] 2022-11-28 15:58:19.576 INFO 13780 --- [io-18888-exec-8] org.apache.flink.runtime.blob.BlobServer: Started BLOB server at 0.0.0.0:64628 - max concurrent requests: 50 - max backlog: 1000

[dlink] 2022-11-28 15:58:19.579 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.blob.PermanentBlobCache: Created BLOB cache storage directory C:\Users\DELL\AppData\Local\Temp\blobStore-5ce84da4-63b5-40af-83d7-66f0380e3782

[dlink] 2022-11-28 15:58:19.581 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.blob.TransientBlobCache: Created BLOB cache storage directory C:\Users\DELL\AppData\Local\Temp\blobStore-58cbdaf5-cd1d-47f4-a625-e5b918502216

[dlink] 2022-11-28 15:58:19.582 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Starting 1 TaskManger(s)

[dlink] 2022-11-28 15:58:19.586 INFO 13780 --- [io-18888-exec-8] k.runtime.taskexecutor.TaskManagerRunner: Starting TaskManager with ResourceID: b7ccdd8d-ecb6-47fa-8363-b0d77d6d02ff

[dlink] 2022-11-28 15:58:19.613 INFO 13780 --- [io-18888-exec-8] runtime.taskexecutor.TaskManagerServices: Temporary file directory 'C:\Users\DELL\AppData\Local\Temp': total 353 GB, usable 57 GB (16.15% usable)

[dlink] 2022-11-28 15:58:19.618 INFO 13780 --- [io-18888-exec-8] k.runtime.io.disk.FileChannelManagerImpl: FileChannelManager uses directory C:\Users\DELL\AppData\Local\Temp\flink-io-e6c7759f-98bc-4503-aec9-509aef0a7d0f for spill files.

[dlink] 2022-11-28 15:58:19.640 INFO 13780 --- [io-18888-exec-8] k.runtime.io.disk.FileChannelManagerImpl: FileChannelManager uses directory C:\Users\DELL\AppData\Local\Temp\flink-netty-shuffle-93f548fe-1a11-4e26-9120-b64db72af69d for spill files.

[dlink] 2022-11-28 15:58:19.671 INFO 13780 --- [io-18888-exec-8] time.io.network.buffer.NetworkBufferPool: Allocated 64 MB for network buffer pool (number of memory segments: 2048, bytes per segment: 32768).

[dlink] 2022-11-28 15:58:19.678 INFO 13780 --- [io-18888-exec-8] ntime.io.network.NettyShuffleEnvironment: Starting the network environment and its components.

[dlink] 2022-11-28 15:58:19.680 INFO 13780 --- [io-18888-exec-8] link.runtime.taskexecutor.KvStateService: Starting the kvState service and its components.

[dlink] 2022-11-28 15:58:19.704 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.rpc.akka.AkkaRpcService: Starting RPC endpoint for org.apache.flink.runtime.taskexecutor.TaskExecutor at akka://flink/user/rpc/taskmanager_0 .

[dlink] 2022-11-28 15:58:19.722 INFO 13780 --- [lt-dispatcher-4] ime.taskexecutor.DefaultJobLeaderService: Start job leader service.

[dlink] 2022-11-28 15:58:19.723 INFO 13780 --- [lt-dispatcher-4] apache.flink.runtime.filecache.FileCache: User file cache uses directory C:\Users\DELL\AppData\Local\Temp\flink-dist-cache-fa00336d-132f-4e09-9d1d-f0b18e63f00f

[dlink] 2022-11-28 15:58:19.772 INFO 13780 --- [io-18888-exec-8] untime.dispatcher.DispatcherRestEndpoint: Starting rest endpoint.

[dlink] 2022-11-28 15:58:19.985 WARN 13780 --- [io-18888-exec-8] flink.runtime.webmonitor.WebMonitorUtils: Log file environment variable 'log.file' is not set.

[dlink] 2022-11-28 15:58:19.985 WARN 13780 --- [io-18888-exec-8] flink.runtime.webmonitor.WebMonitorUtils: JobManager log files are unavailable in the web dashboard. Log file location not found in environment variable 'log.file' or configuration key 'web.log.path'.

[dlink] 2022-11-28 15:58:20.692 INFO 13780 --- [io-18888-exec-8] untime.dispatcher.DispatcherRestEndpoint: Rest endpoint listening at localhost:64696

[dlink] 2022-11-28 15:58:20.693 INFO 13780 --- [io-18888-exec-8] ity.nonha.embedded.EmbeddedLeaderService: Proposing leadership to contender http://localhost:64696

[dlink] 2022-11-28 15:58:20.695 INFO 13780 --- [io-18888-exec-8] untime.dispatcher.DispatcherRestEndpoint: Web frontend listening at http://localhost:64696.

[dlink] 2022-11-28 15:58:20.695 INFO 13780 --- [ter-io-thread-1] untime.dispatcher.DispatcherRestEndpoint: http://localhost:64696 was granted leadership with leaderSessionID=95efea4d-38d0-4919-b59a-5adace56de6e

[dlink] 2022-11-28 15:58:20.695 INFO 13780 --- [ter-io-thread-1] ity.nonha.embedded.EmbeddedLeaderService: Received confirmation of leadership for leader http://localhost:64696 , session=95efea4d-38d0-4919-b59a-5adace56de6e

[dlink] 2022-11-28 15:58:20.714 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.rpc.akka.AkkaRpcService: Starting RPC endpoint for org.apache.flink.runtime.resourcemanager.StandaloneResourceManager at akka://flink/user/rpc/resourcemanager_1 .

[dlink] 2022-11-28 15:58:20.730 INFO 13780 --- [io-18888-exec-8] ity.nonha.embedded.EmbeddedLeaderService: Proposing leadership to contender LeaderContender: DefaultDispatcherRunner

[dlink] 2022-11-28 15:58:20.731 INFO 13780 --- [lt-dispatcher-4] ity.nonha.embedded.EmbeddedLeaderService: Proposing leadership to contender LeaderContender: StandaloneResourceManager

[dlink] 2022-11-28 15:58:20.733 INFO 13780 --- [lt-dispatcher-4] esourcemanager.StandaloneResourceManager: ResourceManager akka://flink/user/rpc/resourcemanager_1 was granted leadership with fencing token 8d83085a2ca2d9a9f94cd673edab420b

[dlink] 2022-11-28 15:58:20.735 INFO 13780 --- [io-18888-exec-8] he.flink.runtime.minicluster.MiniCluster: Flink Mini Cluster started successfully

[dlink] 2022-11-28 15:58:20.738 INFO 13780 --- [ter-io-thread-2] er.runner.SessionDispatcherLeaderProcess: Start SessionDispatcherLeaderProcess.

[dlink] 2022-11-28 15:58:20.738 INFO 13780 --- [lt-dispatcher-4] ourcemanager.slotmanager.SlotManagerImpl: Starting the SlotManager.

[dlink] 2022-11-28 15:58:20.739 INFO 13780 --- [ter-io-thread-5] er.runner.SessionDispatcherLeaderProcess: Recover all persisted job graphs.

[dlink] 2022-11-28 15:58:20.739 INFO 13780 --- [ter-io-thread-5] er.runner.SessionDispatcherLeaderProcess: Successfully recovered 0 persisted job graphs.

[dlink] 2022-11-28 15:58:20.743 INFO 13780 --- [ter-io-thread-6] ity.nonha.embedded.EmbeddedLeaderService: Received confirmation of leadership for leader akka://flink/user/rpc/resourcemanager_1 , session=f94cd673-edab-420b-8d83-085a2ca2d9a9

[dlink] 2022-11-28 15:58:20.745 INFO 13780 --- [lt-dispatcher-4] .flink.runtime.taskexecutor.TaskExecutor: Connecting to ResourceManager akka://flink/user/rpc/resourcemanager_1(8d83085a2ca2d9a9f94cd673edab420b).

[dlink] 2022-11-28 15:58:20.747 INFO 13780 --- [ter-io-thread-5] he.flink.runtime.rpc.akka.AkkaRpcService: Starting RPC endpoint for org.apache.flink.runtime.dispatcher.StandaloneDispatcher at akka://flink/user/rpc/dispatcher_2 .

[dlink] 2022-11-28 15:58:20.812 INFO 13780 --- [ter-io-thread-5] ity.nonha.embedded.EmbeddedLeaderService: Received confirmation of leadership for leader akka://flink/user/rpc/dispatcher_2 , session=270b76ae-7c83-4091-9eff-446752ec203c

[dlink] 2022-11-28 15:58:20.823 INFO 13780 --- [lt-dispatcher-4] .flink.runtime.taskexecutor.TaskExecutor: Resolved ResourceManager address, beginning registration

[dlink] 2022-11-28 15:58:20.830 INFO 13780 --- [lt-dispatcher-5] esourcemanager.StandaloneResourceManager: Registering TaskManager with ResourceID b7ccdd8d-ecb6-47fa-8363-b0d77d6d02ff (akka://flink/user/rpc/taskmanager_0) at ResourceManager

[dlink] 2022-11-28 15:58:20.834 INFO 13780 --- [lt-dispatcher-5] .flink.runtime.taskexecutor.TaskExecutor: Successful registration at resource manager akka://flink/user/rpc/resourcemanager_1 under registration id 92e92dfaf5c5dad1b03109a5a1ad45e9.

[dlink] 2022-11-28 15:58:20.834 INFO 13780 --- [lt-dispatcher-3] .runtime.dispatcher.StandaloneDispatcher: Received JobGraph submission 8f4b5ced28ff4ae624d75953f7c34525 (cj_employees_binlog2mysql).

[dlink] 2022-11-28 15:58:20.835 INFO 13780 --- [lt-dispatcher-3] .runtime.dispatcher.StandaloneDispatcher: Submitting job 8f4b5ced28ff4ae624d75953f7c34525 (cj_employees_binlog2mysql).

[dlink] 2022-11-28 15:58:20.857 INFO 13780 --- [er-io-thread-12] ity.nonha.embedded.EmbeddedLeaderService: Proposing leadership to contender LeaderContender: JobManagerRunnerImpl

[dlink] 2022-11-28 15:58:20.866 INFO 13780 --- [er-io-thread-12] he.flink.runtime.rpc.akka.AkkaRpcService: Starting RPC endpoint for org.apache.flink.runtime.jobmaster.JobMaster at akka://flink/user/rpc/jobmanager_3 .

[dlink] 2022-11-28 15:58:20.874 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: Initializing job cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525).

[dlink] 2022-11-28 15:58:20.890 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: Using restart back off time strategy NoRestartBackoffTimeStrategy for cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525).

[dlink] 2022-11-28 15:58:20.931 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: Running initialization on master for job cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525).

[dlink] 2022-11-28 15:58:20.969 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: Successfully ran initialization on master in 38 ms.

[dlink] 2022-11-28 15:58:20.993 INFO 13780 --- [er-io-thread-12] heduler.adapter.DefaultExecutionTopology: Built 1 pipelined regions in 1 ms

[dlink] 2022-11-28 15:58:21.011 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: No state backend has been configured, using default (Memory / JobManager) MemoryStateBackend (data in heap memory / checkpoints to JobManager) (checkpoints: 'null', savepoints: 'null', asynchronous: TRUE, maxStateSize: 5242880)

[dlink] 2022-11-28 15:58:21.025 INFO 13780 --- [er-io-thread-12] runtime.checkpoint.CheckpointCoordinator: No checkpoint found during restore.

[dlink] 2022-11-28 15:58:21.029 INFO 13780 --- [er-io-thread-12] apache.flink.runtime.jobmaster.JobMaster: Using failover strategy org.apache.flink.runtime.executiongraph.failover.flip1.RestartPipelinedRegionFailoverStrategy@401bf7a2 for cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525).

[dlink] 2022-11-28 15:58:21.041 INFO 13780 --- [er-io-thread-12] k.runtime.jobmaster.JobManagerRunnerImpl: JobManager runner for job cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525) was granted leadership with session id 4ef38f79-c49c-4937-8a30-41086f1d39b9 at akka://flink/user/rpc/jobmanager_3.

[dlink] 2022-11-28 15:58:21.044 INFO 13780 --- [lt-dispatcher-3] apache.flink.runtime.jobmaster.JobMaster: Starting execution of job cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525) under job master id 8a3041086f1d39b94ef38f79c49c4937.

[dlink] 2022-11-28 15:58:21.045 INFO 13780 --- [lt-dispatcher-3] apache.flink.runtime.jobmaster.JobMaster: Starting scheduling with scheduling strategy [org.apache.flink.runtime.scheduler.strategy.PipelinedRegionSchedulingStrategy]

[dlink] 2022-11-28 15:58:21.046 INFO 13780 --- [lt-dispatcher-3] nk.runtime.executiongraph.ExecutionGraph: Job cj_employees_binlog2mysql (8f4b5ced28ff4ae624d75953f7c34525) switched from state CREATED to RUNNING.

[dlink] 2022-11-28 15:58:21.049 INFO 13780 --- [lt-dispatcher-3] nk.runtime.executiongraph.ExecutionGraph: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1) (9965276b02e9b289b558f50fd63910ca) switched from CREATED to SCHEDULED.

[dlink] 2022-11-28 15:58:21.059 INFO 13780 --- [lt-dispatcher-3] .runtime.jobmaster.slotpool.SlotPoolImpl: Cannot serve slot request, no ResourceManager connected. Adding as pending request [SlotRequestId{282e15decbed427f13725351361b5998}]

[dlink] 2022-11-28 15:58:21.066 INFO 13780 --- [future-thread-1] ity.nonha.embedded.EmbeddedLeaderService: Received confirmation of leadership for leader akka://flink/user/rpc/jobmanager_3 , session=4ef38f79-c49c-4937-8a30-41086f1d39b9

[dlink] 2022-11-28 15:58:21.066 INFO 13780 --- [lt-dispatcher-3] apache.flink.runtime.jobmaster.JobMaster: Connecting to ResourceManager akka://flink/user/rpc/resourcemanager_1(8d83085a2ca2d9a9f94cd673edab420b)

[dlink] 2022-11-28 15:58:21.068 INFO 13780 --- [lt-dispatcher-5] apache.flink.runtime.jobmaster.JobMaster: Resolved ResourceManager address, beginning registration

[dlink] 2022-11-28 15:58:21.070 INFO 13780 --- [lt-dispatcher-3] esourcemanager.StandaloneResourceManager: Registering job manager 8a3041086f1d39b94ef38f79c49c4937@akka://flink/user/rpc/jobmanager_3 for job 8f4b5ced28ff4ae624d75953f7c34525.

[dlink] 2022-11-28 15:58:21.071 INFO 13780 --- [lt-dispatcher-5] esourcemanager.StandaloneResourceManager: Registered job manager 8a3041086f1d39b94ef38f79c49c4937@akka://flink/user/rpc/jobmanager_3 for job 8f4b5ced28ff4ae624d75953f7c34525.

[dlink] 2022-11-28 15:58:21.073 INFO 13780 --- [lt-dispatcher-3] apache.flink.runtime.jobmaster.JobMaster: JobManager successfully registered at ResourceManager, leader id: 8d83085a2ca2d9a9f94cd673edab420b.

[dlink] 2022-11-28 15:58:21.074 INFO 13780 --- [lt-dispatcher-3] .runtime.jobmaster.slotpool.SlotPoolImpl: Requesting new slot [SlotRequestId{282e15decbed427f13725351361b5998}] and profile ResourceProfile{UNKNOWN} with allocation id 5ceba1916d4b7da69430d88b43c212fa from resource manager.

[dlink] 2022-11-28 15:58:21.075 INFO 13780 --- [lt-dispatcher-5] esourcemanager.StandaloneResourceManager: Request slot with profile ResourceProfile{UNKNOWN} for job 8f4b5ced28ff4ae624d75953f7c34525 with allocation id 5ceba1916d4b7da69430d88b43c212fa.

[dlink] 2022-11-28 15:58:21.077 INFO 13780 --- [lt-dispatcher-3] .flink.runtime.taskexecutor.TaskExecutor: Receive slot request 5ceba1916d4b7da69430d88b43c212fa for job 8f4b5ced28ff4ae624d75953f7c34525 from resource manager with leader id 8d83085a2ca2d9a9f94cd673edab420b.

[dlink] 2022-11-28 15:58:21.081 INFO 13780 --- [lt-dispatcher-3] .flink.runtime.taskexecutor.TaskExecutor: Allocated slot for 5ceba1916d4b7da69430d88b43c212fa.

[dlink] 2022-11-28 15:58:21.083 INFO 13780 --- [lt-dispatcher-3] ime.taskexecutor.DefaultJobLeaderService: Add job 8f4b5ced28ff4ae624d75953f7c34525 for job leader monitoring.

[dlink] 2022-11-28 15:58:21.085 INFO 13780 --- [er-io-thread-18] ime.taskexecutor.DefaultJobLeaderService: Try to register at job manager akka://flink/user/rpc/jobmanager_3 with leader id 4ef38f79-c49c-4937-8a30-41086f1d39b9.

[dlink] 2022-11-28 15:58:21.087 INFO 13780 --- [lt-dispatcher-2] ime.taskexecutor.DefaultJobLeaderService: Resolved JobManager address, beginning registration

[dlink] 2022-11-28 15:58:21.091 INFO 13780 --- [lt-dispatcher-2] ime.taskexecutor.DefaultJobLeaderService: Successful registration at job manager akka://flink/user/rpc/jobmanager_3 for job 8f4b5ced28ff4ae624d75953f7c34525.

[dlink] 2022-11-28 15:58:21.092 INFO 13780 --- [lt-dispatcher-2] .flink.runtime.taskexecutor.TaskExecutor: Establish JobManager connection for job 8f4b5ced28ff4ae624d75953f7c34525.

[dlink] 2022-11-28 15:58:21.098 INFO 13780 --- [lt-dispatcher-2] .flink.runtime.taskexecutor.TaskExecutor: Offer reserved slots to the leader of job 8f4b5ced28ff4ae624d75953f7c34525.

[dlink] 2022-11-28 15:58:21.105 INFO 13780 --- [lt-dispatcher-3] nk.runtime.executiongraph.ExecutionGraph: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1) (9965276b02e9b289b558f50fd63910ca) switched from SCHEDULED to DEPLOYING.

[dlink] 2022-11-28 15:58:21.105 INFO 13780 --- [lt-dispatcher-3] nk.runtime.executiongraph.ExecutionGraph: Deploying Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1) (attempt #0) with attempt id 9965276b02e9b289b558f50fd63910ca to b7ccdd8d-ecb6-47fa-8363-b0d77d6d02ff @ account.jetbrains.com (dataPort=-1) with allocation id 5ceba1916d4b7da69430d88b43c212fa

[dlink] 2022-11-28 15:58:21.112 INFO 13780 --- [lt-dispatcher-2] time.taskexecutor.slot.TaskSlotTableImpl: Activate slot 5ceba1916d4b7da69430d88b43c212fa.

[dlink] 2022-11-28 15:58:21.142 INFO 13780 --- [lt-dispatcher-2] .flink.runtime.taskexecutor.TaskExecutor: Received task Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1)#0 (9965276b02e9b289b558f50fd63910ca), deploy into slot with allocation id 5ceba1916d4b7da69430d88b43c212fa.

[dlink] 2022-11-28 15:58:21.143 INFO 13780 --- [_date]) (1/1)#0] rg.apache.flink.runtime.taskmanager.Task: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1)#0 (9965276b02e9b289b558f50fd63910ca) switched from CREATED to DEPLOYING.

[dlink] 2022-11-28 15:58:21.144 INFO 13780 --- [lt-dispatcher-2] time.taskexecutor.slot.TaskSlotTableImpl: Activate slot 5ceba1916d4b7da69430d88b43c212fa.

[dlink] 2022-11-28 15:58:21.147 INFO 13780 --- [_date]) (1/1)#0] rg.apache.flink.runtime.taskmanager.Task: Loading JAR files for task Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1)#0 (9965276b02e9b289b558f50fd63910ca) [DEPLOYING].

[dlink] 2022-11-28 15:58:21.148 INFO 13780 --- [_date]) (1/1)#0] rg.apache.flink.runtime.taskmanager.Task: Registering task at network: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1)#0 (9965276b02e9b289b558f50fd63910ca) [DEPLOYING].

[dlink] 2022-11-28 15:58:21.159 INFO 13780 --- [_date]) (1/1)#0] flink.streaming.runtime.tasks.StreamTask: No state backend has been configured, using default (Memory / JobManager) MemoryStateBackend (data in heap memory / checkpoints to JobManager) (checkpoints: 'null', savepoints: 'null', asynchronous: TRUE, maxStateSize: 5242880)

[dlink] 2022-11-28 15:58:21.166 INFO 13780 --- [_date]) (1/1)#0] rg.apache.flink.runtime.taskmanager.Task: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1)#0 (9965276b02e9b289b558f50fd63910ca) switched from DEPLOYING to RUNNING.

[dlink] 2022-11-28 15:58:21.168 INFO 13780 --- [lt-dispatcher-2] nk.runtime.executiongraph.ExecutionGraph: Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) -> DropUpdateBefore -> Calc(select=[emp_no, birth_date, first_name, last_name, gender, hire_date]) -> Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) (1/1) (9965276b02e9b289b558f50fd63910ca) switched from DEPLOYING to RUNNING.

[dlink] 2022-11-28 15:58:21.183 WARN 13780 --- [_date]) (1/1)#0] org.apache.flink.metrics.MetricGroup : The operator name Sink: Sink(table=[default_catalog.default_database.sink], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date]) exceeded the 80 characters length limit and was truncated.

[dlink] 2022-11-28 15:58:21.221 WARN 13780 --- [_date]) (1/1)#0] org.apache.flink.metrics.MetricGroup : The operator name Source: TableSourceScan(table=[[default_catalog, default_database, source]], fields=[emp_no, birth_date, first_name, last_name, gender, hire_date, aa]) exceeded the 80 characters length limit and was truncated.

[dlink] 2022-11-28 15:58:25.630 INFO 13780 --- [_date]) (1/1)#0] .chunjun.sink.DtOutputFormatSinkFunction: Start initialize output format state

[dlink] 2022-11-28 15:58:26.109 INFO 13780 --- [_date]) (1/1)#0] .chunjun.sink.DtOutputFormatSinkFunction: Is restored:false

[dlink] 2022-11-28 15:58:26.109 INFO 13780 --- [_date]) (1/1)#0] .chunjun.sink.DtOutputFormatSinkFunction: End initialize output format state

[dlink] 2022-11-28 15:58:26.124 INFO 13780 --- [Pool-1-thread-1] jun.connector.jdbc.sink.JdbcOutputFormat: [SharedInfo.threadJdbcOutput]: Thread[pool-asyncRetryPool-1-thread-1,5,Flink Task Threads]

[dlink] 2022-11-28 15:58:26.175 INFO 13780 --- [_date]) (1/1)#0] jun.connector.jdbc.sink.JdbcOutputFormat: write sql:INSERT INTO `ztt_employees_sink`(`emp_no`, `birth_date`, `first_name`, `last_name`, `gender`, `hire_date`) VALUES (:emp_no, :birth_date, :first_name, :last_name, :gender, :hire_date) ON DUPLICATE KEY UPDATE `emp_no`=VALUES(`emp_no`), `birth_date`=VALUES(`birth_date`), `first_name`=VALUES(`first_name`), `last_name`=VALUES(`last_name`), `gender`=VALUES(`gender`), `hire_date`=VALUES(`hire_date`)

[dlink] 2022-11-28 15:58:26.838 INFO 13780 --- [isson-netty-4-6] nnection.pool.MasterPubSubConnectionPool: 1 connections initialized for vert-redis/192.168.123.216:7006

[dlink] 2022-11-28 15:58:26.871 INFO 13780 --- [sson-netty-4-20] son.connection.pool.MasterConnectionPool: 24 connections initialized for vert-redis/192.168.123.216:7006

[dlink] 2022-11-28 15:58:26.963 INFO 13780 --- [_date]) (1/1)#0] jun.connector.jdbc.sink.JdbcOutputFormat: subTask[0}] wait finished

[dlink] 2022-11-28 15:58:27.060 INFO 13780 --- [isson-netty-7-6] nnection.pool.MasterPubSubConnectionPool: 1 connections initialized for vert-redis/192.168.123.216:7006

[dlink] 2022-11-28 15:58:27.089 INFO 13780 --- [sson-netty-7-20] son.connection.pool.MasterConnectionPool: 24 connections initialized for vert-redis/192.168.123.216:7006

[dlink] 2022-11-28 15:58:27.198 INFO 13780 --- [_date]) (1/1)#0] n.connector.mysql.sink.MysqlOutputFormat: [MysqlOutputFormat] open successfully,

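4.1.3 SQL Execution: Updating the Result Status

Depending on the SQL execution status, fields in the relevant tables are updated. This will be covered in a later addition.

4.2 Hooking into FlinkSQL Execution

As mentioned above, executing FlinkSQL calls the standard Flink Table API. An insert statement ultimately calls the executeInternal method of the Flink Table API: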

    public class TableEnvironmentImpl implements TableEnvironmentInternal {

        @Override
        public TableResult executeInternal(List<ModifyOperation> operations) {
            List<Transformation<?>> transformations = translate(operations);
            List<String> sinkIdentifierNames = extractSinkIdentifierNames(operations);
            String jobName = getJobName("insert-into_" + String.join(",", sinkIdentifierNames));
            Pipeline pipeline = execEnv.createPipeline(transformations, tableConfig, jobName);
            try {
                JobClient jobClient = execEnv.executeAsync(pipeline);
                TableSchema.Builder builder = TableSchema.builder();
                Object[] affectedRowCounts = new Long[operations.size()];
                for (int i = 0; i < operations.size(); ++i) {
                    // use sink identifier name as field name
                    builder.field(sinkIdentifierNames.get(i), DataTypes.BIGINT());
                    affectedRowCounts[i] = -1L;
                }
                return TableResultImpl.builder()
                        .jobClient(jobClient)
                        .resultKind(ResultKind.SUCCESS_WITH_CONTENT)
                        .tableSchema(builder.build())
                        .data(
                                new InsertResultIterator(
                                        jobClient, Row.of(affectedRowCounts), userClassLoader))
                        .build();
            } catch (Exception e) {
                throw new TableException("Failed to execute sql", e);
            }
        }

    }

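In the code above, the first step translates the SQL operations into transformations. These are then combined with the other key elements into an implementation of the Pipeline interface:

    package org.apache.flink.api.dag;

    import org.apache.flink.annotation.Internal;

    @Internal
    public interface Pipeline {
    }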

The name Pipeline readily suggests a "stream", which here means real-time ETL. The Pipeline implementation in this case is in fact a StreamGraph:

Take a look at StreamExecutor:

    package org.apache.flink.table.planner.delegation;

    import org.apache.flink.annotation.Internal;
    import org.apache.flink.annotation.VisibleForTesting;
    import org.apache.flink.api.dag.Pipeline;
    import org.apache.flink.api.dag.Transformation;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.graph.StreamGraph;
    import org.apache.flink.table.api.TableConfig;
    import org.apache.flink.table.delegation.Executor;
    import org.apache.flink.table.planner.utils.ExecutorUtils;

    import java.util.List;

    @Internal
    public class StreamExecutor extends ExecutorBase {

        @VisibleForTesting
        public StreamExecutor(StreamExecutionEnvironment executionEnvironment) {
            super(executionEnvironment);
        }

        @Override
        public Pipeline createPipeline(
                List<Transformation<?>> transformations, TableConfig tableConfig, String jobName) {
            StreamGraph streamGraph =
                    ExecutorUtils.generateStreamGraph(getExecutionEnvironment(), transformations);
            streamGraph.setJobName(getNonEmptyJobName(jobName));
            return streamGraph;
        }

    }
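
Because Pipeline declares no methods, it acts as a marker interface: the producer returns a concrete graph typed as Pipeline, and any consumer has to check the concrete type before it can do something useful. The sketch below shows that pattern with simplified stand-ins. The StreamGraph here only records a job name and node labels, and the describe method is a hypothetical consumer, not part of Flink.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified stand-ins for illustration; not Flink's real classes.
class PipelineSketch {

    // Marker interface with no methods, like org.apache.flink.api.dag.Pipeline.
    interface Pipeline {}

    // Stand-in for StreamGraph: it only records a job name and node labels.
    static final class StreamGraph implements Pipeline {
        private final List<String> nodes = new ArrayList<>();
        private String jobName;

        void addNode(String label) {
            nodes.add(label);
        }

        void setJobName(String jobName) {
            this.jobName = jobName;
        }

        String getJobName() {
            return jobName;
        }

        int nodeCount() {
            return nodes.size();
        }
    }

    // Producer side: builds the concrete graph but exposes it only as a Pipeline.
    static Pipeline createPipeline(List<String> transformations, String jobName) {
        StreamGraph graph = new StreamGraph();
        for (String transformation : transformations) {
            graph.addNode(transformation);
        }
        graph.setJobName(jobName);
        return graph;
    }

    // Consumer side: must check the concrete type before using the pipeline.
    static String describe(Pipeline pipeline) {
        if (pipeline instanceof StreamGraph) {
            StreamGraph graph = (StreamGraph) pipeline;
            return graph.getJobName() + " (" + graph.nodeCount() + " nodes)";
        }
        throw new IllegalArgumentException("Unknown pipeline type");
    }
}
```

The marker interface keeps the Table API decoupled from the streaming graph classes, while the type check is where the two meet again at submission time.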

4.3 Summary of FlinkSQL Execution

Here is a sequence diagram: