ML刻意练习第六周之K-means

k-means算法是无监督学习方法的经典算法之一,也是最简单的一个。

其中我们需要选择一种距离度量来表示数据点之间的距离,本文中我们使用的是欧式距离。

一、k均值聚类算法

1.支持函数

import numpy as np

def loadDataSet(fileName):

"""

函数说明:加载数据

Parameters:

fileName - 文件名

Returns:

dataMat - 数据矩阵

"""

dataMat = []

fr = open(fileName)

for line in fr.readlines():

curLine = line.strip().split('\t')

fltLine = list(map(float, curLine)) # 转化为float类型

dataMat.append(fltLine)

return np.array(dataMat)

def distEclud(vecA, vecB):

"""

函数说明:欧拉距离

parameters:

vecA,vecB:两个数据点的特征向量

returns:

欧式距离

"""

return np.sqrt(np.sum(np.power(vecA - vecB, 2)))

def randCent(dataSet, k):

"""

函数说明:

:param dataSet: 数据矩阵

:param k: 最终分类的个数

:return: centroids:一个包含k个随机质心的集合

"""

# n为特征值个数

n = np.shape(dataSet)[1]

centroids = np.mat(np.zeros((k, n)))

for j in range(n):

# minJ为特征值最小值,rangeJ为特征值取值范围

minJ = min(dataSet[:, j])

rangeJ = float(max(dataSet[:, j]) - minJ)

centroids[:, j] = np.mat(minJ + rangeJ * np.random.rand(k, 1))

return centroids

为了测试一下randCent函数,输入以下命令:

arr = np.eye(5)

result = randCent(arr,6)

print(result)

得到以下结果:

[[ 0.05560545 0.81041864 0.79611652 0.9373905 0.08548578]

[ 0.42075168 0.86751914 0.66679966 0.57616285 0.13381111]

[ 0.46620813 0.2056531 0.35411902 0.10988056 0.51711511]

[ 0.48553254 0.38452667 0.3622934 0.85310448 0.4792246 ]

[ 0.81550174 0.75100062 0.99734453 0.22288111 0.30330208]

[ 0.61745704 0.13374273 0.91897972 0.13062757 0.79268968]]

可见randCent函数可以实现构建一个包含k个随机质心的集合的功能。

为了测试加载函数和distEclud函数,运行以下命令:

datamat = loadDataSet("E:\学习资料\机器学习算法刻意练习\机器学习实战书电子版\machinelearninginaction\Ch10\\testSet.txt")

dataMat = np.array(datamat)

result = distEclud(dataMat[0],dataMat[1])

print(result)

得到以下结果

5.18463281668

可见所有的支持函数均可正常运行。

2.KMeans聚类算法

def kMeans(dataSet, k, distMeas=distEclud, createCent=randCent):

"""

函数说明:kMeans算法的实现

:param dataSet:数据矩阵

:param k:待分类的类别数

:param distMeas:度量距离的公式

:param createCent:随机创建的初始点

:return:

centroids:聚类中心

clusterAssment:聚类结果

"""

m = np.shape(dataSet)[0]

# clusterAssment用来存储聚类中心

clusterAssment = np.mat(np.zeros((m,2)))

centroids = createCent(dataSet, k)

# clusterChanged用来判断算法是否收敛

clusterChanged = True

while clusterChanged:

clusterChanged = False

# 遍历所有数据,将其分入最近的聚类中心中

for i in range(m):

# minIndex记录该点属于的类别

minDist = float(math.inf)

minIndex = -1

for j in range(k):

distJI = distMeas(centroids[j,:],dataSet[i,:])

if distJI < minDist:

minDist = distJI

minIndex = j

# # 判断是否收敛

if clusterAssment[i, 0] != minIndex: clusterChanged = True

clusterAssment[i, :] = minIndex, minDist**2

# 重新计算聚类中心

for cent in range(k):

ptsInClust = dataSet[np.nonzero(clusterAssment[:,0].A == cent)[0]]

centroids[cent, :] = np.mean(ptsInClust, axis=0)

return centroids, clusterAssment

运行结果为:

[[-3.53973889 -2.89384326]

[ 2.6265299 3.10868015]

[ 2.65077367 -2.79019029]

[-2.46154315 2.78737555]]

[[ 1. 2.3201915 ]

[ 3. 1.39004893]

[ 2. 7.46974076]

[ 0. 3.60477283]

[ 1. 2.7696782 ]

[ 3. 2.80101213]

[ 2. 5.10287596]

[ 0. 1.37029303]

[ 1. 2.29348924]

[ 3. 0.64596748]

[ 2. 1.72819697]

[ 0. 0.60909593]

[ 1. 2.51695402]

[ 3. 0.13871642]

...

[ 0. 1.11099937]

[ 1. 0.07060147]

[ 3. 0.2599013 ]

[ 2. 4.39510824]

[ 0. 1.86578044]]

可见以上算法成功将数据集testSet分为了四类。

二、二分 K-均值算法

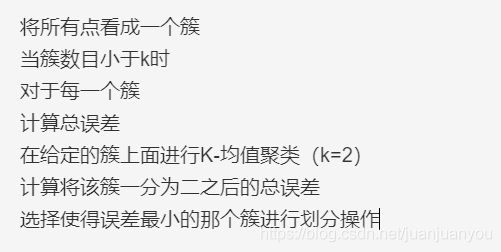

为克服K-均值算法收敛于局部最小值的问题,我们介绍另一个称为二分K-均值(bisecting

K-means)的算法。

其伪代码形式如下:

二分算法biKmeans的具体实现如下:

def biKmeans(dataSet, k, distMeas=distEclud):

"""

函数说明:二分KMeans算法

:param dataSet:数据矩阵

:param k: 分类个数

:param distMeas: 距离度量方式

:return:

np.mat(centList):聚类中心

clusterAssment:数据的分类结果

"""

m = np.shape(dataSet)[0]

clusterAssment = np.mat(np.zeros((m, 2)))

centroid0 = np.mean(dataSet, axis=0).tolist()[0]

# 将均值centroid0存入列表centList中(聚类中心)

centList = [centroid0]

# 计算初始的平方误差

for j in range(m):

clusterAssment[j, 1] = distMeas(np.mat(centroid0), dataSet[j, :])**2

while (len(centList) < k):

lowestSSE = float(math.inf)

# 找出最优切分的类

for i in range(len(centList)):

# ptsInCurrCluster表示划分前的所有数据

ptsInCurrCluster = dataSet[np.nonzero(clusterAssment[:, 0].A == i)[0], :]

centroidMat, splitClustAss = kMeans(ptsInCurrCluster, 2, distMeas)

# 计算划分与没划分的数据的平方误差和

sseSplit = np.sum(splitClustAss[:, 1])

sseNotSplit = np.sum(clusterAssment[np.nonzero(clusterAssment[:, 0].A != i)[0], 1])

print("sseSplit:{} and notSplit:{} ".format(sseSplit, sseNotSplit))

# 找出使平方误差和最小的划分方式

if (sseSplit + sseNotSplit) < lowestSSE:

bestCentToSplit = i

bestNewCents = centroidMat

bestClustAss = splitClustAss.copy()

lowestSSE = sseSplit + sseNotSplit

# 完成一次切分,并输出最好的切分结果

bestClustAss[np.nonzero(bestClustAss[:, 0].A == 1)[0], 0] = len(centList)

bestClustAss[np.nonzero(bestClustAss[:, 0].A == 0)[0], 0] = bestCentToSplit

print('the bestCentToSplit is: ', bestCentToSplit)

print('the len of bestClustAss is: ', len(bestClustAss))

# 更新聚类中心与划分结果

centList[bestCentToSplit] = bestNewCents[0, :].tolist()[0]

centList.append(bestNewCents[1,:].tolist()[0])

clusterAssment[np.nonzero(clusterAssment[:, 0].A == bestCentToSplit)[0], :] = bestClustAss

return np.mat(centList), clusterAssment

输入以下命令:

dataMat = loadDataSet("E:\学习资料\机器学习算法刻意练习\机器学习实战书电子版\machinelearninginaction\Ch10\\testSet.txt")

centList, clusterAssment = biKmeans(dataMat, 4)

print("centList is:")

print(centList)

print("clusterAssment is :")

print(clusterAssment)

运行结果为:

sseSplit:792.9168565373268 and notSplit:0.0

the bestCentToSplit is: 0

the len of bestClustAss is: 80

sseSplit:66.36683512000786 and notSplit:466.63278133614426

sseSplit:83.5874695564185 and notSplit:326.2840752011824

the bestCentToSplit is: 1

the len of bestClustAss is: 40

sseSplit:66.36683512000786 and notSplit:83.5874695564185

sseSplit:18.398749985455712 and notSplit:377.2703018926498

sseSplit:32.678827295007544 and notSplit:358.8853180661336

the bestCentToSplit is: 0

the len of bestClustAss is: 40

centList is:

[[ 2.6265299 3.10868015]

[-3.38237045 -2.9473363 ]

[ 2.80293085 -2.7315146 ]

[-2.46154315 2.78737555]]

clusterAssment is :

[[ 0.00000000e+00 2.32019150e+00]

[ 3.00000000e+00 1.39004893e+00]

[ 2.00000000e+00 6.63839104e+00]

[ 1.00000000e+00 4.16140951e+00]

[ 0.00000000e+00 2.76967820e+00]

[ 3.00000000e+00 2.80101213e+00]

...

[ 0.00000000e+00 7.06014703e-02]

[ 3.00000000e+00 2.59901305e-01]

[ 2.00000000e+00 3.74491207e+00]

[ 1.00000000e+00 2.32143993e+00]]

由以上结果易知,经过三次划分,biKmeans算法成功将数据集分为四类,并给出了相应的聚类中心和分类结果。