Docker (14) -- Docker's Three Musketeers -- Docker Swarm

Docker Swarm

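- 1. Introduction to Docker Swarm

- 2. Docker Swarm in practice

- 2.1 Creating a Swarm cluster

- 2.2 Test application: myapp

- 2.2 Deploying the Swarm monitor (import the dockersamples/visualizer image on every node beforehand)

- 2.2.1 When the cluster is healthy

- 2.2.2 When one node fails, its tasks are reassigned to other hosts

- 3. Promoting and demoting nodes

- 3.1 Demoting a node

- 3.2 Adding a node

- 3.2.1 Configuring Docker

- 3.2.2 Configuring the node

- 4. Adding a local private registry

- 4.1 Starting the Harbor registry

- 4.2 Configuring local name resolution

- 4.3 Configuring a registry mirror so pulls go through the local registry

- 4.4 Configuring certificates

- 4.5 Pushing images

- 4.6 Testing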

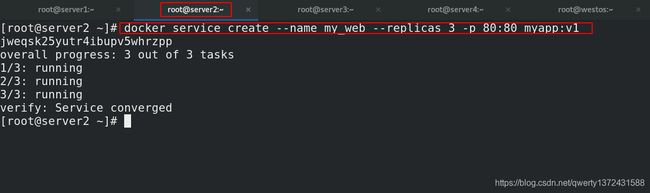

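- 4.7 Deploying to the cluster

- 5. Rolling updates

- 5.1 Pushing myapp:v2 to the local registry

- 5.2 Rolling update

- 5.3 Testing

- 6. Deploying to the cluster with Compose

- 6.1 Writing your own compose file and removing the existing services

- 6.1.1 The compose file, and removing the existing services

- 6.1.2 Deploying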

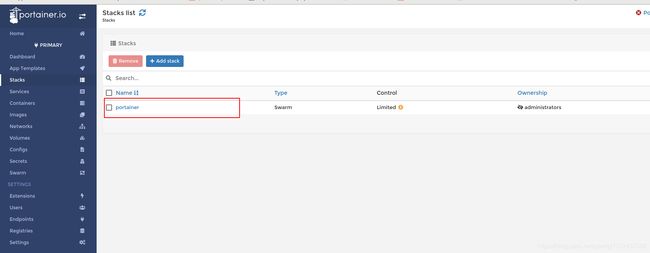

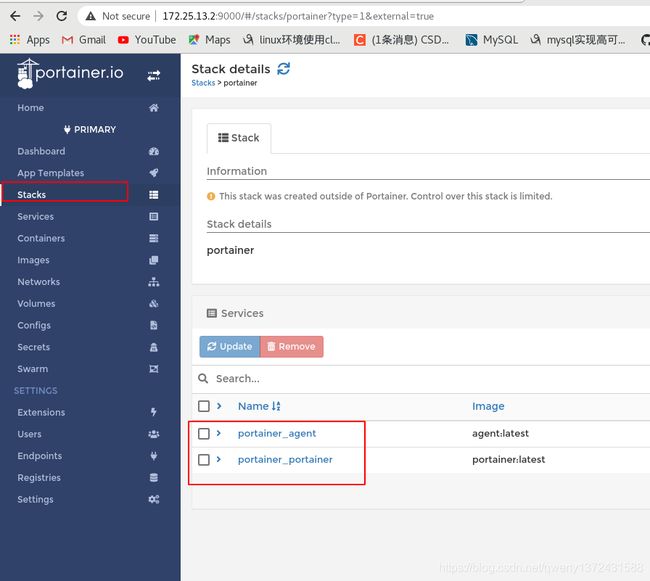

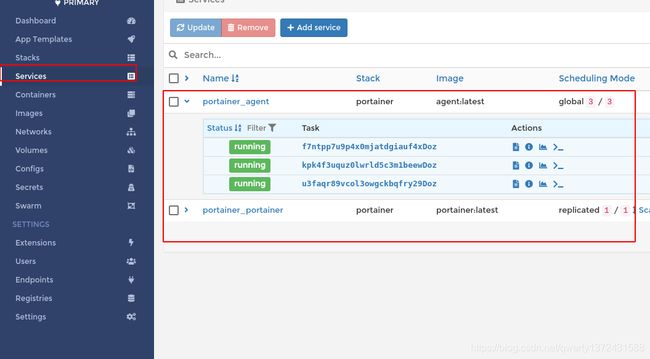

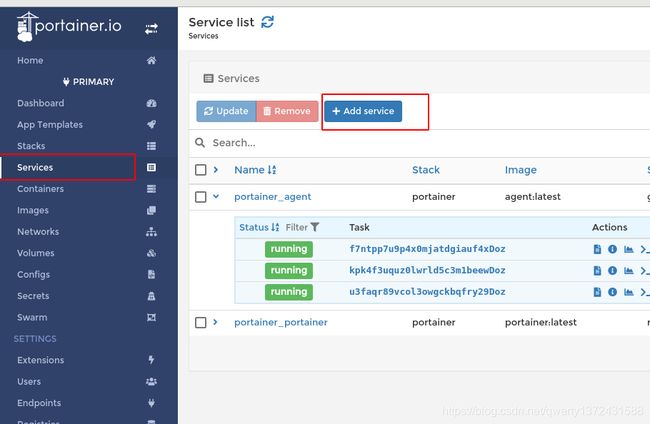

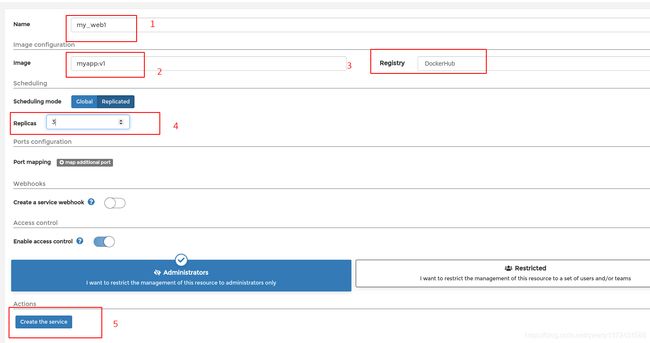

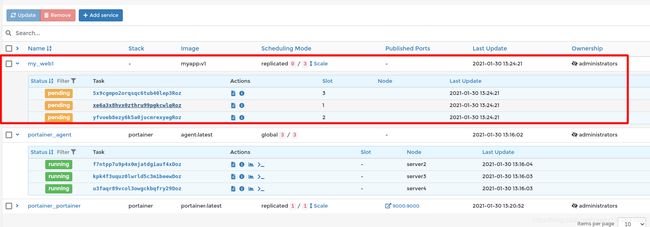

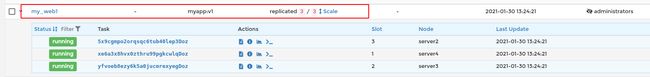

- 6.2 Graphical deployment (Portainer, a lightweight Docker management UI)

- 6.2.1 Pushing the Portainer images to the registry

- 6.2.2 Graphical deployment

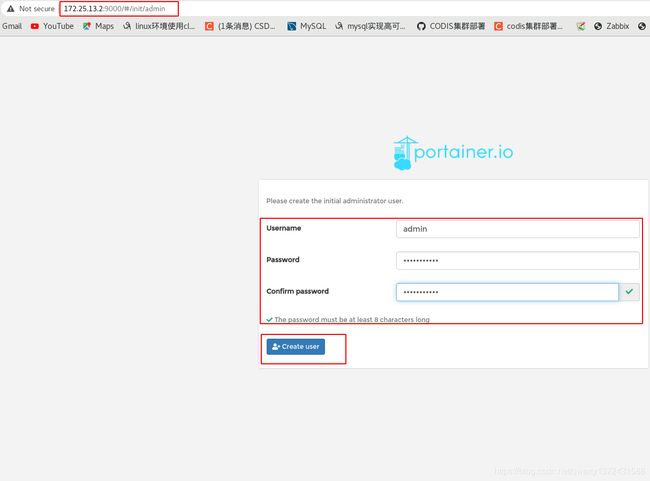

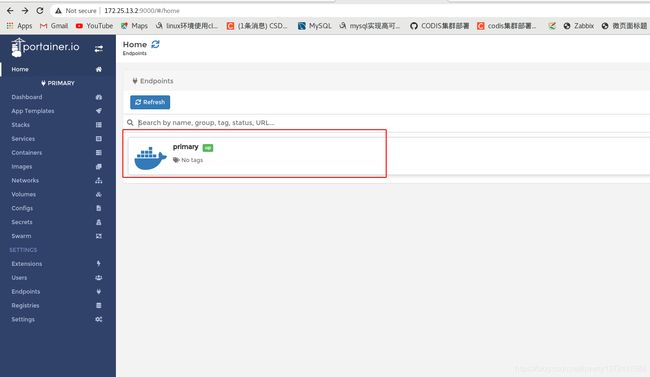

- 6.2.3 Working in the graphical interface

- 6.2.4 Creating services

1. Introduction to Docker Swarm

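- Before Docker 1.12, Swarm was a separate project; starting with Docker 1.12 it was merged into Docker itself and became the docker swarm subcommand.

- Swarm is the only cluster-management tool from the Docker community with native Docker support.

- Swarm turns a group of Docker hosts into a single virtual Docker host, so containers can join networks that span multiple hosts.

- Docker Swarm is an orchestration tool that gives IT operations teams clustering and scheduling capabilities.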

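Advantages of Docker Swarm

- High performance at any scale

- Flexible container scheduling

- Continuous service availability

- Compatibility with the Docker API and its integrations

Docker Swarm provides native support for the core features of Dockerized applications, such as multi-host networking and volume management.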

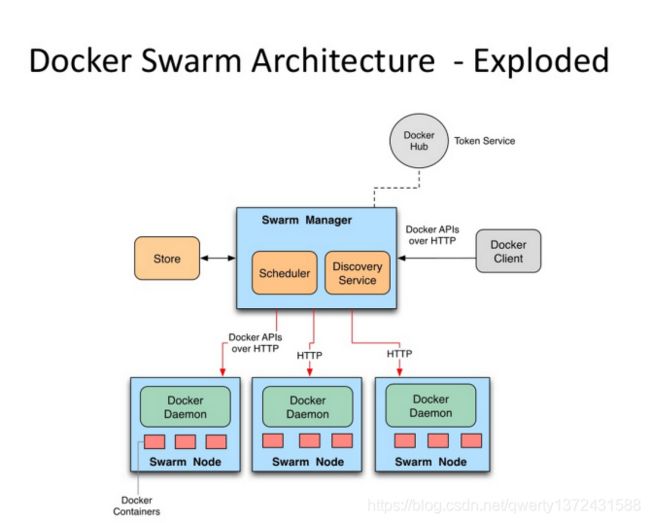

docker swarm 相关概念

节点分为管理 (manager) 节点和工作 (worker) 节点

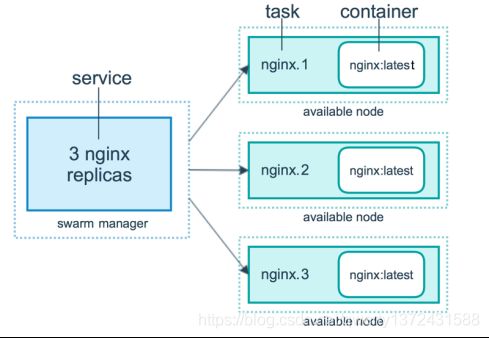

任务 (Task)是 Swarm 中的最小的调度单位,目前来说就是一个单一的容器。

服务 (Services) 是指一组任务的集合,服务定义了任务的属性。

2. Docker Swarm实践

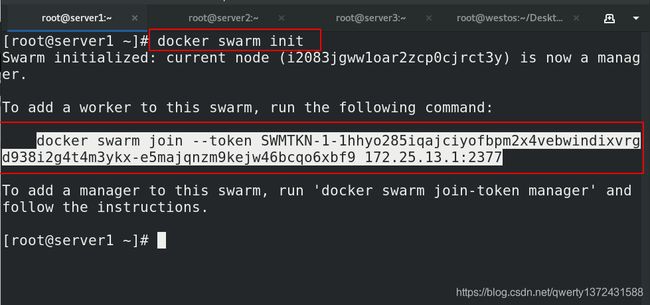

2.1 创建 Swarm 集群

[root@server1 ~]# docker swarm init ##集群初始化,按照初始化指令在server2和server3上部属集群

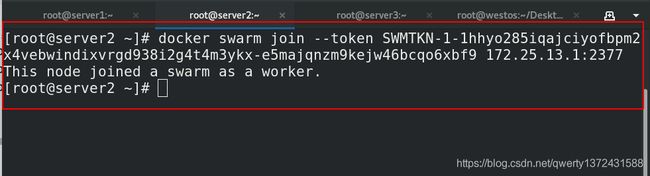

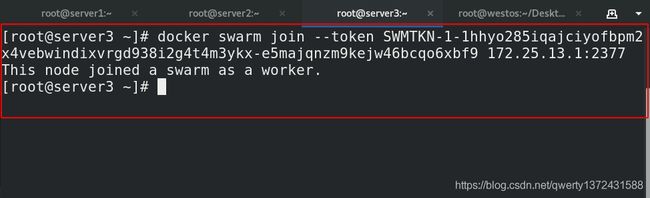

[root@server2 ~]# docker swarm join --token SWMTKN-1-1hhyo285iqajciyofbpm2x4vebwindixvrgd938i2g4t4m3ykx-e5majqnzm9kejw46bcqo6xbf9 172.25.13.1:2377

[root@server3 ~]# docker swarm join --token SWMTKN-1-1hhyo285iqajciyofbpm2x4vebwindixvrgd938i2g4t4m3ykx-e5majqnzm9kejw46bcqo6xbf9 172.25.13.1:2377
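Both workers join with the same command printed by docker swarm init. A minimal Python sketch that takes the token apart and rebuilds that command; it assumes the token layout SWMTKN-1-<CA digest>-<secret> and the default manager port 2377, and the helper names are hypothetical.

```python
def split_join_token(token: str) -> dict:
    """Split a swarm join token (assumed layout: SWMTKN-1-<CA digest>-<secret>)."""
    parts = token.split("-")
    if len(parts) != 4 or parts[0] != "SWMTKN":
        raise ValueError(f"not a swarm join token: {token!r}")
    _, version, ca_digest, secret = parts
    return {"version": version, "ca_digest": ca_digest, "secret": secret}


def join_command(token: str, manager_ip: str, port: int = 2377) -> list:
    """Rebuild the argv that server2/server3 run to join the swarm."""
    split_join_token(token)  # fail early on a malformed token
    return ["docker", "swarm", "join", "--token", token, f"{manager_ip}:{port}"]
```

The token belongs to the cluster rather than to one manager, which is why the same token still works in 3.2.2 when server4 joins through server2. On any manager, docker swarm join-token worker prints the current worker join command.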

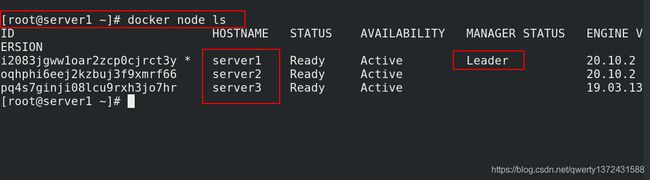

[root@server1 ~]# docker node ls ##list the nodes; server1 is currently the leader

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

i2083jgww1oar2zcp0cjrct3y * server1 Ready Active Leader 20.10.2

oqhphi6eej2kzbuj3f9xmrf66 server2 Ready Active 20.10.2

pq4s7ginji08lcu9rxh3jo7hr server3 Ready Active 19.03.13
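To find the leader from a script instead of reading the table, docker node ls accepts a Go template through --format (placeholders such as {{.Hostname}} and {{.ManagerStatus}}). A sketch, assuming it runs on a manager node; workers have an empty manager status, which the parser maps to "worker". The helper names are hypothetical.

```python
import subprocess

# A real tab character separates the two template fields.
NODE_FORMAT = "{{.Hostname}}\t{{.ManagerStatus}}"


def parse_node_roles(output: str) -> dict:
    """Map hostname -> manager status ("Leader", "Reachable", ...) or "worker"."""
    roles = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        hostname, _, status = line.partition("\t")
        roles[hostname.strip()] = status.strip() or "worker"
    return roles


def current_leader(roles: dict):
    """Return the hostname whose manager status is Leader, or None."""
    return next((host for host, status in roles.items() if status == "Leader"), None)


def read_node_roles() -> dict:
    """Run docker node ls on this host (it must be a swarm manager)."""
    out = subprocess.run(
        ["docker", "node", "ls", "--format", NODE_FORMAT],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_node_roles(out)
```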

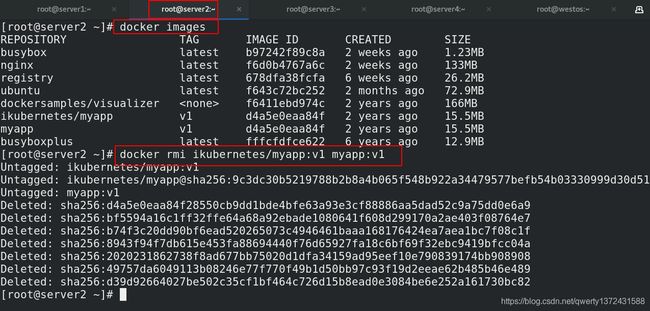

2.2 Test application: myapp

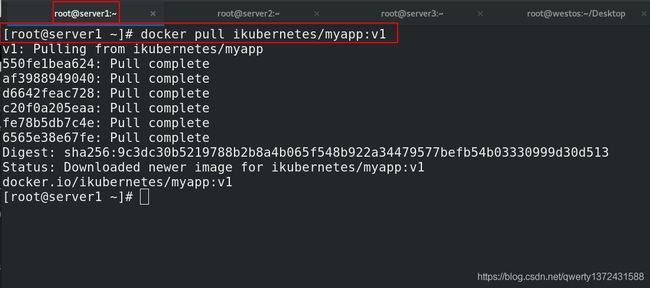

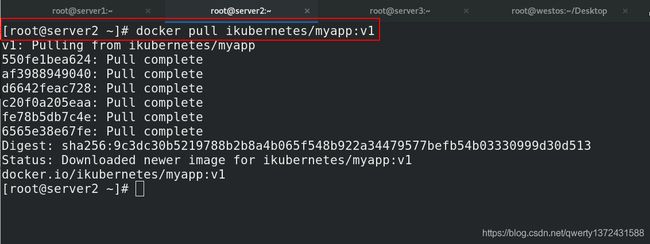

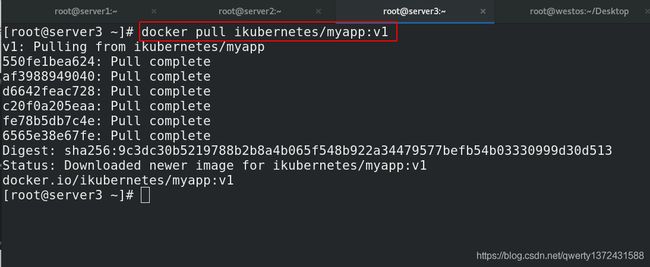

[root@server1 ~]# docker pull ikubernetes/myapp:v1

[root@server2 ~]# docker pull ikubernetes/myapp:v1

[root@server3 ~]# docker pull ikubernetes/myapp:v1

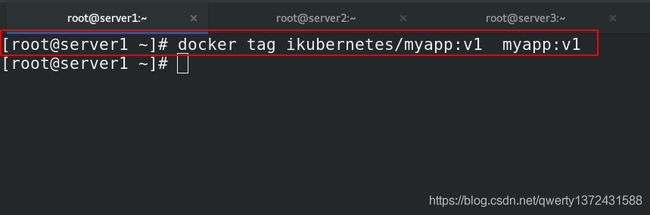

[root@server1 ~]# docker tag ikubernetes/myapp:v1 myapp:v1

[root@server2 ~]# docker tag ikubernetes/myapp:v1 myapp:v1

[root@server3 ~]# docker tag ikubernetes/myapp:v1 myapp:v1

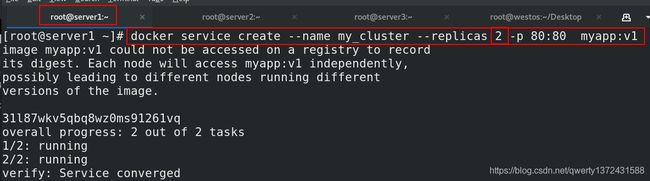

[root@server1 ~]# docker service create --name my_cluster --replicas 2 -p 80:80 myapp:v1 ##deploy the service

[root@server1 ~]# docker service rm my_cluster ##removes the service; do not run this now

[root@server1 ~]# docker service ps my_cluster ##list the service's tasks

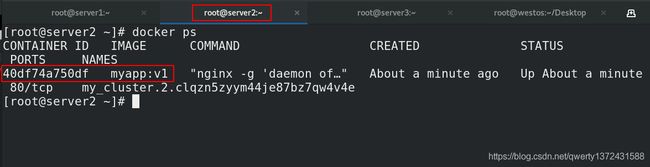

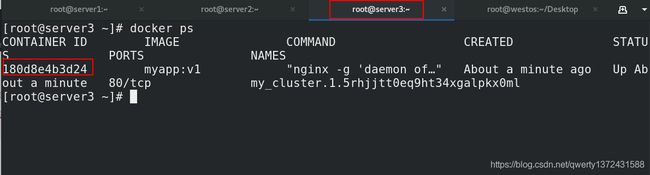

[root@server2 ~]# docker ps ##check the containers on server2 and server3

[root@server3 ~]# docker ps

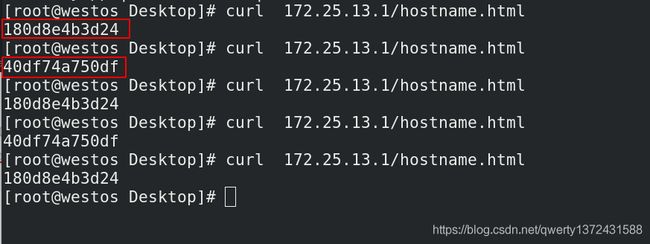

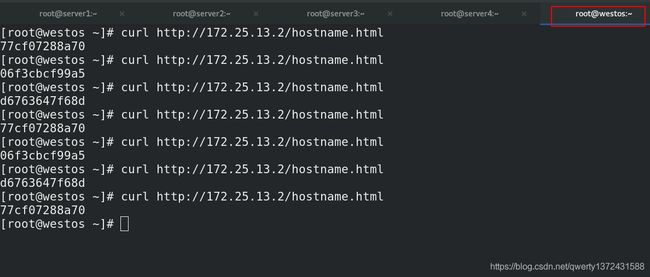

[root@westos Desktop]# curl 172.25.13.1/hostname.html ##test from the physical host

180d8e4b3d24

[root@westos Desktop]# curl 172.25.13.1/hostname.html

40df74a750df
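The two curl calls return different container hostnames because the published port goes through Swarm's ingress routing mesh, which balances requests across the service's tasks. A toy Python model, assuming strict round-robin (the real balancer is IPVS-based, so the exact order is not guaranteed):

```python
from itertools import cycle, islice


def simulate_requests(task_hostnames, n_requests):
    """Toy round-robin: each request goes to the next task in turn."""
    return list(islice(cycle(task_hostnames), n_requests))
```

With the two tasks above, simulate_requests(["180d8e4b3d24", "40df74a750df"], 4) alternates between the two hostnames.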

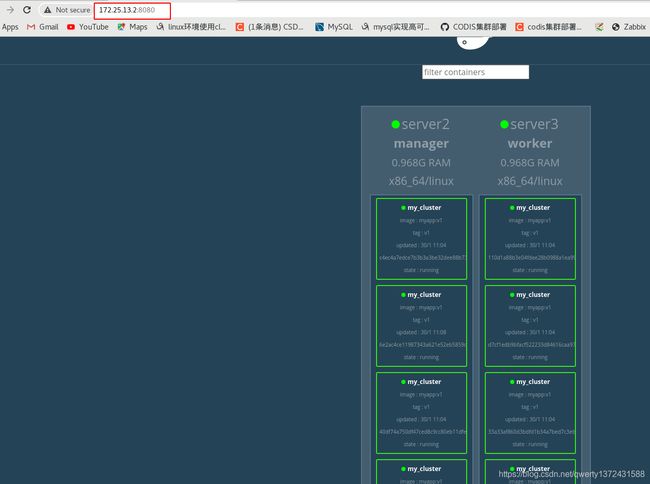

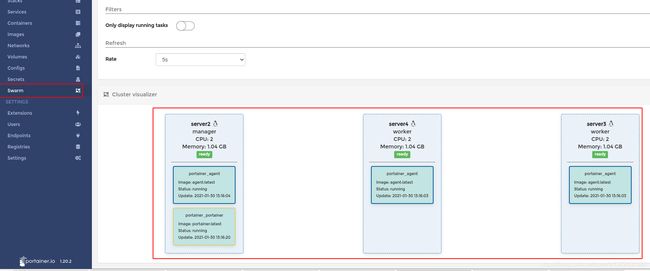

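2.2 Deploying the Swarm monitor (import the dockersamples/visualizer image on every node beforehand)

- Command explanation:

docker service create creates a service

--name sets the service name, here my_cluster

--network specifies the network the service attaches to

--replicas sets the number of instances (replicas) to start, e.g. 3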
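The flags above map directly onto an argument list. A small Python sketch that assembles a docker service create call; the function name and any network name you pass are hypothetical, and it only builds the argv (pass it to subprocess.run(argv, check=True) on a manager to execute it).

```python
def service_create_argv(name, image, replicas=1, network=None, publish=None, constraints=()):
    """Build a docker service create argv from the options explained above."""
    argv = ["docker", "service", "create", "--name", name, "--replicas", str(replicas)]
    if network:
        argv += ["--network", network]          # network the tasks attach to
    if publish:
        argv += ["--publish", publish]          # e.g. "80:80", same as -p 80:80
    for constraint in constraints:
        argv += ["--constraint", constraint]    # e.g. "node.role==manager"
    argv.append(image)
    return argv
```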

Download address for the visualizer

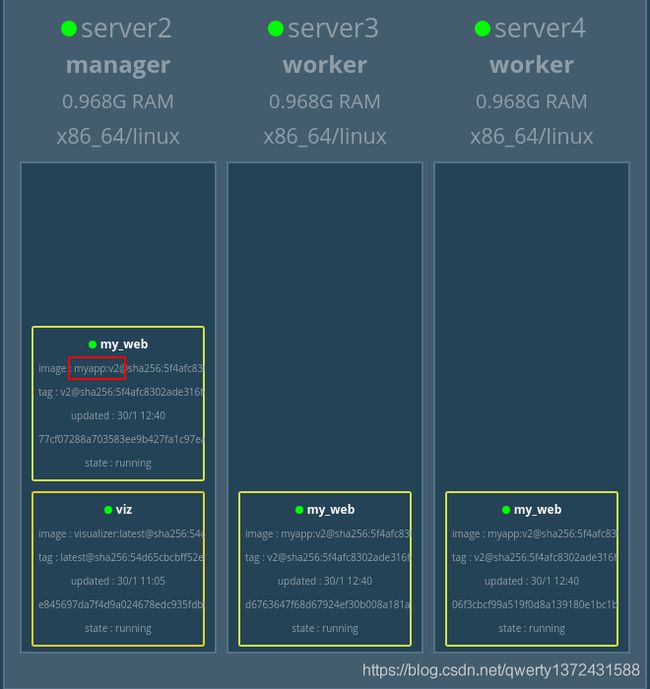

##When the cluster is healthy

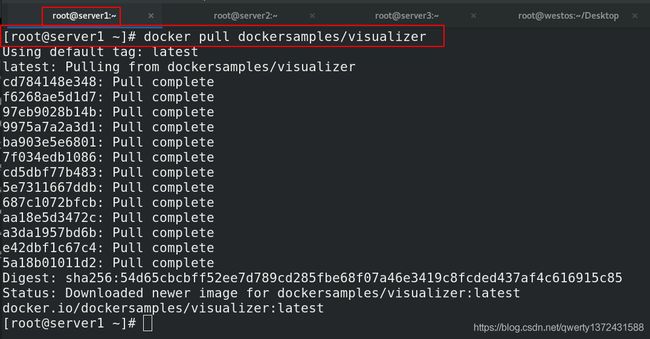

[root@server1 ~]# docker pull dockersamples/visualizer ##pull the visualizer image directly

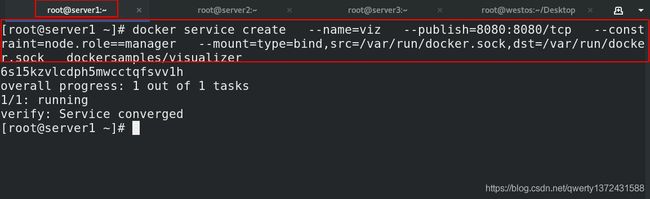

[root@server1 ~]# docker service create --name=viz --publish=8080:8080/tcp --constraint=node.role==manager --mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock dockersamples/visualizer ##deploy the visualizer

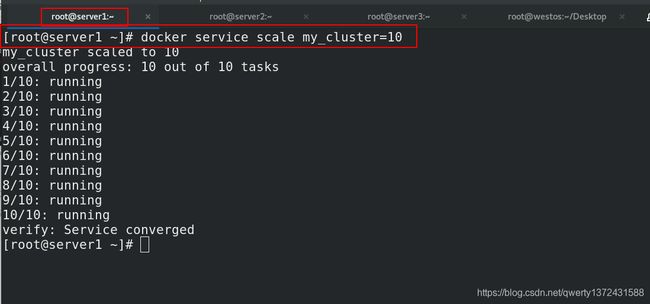

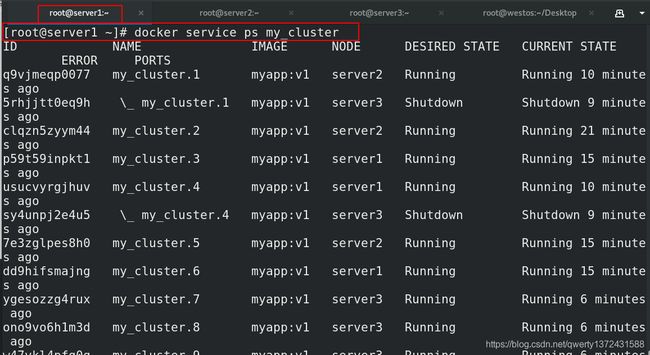

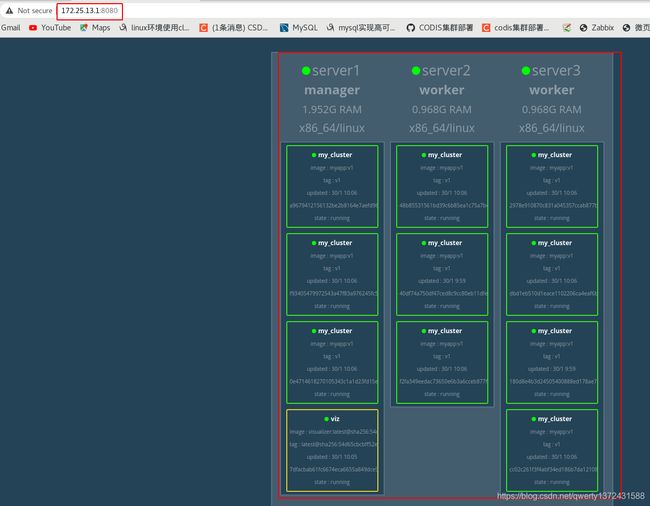
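The --constraint=node.role==manager option is why the visualizer only runs on a manager. A simplified Python sketch of how == and != placement constraints filter nodes; modeling node attributes as a flat dict is an assumption for illustration, not Swarm's internal representation.

```python
def matches(node: dict, constraint: str) -> bool:
    """Evaluate one constraint such as "node.role==manager" or "node.role != worker"."""
    op = "!=" if "!=" in constraint else "=="
    key, expected = (part.strip() for part in constraint.split(op, 1))
    actual = node.get(key)
    return actual == expected if op == "==" else actual != expected


def eligible_nodes(nodes, constraints):
    """Hostnames of nodes that satisfy every constraint."""
    return [n["node.hostname"] for n in nodes if all(matches(n, c) for c in constraints)]


NODES = [
    {"node.hostname": "server1", "node.role": "manager"},
    {"node.hostname": "server2", "node.role": "worker"},
    {"node.hostname": "server3", "node.role": "worker"},
]
```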

[root@server1 ~]# docker service scale my_cluster=10 ##scale the service up or down; Swarm spreads the tasks across the three hosts automatically

[root@server1 ~]# docker service ps my_cluster
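Scaling to 10 replicas spreads the tasks across all three hosts. A simplified Python model of that spreading: each new task goes to the node currently running the fewest tasks of the service, with ties going to the first node listed. The real scheduler also weighs resources, constraints and other factors, so treat this as an approximation.

```python
def spread(nodes, replicas):
    """Place `replicas` tasks one at a time on the least-loaded node."""
    load = {node: 0 for node in nodes}
    for _ in range(replicas):
        target = min(nodes, key=lambda node: load[node])
        load[target] += 1
    return load
```

For example, spread(["server1", "server2", "server3"], 10) yields 4, 3 and 3 tasks.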

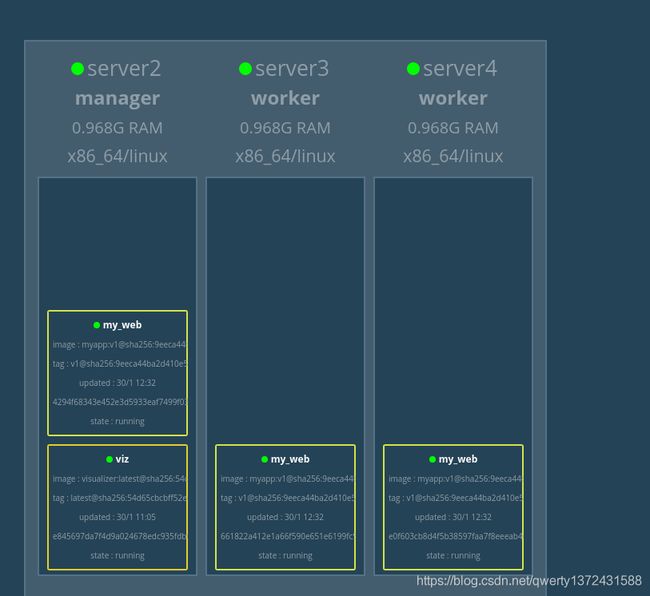

Open 172.25.13.1:8080 in a browser

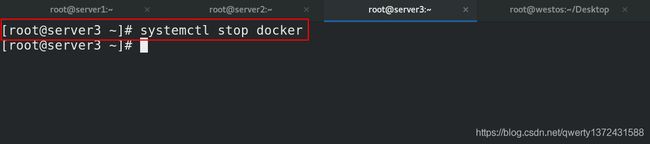

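##When one node fails, its tasks are reassigned to other hosts

[root@server1 ~]# docker service scale my_cluster=6 ##scale the service

[root@server3 ~]# systemctl stop docker ##simulate a failure on server3

[root@server3 ~]# systemctl start docker ##after the restart, existing tasks are not moved back to server3; tasks added later go to server3 first to rebalance the load

[root@server1 ~]# docker service scale my_cluster=10 ##scale up again; the new tasks are placed on server3 first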
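The failure test can be replayed with the same least-loaded rule. A sketch under the same simplifying assumptions: when server3 stops, its tasks are rescheduled onto the healthy nodes; when it comes back nothing moves, and only the tasks added by the next scale-up land on it first.

```python
def place(load, healthy, count):
    """Add `count` tasks, each on the healthy node with the fewest tasks."""
    placed = []
    for _ in range(count):
        target = min(healthy, key=lambda node: load[node])
        load[target] += 1
        placed.append(target)
    return placed


def fail(load, healthy, node):
    """Take `node` out of service and reschedule its tasks on the others."""
    orphaned = load[node]
    load[node] = 0
    healthy.remove(node)
    return place(load, healthy, orphaned)


nodes = ["server1", "server2", "server3"]
load = {node: 0 for node in nodes}
healthy = list(nodes)
place(load, healthy, 6)               # scale my_cluster=6 -> 2/2/2
fail(load, healthy, "server3")        # stop docker on server3 -> 3/3/0
healthy.append("server3")             # start docker again: nothing moves back
new_tasks = place(load, healthy, 4)   # scale my_cluster=10
```

In this model the first three new tasks go to server3, matching what the visualizer shows after the scale-up.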

2.2.1 When the cluster is healthy

2.2.2 When one node fails, its tasks are reassigned to other hosts


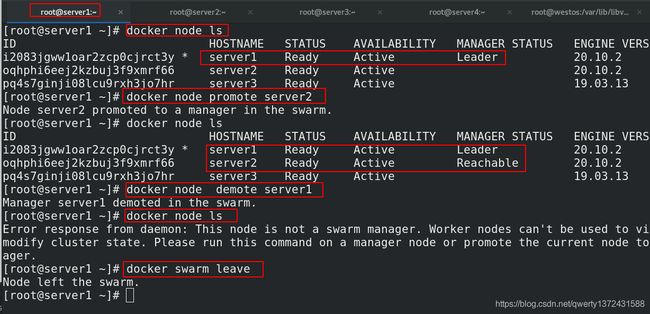

3. 节点升级降级

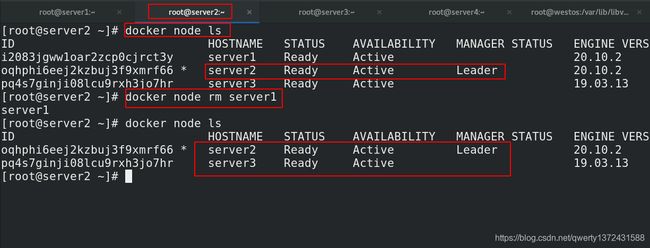

3.1 节点降级

##server1

[root@server1 ~]# docker node ls ##此时server1是leader

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

i2083jgww1oar2zcp0cjrct3y * server1 Ready Active Leader 20.10.2

oqhphi6eej2kzbuj3f9xmrf66 server2 Ready Active 20.10.2

pq4s7ginji08lcu9rxh3jo7hr server3 Ready Active 19.03.13

[root@server1 ~]# docker node promote server2 ##promote server2 to a manager (a standby for the leader)

Node server2 promoted to a manager in the swarm.

[root@server1 ~]# docker node ls ##shows the leader and the standby manager (Reachable)

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

i2083jgww1oar2zcp0cjrct3y * server1 Ready Active Leader 20.10.2

oqhphi6eej2kzbuj3f9xmrf66 server2 Ready Active Reachable 20.10.2

pq4s7ginji08lcu9rxh3jo7hr server3 Ready Active 19.03.13

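[root@server1 ~]# docker node demote server1 ##demote server1, the current leader, to a worker; leadership passes to server2

Manager server1 demoted in the swarm.

[root@server1 ~]# docker node ls ##server1 is now a worker and can no longer list nodes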

Error response from daemon: This node is not a swarm manager. Worker nodes can't be used to view or modify cluster state. Please run this command on a manager node or promote the current node to a manager.

[root@server1 ~]# docker swarm leave ##离开集群

Node left the swarm.

##server2

[root@server2 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

i2083jgww1oar2zcp0cjrct3y server1 Ready Active 20.10.2

oqhphi6eej2kzbuj3f9xmrf66 * server2 Ready Active Leader 20.10.2

pq4s7ginji08lcu9rxh3jo7hr server3 Ready Active 19.03.13

[root@server2 ~]# docker node rm server1
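Why promote server2 before demoting server1? Swarm managers keep the cluster state with the Raft consensus algorithm, which needs a majority (quorum) of managers to be reachable. A quick Python sketch of that arithmetic:

```python
def quorum(managers: int) -> int:
    """Managers that must be reachable for Raft to make progress."""
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """Manager failures the swarm can survive."""
    return (managers - 1) // 2
```

With two managers (server1 and server2 after the promote) the quorum is 2 and the tolerance is 0, no better than a single manager; this is why Docker's documentation recommends an odd number of managers such as 3 or 5.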

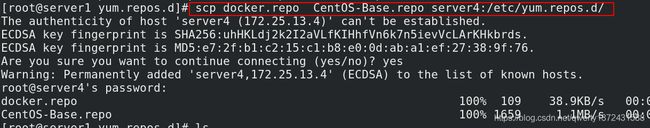

3.2 节点升级

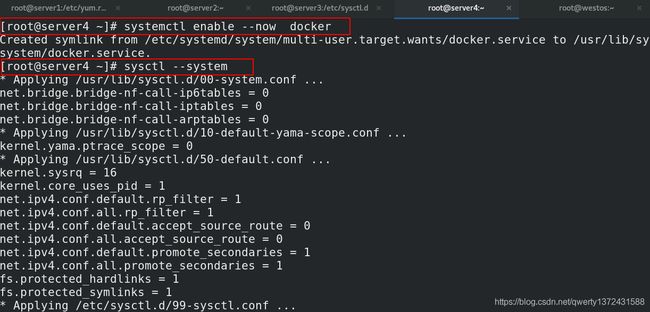

3.2.1 配置docker

[root@server1 yum.repos.d]# scp docker.repo CentOS-Base.repo server4:/etc/yum.repos.d/

[root@server4 ~]# yum install docker-ce -y

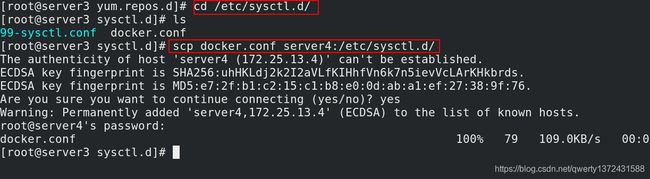

[root@server3 yum.repos.d]# cd /etc/sysctl.d/

[root@server3 sysctl.d]# ls

99-sysctl.conf docker.conf

[root@server3 sysctl.d]# scp docker.conf server4:/etc/sysctl.d/

[root@server4 ~]# systemctl enable --now docker ##enable and start the Docker service

[root@server4 ~]# sysctl --system

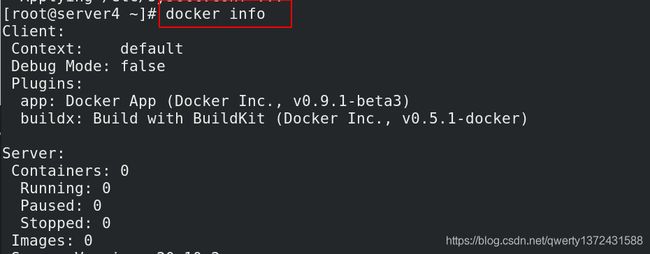

[root@server4 ~]# docker info

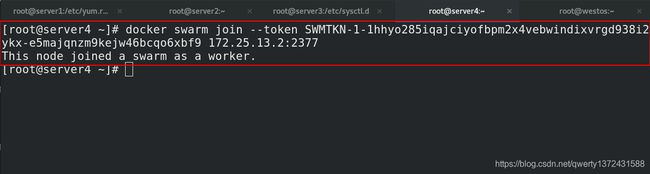

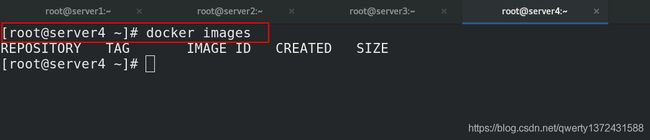

3.2.2 Configuring the node

[root@server4 ~]# docker swarm join --token SWMTKN-1-1hhyo285iqajciyofbpm2x4vebwindixvrgd938i2g4t4m3ykx-e5majqnzm9kejw46bcqo6xbf9 172.25.13.2:2377 ##server2 is now the leader

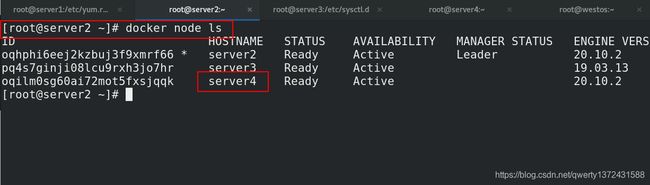

[root@server2 ~]# docker node ls ##list the nodes from the leader

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

oqhphi6eej2kzbuj3f9xmrf66 * server2 Ready Active Leader 20.10.2

pq4s7ginji08lcu9rxh3jo7hr server3 Ready Active 19.03.13

oqilm0sg60ai72mot5fxsjqqk server4 Ready Active 20.10.2

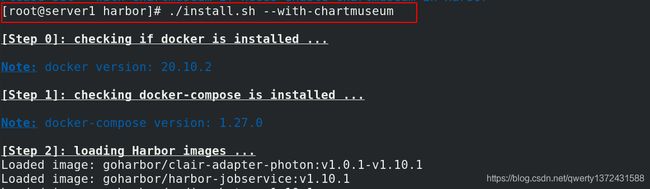

4. Adding a local private registry

[root@server1 harbor]# pwd

/root/harbor

[root@server1 harbor]# docker-compose down

[root@server1 harbor]# ./install.sh --with-chartmuseum

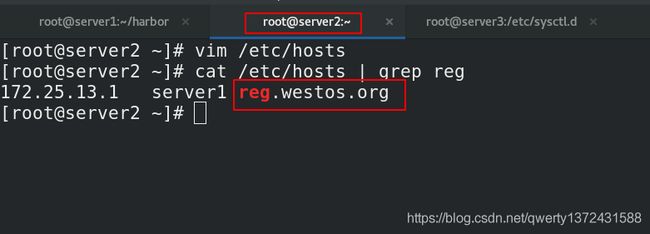

## Configure local name resolution

[root@server2 ~]# vim /etc/hosts

[root@server2 ~]# cat /etc/hosts | grep reg

172.25.13.1 server1 reg.westos.org

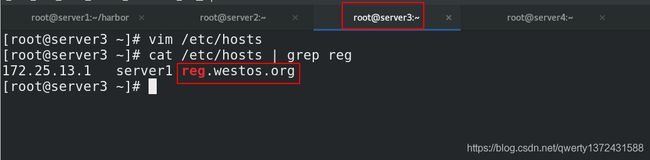

[root@server3 ~]# vim /etc/hosts

[root@server3 ~]# cat /etc/hosts | grep reg

172.25.13.1 server1 reg.westos.org

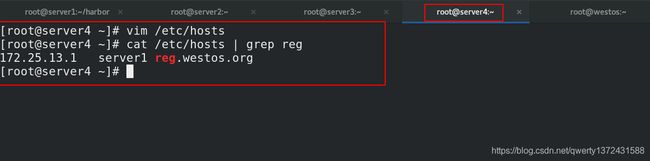

[root@server4 ~]# vim /etc/hosts

[root@server4 ~]# cat /etc/hosts | grep reg

172.25.13.1 server1 reg.westos.org
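Before pulling from Harbor, every node must resolve reg.westos.org to server1. A small Python check over /etc/hosts-style text; the function name is hypothetical, and on a node you would pass in open("/etc/hosts").read().

```python
def resolve_from_hosts(hosts_text: str, name: str):
    """Return the IP that an /etc/hosts-style file maps `name` to, or None."""
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        if name in names:
            return ip
    return None
```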

4.1 Starting the Harbor registry

4.2 Configuring local name resolution

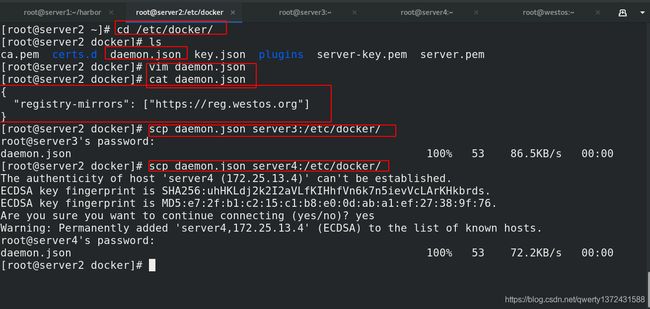

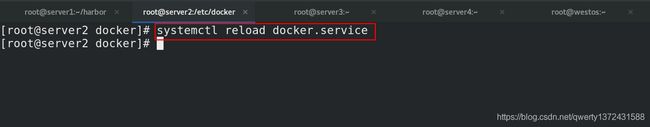

4.3 Configuring a registry mirror so pulls go through the local registry

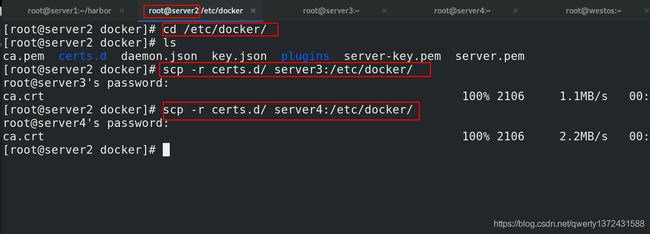
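The mirror itself is configured in the screenshot above. As a rough sketch, assuming the mirror goes in /etc/docker/daemon.json under the registry-mirrors key and points at https://reg.westos.org (the exact value from the screenshot is not reproduced here), a helper that adds the entry idempotently could look like this:

```python
import json


def add_registry_mirror(daemon_json: str, mirror: str) -> str:
    """Return daemon.json text with `mirror` listed once under "registry-mirrors"."""
    config = json.loads(daemon_json) if daemon_json.strip() else {}
    mirrors = config.setdefault("registry-mirrors", [])
    if mirror not in mirrors:
        mirrors.append(mirror)
    return json.dumps(config, indent=2)
```

After writing the file, restart Docker (systemctl restart docker) so the daemon picks up the change. Registry mirrors only apply to Docker Hub images, which is why the images are pushed to Harbor's library project.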

4.4 Configuring certificates

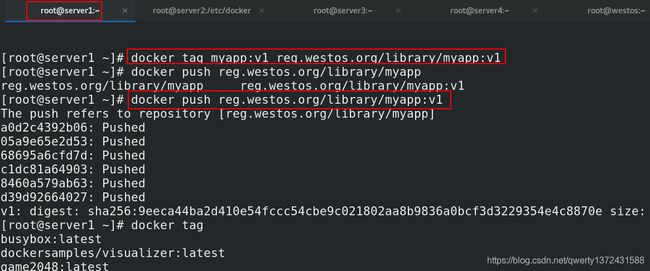

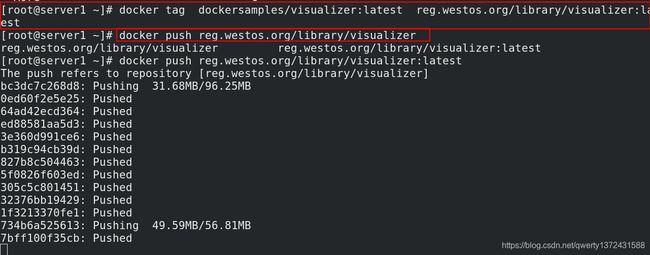

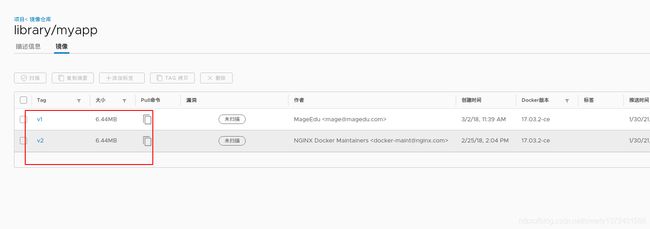
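Docker trusts a private registry's self-signed certificate when the CA file sits in a per-registry directory under /etc/docker/certs.d, named after the registry host (plus :port if the registry uses one). A sketch that computes that path and copies a CA file into it; the source path of the Harbor CA you pass to install_ca is a hypothetical placeholder.

```python
import shutil
from pathlib import Path


def registry_ca_path(registry: str, root: str = "/") -> Path:
    """Where Docker looks for the CA of `registry` (host or host:port)."""
    return Path(root) / "etc/docker/certs.d" / registry / "ca.crt"


def install_ca(src: str, registry: str, root: str = "/") -> Path:
    """Copy a CA certificate into place, creating the directory if needed."""
    dest = registry_ca_path(registry, root)
    dest.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(src, dest)
    return dest
```

The same file has to be present on every node that pulls from reg.westos.org (server2, server3 and server4 here).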

4.5 Pushing images

4.6 Testing

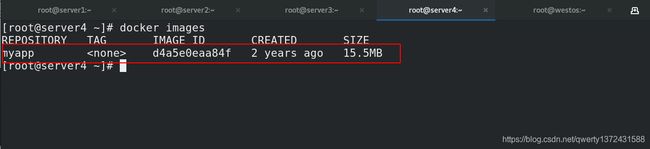

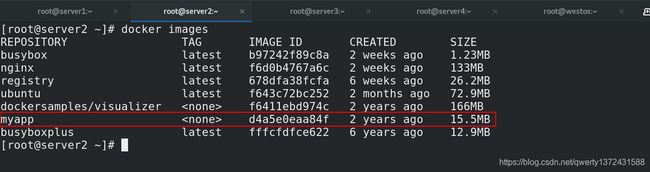

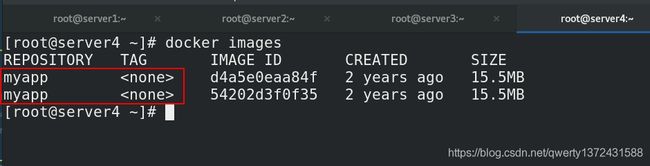

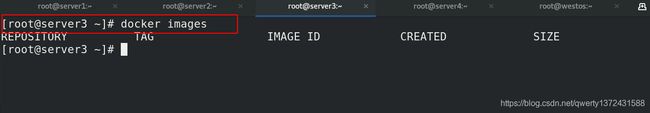

Remove the service on server2, and delete all images on server3 and server4.

4.7 Deploying to the cluster

5. Rolling updates

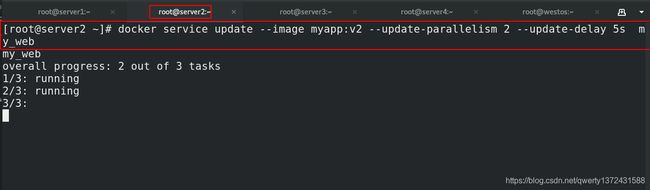

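- Rolling update

docker service update --image httpd --update-parallelism 2 --update-delay 5s my_cluster

--image specifies the image to update to

--update-parallelism specifies the maximum number of tasks updated at the same time

--update-delay specifies the delay between updates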
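With --update-parallelism 2 and --update-delay 5s, Swarm replaces tasks in batches of two and waits 5 seconds between batches (parallelism 0 means all tasks at once). A sketch of the batch schedule for six replicas; the task names are illustrative and the timing ignores how long each task takes to start.

```python
def rolling_batches(tasks, parallelism):
    """Split tasks into the groups that are updated together."""
    if parallelism <= 0:          # 0 = update every task simultaneously
        return [list(tasks)]
    return [list(tasks[i:i + parallelism]) for i in range(0, len(tasks), parallelism)]


def minimum_wait(n_batches, delay_seconds):
    """Total delay inserted between consecutive batches."""
    return max(n_batches - 1, 0) * delay_seconds


tasks = [f"my_web.{i}" for i in range(1, 7)]
batches = rolling_batches(tasks, parallelism=2)
```

Three batches with a 5 s delay add at least 10 s of waiting to the update.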

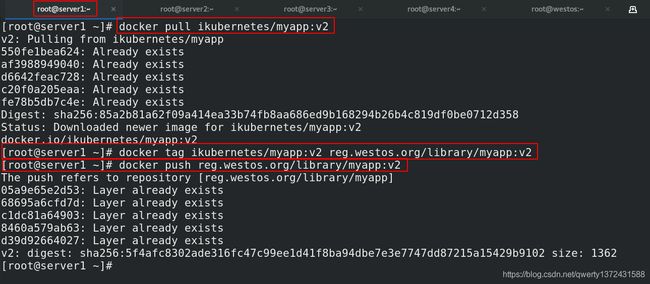

5.1 Pushing myapp:v2 to the local registry

[root@server1 ~]# docker pull ikubernetes/myapp:v2

[root@server1 ~]# docker tag ikubernetes/myapp:v2 reg.westos.org/library/myapp:v2

[root@server1 ~]# docker push reg.westos.org/library/myapp:v2
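In 5.2 the update uses the short name myapp:v2 even though the image was pushed as reg.westos.org/library/myapp:v2. A sketch of how Docker normalizes image names: a first path component containing a dot or a colon (or equal to localhost) is a registry host; otherwise the image is on Docker Hub, and single-name images live under library/. Assuming the mirror from 4.3 points at Harbor, docker.io/library/myapp is then served from Harbor's library project. Digests (@sha256:...) are deliberately not handled.

```python
def parse_image_ref(ref: str):
    """Split an image reference into (registry, repository, tag)."""
    name, tag = ref, "latest"
    if ":" in ref.rsplit("/", 1)[-1]:      # a tag colon comes after the last '/'
        name, tag = ref.rsplit(":", 1)
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", name
    if registry == "docker.io" and "/" not in repo:
        repo = "library/" + repo           # official-image namespace on Docker Hub
    return registry, repo, tag
```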

5.2 Rolling update

[root@server2 ~]# docker service update --image myapp:v2 --update-parallelism 2 --update-delay 5s my_web

5.3 Testing

(Nothing to see here; go straight to Part 15, Portainer visualization.)

6. Deploying to the cluster with Compose

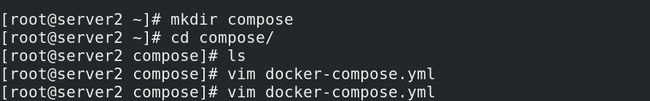

6.1 Writing your own compose file and removing the existing services

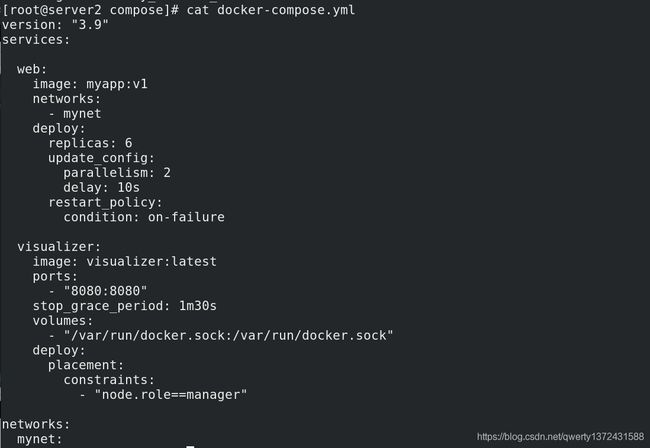

6.1.1 The compose file, and removing the existing services

Compose file reference

[root@server2 ~]# mkdir compose

[root@server2 ~]# cd compose/

[root@server2 compose]# ls

[root@server2 compose]# vim docker-compose.yml

[root@server2 compose]# cat docker-compose.yml

version: "3.9"

services:

web:

image: myapp:v1

networks:

- mynet

deploy:

replicas: 6

update_config:

parallelism: 2

delay: 10s

restart_policy:

condition: on-failure

visualizer:

image: visualizer:latest

ports:

- "8080:8080"

stop_grace_period: 1m30s

volumes:

- "/var/run/docker.sock:/var/run/docker.sock"

deploy:

placement:

constraints:

- "node.role==manager"

networks:

mynet:

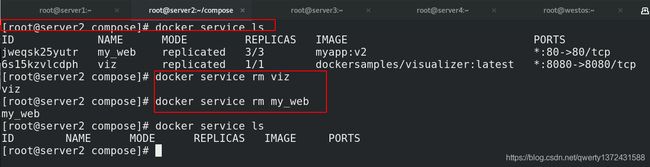

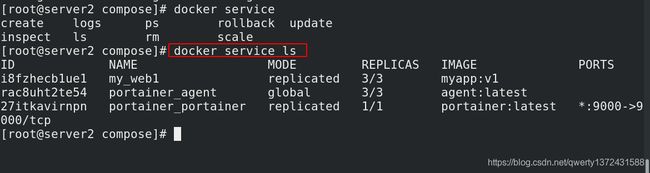

[root@server2 compose]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

jweqsk25yutr my_web replicated 3/3 myapp:v2 *:80->80/tcp

6s15kzvlcdph viz replicated 1/1 dockersamples/visualizer:latest *:8080->8080/tcp

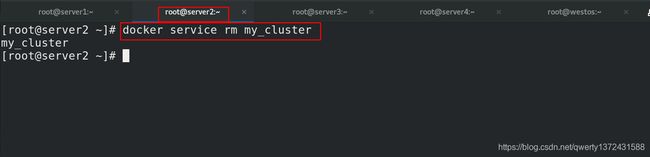

[root@server2 compose]# docker service rm viz

viz

[root@server2 compose]# docker service rm my_web

my_web

[root@server2 compose]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

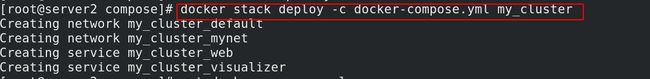

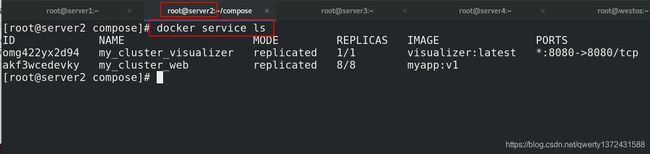

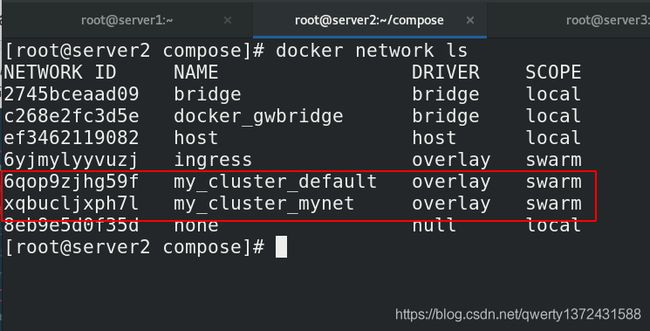

6.1.2 Deploying

[root@server2 compose]# docker stack deploy -c docker-compose.yml my_cluster
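docker stack deploy prefixes everything it creates with the stack name, so web and visualizer show up as my_cluster_web and my_cluster_visualizer, and mynet becomes my_cluster_mynet. A sketch that predicts those names from the compose structure written as a Python dict; it also assumes, as stack deploy does for services without a networks key, that a <stack>_default network is created for visualizer.

```python
def stack_resource_names(stack: str, compose: dict) -> dict:
    """Predict service and network names created by docker stack deploy."""
    services = compose.get("services", {})
    networks = [f"{stack}_{net}" for net in compose.get("networks", {})]
    if any("networks" not in svc for svc in services.values()):
        networks.append(f"{stack}_default")   # implicit network for services without one
    return {"services": [f"{stack}_{svc}" for svc in services], "networks": networks}


COMPOSE = {
    "services": {
        "web": {"image": "myapp:v1", "networks": ["mynet"]},
        "visualizer": {"image": "visualizer:latest"},
    },
    "networks": {"mynet": None},
}
```

These are the names to use with commands such as docker service ps my_cluster_web, and docker stack rm my_cluster removes all of them together.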

6.2 Graphical deployment (Portainer, a lightweight Docker management UI)

Portainer download address

##as a command

curl -L https://downloads.portainer.io/portainer-agent-stack.yml -o portainer-agent-stack.yml

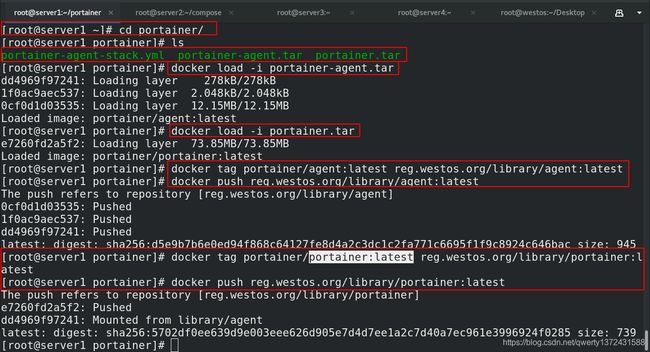

6.2.1 Pushing the Portainer images to the registry

[root@server1 ~]# cd portainer/

[root@server1 portainer]# ls

portainer-agent-stack.yml portainer-agent.tar portainer.tar

[root@server1 portainer]# docker load -i portainer-agent.tar

[root@server1 portainer]# docker load -i portainer.tar

[root@server1 portainer]# docker tag portainer/agent:latest reg.westos.org/library/agent:latest

[root@server1 portainer]# docker push reg.westos.org/library/agent:latest

[root@server1 portainer]# docker tag portainer/portainer:latest reg.westos.org/library/portainer:latest

[root@server1 portainer]# docker push reg.westos.org/library/portainer:latest
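The four tag/push commands follow one pattern: keep the last path component and its tag, and put it under the registry's library project. A sketch that generates them; the function name is hypothetical and it only returns the argv lists (run each with subprocess.run(cmd, check=True) after docker login reg.westos.org).

```python
def retag_for_registry(images, registry, project="library"):
    """Return docker tag / docker push argv pairs for a private registry."""
    commands = []
    for image in images:
        repo_tag = image.rsplit("/", 1)[-1]
        if ":" not in repo_tag:
            repo_tag += ":latest"
        target = f"{registry}/{project}/{repo_tag}"
        commands.append(["docker", "tag", image, target])
        commands.append(["docker", "push", target])
    return commands
```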

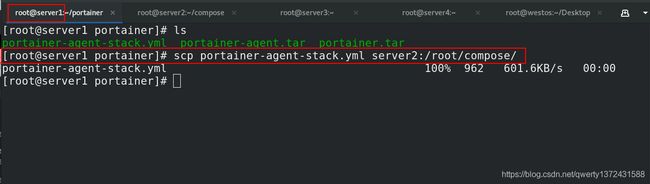

6.2.2 Graphical deployment

[root@server1 portainer]# scp portainer-agent-stack.yml server2:/root/compose/ ##send the yml file to server2

[root@server2 compose]# vim portainer-agent-stack.yml

[root@server2 compose]# cat portainer-agent-stack.yml

    version: '3.2'

    services:
      agent:
        image: agent
        environment:
          # REQUIRED: Should be equal to the service name prefixed by "tasks." when
          # deployed inside an overlay network
          AGENT_CLUSTER_ADDR: tasks.agent
          # AGENT_PORT: 9001
          # LOG_LEVEL: debug
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - /var/lib/docker/volumes:/var/lib/docker/volumes
        networks:
          - agent_network
        deploy:
          mode: global
          placement:
            constraints: [node.platform.os == linux]

      portainer:
        image: portainer
        command: -H tcp://tasks.agent:9001 --tlsskipverify
        ports:
          - "9000:9000"
        volumes:
          - portainer_data:/data
        networks:
          - agent_network
        deploy:
          mode: replicated
          replicas: 1
          placement:
            constraints: [node.role == manager]

    networks:
      agent_network:
        driver: overlay
        attachable: true

    volumes:
      portainer_data:

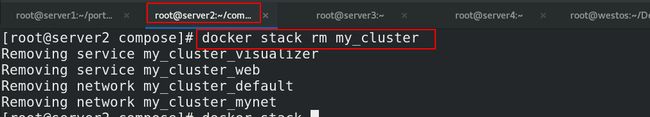
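The stack mixes the two deploy modes: agent is global (one task on every node matching node.platform.os == linux), while portainer is replicated with a single task pinned to a manager. A sketch that counts the tasks each service should get, using a hypothetical inventory of the three remaining swarm nodes (server2 as manager, server3 and server4 as workers); constraint parsing is simplified to == only.

```python
def satisfies(node: dict, constraint: str) -> bool:
    """Evaluate a simple "key == value" placement constraint."""
    key, expected = (part.strip() for part in constraint.split("==", 1))
    return node.get(key) == expected


def expected_tasks(service: dict, nodes: list) -> int:
    """Tasks that can be running for `service`, given its deploy section."""
    deploy = service.get("deploy", {})
    constraints = deploy.get("placement", {}).get("constraints", [])
    eligible = [n for n in nodes if all(satisfies(n, c) for c in constraints)]
    if deploy.get("mode", "replicated") == "global":
        return len(eligible)                  # one task per eligible node
    return deploy.get("replicas", 1) if eligible else 0   # otherwise tasks stay pending


NODES = [
    {"node.hostname": "server2", "node.role": "manager", "node.platform.os": "linux"},
    {"node.hostname": "server3", "node.role": "worker", "node.platform.os": "linux"},
    {"node.hostname": "server4", "node.role": "worker", "node.platform.os": "linux"},
]
AGENT = {"deploy": {"mode": "global", "placement": {"constraints": ["node.platform.os == linux"]}}}
PORTAINER = {"deploy": {"mode": "replicated", "replicas": 1,
                        "placement": {"constraints": ["node.role == manager"]}}}
```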

[root@server2 compose]# docker stack rm my_cluster ##remove the stack we wrote ourselves

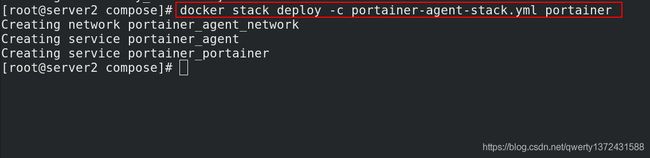

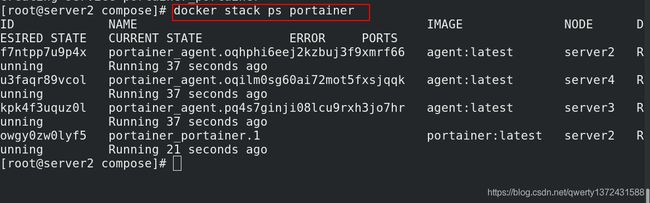

[root@server2 compose]# docker stack deploy -c portainer-agent-stack.yml portainer ##deploy the graphical UI (Portainer)