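Contents

- References
- Environment
- Prerequisites (not covered here)
- Deployment
- Exposing the service through Ingress
- Verification
- Check pod status
- Web login
- Docker login
- Pulling images with containerd
- Troubleshooting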

References

- https://github.com/bitnami/charts/tree/master/bitnami/harbor/#installing-the-chart

Environment

CentOS Stream release 8

helm version v3.7.0

harbor 2.4.0

harbor chart 11.1.0

Kubernetes v1.22.3

Prerequisites (not covered here)

- Kubernetes cluster (using containerd as the container runtime)

- Distributed storage: GlusterFS + Heketi

- nginx-ingress-controller

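Deployment

# Add the bitnami repository
helm repo add bitnami https://charts.bitnami.com/bitnami

# Download the chart at the version used in this article and unpack it
helm pull bitnami/harbor --version 11.1.0
tar -zxvf harbor-11.1.0.tgz

# Copy the TLS certificate
# The unpacked chart ships a self-signed certificate in harbor/cert for the placeholder domain core.harbor.domain
# Replace it with the certificate for your own domain, here k8s-harbor.xxx.com
cp k8s-harbor.xxx.com.pem harbor/cert/tls.crt
cp k8s-harbor.xxx.com.key harbor/cert/tls.key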
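
Before copying the files, it can help to confirm that the certificate and key belong together and that the certificate covers the host name. The sketch below assumes `openssl` is installed; the helper names `cert_matches_key` and `cert_covers_host` are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: sanity checks for a certificate/key pair (helper names are hypothetical).

# Succeeds only if the certificate and the private key carry the same public key.
cert_matches_key() {
  local cert="$1" key="$2" cert_pub key_pub
  cert_pub=$(openssl x509 -in "$cert" -noout -pubkey 2>/dev/null) || return 1
  key_pub=$(openssl pkey -in "$key" -pubout 2>/dev/null) || return 1
  [ -n "$cert_pub" ] && [ "$cert_pub" = "$key_pub" ]
}

# Succeeds only if openssl reports the certificate as valid for the host name.
cert_covers_host() {
  local cert="$1" host="$2"
  openssl x509 -in "$cert" -noout -checkhost "$host" 2>/dev/null |
    grep -q 'does match certificate'
}

# Usage with the file names from this article:
#   cert_matches_key k8s-harbor.xxx.com.pem k8s-harbor.xxx.com.key && echo "pair OK"
#   cert_covers_host k8s-harbor.xxx.com.pem k8s-harbor.xxx.com && echo "host OK"
```

A mismatched pair or an unparsable file makes `cert_matches_key` fail, which is cheaper to catch here than after the chart is installed.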

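# Render the chart with a dry run to see whether the defaults fit your environment
helm install harbor ./harbor --dry-run | more

# Settings that needed to change
1. The PVCs need a storageClassName that matches a StorageClass in your cluster; here that is the locally deployed GlusterFS cluster
2. The storage size requested by each PVC, set to whatever you need
3. The Service type, changed from LoadBalancer to ClusterIP

# Override them with --set. The notes sit above the command because text after a trailing backslash breaks the line continuation
#   global.storageClass       the local storageClassName, here glusterfs-heketi
#   externalURL               changed from https://core.harbor.domain to the domain actually used
#   service.type              changed from LoadBalancer to ClusterIP
#   service.tls.commonName    if unset, a self-signed certificate for core.harbor.domain is generated; set it to the domain actually used
#   persistence.persistentVolumeClaim.*.size    custom storage sizes
helm install harbor ./harbor --dry-run --set \
global.storageClass="glusterfs-heketi",\
externalURL="https://k8s-harbor.xxx.com",\
service.type="ClusterIP",\
service.tls.commonName="k8s-harbor.xxx.com",\
persistence.persistentVolumeClaim.registry.size="1Gi",\
persistence.persistentVolumeClaim.jobservice.size="200Mi",\
persistence.persistentVolumeClaim.chartmuseum.size="1Gi",\
persistence.persistentVolumeClaim.trivy.size="1Gi"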

# Once every setting checks out, run the actual install
helm install harbor ./harbor --set \
global.storageClass="glusterfs-heketi",\
externalURL="https://k8s-harbor.xxx.com",\
service.type="ClusterIP",\
service.tls.commonName="k8s-harbor.xxx.com",\
persistence.persistentVolumeClaim.registry.size="1Gi",\
persistence.persistentVolumeClaim.jobservice.size="200Mi",\
persistence.persistentVolumeClaim.chartmuseum.size="1Gi",\
persistence.persistentVolumeClaim.trivy.size="1Gi"
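
The same overrides can also live in a values file, which is easier to keep under version control than a long comma-separated `--set` string. A minimal sketch, assuming the file name `harbor-values.yaml` and the helper name `write_harbor_values` (both made up here); the keys are exactly the ones passed to `--set` above.

```shell
#!/usr/bin/env bash
# Sketch: write the --set overrides into a values file.
# The helper name and the default file name are assumptions for this example.
write_harbor_values() {
  local out="${1:-harbor-values.yaml}"
  cat > "$out" <<'EOF'
global:
  storageClass: glusterfs-heketi
externalURL: https://k8s-harbor.xxx.com
service:
  type: ClusterIP
  tls:
    commonName: k8s-harbor.xxx.com
persistence:
  persistentVolumeClaim:
    registry:
      size: 1Gi
    jobservice:
      size: 200Mi
    chartmuseum:
      size: 1Gi
    trivy:
      size: 1Gi
EOF
}

# Usage:
#   write_harbor_values harbor-values.yaml
#   helm install harbor ./harbor -f harbor-values.yaml --dry-run | more
#   helm install harbor ./harbor -f harbor-values.yaml
```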

Exposing the service through Ingress

- Create the secret that holds the k8s-harbor.xxx.com certificate

kubectl create secret tls xxx --key k8s-harbor.xxx.com.key --cert k8s-harbor.xxx.com.pem -n default # the secret name xxx is your own choice

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-harbor
  annotations:
    # Make nginx talk HTTPS to the backend service; the default is HTTP
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    # Raise nginx's request body limit; otherwise large images cannot be pushed to Harbor and fail with HTTP 413
    nginx.ingress.kubernetes.io/proxy-body-size: 900m
spec:
  ingressClassName: nginx
  rules:
  - host: k8s-harbor.xxx.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: harbor
            port:
              number: 443
  tls:
  - hosts:
    - k8s-harbor.xxx.com
    secretName: xxx

Verification

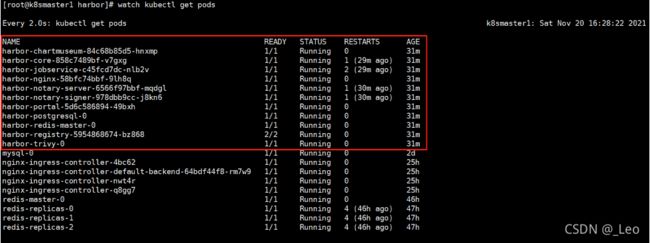

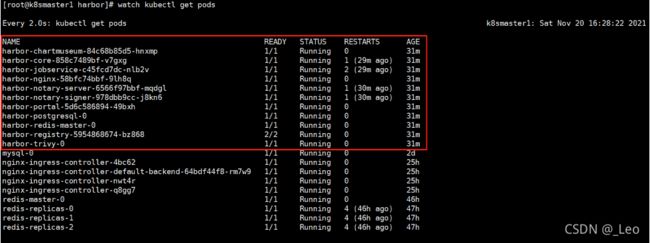

Check pod status
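
Rather than reading the pod list by eye, the output of `kubectl get pods --no-headers` can be filtered for pods that are not ready yet. A small sketch: the helper name `not_ready_pods` is made up, and it assumes the default column layout (NAME, READY, STATUS, ...).

```shell
#!/usr/bin/env bash
# Sketch: print the names of pods that are not fully ready.
# Reads `kubectl get pods --no-headers` output on stdin (NAME READY STATUS ...).
not_ready_pods() {
  awk '{
    split($2, ready, "/")                 # READY column looks like "1/1"
    if ($3 == "Completed") next           # finished job pods are fine
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage (assuming the release lives in the current namespace):
#   kubectl get pods --no-headers | not_ready_pods
```

An empty result means every pod is either running with all containers ready or has completed.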

# Look up the default admin password

kubectl get secret/harbor-core-envvars -o jsonpath='{.data.HARBOR_ADMIN_PASSWORD}'|base64 --decode

Web login

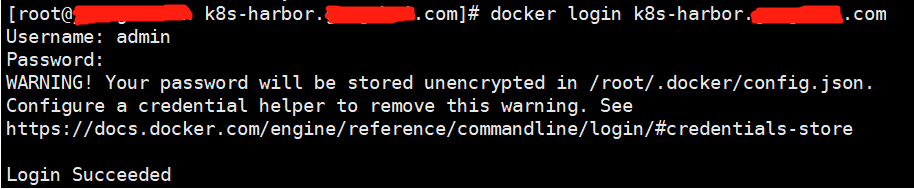

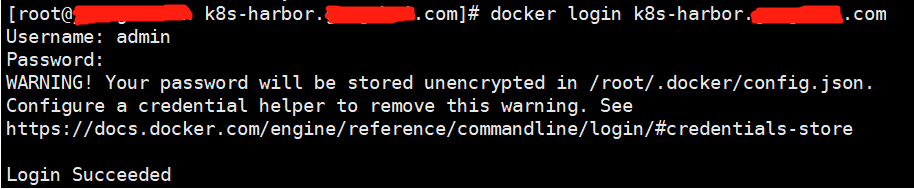

Docker login

# Copy the certificates; Docker treats *.crt files as CA certificates and a *.cert / *.key pair as a client certificate
mkdir -p /etc/docker/certs.d/k8s-harbor.xxx.com
cp k8s-harbor.xxx.com.pem /etc/docker/certs.d/k8s-harbor.xxx.com/tls.crt
cp k8s-harbor.xxx.com.pem /etc/docker/certs.d/k8s-harbor.xxx.com/tls.cert
cp k8s-harbor.xxx.com.key /etc/docker/certs.d/k8s-harbor.xxx.com/tls.key
systemctl restart docker
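
The copy steps above can be wrapped in a small helper so they are repeatable on every Docker host, followed by the actual login and a test push. A sketch under assumptions: the helper name `install_docker_certs` is made up, and the push example uses Harbor's default `library` project and an `nginx:latest` image purely as an illustration.

```shell
#!/usr/bin/env bash
# Sketch: install registry certificates for Docker (helper name is hypothetical).
# Docker reads <root>/<registry-host>/: *.crt as CA certificates,
# *.cert + *.key as a client certificate pair.
install_docker_certs() {
  local host="$1" cert="$2" key="$3" root="${4:-/etc/docker/certs.d}"
  local dir="$root/$host"
  mkdir -p "$dir" || return 1
  cp "$cert" "$dir/tls.crt" || return 1
  cp "$cert" "$dir/tls.cert" || return 1
  cp "$key" "$dir/tls.key"
}

# Usage on a Docker host (run as root):
#   install_docker_certs k8s-harbor.xxx.com k8s-harbor.xxx.com.pem k8s-harbor.xxx.com.key
#   systemctl restart docker
#   docker login k8s-harbor.xxx.com -u admin
#   docker tag nginx:latest k8s-harbor.xxx.com/library/nginx:latest
#   docker push k8s-harbor.xxx.com/library/nginx:latest
```

The optional fourth argument exists only so the helper can be tried against a scratch directory before touching `/etc/docker`.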

Pulling images with containerd

# Relevant part of the containerd configuration
[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://registry-1.docker.io"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s-harbor.xxx.com"]
      endpoint = ["https://k8s-harbor.xxx.com"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."k8s-harbor.xxx.com".auth]
      username = "admin"
      password = "xxx"
    # Add this section to skip TLS verification
    [plugins."io.containerd.grpc.v1.cri".registry.configs."k8s-harbor.xxx.com".tls]
      insecure_skip_verify = true
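
The registry host has to be spelled identically in every `mirrors` and `configs` section header, otherwise the auth or TLS settings end up attached to a different host name. The sketch below lists the hosts found in those headers so a mismatch stands out; the helper name `list_registry_hosts` is made up, and `/etc/containerd/config.toml` is assumed as the config location.

```shell
#!/usr/bin/env bash
# Sketch: list every registry host named in a mirrors/configs section header.
# Helper name is hypothetical; the default path is an assumption.
list_registry_hosts() {
  local config="${1:-/etc/containerd/config.toml}"
  grep -oE 'registry\.(mirrors|configs)\."[^"]+"' "$config" |
    sed -E 's/^.*"([^"]+)"$/\1/' |
    LC_ALL=C sort -u
}

# Usage on a node:
#   list_registry_hosts                  # every host should be spelled as intended
#   systemctl restart containerd         # reload the edited configuration
#   crictl pull k8s-harbor.xxx.com/library/nginx:latest   # example image path
```

The pull is shown with `crictl` because it goes through the CRI plugin and therefore uses these registry settings; the image path is only an example.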

Troubleshooting

Error on docker login:

The client tries to connect to core.harbor.domain

Fix:

Set externalURL="https://k8s-harbor.xxx.com" to the domain you actually use

Error on docker login:

Error response from daemon: Get https://k8s-harbor.xxx.com/v2/: received unexpected HTTP status: 500 Internal Server Error

The harbor-core log shows:

[DEBUG] [/server/middleware/log/log.go:30]: attach request id 5c203603a23d0759bce912920b074044 to the logger for the request GET /service/token

[DEBUG] [/server/middleware/artifactinfo/artifact_info.go:53]: In artifact info middleware, url: /service/token?account=admin&client_id=docker&offline_token=true&service=harbor-registry

[DEBUG] [/core/auth/authenticator.go:145]: Current AUTH_MODE is db_auth

[DEBUG] [/server/middleware/security/basic_auth.go:47][requestID="5c203603a23d0759bce912920b074044"]: a basic auth security context generated for request GET /service/token

[DEBUG] [/core/service/token/token.go:36]: URL for token request: /service/token?account=admin&client_id=docker&offline_token=true&service=harbor-registry

[DEBUG] [/core/service/token/creator.go:230]: scopes: []

[DEBUG] [/core/service/token/authutils.go:50]: scopes: []

[ERROR] [/core/service/token/token.go:49]: Unexpected error when creating the token, error: unable to find PEM encoded data

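Fix:

Token creation fails for this request because no PEM-encoded data could be found, which points to a certificate problem

Preferably use a certificate issued by a proper CA: place it in the chart's cert directory and configure the certificate on the Docker client as well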

Error on docker login:

Error response from daemon: Missing client certificate tls.cert for key tls.key

Fix:

tls.cert is missing from the certificate directory

cp k8s-harbor.xxx.com.pem /etc/docker/certs.d/k8s-harbor.xxx.com/tls.cert

Error on docker push:

error parsing HTTP 413 response body: invalid character '<' looking for beginning of value: "\r\n413 Request Entity Too Large\r\n\r\n413 Request Entity Too Large\r\nnginx\r\n\r\n\r\n"

Fix:

A 413 error usually means nginx is limiting the client request body size

Harbor's own nginx configuration, harbor/templates/nginx/configmap-https.yaml, sets no limit:

# disable any limits to avoid http 413 for large image uploads
client_max_body_size 0;

So the limit must be on the Ingress side

Add the following annotations to the Ingress, then recreate it

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-harbor
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    nginx.ingress.kubernetes.io/proxy-body-size: 900m
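
If recreating the Ingress is inconvenient, the annotation can also be set in place with `kubectl annotate`. A hedged sketch: the helper name `set_proxy_body_size` and the `DRY_RUN` switch are made up for this example, and the `default` namespace is an assumption based on where the TLS secret was created.

```shell
#!/usr/bin/env bash
# Sketch: set the nginx body-size annotation on an existing Ingress.
# Helper name and DRY_RUN switch are hypothetical conveniences.
set_proxy_body_size() {
  local ingress="$1" size="$2" namespace="${3:-default}"
  # Rebuild the positional parameters as the kubectl command line.
  set -- kubectl annotate ingress "$ingress" -n "$namespace" --overwrite \
    "nginx.ingress.kubernetes.io/proxy-body-size=$size"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"      # only print the command
  else
    "$@"           # run it against the cluster
  fi
}

# Usage:
#   DRY_RUN=1 set_proxy_body_size ingress-nginx-harbor 900m
#   set_proxy_body_size ingress-nginx-harbor 900m
```

Running it once with `DRY_RUN=1` shows the exact command before anything is changed in the cluster.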