Installing Celery and Basic Usage (Redis as the Message Broker)

References

[1] Celery documentation, Getting Started: http://docs.jinkan.org/docs/celery/getting-started/index.html

[2] Zhihu column, Using Celery: https://zhuanlan.zhihu.com/p/22304455

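Introduction to Celery

Celery is a simple, flexible, and reliable distributed system for processing large volumes of messages, and it provides the tools needed to maintain such a system. It is a task queue focused on real-time processing that also supports task scheduling.

Celery needs a message transport to send and receive messages. The RabbitMQ and Redis broker transports support all features, and Celery also supports many other experimental transports, including SQLite for local development.

A Celery system can consist of multiple workers and brokers, which gives it high availability and horizontal scalability.

Celery can run on a single machine, on multiple machines, or even across data centers.

Celery is written in Python, but the protocol can be implemented in any language. So far there are RCelery for Ruby, node-celery for Node.js, and a PHP client. Language interoperability can also be achieved with webhooks.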

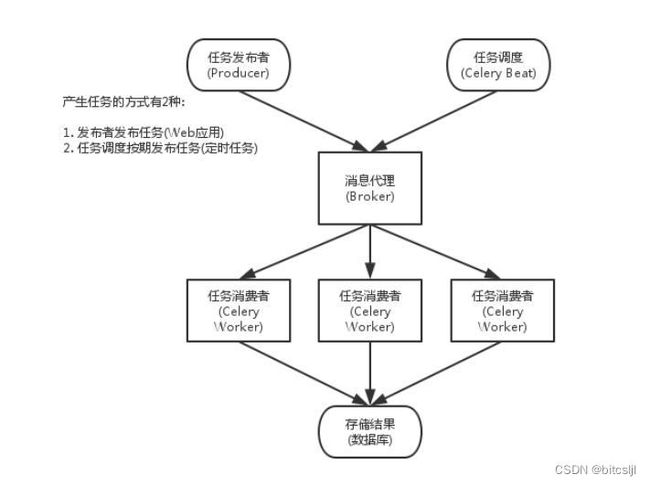

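Celery Architecture

- Celery Beat: the task scheduler. The Beat process reads the configuration and periodically sends the tasks that are due to the task queue.

- Celery Worker: the consumer that executes tasks. Multiple workers are usually run across several servers to increase throughput.

- Broker: the message broker, also called message middleware. It receives task messages from producers, stores them in a queue, and dispatches them in order to consumers. It is usually a message queue or a database.

- Producer: anything that calls Celery's API, functions, or decorators to create a task and hand it to the task queue is a task producer.

- Result Backend: stores the state and result of each finished task so they can be queried later. Celery supports Redis, RabbitMQ, MongoDB, Django ORM, SQLAlchemy, and others out of the box.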
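The roles above can be sketched with the standard library alone. This is a toy model, not how Celery is implemented: a `queue.Queue` stands in for the broker, a dict stands in for the result backend, and threads stand in for worker processes. The names `broker`, `result_backend`, `producer`, and `worker` are hypothetical and chosen only for this illustration.

```python
import queue
import threading

broker = queue.Queue()        # stands in for the broker's task queue
result_backend = {}           # stands in for the result backend
backend_lock = threading.Lock()


def add(x, y):
    return x + y


def producer(task_id, x, y):
    """Producer: put a task message on the broker queue."""
    broker.put((task_id, x, y))


def worker():
    """Worker: consume messages until a None sentinel arrives."""
    while True:
        message = broker.get()
        if message is None:
            break
        task_id, x, y = message
        with backend_lock:
            result_backend[task_id] = add(x, y)


workers = [threading.Thread(target=worker) for _ in range(2)]
for w in workers:
    w.start()

for i in range(4):
    producer(f"task-{i}", 1, i + 1)

for _ in workers:
    broker.put(None)          # one sentinel per worker, queued after the tasks
for w in workers:
    w.join()

print(sorted(result_backend.items()))
```

In a real deployment the queue lives in Redis or RabbitMQ, so producers and workers can run in separate processes or on separate machines.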

Installing Celery

Direct Installation

Celery can be installed from the Python Package Index (PyPI) or from source.

Install with pip:

pip install -U Celery

Install with easy_install (deprecated in recent setuptools releases; pip is the preferred method):

easy_install -U Celery

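Bundle Installation

Celery also defines a set of bundles that install Celery together with the dependencies for a given feature. You can specify them in requirements.txt or on the pip command line using square brackets. Separate multiple bundles with commas.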

pip install celery[librabbitmq]

pip install celery[librabbitmq,redis,auth,msgpack]

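The following bundles are available (the list follows the older documentation in [1]; newer Celery releases may offer a different set):

- Serializers

celery[auth]: for using the auth serializer.

celery[msgpack]: for using the msgpack serializer.

celery[yaml]: for using the yaml serializer.

- Concurrency

celery[eventlet]: for using the eventlet pool.

celery[gevent]: for using the gevent pool.

celery[threads]: for using a thread pool.

- Transports and Backends

celery[librabbitmq]: for using the librabbitmq C library.

celery[redis]: for using Redis as a message transport or as a result backend.

celery[mongodb]: for using MongoDB as a message transport (experimental) or as a result backend (supported).

celery[sqs]: for using Amazon SQS as a message transport (experimental).

celery[memcache]: for using memcache as a result backend.

celery[cassandra]: for using Apache Cassandra as a result backend.

celery[couchdb]: for using CouchDB as a message transport (experimental).

celery[couchbase]: for using CouchBase as a result backend.

celery[beanstalk]: for using Beanstalk as a message transport (experimental).

celery[zookeeper]: for using Zookeeper as a message transport.

celery[zeromq]: for using ZeroMQ as a message transport (experimental).

celery[sqlalchemy]: for using SQLAlchemy as a message transport (experimental) or as a result backend (supported).

celery[pyro]: for using the Pyro4 message transport (experimental).

celery[slmq]: for using the SoftLayer Message Queue transport (experimental).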

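Installation Used Here

To use Redis as the message broker, install Redis itself, then install Celery together with its Redis dependencies as a bundle:

# Install Celery and its Redis dependencies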

pip install celery[redis]
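A quick way to confirm that the bundle pulled in both packages is to check whether they are importable. The sketch below uses only the standard library; `is_installed` is a hypothetical helper name, and `celery` and `redis` are the import names of the two packages.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be found on the import path."""
    return importlib.util.find_spec(module_name) is not None


for name in ("celery", "redis"):
    print(name, "installed" if is_installed(name) else "missing")
```

This only checks the Python packages. The Redis server itself is installed and started separately.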

A Simple Test

- Create the folder celery_test

mkdir -p celery_test

- Create the task

cd celery_test/

vim tasks.py

tasks.py:

import time

from celery import Celery

app = Celery('tasks', broker='redis://localhost:6379/2')  # database 2 of the local Redis

@app.task
def add(x, y):
    time.sleep(1)  # simulate a slow job
    return x + y

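- The first argument to Celery() is the name of the current module. It is needed so that task names can be generated automatically. The second is the broker keyword argument, which specifies the URL of the message broker to use. Here the URL is redis://localhost:6379/2, meaning database 2 of the local Redis instance.

- add() defines the task: it sleeps for 1 second, then computes and returns the sum.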
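To make the parts of the broker URL explicit, it can be taken apart with the standard library. This is only an illustration of how the URL reads, not of how Celery parses it internally; the variable names are hypothetical.

```python
from urllib.parse import urlparse

broker_url = "redis://localhost:6379/2"
parts = urlparse(broker_url)

scheme = parts.scheme                    # transport type
host = parts.hostname                    # Redis host
port = parts.port                        # Redis port
db = int(parts.path.lstrip("/") or 0)    # Redis database number

print(scheme, host, port, db)  # redis localhost 6379 2
```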

- Start the worker

celery -A tasks worker --loglevel=info

Run celery worker --help to see more options for starting a worker:

# celery worker --help

Usage: celery worker [OPTIONS]

Start worker instance.

Examples -------- $ celery --app=proj worker -l INFO $ celery -A proj worker

-l INFO -Q hipri,lopri $ celery -A proj worker --concurrency=4 $ celery -A

proj worker --concurrency=1000 -P eventlet $ celery worker --autoscale=10,0

Worker Options:

-n, --hostname HOSTNAME Set custom hostname (e.g., 'w1@%%h').

Expands: %%h (hostname), %%n (name) and %%d,

(domain).

-D, --detach Start worker as a background process.

-S, --statedb PATH Path to the state database. The extension

'.db' may be appended to the filename.

-l, --loglevel [DEBUG|INFO|WARNING|ERROR|CRITICAL|FATAL]

Logging level.

-O [default|fair] Apply optimization profile.

--prefetch-multiplier <prefetch multiplier>

Set custom prefetch multiplier value for

this worker instance.

Pool Options:

-c, --concurrency <concurrency>

Number of child processes processing the

queue. The default is the number of CPUs

available on your system.

-P, --pool [prefork|eventlet|gevent|solo|processes|threads]

Pool implementation.

-E, --task-events, --events Send task-related events that can be

captured by monitors like celery events,

celerymon, and others.

--time-limit FLOAT Enables a hard time limit (in seconds

int/float) for tasks.

--soft-time-limit FLOAT Enables a soft time limit (in seconds

int/float) for tasks.

--max-tasks-per-child INTEGER Maximum number of tasks a pool worker can

execute before it's terminated and replaced

by a new worker.

--max-memory-per-child INTEGER Maximum amount of resident memory, in KiB,

that may be consumed by a child process

before it will be replaced by a new one. If

a single task causes a child process to

exceed this limit, the task will be

completed and the child process will be

replaced afterwards. Default: no limit.

Queue Options:

--purge, --discard

-Q, --queues COMMA SEPARATED LIST

-X, --exclude-queues COMMA SEPARATED LIST

-I, --include COMMA SEPARATED LIST

Features:

--without-gossip

--without-mingle

--without-heartbeat

--heartbeat-interval INTEGER

--autoscale <MIN WORKERS>, <MAX WORKERS>

Embedded Beat Options:

-B, --beat

-s, --schedule-filename, --schedule TEXT

--scheduler TEXT

Daemonization Options:

-f, --logfile TEXT

--pidfile TEXT

--uid TEXT

--uid TEXT

--gid TEXT

--umask TEXT

--executable TEXT

Options:

--help Show this message and exit.

Worker startup log:

/usr/local/lib/python3.8/dist-packages/celery/platforms.py:840: SecurityWarning: You're running the worker with superuser privileges: this is

absolutely not recommended!

Please specify a different user using the --uid option.

User information: uid=0 euid=0 gid=0 egid=0

warnings.warn(SecurityWarning(ROOT_DISCOURAGED.format(

-------------- celery@hecs-407155 v5.2.7 (dawn-chorus)

--- ***** -----

-- ******* ---- Linux-5.4.0-100-generic-x86_64-with-glibc2.29 2022-09-21 17:02:43

- *** --- * ---

- ** ---------- [config]

- ** ---------- .> app: tasks:0x7f9d5fe61490

- ** ---------- .> transport: redis://localhost:6379/2

- ** ---------- .> results: disabled://

- *** --- * --- .> concurrency: 2 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. tasks.add

[2022-09-21 17:02:43,196: INFO/MainProcess] Connected to redis://localhost:6379/2

[2022-09-21 17:02:43,198: INFO/MainProcess] mingle: searching for neighbors

[2022-09-21 17:02:44,204: INFO/MainProcess] mingle: all alone

[2022-09-21 17:02:44,213: INFO/MainProcess] celery@hecs-407155 ready.

The log shows that the worker process connected to redis://localhost:6379/2.

List the running worker processes:

# ps -ef | grep celery | grep -v grep

root 1114359 1114358 1 17:02 pts/0 00:00:00 /usr/bin/python3 /usr/local/bin/celery -A tasks worker --loglevel=info

root 1114361 1114359 0 17:02 pts/0 00:00:00 /usr/bin/python3 /usr/local/bin/celery -A tasks worker --loglevel=info

root 1114362 1114359 0 17:02 pts/0 00:00:00 /usr/bin/python3 /usr/local/bin/celery -A tasks worker --loglevel=info


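Three celery processes are running: one main process (PID 1114359) and two pool child processes that handle the queue, which matches the "concurrency: 2 (prefork)" line in the startup log. By default, the number of child processes equals the number of CPUs on the system.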
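The default pool size can be compared with the CPU count that Python reports on the same machine. The sketch below assumes Celery's default tracks that value; it also builds the worker command with the size made explicit through the --concurrency option listed in the help output above.

```python
import os

# Assumption: Celery's default concurrency tracks the CPU count the OS reports.
cpu_count = os.cpu_count() or 1

# Worker command from this article, with the pool size made explicit.
command = [
    "celery", "-A", "tasks", "worker",
    "--loglevel=info",
    f"--concurrency={cpu_count}",
]
print(" ".join(command))
```

On the two-CPU machine used here, this would print a command ending in --concurrency=2.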

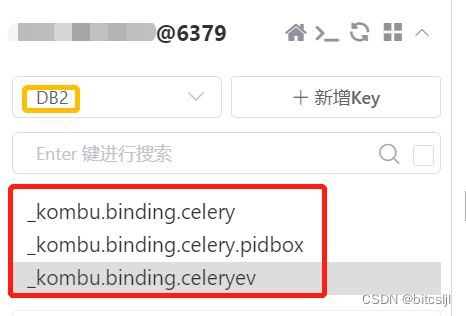

同时,Redis的db2数据库中新增了3条记录:

- Start the producer

vim producer.py

producer.py:

#!/usr/bin/python3

from tasks import add

add.delay(1, 1)  # each call enqueues one tasks.add message
add.delay(1, 2)
add.delay(1, 3)
add.delay(1, 4)

The producer uses add.delay() to send four addition tasks to the task queue.

Run producer.py:

chmod u+x producer.py && ./producer.py

Worker execution log:

[2022-09-21 16:02:37,670: INFO/MainProcess] Task tasks.add[89e1bc55-f0a3-4036-8b6f-478de1dfa9c9] received

[2022-09-21 16:02:37,672: INFO/MainProcess] Task tasks.add[bfe6a165-339c-4dd4-bf9b-e69d91acbf9d] received

[2022-09-21 16:02:37,673: INFO/MainProcess] Task tasks.add[e2b682b8-0f7f-4f18-8459-682d2044231b] received

[2022-09-21 16:02:37,675: INFO/MainProcess] Task tasks.add[2d8c8f0e-b322-4950-a438-d5bc8a7b7538] received

[2022-09-21 16:02:38,672: INFO/ForkPoolWorker-2] Task tasks.add[89e1bc55-f0a3-4036-8b6f-478de1dfa9c9] succeeded in 1.0011507477611303s: 2

[2022-09-21 16:02:38,674: INFO/ForkPoolWorker-1] Task tasks.add[bfe6a165-339c-4dd4-bf9b-e69d91acbf9d] succeeded in 1.0012883339077234s: 3

[2022-09-21 16:02:39,674: INFO/ForkPoolWorker-2] Task tasks.add[e2b682b8-0f7f-4f18-8459-682d2044231b] succeeded in 1.0011502113193274s: 4

[2022-09-21 16:02:39,676: INFO/ForkPoolWorker-1] Task tasks.add[2d8c8f0e-b322-4950-a438-d5bc8a7b7538] succeeded in 1.0011185528710485s: 5

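The worker processed all four addition tasks successfully in about 2 seconds, less than the 4 seconds needed to run them one after another, because the two pool processes (ForkPoolWorker-1 and ForkPoolWorker-2) each handled two tasks in parallel.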
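The timing can be reproduced in miniature with the standard library. This sketch swaps Celery's prefork process pool for a two-thread ThreadPoolExecutor and shortens the sleep to 0.2 seconds. It illustrates the arithmetic (four tasks on two workers take about two task durations), not Celery itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor

DELAY = 0.2  # seconds per task; the article's task sleeps 1 second


def add(x, y):
    time.sleep(DELAY)
    return x + y


args = [(1, 1), (1, 2), (1, 3), (1, 4)]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=2) as pool:   # two workers, as in the log
    results = list(pool.map(lambda pair: add(*pair), args))
elapsed = time.perf_counter() - start

sequential = DELAY * len(args)
print(results)
print(f"elapsed {elapsed:.2f}s vs sequential {sequential:.2f}s")
```

Raising max_workers to 4 would let all four tasks run at once, just as a larger --concurrency value would for the Celery worker.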