Flink cannot connect to Kafka: Recovery is suppressed by NoRestartBackoffTimeStrategy

Error message: Recovery is suppressed by NoRestartBackoffTimeStrategy (the underlying cause is the Kafka TimeoutException at the bottom of the stack trace)

Original code:

package com.tianyi.chapter05;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.common.time.Time;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.ArrayList;
import java.util.Properties;
import java.util.concurrent.TimeUnit;

/**
 * @author tianyi
 * 1-3 Reading bounded streams
 */
public class SourceTest {
    public static void main(String[] args) throws Exception {
        // Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 1. Read data from a file
        DataStreamSource<String> stream1 = env.readTextFile("input/clicks.txt");

        // 2. Read data from a collection
        ArrayList<Integer> nums = new ArrayList<>();
        nums.add(2);
        nums.add(5);
        DataStreamSource<Integer> numsStream = env.fromCollection(nums);

        // Read data from a collection of Event objects
        // (Event is a POJO defined elsewhere in this package, not shown here)
        ArrayList<Event> events = new ArrayList<>();
        events.add(new Event("tx", "./home", 1000L));
        events.add(new Event("ll", "./home", 2000L));
        DataStreamSource<Event> stream2 = env.fromCollection(events);

        // 3. Read data from individual elements
        DataStreamSource<Event> stream3 = env.fromElements(
                new Event("tx", "./home", 1000L),
                new Event("ll", "./home", 2000L)
        );

        // 4. Read data from a socket text stream
        DataStreamSource<String> stream4 = env.socketTextStream("hadoop102", 7777);

        // stream1.print("Stream1");
        // numsStream.print("nums");
        // stream2.print("stream2");
        // stream3.print("stream3");
        // stream4.print("stream4");

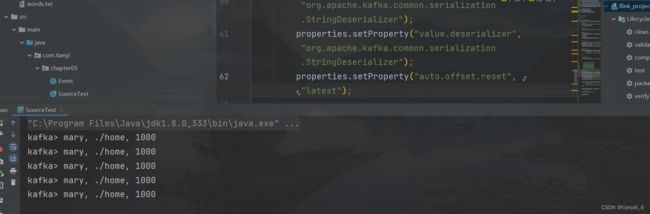

        // 5. Read data from Kafka
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", "hadoop102:9092");
        properties.setProperty("group.id", "consumer-group");
        properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("auto.offset.reset", "latest");

        // Optional: a fixed-delay restart strategy makes Flink retry a failed task
        // a few times before giving up. It does not fix an unreachable Kafka cluster.
        //env.setRestartStrategy(RestartStrategies.fixedDelayRestart(
        //        3, // number of restart attempts
        //        Time.of(10, TimeUnit.SECONDS) // delay between attempts
        //));

        DataStreamSource<String> kafkaStream = env.addSource(
                new FlinkKafkaConsumer<String>("clicks", new SimpleStringSchema(), properties));
        kafkaStream.print("kafka");

        env.execute();
    }
}

The error output is as follows:

Exception in thread "main" org.apache.flink.runtime.client.JobExecutionException: Job execution failed.
	at org.apache.flink.runtime.jobmaster.JobResult.toJobExecutionResult(JobResult.java:144)
	at org.apache.flink.runtime.minicluster.MiniClusterJobClient.lambda$getJobExecutionResult$3(MiniClusterJobClient.java:137)
	at java.util.concurrent.CompletableFuture.uniApply(CompletableFuture.java:616)
	at java.util.concurrent.CompletableFuture$UniApply.tryFire(CompletableFuture.java:591)
	at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)
	at java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)
	at org.apache.flink.runtime.rpc.akka.AkkaInvocationHandler.lambda$invokeRpc$0(AkkaInvocationHandler.java:237)
	at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)
	at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:750)
	at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:488)
	at java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1975)
	at org.apache.flink.runtime.concurrent.FutureUtils$1.onComplete(FutureUtils.java:1081)
	at akka.dispatch.OnComplete.internal(Future.scala:264)
	at akka.dispatch.OnComplete.internal(Future.scala:261)
	at akka.dispatch.japi$CallbackBridge.apply(Future.scala:191)
	at akka.dispatch.japi$CallbackBridge.apply(Future.scala:188)
	at scala.concurrent.impl.CallbackRunnable.run(Promise.scala:60)
	at org.apache.flink.runtime.concurrent.Executors$DirectExecutionContext.execute(Executors.java:73)
	at scala.concurrent.impl.CallbackRunnable.executeWithValue(Promise.scala:68)
	at scala.concurrent.impl.Promise$DefaultPromise.$anonfun$tryComplete$1(Promise.scala:284)
	at scala.concurrent.impl.Promise$DefaultPromise.$anonfun$tryComplete$1$adapted(Promise.scala:284)
	at scala.concurrent.impl.Promise$DefaultPromise.tryComplete(Promise.scala:284)
	at akka.pattern.PromiseActorRef.$bang(AskSupport.scala:573)
	at akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:22)
	at akka.pattern.PipeToSupport$PipeableFuture$$anonfun$pipeTo$1.applyOrElse(PipeToSupport.scala:21)
	at scala.concurrent.Future.$anonfun$andThen$1(Future.scala:532)
	at scala.concurrent.impl.Promise.liftedTree1$1(Promise.scala:29)
	at scala.concurrent.impl.Promise.$anonfun$transform$1(Promise.scala:29)
	at scala.concurrent.impl.CallbackRunnable.run(Promise.scala:60)
	at akka.dispatch.BatchingExecutor$AbstractBatch.processBatch(BatchingExecutor.scala:55)
	at akka.dispatch.BatchingExecutor$BlockableBatch.$anonfun$run$1(BatchingExecutor.scala:91)
	at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:12)
	at scala.concurrent.BlockContext$.withBlockContext(BlockContext.scala:81)
	at akka.dispatch.BatchingExecutor$BlockableBatch.run(BatchingExecutor.scala:91)
	at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:40)
	at akka.dispatch.ForkJoinExecutorConfigurator$AkkaForkJoinTask.exec(ForkJoinExecutorConfigurator.scala:44)
	at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)
	at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)
	at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)
	at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)
Caused by: org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy
	at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:138)
	at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:82)
	at org.apache.flink.runtime.scheduler.DefaultScheduler.handleTaskFailure(DefaultScheduler.java:207)
	at org.apache.flink.runtime.scheduler.DefaultScheduler.maybeHandleTaskFailure(DefaultScheduler.java:197)
	at org.apache.flink.runtime.scheduler.DefaultScheduler.updateTaskExecutionStateInternal(DefaultScheduler.java:188)
	at org.apache.flink.runtime.scheduler.SchedulerBase.updateTaskExecutionState(SchedulerBase.java:677)
	at org.apache.flink.runtime.scheduler.SchedulerNG.updateTaskExecutionState(SchedulerNG.java:79)
	at org.apache.flink.runtime.jobmaster.JobMaster.updateTaskExecutionState(JobMaster.java:435)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcInvocation(AkkaRpcActor.java:305)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:212)
	at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:77)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:158)
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:26)
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:21)
	at scala.PartialFunction.applyOrElse(PartialFunction.scala:123)
	at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122)
	at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:21)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172)
	at akka.actor.Actor.aroundReceive(Actor.scala:517)
	at akka.actor.Actor.aroundReceive$(Actor.scala:515)
	at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:225)
	at akka.actor.ActorCell.receiveMessage(ActorCell.scala:592)
	at akka.actor.ActorCell.invoke(ActorCell.scala:561)
	at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:258)
	at akka.dispatch.Mailbox.run(Mailbox.scala:225)
	at akka.dispatch.Mailbox.exec(Mailbox.scala:235)
	... 4 more
Caused by: org.apache.kafka.common.errors.TimeoutException: Timeout of 60000ms expired before the position for partition clicks-0 could be determined

Process finished with exit code 1
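The top-level message is easy to misread. "Recovery is suppressed by NoRestartBackoffTimeStrategy" only says that a task failed and no restart strategy applied (here a local run with checkpointing off and the restart strategy commented out), so Flink gave up immediately. The real failure is the innermost "Caused by": the Kafka consumer could not determine its position for partition clicks-0 within 60000 ms. A minimal sketch for surfacing that innermost cause, using only the standard Throwable.getCause() chain; the exception classes in main are stand-ins (an assumption for the demo), since Flink and Kafka are not on the classpath:

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Set;

public class RootCauseDemo {

    // Returns every throwable in the getCause() chain, outermost first.
    // The identity set stops the walk if a (rare) cause cycle exists.
    static List<Throwable> causeChain(Throwable t) {
        List<Throwable> chain = new ArrayList<>();
        Set<Throwable> seen = Collections.newSetFromMap(new IdentityHashMap<Throwable, Boolean>());
        for (Throwable c = t; c != null && seen.add(c); c = c.getCause()) {
            chain.add(c);
        }
        return chain;
    }

    // The innermost throwable is usually the actual reason the job failed.
    // Expects a non-null argument.
    static Throwable rootCause(Throwable t) {
        List<Throwable> chain = causeChain(t);
        return chain.get(chain.size() - 1);
    }

    public static void main(String[] args) {
        // Stand-ins for JobExecutionException -> JobException -> Kafka TimeoutException.
        Throwable kafka = new java.util.concurrent.TimeoutException(
                "Timeout of 60000ms expired before the position for partition clicks-0 could be determined");
        Throwable job = new IllegalStateException(
                "Recovery is suppressed by NoRestartBackoffTimeStrategy", kafka);
        Throwable top = new RuntimeException("Job execution failed.", job);

        for (Throwable c : causeChain(top)) {
            System.out.println(c.getClass().getSimpleName() + ": " + c.getMessage());
        }
        System.out.println("Root cause: " + rootCause(top).getMessage());
    }
}
```

In SourceTest, the env.execute() call could be wrapped in a try/catch that prints rootCause(e) before rethrowing, so the Kafka error is the first thing you see.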

Analysis:

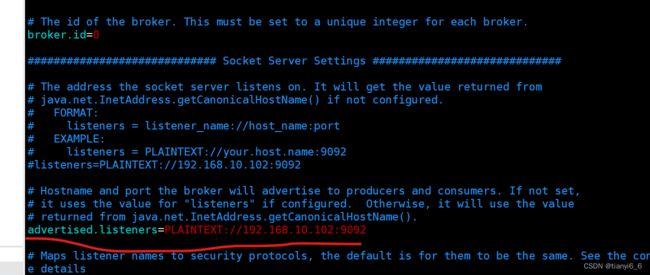

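At first the job simply could not connect, so I assumed the configuration was wrong. I searched through blog posts and then edited the Kafka configuration file: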

[ztx@hadoop102 kafka]$ vim config/server.properties
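Before editing configuration files, it can help to check whether each broker address is reachable at all from the machine running the job. A minimal sketch using only java.net.Socket; hadoop102:9092 comes from the code above, while hadoop103 and hadoop104 are hypothetical names assumed for the other two machines. A successful TCP connect only shows that something is listening on that port. It does not prove that the broker, its ZooKeeper ensemble, or the partition leaders are healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

public class BrokerPortCheck {

    // Attempts a plain TCP connect to host:port within timeoutMs.
    // Unresolvable hosts and refused connections both surface as IOException.
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    // Parses a bootstrap.servers-style list ("h1:p1,h2:p2") and probes each entry.
    // Every entry is expected to contain a host and a port separated by ':'.
    static Map<String, Boolean> probeAll(String bootstrapServers, int timeoutMs) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String entry : bootstrapServers.split(",")) {
            String hostPort = entry.trim();
            int colon = hostPort.lastIndexOf(':');
            String host = hostPort.substring(0, colon);
            int port = Integer.parseInt(hostPort.substring(colon + 1));
            result.put(hostPort, isReachable(host, port, timeoutMs));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical broker list: only hadoop102:9092 appears in the original job.
        String servers = args.length > 0 ? args[0]
                : "hadoop102:9092,hadoop103:9092,hadoop104:9092";
        probeAll(servers, 3000).forEach((hostPort, ok) ->
                System.out.println(hostPort + (ok ? " reachable" : " NOT reachable")));
    }
}
```

The same check can be pointed at ZooKeeper's client port (conventionally 2181) on each machine.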

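The error persisted after that change. Searching online suggested a dependency version mismatch, so I changed the Kafka dependency versions to 2.1.0 and 1.10.0 and tried both, but neither helped. Finally I shut the whole cluster down and restarted it, and only then noticed that I had been starting the cluster on just one machine. I then started the ZooKeeper and Kafka clusters on all three machines.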

After that everything started successfully and data came through from Kafka.
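Why starting all three machines mattered is not spelled out above. One likely reason, assuming ZooKeeper was configured as a three-server ensemble across the three machines (the original does not show its zoo.cfg): ZooKeeper only serves requests while a strict majority of its servers is running, so one server out of three has no quorum, and the Kafka brokers that depend on it cannot work normally. It is also possible that the leader for partition clicks-0 lived on a broker that was not running. The majority rule itself is simple arithmetic:

```java
public class ZkQuorum {

    // Smallest number of servers that forms a strict majority of the ensemble.
    static int majority(int ensembleSize) {
        return ensembleSize / 2 + 1;
    }

    // A ZooKeeper ensemble can elect a leader and serve clients only with a majority alive.
    static boolean hasQuorum(int ensembleSize, int aliveServers) {
        return aliveServers >= majority(ensembleSize);
    }

    public static void main(String[] args) {
        int ensemble = 3; // three machines, as in this setup
        for (int alive = 0; alive <= ensemble; alive++) {
            System.out.println(alive + "/" + ensemble + " servers up -> quorum: "
                    + hasQuorum(ensemble, alive));
        }
    }
}
```

With three servers the majority is two, so a single machine is never enough, while any two machines are.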