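GaussDB Memory Overload Analysis

Symptoms

- The database process uses a high share of memory

- High for a long time

The memory usage curve on the monitoring platform shows that the database process holds a high share of the machine's total memory for a long time and does not drop, whether or not any workload is running.

- High while jobs are running

When no workload is running, memory usage of the database process stays low. It rises while a workload runs and returns to a low level after the job finishes.

- Memory rises and does not fall

Memory usage of the database process rises slowly while the workload runs and shows no downward trend after the workload finishes.

- SQL statements report an out-of-memory error

An SQL statement fails with an out-of-memory error, as shown below.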

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from gs_shared_memory_detail group by contextname order by sum desc limit 10;

ERROR: memory is temporarily unavailable

DETAIL: Failed on request of size 46 bytes under queryid 281475005884780 in heaptuple.cpp:1934.

Cause Analysis

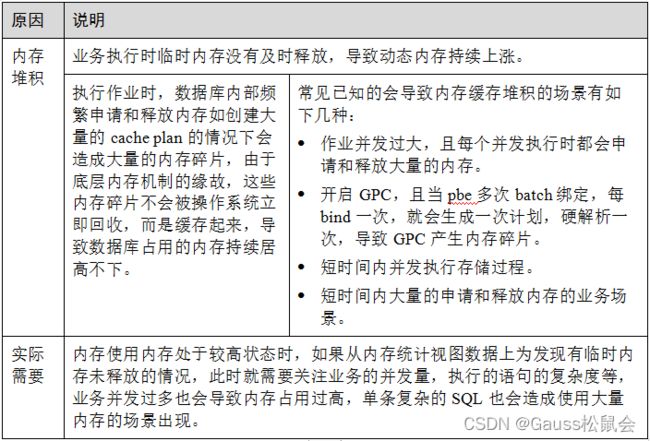

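If the cluster runs short of memory, or memory usage stays high for a long time, the cause is usually one of the following.

- Memory accumulation

Memory accumulates when temporary memory allocated during SQL execution is not released in time.

- Excessive concurrency

Clients open too many connections to the server, so the server creates a large number of sessions, and these sessions consume a large amount of memory.

- High memory usage by a single SQL statement

Some SQL statements use a large amount of memory while running and cause a short-lived rise in memory usage. A complex stored procedure, for example, can use tens of GB during execution.

- Too much cached memory

GaussDB Kernel uses the open-source jemalloc library to manage memory. When memory is heavily fragmented, jemalloc can keep freed memory cached instead of releasing it, so the memory held by the database process far exceeds what it actually uses. The symptom is a large other_used_memory value when querying the memory view pv_total_memory_detail, as shown below.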

gaussdb=# select * from pv_total_memory_detail;

nodename | memorytype | memorymbytes

----------------+-------------------------+--------------

coordinator1 | max_process_memory | 81920

coordinator1 | process_used_memory | 14567

coordinator1 | max_dynamic_memory | 34012

coordinator1 | dynamic_used_memory | 1851

coordinator1 | dynamic_peak_memory | 3639

coordinator1 | dynamic_used_shrctx | 394

coordinator1 | dynamic_peak_shrctx | 399

coordinator1 | max_backend_memory | 648

coordinator1 | backend_used_memory | 1

coordinator1 | max_shared_memory | 46747

coordinator1 | shared_used_memory | 11618

coordinator1 | max_cstore_memory | 512

coordinator1 | cstore_used_memory | 0

coordinator1 | max_sctpcomm_memory | 0

coordinator1 | sctpcomm_used_memory | 0

coordinator1 | sctpcomm_peak_memory | 0

coordinator1 | other_used_memory | 1013

coordinator1 | gpu_max_dynamic_memory | 0

coordinator1 | gpu_dynamic_used_memory | 0

coordinator1 | gpu_dynamic_peak_memory | 0

coordinator1 | pooler_conn_memory | 0

coordinator1 | pooler_freeconn_memory | 0

coordinator1 | storage_compress_memory | 0

coordinator1 | udf_reserved_memory | 0

(24 rows)

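Troubleshooting Approach

If an environment in the overloaded state is still available for live diagnosis, follow Approach 1. If the scene has been lost (for example, because the cluster restarted), follow Approach 2. GaussDB Kernel manages memory through memory contexts, so memory usage is accounted precisely and exposed through views that can be queried during diagnosis.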

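Approach 1: Live environment available

- Query the memory statistics

Table: Memory statistics views

| View | Description |
|---|---|
| pv_total_memory_detail | Overview of global memory usage. |
| pg_shared_memory_detail | Memory usage details of global memory contexts. |
| pv_thread_memory_context | Memory usage details of thread-level memory contexts. |
| pv_session_memory_context | Memory usage details of session-level memory contexts. Available only when thread pool mode is enabled. |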

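- Determine the memory usage category

The memory statistics queried in the previous step provide the following values.

Table: Memory types

| Memory type | Meaning |
|---|---|
| process_used_memory | Memory used by the database process. |
| dynamic_used_memory | Dynamic memory in use. |
| dynamic_used_shrctx | Dynamic memory in use by global memory contexts. |
| shared_used_memory | Shared memory in use. |
| other_used_memory | Other memory in use. This usually includes memory that the process has freed but that is still cached. |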

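- If dynamic_used_memory is large and dynamic_used_shrctx is small, threads and sessions hold most of the memory. Query the thread-level and session-level memory contexts to find the context where memory has accumulated.

- If dynamic_used_memory is large and dynamic_used_shrctx is close to it, global memory contexts hold most of the dynamic memory. Query the global memory contexts to find the context where memory has accumulated.

- If only shared_used_memory is large, shared memory accounts for the usage and this can be ignored.

- If other_used_memory is large, the workload is most likely allocating and freeing memory so frequently that too many memory fragments are cached. Contact Huawei engineers for diagnosis.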
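The decision rules above can be sketched in Python. This is a minimal sketch and not part of GaussDB: the function name `classify_memory`, the category labels, and all four thresholds are hypothetical, because the text only says "large" and "small" without giving numbers. The input is a dict built from the memorytype and memorymbytes columns of pv_total_memory_detail.

```python
def classify_memory(stats, dynamic_ratio=0.8, shrctx_share=0.5,
                    other_share=0.3, shared_ratio=0.8):
    """Map pv_total_memory_detail values (MB) to one of the categories in the text.

    All thresholds are illustrative assumptions; tune them for your system.
    """
    dynamic = stats["dynamic_used_memory"]
    if dynamic >= dynamic_ratio * stats["max_dynamic_memory"]:
        # Large dynamic memory: split by how much of it sits in global contexts.
        if stats["dynamic_used_shrctx"] >= shrctx_share * dynamic:
            return "global_contexts"
        return "thread_and_session_contexts"
    if stats["other_used_memory"] >= other_share * stats["process_used_memory"]:
        return "other_cached_memory"
    if stats["shared_used_memory"] >= shared_ratio * stats["max_shared_memory"]:
        return "shared_memory_only"
    return "nothing_stands_out"


# Values copied from the pv_total_memory_detail sample in this document
# in which other_used_memory is 11013.
sample = {
    "max_process_memory": 81920, "process_used_memory": 24567,
    "max_dynamic_memory": 34012, "dynamic_used_memory": 1851,
    "dynamic_used_shrctx": 394,
    "max_shared_memory": 46747, "shared_used_memory": 11618,
    "other_used_memory": 11013,
}
print(classify_memory(sample))
```

With these assumed thresholds the sample falls into the cached-memory category, because other_used_memory is well over 30% of process_used_memory while dynamic memory is far below its limit.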

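Approach 2: No live environment

Some memory overloads cause the cluster to restart, which leaves no live environment in which to diagnose the cause. In that case, use the following procedure to diagnose an overload that has already happened.

- Check the historical memory usage curve

Check the monitoring data for the memory used by the database process in the environment. The memory curve over a period of time identifies the point in time at which the overload occurred. In the example history curve, memory rises sharply on August 22.

- Analyze historical memory statistics

Using the point in time identified from the historical curve, query gs_get_history_memory_detail to get the memory snapshot log for that time. Snapshot logs are named by timestamp, so the right one is quick to find, and it shows which memory contexts were holding the most memory. Then contact Huawei engineers for help in resolving the problem.

If the historical memory statistics contain no snapshot for that point in time, look at the workload at that time, such as its concurrency and the complexity of the statements being run, and analyze the cause of the rise from the actual workload. Contact Huawei engineers for assistance.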

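Solution

When memory usage is too high or an out-of-memory error is reported, use the following procedure to locate the cause and resolve it.

1. Obtain memory statistics

The statistics must be collected on the database process that has the memory fault.

- Real-time memory statistics

Query the overall memory statistics of the GaussDB Kernel process.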

gaussdb=# select * from pv_total_memory_detail;

nodename | memorytype | memorymbytes

----------------+-------------------------+--------------

coordinator1 | max_process_memory | 81920

coordinator1 | process_used_memory | 14567

coordinator1 | max_dynamic_memory | 34012

coordinator1 | dynamic_used_memory | 1851

coordinator1 | dynamic_peak_memory | 3639

coordinator1 | dynamic_used_shrctx | 394

coordinator1 | dynamic_peak_shrctx | 399

coordinator1 | max_backend_memory | 648

coordinator1 | backend_used_memory | 1

coordinator1 | max_shared_memory | 46747

coordinator1 | shared_used_memory | 11618

coordinator1 | max_cstore_memory | 512

coordinator1 | cstore_used_memory | 0

coordinator1 | max_sctpcomm_memory | 0

coordinator1 | sctpcomm_used_memory | 0

coordinator1 | sctpcomm_peak_memory | 0

coordinator1 | other_used_memory | 1013

coordinator1 | gpu_max_dynamic_memory | 0

coordinator1 | gpu_dynamic_used_memory | 0

coordinator1 | gpu_dynamic_peak_memory | 0

coordinator1 | pooler_conn_memory | 0

coordinator1 | pooler_freeconn_memory | 0

coordinator1 | storage_compress_memory | 0

coordinator1 | udf_reserved_memory | 0

(24 rows)

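View the memory used by the global memory contexts of the database process, grouped by context name and sorted from largest to smallest. The top 10 are enough.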

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pg_shared_memory_detail group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

-----------------------------------+----------------------+-----------------------+-------

IncreCheckPointContext | 250.8796234130859375 | .00273132324218750000 | 1

AshContext | 64.0950317382812500 | .00772094726562500000 | 1

DefaultTopMemoryContext | 60.5699005126953125 | 1.0594177246093750 | 1

StorageTopMemoryContext | 16.7601776123046875 | .05357360839843750000 | 1

GlobalAuditMemory | 16.0081176757812500 | .00769042968750000000 | 1

CBBTopMemoryContext | 14.9503479003906250 | .04009246826171875000 | 1

Undo | 8.6680450439453125 | .21752929687500000000 | 1

DoubleWriteContext | 6.5549163818359375 | .02331542968750000000 | 1

ThreadPoolContext | 5.4042663574218750 | .00525665283203125000 | 1

GlobalSysDBCacheEntryMemCxt_16384 | 4.2232666015625000 | .89799499511718750000 | 16

(10 rows)

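View the memory used by the memory contexts of all threads of the database process, grouped by context name and sorted from largest to smallest. The top 10 are enough.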

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pv_thread_memory_context group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

---------------------------------+----------------------+-----------------------+-------

LocalSysCacheShareMemoryContext | 612.5096435546875000 | 57.4630737304687500 | 543

StorageTopMemoryContext | 311.8157348632812500 | 3.2519149780273438 | 543

DefaultTopMemoryContext | 168.5756530761718750 | 10.7153015136718750 | 543

LocalSysCacheMyDBMemoryContext | 167.4375000000000000 | 65.7499847412109375 | 543

ThreadTopMemoryContext | 161.4440002441406250 | 4.0309295654296875 | 543

CBBTopMemoryContext | 109.1161880493164063 | 6.7845993041992188 | 543

LocalSysCacheTopMemoryContext | 93.4109802246093750 | 13.2236938476562500 | 543

Timezones | 43.2421417236328125 | 1.4333953857421875 | 543

gs_signal | 32.2394561767578125 | 4.9155120849609375 | 1

Type information cache | 22.9119262695312500 | .86848449707031250000 | 329

(10 rows)

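View the memory used by the memory contexts of all sessions of the database process, grouped by context name and sorted from largest to smallest. The top 10 are enough.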

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pv_session_memory_context group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

-------------------------+----------------------+-----------------------+-------

CachedPlan | 223.4433593750000000 | 64.6083068847656250 | 12394

CachedPlanQuery | 134.7382812500000000 | 42.3366699218750000 | 12596

SessionTopMemoryContext | 132.3496398925781250 | 25.9272155761718750 | 302

CachedPlanSource | 98.6943359375000000 | 28.3841018676757813 | 12897

CBBTopMemoryContext | 60.6870880126953125 | 3.0470962524414063 | 302

GenericRoot | 35.1962890625000000 | 14.1624069213867188 | 471

Timezones | 24.0499572753906250 | .79721069335937500000 | 302

SPI Plan | 21.0664062500000000 | 6.8149719238281250 | 2396

AdaptiveCachedPlan | 17.5449218750000000 | 4.7733078002929688 | 546

Prepared Queries | 16.4062500000000000 | 7.5508117675781250 | 300

(10 rows)

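- Historical memory statistics

Historical memory information is used only when no live environment is available. It helps locate the cause of a memory overload that happened in the past.

Query gs_get_history_memory_detail to list the memory usage details recorded whenever the memory usage of the database exceeded 90%, as shown below.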

gaussdb=# select * from gs_get_history_memory_detail(NULL) order by memory_info desc limit 10;

memory_info

-------------------------------

mem_log-2023-03-10_205125.log

mem_log-2023-03-10_205115.log

mem_log-2023-03-10_205104.log

mem_log-2023-03-10_205054.log

mem_log-2023-03-10_205043.log

mem_log-2023-03-10_205032.log

mem_log-2023-03-10_205022.log

mem_log-2023-03-10_205012.log

mem_log-2023-03-10_205002.log

mem_log-2023-03-10_204951.log

(10 rows)
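Because the snapshot names embed a timestamp, the snapshot closest to the overload time can be picked programmatically. The sketch below is an illustration, not a GaussDB tool: the helper names are hypothetical, and the layout `mem_log-YYYY-MM-DD_HHMMSS.log` is inferred from the sample names listed above.

```python
from datetime import datetime


def snapshot_time(filename):
    """Parse a name such as 'mem_log-2023-03-10_205125.log' into a datetime."""
    stamp = filename[len("mem_log-"):-len(".log")]
    return datetime.strptime(stamp, "%Y-%m-%d_%H%M%S")


def nearest_snapshot(filenames, incident):
    """Return the snapshot name whose timestamp is closest to the incident time."""
    return min(filenames, key=lambda name: abs(snapshot_time(name) - incident))


# A few of the names returned by gs_get_history_memory_detail(NULL) above.
names = [
    "mem_log-2023-03-10_205125.log",
    "mem_log-2023-03-10_205043.log",
    "mem_log-2023-03-10_205032.log",
    "mem_log-2023-03-10_205022.log",
]
# The incident time would come from the monitoring curve; this one is made up.
print(nearest_snapshot(names, datetime(2023, 3, 10, 20, 50, 30)))
```

The returned name can then be passed to gs_get_history_memory_detail to read that snapshot.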

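Pick one of the log files and run the following query to read it. The log records the global memory overview and the top 20 memory contexts by usage at the global, thread, and session levels, as shown below.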

gaussdb=# select * from gs_get_history_memory_detail('mem_log-2023-03-10_205125.log');

memory_info

--------------------------------------------------------------------------------------

{

"Global Memory Statistics": {

"Max_dynamic_memory": 34012,

"Dynamic_used_memory": 3645,

"Dynamic_peak_memory": 3664,

"Dynamic_used_shrctx": 401,

"Dynamic_peak_shrctx": 401,

"Max_backend_memory": 648,

"Backend_used_memory": 1,

"other_used_memory": 0

},

"Memory Context Info": {

"Memory Context Detail": {

"Context Type": "Shared Memory Context",

"Memory Context": {

"context": "IncreCheckPointContext",

"freeSize": 2864,

"totalSize": 263066352

},

...

},

"Memory Context Detail": {

"Context Type": "Session Memory Context",

"Memory Context": {

"context": "CachedPlan",

"freeSize": 68041368,

"totalSize": 235937792

},

...

},

"Memory Context Detail": {

"Context Type": "Thread Memory Context",

"Memory Context": {

"context": "LocalSysCacheShareMemoryContext",

"freeSize": 60431360,

"totalSize": 644141760

},

...

}

}

(322 rows)
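The snapshot shown above repeats the key "Memory Context Detail", and Python's plain `json.loads` keeps only the last value of a repeated key. The following sketch keeps every entry by using `object_pairs_hook`. It rests on assumptions: the returned rows are joined with newlines into one complete JSON text, the real log has the nesting shown above with nothing elided, and the function names are hypothetical.

```python
import json


def load_snapshot(text):
    """Parse snapshot text, keeping every repeated key as a (key, value) pair."""
    return json.loads(text, object_pairs_hook=lambda pairs: pairs)


def contexts_by_type(snapshot):
    """Group the 'Memory Context' entries by their 'Context Type'."""
    grouped = {}
    for key, value in snapshot:
        if key != "Memory Context Info":
            continue
        for detail_key, detail in value:
            if detail_key != "Memory Context Detail":
                continue
            context_type = None
            entries = []
            for name, item in detail:
                if name == "Context Type":
                    context_type = item
                elif name == "Memory Context":
                    # Leaf objects have unique keys, so a dict is safe here.
                    entries.append(dict(item))
            grouped.setdefault(context_type, []).extend(entries)
    return grouped
```

Sorting each group by `totalSize` then gives the heaviest contexts per level, which is the information the no-live-environment steps below rely on.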

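2. Analyze the memory usage category

The memory overview obtained in step 1 can be interpreted as follows:

- If dynamic_used_memory is large and dynamic_used_shrctx is small, threads and sessions hold most of the memory.

- If dynamic_used_memory is large and dynamic_used_shrctx is close to it, global memory contexts hold most of the dynamic memory.

- If only shared_used_memory is large, shared memory accounts for the usage and this can be ignored.

- If other_used_memory is large, the usual cause is frequent memory allocation and release by the workload, which leaves too many memory fragments cached.

For each of these cases, use the matching one of the four diagnosis methods below.

- Global memory contexts use a lot of memory

- Live environment available

Run the following query to find out which memory context uses the most memory.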

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pg_shared_memory_detail group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

-----------------------------------+----------------------+-----------------------+-------

IncreCheckPointContext | 250.8796234130859375 | .00273132324218750000 | 1

AshContext | 64.0950317382812500 | .00772094726562500000 | 1

DefaultTopMemoryContext | 60.5699005126953125 | 1.0594177246093750 | 1

StorageTopMemoryContext | 16.7601776123046875 | .04942321777343750000 | 1

GlobalAuditMemory | 16.0081176757812500 | .00769042968750000000 | 1

CBBTopMemoryContext | 14.9503479003906250 | .04009246826171875000 | 1

Undo | 8.6680450439453125 | .20516967773437500000 | 1

DoubleWriteContext | 6.5549163818359375 | .02331542968750000000 | 1

ThreadPoolContext | 5.3873443603515625 | .00525665283203125000 | 1

GlobalSysDBCacheEntryMemCxt_16384 | 4.3115692138671875 | 1.0470581054687500 | 16

(10 rows)

Once the memory context is identified (IncreCheckPointContext in this example), query gs_get_shared_memctx_detail to find the code location where memory accumulates.

gaussdb=# select * from gs_get_shared_memctx_detail('IncreCheckPointContext');

file | line | size

-------------------------+------+-----------

ipci.cpp | 476 | 64

pagewriter.cpp | 298 | 1024

ipci.cpp | 498 | 4096

pagewriter.cpp | 322 | 19632000

pagewriter.cpp | 317 | 33669120

storage_buffer_init.cpp | 90 | 209756160

(6 rows)

The result shows that line 90 of storage_buffer_init.cpp allocated a large amount of memory, so memory may be accumulating there without being released.
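When the detail output is long, the heaviest allocation site can be found with a few lines of Python. This is a minimal sketch with a hypothetical helper name. It assumes the size column is in bytes, which is consistent with the sizes above summing to roughly the 250 MB reported for IncreCheckPointContext.

```python
def top_allocation_site(rows):
    """Return ((file, line), size, share_of_total) for the largest allocation site.

    rows is an iterable of (file, line, size) tuples from a memctx_detail query.
    """
    totals = {}
    for file, line, size in rows:
        totals[(file, line)] = totals.get((file, line), 0) + size
    if not totals:
        raise ValueError("no rows to analyze")
    grand_total = sum(totals.values())
    site, size = max(totals.items(), key=lambda item: item[1])
    return site, size, size / grand_total


# Rows copied from the gs_get_shared_memctx_detail output above.
rows = [
    ("ipci.cpp", 476, 64),
    ("pagewriter.cpp", 298, 1024),
    ("ipci.cpp", 498, 4096),
    ("pagewriter.cpp", 322, 19632000),
    ("pagewriter.cpp", 317, 33669120),
    ("storage_buffer_init.cpp", 90, 209756160),
]
site, size, share = top_allocation_site(rows)
print(site, size, f"{share:.0%}")
```

For this sample the largest site is line 90 of storage_buffer_init.cpp, which accounts for about 80% of the context. The same helper applies to the output of the thread-level and session-level detail queries.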

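- No live environment

If a memory snapshot exists for the time of the overload, find the allocation details of the top 20 memory contexts of type Shared Memory Context in the memory statistics to determine the cause of the accumulation.

If no memory snapshot exists for the time of the overload, contact Huawei engineers for diagnosis.

Summary: After either of the two methods above has identified the memory context that uses too much memory, a preliminary judgment is possible. While the database kernel runs workloads, the global memory contexts that normally use the most memory are "IncreCheckPointContext" and "DefaultTopMemoryContext". If these two contexts are the heavy users, reduce workload concurrency to lower memory usage. If it is another memory context, memory may be accumulating during workload execution; contact Huawei engineers.

- Thread-level memory contexts use a lot of memory

- Live environment available

Run the following query to find out which memory context uses the most memory.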

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pv_thread_memory_context group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

---------------------------------+----------------------+-----------------------+-------

LocalSysCacheShareMemoryContext | 641.0926513671875000 | 60.0820159912109375 | 543

StorageTopMemoryContext | 311.8157348632812500 | 3.1896591186523438 | 543

LocalSysCacheMyDBMemoryContext | 175.0625000000000000 | 65.0446166992187500 | 543

DefaultTopMemoryContext | 168.5756530761718750 | 10.7153015136718750 | 543

ThreadTopMemoryContext | 161.9752502441406250 | 4.1196441650390625 | 543

CBBTopMemoryContext | 109.1161880493164063 | 6.7845993041992188 | 543

LocalSysCacheTopMemoryContext | 93.4109802246093750 | 13.2236938476562500 | 543

Timezones | 43.2421417236328125 | 1.4333953857421875 | 543

gs_signal | 32.2394561767578125 | 4.9155120849609375 | 1

Type information cache | 23.8869018554687500 | .90544128417968750000 | 343

(10 rows)

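Once the memory context is identified (StorageTopMemoryContext in this example), query gs_get_thread_memctx_detail to find the code location where memory accumulates. The first argument is the thread ID, which can be obtained from the view gs_thread_memory_context.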

gaussdb=# select * from gs_get_thread_memctx_detail(140639273547520,'StorageTopMemoryContext');

file | line | size

--------------+------+--------

syncrep.cpp | 1608 | 32

elog.cpp | 2008 | 16

fd.cpp | 2734 | 128

syncrep.cpp | 1568 | 32

deadlock.cpp | 175 | 512

deadlock.cpp | 169 | 342656

deadlock.cpp | 157 | 85664

deadlock.cpp | 146 | 21416

deadlock.cpp | 144 | 32112

deadlock.cpp | 136 | 10712

deadlock.cpp | 135 | 10712

deadlock.cpp | 128 | 85664

deadlock.cpp | 126 | 21416

(13 rows)

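The result shows that line 169 of deadlock.cpp allocated a large amount of memory, so memory may be accumulating there without being released.

- No live environment

If a memory snapshot exists for the time of the overload, find the allocation details of the top 20 memory contexts of type Thread Memory Context in the memory statistics to determine the cause of the accumulation.

If no memory snapshot exists for the time of the overload, contact Huawei engineers for diagnosis.

Summary: After either of the two methods above has identified the memory context that uses too much memory, a preliminary judgment is possible. While the database kernel runs workloads, the thread-level memory contexts that normally use the most memory are "LocalSysCacheShareMemoryContext" and "StorageTopMemoryContext". If these two contexts are the heavy users, reduce workload concurrency to lower memory usage. If it is another memory context, memory may be accumulating during workload execution; contact Huawei engineers.

- Session-level memory contexts use a lot of memory

- Live environment available

Run the following query to find out which memory context uses the most memory.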

gaussdb=# select contextname, sum(totalsize)/1024/1024 sum, sum(freesize)/1024/1024, count(*) count from pv_session_memory_context group by contextname order by sum desc limit 10;

contextname | sum | ?column? | count

----------------------------+----------------------+-----------------------+-------

CachedPlan | 226.1093750000000000 | 67.1747817993164063 | 12450

CachedPlanQuery | 134.8027343750000000 | 41.8541030883789063 | 12612

SessionTopMemoryContext | 132.1605682373046875 | 26.1002349853515625 | 301

CachedPlanSource | 98.7617187500000000 | 28.4135513305664063 | 12912

CBBTopMemoryContext | 60.4861373901367188 | 3.0370101928710938 | 301

Timezones | 23.9703216552734375 | .79457092285156250000 | 301

SPI Plan | 21.1307907104492188 | 6.8435440063476563 | 2412

GenericRoot | 19.9628906250000000 | 7.7032165527343750 | 374

Prepared Queries | 16.4062500000000000 | 7.5508117675781250 | 300

unnamed prepared statement | 14.3437500000000000 | 6.6462554931640625 | 300

(10 rows)

Once the memory context is identified (CachedPlanQuery in this example), query gs_get_session_memctx_detail to find the code location where memory accumulates.

gaussdb=# select * from gs_get_session_memctx_detail('CachedPlanQuery');

file | line | size

---------------+------+---------

copyfuncs.cpp | 2607 | 5031680

copyfuncs.cpp | 7013 | 4176736

copyfuncs.cpp | 7016 | 2088368

copyfuncs.cpp | 5062 | 6918144

copyfuncs.cpp | 3461 | 403552

copyfuncs.cpp | 3397 | 2727104

copyfuncs.cpp | 3401 | 487368

datum.cpp | 150 | 2048

copyfuncs.cpp | 2572 | 1113728

copyfuncs.cpp | 6204 | 32

copyfuncs.cpp | 6206 | 32

copyfuncs.cpp | 7021 | 4267200

copyfuncs.cpp | 7037 | 2832000

copyfuncs.cpp | 7048 | 2066400

bitmapset.cpp | 94 | 134400

copyfuncs.cpp | 3430 | 96000

copyfuncs.cpp | 2847 | 2150400

copyfuncs.cpp | 2551 | 5126400

copyfuncs.cpp | 3984 | 105600

list.cpp | 105 | 254400

list.cpp | 108 | 796800

copyfuncs.cpp | 3835 | 7065600

copyfuncs.cpp | 2451 | 1056000

copyfuncs.cpp | 2453 | 244800

copyfuncs.cpp | 3840 | 230400

copyfuncs.cpp | 2895 | 1113600

copyfuncs.cpp | 3442 | 38400

copyfuncs.cpp | 2645 | 115200

list.cpp | 166 | 19200

namespace.cpp | 3853 | 144000

list.cpp | 1460 | 288000

copyfuncs.cpp | 2910 | 38400

copyfuncs.cpp | 2762 | 1075200

copyfuncs.cpp | 3953 | 67200

copyfuncs.cpp | 3000 | 96000

copyfuncs.cpp | 5876 | 28800

copyfuncs.cpp | 2619 | 2400

(37 rows)

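The result shows that line 3835 of copyfuncs.cpp allocated a large amount of memory, so memory may be accumulating there without being released.

- No live environment

If a memory snapshot exists for the time of the overload, find the allocation details of the top 20 memory contexts of type Session Memory Context in the memory statistics to determine the cause of the accumulation.

If no memory snapshot exists for the time of the overload, contact Huawei engineers for diagnosis.

Summary: After either of the two methods above has identified the memory context that uses too much memory, a preliminary judgment is possible. While the database kernel runs workloads, the session-level memory contexts that normally use the most memory are "CachedPlan", "CachedPlanQuery", "CachedPlanSource", and "SessionTopMemoryContext". If these contexts are the heavy users, reduce workload concurrency to lower memory usage. If it is another memory context, memory may be accumulating during workload execution; contact Huawei engineers.

- Other memory usage is high

- Too many memory fragments lead to too much cached memory

While the database runs jobs, frequent internal allocation and release of memory, for example when a large number of cached plans are created, produces many memory fragments. Because of how the underlying memory allocator works, the operating system does not reclaim these fragments immediately. They stay cached, and the database counts them under other_used_memory, as shown below.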

gaussdb=# select * from pv_total_memory_detail;

nodename | memorytype | memorymbytes

----------------+-------------------------+--------------

coordinator1 | max_process_memory | 81920

coordinator1 | process_used_memory | 24567

coordinator1 | max_dynamic_memory | 34012

coordinator1 | dynamic_used_memory | 1851

coordinator1 | dynamic_peak_memory | 3639

coordinator1 | dynamic_used_shrctx | 394

coordinator1 | dynamic_peak_shrctx | 399

coordinator1 | max_backend_memory | 648

coordinator1 | backend_used_memory | 1

coordinator1 | max_shared_memory | 46747

coordinator1 | shared_used_memory | 11618

coordinator1 | max_cstore_memory | 512

coordinator1 | cstore_used_memory | 0

coordinator1 | max_sctpcomm_memory | 0

coordinator1 | sctpcomm_used_memory | 0

coordinator1 | sctpcomm_peak_memory | 0

coordinator1 | other_used_memory | 11013

coordinator1 | gpu_max_dynamic_memory | 0

coordinator1 | gpu_dynamic_used_memory | 0

coordinator1 | gpu_dynamic_peak_memory | 0

coordinator1 | pooler_conn_memory | 0

coordinator1 | pooler_freeconn_memory | 0

coordinator1 | storage_compress_memory | 0

coordinator1 | udf_reserved_memory | 0

(24 rows)
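To judge how large other_used_memory is, it helps to express it as a share of process_used_memory. The sketch below parses psql text output of pv_total_memory_detail and computes that share. The function names are hypothetical, and the parser assumes the three-column layout shown above.

```python
def parse_total_memory(psql_output):
    """Turn psql output of pv_total_memory_detail into {memorytype: MB}."""
    stats = {}
    for line in psql_output.splitlines():
        cells = [cell.strip() for cell in line.split("|")]
        # Data rows have three cells and a numeric last cell; the header,
        # the separator, and the "(24 rows)" footer do not.
        if len(cells) == 3 and cells[2].isdigit():
            stats[cells[1]] = int(cells[2])
    return stats


def other_share(stats):
    """Fraction of process_used_memory reported as other_used_memory."""
    return stats["other_used_memory"] / stats["process_used_memory"]


# Two rows copied from the output above; the other rows parse the same way.
output = """
 nodename     | memorytype          | memorymbytes
--------------+---------------------+--------------
 coordinator1 | process_used_memory | 24567
 coordinator1 | other_used_memory   | 11013
(24 rows)
"""
print(f"{other_share(parse_total_memory(output)):.1%}")
```

In this output other_used_memory is about 45% of process_used_memory. In the earlier sample, where other_used_memory is 1013 and process_used_memory is 14567, it is about 7%.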

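- Other causes of memory not being released in time

Note that a large other_used_memory value is not always caused by memory fragmentation. Other possible causes are:

1). Code that allocates memory directly with malloc instead of through a memory context, where that memory has accumulated.

2). Third-party open-source software that does not release memory in time.

In either case, contact Huawei engineers for assistance.

3. Solutions

- Memory is full because of memory accumulation

Solution: If accumulated memory is not released for a long time, perform a primary/standby switchover to reduce memory usage.

- Memory is full because of the workload

Solution: Change the client jobs to lower concurrency, or rewrite the SQL statements so that they do not use large amounts of memory when run. Contact Huawei engineers for a detailed solution.

- Memory is full because too much other memory is cached

Solution 1: If the workload is what drives the cached other memory up, adjust the parameters related to execution plans or change the workload on the client side. The right change depends on the specific workload; contact Huawei engineers for a detailed solution.

Solution 2: If accumulated memory is not released for a long time and memory usage cannot be reduced by changing the workload, perform a primary/standby switchover to reduce memory usage.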